David Scott Bernstein's Blog, page 29

December 23, 2015

Just Telling Teams to Self-Organize Doesn’t Work

“Continuous attention to technical excellence and good design enhances agility,” is one of the principles of the Agile Manifesto. In retrospect, at their ten-year reunion, at least some of the original authors of the Agile Manifesto felt they didn’t state this strongly enough.

I believe technical excellence doesn’t just enhance agility; it enables it. Cobbling code together in iterations the way most developers write code in a traditional development environment, with little attention to technical excellence, can incur so much technical debt that development grinds to a halt. I’ve seen many teams get into this situation. It doesn’t happen right away, but by their fourth or fifth year of development, things slow way down.

What is technical excellence? Given the state of our industry and the low success rates reported by study after study, I’d say that most developers don’t know what technical excellence is. This isn’t their fault. We haven’t been taught these things in school and we don’t generally agree on a core set of standards and practices.

So asking teams to just figure it out and self-organize can often set them up to fail. Yet this is what Scrum says, and many ScrumMasters are under the illusion that developers can just figure this stuff out, even though much of technical excellence is in direct opposition to what they were taught in school.

Whenever I teach for one of my clients on the East Coast, which hires a lot of entry-level developers, I can always see some of my newly graduated students chuckle in the corner when I tell them that excessive “what” comments (comments that explain what the code is doing) can be bad because the code itself should express what it’s doing. They’re surprised. They say their professors told them that as long as every line of code has a comment explaining it, even when the code is obvious (like “x++;”), it doesn’t matter what the code says. But professional developers know this to be a bad practice.
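
Here is a small hypothetical illustration in Python. The function names and the 8% rate are invented for this sketch and do not come from any real codebase. The first version comments every line with what it does. The second lets names carry that meaning, so the “what” comments become unnecessary.

```python
# Version 1: every line carries a "what" comment that merely repeats the code.
def calc(p, q):
    t = p * q  # multiply p by q
    t = t * 1.08  # multiply t by 1.08
    return t  # return t


# Version 2: the names say what is happening, so no "what" comments are needed.
SALES_TAX_RATE = 0.08  # hypothetical rate, for illustration only


def price_with_tax(unit_price, quantity):
    subtotal = unit_price * quantity
    return subtotal * (1 + SALES_TAX_RATE)
```

Both functions compute the same value. Only the second tells the reader why.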

When I ask developers, even senior developers, what the SOLID principles are or what methodologies they follow, I often get blank stares. These ideas should be top-of-mind and considered with every line of code we write. I’m not talking about volumes of information, just a few dozen core principles and practices. But these things aren’t universally known in our field. So asking developers to “just figure it out” may not work as well as management might expect, and that’s the case on many of the Scrum teams I’ve observed.

Instead, give them the resources to be successful. Build a library of useful books (there are many). Bring in a class. Hire a consultant. Find someone who’s been there and done that so you can learn from their mistakes without having to “reinvent the wheel.” It’s true that this is what I do for a living, but I’m not just plugging my services. There are plenty of other sources to get a team headed for technical excellence. One good and free option is to keep reading this blog, as I cover several aspects of technical excellence in my posts.

December 16, 2015

Be Anthropomorphic

I have had the good fortune of working on two dolphin research projects in my life so far. Dolphins are highly intelligent and their brains are, on average, larger than ours. I like to say that I have the privilege of working with the two most intelligent species on the planet, dolphins and developers.

When studying animal behavior, we have to be very careful not to project our own interpretations onto our subjects. This is called anthropomorphization, which is seeing others in terms of ourselves. In some sense, it can’t be helped because the only way we can understand anything is in terms of ourselves. But in academia, anthropomorphization is considered a bad thing because it leads to projections and false understandings.

In software, however, anthropomorphization is a good thing! It is impossible for us to visualize the flow of electrons through circuitry millions of times a second, so instead we visualize the problem we’re solving in software to help us understand the code we’re writing.

I think of my programs as a little world and the objects as people in that world. Like people, objects have a purpose in life. They have goals and aspirations, as well as challenges. Like any good parent, I want to see my objects reach fulfillment by giving them the capabilities to achieve their purpose.

This has an impact on how I treat my objects. For example, I try to avoid getters and setters when I can because I’d rather an object change its internal state as the result of doing something useful. If I wanted your driver’s license number I wouldn’t put my hand in your pocket, remove your wallet, and take your driver’s license. This would be inappropriate—and could get me into trouble. Shouldn’t the same be true for the objects we use?
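
Here is a minimal Python sketch of the idea, using a hypothetical `Account` class. Callers ask the account to do something useful, such as deposit or withdraw, and the balance changes as a result. They never read and write the balance through a getter and setter. Python’s leading underscore only marks `_balance` as private by convention; the language does not enforce it.

```python
class Account:
    """Hypothetical account that manages its own balance."""

    def __init__(self, opening_balance=0):
        self._balance = opening_balance  # internal state; no public setter

    def deposit(self, amount):
        # State changes as a result of a meaningful operation,
        # and the object enforces its own rules while doing so.
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self._balance += amount

    def withdraw(self, amount):
        if amount <= 0:
            raise ValueError("withdrawal must be positive")
        if amount > self._balance:
            raise ValueError("insufficient funds")
        self._balance -= amount

    def can_cover(self, amount):
        # Answer a question instead of handing out the raw balance.
        return amount <= self._balance
```

Compare this with the “hand in the pocket” style, `account.set_balance(account.get_balance() - 50)`. That style moves the withdrawal rules out of the account and into every caller.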

If I want an object’s ID, rather than take it I should *request* it. This is more than just good object citizenship; it protects me as well. The way the ID is stored might change, and if my code were intimately involved in how the ID is retrieved, that change would break my code. But if returning its ID upon request is one of the object’s responsibilities, the object can change the way it stores the ID. As long as the interface for retrieving the ID doesn’t change, none of the code that depends on the object will need to change.
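
Here is a Python sketch in which hypothetical `Customer` and `CustomerV2` classes stand in for two versions of the same object. The way the ID is stored changes between versions. The `customer_id()` request that clients make does not change, so the client function keeps working untouched.

```python
class Customer:
    """Version 1: the ID is stored as a bare number."""

    def __init__(self, number):
        self._number = number

    def customer_id(self):
        return f"C-{self._number}"


class CustomerV2:
    """Version 2: the ID is now stored as a (prefix, number) pair,
    but the customer_id() request clients make is unchanged."""

    def __init__(self, number):
        self._parts = ("C", number)

    def customer_id(self):
        prefix, number = self._parts
        return f"{prefix}-{number}"


def print_label(customer):
    # Client code depends only on customer_id(), not on how the ID is stored.
    return f"Customer {customer.customer_id()}"
```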

December 9, 2015

How Open-Closed Are You?

Of the handful of programming principles that I live by, the Open-Closed Principle is perhaps the most important. I consider the Open-Closed Principle to be the central goal of all software development and what we should be striving for when doing development, beyond just getting our features to work.

I was talking with Jim Shore, co-author of the book *The Art of Agile Development*, a few years ago about the Open-Closed Principle, and he said he felt it was outdated. It is true that the Open-Closed Principle was formulated at a time when you might have to wait overnight for your program to compile, and before advanced refactoring tools or integrated development environments existed. The way we build software today is very different, but I believe the Open-Closed Principle still has tremendous application in today’s environments.

The Open-Closed Principle says that entities (methods, classes, modules) should be “open for extension but closed for modification.” What does this mean? It’s basically saying: We want to build code in such a way that it won’t need to be changed very often.

When I ask developers which they would rather do, write a new feature or integrate a feature into an existing system, they invariably respond that they’d rather write the new feature. When I ask why, they give me all sorts of reasons: it’s more fun to implement something new, the existing system may be difficult to understand or poorly written, and integration is typically where the really nasty bugs show up. In fact, it’s almost universally true that bugs are more prevalent when we modify existing code than when we write new code.

If this is the case, that it’s safer to write new code than to change existing code and that developers prefer writing new code over changing existing code, then why not set ourselves up to succeed? After all, we’re the developers and we can create code any way we want. This is exactly what the Open-Closed Principle is saying.

Of course, it would be wonderful if moving from version one of a product to version two only required us to add new code without changing any existing code, but that’s not usually possible. So the question becomes: how do we minimize changes to existing code and maximize writing new code?

Ideally, for any new feature or change of an existing feature, I would like to change code in only one place and then add new code. This is what open-closed-ness means to me. If I can add a new feature by simply adding new code and perhaps updating a factory then I consider my design to be open to that new feature.
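
Here is one way this could look in Python. It is a sketch under assumptions: the `Cipher` names, the registry dict, and the two toy ciphers are all hypothetical, and neither cipher is real encryption. Callers depend only on an abstraction. Adding a third cipher means writing a new class and adding one entry to the factory’s table, while `send()` stays closed for modification.

```python
import codecs
from abc import ABC, abstractmethod


class Cipher(ABC):
    """The abstraction the rest of the system depends on."""

    @abstractmethod
    def encode(self, text: str) -> str: ...


class Rot13(Cipher):
    # Toy transform, not real encryption.
    def encode(self, text: str) -> str:
        return codecs.encode(text, "rot_13")


class Reverse(Cipher):
    # Toy transform, not real encryption.
    def encode(self, text: str) -> str:
        return text[::-1]


# The one place that changes when a new variation is added.
_CIPHERS = {
    "rot13": Rot13,
    "reverse": Reverse,
}


def make_cipher(name: str) -> Cipher:
    return _CIPHERS[name]()


def send(message: str, cipher_name: str) -> str:
    # Closed for modification: send() never changes when a cipher is added.
    return make_cipher(cipher_name).encode(message)
```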

This is a question I ask over and over again in design reviews and code reviews: “How Open-Closed is your design?” By this I mean how open are you for extending behavior and where do you have to change code to implement new behavior? Adding new features should require minimal changes to existing code.

When we think about building software in this way, it has a huge impact on our approach. We suddenly see that there are different ways to extend behavior. For example, we may want to add a new variation to an existing system, such as a new form of encryption. We may want to change the sequence of steps in an algorithm, change the number of steps, or make any number of other kinds of changes. If we can encapsulate these kinds of changes so that we can make them later without affecting the rest of the system, then we can say our design is open to that change.
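
Some changes alter the sequence or number of steps in an algorithm. One possible encapsulation for these, sketched in Python with hypothetical step functions, is to treat the steps as data. The runner that executes them never changes. Reordering, adding, or removing a step means editing only the list that configures it.

```python
from typing import Callable, List

Step = Callable[[str], str]


def strip_whitespace(text: str) -> str:
    return text.strip()


def collapse_spaces(text: str) -> str:
    return " ".join(text.split())


def lowercase(text: str) -> str:
    return text.lower()


class Pipeline:
    """Runs a configurable sequence of steps; the runner itself never changes."""

    def __init__(self, steps: List[Step]):
        self._steps = list(steps)

    def run(self, text: str) -> str:
        for step in self._steps:
            text = step(text)
        return text


# Changing the order or number of steps means changing this list only.
normalize = Pipeline([strip_whitespace, collapse_spaces, lowercase])
```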

Following the Open-Closed Principle, we are forced to write code that’s more cohesive, less redundant, and of overall higher quality.

December 2, 2015

The Single Responsibility Principle

The Single Responsibility Principle says that any entity, whether a class, a method, or a module, should have a single responsibility.

But what is a “responsibility” and how big is it? Is a feature a responsibility or a task or what? I like how Bob Martin defines a responsibility. He says any entity such as a class should have one reason to exist and therefore one reason to change. So a responsibility is *a reason to change*, and this can get very small indeed.

The Single Responsibility Principle echoes the code quality of cohesion. Cohesive classes are about one thing. Cohesive methods are about fulfilling a functional aspect of that one thing. While I see developers violate the Single Responsibility Principle all the time, I have to say that the concept is familiar to all of us. If we can name something succinctly, then we’ve probably hit upon a single responsibility. If a class or a method is difficult to name, that indicates we’re either unclear about its purpose or it has too many responsibilities.

Why is having too many responsibilities a bad thing? The more responsibilities a class has, the more potential there is for other code to couple to it for orthogonal reasons. As soon as there are multiple reasons to access a class, it ends up being used by classes that have nothing to do with each other. When it has to change for one of them, the change often has unexpected side effects on the others. By following the Single Responsibility Principle, I have much less opportunity to make this mistake.
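
Here is a hypothetical Python sketch of responsibilities split along reasons to change. The invoice knows its own arithmetic. A formatter changes only when the layout changes, and a repository changes only when storage changes; an in-memory dict stands in for a real database. If one class did all three, code that only prints invoices would still be coupled to the persistence code.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Invoice:
    customer: str
    amounts: List[float]

    def total(self) -> float:
        # Responsibility: invoice arithmetic.
        return sum(self.amounts)


class InvoiceFormatter:
    # Responsibility: presentation. Changes only when the layout changes.
    def format(self, invoice: Invoice) -> str:
        return f"{invoice.customer}: {invoice.total():.2f}"


class InvoiceRepository:
    # Responsibility: persistence. Changes only when storage changes.
    # An in-memory dict stands in for a real database in this sketch.
    def __init__(self) -> None:
        self._rows: Dict[str, Invoice] = {}

    def save(self, invoice: Invoice) -> None:
        self._rows[invoice.customer] = invoice

    def find(self, customer: str) -> Invoice:
        return self._rows[customer]
```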

November 25, 2015

Don’t Test Private Methods

I often get the question when teaching developers test driven development (TDD), “How do you test private methods?” The short answer is you don’t, but there’s also a longer answer that Michael Feathers gave in his book, *Working Effectively with Legacy Code.*

Certainly, you *could* test private methods, and I’ve seen developers do all sorts of tricks to make that happen—wrapping the private method in a public delegate or proxy or even accessing the private method through reflection—but perhaps having to resort to these tricks is a code smell that’s telling us something’s wrong.

Feathers points out that if you can test the private method through its public interface (the public method that calls the private method), then you can consider the private method tested, and code coverage will show that the private method was covered. This is why others and I say that you don’t need to write tests for private methods when doing TDD.

But if you still feel uneasy about whether the behavior in the private method is covered by tests, then maybe something else is going on. Perhaps your private method has too much responsibility and should be broken out into its own testable class. Then the method can be declared public so it can be tested directly, and the caller can hold a private reference to an instance of that class so the public method doesn’t bloat the caller’s interface.
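
Here is a minimal Python sketch of that refactoring, with hypothetical `Order` and `PriceParser` classes. Parsing logic that once lived in a private helper on `Order` becomes the public method of its own small class, which tests can call directly. `Order` keeps only a private reference to it. Python has no enforced private members, so the leading underscore is the usual convention.

```python
class PriceParser:
    """Extracted from Order: formerly a private helper, now a small
    public class that can be tested directly."""

    def parse(self, text: str) -> int:
        # Converts strings like "$1,234.50" into integer cents.
        cleaned = text.strip().replace("$", "").replace(",", "")
        dollars, _, cents = cleaned.partition(".")
        return int(dollars) * 100 + int((cents + "00")[:2])


class Order:
    def __init__(self) -> None:
        # Private reference: PriceParser's public method does not
        # become part of Order's own interface.
        self._parser = PriceParser()
        self._total_cents = 0

    def add_item(self, price_text: str) -> None:
        self._total_cents += self._parser.parse(price_text)

    def total_cents(self) -> int:
        return self._total_cents
```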

Private methods exist to hide implementation, and we don’t want to test implementation details when we do TDD; that’s better done as part of a QA effort, if needed.

November 18, 2015

More Reasons Why Agile Works

Clearly, the success rate of Agile projects is higher on average than that of Waterfall projects. I propose that we can trace some of this success back to technical practices. In Waterfall development, there was no incentive to write good code. Developers got one chance to write a feature, and once it worked the feature was never revisited, so developers didn’t see a need to focus on code quality. They might never see the code again.

But in iterative development, developers often have to go back into code they wrote in an earlier iteration to enhance it. There’s nothing more embarrassing than criticizing poorly written code only to realize that I was the one who wrote it. Going back into our own code is a good incentive to make that code understandable, and I believe this is one of the major factors behind Agile’s higher success rate.

Software development is a technical activity, and to do it well requires technical skills. Most developers are not taught about Agile in school, so they often struggle to do Agile correctly. And some who are new to Agile see it as an undisciplined approach. Nothing could be further from the truth.

Agile presupposes disciplined, high-quality software development that focuses on maintainability. If we allow technical debt to accumulate then it will prevent us from moving fast. It’s not enough to get feedback, we have to do something about it.

If developers apply the same poor-quality practices to Agile that they do to Waterfall, then their project is at a severe disadvantage. Even if they can deliver something, it’s generally of such low quality that it costs a fortune to maintain.

Instead, we must keep code quality high as we continue to enhance our software. That’s the only way to make significant gains in Agile development. Practices that support building quality code, such as TDD and refactoring, are core practices of the most productive Agile teams.

November 11, 2015

Go Deep Rather than Wide

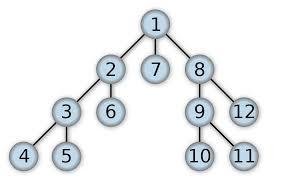

When defining, building, and testing a system, we can go deep or wide. Deep means taking a vertical slice that provides functionality across layers of a system, end to end, which enables a feature. For example, we may enable a user to log in, which would include the client-side UI all the way to the server storage. Wide means building out each layer first before wiring them together so all the services in a layer would be built out together.
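
Here is a hypothetical Python sketch that makes “deep” concrete by building the login slice end to end. It includes just enough of each layer to make the feature work: a stand-in UI function, a service, and in-memory storage. The class names are invented for illustration. The plain SHA-256 hash is for brevity only; real password storage needs a salted, deliberately slow hash.

```python
import hashlib
import hmac
from typing import Dict, Optional


def hash_password(password: str) -> str:
    # Plain SHA-256 for brevity only: real systems should use a salted,
    # deliberately slow hash such as bcrypt, scrypt, or Argon2.
    return hashlib.sha256(password.encode("utf-8")).hexdigest()


class UserStore:
    """Storage layer: an in-memory stand-in holding only what login needs."""

    def __init__(self) -> None:
        self._hashes: Dict[str, str] = {}

    def add_user(self, username: str, password: str) -> None:
        self._hashes[username] = hash_password(password)

    def hash_for(self, username: str) -> Optional[str]:
        return self._hashes.get(username)


class LoginService:
    """Service layer: the rule for a successful login."""

    def __init__(self, store: UserStore) -> None:
        self._store = store

    def login(self, username: str, password: str) -> bool:
        stored = self._store.hash_for(username)
        if stored is None:
            return False
        # Constant-time comparison of the two hex digests.
        return hmac.compare_digest(stored, hash_password(password))


def login_screen(service: LoginService, username: str, password: str) -> str:
    """UI layer: a stand-in for the client-side screen."""
    return "Welcome!" if service.login(username, password) else "Login failed"
```

Each layer holds only what this one feature needs. Anything more general can emerge later, when a second feature actually asks for it.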

Whenever possible, go deep rather than wide. When building a product, build out functionality through features rather than in layers and avoid anything that delays having running code. When you build deep you are always creating needed behavior and building it the way those behaviors will be used. This is far more efficient than creating libraries of features that can be called from different layers in a system.

I resisted this idea for a long time, convinced that careful planning and segmentation of work could help us deliver more value faster, but it turns out that in most cases building the most valuable features first is the best place to start.

Being feature-driven doesn’t preclude the emergence of an architecture, but that architecture comes about organically over time and tends to be more resilient than architectures created at the beginning of a project. Just as it’s far easier to edit written text than to start with an empty page, it’s easier to start with a working system and refactor it to improve the design and make it more supportable.

Going deep focuses development on features the way they’ll be used in production. This helps pieces of code fit together more easily and provides working features faster than building a library of services that will eventually be consumed.

November 4, 2015

Don’t Write for Reuse

Reuse. This was the promise of object-oriented programming. Back in the early 1990s we were told to move from C to C++ because it promoted reuse. That was wrong.

The way to reuse code is through delegation, by calling a piece of code. Delegation has been around since assembly language, so OO languages didn’t introduce it. Being able to keep code and data together in objects does help make code more autonomous, but most people assumed that the reuse OO promoted came through inheritance.

Inheritance is a powerful feature in OO languages, but developers have heavily misused it. Inheritance is generally not the best way to promote code reuse. I have a lot more to say about that, which will have to wait for a future blog post (or perhaps several).
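
Here is a small Python sketch of the difference, with hypothetical `Logger` and payment-processor classes. Both processors reuse `Logger`: one inherits from it, and the other delegates to an instance it is given.

```python
from typing import List


class Logger:
    def __init__(self) -> None:
        self.lines: List[str] = []

    def log(self, message: str) -> None:
        self.lines.append(message)


# Reuse through inheritance: the processor "is a" Logger and inherits
# all of Logger's interface, whether it needs it or not.
class InheritingPaymentProcessor(Logger):
    def pay(self, amount: int) -> None:
        self.log(f"paid {amount}")


# Reuse through delegation: the processor "has a" Logger and calls it.
class DelegatingPaymentProcessor:
    def __init__(self, logger: Logger) -> None:
        self._logger = logger

    def pay(self, amount: int) -> None:
        self._logger.log(f"paid {amount}")
```

The delegating version exposes only `pay()`, and it can be handed any object with a `log()` method, such as a test double. The inheriting version drags all of `Logger` along with it.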

My rule of thumb for code reuse is if I have only one client who wants to consume my service then I’ll write the service for that one client. When I get a second client with similar needs then I’ll look for ways to promote reuse. Trying to anticipate what other clients need when I only have one client is inefficient and error-prone. Instead, code for what you need today and if tomorrow you discover you can use some of the pieces you already have then look for reuse. This keeps development focused on tangible results and is, in the long run, more efficient.

October 28, 2015

What Makes a Good Test?

Many developers assume they know how to write a good test, but in my experience few developers really do. They test units of code rather than units of behavior, so their tests become implementation-dependent and break when the code is refactored. Rather than providing a safety net that catches errors when developers refactor, these tests break whenever the code changes, even if the behavior hasn’t. Instead of supporting us when refactoring, implementation-dependent tests end up just causing extra work.

A good test defines behavior without specifying implementation details. It states the result it wants, not how to get it. For example, if a document can be sorted, we may write a test for this by passing in an unsorted document and validating that the document we get back is sorted. The document doesn’t care how it’s sorted, just that it is sorted. If we later add a business rule that says, “For documents under 100 lines long use quick sort, otherwise use bubble sort,” we’d add two tests: one to validate that quick sort is selected for a document 99 lines long, and another to validate that bubble sort is selected for a document 100 lines long.

In this case, since we’re testing a boundary, we need two tests, one on each side of it. We generally pick values right at the border: in this case 99, the largest value below the boundary, and 100, the smallest value at or above it. Notice that we don’t have a test for 98 because it would behave like the test for 99. Likewise, we don’t have a test for 101, as it would behave the same as the test for 100.

If we built our sort algorithms test-first then we may have tests that reach into the implementation of “sort” but that’s not Document’s concern. From the document’s perspective it’s only concerned with whether the document is sorted after sort is invoked and from the document factory’s perspective, it’s only concerned that the right sort algorithm is invoked based on the number of lines in the document. These are separate concerns that end up being separate, implementation-independent tests.
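
Here is one way those tests might look in Python. The sketch assumes a hypothetical `Document` class and a `choose_sort` function standing in for the document factory, and the sorting functions are deliberately simple. The behavior test checks only that the document comes back sorted. The two boundary tests check only which algorithm the selection rule picks at 99 and 100 lines.

```python
from typing import Callable, List

SortFn = Callable[[List[str]], List[str]]
QUICK_SORT_LIMIT = 100  # "under 100 lines" from the hypothetical business rule


def quick_sort(lines: List[str]) -> List[str]:
    if len(lines) <= 1:
        return list(lines)
    pivot, rest = lines[0], lines[1:]
    return (quick_sort([x for x in rest if x < pivot]) + [pivot]
            + quick_sort([x for x in rest if x >= pivot]))


def bubble_sort(lines: List[str]) -> List[str]:
    result = list(lines)
    for end in range(len(result) - 1, 0, -1):
        for i in range(end):
            if result[i] > result[i + 1]:
                result[i], result[i + 1] = result[i + 1], result[i]
    return result


def choose_sort(line_count: int) -> SortFn:
    # The factory's concern: pick an algorithm based on document size.
    return quick_sort if line_count < QUICK_SORT_LIMIT else bubble_sort


class Document:
    def __init__(self, lines: List[str]) -> None:
        self._lines = list(lines)

    def sort(self) -> None:
        self._lines = choose_sort(len(self._lines))(self._lines)

    def lines(self) -> List[str]:
        return list(self._lines)


# Behavior test: the document ends up sorted; no mention of which algorithm.
def test_document_is_sorted():
    doc = Document(["c", "a", "b"])
    doc.sort()
    assert doc.lines() == ["a", "b", "c"]


# Boundary tests for the selection rule: one on each side of 100.
def test_99_lines_uses_quick_sort():
    assert choose_sort(99) is quick_sort


def test_100_lines_uses_bubble_sort():
    assert choose_sort(100) is bubble_sort
```

If either sort algorithm is later rewritten, none of these tests needs to change, because none of them depends on how the sorting is done.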

October 21, 2015

Is Priority Best for Ordering a Backlog?

When I was first introduced to Agile, I had a hard time understanding how to order the backlog. Agile says to do the most important, most valuable work first, but I used to think that was overly simplistic. If I’m building a house I shouldn’t build the master bedroom first just because that’s where I’ll be spending most of my time. I have to start with the foundation.

Complex construction of physical things often has an ideal sequence. In the case of a house, the foundation is built toward the beginning of construction and the roof is built toward the end. I reasoned that something similar must be the case for building software.

I was wrong.

What I’ve come to realize is that we want to build software features as independently from other features as possible. We can do this if we build a feature at a time. In Waterfall, we build to the release, not to the feature. When building to a release there’s nothing stopping us from creating lots of dependencies between features. While this can be seen as an efficient way to get to a release, it often binds features together in ways that make the software hard to extend in the future.

Sometimes several features rely on the same capabilities or services. In those cases it can make the most sense to build the easiest feature first, then build the harder feature that depends on it. But most of the time, delivering the highest-value features first will help your users the most.

Instead of focusing on the release, focus on the feature and build it to be independent from other features in the system. By doing this, you’ll build the most important stuff first and you’ll build it so it can be more easily extended in the future.