Tom Stafford's Blog, page 47

September 22, 2013

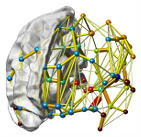

The rise of the circuit-based human

I’ve got a piece in The Observer about how we’re moving towards viewing the brain as a series of modifiable brain circuits each responsible for distinct aspects of experience and behaviour.

The ‘brain circuit’ aspect is not new but the fact that neuroscience and medicine, on the billion-dollar global level, are reorienting themselves to focus on identifying and, crucially, altering brain circuits is a significant change we’ve seen only recently.

What many people don’t realise is the extent to which direct stimulation of brain circuits by implanted electrodes is already happening.

Perhaps more surprising for some is the explosion in deep brain stimulation procedures, where electrodes are implanted in the brains of patients to alter electronically the activity in specific neural circuits. Medtronic, just one of the manufacturers of these devices, claims that its stimulators have been used in more than 100,000 patients. Most of these involve well-tested and validated treatments for Parkinson’s disease, but increasingly they are being trialled for a wider range of problems. Recent studies have examined direct brain stimulation for treating pain, epilepsy, eating disorders, addiction, controlling aggression, enhancing memory and for intervening in a range of other behavioural problems.

More on how we are increasingly focussed on hacking our circuits in the rest of the article.

Link to ‘Changing brains: why neuroscience is ending the Prozac era’.

September 21, 2013

This complex and tragic event supports my own view

As shots rang out across the courtyard, I ducked behind my desk, my adrenaline pumping. Enraged by the inexplicable violence of this complex and multi-faceted attack, I promised the public I would use this opportunity to push my own pet theory of mass shootings.

Only a few days have passed since this terrible tragedy and I want to start by paying lip service to the need for respectful remembrance and careful evidence-gathering before launching into my half-cocked ideas.

The cause was simple. It was whatever my prejudices suggested would cause a mass shooting and this is being widely ignored by the people who have the power to implement my prejudices as public policy.

I want to give you some examples of how ignoring my prejudices directly led to the mass shooting.

The gunman grew up in an American town and had a series of experiences, some common to millions of American people, some unique to him. But it wasn’t until he started to involve himself in the one thing that I particularly object to that he started on the path to mass murder.

The signs were clear to everyone but they were ignored because other people haven’t listened to the same point-of-view I expressed on the previous occasion the opportunity arose.

Research on the risk factors for mass shootings has suggested that there are a number of characteristics that have an uncertain statistical link to these tragic events but none that allow us to definitively predict a future mass shooting.

But I want to use the benefit of hindsight to underline one factor I most agree with and describe it as if it can be clearly used to prevent future incidents.

I am going to try and convince you of this in two ways. I am going to selectively discuss research which supports my position and I’m going to quote an expert to demonstrate that someone with a respected public position agrees with me.

Several scientific papers in a complex and unsettled debate about this topic could be taken to support my position. A government report also has a particular statistic which I like to quote.

Highlighting these findings may make it seem like my position is the most probable explanation despite no clear overall conclusion but a single quote from one of the experts will seal the issue in my favour.

“Mass shootings” writes forensic psychiatrist Anand Pandya, an Associate Clinical Professor in the Department of Psychiatry and Behavioral Neurosciences at the UCLA School of Medicine, Los Angeles, “have repeatedly led to political discourse”. But I take from his work that my own ideas, to quote Professor Pandya, “may be useful after future gun violence”.

Be warned. People who don’t share my biases are pushing their own evidence-free theories in the media, but without hesitation, I can definitely say they are wrong and, moreover, biased.

It is clear that the main cause of this shooting was the thing I disliked before the mass shooting happened. I want to disingenuously imply that if my ideas were more widely accepted, this tragedy could have been averted.

Do we want more young people to die because other people don’t agree with me?

UPDATE: Due to the huge negative reaction this article has received, I would like to make some minor concession to my critics while accusing them of dishonesty and implying that they are to blame for innocent deaths. Clearly, we should be united in the face of such terrible events and I am going to appeal to your emotions to emphasise that not standing behind my ideas suggests that you are against us as a country and a community.

September 19, 2013

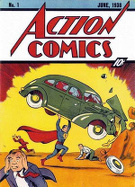

A comic repeat with video games and violence

An article in the Guardian Headquarters blog discusses the not very clear evidence for the link between computer games and violence and makes a comparison to the panic over ‘horror comics’ in the 1950s.

The Fifties campaign against comics was driven by a psychiatrist called Fredric Wertham and his book Seduction of the Innocent.

We’ve discussed before on Mind Hacks how Wertham has been misunderstood. He wasn’t out to ban comics, just keep adult themes out of kids magazines.

However, his ideas of what ‘adult themes’ might be were certainly pretty odd. This is Wertham’s testimony to a hearing in the US Senate.

I would like to point out to you one other crime comic book which we have found to be particularly injurious to the ethical development of children and those are the Superman comic books. They arose in children’s fantasies of sadistic joy in seeing other people punished over and over again, while you yourself remain immune. We have called it the “Superman complex.” In these comic books, the crime is always real and Superman’s triumph over [evil] is unreal. Moreover, these books like any other, teach complete contempt of the police…

I may say here on this subject there is practically no controversy… as long as the crime comic books industry exists in its present form, there are no secure homes. …crime comic books, as I define them, are the overwhelming majority of all comic books… There is an endless stream of brutality… I can only say that, in my opinion, this is a public-health problem.

The ‘Superman causes sadism’ part aside, this is a remarkably similar argument to the one used about violent video games. It’s not a matter of taste or decency, it’s a public health problem.

In fact, an article in the Mayo Clinic Proceedings had a neat comparison between arguments about 1950s comic books and modern day video games which turn out to be very similar.

Moral of the story: wait for sixty years when the debate about violent holograms kicks off and they’ll leave you to play your video games in peace.

Link to ‘What is the link between violent video games and aggression?’

Link to article on video games and comic panics in Mayo Clinic Proceedings.

September 14, 2013

A coming revolution walks a fine line

The Chronicle of Higher Education has an excellent in-depth article on the most likely candidate for a revolution in mental health research: the National Institute of Mental Health’s RDoC or Research Domain Criteria project.

The article is probably the best description of the project this side of the scientific literature and considering that the RDoC is likely to fundamentally change how mental illness is understood, and eventually, treated, it is essential reading.

It is also interesting for the fact that the leaders of the RDoC project continue to hammer the reputation of the long-standing psychiatric diagnosis manual – the DSM.

First though, it’s worth knowing a little about what the RDoC actually is. Essentially, it’s a catalogue of well-defined neural circuits that are associated with specific cognitive functions or emotional responses.

If you look at the RDoC matrix you can see how everything has been divided. For example, stress regulation is associated with the raphe nuclei circuits and serotonin system.

The idea is that mental illnesses would be better understood as dysfunctions in various of these core components of behaviour rather than the traditional collections of symptoms that have been rather haphazardly formed into diagnoses.

Conceptually, it’s like a sort of neuropsychological Lego that should allow researchers to focus on agreed components of the brain and see how they map on to genes, behaviour, experience and so on.

It’s meant to be updated over time so ‘bricks’ can be modified or added as they become confirmed. An advantage is that it may allow a more accessible structure to understanding the brain for those not trained in neuroscience but there is a danger that it will over-simplify the components of experience and behaviour in some people’s minds (and, of course, research).

The RDoC has been around for several years but it recently hit the headlines when NIMH director Thomas Insel wrote a blog post promoting it two weeks before the launch of the American Psychiatric Association’s diagnostic manual, the DSM-5, saying that the DSM ‘lacks validity’ and that “patients with mental disorders deserve better”.

After a media storm, Insel wrote another piece jointly with the president of the American Psychiatric Association that involved some furious backpedalling where the DSM was described as a “key resource for delivering the best available care” and “complementary” to the RDoC approach.

But in the Chronicle article, head of the RDoC project, Bruce Cuthbert, makes no bones about the DSM’s faults:

“If you think about it the way I think about it, actually the DSM is sloppy in both counts. There’s no particular biological test in it, but the psychology is also very weak psychology. It’s folk psychology without any quantification involved.”

The DSM will not, of course, suddenly disappear. “As flawed as the DSM is, we have no substitute for the clinical realm for insurance reimbursement,” ex-NIMH Director Steven Hyman says in the article.

It’s worth noting that this is not actually true. In the US, diagnosis is usually made according to DSM definitions but insurance reimbursement is charged by codes from the ICD-10 – the free diagnostic manual from the World Health Organisation.

But the fact that the most senior psychiatric researchers in the US are now openly and persistently highlighting that the DSM is not fit for the purpose of advancing science and psychiatric treatment is a damning condemnation of the manual – no matter how they try and sugar-coat it.

The fact is, the NIMH have to walk a fine line. They need to condemn the DSM for being a mess while trying not to shatter confidence in a system used to treat millions of patients every year.

Best of luck with that.

Link to Chronicle article ‘A Revolution in Mental Health’.

September 13, 2013

Drug addiction: The complex truth

We’re told studies have proven that drugs like heroin and cocaine instantly hook a user. But it isn’t that simple – little-known experiments over 30 years ago tell a very different tale.

Drugs are scary. The words “heroin” and “cocaine” make people flinch. It’s not just the associations with crime and harmful health effects, but also the notion that these substances can undermine the identities of those who take them. One try, we’re told, is enough to get us hooked. This, it would seem, is confirmed by animal experiments.

Many studies have shown rats and monkeys will neglect food and drink in favour of pressing levers to obtain morphine (the lab form of heroin). With the right experimental set up, some rats will self-administer drugs until they die. At first glance it looks like a simple case of the laboratory animals losing control of their actions to the drugs they need. It’s easy to see in this a frightening scientific fable about the power of these drugs to rob us of our free will.

But there is more to the real scientific story, even if it isn’t widely talked about. The results of a set of little-known experiments carried out more than 30 years ago paint a very different picture, and illustrate how easy it is for neuroscience to be twisted to pander to popular anxieties. The vital missing evidence is a series of studies carried out in the late 1970s in what has become known as “Rat Park”. Canadian psychologist Bruce Alexander, at Simon Fraser University in British Columbia, suspected that the preference of rats for morphine over water in previous experiments might be affected by their housing conditions.

To test his hypothesis he built an enclosure measuring 95 square feet (8.8 square metres) for a colony of rats of both sexes. Not only was this around 200 times the area of standard rodent cages, but Rat Park had decorated walls, running wheels and nesting areas. Inhabitants had access to a plentiful supply of food and, perhaps most importantly, the rats lived in it together.

Rats are smart, social creatures. Living in a small cage on their own is a form of sensory deprivation. Rat Park was what neuroscientists would call an enriched environment, or – if you prefer to look at it this way – a non-deprived one. In Alexander’s tests, rats reared in cages drank as much as 20 times more morphine than those brought up in Rat Park.

Inhabitants of Rat Park could be induced to drink more of the morphine if it was mixed with sugar, but a control experiment suggested that this was because they liked the sugar, rather than because the sugar allowed them to ignore the bitter taste of the morphine long enough to get addicted. When naloxone, which blocks the effects of morphine, was added to the morphine-sugar mix, the rats’ consumption didn’t drop. In fact, their consumption increased, suggesting they were actively trying to avoid the effects of morphine, but would put up with it in order to get sugar.

‘Woefully incomplete’

The results are catastrophic for the simplistic idea that one use of a drug inevitably hooks the user by rewiring their brain. When Alexander’s rats were given something better to do than sit in a bare cage they turned their noses up at morphine because they preferred playing with their friends and exploring their surroundings to getting high.

Further support for his emphasis on living conditions came from another set of tests his team carried out in which rats brought up in ordinary cages were forced to consume morphine for 57 days in a row. If anything should create the conditions for chemical rewiring of their brains, this should be it. But once these rats were moved to Rat Park they chose water over morphine when given the choice, although they did exhibit some minor withdrawal symptoms.

You can read more about Rat Park in the original scientific report. A good summary is in this comic by Stuart McMillen. The results aren’t widely cited in the scientific literature, and the studies were discontinued after a few years because they couldn’t attract funding. There have been criticisms of the study’s design and the few attempts that have been made to replicate the results have been mixed.

Nonetheless the research does demonstrate that the standard “exposure model” of addiction is woefully incomplete. It takes far more than the simple experience of a drug – even drugs as powerful as cocaine and heroin – to make you an addict. The alternatives you have to drug use, which will be influenced by your social and physical environment, play important roles as well as the brute pleasure delivered via the chemical assault on your reward circuits.

For a psychologist like me it suggests that even addictions can be thought of using the same theories we use to think about other choices; there isn’t a special exception for drug-related choices. Rat Park also suggests that when stories about the effects of drugs on the brain are promoted to the neglect of the discussion of the personal and social contexts of addiction, science is servicing our collective anxieties rather than informing us.

This is my BBC Future article from Tuesday. The original is here. The Foddy article I link to in the last paragraph is great: read that. As is Stuart’s comic.

September 6, 2013

A furious infection but a fake fear of water

RadioLab has an excellent short episode on one of the most morbidly fascinating of brain infections – rabies.

Rabies is a virus that can very quickly infect the brain. When it does, it causes typical symptoms of encephalitis (brain inflammation) – headache, sore neck, fever, delirium and breathing problems – and it is almost always fatal.

It also has some curious behavioural effects. It can make people hyper-reactive and can lead to uncontrolled muscle spasms due to its effect on the action coordination systems in the brain. With the pain and distress, some people can become aggressive.

This is known as the ‘furious’ stage and when we describe someone as ‘rabid with anger’ it is a metaphor drawn from exactly this.

Rabies can also cause what is misleadingly called ‘hydrophobia’ or fear of water. You can see this in various videos that have been uploaded to YouTube that show rabies-infected patients trying to swallow and reacting quite badly.

But rabies doesn’t actually instil a fear of water in the infected person but instead causes dysphagia – difficulty with swallowing – due to the same disruption to the brain’s action control systems.

We tend to take swallowing for granted but it is actually one of our most complex actions and requires about 50 muscles to complete successfully.

Problems swallowing are not uncommon after brain injury (particularly after stroke) and speech and language therapists can spend a lot of their time on neurorehabilitation wards training people to reuse and re-coordinate their swallow to stop them choking on food.

As we know from waterboarding, choking can induce panic, and it’s not so much that rabies creates a fear of water, but a difficulty swallowing and hence experiences of choking. This makes the person want to avoid trying to swallow liquids.

Bathing, for example, wouldn’t trigger this aversion and that’s why rabies doesn’t really cause a ‘fear of water’ but more a ‘fear of choking on liquids due to impaired swallowing’.

The RadioLab episode discusses the case that launched the controversial Milwaukee protocol – a technique for treating rabies that involves putting you into a drug-induced coma to protect your brain until your body has produced the anti-rabies antibodies.

It’s a fascinating and compelling episode so well worth checking out.

Link to RadioLab episode ‘Rodney Versus Death’.

September 2, 2013

Why the other queue always seems to move faster than yours

Whether it is supermarkets or traffic, there are two possible explanations for why you feel the world is against you, explains Tom Stafford.

Sometimes I feel like the whole world is against me. The other lanes of traffic always move faster than mine. The same goes for the supermarket queues. While I’m at it, why does it always rain on those occasions I don’t carry an umbrella, and why do wasps always want to eat my sandwiches at a picnic and not other people’s?

It feels like there are only two reasonable explanations. Either the universe itself has a vendetta against me, or some kind of psychological bias is creating a powerful – but mistaken – impression that I get more bad luck than I should. I know this second option sounds crazy, but let’s just explore this for a moment before we get back to the universe-victim theory.

My impressions of victimisation are based on judgements of probability. Either I am making a judgement of causality (forgetting an umbrella makes it rain) or a judgement of association (wasps prefer the taste of my sandwiches to other people’s sandwiches). Fortunately, psychologists know a lot about how we form impressions of causality and association, and it isn’t all good news.

Our ability to think about causes and associations is fundamentally important, and always has been for our evolutionary ancestors – we needed to know if a particular berry makes us sick, or if a particular cloud pattern predicts bad weather. So it isn’t surprising that we automatically make judgements of this kind. We don’t have to mentally count events, tally correlations and systematically discount alternative explanations. We have strong intuitions about what things go together, intuitions that just spring to mind, often after very little experience. This is good for making decisions in a world where you often don’t have enough time to think before you act, but with the side-effect that these intuitions contain some predictable errors.

One such error is what’s called “illusory correlation”, a phenomenon whereby two things that are individually salient seem to be associated when they are not. In a classic experiment volunteers were asked to look through psychiatrists’ fabricated case reports of patients who had responded to the Rorschach ink blot test. Some of the case reports noted that the patients were homosexual, and some noted that they saw things such as women’s clothes, or buttocks in the ink blots. The case reports had been prepared so that there was no reliable association between the patient notes and the ink blot responses, but experiment participants – whether trained or untrained in psychiatry – reported strong (but incorrect) associations between some ink blot signs and patient homosexuality.

One explanation is that things that are relatively uncommon, such as homosexuality in this case, and the ink blot responses which contain mention of women’s clothes, are more vivid (because of their rarity). This, and an effect of existing stereotypes, creates a mistaken impression that the two things are associated when they are not. This is a side effect of an intuitive mental machinery for reasoning about the world. Most of the time it is quick and delivers reliable answers – but it seems to be susceptible to error when dealing with rare but vivid events, particularly where preconceived biases operate. Associating bad traffic behaviour with ethnic minority drivers, or cyclists, is another case where people report correlations that just aren’t there. Both the minority (either an ethnic minority, or the cyclists) and bad behaviour stand out. Our quick-but-dirty inferential machinery leaps to the conclusion that the events are commonly associated, when they aren’t.
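To make "no reliable association" concrete, here is a minimal sketch in Python. It uses the phi coefficient, a standard measure of association for a two-by-two table, which is my choice for illustration and not the analysis used in the original experiment. The counts are invented: a rare trait noted in 10 of 100 case reports and a rare ink blot sign in 20 of 100, arranged so that the two are statistically unrelated.

```python
import math


def phi_coefficient(a: int, b: int, c: int, d: int) -> float:
    """Phi coefficient for the 2x2 table [[a, b], [c, d]].

    Rows: trait noted / not noted. Columns: sign present / absent.
    Returns 0 when there is no association and 1 for perfect association.
    """
    numerator = a * d - b * c
    denominator = math.sqrt((a + b) * (c + d) * (a + c) * (b + d))
    return numerator / denominator


# Invented counts, not data from the study:
# trait noted:     2 reports with the sign,  8 without
# trait not noted: 18 reports with the sign, 72 without
phi = phi_coefficient(2, 8, 18, 72)
print(phi)  # 0.0: the sign is equally common (20%) with or without the trait
```

Only two reports in this made-up table pair the rare trait with the rare sign, yet those are exactly the cases likely to stick in memory, which is how an intuition of association can form where the numbers show none.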

So here we have a mechanism which might explain my queuing woes. The other lanes or queues moving faster is one salient event, and my intuition wrongly associates it with the most salient thing in my environment – me. What, after all, is more important to my world than me? Which brings me back to the universe-victim theory. When my lane is moving along I’m focusing on where I’m going, ignoring the traffic I’m overtaking. When my lane is stuck I’m thinking about me and my hard luck, looking at the other lane. No wonder the association between me and being overtaken sticks in memory more.

This distorting influence of memory on our judgements lies behind a good chunk of my feelings of victimisation. In some situations there is a real bias. You really do spend more time being overtaken in traffic than you do overtaking, for example, because the overtaking happens faster. And the smoke really does tend to follow you around the campfire, because wherever you sit creates a warm up-draught that the smoke fills. But on top of all of these is a mind that over-exaggerates our own importance, giving each of us the false impression that we are more important in how events work out than we really are.
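The traffic case can be checked with a little arithmetic. Here is a minimal sketch in Python, assuming a simplified road where your lane takes turns moving fast and slow over equal stretches, and the other lane is fast whenever yours is slow. The speeds and stretch length are made-up numbers, not taken from any study.

```python
def time_share_being_overtaken(fast_kmh: float, slow_kmh: float,
                               stretch_km: float = 1.0) -> float:
    """Fraction of journey time spent in the slow phase of your lane.

    Hypothetical model: equal distance is covered in the fast and slow
    phases, so the slow phase simply takes longer to get through.
    """
    time_slow = stretch_km / slow_kmh  # hours spent crawling, being overtaken
    time_fast = stretch_km / fast_kmh  # hours spent moving, overtaking others
    return time_slow / (time_slow + time_fast)


share = time_share_being_overtaken(fast_kmh=60.0, slow_kmh=20.0)
print(f"{share:.0%} of the time is spent being overtaken")  # 75%
```

Both lanes cover the same ground at the same average speed in this sketch, but each driver spends three quarters of the journey watching the other lane pull ahead.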

This is my BBC Future post from last Tuesday. The original is here.

August 29, 2013

Peter Huttenlocher has left the building

The New York Times has an obituary for child neurologist Peter Huttenlocher, who surprised everyone by finding that the human brain loses connections as part of growing into adulthood.

Huttenlocher counted synapses – the connections between neurons – and as a paediatric neurologist was particularly interested in how the number of synapses changed as we grow from children to adults.

Before Huttenlocher’s work we tended to think that our brains just got more connected as we got older, but what he showed was that we hit peak connectivity in the first year of life and much of brain development is actually removing the unneeded connections.

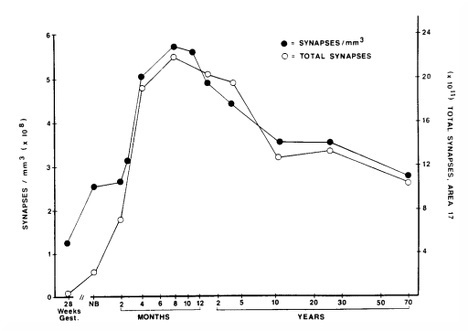

This is known as synaptic pruning and it was demonstrated with this graph from a classic 1990 paper.

I love this graph for a couple of reasons. Firstly, it’s a bit wonky. It was hand-drawn and whenever it is reproduced, as it has been in many textbooks, it’s always a bit off-centre.

Secondly, it’s crystal clear. It’s a graph showing the density of synaptic connections in the visual cortex of the human brain and you can see it’s rapidly downhill from the first year of life until the late teens where things start to even out.

This is a good thing as the infant brain starts over-connected but loses anything that isn’t needed as we learn which skills are most important, and we are left with only the most efficient neural connections, through the experience of growing up.

One of Huttenlocher’s discoveries was that this process of synaptic pruning may go wrong in people who have neurodevelopmental disorders.

Link to NYT obituary for Peter Huttenlocher.

August 27, 2013

Are classical music competitions judged on looks?

Looking at the evidence behind a recent news story

The headlines

The Los Angeles Times: People trust eyes – not ears – when judging musicians

Classic FM: Classical singers judged by actions not voice

Nature: Musicians’ appearances matter more than their sound

The story

If you wanted to pick out the musician who won a prestigious classical music competition would you listen to a clip of them playing or watch a silent video of them performing the same piece of music?

Most of us would go for an audio clip rather than video, and we’d be wrong. In a series of experiments, Chia-Jung Tsay from University College London showed that both novices and expert musicians were better able to pick out the winners when they watched rather than listened to them.

The moral, we’re told, is that how you look is more important than how you sound, even in elite classical music competitions.

What they actually did

Dr Tsay, herself a classically trained musician, used footage from real international classical music competitions. She took the top three finalists and asked volunteers to pick out the real winner – with a cash incentive – by looking at video without sound, sound without video, or both.

Over a series of experiments she showed that people think that audio will be more informative than video, but actually people are able to pick the real winner when watching video clips. But they aren’t able to do this when listening to audio clips (these test subjects only perform at the level of chance). The shocking thing is that when people get sound and video clips, which notionally contain more information, they still perform at chance. The implication being that they would do better if they could block their ears and ignore the sound.

Follow up experiments suggested that people’s ability to pick winners depended on their being able to pick out things associated with “stage presence”. A video reduced to line drawings, designed to remove details and emphasise motion, still allowed people to pick out winners at an above chance rate. Another experiment showed that asking people to identify the “most confident, creative, involved, motivated, passionate, and unique performer” tallied with the real winners.

How plausible is this?

We’re a visual species. How things look really matters, as everyone who has dressed up for an interview knows. It’s also not uncommon for us to be misled into believing that how something looks isn’t as important as it really is (here’s an example: judging wine by the labels rather than the taste).

What is less plausible is the spin put on the story by the headlines. We all know that looks are important, but how can they really be more important than sound in a classical music competition? The most important thing really is the sound, but this research resonates with a popular cliché about how irrational we are.

Tom’s take

The secret to why these experiments give the results they do is in this detail: the judgement that people were asked to make was between the top three finalists in prestigious international competitions. In other words, each of these musicians is among the best in the world at what they do. The best of the best even.

In all probability there is a minute difference between their performances on any scale of quality. The paper itself admits that the judges themselves often disagree about who the winner is in these competitions.

The experimental participants were not scored according to some abstract ability to measure playing quality, but according to how well they were able to match real-world competition outcome.

The experiments show that matching the judges in these competitions can be done based on sight but not on sound. This isn’t because sight reveals playing quality, but because sight gives the experimental participants similar biases to the real judges. The real expert judges are biased by how the performers look – and why not, since there is probably so little to choose between them in terms of how they sound?

This is why the conclusion, spelt out in the original paper, is profoundly misleading: “The findings demonstrate that people actually depend primarily on visual information when making judgements about music performance”. It remains completely plausible that most of us, most of the time, judge music on how it sounds, just like we assumed before this research came out.

In ambiguous cases we might rely on looks over sounds – even the experts among us. This is a blow to musicians who thought it was always just about sound – but isn’t a revelation to the rest of us who knew that when choices are hard, whether during the job interview or the music competition, looks matter.

Read more

The original paper: Sight over sound in the judgment of music performance. Tsay, C-J (2013), Proceedings of the National Academy of Sciences

Special mention for the BBC and reporter Melissa Hogenboom who were the only people, as far as I know, who managed to report this story with an accurate headline: Sight dominates sound in music competition judging

The interaction between the senses is an active and fascinating research area. Read more from the Crossmodal Research Laboratory at the University of Oxford and the Cross-modal perception of music network at the University of Sheffield

This article was originally published at The Conversation.

Read the original article.

August 26, 2013

A detangler for the net

I’ve just finished reading the new book Untangling the Web by social psychologist Aleks Krotoski. It turns out to be one of the best discussions I’ve yet read on how the fabric of society is meshing with the internet.

Regular readers know I’ve been a massive fan of the Digital Human, the BBC Radio 4 series that Krotoski writes and presents, that covers similar territory.

Untangling the Web takes a slightly more analytical angle, focusing more on scientific studies of online social interaction and theories of online psychology, but it is all the richer for it.

It covers almost the entire range of psychological debates: friendships, how kids are using the net, debates over whether the net can ‘damage the brain’, online remembrance and mourning, propaganda and persuasion, sex, dating and politics. You get the idea. It’s impressively comprehensive.

It’s not an academic book but, unsurprisingly, given Krotoski’s background as both a social psychologist and a tech journalist, is very well informed.

I picked up a couple of minor errors. It suggests internet addiction was recognised as a diagnosis in the DSM-IV, when the nearest thing to an internet addiction diagnosis was only discussed (and eventually relegated to the Appendix) in the DSM-5.

It also mentions me briefly, in the discussion of public anxieties that the internet could ‘rewire the brain,’ but suggests I’m based at University College London (apparently a college to the north of the River Thames) when really I’m from King’s College London.

But that was about the best I could do when trying to find fault with the book. It’s a hugely enjoyable, balanced treatment of an often inflammatory subject, that may well be one of the best guides to how we relate over the net that you’re likely to read for a long time.

Link to more details about Untangling the Web.
