Daniel M. Russell's Blog, page 4

May 8, 2025

Slightly delayed...

I should have known this would happen...

... I took the silver flying bird to Copenhagen today, instead of writing the answer to last week's SRS Challenge. I was looking forward to spending some quality time thinking about AI summaries... but it's too big a topic to knock out in an hour.

SO... in order to create the bespoke, hand-crafted, lovingly artisanal SRS answer that you expect, I decided to push it out a week to give me a bit of extra time.

BUT... if you find yourself in Copenhagen tomorrow (Friday, May 9, 2025), I'll be speaking at the Pioneer Centre for Artificial Intelligence Research at 14:00. Here's the link for you, just in case you're around. (And if you DO make it to my talk, please stick around afterwards and let me know you're a SearchResearcher. The passphrase will be "I verify all my searches.")

Title: People, AI, and online research

Abstract: Given all of the press that LLMs have garnered, it’s worthwhile asking if they’re changing the ways people find information. Are LLMs pulling traffic away from the search engines? Just as importantly, how do regular people think about the quality of information they get from their favorite AI systems? One key lesson of my research into the UX of AI systems over the past 30 years is that people don’t really understand what AI is, how it works, or what it means for them. I’ll review some successes and failures of earlier research approaches, what we should learn from these decades of practice at the boundary between human experience and the use of intelligent systems, and where AI systems will change knowledge practices in the future.

Keep searching!

May 1, 2025

SearchResearch (5/1/25): How good are those AI summaries anyway? Let us know!

I don't know about you...

P/C [slide showing someone using AI for summarization] Gemini (May 1, 2025)

... but while the entire world seems to be having ecstatic paroxysms about the incredible capabilities of generative AI, my experience has been a little less wonderful.

On more than one occasion I've spent hours trying to track down a factual claim, a citation, or a reference that some AI system made, only to find out that it's bogus or purely hallucinatory.

That's my experience. Don't get me wrong--there's a lot of good stuff in the AI that's deployed, but I find myself constantly having to check the output of an AI to make sure that the bad stuff doesn't overwhelm the good stuff.

For this week, I'd love to hear your stories about using AI and then having to fact-check it, only to discover that things went way off.

Let's ask a very specific Challenge for this week:

1. How has your experience of using AI for summarization worked out?

I really want to hear your stories. Let me give you one example.

I asked Gemini 2.0 (Flash) to [summarize this article and give citations to the claims that you make in the summary].

It seemed to do a good job, but one of the citations was to an article by a famous author in a well-known publication, on a date that was plausible, with a title that is very consistent with everything he's written over the past few years.

But try as I might, I could NOT find that damn article. I eventually went to the journal's searchable archive website and found that no article by that name was ever published. The whole thing wasted a full hour of my time.

I definitely do NOT want to be in the situation of the lawyers for Mike Lindell who submitted legal briefs with LLM-hallucinated citations.

So I want to hear your stories about using AI to summarize other texts. How's that working out for you?

Share your AI summarization stories in the comments below so we can all learn from your work.

What's your critical and careful analysis of the quality of AI as a text summarizing tool?

True stories only, please. And definitely nothing written by AIs.

I'll summarize your stories--lovingly, by my human eyes, hands, and brains--and let you know what the SRS crew has to say about this.

Keep searching.

April 28, 2025

A lemon in lemon - update

In our previous SRS episode....

P/C bungaboi89, from his post on r/mildlyinteresting

... we discussed the origins of the "lemon in lemon" and when it was first written about in the 18th century.

I found this great pic on Reddit and thought I'd share it with you here. Bungaboi89 gave me permission to use this excellent image of exactly what we were talking about.

And I thought you might enjoy this. I think I need a glass of lemonade (a double, please) around now.

Keep searching.

April 24, 2025

Answer: What's a lemon in a lemon called?

A lemon in a lemon?

Excerpt of still life by Jan Davidsz de Heem, "Breakfast with Wine Glass and Goudse Pipe" P/C source Wikimedia.

Curiously, I've actually found a perfectly formed lemon (peel and all) growing inside of another lemon. That was weird, so I looked around a bit and discovered a world of remarkable things.

Obviously, this led to this week's SearchResearch Challenge:

1. What do you call this strange lemon inside of another lemon?

I'll start by telling you what doesn't work: almost any query with just "lemon" in it. There are so many articles about lemons--especially recipes--that the term "lemon" is overwhelmed by other content.

BUT, [lemon inside lemon] does lead you to a fun Reddit post about exactly this (in the subreddit /mildlyinteresting). That post has a perfect pic of the lemon-in-lemon, exactly like the one I saw.

Otherwise, this kind of search doesn't work well. Or at least, there's not a lot of information out there that's about "lemons in lemons." So we have to shift our strategy.

I realized this only after trying a lot of variations (e.g., [twin of lemon] [double lemon] [lemon growing inside of lemon]). Despite my being very clever, all I found were reports of lemon seeds sprouting inside of a lemon fruit. I've seen a lot of those (I grew up in Los Angeles where every other home has a lemon tree), but it's not quite the same thing. They look like this; perhaps you've seen one as well:

That's not it, though... We're looking for a fully-formed lemon fruit on the inside of the lemon.

Then I saw the comment on this blog by Harry8Dresden who said that he just copied much of the Challenge into ChatGPT 4o. I tried this with ChatGPT 4o, Claude 3.7, and Gemini 2.0 Flash. (If you try other AIs, let us know in the comments.) I just copy/pasted this much of the Challenge statement:

[ This happened to me once... I found a fully formed, perfectly intact lemon completely enclosed within an outer lemon shell. Silly me, I didn't take a picture, but imagine a double-skinned lemon and you'll have the right idea.

So, as you'd imagine, I did a little bit of research and found that there is a very specific name for this kind of strange double lemon fruit AND learned that it was well known in the 18th century! Obviously, this has to lead to a SearchResearch Challenge: 1. What do you call this strange lemon inside of another lemon? ]

Here, in summary, is what I got back from each:

ChatGPT: calls this lemon-in-lemon an "inclusion" and points to the French naturalist Antoine-Joseph Dezallier d'Argenville, who (it says) included detailed descriptions and illustrations of citrus fruits exhibiting unusual growth patterns in his 18th-century botanical works.

That may be, but the cited work actually does NOT contain any of d'Argenville's citrus writings! Hmm. d'Argenville wrote extensively about gardens, but NOT about citrus, nor about fruit enclosed within fruit. Correction: he had one brief mention about the origin of Bergamot oranges, but nothing about the oddities of lemon growth.

(More interesting and odder: I actually ran this query on ChatGPT twice by accident, and got different answers each time. The first time ChatGPT called it a "supernumerary fruit," which is close, but not quite right. A "supernumerary" is just an extra fruit in an unusual place--not an enclosed fruit.) In both cases, ChatGPT didn't help much.

Claude: Says...well... funny you should ask. Claude initially said that this was "endocarp polyembryony" but then changed its mind to say that this unusual growth is called a "citrus within citrus" or more specifically an "endocarp proliferation." (Good thing Claude changed its mind: "polyembryony" refers to multiple shoots from the same seed--NOT what we're looking for.)

Except... "citrus within citrus" usually refers to a particular type of citrus being (taxonomically) a child of another citrus variety--example: the Meyer lemon results from a cross of a citron and a pomelo. Claude interprets this phrase incorrectly.

And "endocarp proliferation" is the increase in endocarp tissue, the innermost layer of the fruit wall, particularly as it develops and matures.

Claude went on to say that:

In historical botanical literature, particularly from the 18th century, this was referred to as "Fructus in fructo" (fruit within fruit) or "proliferous citrus."

Meanwhile, "fructus in fructo" translates to "fruit within fruit" and is used to describe the usufruct legal concept, which is a right to enjoy the benefits of property owned by another. It specifically refers to the right to collect the "fruit" (profits) from a property. In this context, "fructus" refers to the product of the property, like crops or animals, or the income generated from it. It has nothing to do with one "fructus" being contained within another.

Finally, "proliferous citrus" refers to additional fruit that forms on the side of the base fruit. Many citrus do this, but it's not inside the original fruit--it's not what we're looking for.

While I learned a lot from Claude, it was mostly off-topic learning. *I* appreciate the excursions, but most people probably won't. Not a great reply.

Gemini: Tells us that some 18th century texts "...describes citrum in citro, noting it as a "strange monstrosity" where "one lemon [grows] from the centre of another."

This Latin phrase, translating to "citrus in citrus" or, more specifically in this context, "lemon in lemon," provides a historical designation for the observed anomaly.

Gemini also tells us that:

"In modern botanical terminology, the phenomenon described by the user aligns closely with the concept of internal proliferation or carpelloid formation. Several of the provided research snippets discuss this occurrence in other fruits, particularly bell peppers."

A quick regular Google search confirms that these terms (internal proliferation and carpelloid formation) actually DO refer to the development of an abnormal ovule inside a fruit, resulting in a miniature version of the parent fruit rather than seeds. This phenomenon often occurs in peppers (Capsicum annuum).

P/C Wikimedia A pepper inside of a pepper, just like lemons.


Gemini goes on to tell us that:

"Johann Christoph Volkamer's comprehensive work, Nürnbergische Hesperides, published in the early 18th century, aimed to document the various citrus fruits known at the time, including their cultivation and characteristics."

So I looked up this book and found you can download it from Heidelberg University.

The book bills itself as "Nürnbergische Hesperides, Oder Gründliche Beschreibung Der Edlen Citronat- Citronen- und Pomeranzen-Früchte: Wie solche in selbiger und benachbarten Gegend/ recht mögen eingesetzt/ gewartet/ erhalten und fortgebracht werden" (Or..."Nuremberg Hesperides, or a thorough description of the noble citron, lemon, and bitter orange fruits: how such in the same and neighboring area/ may be properly used, maintained, preserved, and transported.")

If you read through the book, you'll eventually find this page:

Page 173 of Nürnbergische Hesperides. P/C Google Books

Which has this accompanying figure:

The figure is not 100% clear, but the text is very straightforward. If you use Google Translate (in camera mode) to translate the text, you'll find:

"When I cut it open, I found two small fruits inside, which had grown a little at the top, but could still be lifted out, and were surrounded by a yellow shell, but inside completely white and thick, without a single mark. When I cut off a space among similar small fruits, and for the third time these small fruits, I found hidden inside reach."

Now we know: the lemon-in-lemon effect has been known about for a long time, and it's got a very specific modern botanical term: internal proliferation or carpelloid formation. In the 18th century, it was known as citrum in citro. (Yes, I did a few more confirmation searches and found other books that used this phrase.)

2. What famous 18th century explorer knew about this strange lemon?

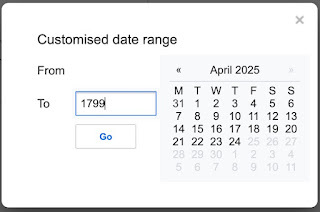

Finding this was a bit tricky. For many historical searches like this I turn to Google Books, limiting my search to books published in the 18th century. Choose the tools option and limit your search like this:

And then, the guessing game was on: How would an 18th century explorer talk about something like this? (And would it be in English, Spanish, Portuguese, or Chinese? There were a LOT of explorers at the time.)

I started with queries in English, hoping against hope that I'd find something, maybe something in translation.

As before, I tried a lot of queries: [lemon in lemon] [lemon within lemon] [lemon surrounded by lemon] [citrum in citro] etc etc.

On the 7th or 8th attempt, I struck gold with [lemon enclosed within lemon].
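This kind of trial-and-error can be made a little more systematic. What follows is only a rough Python sketch: the helper names (query_variants, books_search_url) are made up for this illustration, and the tbm=bks URL parameter for Google Books results is an assumption based on how Google search URLs commonly look, not a documented API. The date limit still has to be set by hand under Tools.

```python
from urllib.parse import urlencode


def query_variants(thing, relations):
    """Build phrasings such as 'lemon enclosed within lemon', one per relation."""
    return [f"{thing} {relation} {thing}" for relation in relations]


def books_search_url(query):
    """Return a Google search URL for the query.

    Assumption: tbm=bks switches the results to Google Books.
    """
    return "https://www.google.com/search?" + urlencode({"q": query, "tbm": "bks"})


# Hypothetical list of ways an 18th century writer might phrase the idea.
relations = ["in", "within", "inside", "surrounded by", "enclosed within"]

for query in query_variants("lemon", relations):
    print(f"[{query}]  {books_search_url(query)}")
```

Each printed line pairs a bracketed query with a link you can open. The list of relations is where your own knowledge of period language comes in.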

The first hit ("New Royal Geographical Magazine") contains the second document, which is Volume 1 of Captain James Cook's Voyage to the Pacific Ocean. On page 14 Cook writes about a stopover on Tenerife with a few botanical notes:

... they called it an "...impregnated lemon. It is a perfect and distinct lemon enclosed within another and differing from the outer only in being a little more globular."

So we find that Captain James Cook wrote about the citrum in citro.

3. Where did he find these odd botanical mutants?

Reading through the text, we find that Cook wrote about this odd lemon while his ship was on a pause in the Canary Islands in 1776 near the beginning of his Third Voyage. This was the Voyage that ended with him being killed in Kealakekua Bay in 1779.

Later, William Bligh (famously of the mutiny) also took his ship, Bounty, to the Canary Islands in 1788, at the beginning of a round-the-world trip that was cut short when the sailors cast him off the ship into South Pacific waters in April 1789. He used the same term ("impregnated lemon") to describe the odd fruit.

You might wonder how Cook could write this book that was published AFTER his death. Answer: one of the men who served under Cook on board HMS Resolution, James King, took over as captain on Cook's death, and wrote up the voyages after he returned to England (based on both his and Cook's extensive notes).

SearchResearch Lessons

1. Using an AI to generate ideas and leads is a great idea! But check everything. As we saw in this example, LLMs don't always speak truthfully or with attention to detail. ChatGPT and Claude both were seriously wrong. Gemini did better this time, but always / always / always check what you find. Use another AI if you have to, but in the end, validate with grounded texts (that are usually easier to find with regular search).

2. Searches can take time... and reward iteration. When looking for the 18th century commentary on "lemon in lemon," I had to try a LOT of variations on that query. With persistence, I was able to find the Captain Cook quote--but I admit that I got fairly lucky. This is one of those cases when your personal knowledge (in this case, about the way an 18th century sea captain might write) can be incredibly handy. Arr!

3. Remember Google Books is a great repository for historical searches. Especially since you can limit the search to a particular period.

Keep searching!

April 17, 2025

SearchResearch Challenge (4/17/25): What's a lemon in a lemon called?

Remarkable things demand attention...

Excerpt of still life by Jan Davidsz de Heem, "Breakfast with Wine Glass and Goudse Pipe" P/C source Wikimedia.

This happened to me once... I found a fully formed, perfectly intact lemon completely enclosed within an outer lemon shell. Silly me, I didn't take a picture, but imagine a double-skinned lemon and you'll have the right idea.

So, as you'd imagine, I did a little bit of research and found that there is a very specific name for this kind of strange double lemon fruit AND learned that it was well known in the 18th century!

Obviously, this has to lead to a SearchResearch Challenge:

1. What do you call this strange lemon inside of another lemon?

2. What famous 18th century explorer knew about this strange lemon?

3. Where did he find these odd botanical mutants?

Once you figure it out, let us know the answer AND how you found it! What clever SRS techniques did you use to arrive at the answer?

Keep searching!

April 13, 2025

Follow-up: Can you extract and summarize a blog?

In a moment of curiosity,

I tried the same task as in our previous post (retrieve the last 10 blog posts from SearchResearch) with Grok and Claude... just for comparison purposes.

I did the same query, but the results weren't much better.

Here's the view of the sheet for Claude:

Claude's results: Red shading means the answer is totally wrong (or a 404 error); yellow shading means it's around 50% right; green means 100% correct.

This actually isn't bad.

For contrast, here are Grok's answers:

Grok's results. Truly terrible.

As we've discussed, it's a good practice to iterate when you search, and the same is true when using LLMs.

I gave both systems a second try, after giving them both the additional prompt of [be sure to give accurate links to the blog posts. give only high quality summaries of the pages you find.]

Both systems said that they would do better. Bemusingly, Grok said: "I apologize for the oversight in providing links that may not lead to valid pages. I’ve rechecked each URL by attempting to access them and verifying whether they resolve to actual, relevant blog posts on searchresearch1.blogspot.com."

But here are the results of the second attempt, Claude first:

Claude second attempt: About the same (9/10 correct), just a different error.

Despite protestations of "rechecking each URL," Grok actually performed worse, getting a solid 100% of the links wrong.

Grok fails in a spectacular way. Nothing is correct.

I don't know about you, but I'm worried about the future of Agents when the major LLM providers can't get a simple request correct.

The irony, of course, is that checking for valid URLs is really simple. But the AI systems don't do it.
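To make "really simple" concrete, here is a minimal Python sketch of such a check. It is an illustration only, not what any of these AI systems actually run. The function names (link_status, dead_links) and the User-Agent string are invented for this example, and it assumes the server answers HEAD requests; some don't, so a sturdier checker would fall back to GET.

```python
import urllib.error
import urllib.request


def link_status(url, opener=urllib.request.urlopen, timeout=10):
    """Return the HTTP status code for url, or None if no response was obtained.

    opener is injectable so the function can be exercised without a network.
    """
    try:
        request = urllib.request.Request(
            url, method="HEAD", headers={"User-Agent": "link-check-sketch"}
        )
        with opener(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error such as 404.
        return err.code
    except (OSError, ValueError):
        # Malformed URL, DNS failure, refused connection, timeout, etc.
        return None


def dead_links(urls, opener=urllib.request.urlopen):
    """Return the URLs that did not come back with a 200 status."""
    return [url for url in urls if link_status(url, opener=opener) != 200]
```

Calling dead_links on the list of URLs an LLM hands back gives you the ones that need a closer look before you trust the answer.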

SearchResearch Lessons

1. Be very, very, very cautious about trusting LLM output. Don't trust, but validate. While LLMs CAN do a lot of great things, they can also make monumental errors.

But have faith, and keep on searching the way you've learned.

April 10, 2025

Answer: Can you extract and summarize a blog?

Can AI systems really read the web?

P/C Gemini [elderly robot reading a long scroll of blog names]. No I don't recognize any of the blogs shown on the scroll, nor why it uses so many different fonts in a ransom note style. I don't trust these results, and you shouldn't either.

One of the latest claims being made by the LLM / AI providers is that the "knowledge cutoff problem" isn't a real problem any more. Most providers say that their AIs now have access to realtime web information. For instance, when I asked ChatGPT about its live connection, this is what it told me. (With a live citation to an article on Lifewire.)

That sounds great, right? But let's do a quick test and find out... that's the essence of this week's SRS Challenge:

1. Can you get an LLM AI system to give you the 10 most recent links to a blog? For instance, could you get Gemini, Perplexity, ChatGPT, or MS Copilot to make a table of the 10 most recent blog posts?

That seems like a simple enough request for an AI system with live web access, yes?

Not to tip my hand or anything, but the reality of asking an AI to do something this simple just boggled my mind.

Just to check my suppositions about AI tools that can access the internet, I did the obvious search and found several articles documenting that they claim access. (see: WritingMate, YourEverydayAI, Otterly) Note that this list will change over time as more and more LLMs gain live search engine access.

It's easy to find out what the providers say. I just did a few site searches like this to find out:

[ site:openai.com live web access ]

For instance, here's OpenAI's comment about live access: OpenAI blog

The claim is that Google Gemini, OpenAI ChatGPT, and Perplexity all have live access to web content. To test this claim, I gave this query to each of them:

[can you give me links to the 10 most recent blog posts from searchresearch1.blogspot.com]

Results:

Perplexity gave 3 links

ChatGPT 4.0 gave 0 links (but see below)

Gemini Deep Research with 2.5 pro gave me 10 links

Well, dang... okay.

Maybe they're not so great at simple questions--but isn't that sort of the point? If you've got an LLM that's planning on being an AI agent, you'd think it could do something like this.
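For comparison, here is roughly what the non-AI version of the request looks like. This is a sketch, assuming the blog exposes the usual Blogger Atom feed at /feeds/posts/default with a max-results parameter. That is an assumption about the hosting platform, not something tested in this post. The names parse_recent_posts and fetch_recent_posts are hypothetical helpers for this illustration.

```python
import urllib.request
import xml.etree.ElementTree as ET

ATOM = "{http://www.w3.org/2005/Atom}"
# Assumed feed location for a Blogger-hosted blog.
FEED_URL = "https://searchresearch1.blogspot.com/feeds/posts/default?max-results=10"


def parse_recent_posts(atom_xml, limit=10):
    """Return (date, title, link) tuples for the first `limit` feed entries."""
    root = ET.fromstring(atom_xml)
    posts = []
    for entry in root.findall(ATOM + "entry")[:limit]:
        title = entry.findtext(ATOM + "title", default="")
        published = entry.findtext(ATOM + "published", default="")
        link = ""
        # An entry can carry several links; "alternate" is the post's own page.
        for candidate in entry.findall(ATOM + "link"):
            if candidate.get("rel") == "alternate":
                link = candidate.get("href", "")
                break
        posts.append((published[:10], title, link))
    return posts


def fetch_recent_posts(feed_url=FEED_URL):
    """Download the feed and parse it (needs network access)."""
    with urllib.request.urlopen(feed_url, timeout=10) as response:
        return parse_recent_posts(response.read())
```

Because the feed is generated by the blog itself, the titles, dates, and links here are read rather than guessed, which makes it a handy ground truth for grading what an LLM returns.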

When I looked at the 10 links in the Google list, 6 of them were wrong… invalid URLs. What's going on here? My spidey-sense was tingling.

So I made a spreadsheet that calls out to Gemini asking for the title, date, and a summary of the contents of that blog post for each of the links Gemini gave me in the answer. Here's a link to my spreadsheet, and an image below where you can see what happened:

Click to see full-size, or click here to see the sheet itself.

I know this is too small to read (click thru for details), but I want you to notice something very, very odd about this. The red-colored cells are completely wrong. That means rows 7-10 are completely hallucinated, lock, stock, URL, and summary.

The yellow-colored cells are close, but not quite right--the summaries are in the area, but wrong in most of the details. (Not a little wrong, a LOT wrong.)

What we're seeing here is that Gemini hallucinated content that it theoretically has access to! There's a lot to be sad about, but it kills me that several of the cells say "I lack the ability to access external websites, including the URL you provided. Therefore, I cannot provide a summary of the blog post."

What? That's nonsensical.

Even more oddly: I posted this Challenge a week ago and asked ChatGPT the same thing, and it gave me 0 results.

But AS I'M WRITING THIS POST I tried it again... and got 10 links! Here are some of those ChatGPT results:

To OpenAI's credit, they're all real links... but the dates are kind of wonky. (Several are off by one.) And the title of the last post "Using Notebooks to keep track of your research" is a bit off--the actual title is "Using NotebookLM to help with Deep Research"! Hallucination is rampant.

Just out of a sense of heightened due diligence, I started a new chat with ChatGPT and asked the same question again. Here are the results this time (literally 10 seconds later):

How interestingly weird! It's found new blog posts! How strange that it found the post "What building is this?" from (purportedly) March 5, 2025. The reality is that I posted that on January 29, 2025.

Seeing that ChatGPT's results aren't stable, I was curious about Gemini's results. If I re-ran the query to Gemini Deep Research 2.5 pro, would I get different results?

Result? YES, they're mostly the same, but with a few additional cleverly hallucinated results.
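If you want to put a number on how unstable the answers are, one simple way is to compare the link lists from two runs. This is a small Python sketch, not a tool used in this post; compare_runs is a hypothetical helper, and the overlap score is the plain Jaccard ratio (shared links divided by all distinct links).

```python
def compare_runs(first, second):
    """Compare two lists of URLs returned by separate runs of the same prompt."""
    a, b = set(first), set(second)
    union = a | b
    return {
        "in_both": sorted(a & b),
        "only_first": sorted(a - b),
        "only_second": sorted(b - a),
        # 1.0 means identical sets of links, 0.0 means nothing in common.
        "overlap": len(a & b) / len(union) if union else 1.0,
    }
```

A low overlap between back-to-back runs is a hint that at least one of the lists deserves link-by-link checking.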

The thing that kills me about all this is that, according to their own press, the LLM has access to the internet. How can they screw it up? Gemini told me that:

"From the initial list, I've carefully selected the primary URLs for the 10 most recent and unique blog posts. I made sure to prioritize the direct links to the blog articles, even when other links like images or external references were present in the initial data."

Really? I don't believe you.

Despite saying this, Gemini clearly didn't do the obvious step of checking to see if the URLs are valid. If Gemini had done that, it would have seen this:

It wasn't a minor hallucination either--4/10 of the links were bogus.

SearchResearch Lessons

It should be obvious by this point but...

1. If your research is high-stakes or mission-critical, DO NOT rely on LLMs--even ones with live connections to the internet--to return reasonable results for even the simplest of directions.

It should be pretty clear by now that hallucinations are still a huge problem. Yes, they're getting better, but there's not a single day where I don't see hallucinated results in LLM output. Mostly it doesn't matter because I'm not asking it about mission critical information--but if you're doing something where it does matter, my advice to you is to check the data very, very, very closely. The results LOOK good... but they can be very very wrong.

Keep searching!

P.S. FWIW, EveryDayAI found pretty much the same thing as I report here. It's pretty bad out there...

April 2, 2025

SearchResearch Challenge (3/4/2025): Can you extract and summarize a blog?

P/C Gemini [elderly robot reading a long scroll of blog names]. No I don't recognize any of the blogs shown on the scroll, nor why it uses so many different fonts in a ransom note style.

Just last year people using AIs to ask up-to-date questions would often run into the "knowledge cutoff date" problem. That's the date of the last training cycle, when all of the information in the LLM was solidified.

Since then various AIs have been claiming that they now have continuous updates because they're able to read web content in real-time. (Here's a news article describing the new real-time web access capabilities of some LLMs.)

I've explored this a bit and come to some insights--and that leads to today's Challenge:

1. Can you get an LLM AI system to give you the 10 most recent links to a blog? For instance, could you get Gemini, Perplexity, ChatGPT, or MS Copilot to make a table of the 10 most recent blog posts?

That seems like a simple enough request for an AI system, yes?

We keep reading about AI agents that claim to be able to take actions on your behalf by operating your applications to get-things-done. So let's ask for a simple task ("make a list of the 10 most recent posts") and see how well it does.

I'll show you how I did it next week and talk about the issues involved.

I'm VERY curious to see how well you did. Were you able to do one request and get back the list?

As a sample blog, you might try good old SearchResearch, but you might also try something like OpenAI's blog or Google's Gemini blog.

Keep searching!

March 28, 2025

Answer: To search, or to AI? That is the question...

The question is--what works?

If you've been using online search tools for any amount of time, you know that which tool you use, and how you use it, can make a huge difference in the quality of your results.

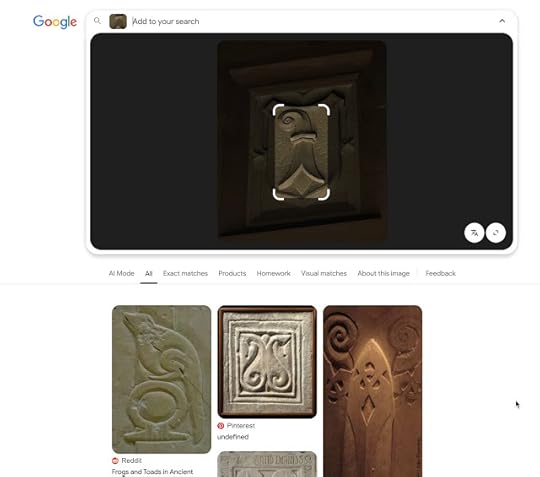

This week I Challenged you to find what each of these images is really about. When you try to find out the story of these images, were you able to use "regular" Search-by-Image, or did you use AI image descriptions? If so, what prompt did you use, and how well did it work for you?

1. While traveling in northwest Switzerland, I saw this emblem all over the city I was visiting. Can you tell me what city I was in? Just as importantly, what IS this thing?

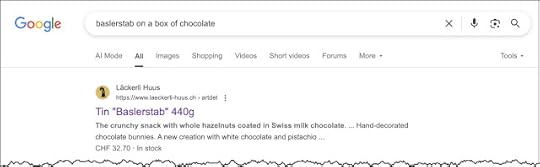

For each of these images I used 2 different methods: (1) regular Google Search-by-Image, SBI (you know, upload the image and let it search--aka Google Lens), and (2) upload the image to an LLM (either Gemini or ChatGPT) and ask it to identify what it sees in the image.

Image B1: On a box of chocolates...

Image B2: Carved into a stone wall

Image B3: Seen on a parade float

Here's what I found:

B1 (gold symbol on black/white background): This image is very focused on the object of interest and SBI does a much better job. Here's what the Google Lens (mobile) interface looked like when I asked about this:

Mobile Lens search for B1 image

It STILL doesn't tell me what it is, but at least we learn yet another potentially interesting word: Baslerstab. Searching for this leads to:

We see that "Baslerstab" is the name of the local Swiss-German newspaper AND that "baselstab" is the name of the symbol! (German note: "Basler" means a person or thing from the city of Basel.) Clicking through on the Wikipedia link takes us to the Baselstab article, telling us a bit more:

"The Baselstab or Baslerstab is the name given to the shepherd's staff or bishop's staff in the coats of arms of villages and towns in the Basel region in Switzerland. The crosier of the Prince-Bishop of Basel can be found in the coats of arms of the city of Basel and the cantons of Basel-Stadt, Basel-Landschaft, and Jura. The crosier of Basel-Stadt is black, while those of Basel-Landschaft and Jura are red."

Excellent. Now we know that this symbol is the emblem for Basel and is an abstract version of a bishop's crozier.

Since I told you that the Baslerstab was on a box of chocolates, I was wondering what the connection was between this symbol and chocolate. Reasonable question for Gemini, yes?

Unfortunately, Gemini seems to fall into a hallucinatory rabbit hole with this answer. Despite what Gemini says in its answer, as far as I can tell, there is NO connection between Lindt (a large chocolate company in Zürich) and the actual chocolate company that uses the Baslerstab symbol (which is called Laeckerli-Huus, headquartered in Basel). Aaarrghhh! Everything in this answer below about the crozier and Lindt is wrong.

Since that didn't work well, I did the obvious query on regular Google:

If you click on that first link you'll see exactly the image of the entire box of chocolate and cookies, a nice example of the baslerstab being used as a design element.

That's MUCH better than Gemini. Another example of doing a simple Google search that works out much better than asking an AI.

For the record, ChatGPT didn't know either... and also completely hallucinated a connection between the baslerstab symbol and coat of arms of the canton of Fribourg, Switzerland where the chocolate maker Maison Cailler is based. Utter balderdash.

B2 (symbol on wall): This was the symbol carved into a stone wall in the city's center. Sadly, nothing I could do with SBI would work. Google failed, Bing's SBI failed, and even focusing in tight on the symbol didn't help. Search-by-Image just couldn't handle this.

When I turned to Gemini with the wall-carving image, and asked [ identify this symbol ], Gemini replied:

"The symbol shown in the image appears to be a heraldic shield with a stylized bird's head and neck facing left, and a lozenge (diamond shape) below it."

Not quite.

However, Gemini also said (quite reasonably):

"To understand the full meaning of this symbol, you would need to know the context in which it was found. Here are some questions to consider: Where was the image taken? The location could provide clues about the origin of the symbol and the family or institution associated with it."

I answered [ the image was taken in Basel, Switzerland ], to which Gemini replied:

"Okay, knowing that the image was taken in Basel, Switzerland, provides some very important context. Here's how that helps us understand the symbol:

Basel's Heraldic Traditions: Basel has a rich history of heraldry. Notably, the city is strongly associated with the "Baselstab" (Basel staff), a stylized bishop's crozier. This is a very prominent symbol in the heraldry of Basel-Stadt (the city of Basel) and Basel-Landschaft (the surrounding canton).

Another significant heraldic figure associated with Basel is the Basilisk.

This mythical creature is frequently seen in Basel's heraldic displays."

Which tells me that this is a baselstab (the crozier symbol) AND that the City of Basel also uses a basilisk as a heraldic element. But of course I had to check this as well... luckily, it passes the sniff test.

When I checked with ChatGPT, it also gave me a poor result:

ChatGPT trying to identify the carved symbol.

But since Gemini needed to know where the image was from, I also told ChatGPT that this image is from Basel. Guess what? A new answer emerged!

After I told ChatGPT the image is from Basel, it was able to figure out what it was.

Lesson learned here--location matters!

So I tried again with Google SBI. Turns out it DOES matter!

It makes complete sense--given a random image from somewhere in the world, adding LOCATION information would help any identification process (including humans!) to figure out what a symbol is.

B3 (dragon with shield): Going through the process again... and once again, SBI didn't work, even when I cropped to just the symbol. Here's the "regular" SBI search:

It correctly identified the dragon-like creature as a basilisk, but that's not what I'm interested in--I want the symbol on the shield.

Fortunately, Google Lens now has the ability to zoom in on just the key feature by dragging the white rounded angle brackets--in this case, to focus in on the symbol. When you do that, you'll get an okay search--the first two results are good, but the rest are terribly off-topic!

The good news is that you learn that it's the city symbol of Basel, but you won't find out WHAT it is!

If you ask Gemini to [ describe the image ], you'll get a nice description:

"This image shows a close-up, angled shot of a bronze sculpture of a griffin holding a shield. The griffin, with its eagle-like head and wings, is the dominant feature, positioned in the left half of the frame...The shield the griffin is holding is prominently displayed in the center of the image. It features a unique emblem, possibly a heraldic symbol, with a curved, stylized design."

But no amount of me asking what that symbol is could convince Gemini to give me a real answer. Not a great result. Even when I gave it the cropped version of the image (showing just the symbol itself), Gemini couldn't say what it was.

By contrast, ChatGPT did much better: When I asked [ what's the symbol in the center of the shield ] ChatGPT responded with:

"The symbol in the center of the shield is a heraldic emblem known as a "crosier," which is stylized like a pastoral staff with a curled or spiral top. It resembles the ceremonial staff traditionally carried by bishops or abbots, often symbolizing religious or spiritual authority. The depiction on this shield is stylized, emphasizing the curved top and decorative elements typical of such symbols."

So now we have a term to look up: "crosier." A quick search takes me to Wikipedia where I learn that the word is usually spelled "crozier" and it's a stylized shepherd's crook, which is ALSO the symbol for the city of Basel, as you can see in the image below:

P/C Wikipedia

But then I took my own advice and added Basel Switzerland to the SBI search, and as expected, it really improved things:

SO... I took my own advice AGAIN... and re-ran the very first query of Gemini ADDING in the information about [...this image was taken in Basel Switzerland]. Voila! Here's the answer:

Which is a pretty good result.

BUT... you have to know to prompt it with all of the contextual information!

I'll tell you the ground truth: I took all of these images while visiting Basel for Fasnacht--huge parades with floats (like the one above), and repeated crozier symbols everywhere. Since I was literally seeing this just about every time I looked up, I was curious what it was. B3 was taken during a Fasnacht parade as a float slowly drifted by me, the box of chocolates was something I bought, and the stone carving was taken as I walked around the city at 4AM for the Morgestraich.

2. In open green spaces all over Zürich I keep seeing this flower popping up--they're growing everywhere in vast numbers. What is it? Again, how do you know? What search method did you use?

Image P1: A few flowers carpeting the lawn. What are they?

I took this photo at a random lawn in Zürich because I was curious--any open green space seems to be blanketed with these flowers! What are they?

Since I was walking around, I did the obvious Google Lens search:

As you can see, it calls it a gentian. Well... notice how Lens has selected an individual flower in the middle? That does kind of look like a gentian, but I know gentians, and this isn't a gentian.

By resizing the image when I got to my desktop, I got a much better answer:

This is correct--it's a Primrose, Latin name: Primula. It's a genus of herbaceous flowering plants in the family Primulaceae.

I thought I'd try asking our favorite AIs what they thought. My prompt after uploading the image was [describe what's in this image]

Again, a reasonable answer from Gemini, but not great. This looks a bit like Polemonium pulcherrimum, but it's not quite right. Identifying small blue flowers is always hard.

By contrast, ChatGPT gives a nice description, but no identification:

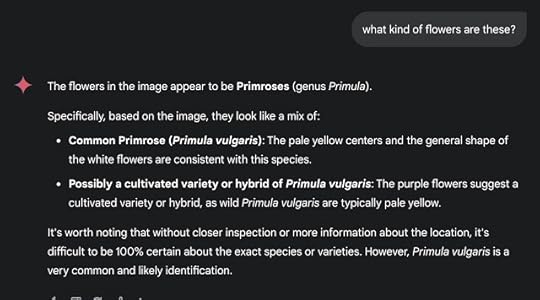

Not quite what I was looking for... so I changed my prompt to: [what kind of flowers are these]. NOW I got a much better answer. Here's Gemini's answer:

And ChatGPT's:

Which is also exactly right. But, as always... double check. Never trust an AI's identification of flowers, trees, dogs, or exotic symbols found on the packages of yummy chocolate!

SearchResearch Lessons

I didn't mean to write quite as much as I did--but this is a never-ending topic. Let's summarize what we learned from this little escapade...

1. Regular Search-By-Image is great at some things, but not at others. Usually it's simple to tell when SBI doesn't work--the hits don't tell you anything about the object of the search. BUT... you can improve your SBI query strategy by...

2. Include location information about the object of your SBI search. We saw that including the city name vastly improved SBI search on Google. (But, oddly, it did nothing to help Bing's SBI!)

3. Including location information VASTLY improves AI search. We saw that in both Gemini and ChatGPT--their results got much better when they knew where the target was from. Kudos to Gemini for mentioning that "including the location" would help.

4. Use AI/LLMs for search at your peril. We've talked about this before--they give what look like very plausible answers, but they can be complete nonsense. It's tempting to believe them, but the stories AIs told me about the connection between chocolate and the baselstab symbol were truly inventive...and wrong.

5. Flowers are still hard for AIs. Many flowers look a lot alike (e.g., blue primroses and gentians). I'm not really blaming AIs for getting it wrong, but at the same time, they don't know their limits. Really. An AI SHOULD be giving you warning signals when it really can't accurately identify something like a flower or a carved symbol. But they don't. (And, for heaven's sake, do NOT trust an AI to correctly identify a mushroom for you.)

6. AIs are sensitive to the prompts you give them. We found that asking an AI to [describe this image] doesn't work--be direct and say what you really want to know--e.g., [identify the flower in this image]

So, to answer our original question, "What works?" There isn't any one answer, but it helps to know what your tools can do... and what they can't do. Be specific in your questions to an AI, provide as much information as you need, and remember your ordinary online search skills are still invaluable.

Keep searching!

March 21, 2025

A note about Heterophylly in the real world

Our Challenge about leaf variation on an individual plant made me more observant in the real world...

Holly leaf variation on the same plant. Taken in Zürich by Dan. The left image is from 3 meters above the ground in an unpruned part of the plant. The right image, from a well-pruned part of the bush, is about 1 meter off the ground.

... I hope that being a Regular Reader of SRS does the same for you as well.

I was out walking last week, not long after having written about Mimicry in Plants (March 14, 2025), when I happened to cross paths with a beautiful holly bush. Like most holly bushes, it had the usual assortment of glossy green prickly leaves--it was lovely, but not the kind of bush you'd reach out and touch.

But having just read/written about heterophylly, I took a closer look--sure enough, just as it says in the books, there was a distinct variation between the lower branches (which had been pruned extensively) and the upper branches (which had never been pruned). Even better--the right side of the bush had a slight overhang into a neighbor's yard. They clearly had been pruning there--on the right was an armada of sharp-tipped leaves.

However, on the upper branches, especially on the left (and unpruned) side, smooth-edged leaves abounded.

As I wrote last week, the mechanism of holly heterophylly is pretty well understood. The prickly/smooth variations are a result of damage to the leaves. When the leaves are damaged (say, by a passing hungry deer or by a neighbor’s pruning shears), the methylation of the DNA in the leaves changes as a result of tissue damage. (Side note: methylation is the process of adding methyl groups onto large molecules, like DNA, to modify their behavior. This is the way much of epigenetics works. When an animal chomps on a leaf, the methylation pattern shifts.)

By comparing the DNA of prickly leaves vs. smooth leaves, it turns out the prickly ones were significantly less methylated than the prickless ones, suggesting that methylation changes are ultimately responsible for leaf shape changes. Less methylation = more prickly leaves. What's more, the effect extends to nearby leaves: other holly leaves nearby will also develop prickles, with the effect diminishing with distance.

I was delighted to see heterophylly in action while just strolling down the street.

Keep looking... and searching.