Excellence in Hollywood, like excellence on television, did not end after the early 1970s: great moviemakers in every decade continued to produce films of enormous skill, insight, and emotion. What did change after the early 1970s was the idea of Hollywood as a central source of social commentary on a changing American society. Beginning around 1975, the film studios largely renounced that role, as if shedding a skin. The change in movies tracked the transition in television almost precisely.

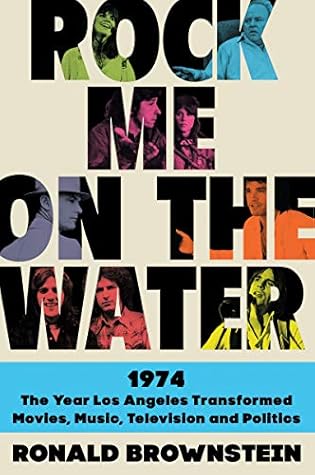
