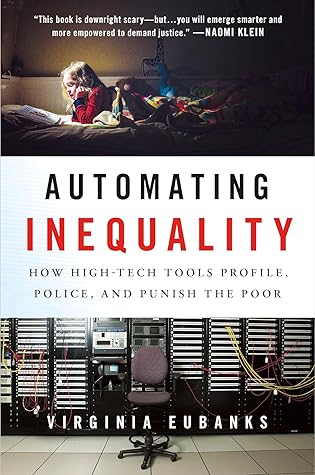

Kindle Notes & Highlights

Read between March 17 - June 4, 2018

The advocates of automated and algorithmic approaches to public services often describe the new generation of digital tools as “disruptive.” They tell us that big data shakes up hidebound bureaucracies, stimulates innovative solutions, and increases transparency. But when we focus on programs specifically targeted at poor and working-class people, the new regime of data analytics is more evolution than revolution. It is simply an expansion and continuation of moralistic and punitive poverty management strategies that have been with us since the 1820s.

New high-tech tools allow for more precise measuring and tracking, better sharing of information, and increased visibility of targeted populations. In a system dedicated to supporting poor and working-class people’s self-determination, such diligence would guarantee that they attain all the benefits they are entitled to by law. In that context, integrated data and modernized administration would not necessarily result in bad outcomes for poor communities. But automated decision-making in our current welfare system acts a lot like older, atavistic forms of punishment and containment. It filters

This kind of blanket access to deeply personal information makes little sense outside of a system that equates poverty and homelessness with criminality. As a point of contrast, it is difficult to imagine those receiving federal dollars through mortgage tax deductions or federally subsidized student loans undergoing such thorough scrutiny, or having their personal information available for access by law enforcement without a warrant.

Further integrating programs aimed at providing economic security and those focused on crime control threatens to turn routine survival strategies of those living in extreme poverty into crimes. The constant data collection from a vast array of high-tech tools wielded by homeless services, business improvement districts, and law enforcement create what Skid Row residents perceive as a net of constraint that influences their every decision.

But wiretaps, photography, tailing, and other techniques of old surveillance were individualized and focused. The target had to be identified before the watcher could surveil. In contrast, in new data-based surveillance, the target often emerges from the data. The targeting comes after the data collection, not before.

the data is mined, analyzed, and searched in order to identify possible targets for more thorough scrutiny.

Surveillance is not only a means of watching or tracking, it is also a mechanism for social sorting.

The AFST is supposed to support, not supplant, human decision-making in the call center. And yet, in practice, the algorithm seems to be training the intake workers.

Data scientist Cathy O’Neil has written that “models are opinions embedded in mathematics.”

Poor and working-class families feel forced to trade their rights to privacy, protection from unreasonable searches, and due process for a chance at the resources and services they need to keep their children safe.

We might call this poverty profiling. Like racial profiling, poverty profiling targets individuals for extra scrutiny based not on their behavior but rather on a personal characteristic: living in poverty. Because the model confuses parenting while poor with poor parenting, the AFST views parents who reach out to public programs as risks to their children.

Poverty in America is not invisible. We see it, and then we look away.

51 percent of Americans will spend at least a year below the poverty line between the ages of 20 and 65.

We could not make eye contact because we were enacting a cultural ritual of not-seeing, a semiconscious renunciation of our responsibility to each other.

Like the brick-and-mortar poorhouse, the digital poorhouse diverts the poor from public resources. Like scientific charity, it investigates, classifies, and criminalizes. Like the tools birthed during the backlash against welfare rights, it uses integrated databases to target, track, and punish.

When automated decision-making tools are not built to explicitly dismantle structural inequities, their speed and scale intensify them.

the best cure for the misuse of big data is telling better stories.

poverty is not an island; it is a borderland.

The first challenge we face in dismantling the digital poorhouse is building empathy and understanding among poor and working-class people in order to forge winning political coalitions.

Such sustained, practiced empathy can change the “us/them” to a “we,” without obscuring the real differences in our experiences and life chances.

Oath of Non-Harm for an Age of Big Data

I swear to fulfill, to the best of my ability, the following covenant: I will respect all people for their integrity and wisdom, understanding that they are experts in their own lives, and will gladly share with them all the benefits of my knowledge. I will use my skills and resources to create bridges for human potential, not barriers. I will create tools that remove obstacles between resources and the people who need them. I will not use my technical knowledge to compound the disadvantage created by historic patterns of racism, classism, able-ism,

This highlight has been truncated due to consecutive passage length restrictions.

it will be equally important to confront the deep classism of many progressive organizations. A true revolution will start where people are. It will engage them in terms of their basic material needs: safety, shelter, wellness, food, and family. And it will honor poor and working-class people’s deep knowledge, strength, and capacity for leadership.