He and his colleagues started working on a new language model they called a “generative pre-trained transformer,” or GPT for short. They trained it on a corpus of about seven thousand mostly self-published books found on the internet, many of them skewed toward romance and vampire fiction. The dataset, known as BooksCorpus, was free for anyone to download, and plenty of AI scientists had already used it. Radford and his team believed they had all the right ingredients to ensure that this time, their model would also be able to infer context.

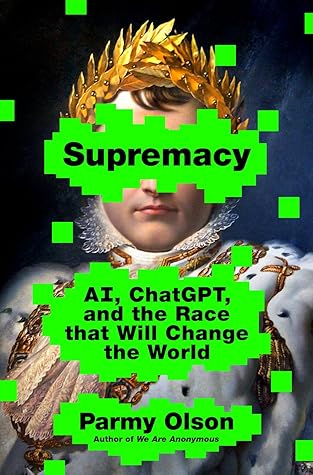
