A second reason was the data and computing power needed to run experiments in AI research. Universities typically have a limited number of GPUs, or graphics processing units, which are the powerful semiconductors made by Nvidia that run most of the servers training AI models today. When Pantic was working in academia, she managed to purchase sixteen GPUs for her entire group of thirty researchers. With so few chips, it would take them months to train an AI model. “This was ridiculous,” she says. Not long after she joined Samsung, she got access to two thousand GPUs. All that extra processing

...more

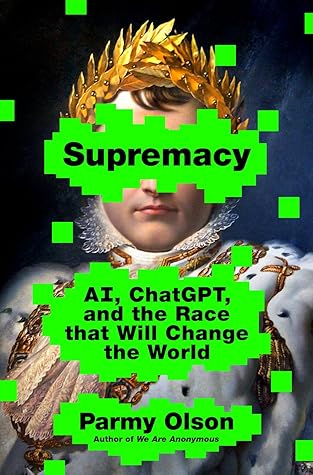

Welcome back. Just a moment while we sign you in to your Goodreads account.