Magnus Vinding's Blog, page 6

December 14, 2018

Thinking of Consciousness as Waves

First written: Dec 14, 2018, Last update: Jan 2, 2019.

How can we think about the relationship between the conscious and the physical? In this essay I wish to propose a way of thinking about it that might be fruitful and surprisingly intuitive, namely to think of consciousness as waves.

The idea is quite simple: one kind of conscious experience corresponds to, or rather conforms to description in terms of, one kind of wave. And by combining different kinds of waves, we can obtain an experience with many different properties in one.

It should be noted that in this post I merely refer to waves in an abstract sense to illustrate a general point. That is, I do not refer to electromagnetic waves in particular (as some theories of consciousness do), nor to quantum waves (as other theories do), nor to any other particular kind of wave (such as Selen Atasoy’s so-called connectome-specific harmonic waves*). The point here is not which kind of wave, or indeed which physical state in general, mediates different states of consciousness. The point is merely to devise a metaphor that can render intuitive the seemingly unintuitive, namely: how can we get something complex and multifaceted from something very simple without anything seemingly spooky or strange, such as strong emergence, in between? In particular, how can we say that brains mediate conscious experience without saying that, say, electrons mediate conscious experience? I believe thinking about consciousness in terms of waves can help dissolve this confusion.

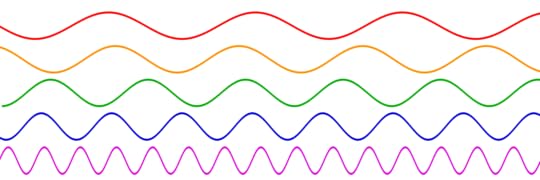

The magic of waves is that we can produce (or to an arbitrary level of precision approximate) any kind of complex, multifaceted wave by adding simple sine waves together.

Sine waves with different frequencies.

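To make the claim about adding sine waves concrete, here is a minimal Python sketch (assuming NumPy; the function names are illustrative, not from any particular library). It approximates a square wave, which looks nothing like a sine wave, by summing its odd-harmonic sine components, and reports how the root-mean-square error shrinks as more waves are added.

```python
import numpy as np

def square_wave(t):
    """A simple non-sinusoidal target: +1 on the first half of each period, -1 on the second."""
    return np.where((t % 1.0) < 0.5, 1.0, -1.0)

def sine_sum(t, n_terms):
    """Partial Fourier sum of the square wave: (4/pi) * sin(2*pi*k*t) / k over odd k."""
    total = np.zeros_like(t)
    for i in range(n_terms):
        k = 2 * i + 1  # only odd harmonics contribute to this square wave
        total += (4 / np.pi) * np.sin(2 * np.pi * k * t) / k
    return total

def rms_error(n_terms, samples=10_000):
    """Root-mean-square gap between the square wave and its n-term sine approximation."""
    t = np.linspace(0.0, 1.0, samples, endpoint=False)
    return float(np.sqrt(np.mean((square_wave(t) - sine_sum(t, n_terms)) ** 2)))

for n in (1, 5, 50, 500):
    print(f"{n:4d} sine waves -> RMS error {rms_error(n):.4f}")
```

The square wave is chosen only because its sine-wave recipe is short and well known. Near its sharp jumps a small overshoot persists (the Gibbs phenomenon) even as the overall error keeps shrinking.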

In this way, it is possible, for instance, to decompose any recorded song — itself a complex, multifaceted wave — into simple, tedious-sounding sine waves. Each resulting sine wave can be said to constitute an aspect of the song, yet not in any recognizable way. The whole song is in fact a sum of such waves, not in a strange way that implies strong emergence, but merely in a complicated, composite way.
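The decomposition can likewise be sketched with a discrete Fourier transform. This is an illustration under stated assumptions: NumPy is available, the "song" is a short synthetic stand-in rather than a real recording, and `decompose` and `resynthesize` are hypothetical helper names. The snippet splits the signal into individual sinusoids (written as cosines, which are just phase-shifted sines) and checks that adding them back together reproduces the signal.

```python
import numpy as np

def decompose(signal):
    """Split a real signal into sinusoids via the discrete Fourier transform.
    Returns (k, amplitude, phase) triples, where k is cycles per signal length."""
    n = len(signal)
    coeffs = np.fft.rfft(signal)
    parts = []
    for k, c in enumerate(coeffs):
        # The constant term (k = 0) and, for even n, the Nyquist term (k = n/2) appear once
        # in the full spectrum; every other frequency appears twice (positive and negative).
        single = k == 0 or (n % 2 == 0 and k == n // 2)
        amplitude = (1.0 if single else 2.0) * abs(c) / n
        parts.append((k, amplitude, float(np.angle(c))))
    return parts

def resynthesize(parts, n):
    """Add the sinusoids back together, one simple wave at a time."""
    t = np.arange(n)
    total = np.zeros(n)
    for k, amplitude, phase in parts:
        total += amplitude * np.cos(2 * np.pi * k * t / n + phase)
    return total

# A stand-in for a recorded song: two tones under a fading envelope, plus a little noise.
rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 2000, endpoint=False)
song = np.exp(-2 * t) * (np.sin(2 * np.pi * 220 * t) + 0.5 * np.sin(2 * np.pi * 330 * t))
song += 0.05 * rng.standard_normal(t.size)

parts = decompose(song)
print(len(parts), "sine waves")
print(np.allclose(resynthesize(parts, song.size), song))
```

None of the roughly one thousand individual waves resembles the stand-in song, yet their sum matches it to within floating-point error.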

Another way to think about waves that can help us think more clearly about emergent complexity is to think of a wave that is very small in both amplitude and duration. If this were a sound wave, it would be an extremely short-lived, extremely low-volume sound. On a visual representation of an entire song file, this sound would look more akin to a dot than a wave.

A dot.


And such simple sound waves can also be put together so as to create a song (for instance, one can take the sine waves obtained by decomposing a song, chop them into smaller bits, and decrease their amplitude). It will just take a very large number of such small waves to make a song: superimposed (if the song is to be loud enough to hear) and in succession (if the song is to last for more than a split second).
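The point about tiny, short-lived waves can be sketched as follows, again assuming NumPy and using hypothetical helper names. For brevity it chops the signal itself rather than each of its sine waves; since chopping and scaling are linear operations, doing it wave by wave and then summing would give the same result.

```python
import numpy as np

def tiny_pieces(signal, piece_len, copies):
    """Chop `signal` into short-lived, quiet pieces: each lasts `piece_len` samples and has
    1/copies of the original amplitude. Returns (start_index, piece) pairs."""
    pieces = []
    for start in range(0, len(signal), piece_len):
        quiet = signal[start:start + piece_len] / copies
        # `copies` identical quiet pieces, superimposed, restore the original loudness.
        pieces.extend((start, quiet) for _ in range(copies))
    return pieces

def reassemble(pieces, n):
    """Superimpose the pieces and lay them out in succession."""
    out = np.zeros(n)
    for start, piece in pieces:
        out[start:start + len(piece)] += piece
    return out

t = np.linspace(0.0, 1.0, 4000, endpoint=False)
song = np.sin(2 * np.pi * 220 * t) * np.sin(2 * np.pi * 3 * t)  # a stand-in for a real song
pieces = tiny_pieces(song, piece_len=4, copies=100)

print(len(pieces), "tiny pieces")
print(np.allclose(reassemble(pieces, song.size), song))
```

Each piece, on its own, is a near-silent blip lasting four samples; only a hundred thousand of them, stacked and strung together, make up the stand-in song.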

The deeper point here is that waves are waves, no matter how small or simple, large or complex. Yet not all waves comprise what we would recognize as music. Similarly, even if all physical states are phenomenal in the broadest sense, this does not imply that they are conscious in the sense of being an ordered, multifaceted whole. Unfortunately, we do not as yet have good, analogous terms for “sound” and “music” in the phenomenal realm — perhaps we could use “phenomenality” and “consciousness”, respectively?

The problem is indeed that we are limited by language, in that the word “conscious” usually only connotes an ordered, composite mind rather than the property of phenomenality in the most general sense. Consequently, if we think all that exists is either music or non-sound, metaphorically speaking, we are bound to be confused. But if we instead expand our vocabulary, and thereby expand our allowed ways of thinking, our confusion can, I think, be readily dissolved. If we think of the phenomenality of the simplest physical systems as being nothing like consciousness in the usual sense of a composite mind but rather as a state of hyper-crude phenomenality — i.e. “phenomenal noise” that is nothing like a song but more akin to a low, short-lived sound, and yet unimaginably more crude still — then the problem of consciousness, as commonly (mis)conceived, seems to become a lot less confusing.**

Avoiding Confusion Due to Fuzziness

A more specific point of confusion the wave metaphor can help us dissolve is the notion that consciousness is so fuzzy a category that it in fact does not really exist, just like tables and chairs do not really exist. As I have argued elsewhere, I think this is a non sequitur. The fact that the categories of tables and chairs are themselves fuzzy does not imply that the physical properties of the objects to which we refer with these labels are inexact, let alone non-existent. The objects have the physical properties they have regardless of how we label them. Or, to continue the analogy to waves above, and songs in particular: although there is ambiguity about what counts as a song, this does not imply that we cannot speak in precise, factual terms about the properties of a given song — for instance, whether a given song contains a 440 Hz tone.
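As a hedged illustration of such a precise, factual question, the sketch below checks for a 440 Hz component by reading the amplitude of the nearest frequency bin in a discrete Fourier transform. It assumes NumPy, clean synthetic signals lasting exactly one second, and an arbitrary amplitude threshold; the helper names are hypothetical, and real recordings would call for windowing and a more careful choice of threshold.

```python
import numpy as np

def tone_amplitude(signal, sample_rate, freq_hz):
    """Amplitude of the spectral component nearest to freq_hz, read off the DFT."""
    n = len(signal)
    spectrum = np.abs(np.fft.rfft(signal)) * 2 / n
    freqs = np.fft.rfftfreq(n, d=1.0 / sample_rate)
    return float(spectrum[np.argmin(np.abs(freqs - freq_hz))])

def contains_tone(signal, sample_rate, freq_hz=440.0, threshold=0.1):
    # `threshold` is an arbitrary, illustrative cut-off on amplitude.
    return tone_amplitude(signal, sample_rate, freq_hz) > threshold

rate = 8000
t = np.arange(rate) / rate  # one second of audio
with_a4 = np.sin(2 * np.pi * 440 * t) + 0.5 * np.sin(2 * np.pi * 660 * t)
without_a4 = np.sin(2 * np.pi * 450 * t) + 0.5 * np.sin(2 * np.pi * 660 * t)

print(contains_tone(with_a4, rate), contains_tone(without_a4, rate))
```

Whether either signal "counts as a song" is left entirely open; the presence or absence of the 440 Hz component is not.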

Similarly, the fact that consciousness, as in “an ordered, composite mind”, is a fuzzy category (after all, what counts as ordered? Do psychotic states? Fleeting dreams?) does not imply that any given phenomenal state we refer to with this term does not have exact and clearly identifiable phenomenal properties — e.g. an experience of the color red or the sensation of fear; properties that exist regardless of how outside observers choose to label them.

And although our labels for categorizing particular phenomenal states themselves tend to be fuzzy to some extent — e.g. which part of the spectrum below counts as red? — this does not imply that we cannot distinguish between different states, nor that we cannot draw any clear boundaries. For instance, we can clearly distinguish between the blue and the red zones in the illustration below despite its gradation.

A linear representation of the visible light spectrum with wavelengths in nanometers.


Just as we can point toward a confined range of wavelengths which induce an experience of (some kind of) red in most people upon hitting their retinas, we can also, in principle, point to a range of physical states that mediate specific phenomenal states. This includes the phenomenal states we call suffering, with the fuzziness of what counts as suffering contained within and near the bounds of this range, while the physical states outside this range, especially those far away, do not mediate suffering, cf. the non-red range in the illustration above.

Thus, by analogy to how we can have precise descriptions of the properties of a song, even as an exact definition of what counts as a song escapes us, there is no reason why we should not be able to speak in factual and precise terms about the phenomenal aspects of a mind and its physical signatures, including the “red range” of wavelengths that comprise phenomenal suffering, metaphorically speaking. And a sophisticated understanding of this notional range is indeed of paramount importance for the project of reducing suffering.

* Note that these seemingly different kinds of waves and theories of consciousness could be identical, since connectome-specific harmonic waves could turn out to be coherent waves in the electromagnetic quantum field, as a hypothesis known as quantum brain dynamics would seem to suggest (I do not necessarily endorse this particular hypothesis).

** Another useful analogy for thinking more clearly about the seemingly crazy notion that “everything is conscious” — or rather: phenomenal — is to think about the question, Is everything light? For in a highly non-standard sense, everything is indeed “light”, in that electromagnetic waves permeate the universe in the form of cosmic background radiation, although not everything is permeated by light in the usual sense of visible electromagnetic radiation (wavelengths around 400–700 nm). We may thus think of consciousness as analogous to visible light (they can also both be more or less intense and have various nuances), and electromagnetic radiation as analogous to phenomenality — the more general phenomenon that encompasses the specific one.

Is AI Alignment Possible?

The problem of AI alignment is usually defined roughly as the problem of making powerful artificial intelligence do what we humans want it to do. My aim in this essay is to argue that this problem is less well-defined than many people seem to think, and to argue that it is indeed impossible to “solve” with any precision, not merely in practice but in principle.

There are two basic problems for AI alignment as commonly conceived. The first is that human values are non-unique. Indeed, in many respects, there is more disagreement about values than people tend to realize. The second problem is that even if we were to zoom in on the preferences of a single human, there is, I will argue, no way to instantiate a person’s preferences in a machine so as to make it act as this person would have preferred.

Problem I: Human Values Are Non-Unique

The common conception of the AI alignment problem is something like the following: we have a set of human preferences, X, which we must, somehow (and this is usually considered the really hard part), map onto some machine’s goal function, Y, via a map f, let’s say, such that X and Y are in some sense isomorphic. At least, this is a way of thinking about it that roughly tracks what people are trying to do.

Speaking in these terms, much more attention is being devoted to Y and f than to X. My argument in this essay is that we are deeply confused about the nature of X, and hence confused about AI alignment.

The first point of confusion is about the values of humanity as a whole. It is usually acknowledged that human values are fuzzy, and that there are some disagreements over values among humans. Yet it is rarely acknowledged just how strong this disagreement in fact is.

For example, concerning the ideal size of the future population of sentient beings, the disagreement is near-total, as some (e.g. some defenders of the so-called Asymmetry in population ethics, as well as anti-natalists such as David Benatar) argue that the future population should ideally be zero, while others, including many classical utilitarians, argue that the future population should ideally be very large. Many similar examples could be given of strong disagreements concerning the most fundamental and consequential of ethical issues, including whether any positive good can ever outweigh extreme suffering. And on many of these crucial disagreements, a very large number of people will be found on both sides.

Different answers to ethical questions of this sort do not merely give rise to small practical disagreements; in many cases, they have completely opposite practical implications. This is not a matter of human values being fuzzy, but a matter of them being sharply, irreconcilably inconsistent. And hence there is no way to map the totality of human preferences, “X”, onto a single, well-defined goal function in a way that does not conflict strongly with the values of a significant fraction of humanity. This is a trivial point, and yet most talk of human-aligned AI seems oblivious to it.
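A toy model may make the point about opposed values concrete. Assume, purely for illustration, that each of two groups holds a strict ranking over four hypothetical population outcomes, and that the two rankings are exact reverses of each other. Counting the pairwise judgments on which a candidate goal function disagrees with each group (the Kendall tau distance) shows that every possible ranking incurs the same total disagreement, so even the fairest compromise contradicts each group on half of its judgments.

```python
from itertools import combinations, permutations

def discordant_pairs(ranking_a, ranking_b):
    """Kendall tau distance: number of outcome pairs the two rankings order differently."""
    pos_a = {x: i for i, x in enumerate(ranking_a)}
    pos_b = {x: i for i, x in enumerate(ranking_b)}
    return sum(
        (pos_a[x] < pos_a[y]) != (pos_b[x] < pos_b[y])
        for x, y in combinations(ranking_a, 2)
    )

# Hypothetical outcomes for the ideal future population size (best first in each ranking).
outcomes = ["zero", "small", "large", "very large"]
group_1 = outcomes                   # prefers a future population of zero
group_2 = list(reversed(outcomes))   # prefers a very large future population

def conflicts(goal):
    """Disagreements of a candidate goal ranking with each group."""
    return discordant_pairs(goal, group_1), discordant_pairs(goal, group_2)

total_pairs = len(list(combinations(outcomes, 2)))
all_goals = list(permutations(outcomes))
# Every candidate goal function pays the same total conflict...
assert all(sum(conflicts(g)) == total_pairs for g in all_goals)
# ...so the fairest one can only split it evenly between the groups.
fairest = min(all_goals, key=lambda g: max(conflicts(g)))
print(fairest, conflicts(fairest))
```

Strict rankings over four outcomes are, of course, a drastic simplification of actual human values; the sketch only shows how exact opposition leaves no conflict-free target.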

Problem II: Present Human Preferences Are Underdetermined Relative to Future Actions

The second problem and point of confusion with respect to the nature of human preferences is that, even if we focus only on the present preferences of a single human, these in fact do not, and indeed could not possibly, determine with much precision what kind of world this person would prefer to bring about in the future.

This claim requires some unpacking, but one way to realize what I am trying to say here is to think in terms of the information required to represent the world around us. A precise such representation would require an enormous amount of information, indeed far more information than what can be contained in our brain. This holds true even if we only consider morally relevant entities around us — on the planet, say. There are just too many of them for us to have a precise representation of them. By extension, there are also too many of them for us to be able to have precise preferences about their individual states. Given that we have very limited information at our disposal, all we can do is express extremely coarse-grained and compressed preferences about what state the world around us should ideally have. In other words: any given human’s preferences are bound to be extremely vague about the exact ideal state of the world right now, and there will be countless moral dilemmas occurring across the world right now to which our preferences, in their present state, do not specify a unique solution.
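A rough counting sketch can illustrate the information argument. It rests on a strong simplifying assumption made only for this example: that having "precise preferences" means holding a strict ranking over every joint state of the entities in question, each of which can be in just two states. Singling out one ranking among N! candidates takes log2(N!) bits, and the number of joint states doubles with each added entity. The helper names are hypothetical.

```python
import math

def bits_to_pick_ranking(num_states):
    """log2(num_states!): bits needed to single out one strict ranking of num_states states."""
    return math.lgamma(num_states + 1) / math.log(2)

def bits_for_world_of(num_entities, states_each=2):
    """Bits for a full ranking of every joint configuration of num_entities entities."""
    return bits_to_pick_ranking(states_each ** num_entities)

for n in (3, 10, 30, 100):
    print(f"{n:3d} entities -> {bits_for_world_of(n):.3g} bits")
```

Even with just 100 two-state entities, the count exceeds 10^31 bits, and real morally relevant beings have vastly more than two possible states each; readers can compare the figure with whatever estimate of the brain's storage capacity they find plausible.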

And yet this is just considering the present state of the world. When we consider future states, the problem of specifying ideal states and resolutions to hitherto unknown moral dilemmas only explodes in complexity, and indeed explodes exponentially as time progresses. It is simply a fact, and indeed quite an obvious one at that, that no single brain could possibly contain enough information to specify unique, or indeed just qualified, solutions to all moral dilemmas that will arise in the future. So what, then, could AI alignment relative to even a single brain possibly mean? How can we specify Y with respect to these future dilemmas when X itself does not specify solutions?

We can, of course, try to guess what a given human, or we ourselves, might say if confronted with a particular future moral dilemma and given knowledge about it, yet the problem is that our extrapolated guess is bound to be just that: a highly imperfect guess. For even a tiny bit of extra knowledge or experience can readily change a person’s view of a given moral dilemma to be the opposite of what it was prior to acquiring that knowledge (for instance, I myself switched from being a classical to a negative utilitarian based on a modest amount of information in the form of arguments I had not considered before). This high sensitivity to small changes in our brain implies that even a system with near-perfect information about some person’s present brain state would be forced to make a highly uncertain guess about what that person would actually prefer in a given moral dilemma. And the further ahead in time we go, and thus the further away from our familiar circumstances and context, the greater the uncertainty will be.

By analogy, consider the task of AI alignment with respect to our ancestors ten million years ago. What would their preferences have been with respect to, say, the future of space colonization? One may object that this is underdetermined because our ancestors could not conceive of this possibility, yet the same applies to us and things we cannot presently conceive of, such as alien states of consciousness. Our current preferences say about as little about the (dis)normativity of such states as the preferences of our ancestors ten million years ago said about space colonization.

A more tangible analogy might be to consider the level of confidence with which we, based on knowledge of your current brain state, can determine your dinner preferences twenty years from now with respect to dishes made from ingredients not yet invented — a preference that will likely be influenced by contingent, environmental factors found between now and then. Not with great confidence, it seems safe to say. And this point pertains not only to dinner preferences but also to the most consequential of choices. Our present preferences cannot realistically determine, with any considerable precision, what we would deem ideal in as yet unknown, realistic future scenarios. Thus, by extension, there can be no such thing as value extrapolation or preservation in anything but the vaguest sense. No human mind has ever contained, or indeed ever could contain, a set of preferences that evaluatively orders more than the tiniest sliver of (highly compressed versions of) the real-world states and choices an agent in our world is likely to face in the future. To think otherwise amounts to a strange Platonization of human preferences. We just do not have enough information in our heads to possess such fine-grained values.

The truth is that our preferences are not some fixed entity that determines future actions uniquely; they simply could not be that. Rather, our preferences are themselves interactive and adjustive in nature, changing in response to new experiences and new information we encounter. Thus, to say that we can “idealize” our present preferences so as to obtain answers to all realistic future moral dilemmas is rather like calling the evolution of our ancestors’ DNA toward human DNA a “DNA idealization”. In both cases, we find no hidden Deep Essences waiting to be purified; no information that points uniquely toward one particular solution in the face of all realistic future “problems”. All we find are physical systems that evolve contingently based on the inputs they receive.*

The bottom line of all this is not that it makes no sense to devote resources toward ensuring the safety of future machines. We can still meaningfully and cooperatively seek to instill rules and mechanisms in our machines and institutions that seem optimal in expectation given our respective, coarse-grained values. The conclusion here is just that 1) the rules instantiated cannot be the result of a universally shared human will or anything close; the closest thing possible would be rules that embody some compromise between people with strongly disagreeing values. And 2) such an instantiation of coarse-grained rules in fact comprises the upper bound of what we can expect to accomplish in this regard. Indeed, this is all we can expect with respect to future influence in general: rough and imprecise influence and guidance with the limited information we can possess and transmit. The idea of a future machine that will do exactly what we would want, and whose design therefore constitutes a lever for precise future control, is a pipe dream.

* Note that this account of our preferences is not inconsistent with value or moral realism. By analogy, consider human preferences and truth-seeking: humans are able to discover many truths about the universe, yet most of these truths are not hidden in, nor extrapolated from, our DNA or our preferences. Indeed, in many cases, we only discover these truths by actively transcending rather than “extrapolating” our immediate preferences (for comfortable and intuitive beliefs, say). The same could apply to the realm of value and morality.

November 2, 2018

Why the Many-Worlds Interpretation May Not Have Significant Ethical Implications

At first glance, it seems like the many-worlds interpretation of quantum mechanics (MWI) might have significant ethical implications. After all, MWI implies that there are vastly more sentient beings in the world than a naive classical view would suggest. And so it seems quite plausible, at least on the face of it, that ethical considerations pertaining to MWI should dominate everything else in expectation, even if we place only a small credence on this interpretation being true. In this post, I shall outline some reasons why this may in fact not be the case, at least with respect to two commonly supposed implications: 1) extreme caution, and 2) exponentially greater value over time. However, questions concerning the ethical implications of our best physical theories and their interpretations remain open and worth exploring.

Would Branching Worlds Imply Extreme Caution?

“I still recall vividly the shock I experienced on first encountering this multiworld concept [MWI]. The idea of 10^100 slightly imperfect copies of oneself all constantly splitting into further copies, which ultimately become unrecognizable, is not easy to reconcile with common sense.” (Bryce DeWitt)

This is a common way to introduce the implications of MWI, and it seems plausible that this radically different conception of reality, if true, should lead us to change our actions in significant ways. In particular, it may seem intuitive that it should lead us to act more cautiously, as David Pearce argues:

So one should always act “unnaturally” responsibly, driving one’s car not just slowly and cautiously, for instance, but ultracautiously. This is because one should aim to minimise the number of branches in which one injures anyone, even if leaving a trail of mayhem is, strictly speaking, unavoidable. If a motorist doesn’t leave a (low-density) trail of mayhem, then quantum mechanics is false. This systematic re-evaluation of ethically acceptable risk needs to be adopted world-wide.

Yet while this is intuitive, I would argue that it does not actually follow. For although it may be true that we should generally act much more cautiously than we do, this conclusion is not influenced by MWI, for various reasons.

First, if one is trying to reduce suffering, one should not “aim to minimise the number of branches in which one injures anyone”, but rather seek to reduce as much suffering as possible (in expectation) in the world. At an intuitive level, these may seem equivalent, yet they are not. The former is in fact impossible, as we are bound to injure others, even assuming the existence of just one world, whereas the latter — reducing the greatest amount of suffering possible throughout all branches — is possible by definition.

In particular, this argument for being highly cautious ignores the fact that such caution also carries risks — e.g. extreme caution might increase the probability that we will bring about more suffering by omission, by rendering our efforts to reduce suffering less effective. And these other risks may well be much larger, and thus result in the realization of a larger amount of suffering in a larger measure of branches. In other words, since it is far from clear that being ultracautious is the best way to reduce suffering in expectation throughout all branches, it is far from clear that we should practice such ultracaution in light of MWI.

Second, and quite relatedly, I would argue that, whether we live in many worlds or one, we should seek to minimize expected suffering regardless. For if we happened to exist in one world, a small probability of a very bad outcome would be equally worth avoiding, in expectation, as it would be if we happened to live in a quantum multiverse. Whether we do just one or an arbitrarily large number of “trials”, we should still pursue the same action: that which reduces the most suffering in expectation.
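The expected-value reasoning above can be sketched in a few lines. The actions, probabilities, and suffering values below are entirely made up for illustration, and `copies` stands for the number of identical agents or branches making the same choice. Because expected suffering is linear, multiplying by the number of copies rescales every action's score equally and so never changes which action is best; whether ultracaution comes out ahead depends only on the (here hypothetical) costs of omission it carries.

```python
# Hypothetical actions, each a list of (probability, suffering) outcomes.
# The numbers are made up purely for illustration.
ACTIONS = {
    "ordinary care": [(0.999, 0.0), (0.001, 1000.0)],
    "ultracaution": [(0.9999, 5.0), (0.0001, 1000.0)],  # fewer accidents, but a cost of omission
}

def expected_suffering(outcomes, copies=1):
    """Expected total suffering when the same choice is made `copies` times
    (by identical agents or across branches); copies is just a positive scale factor."""
    return copies * sum(p * s for p, s in outcomes)

def best_action(copies=1):
    """The action with the lowest expected suffering for a given number of copies."""
    return min(ACTIONS, key=lambda a: expected_suffering(ACTIONS[a], copies))

for copies in (1, 1000, 10**6):
    print(copies, best_action(copies))
```

With other made-up numbers, ultracaution could win instead; the point is only that the winner is the same for one world or many.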

Third, any argument of the kind made above concerning how all slightly probable outcomes will be realized can also be made by assuming that the multiverse of inflation exists. Thus, if one already believes that we live in a spatially infinite, or indeed “merely” extremely large universe, then the radical conclusions supposed to follow from MWI would already be implied by that belief alone (as we shall see below, many prominent proponents of MWI actually consider MWI not only equivalent but identical with the multiverse of inflation). And if one does not think a spatially very large universe should change how we act, then why think that a large, in many ways equivalent, quantum universe should? As argued above, it seems that no radical conclusions should follow either way. One world or many, we should still do what seems best in expectation.

Another way to arrive at the same conclusion is by embracing Stuart Armstrong’s Anthropic Decision Theory, according to which we, as altruists aiming to reduce suffering, should act the same way regardless of how many similar copies of us there may be in the world.

Would Branching Worlds Imply More Value Later?

Following Bryce DeWitt’s quote about rapidly splitting copies, one can reasonably wonder whether MWI implies that the net amount of value in the world, and hence the value of our actions’ impact on the world, is increasing exponentially over time. Indeed, if we naively interpret DeWitt’s claim to mean that the number of sentient beings that exists is multiplied by 10^100 just about every second, this would imply that the value of the very last second of the existence of sentient life should massively dominate everything else. If this interpretation of MWI is correct, it would have extremely significant ethical implications. Yet is it? It would seem not. Here is Max Tegmark:

Does the number of universes exponentially increase over time? The surprising answer is no. From the bird perspective, there is of course only one quantum universe. From the frog perspective, what matters is the number of universes that are distinguishable at a given instant—that is, the number of noticeably different Hubble volumes. Imagine moving planets to random new locations, imagine having married someone else, and so on. At the quantum level, there are 10 to the 10^118 universes with temperatures below 10^8 kelvins. That is a vast number, but a finite one.

From the frog perspective, the evolution of the wave function corresponds to a never-ending sliding from one of these 10 to the 10^118 states to another. Now you are in universe A, the one in which you are reading this sentence. Now you are in universe B, the one in which you are reading this other sentence. Put differently, universe B has an observer identical to one in universe A, except with an extra instant of memories.

Thus, it seems one should think about MWI in terms of an intertwining rope rather than a branching tree. A good way to gain intuition about it may be to think in terms of the multiverse of inflation instead. Indeed, according to prominent proponents of MWI, the many-worlds of quantum mechanics and the multiverse of inflation are not only closely related notions but indeed the same thing, cf. (Aguirre & Tegmark, 2010; Nomura, 2011; Bousso & Susskind, 2011). In that case, not only is thinking about copies of ourselves in worlds spatially far away from us a great way to gain intuition about MWI; it is the correct way to think about it.

And when we think about it in these terms, it suddenly all becomes quite straightforward and intuitive, at least relatively speaking. For on the inflationary model, there are copies of us in the universe located far away with whom we share our entire history from the big bang up until now. Yet as time progresses, and more different outcomes become possible, the distance to the copies of us that share our exact history becomes ever greater, at a rapid pace, cf. (Garriga & Vilenkin, 2001). Thus, there is indeed a rapid branching in a very real sense; it is just that this branching consists in departing from “nearby” copies of us who had been just like us up until this point. No new worlds are really added. The “other worlds” were always there, and then merely went their separate ways.

Hence, given the assumptions made here, the number of sentient beings in our world does not in fact increase exponentially in the way naively supposed above, unless one keeps on aggregating over an exponentially larger fraction of the space that already existed. (There is, however, an exponential increase in the number of new universes created by inflating regions of the universe, assuming inflationary theory is correct. Yet this process does not create an exponentially greater number of sentient beings from our point in space and time, i.e. Earth, 13.8 billion years after the big bang. Rather, these new worlds are all created “from scratch”.) In short, MWI does not appear to imply greater value later.
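For contrast, the stakes of the naive reading set aside above can be quantified with a small sketch. Assume, as that reading does, that the number of sentient beings is multiplied by a fixed factor every second; the hypothetical helper below uses Python's exact `fractions.Fraction` arithmetic to compute the share of all being-seconds that falls in the final second. With a factor of 10^100, that share differs from 1 by less than 10^-100, which is why the naive reading would make the last moment dominate everything else.

```python
from fractions import Fraction

def last_second_share(growth_per_second, seconds):
    """Share of all being-seconds that falls in the final second, under the naive reading
    on which the number of beings is multiplied by growth_per_second every second."""
    counts = [growth_per_second ** t for t in range(seconds)]
    return Fraction(counts[-1], sum(counts))

print(last_second_share(2, 10))                    # modest doubling per second
print(float(1 - last_second_share(10**100, 60)))   # DeWitt-style growth over one minute
```

On the rope-like picture described above, no such multiplication takes place, so this dominance of the final second does not arise.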

In sum, I have argued that we seem to have good reason to maintain something akin to one-world common sense in most of our decisions (decisions that might influence the creation of new universes would be an exception). This conclusion may, however, be strongly biased given that it comes from a brain that very much wants to preserve common sense.