Raymond Pompon's Blog, page 14

October 25, 2016

The future

I just got back from speaking at a conference in Palm Springs. On the plane ride, I read the most excellent book Superforecasting: The Art and Science of Prediction by Philip E. Tetlock and Dan Gardner. Great book if you're doing risk analysis or threat analysis work.

One thing that really got under my skin was a reprint of a letter from Donald Rumsfeld to the President - "Thoughts for the 2001 Quadrennial Defense Review". It's a PDF download, so here is the heart of the letter:

If you had been a security policy-maker in the world’s greatest power in 1900, you would have been a Brit, looking warily at your age-old enemy, France.

By 1910, you would be allied with France and your enemy would be Germany.

By 1920, World War I would have been fought and won, and you’d be engaged in a naval arms race with your erstwhile allies, the U.S. and Japan.

By 1930, naval arms limitation treaties were in effect, the Great Depression was underway, and the defense planning standard said “no war for ten years.”

Nine years later World War II had begun.

By 1950, Britain no longer was the world’s greatest power, the Atomic Age had dawned, and a “police action” was underway in Korea.

Ten years later the political focus was on the “missile gap,” the strategic paradigm was shifting from massive retaliation to flexible response, and few people had heard of Vietnam.

By 1970, the peak of our involvement in Vietnam had come and gone, we were beginning détente with the Soviets, and we were anointing the Shah as our protégé in the Gulf region.

By 1980, the Soviets were in Afghanistan, Iran was in the throes of revolution, there was talk of our “hollow forces” and a “window of vulnerability,” and the U.S. was the greatest creditor nation the world had ever seen.

By 1990, the Soviet Union was within a year of dissolution, American forces in the Desert were on the verge of showing they were anything but hollow, the U.S. had become the greatest debtor nation the world had ever known, and almost no one had heard of the internet.

Ten years later, Warsaw was the capital of a NATO nation, asymmetric threats transcended geography, and the parallel revolutions of information, biotechnology, robotics, nanotechnology, and high density energy sources foreshadowed changes almost beyond forecasting.

All of which is to say that I’m not sure what 2010 will look like, but I’m sure that it will be very little like we expect, so we should plan accordingly.

This really got me thinking about where we will be in warfare, especially considering the recent DNS DDoS attacks and a possible cyber cold war.

Published on October 25, 2016 12:11

October 17, 2016

Third parties and SSAE 18

My new book talks about building a security program that can pass a number of audits, including the SSAE 16. Now comes news of a new standard from the AICPA Auditing Standards Board (ASB) called the SSAE 18 that will replace SSAE 16 later in 2017.

Does that mean you need to redo everything? No. I wrote this book with the idea that technology, compliance, and threats will evolve over time. The advice is designed to be timeless not timely.

There are some changes in how the auditors will conduct and write their opinion, but really only one thing affects the audited organization: increased scrutiny of the performance of subservice organizations. This is another way of talking about third party security. I have an entire chapter covering that subject in the book.

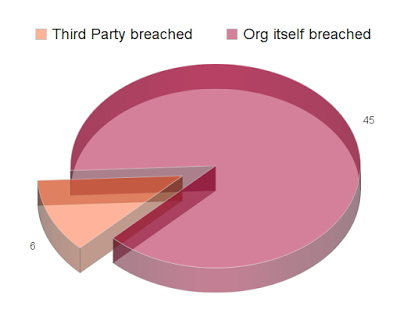

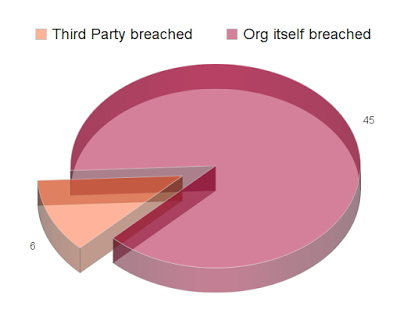

So what about third party security? Well, looking at the past three months of California Attorney General breach records, I get the following:

Out of the 51 incidents examined, 6 were directly attributable to a third party's security. Is 12% significant? Sure, maybe not a top risk but it's something to worry about.

So, what is going on with third party security? Protiviti did a Vendor Risk Management Benchmark Study in 2015 and concluded that "Third party risk management is immature."

Furthermore, they went on to comment that out of all the third party risk management programs going on, the most mature ones are within the financial services organizations. Good to know, which leads us to ask: how well are financial services organizations managing third party risk?

Well, the New York State Department of Financial Services issued an "Update on Cyber Security in the Banking Sector: Third Party Service Providers." In this report, they noted that fewer than half of examined financial service companies do on-site assessments, and that there is a lot of variation within the programs themselves.

Having been on the pointy end of these assessments for nearly a decade, I concur with these findings. I've seen banks assess vendor security by a large variety of methods including:

- Questionnaires with simple yes/no questions
- Open-ended questionnaires requiring narrative answers
- Questionnaires in Word docs, spreadsheets, and PDFs of varying lengths (1 to 30 pages)
- Shared assessment forms
- Online questionnaire/GRC tools hosted as SaaS
- On-site interviews (with auditors of varying expertise or lack thereof)
- Software scans of varying types
- Internet vulnerability scanning
- Third-party auditors (hiring a consulting or audit firm to do the assessment)
- Subscription-based risk reputation scoring

They all seem to have varying strengths (accuracy, low cost, speed) and weaknesses (lack of accuracy, difficulty). For questionnaires, the actual questions always seem to revolve around the standard 27k2 control sets. Maybe these are sufficient, but this approach does fall victim to best practicism.

Whatever third party security assessment you use, doing something is better than doing nothing. And if you're going to pursue meeting the SSAE 18 certification, you should invest in a good method.

Published on October 17, 2016 09:00

October 10, 2016

Assume breach as a foundation of a security program

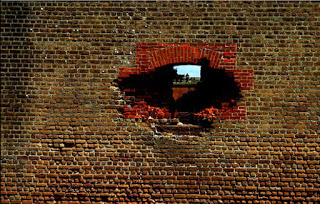

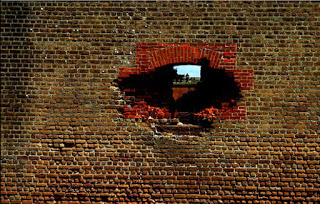

This picture below was excluded from my new book, IT Security Risk Control Management: An Audit Preparation Plan.

The publishers thought it wouldn't look very good in gray scale print. The story that goes with it is still there in Chapter 2, Assume Breach.

The concept of Assume Breach has been with us for over twenty years and I've been blogging here about it since 2008.

Assume Breach simply means don't count on your security defenses to keep the bad guys out. This picture is of the wall of a supposedly impregnable fortress after its first real test against new technology.

Quinn Norton also coined a great corollary, called Norton's Law, which states that all data, over time, approaches deleted, or public.

In the book, the assume breach concept forms the foundation of a security program. What does this mean for defenders? It means that if you're going to be breached, you need to know what can be sacrificed and what must be protected at all costs. That implies you understand your organization, its data flows, and what is truly important for survival. It also means you need to have a clear idea of the threats and vulnerabilities facing you. Lastly, assume breach means being prepared to adequately respond to incidents, survive them, and grow stronger because of it.

It's the opposite of the common rookie thinking of "That'll never happen in a million years!" or "Why would anyone do that?" Instead, you think, "When the inevitable happens, what will the damage look like and how will we react?" Assume breach forces you to focus on what matters and prioritize accordingly. Not a bad way to build a security program.

Published on October 10, 2016 07:30

October 1, 2016

The "softside" of Security can be the hardest

I just watched Leigh Honeywell's talk on "Building Secure Cultures" on the YouTubez. (BTW, it is a must watch for anyone remotely involved in security) I've been a big fan of Leigh's work and she lays down a lot of practical and effective advice. Her talk also struck a chord with me and my recent book on how to build a successful security program to pass audit.

For one, I felt great that she was also emphasizing empathy and coaching when delivering security advice. She, like me, has seen how counterproductive the elitism, abrasiveness, and condescension (and just plain rudeness) that have somehow become associated with much of the security industry can be. I especially liked how she called out "feigning surprise", an insulting practice I too have been guilty of.

"What? You didn't patch it?"

Her talk raises a powerful point: feigning surprise serves no useful purpose beyond belittling the person seeking advice. Remember, we want people to bring their security problems to us and report suspicious things.

Those of you who have read my book may have noticed a running theme of working to see things from other people's perspectives. Yes, it's real work. In fact, Leigh touches on that in her talk as well (if you haven't watched it, you should). I've said it before: the hard part of security is sometimes the soft parts. By that I mean managing our feelings. Sometimes we have to suppress our fear and anger and present a positive face despite what may be a valid emotional response. This is work -- hard work. There's even a term for it - Emotional Labor.

Working in security, especially if you're trying to actively improve things in an organization, takes emotional labor. Many of us are geeks, having worked our way up from the techie trenches to meet the challenge of security work. The term geek itself should tell you something about our innate people skills and our limits on managing our external personas. Nevertheless, these soft skills are force multipliers we can leverage to get effective security work done. I've woven practical advice on how to do this into a number of chapters. It's nice to see it called out on its own as a critical success factor in security work. Empathy is powerful in designing security solutions - how would a non-security tech react to what you think is obvious? I'm happy to see work in this area starting to blossom.

Being able to modulate our own external outputs is critical not just in social engineering, but in being heard and acknowledged by others. Yes, listening to the other person before delivering your advice levels up your ability to create meaningful change.

As I said, none of this is easy. But it's definitely worth checking out.

Published on October 01, 2016 16:23

September 26, 2016

IT Security Risk Control Management: An Audit Preparation Plan - PUBLISHED!

My new book is officially published!

It is available in both electronic and paper form.

After many months of hard work, I am so happy to see the fruits of my labor.

Published on September 26, 2016 19:15

July 27, 2016

IT Security Risk Control Management: An Audit Preparation Plan

I've been quiet for a long, long while. It hasn't been because I didn't have anything to say. On the contrary, I've been pouring it all into my soon-to-be-released book, IT Security Risk Control Management: An Audit Preparation Plan. Before you ask, I didn't come up with the title, the publisher did. The book is aimed at newly minted security professionals or those wanting to step into the security role. It covers how to build a security program from scratch, do the risk analysis, pick controls, implement the controls (in such a manner that they actually work), and then be able to pass an audit. I specifically chose the SSAE-16/ISAE-3402 (SOCs 1,2,3), PCI DSS, and ISO 27001 as my audit candidates as they are the most common globally. It'll be out in early October, but you can pre-order now. I'll be writing more as we get closer to the publication date.

Published on July 27, 2016 03:00

September 21, 2015

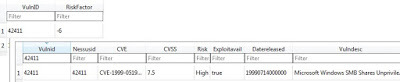

Some updates to vuln visualization

A while ago, I posted about an internal tool I created, Cestus, which I use to help score my vulnerabilities in my environment. Since then, I've made a few tweaks to the tool.

Specifically, I've added:

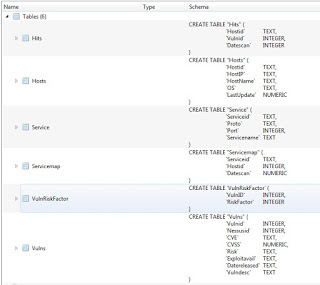

- Risk-based adjustment based on host importance. Some hosts are obviously more important than others. Perimeter-facing mission-critical boxes score individually higher than internal utility servers behind 3 layers of firewalls. To do this, I had to modify the database and add new fields. Luckily with SQLite, this was a snap.
- Scoring based on type of vulnerability service. Vulnerabilities that require local access (such as a browser vuln) score lower on servers than external service vulnerabilities do. Bonus risk points for an external service discovered on what should be a client box... laptops should not have listening FTP servers on them!
- New report on total number of vulnerabilities and total risk score per host. Handy for ~~shaming~~ informative reports to asset owners.
- New report on total number of listening services per host. Good for seeing which boxes are leaving large footprints on your networks, and for singling out unusual-looking devices. Both have proved very "interesting" when reviewed. Also good to compare to asset master lists to see if you missed scanning anything.
- New report on vulnerabilities based on keyword name in description. This lets me create useful pie charts on which vendors are causing the most vulnerabilities in our environment.

So far these reports haven't been that revelatory:

That's all I've got for now. I'm still working on integrating this into our on-going threat and IDS data streams.
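To make the first three additions concrete, here is a minimal sketch of how host importance and service type might adjust a score, and how a per-host total could be rolled up. It is not the Cestus code: the host classes, the multipliers, the bonus value, and the `Finding` fields are all hypothetical placeholders chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical weights; the post does not give the real values.
HOST_IMPORTANCE_MULTIPLIER = {
    "perimeter-critical": 1.5,
    "internal-server": 1.0,
    "internal-utility": 0.75,
    "client": 1.0,
}
LOCAL_ACCESS_ON_SERVER_FACTOR = 0.5  # e.g. a browser vuln found on a server
SERVICE_ON_CLIENT_BONUS = 3.0        # e.g. an FTP listener found on a laptop


@dataclass
class Finding:
    host: str
    host_class: str         # key into HOST_IMPORTANCE_MULTIPLIER
    is_client: bool         # True for laptops and desktops
    external_service: bool  # True if the vuln is in a listening network service
    base_score: float       # score before environment adjustments


def adjusted_score(f: Finding) -> float:
    """Apply service-type and host-importance adjustments to one finding."""
    score = f.base_score
    if f.external_service:
        if f.is_client:
            # Client boxes should not be running network services at all.
            score += SERVICE_ON_CLIENT_BONUS
    elif not f.is_client:
        # Local-access vulnerabilities matter less on servers.
        score *= LOCAL_ACCESS_ON_SERVER_FACTOR
    return score * HOST_IMPORTANCE_MULTIPLIER.get(f.host_class, 1.0)


def totals_per_host(findings):
    """Return {host: (finding_count, total_risk)} for a per-host report."""
    totals = {}
    for f in findings:
        count, risk = totals.get(f.host, (0, 0.0))
        totals[f.host] = (count + 1, risk + adjusted_score(f))
    return totals
```

Sorting the output of `totals_per_host` by total risk gives the kind of per-host ranking described above. The weights would need tuning against a real environment.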

Published on September 21, 2015 12:16

September 11, 2015

Siracon 2015

Excited to be presenting at this year's SiraCon in Detroit

My talk will be on Third Party Risk Assessment Exposed

You hear things like "The majority of breaches occur as the result of third parties." You see a lot of surveys and read a lot of "best practices" around third party security. But what is actually happening in third-party risk assessment? It’s hard enough to measure the risk of your own organization, so how can you quickly measure an external organization? Banks are required to do this, but specific methods aren't defined. So what are they doing? We’ll examine data from hundreds of external assessments in the financial sector and compare this to actual breach data. We'll look at questions such as: What are the top questions asked by more than half of the assessors? What questions are rarely asked? What factors drive assessments? What important questions are missed? We’ll also dig into the top assessment standards (SOC 1-2-3, ISO27k, Shared Assessments) and see what they’re accomplishing.

Looks to be an awesome lineup this year. Honored to be a part of it.

Published on September 11, 2015 12:40

June 25, 2015

DIY Security tools - Cestus: Risk Modelling on Vulnerability Data

I've spoken in the past about prioritized patching and the operational constraints. Assuming you actually want to do some prioritization, how do you go about it?

Continuing in the data-driven security realm, I've been pushing my vulnerability management tools to their limit. One of the tools we use is Nessus for internal vulnerability scanning. I've been a Nessus fan since Y2K days and still love it now. One problem with all vulnerability scanners (and reports) is that their risk ranking tools are clunky, sometimes inappropriate, and occasionally over-inflated.

Most of them use CVSS, which is a nice standard though quite limited. And do remember, "Vulnerability != risk." As you may have read before, I do a wide variety of types of internal vulnerability scanning and then synthesize the results.

What I want to do is suck up and analyze all this scanning data, apply localized relevant data, and then put the result through a risk model of my own choosing. There is really no such beast, though some commercial services are very close and becoming quite useful. And this open source tool is also pretty good.

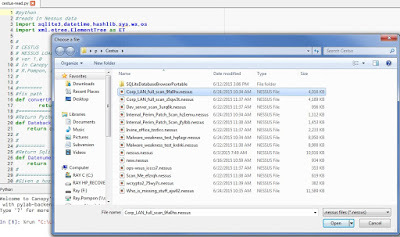

So in the spirit of "Make your own security tools", I started working on a risk scoring tool to use with Nessus and some of my other in-house scanning tools.

I wish I could share code with you, but this is developed on my employer's dime and is used internally... so no. The good news, it wasn't hard to do and I think I can walk you through the algorithm and the heavy lifting.

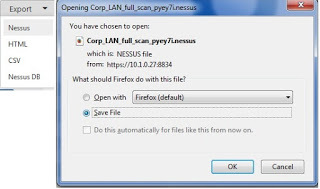

Let's start with the Nessus data itself. You can export everything you need in a handy XML file which can be read natively by a variety of tools.

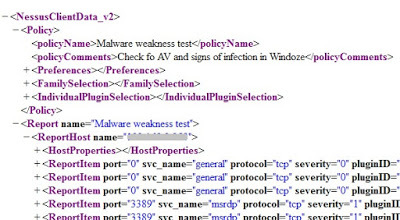

My tool-du-jour is Python, so that's what I'm using. The file itself is broken into two major pieces: information about the scan and discovered vulnerabilities. I'll want both.

The information about the scan gives me when the scan was done (so I can save and compare subsequent scans) as well as which vulnerabilities were tested. This is critical because I can see what hosts were tested for what. Then I can mark off a vulnerability that was previously found when it no longer shows up (it was fixed!). I can also get data about the vulnerability itself, such as when it was discovered. This is useful in my risk model because we've seen that old vulnerabilities are worse than new ones. I also get from Nessus whether a public exploit is available for that vulnerability... and that info is in the exported XML for the scooping. This is extremely valuable for risk modelling, as these kinds of vulnerabilities have been shown to be far more worrisome.
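As a rough illustration of the scooping, the sketch below pulls the host, scan time, CVSS score, exploit flag, and publication date out of an export using Python's standard `xml.etree.ElementTree`. The element and attribute names (`ReportHost`, `ReportItem`, `HOST_END`, `cvss_base_score`, `exploit_available`, `vuln_publication_date`) are assumptions about the `.nessus` v2 export layout and should be checked against a real file. The embedded sample data is invented, and this is not the actual Cestus loader.

```python
import xml.etree.ElementTree as ET

# Tiny made-up sample shaped like an assumed .nessus v2 export.
SAMPLE = """<NessusClientData_v2>
  <Report name="weekly-internal">
    <ReportHost name="10.0.0.5">
      <HostProperties>
        <tag name="HOST_END">Thu Jun 25 11:22:00 2015</tag>
      </HostProperties>
      <ReportItem port="445" svc_name="cifs" protocol="tcp" severity="3"
                  pluginID="99999" pluginName="Example SMB finding">
        <cvss_base_score>7.5</cvss_base_score>
        <exploit_available>true</exploit_available>
        <vuln_publication_date>2012/03/13</vuln_publication_date>
      </ReportItem>
      <ReportItem port="0" svc_name="general" protocol="tcp" severity="0"
                  pluginID="88888" pluginName="Example informational finding"/>
    </ReportHost>
  </Report>
</NessusClientData_v2>"""


def parse_findings(xml_text):
    """Return one dict per ReportItem, tagged with its host and scan time."""
    root = ET.fromstring(xml_text)
    findings = []
    for host in root.iter("ReportHost"):
        # The scan end time lives in a <tag name="HOST_END"> host property.
        scan_end = None
        for tag in host.iter("tag"):
            if tag.get("name") == "HOST_END":
                scan_end = tag.text
        for item in host.iter("ReportItem"):
            cvss = item.findtext("cvss_base_score")  # None when absent
            findings.append({
                "host": host.get("name"),
                "scan_end": scan_end,
                "port": int(item.get("port")),
                "service": item.get("svc_name"),
                "plugin_id": item.get("pluginID"),
                "name": item.get("pluginName"),
                "cvss": float(cvss) if cvss is not None else None,
                "exploit_available": item.findtext("exploit_available") == "true",
                "published": item.findtext("vuln_publication_date"),
            })
    return findings
```

This only covers the discovered-vulnerabilities half of the file. Reading which plugins were actually run, so that a missing finding can be treated as fixed rather than untested, would need the scan policy section as well.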

After I pull in the data, I need to store it since I'm looking at synthesizing multiple scans from different sources. So I shove all of this into a local database. I've chosen SQLite just because I've used it before and it's easy. As a former database developer, I know a little bit about data normalization. So here's the structure I'm using for now.
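For readers who want a starting point, a normalized layout along these lines would support storing multiple scans and comparing them. This is a hypothetical schema using Python's built-in `sqlite3` module, not the actual Cestus structure; every table and column name here is an assumption.

```python
import sqlite3

# Hypothetical normalized schema: scans, hosts, and vulnerabilities are
# stored once, and findings link them together.
SCHEMA = """
CREATE TABLE IF NOT EXISTS scans (
    scan_id    INTEGER PRIMARY KEY,
    source     TEXT NOT NULL,      -- which scanner produced the data
    scanned_at TEXT NOT NULL       -- ISO 8601 timestamp
);
CREATE TABLE IF NOT EXISTS hosts (
    host_id INTEGER PRIMARY KEY,
    address TEXT NOT NULL UNIQUE
);
CREATE TABLE IF NOT EXISTS vulns (
    plugin_id         TEXT PRIMARY KEY,
    name              TEXT,
    cvss              REAL,
    published         TEXT,
    exploit_available INTEGER NOT NULL DEFAULT 0
);
CREATE TABLE IF NOT EXISTS findings (
    scan_id   INTEGER NOT NULL REFERENCES scans(scan_id),
    host_id   INTEGER NOT NULL REFERENCES hosts(host_id),
    plugin_id TEXT    NOT NULL REFERENCES vulns(plugin_id),
    port      INTEGER NOT NULL,
    PRIMARY KEY (scan_id, host_id, plugin_id, port)
);
"""


def open_db(path=":memory:"):
    """Open (or create) the database and make sure the tables exist."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def fixed_since(conn, old_scan, new_scan):
    """Findings present in old_scan that no longer appear in new_scan."""
    rows = conn.execute(
        """SELECT h.address, f.plugin_id, f.port
           FROM findings f JOIN hosts h ON h.host_id = f.host_id
           WHERE f.scan_id = ?
             AND NOT EXISTS (
                 SELECT 1 FROM findings g
                 WHERE g.scan_id = ? AND g.host_id = f.host_id
                   AND g.plugin_id = f.plugin_id AND g.port = f.port)
           ORDER BY h.address, f.plugin_id""",
        (old_scan, new_scan),
    )
    return rows.fetchall()
```

Note that `fixed_since` assumes both scans tested the same hosts for the same plugins. A fuller version would also record what each scan tested before declaring something fixed.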

The way I wrote my tool was to break it into two parts: loading Nessus data and reporting on it. Here's how I load my Nessus XML files (with some hints on the libraries I'm using). So basically, run it and load up all the Nessus scan files you want. They're processed and put into the database.

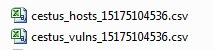

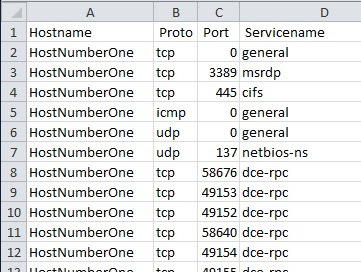

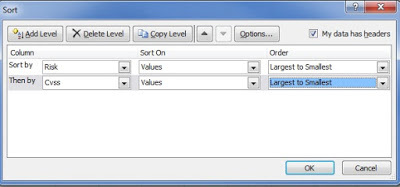

The second piece is to run risk modelling and generate reports in CSV format, suitable for spreadsheeting. Here's what pops out:

Oh yeah, you can see I'm calling the thing Cestus. Anyway, I produce two reports. The first is a host/services report, which is a nice running inventory of hosts and services. This has obvious utility beyond security, and since I have all the info, why not grab it and analyze it?

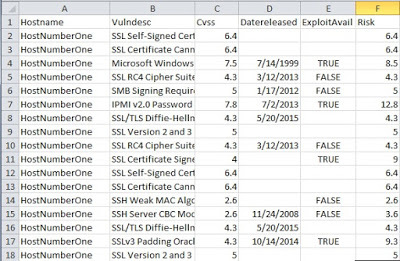

Second report is the meat: my synthesized list of vulnerabilities and risk scoring.

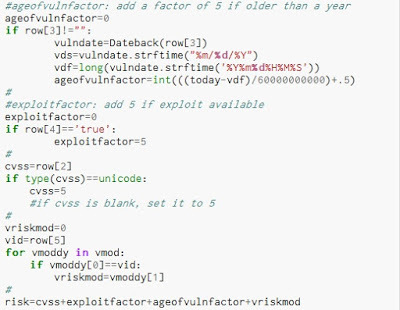

Now, how do I calculate the "risk"? Well, that's the beauty of doing this myself. Answer: any way I want. Let's build an arbitrary risk model for scoring vulnerabilities to illustrate:

Alright, we'll use CVSS as the base score. If there's no CVSS score for a particular discovered vulnerability, then just pick 5 (right in the middle). Now add to it based on the vulnerability's age.... the older, the higher (again arbitrary weights at this point). Add 5 more points if there's a known exploit. I also have another table of vulnerabilities with their own weight factors to tweak this.

I use this to add or subtract from the risk score based on what I may know about a particular vulnerability in our environment. For example, an open SMB share rates high, but I'm doing a credentialed scan, so the share is actually locked down. Drop the score by... I dunno... 6. Add this up, and now we've got some risk scores, which I can quickly sort on when I open the CSV in Excel.
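The arbitrary model described above can be sketched in a few lines of Python. The default of 5, the +5 exploit bonus, and the -6 SMB adjustment come from the text; the age weight (one point per whole year, capped at 5), the function name `risk_score`, and the `smb-open-share` key are assumptions picked only to make the example concrete, not the weights Cestus actually uses.

```python
from datetime import date

DEFAULT_CVSS = 5.0         # from the post: pick 5 when there is no CVSS score
EXPLOIT_BONUS = 5.0        # from the post: add 5 if there's a known exploit
AGE_POINTS_PER_YEAR = 1.0  # assumption: the post only says "the older, the higher"
MAX_AGE_POINTS = 5.0       # assumption: cap so very old findings don't dominate

# Hypothetical per-vulnerability tweak table, keyed by a made-up identifier.
ADJUSTMENTS = {"smb-open-share": -6.0}


def risk_score(cvss, published, exploit_available, vuln_id, today,
               adjustments=ADJUSTMENTS):
    """Combine CVSS, age, exploit availability and local knowledge into one score."""
    score = cvss if cvss is not None else DEFAULT_CVSS
    if published is not None:
        whole_years = max((today - published).days, 0) // 365
        score += min(whole_years * AGE_POINTS_PER_YEAR, MAX_AGE_POINTS)
    if exploit_available:
        score += EXPLOIT_BONUS
    score += adjustments.get(vuln_id, 0.0)  # environment-specific add or subtract
    return score


# Usage: score a few made-up findings and sort them highest risk first.
today = date(2015, 6, 25)
findings = [
    ("smb-open-share", 7.5, None, True),
    ("old-unscored-bug", None, date(2013, 6, 25), False),
]
ranked = sorted(
    ((vid, risk_score(cvss, pub, exploit, vid, today))
     for vid, cvss, pub, exploit in findings),
    key=lambda pair: pair[1],
    reverse=True,
)
```

Keeping the weights as named constants makes it easy to change them later when the model gets tested against the real world.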

This is a nice quick way of looking at things. As you may have guessed, this is only step 1 (actually step 2) in my plan. The next step is to link this to my intrusion dashboard so I can have company-specific threat intelligence feeding my risk models. I would also like to add more external vulnerability scanning sources and feeds into my risk modelling calculator. Of course, I'll want to improve and test my risk model against the real world to see how it performs. When I get that all working, maybe I'll blog about it.

Published on June 25, 2015 11:22

May 20, 2015

Data Driven Security, Part 3 - Finally some answers!

I am continuing my exploration inspired by Data Driven Security.

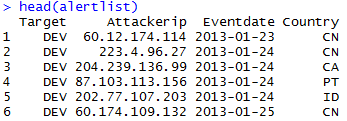

In Part One, I imported some data on SSH attacks from Outlook using AWK to get it into R.

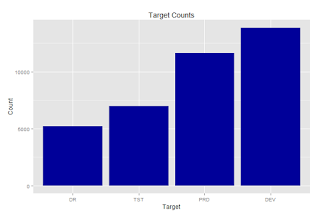

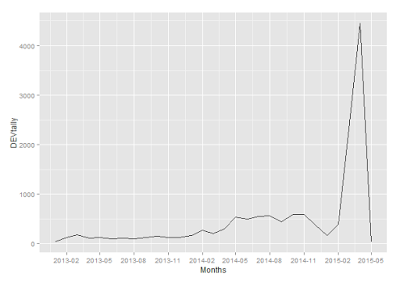

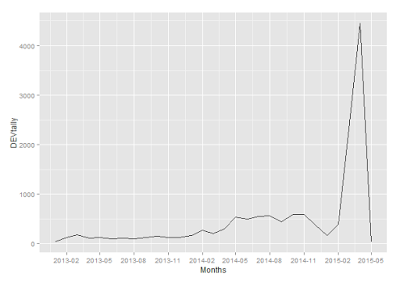

In Part Two, I converted some basic numbers into graphs, which helped visualize some strange items. The final graph was most interesting:

It was strange that there were twice as many attacks at the Dev SSH service as the DR service. What is going on here?

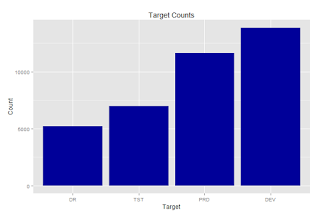

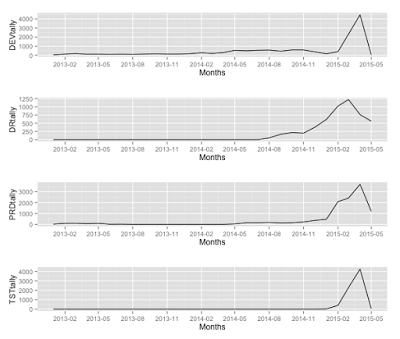

Well, we've got over 37,000 entries in here over a couple of years. Let's break them out and get monthly totals based on target. First, I'm going to convert those date entries to a number I can add up.

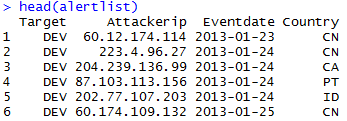

Remember the data looks like this:

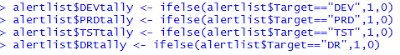

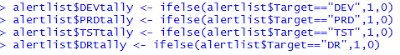

Each entry has a date and the target location that was hit. So I'm going to use an ifelse to add a new column with a "1" in it for every matching target.

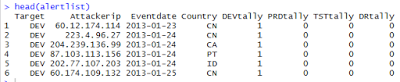

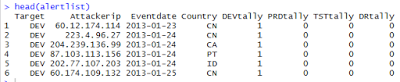

So now I have this:

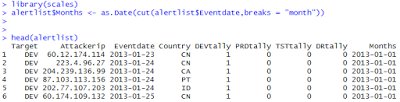

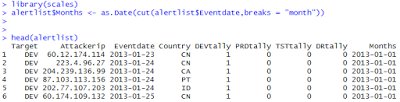

Now I add another vector to the dataframe, breaking dates down by month.

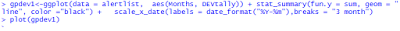

There might be an easier way to do this, but I'm still an R noob and this seemed the easiest way forward for me. Anyway, I can plot these and compare.
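The indicator-column and month-bucket steps above boil down to counting entries per month and per target. Here is that same idea as a small Python sketch rather than the R used in this post; the function names and the `YYYY-MM-DD` date format are assumptions, since the real data layout only appears in the screenshots.

```python
from collections import Counter
from datetime import datetime


def monthly_totals(entries, date_format="%Y-%m-%d"):
    """Count entries per (month, target); months are 'YYYY-MM' strings.

    `entries` is an iterable of (date_string, target) pairs. The date format
    is an assumption and should be changed to match the real export.
    """
    counts = Counter()
    for when, target in entries:
        month = datetime.strptime(when, date_format).strftime("%Y-%m")
        counts[(month, target)] += 1
    return counts


def series_for(counts, target):
    """Return [(month, total), ...] for one target over every month seen.

    Months with no hits for this target come back as 0, so a service that
    was not yet live shows up as a flat line at zero.
    """
    months = sorted({month for month, _ in counts})
    return [(month, counts[(month, target)]) for month in months]


# Usage with made-up entries:
entries = [
    ("2014-01-03", "Dev"),
    ("2014-01-20", "Dev"),
    ("2014-07-01", "DR"),
    ("2014-07-02", "Dev"),
]
counts = monthly_totals(entries)
dev_series = series_for(counts, "Dev")
dr_series = series_for(counts, "DR")
```

Each series can then be handed to whatever plotting tool is convenient.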

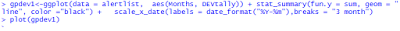

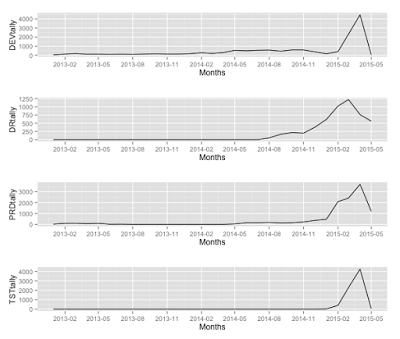

You can do this for all four and compare:

This gives me two insights. First, different SSH systems began receiving alerts at different times. Basically, there are twice as many Dev alerts as DR alerts simply because the DR system didn't generate alarms until the middle of 2014. Same with the Tst SSH system. So there is a long tail of Dev alarms skewing the data. In fact, the Dev system was the first SSH system to go live. Pretty much any system on the Internet is going to receive some alarms, so a zero in the graph means that service was not live yet.

Second, we can confirm and graphically show what IT originally asked about back in Part 1. To refresh your memory, I said: "The IT Operations team complained about the rampup of SSH attacks recently."

Here we can visually see that ramp up, beginning at the end of 2014 and spiking sometime in the first quarter.

The next step in our analysis would be to see who was in the spike: are these new attackers? Where are they from? The traffic to the targets seems to peak at different times, so there might be something worth investigating there.

And why did the traffic die down? Were the IPs associated with a major botnet that got taken down sometime in April 2015? A quick Googling says yes: "A criminal group whose actions have at times been responsible for one-third of the Internet’s SSH traffic—most of it in the form of SSH brute force attacks—has been cut off from a portion of the Internet."

I hope this series was as informative to you as it was to me. I was pleasantly surprised to find some tangible answers on current threats from simply graphing our intrusion data. Now it's your turn!

Published on May 20, 2015 10:51