Keith McCormick's Blog

July 7, 2020

IADSS Data Science Training and Education Survey

I have been working with https://www.iadss.org/ since attending their excellent workshop at KDD 2019 in Anchorage, Alaska. They have recently initiated data collection on data science education and training. Please participate.

Have you recently attended a #datascience or #analytics degree program, #bootcamp or online #certification? We need your help! Share your experience of data science training for a chance to win a data science prize: the Data Visualization course by DATAcated Academy, thanks to Kate Strachnyi ♕; Essential Elements of Predictive Analytics and Data Mining by Keith McCormick at LinkedIn Learning; and mentorship sessions by Kirk Borne and Usama Fayyad. Results will be shared at a #kdd conference workshop organized by #iadss and co-chaired by Usama Fayyad and Xiao-Li Meng of #Harvard Data Science Review.

Please complete survey here: https://lnkd.in/etXs3ck.

Level Up with Lori on LinkedIn Live

One observation Lori Silverman and I have shared for some time here on LinkedIn, in data science and data analytics alike, is the lack of reference and attention paid to statistics and statistical analysis grounded in theory.

Lori was blessed to learn the fundamentals of statistics from Donald Wheeler, Howard Gitlow, PhD, Dr. Deming, and George Box, PhD, as well as many statistician colleagues. I’ll be joining Lori on this week’s #linkedinlive show to discuss this glaring gap.

Please join us:

For those who’d like to put a placeholder on their calendar, here are calendar links (they include the YouTube streaming page link; you can also join through LinkedIn if you get a real-time notification on Thursday):

* Apple – https://bit.ly/31MA4zx

* Google – https://bit.ly/3f4sy6X

* Outlook – https://bit.ly/3e1PrGN

June 25, 2020

Keynote for Institute of Product Leadership

About a month ago, I presented a talk on Data Science careers, specifically Data Science management, for the Institute of Product Leadership. They have kindly made the video available.

February 26, 2020

New course for Executives

I’ve found my collaboration with LinkedIn Learning to be very rewarding, but I’m especially proud of this course. My Essential Elements course has hundreds of thousands of views, and this course, Predictive Analytics Essential Training for Executives, is its sibling course for senior management. I hope you enjoy it.

Course announcement for Advanced IBM SPSS Modeler Training in NYC

I’m going to offer an advanced three-day Modeler training for a very small number of experienced users in New York City in July. In addition to a very full schedule of lecture and discussion each day, there will be more than 3 hours a day of “office hours”-style Q&A. If you are looking for advanced training, please message me privately and I will send you the detailed information.

For this training to be a good fit, you should be a power user and have some familiarity with the kind of content we cover in the Modeler Cookbook. If that describes you, I’d be excited to have you join us, but I’m limiting the training to just five participants.

You should already have taken something like Predictive Modeling for Categorical Targets Using IBM SPSS Modeler and/or Predictive Modeling for Continuous Targets Using IBM SPSS Modeler, or have the equivalent experience with predictive models in Modeler.

February 24, 2020

Some reflections on CRISP-DM

Here is a copy of the original CRISP-DM document.

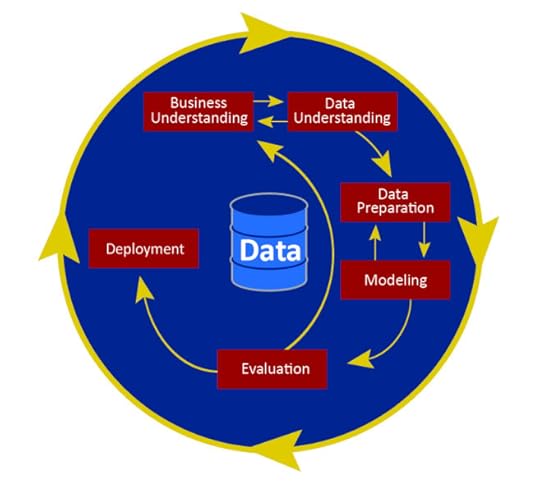

And here is a version of the famous circular diagram. Please be careful to refer to the task level and not just the phase level. The model is rich and very useful if you use all of it. There is a danger in simply mapping one’s expectations onto the circular diagram: CRISP-DM is much more than a diagram. Read the full document, and then use the diagram for reference.

Some reflections on CRISP-DM’s six phases

Business Understanding is a group activity. It takes place in meeting rooms, not alone at one’s laptop. The goal is to formalize the business problem and transform it into a form that can be answered with data and with data mining techniques. Data Mining is fundamentally about addressing problems, and Data Mining projects cannot ultimately succeed unless sufficient attention is given to this first phase. A common outcome of Business Understanding is a carefully defined Target variable.

The Tasks of this phase are:

Determine Business Objectives

Assess Situation

Determine Data Mining Goals

Produce Project Plan

Data Understanding, like Business Understanding, is not a solitary activity.

A common form it takes is receiving data, exploring it, and then meeting with Subject Matter Experts (SMEs). It is not the SMEs’ job to pick winning or losing variables, but rather to ensure that all data is represented and that the data miner thoroughly understands it. Once some exploration is done, the data is discussed in light of the business problem, and sometimes Business Understanding has to be revisited. Deployment should be discussed during both the Business Understanding phase and this phase: you need to understand how the data will flow into the model and back out to the business, where it can provide value. Be careful not to draw too many conclusions at this stage, because the data has not yet been fully integrated and data augmentation and cleaning have not occurred.

The tasks of this phase are:

Collect initial data

Describe data

Explore data

Verify data quality
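The Describe data and Verify data quality tasks usually start with a simple profile of every field, which then becomes the agenda for the SME meeting. Here is a minimal Python sketch, assuming the data arrives as a list of dicts with None marking a missing value; the `profile` function and the sample records are hypothetical illustrations, not part of CRISP-DM.

```python
from collections import Counter

def profile(records):
    """Summarize each field: missing count, distinct values, most common value.

    Assumes each record is a dict and None (or an absent key) means missing.
    """
    fields = sorted({key for row in records for key in row})
    summary = {}
    for field in fields:
        values = [row.get(field) for row in records]
        present = [v for v in values if v is not None]
        counts = Counter(present)
        summary[field] = {
            "missing": len(values) - len(present),
            "distinct": len(counts),
            "most_common": counts.most_common(1)[0][0] if counts else None,
        }
    return summary

# Hypothetical customer extract, for illustration only.
customers = [
    {"id": 1, "region": "East", "tenure_months": 14},
    {"id": 2, "region": "West", "tenure_months": None},
    {"id": 3, "region": "East", "tenure_months": 3},
    {"id": 4, "region": None, "tenure_months": 27},
]

for field, stats in profile(customers).items():
    print(field, stats)
```

A profile like this only raises questions (why is region missing for customer 4?); answering them is the collaborative part of the phase.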

It is frequently said that Data Preparation is 70-90% of the time spent on a Data Mining project.

Those who cite a figure as high as 90% probably mean that Data Understanding and Data Preparation together take that much time, and even then the high end of that range is too high. However, the notion that Data Preparation is the biggest threat to the schedule is true: it is the biggest variable in estimating project length. Every project differs, but if a project takes longer than expected, Data Preparation is virtually always to blame. Tom Khabaza claims that this phase ALWAYS takes more than 50% of the project, even when a data warehouse is in place and even when there is software support to facilitate the process. This is because, when time allows, more data preparation improves the effectiveness of the modeling phase. If ample time exists, it is often wiser to spend extra time on this phase than on any other. It is also true that many practitioners underestimate the time this phase requires, which contributes to its reputation as time-consuming. Corners cut in this phase tend to make the other phases fall behind schedule.

In short, there is no way around it: this is a time-consuming phase. CRISP-DM lists these five tasks:

Select data

Clean data

Construct data

Integrate data

Format data

Data construction, sometimes called data augmentation, is a critical aspect of Data Preparation. Models work much better when the variables have been manipulated to make the patterns clear. For instance, raw dates are not useful in most models; the differences between dates are. Other commonplace examples include subtracting variables from each other to measure change. Did someone spend more on iTunes downloads in December than they did on average over the last year? Most data mining beginners vastly underestimate the importance of this aspect of Data Preparation.
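The two constructions mentioned above, a difference between dates and a change measured against an average, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the function names are hypothetical, and monthly spend is assumed to be a list of 12 values ordered January through December, with "average over the last year" taken as the mean of those 12 months.

```python
from datetime import date
from statistics import mean

def days_between(earlier, later):
    """Replace two raw dates with one usable number: elapsed days."""
    return (later - earlier).days

def december_change(monthly_spend):
    """December spend minus average monthly spend over the year.

    Assumes monthly_spend holds 12 values ordered January..December.
    """
    if len(monthly_spend) != 12:
        raise ValueError("expected 12 monthly values")
    return monthly_spend[-1] - mean(monthly_spend)

# Hypothetical customer, for illustration only.
signup = date(2019, 3, 15)
last_purchase = date(2019, 12, 20)
spend_2019 = [5, 0, 10, 5, 0, 5, 10, 0, 5, 5, 10, 29]

features = {
    "tenure_days_at_last_purchase": days_between(signup, last_purchase),  # 280
    "december_minus_average": december_change(spend_2019),  # 29 - 7 = 22
}
print(features)
```

A positive `december_minus_average` answers the iTunes question directly, which is the kind of pattern a model cannot easily find from twelve raw monthly columns.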

The Modeling Phase gets lots of attention in training and in books about data mining, because there is so much to learn, but it is neither the longest nor the most labor-intensive phase.

Each algorithm rewards many, many hours of study. However, in most projects Modeling is done in 1-2 weeks, even when the project is much longer. The reason is that if you have the schedule and resources to support a third week, you would be better off spending that extra week on data preparation or data understanding. During this phase the software is working at its hardest, and the human data miner has the most difficult work behind them. Expect to try dozens of models and model settings. Once you have narrowed them down to the several that perform best, the final winning model will be the one that best addresses the business problem.

The Tasks in the Modeling Phase are:

Select modeling technique

Generate test design

Build model

Assess model

Another challenge in the Modeling phase is weeding out the very weakest variables, weak either in data quality or in their relationship with the target. This is a delicate process, as you don’t want to discard anything that could be useful.
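A minimal Python sketch of such a screen, assuming numeric columns stored as lists (None for missing) and a numeric target. The thresholds and names are hypothetical, and Pearson correlation is only one crude measure of relationship with the target: it misses nonlinear effects, which is one reason the thresholds here are deliberately lenient.

```python
def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when it is undefined (constant or too short)."""
    n = len(xs)
    if n < 2:
        return 0.0
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / (vx * vy) ** 0.5

def screen_variables(columns, target, max_missing=0.5, min_abs_corr=0.05):
    """Drop only the very weakest variables: mostly missing, or nearly unrelated to the target."""
    keep, drop = [], {}
    for name, values in columns.items():
        missing_rate = sum(v is None for v in values) / len(values)
        if missing_rate > max_missing:
            drop[name] = f"{missing_rate:.0%} missing"  # weak in data quality
            continue
        pairs = [(v, t) for v, t in zip(values, target) if v is not None]
        r = pearson([p[0] for p in pairs], [p[1] for p in pairs])
        if abs(r) < min_abs_corr:
            drop[name] = f"|r| = {abs(r):.2f}"  # weak relationship with target
        else:
            keep.append(name)
    return keep, drop

# Hypothetical data, for illustration only.
target = [0, 1, 0, 1, 1, 0]
columns = {
    "recent_complaints": [0, 3, 1, 4, 2, 0],
    "constant_flag": [1, 1, 1, 1, 1, 1],
    "income": [None, None, None, None, 50, 60],
}
keep, drop = screen_variables(columns, target)
print(keep, drop)
```

Treat the `drop` list as candidates to discuss, not as a verdict.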

The Assess Model Task, part of the Modeling Phase, is a screen of sorts: a process by which you can eliminate modeling approaches that are simply not working. Accuracy, while necessary, is insufficient. You need to ensure that the chosen model will solve the business problem. Once models have graduated to the Evaluation Phase, accuracy is no longer the most important criterion, since every model that gets there is already sufficiently capable. Put another way, you need accuracy to be a semi-finalist, but the ultimate winner is not necessarily the model with the highest accuracy.
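The semi-finalist idea can be expressed as a two-stage selection: accuracy acts as a gate, and a business measure picks the winner. This is a minimal Python sketch; the `Candidate` fields, the accuracy floor, and all numbers are hypothetical, and `business_value` stands in for whatever measure the project actually defines.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    accuracy: float        # technical metric from the Assess Model task
    business_value: float  # hypothetical business measure, e.g. estimated net profit

def pick_winner(candidates, accuracy_floor):
    """Stage 1: keep models accurate enough to be semi-finalists.
    Stage 2: among those, choose the best business fit."""
    semi_finalists = [c for c in candidates if c.accuracy >= accuracy_floor]
    if not semi_finalists:
        return None
    return max(semi_finalists, key=lambda c: c.business_value)

# Hypothetical results, for illustration only.
candidates = [
    Candidate("boosted_trees", accuracy=0.91, business_value=38_000),
    Candidate("logistic_regression", accuracy=0.88, business_value=52_000),
    Candidate("neural_net", accuracy=0.90, business_value=41_000),
    Candidate("naive_rule", accuracy=0.70, business_value=60_000),
]
winner = pick_winner(candidates, accuracy_floor=0.85)
print(winner.name)  # logistic_regression
```

The most accurate model does not win here, and the low-accuracy rule never gets a chance to: accuracy is necessary but not sufficient.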

Data miners should be careful not to focus on predictive accuracy, model stability, or any other technical metric for predictive models at the expense of business insight and business fit. You may need to develop an evaluation approach that is unique to the problem at hand.

What are you trying to increase?

What are you trying to decrease?

How can you measure that?

Is there a particular target that has to be achieved to justify the project?
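As one example of answering these questions, here is a minimal Python sketch for a hypothetical retention campaign. It assumes the business wants to increase retained revenue and decrease contact costs, that contacting a customer costs $10, that each correctly flagged churner is worth $100 and (a strong simplification) is always retained, and that the project needs $5,000 to be justified. All counts and dollar figures are invented for illustration.

```python
def accuracy(tp, fp, fn, tn):
    """Share of all predictions that were correct."""
    return (tp + tn) / (tp + fp + fn + tn)

def campaign_profit(tp, fp, value_per_save, cost_per_contact):
    """Profit from contacting everyone the model flags (tp + fp).

    Simplifying assumption: every true positive contacted is retained.
    """
    return tp * value_per_save - (tp + fp) * cost_per_contact

# Hypothetical confusion counts on 1,000 customers (100 actual churners).
models = {
    "model_a": {"tp": 40, "fp": 10, "fn": 60, "tn": 890},
    "model_b": {"tp": 80, "fp": 120, "fn": 20, "tn": 780},
}
TARGET_PROFIT = 5_000  # hypothetical threshold to justify the project

results = {}
for name, m in models.items():
    profit = campaign_profit(m["tp"], m["fp"], value_per_save=100, cost_per_contact=10)
    results[name] = (accuracy(**m), profit, profit >= TARGET_PROFIT)
    print(name, results[name])
```

Model A is more accurate (0.93 vs. 0.86), but only Model B clears the target ($6,000 vs. $3,500), because it finds twice as many churners.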

An extended quote from Tom Khabaza’s 9 Laws of Data Mining:

Accuracy and stability are useful measures of how well a predictive model makes its predictions. Accuracy means how often the predictions are correct (where they are truly predictions) and stability means how much (or rather how little) the predictions would change if the data used to create the model were a different sample from the same population. Given the central role of the concept of prediction in data mining, the accuracy and stability of a predictive model might be expected to determine its value, but this is not the case.
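Khabaza’s definition of stability can be approximated by refitting the same model on resampled data and watching how much a prediction moves. The Python sketch below uses bootstrap resampling as a stand-in for “a different sample from the same population”; that substitution, the one-predictor least-squares model, and the simulated data are assumptions for illustration, not Khabaza’s procedure.

```python
import random
from statistics import pstdev

def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x (one predictor)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return my - b * mx, b

def prediction_spread(xs, ys, x_new, n_resamples=200, seed=0):
    """Std. dev. of the prediction at x_new across bootstrap refits (smaller = more stable)."""
    rng = random.Random(seed)
    n = len(xs)
    preds = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]  # sample rows with replacement
        bx = [xs[i] for i in idx]
        by = [ys[i] for i in idx]
        if len(set(bx)) < 2:
            continue  # a line cannot be fit through a single x value
        a, b = fit_line(bx, by)
        preds.append(a + b * x_new)
    return pstdev(preds)

# Simulated data, for illustration only.
rng = random.Random(42)
xs = [float(i) for i in range(30)]
ys = [2.0 + 0.5 * x + rng.gauss(0, 1) for x in xs]
print(round(prediction_spread(xs, ys, x_new=15.0), 3))
```

As the quote warns, a small spread says the model is stable, not that it is valuable.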

Khabaza also states the following.

The value of a predictive model arises in two ways:

The model’s predictions drive improved (more effective) action, and

The model delivers insight (new knowledge) which leads to improved strategy.

Once you reach the Evaluation Phase, you have built a model (or models) that has survived the technical criteria of the Assess Model Task. According to CRISP-DM, “Before proceeding to final deployment of the model, it is important to more thoroughly evaluate the model, and review the steps executed to construct the model, to be certain it properly achieves the business objectives.” Additional tasks amount to what might be called an after-action report: an opportunity to revisit organizational issues and shed light on how the team could improve the way it collaborates on future projects.

The Evaluation Phase Tasks are:

Evaluate Results

Review Process

Determine Next Steps

The oversimplified view of deployment is that you can easily score new data with an existing model as long as you have generated some code along the way. The reality of deployment is always a bit more complicated than this, and for very sophisticated solutions it can become a project in itself. For instance, keep in mind that data preparation must still be conducted during deployment.

If you had to merge two data sets to create the Training data, you will likely have to do so again at deployment. The only alternative is to prepare the data some other way; for instance, once the model exists, you may decide to modify the source data. Ultimately, however, you have to ensure that the data flowing through the model is in the same form as it was when you built the model. This almost always involves data preparation in the deployment stream.
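One common way to keep training and deployment consistent is to put the merge and feature construction in a single function that both paths call. This is a minimal Python sketch under hypothetical assumptions: the field names, the `prepare` function, and the `score` stand-in for a trained model are all invented for illustration.

```python
from datetime import date

def prepare(customers, transactions, as_of):
    """Merge two sources and derive model inputs.

    Run this same function on training data and on new data at scoring
    time, so the model always sees inputs in the form it was built on.
    """
    spend = {}
    for t in transactions:
        spend[t["customer_id"]] = spend.get(t["customer_id"], 0.0) + t["amount"]
    return [
        {
            "customer_id": c["customer_id"],
            "tenure_days": (as_of - c["signup_date"]).days,
            "total_spend": spend.get(c["customer_id"], 0.0),  # no transactions -> 0
        }
        for c in customers
    ]

def score(row):
    """Hypothetical stand-in for a trained model's scoring function."""
    return 0.001 * row["tenure_days"] + 0.01 * row["total_spend"]

# At deployment, new raw data passes through the same prepare() before scoring.
new_customers = [{"customer_id": 7, "signup_date": date(2020, 1, 1)}]
new_transactions = [
    {"customer_id": 7, "amount": 20.0},
    {"customer_id": 7, "amount": 5.0},
]
rows = prepare(new_customers, new_transactions, as_of=date(2020, 2, 1))
scored = [(r["customer_id"], score(r)) for r in rows]
print(scored)
```

If the training stream and the deployment stream each had their own copy of the merge logic, the two would eventually drift apart.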

The Deployment Phase Tasks are:

Plan Deployment

Plan Monitoring and Maintenance

Produce Final Report

Review Project
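Working at the task level, rather than from the six phases on the circular diagram, can be as simple as keeping the full task list in view. This is a minimal Python sketch using the phase and task names listed in this post; the `open_tasks` helper and the idea of tracking completed tasks in a set are hypothetical conveniences, not part of the CRISP-DM document.

```python
# Phases and tasks as listed above.
CRISP_DM = {
    "Business Understanding": ["Determine Business Objectives", "Assess Situation",
                               "Determine Data Mining Goals", "Produce Project Plan"],
    "Data Understanding": ["Collect initial data", "Describe data",
                           "Explore data", "Verify data quality"],
    "Data Preparation": ["Select data", "Clean data", "Construct data",
                         "Integrate data", "Format data"],
    "Modeling": ["Select modeling technique", "Generate test design",
                 "Build model", "Assess model"],
    "Evaluation": ["Evaluate Results", "Review Process", "Determine Next Steps"],
    "Deployment": ["Plan Deployment", "Plan Monitoring and Maintenance",
                   "Produce Final Report", "Review Project"],
}

def open_tasks(done):
    """Return (phase, task) pairs not yet completed, in CRISP-DM order."""
    return [
        (phase, task)
        for phase, tasks in CRISP_DM.items()
        for task in tasks
        if (phase, task) not in done
    ]

done = {("Business Understanding", "Determine Business Objectives")}
print(open_tasks(done)[:3])
```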

January 8, 2019

Ensembles: A Reading List of the original academic papers

I’ve spent the last few weeks reading all of the original academic papers I can find on ensembles. By “original” I mean the major papers that launched the various famous approaches and algorithms. I’m listing them here to help anyone who might conduct a similar search (including myself in the future). In some cases a paper is not the very first one on the technique, but each is a comprehensive paper written by the person (or people) credited with developing it.

Bagging: Bagging Predictors by Leo Breiman, 1994

Random Forests: Random Forests by Leo Breiman, 2001

AdaBoost: A Short Introduction to Boosting by Y. Freund and R. Schapire, 1999

Super Learner (stacking): Super Learner by van der Laan et al., 2007
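For readers starting with the first paper on the list, the core idea of bagging fits in a few lines: fit the same base learner to many bootstrap samples and average their predictions. This is a minimal Python sketch for regression with one predictor; the decision-stump base learner, the parameter values, and the simulated data are illustrative choices, and the sketch covers neither the random feature selection of Random Forests nor the sequential reweighting of AdaBoost.

```python
import random
from statistics import mean

def fit_stump(xs, ys):
    """Single split on x minimizing squared error; returns a predict function."""
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold separates equal x values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        lm, rm = mean(left), mean(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, (pairs[i - 1][0] + pairs[i][0]) / 2, lm, rm)
    if best is None:  # all x identical: predict the overall mean
        overall = mean(ys)
        return lambda x: overall
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean

def bag(xs, ys, n_models=25, seed=0):
    """Bootstrap aggregating: fit a stump per bootstrap sample, average predictions."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # sample rows with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: mean(m(x) for m in models)

# Simulated step-shaped data with noise, for illustration only.
rng = random.Random(1)
xs = [float(i) for i in range(20)]
ys = [(1.0 if x < 10 else 3.0) + rng.gauss(0, 0.3) for x in xs]
bagged = bag(xs, ys)
print(round(bagged(2.0), 2), round(bagged(17.0), 2))
```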

December 7, 2018

Comparing Linear Regression to Artificial Neural Networks

Comparing regression to Neural Nets from Machine Learning & AI Foundations: Linear Regression by Keith McCormick

I’m really glad I was given the opportunity to incorporate this material into my regression course for LinkedIn Learning. I truly believe regression is a topic we think we’ve all mastered but that often has not revealed all of its secrets. In this lecture, I explain that if you really want to understand neural networks, you have to compare them to regression.
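One concrete way to see the connection: a single neuron with an identity activation, trained by gradient descent on squared error, converges to the same intercept and slope as ordinary least squares. This Python sketch is an illustration of that point, not material from the course; the learning rate, epoch count, and simulated data are assumptions chosen so that the descent converges.

```python
import random

def ols(xs, ys):
    """Closed-form least squares for y = a + b*x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b

def train_linear_neuron(xs, ys, lr=0.1, epochs=5000):
    """One neuron, identity activation, mean squared error, full-batch gradient descent."""
    w, bias = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [(bias + w * x) - y for x, y in zip(xs, ys)]
        grad_bias = 2 * sum(errors) / n
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        bias -= lr * grad_bias
        w -= lr * grad_w
    return bias, w

# Simulated data, for illustration only.
rng = random.Random(1)
xs = [i / 10 for i in range(11)]
ys = [1.0 + 2.0 * x + rng.gauss(0, 0.1) for x in xs]

print(ols(xs, ys))
print(train_linear_neuron(xs, ys))
```

The two printed pairs agree to many decimal places. What takes a neural network beyond regression is adding hidden layers with nonlinear activations; without them, it is regression fit by a slower route.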

September 24, 2018

The Predictive Analytics Team

I’ve been speaking at national TDWI conferences for more than three years. As part of that role, I’ve been interviewed a few times and have also contributed to their blogs and publications. Some of this material is public and some is for TDWI members, but I would like to summarize this work and let you know where to find it. A recurring theme has been working in teams. Taken together, the material is considerable and addresses the topic well.

When are you ready for a full-time Data Scientist?

The first is an interview I did exploring when an organization is ready to hire a full-time Data Scientist. I think some organizations do this too quickly. They do so because they’re not sure how to start, so they figure the first step is to bring in an expert and go from there. The problem with this approach is that organizations take months to find the “right” hire and then lose that person 12 months later. Turnover is a real problem, and folks simply aren’t thinking more than one or two chess moves ahead. You have to have a plan; you can’t expect a new hire to show up with one.

I state it this way in the interview:

“Companies seem to think that if they can manage to attract that person with the most experience, the longest resume, the most letters after their name, a Ph.D.-type person — if they just make that hire, everything else will fall into place.

But like most things in business transformation, it’s just not that easy.”

The interview is quite long and covers a lot of ground. It is linked to a webinar as well: “Your First Hire.” In the webinar I cover the composition of the team in considerable detail, focusing on the different team roles and how many of them should be filled with existing internal talent. I argue that teams created out of thin air, composed entirely of new hires, are a poor choice. I explain the roles one by one.

Finding and Hiring Data Scientists

In another interview, in the Business Intelligence Journal, I tackle the hiring itself. In that interview, entitled “High Demand Drives Up Interest in Data Scientists—and Salaries,” we talk about why the market is so hot. The interview took place a couple of years before this writing, but the market hasn’t changed much. Even the numbers haven’t changed much, as the market has stabilized a bit.

We really get into the details. For instance, I’m asked about salaries:

BI Journal: Speaking of salaries, what should companies anticipate spending for a data scientist? You just mentioned $150,000 as a round number for an experienced person.

Keith: You know, I look into the issue of data scientist salaries about once a year. I’ve read two salary surveys [recently], both by Burtch Works, who did a good job.

Burtch Works continues to be an amazing resource, and their latest survey can be found here. Make sure that you also seek out the webinar recordings they put out about once a year.

The interviewer and I also get into the issue of Data Science graduate degrees and certificate programs, and my discussion of that is still relevant. These programs are new and are attracting a lot of graduates, but none of them has been around long enough to show what impact it will have on salaries, retention, or performance. Most of us who have been at this for a decade or two have degrees in statistics, computer science, or engineering.

Data Preparation is collaborative too

In another blog post, I cover the challenges of collaboration during the data preparation phase. The challenge here is figuring out who should do what, and that is not as easy to sort out as you might think.

Many companies figure that they can just perform the data prep in advance for everyone’s benefit, but that ultimately doesn’t work out, because the most interesting data is data being accessed or combined in innovative ways that differ from routine reporting requirements.

For example, visits to the company website might not routinely be combined with inbound calls to a customer service center, because the two might be managed by different departments. Combining them might seem like a natural job for IT, but it must be a collaborative effort to be productive.
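A minimal Python sketch of what combining those two sources involves, assuming hypothetical exports: a web-analytics log keyed by `customer_id` and a call-center log keyed by `cust_no`. Even this toy version forces decisions that need input from both departments: which keys identify the same customer, what grain to aggregate to, and whether customers seen in only one source belong in the result.

```python
from collections import Counter

# Hypothetical web analytics export, keyed by customer_id.
web_visits = [
    {"customer_id": "C1", "page": "/pricing"},
    {"customer_id": "C1", "page": "/support"},
    {"customer_id": "C2", "page": "/home"},
]
# Hypothetical call-center log; note the different key name.
inbound_calls = [
    {"cust_no": "C1", "reason": "billing"},
    {"cust_no": "C3", "reason": "cancel"},
]

def combine(visits, calls):
    """Aggregate each event log to one row per customer, then outer-join them."""
    visit_counts = Counter(v["customer_id"] for v in visits)
    call_counts = Counter(c["cust_no"] for c in calls)
    customers = sorted(set(visit_counts) | set(call_counts))  # seen in either source
    return {
        cid: {"web_visits": visit_counts[cid], "inbound_calls": call_counts[cid]}
        for cid in customers
    }

for cid, row in combine(web_visits, inbound_calls).items():
    print(cid, row)
```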