Alan Winfield's Blog, page 3

August 10, 2020

Back to robot coding part 1: hello world

One of the many things I promised myself when I retired nearly two years ago was to get back to some coding. Why? Two reasons: one is that writing and debugging code is hugely satisfying - for those like me not smart enough to do pure maths or theoretical physics - it's the closest you can get to working with pure mind stuff. But the second is that I want to prototype a number of ideas in cognitive robots which tie together work in artificial theory of mind and the ethical black box, with old ideas on robots telling each other stories and new ideas on how social robots might be able to explain themselves in response to questions like "Robot: what would you do if I forget to take my medicine?"

But before starting to work on the robot (a NAO) I first needed to learn Python, so completed most of Codecademy's excellent Learn Python 2 course over the last few weeks. I have to admit that I started learning Python with big misgivings over the language. I especially don't like the way Python plays fast and loose with variable types, allowing you to arbitrarily assign a thing (integer, float, string, etc.) to a variable and then assign a different kind of thing to the same variable; very different to the strongly typed languages I have used since student days: Algol 60, Algol 68, Pascal and C. However, there are things I do like: the use of indentation as part of the syntax for instance, and lots of nice built-in functions like range(), so x = range(0,10) puts a list ('array' in old money) of integers from 0 to 9 in x.
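Both points can be seen in a few lines. This is a minimal sketch with illustrative variable names of my own choosing. One caveat: in Python 2, range(0,10) returns a list directly, whereas in Python 3 it returns a lazy range object, so the sketch wraps it in list() to behave the same way in both.

```python
# Dynamic typing: the same name can be rebound to values of different types.
x = 42                           # x refers to an integer
first_type = type(x).__name__
x = "forty-two"                  # now x refers to a string; no declaration needed
second_type = type(x).__name__

# range(0, 10) covers the integers 0 to 9. Python 2 returns a list directly;
# list() makes the result a list in Python 3 as well.
digits = list(range(0, 10))

print("x was an %s, then a %s" % (first_type, second_type))
print(digits)
```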

So, having got my head around Python I finally made a start with the robot on Thursday last week. I didn't get far and it was *very* frustrating.

Act 1: setting up on my Mac

Attempting to set things up on my elderly Mac air was a bad mistake which sent me spiralling down a rabbit hole of problems. The first thing you have to do is download and unzip the NAO API, called naoqi, from Aldebaran. The same web page then suggests you simply try to import naoqi from within Python, and if there are no errors all's well.

As soon as I got the export path commands right, import naoqi resulted in the following error:

...Reason: unsafe use of relative rpath libboost_python.dylib in /Users/alansair/Desktop/naoqi/pynaoqi-python2.7-2.1.4.13-mac64/_qi.so with restricted binary

According to Stack Overflow this problem is caused by Mac OS X System Integrity Protection (SIP).

Then (somewhat nervously) I tried turning SIP off, as instructed here.

But import naoqi now gives a different error. Perhaps it's because my Python is in the wrong place; the Aldebaran page says it must be at /usr/local/bin/python (the default on the Mac is /usr/bin). OK, so I reinstall Python 2.7 from Python.org so that it is in /usr/local/bin/python. But now I get another error message:

    >>> import naoqi
    Fatal Python error: PyThreadState_Get: no current thread
    Abort trap: 6

A quick search and I read: "this error shows up when a module tries to use a python library that is different than the one the interpreter uses, that is, when you mix two different pythons. I would run otool -L <dyld> on each of the dynamic libraries in the list of Binary Images, and see which ones is linked to the system Python."
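Before reaching for otool, a quick first check is to ask the running interpreter where it lives and which version it is. The sketch below uses only the standard sys module; the helper name describe_interpreter is my own, for illustration. It does not inspect the naoqi libraries themselves, so it can only show which Python is in use, not which one a library was linked against.

```python
import sys


def describe_interpreter():
    """Return basic facts about the Python that is running this code."""
    return {
        "executable": sys.executable,  # path of the interpreter binary
        "version": "%d.%d.%d" % tuple(sys.version_info[:3]),
        "prefix": sys.prefix,          # root of this Python installation
    }


info = describe_interpreter()
for key in ("executable", "version", "prefix"):
    print("%-10s %s" % (key, info[key]))
```

If the executable path is not the one the SDK expects (here, /usr/local/bin/python), that is a strong hint that two Pythons are being mixed.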

At which point I admitted defeat.

Act 2: setting up on my Linux machine

Once I had established that the Python on my Linux machine was also the required version 2.7, I then downloaded and unzipped the NAO API, this time for Linux.

This time I was able to import naoqi with no errors, and within just a few minutes ran my first NAO program: hello world.

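    from naoqi import ALProxy
    # Create a proxy to the robot's text-to-speech module at this IP address and port
    tts = ALProxy("ALTextToSpeech", "164.168.0.17", 9559)
    # Ask the robot to speak
    tts.say("Hello, world!")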

whereupon my NAO robot spoke the words "Hello world". Success!
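Given the trouble in Act 1, a slightly more defensive version of hello world might be useful. This is a minimal sketch, not anything from the Aldebaran documentation: the function name say_hello and its defaults are my own, and it uses only the two naoqi calls shown above (ALProxy and say). It catches an ordinary ImportError only; it would not catch the fatal abort I saw on the Mac.

```python
def say_hello(robot_ip, port=9559, text="Hello, world!"):
    """Try to make the robot speak; return True on success, False otherwise."""
    try:
        # Imported inside the function so that a missing naoqi install is
        # reported here instead of stopping the whole script at import time.
        from naoqi import ALProxy
    except ImportError as err:
        print("Could not import naoqi: %s" % err)
        return False
    # Same two calls as the original hello world program.
    tts = ALProxy("ALTextToSpeech", robot_ip, port)
    tts.say(text)
    return True
```

Calling say_hello with the robot's IP address should then either speak the greeting or print why naoqi could not be imported.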

Published on August 10, 2020 05:49

June 5, 2020

Robot Accident Investigation

Yesterday I gave a talk at the ICRA 2020 workshop Against Robot Dystopias. The workshop should have been in Paris but - like most academic meetings during the lockdown - was held online. In the Zoom chat window toward the end of the workshop many of us were wistfully imagining continued discussions in a Parisian bar over a few glasses of wine. Next year I hope. The workshop was excellent and all of the talks should be online soon.

My talk was an extended version of last year's talk for AI@Oxford, What could possibly go wrong. With results from our new paper Robot Accident Investigation, which you can download here, the talk outlines a fictional investigation of a fictional robot accident. We had hoped to stage the mock accident, in the lab, with human volunteers and report a real investigation (of a mock accident) but the lockdown put paid to that too. So we have had to use our imagination and construct - I hope plausibly - the process and findings of the accident investigation.

Here is the abstract of our paper.

Robot accidents are inevitable. Although rare, they have been happening since assembly-line robots were first introduced in the 1960s. But a new generation of social robots are now becoming commonplace. Often with sophisticated embedded artificial intelligence (AI) social robots might be deployed as care robots to assist elderly or disabled people to live independently. Smart robot toys offer a compelling interactive play experience for children and increasingly capable autonomous vehicles (AVs) the promise of hands-free personal transport and fully autonomous taxis. Unlike industrial robots which are deployed in safety cages, social robots are designed to operate in human environments and interact closely with humans; the likelihood of robot accidents is therefore much greater for social robots than industrial robots. This paper sets out a draft framework for social robot accident investigation; a framework which proposes both the technology and processes that would allow social robot accidents to be investigated with no less rigour than we expect of air or rail accident investigations. The paper also places accident investigation within the practice of responsible robotics, and makes the case that social robotics without accident investigation would be no less irresponsible than aviation without air accident investigation.

And the slides from yesterday's talk:

Special thanks to project colleagues and co-authors: Prof Marina Jirotka, Prof Carl Macrae, Dr Helena Webb, Dr Ulrik Lyngs and Katie Winkle.

Published on June 05, 2020 06:02

April 20, 2020

Autonomous Robot Evolution: an update

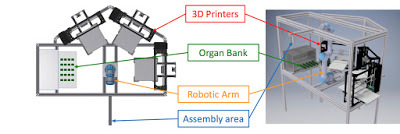

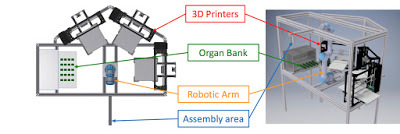

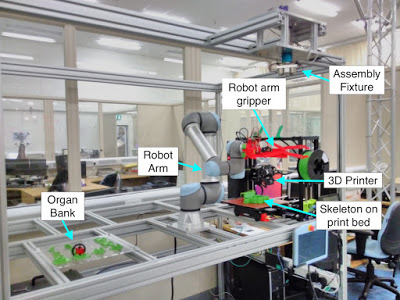

It's been over a year since my last progress report from the Autonomous Robot Evolution (ARE) project, so an update on the ARE Robot Fabricator (RoboFab) is long overdue. There have been several significant advances. First is integration of each of the elements of RoboFab. Second is the design and implementation of an assembly fixture, and third significantly improved wiring. Here is a CAD drawing of the integrated RoboFab.

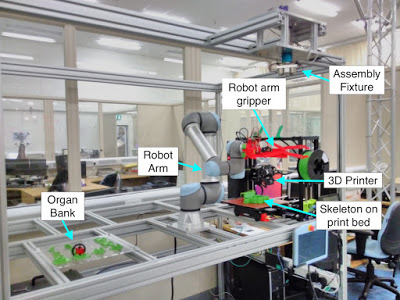

The ARE RoboFab has four major subsystems: up to three 3D printer(s), an organ bank, an assembly fixture and a centrally positioned robot arm (multi-axis manipulator). The purpose of each of these subsystems is outlined as follows:

- The 3D printers are used to print the evolved robot’s skeleton, which might be a single part, or several. With more than one 3D printer we can speed up the process by 3D printing skeletons for several different evolved robots in parallel, or – for robots with multi-part skeletons – each part can be printed in parallel.
- The organ bank contains a set of pre-fabricated organs, organised so that the robot arm can pick organs ready for placing within the part-built robot. For more on the organs see previous blog post(s).
- The assembly fixture is designed to hold (and if necessary rotate) the robot’s core skeleton while organs are placed and wired up.
- The robot arm is the engine of RoboFab. Fitted with a special gripper, the robot arm is responsible for assembling the complete robot.

And here is the Bristol RoboFab (there is a second identical RoboFab in York):

Note that the assembly fixture is mounted upside down at the top front of the RoboFab. This has the advantage that there is a reasonable volume of clear space for assembly of the robot under the fixture, which is reachable by the robot arm.

The fabrication and assembly sequence has six stages:

1. RoboFab receives the required coordinates of the organs and one or more mesh file(s) of the shape of the skeleton.
2. The skeleton is 3D printed.
3. The robot arm fetches the core ‘brain’ organ from the organ bank and clips it into the skeleton on the print bed. This is a strong locking clip.
4. The robot arm then lifts the core organ and skeleton assemblage off the print bed, and attaches it to the assembly fixture. The core organ has metal disks on its underside which are used to secure the assemblage to the fixture with electromagnets.
5. The robot arm then picks and places the required organs from the organ bank, clipping them into place on the skeleton.
6. Finally the robot arm wires each organ to the core organ, to complete the robot.
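To make the ordering concrete, the six stages can be sketched as a function that turns a robot description into an ordered list of steps. This is purely illustrative and is not the RoboFab software: the Blueprint class, the assembly_plan function, the mesh file name and the organ coordinates are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Blueprint:
    """Hypothetical input to the fabricator: skeleton meshes plus organ positions."""
    mesh_files: List[str]
    # Each organ is (name, (x, y, z)); the core 'brain' organ is implicit.
    organs: List[Tuple[str, Tuple[float, float, float]]]


def assembly_plan(bp):
    """Return the six-stage sequence as an ordered list of step descriptions."""
    plan = [
        "1. receive %d organ coordinate(s) and %d mesh file(s)"
        % (len(bp.organs), len(bp.mesh_files)),
        "2. 3D print the skeleton",
        "3. clip the core organ into the skeleton on the print bed",
        "4. move core organ and skeleton to the assembly fixture",
    ]
    # Stage 5: every organ is placed before any wiring starts.
    for name, position in bp.organs:
        plan.append("5. pick and place %s at %s" % (name, position))
    # Stage 6: each organ is then wired to the core organ in turn.
    for name, _ in bp.organs:
        plan.append("6. wire %s to the core organ" % name)
    return plan


# Made-up example: a robot with a core organ and two wheel organs.
robot = Blueprint(
    mesh_files=["skeleton.stl"],
    organs=[("left wheel", (-40.0, 0.0, 10.0)), ("right wheel", (40.0, 0.0, 10.0))],
)
plan = assembly_plan(robot)
for step in plan:
    print(step)
```

Keeping stage 5 and stage 6 as separate loops mirrors the sequence described above, in which all organs are clipped into place before the arm starts wiring.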

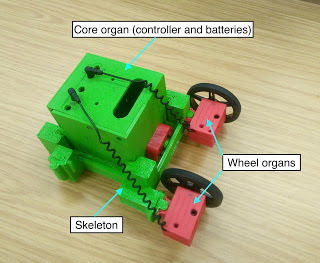

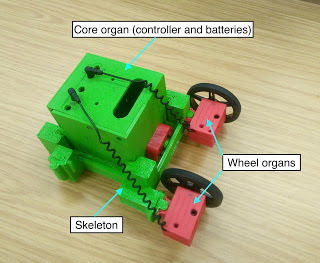

Here is a complete robot, fabricated, assembled and wired by the RoboFab. This evolved robot has a total of three organs: the core ‘brain’ organ, and two wheel organs.

Note especially the wires connecting the wheel organs to the core organ. My colleague Matt has come up with an ingenious design in which a coiled cable is contained within the organ. After the organs have been attached to the skeleton (stage 5), the robot arm in turn grabs each organ's jack plug and pulls the cable to plug into the core organ (stage 6). This design minimises the previously encountered problem of the robot gripper getting tangled in dangling loose wires during stage 6.

Credits

The work described here has been led by my brilliant colleague Matt Hale, very ably supported by York colleagues Edgar Buchanan and Mike Angus. The only credit I can take is that I came up with some of the ideas and co-wrote the bid that secured the EPSRC funding for the project.

References

For a much more detailed account of the RoboFab see this paper, which was presented at ALife 2019 last summer in Newcastle: The ARE Robot Fabricator: How to (Re)produce Robots that Can Evolve in the Real World.

Related blog posts

First automated robot assembly (February 2019)

Autonomous Robot Evolution: from cradle to grave (July 2018)

Autonomous Robot Evolution: first challenges (Oct 2018)

Published on April 20, 2020 02:35

September 17, 2019

What's the worst that could happen? Why we need robot/AI accident investigation.

Robots. What could possibly go wrong?

Imagine that your elderly mother, or grandmother, has an assisted living robot to help her live independently at home. The robot is capable of fetching her drinks, reminding her to take her medicine and keeping in touch with family. Then one afternoon you get a call from a neighbour who has called round and sees your grandmother collapsed on the floor. When the paramedics arrive they find the robot wandering around apparently aimlessly. One of its functions is to call for help if your grandmother stops moving, but it seems that the robot failed to do this.

Fortunately your grandmother recovers but the doctors find bruising on her legs, consistent with the robot running into them. Not surprisingly you want to know what happened: did the robot cause the accident? Or maybe it didn't but made matters worse, and why did it fail to raise the alarm?

Although this is a fictional scenario it could happen today. If it did you would be totally reliant on the goodwill of the robot manufacturer to discover what went wrong. Even then you might not get the answers you seek; it's entirely possible the robot and the company are just not equipped with the tools to facilitate an investigation.

Right now there are no established processes for robot accident investigation.

Of course accidents happen, and that is just as true for robots as any other machinery [1].

Finding statistics is tough. But this web page shows serious accidents with industrial robots in the US since the mid 1980s. Driverless car fatalities of course make the headlines. There have been five (that we know about) since 2016. But we have next to no data on accidents in human robot interaction (HRI); that is for robots designed to interact directly with humans. Here is one - a security robot - that happened to be reported.

But a Responsible Roboticist must be interested in *all* accidents, whether serious or not. We should also be very interested in near misses; these are taken *very* seriously in aviation [2], and there is good evidence that reporting near misses improves safety.

So I am very excited to introduce our 5-year EPSRC funded project RoboTIPS – responsible robots for the digital economy. Led by Professor Marina Jirotka at the University of Oxford, we believe RoboTIPS to be the first project with the aim of systematically studying the question of how to investigate accidents with social robots.

So what are we doing in RoboTIPS..?

First we will look at the technology needed to support accident investigation.

In a paper published 2 years ago Marina and I argued the case for an Ethical Black Box (EBB) [3]. Our proposition is very simple: that all robots (and some AIs) should be equipped by law with a standard device which continuously records a time stamped log of the internal state of the system, key decisions, and sampled input or sensor data (in effect the robot equivalent of an aircraft flight data recorder). Without such a device finding out what the robot was doing, and why, in the moments leading up to an accident is more or less impossible. In RoboTIPS we will be developing and testing a model EBB for social robots.
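To give a flavour of what such a recorder might look like in software, here is a minimal sketch. It is not the RoboTIPS model EBB: the class name, the field names, the fixed-size buffer and the JSON output are all assumptions of mine, chosen only to illustrate a time stamped log of internal state, decisions and sampled sensor data.

```python
import json
import time
from collections import deque


class EthicalBlackBox(object):
    """Illustrative recorder that keeps only the most recent `capacity` entries."""

    def __init__(self, capacity=1000, clock=time.time):
        # A bounded deque silently drops the oldest entry when full (an assumption).
        self._records = deque(maxlen=capacity)
        self._clock = clock

    def record(self, internal_state, decision, sensor_sample):
        """Append one time stamped entry and return it."""
        entry = {
            "timestamp": self._clock(),
            "internal_state": internal_state,
            "decision": decision,
            "sensor_sample": sensor_sample,
        }
        self._records.append(entry)
        return entry

    def dump(self):
        """Serialise the retained entries, oldest first, as a JSON string."""
        return json.dumps(list(self._records), sort_keys=True)


# Made-up usage: five control steps logged into a recorder that holds three.
ebb = EthicalBlackBox(capacity=3)
for step in range(5):
    ebb.record(
        internal_state={"battery": 0.9, "mode": "patrol"},
        decision="move_forward",
        sensor_sample={"bumper": False, "step": step},
    )
log = json.loads(ebb.dump())
print(len(log))
```

A real device would also need tamper resistance and secure storage, which this sketch does not attempt.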

But accident investigation is a human process of discovery and reconstruction. So in this project we will be designing and running three staged (mock) accidents, each covering a different application domain: assisted living robots, educational (toy) robots, and driverless cars.

In these scenarios we will be using real robots and will be seeking human volunteers to act in three roles, as the: subject(s) of the accident, witnesses to the accident, and as members of the accident investigation.

Thus we aim to develop and demonstrate both technologies and processes (and ultimately policy recommendations) for robot accident investigation. And the whole project will be conducted within the framework of Responsible Research and Innovation; it will, in effect, be a case study in Responsible Robotics.

The text above is the script for a very short (10 minute) TED-style talk I gave at the conference AI@Oxford today in the Impact of Trust in AI session, and here below are the slides.

References:

[1] Dhillon BS (1991) Robot Accidents. In: Robot Reliability and Safety. Springer, New York, NY

[2] Macrae C (2014) Close Calls: Managing Risk and Resilience in Airline Flight Safety. Palgrave Macmillan.

[3] Winfield AFT and Jirotka M (2017) The Case for an Ethical Black Box. In: Gao Y, Fallah S, Jin Y, Lekakou C (eds) Towards Autonomous Robotic Systems. TAROS 2017. Lecture Notes in Computer Science, vol 10454. Springer, Cham.

Published on September 17, 2019 14:05

July 31, 2019

On the simulation (and energy costs) of human intelligence, the singularity and simulationism

For many researchers the Holy Grail of robotics and AI is the creation of artificial persons: artefacts with equivalent general competencies as humans. Such artefacts would literally be simulations of humans. Some researchers are motivated by the utility of AGI; others have an almost religious faith in the transhumanist promise of the technological singularity. Others, like myself, are driven only by scientific curiosity. Simulations of intelligence provide us with working models of (elements of) natural intelligence. As Richard Feynman famously said ‘What I cannot create, I do not understand’. Used in this way simulations are like microscopes for the study of intelligence; they are scientific instruments.

Like all scientific instruments simulation needs to be used with great care; simulations need to be calibrated, validated and – most importantly – their limitations understood. Without that understanding any claims to new insights into the nature of intelligence – or for the quality and fidelity of an artificial intelligence as a model of some aspect of natural intelligence – should be regarded with suspicion.

In this essay I have critically reflected on some of the predictions for human-equivalent AI (AGI); the paths to AGI (and especially via artificial evolution); the technological singularity, and the idea that we are ourselves simulations in a simulated universe (simulationism). The quest for human-equivalent AI clearly faces many challenges. One (perhaps stating the obvious) is that it is a very hard problem. Another, as I have argued in this essay, is that the energy costs are likely to limit progress.

However, I believe that the task is made even more difficult for two further reasons. The first is – as hinted above – that we have failed to recognize simulations of intelligence (which all AIs and robots are) as scientific instruments, which need to be designed, operated and results interpreted, with no less care than we would a particle collider or the Hubble telescope.

The second, and more general, observation is that we lack a general (mathematical) theory of intelligence. This lack of theory means that a significant proportion of AI research is not hypothesis driven, but incrementalist and ad-hoc. Of course such an approach can lead, and is leading, to interesting and (commercially) valuable advances in narrow AI. But without strong theoretical foundations, the grand challenge of human-equivalent AI seems rather like trying to build particle accelerators to understand the nature of matter, without the Standard Model of particle physics.

The text above is the concluding discussion of my essay On the simulation (and energy costs) of human intelligence, the singularity and simulationism, which appears in an edited collection of essays in a book called From Astrophysics to Unconventional Computation. Published in April 2019, the book marks the 60th birthday of astrophysicist, computer scientist and all round genius, Susan Stepney.

Note: regular visitors to the blog will recognise themes covered in several previous blog posts, brought together in, I hope, a coherent and interesting way.

Published on July 31, 2019 06:54

July 29, 2019

Ethical Standards in Robotics and AI: what they are and why they matter

Here are the slides for my keynote, presented this morning at the International Conference on Robot Ethics and Standards (ICRES 2019). The talk is based on my paper Ethical Standards in Robotics and AI published in Nature Electronics a few months ago (here is a pre-print).

To see the speaker notes click on the options button on the Google Slides toolbar above.

Published on July 29, 2019 14:30

June 28, 2019

Energy and Exploitation: AI's dirty secrets

A couple of days ago I gave a short 15 minute talk at an excellent 5x15 event in Bristol. The talk I actually gave was different to the one I'd originally suggested. Two things prompted the switch: one was seeing the amazing line up of speakers on the programme - all covering more or less controversial topics - and the other was my increasing anger in recent months over the energy and human costs of AI. So it was that I wrote a completely new talk the day before this event.

But before I get to my talk I must mention the amazing other speakers: we heard Philippa Perry speaking on child-parent relationships, Hallie Rubenhold on the truth about Jack the Ripper's victims, Jenny Riley speaking very movingly about One25's support for Bristol's (often homeless) sex workers, and Amy Sinclair introducing her activism with Extinction Rebellion.

Here is the script for my talk (for the slides go to the end of this blog post).

Artificial Intelligence and Machine Learning are often presented as bright clean new technologies with the potential to solve many of humanity's most pressing problems.

We already enjoy the benefit of truly remarkable AI technology, like machine translation and smart maps. Driverless cars might help us get around before too long, and DeepMind's diagnostic AI can detect eye diseases from retinal scans as accurately as a doctor.

Before getting into the ethics of AI I need to give you a quick tutorial on machine learning. The most powerful and exciting AI today is based on Artificial Neural Networks. Here [slide 3] is a simplified diagram of a Deep Learning network for recognizing images. Each small circle is a *very* simplified mathematical model of a biological neuron, and the outputs of each layer of artificial neurons feed the inputs of the next layer. In order to be able to recognise images the network must first be trained with images that are already labelled - in this case my dog Lola.

But in order to reliably recognise Lola the network needs to be trained not with one picture of Lola but many. This set of images is called the training data set and without a good data set the network will not work at all or will be biased. (In reality there will need to be not 4 but hundreds of images of Lola).

So what does an AI ethicist do? Well, the short answer is worry. I worry about the ethical and societal impact of AI on individuals, society and the environment. Here are some keywords on ethics [slide 4], reflecting that we must work toward AI that respects Human Rights, diversity and dignity, is unbiased and sustainable, transparent, accountable and socially responsible.

But I do more than just worry. I also take practical steps like drafting ethical principles, and helping to write ethical standards for the British Standards Institution and the IEEE Standards Association. I lead P7001: a new standard on transparency of autonomous systems based on the simple ethical principle that it should always be possible to find out why an AI made a particular decision. I have given evidence in parliament several times, and recently took part in a study of AI and robotics in healthcare and what this means for the workforce of the NHS.

Now I want to share two serious new worries with you.

The first is about the energy cost of AI. In 2016 Go champion Lee Sedol was famously defeated by DeepMind's AlphaGo. It was a remarkable achievement for AI. But consider the energy cost. In a single two hour match Sedol burned around 170 kcals: roughly the amount of energy you would get from an egg sandwich. Or about the power of an LED night light - 1 Watt. In the same two hours the AlphaGo machine reportedly consumed 50,000 times more energy than Sedol. Equivalent to a 50 kW generator for industrial lighting. And that's not taking account of the energy used to train AlphaGo.
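The unit conversion behind this comparison can be sketched in a few lines of Python. This is only a rough check, assuming 1 kcal = 4184 J and energy spent evenly over the two hours; the function name average_power_watts is made up for illustration, and the 50 kW figure is simply the one quoted above.

```python
KCAL_TO_JOULES = 4184.0  # one kilocalorie expressed in joules


def average_power_watts(energy_kcal: float, duration_hours: float) -> float:
    """Average power in watts if energy_kcal is spent evenly over duration_hours."""
    seconds = duration_hours * 3600.0
    return energy_kcal * KCAL_TO_JOULES / seconds


# Figures taken from the talk: 170 kcal over a two hour match, and a 50 kW machine.
human_watts = average_power_watts(170, 2)
machine_watts = 50_000.0
ratio = machine_watts / human_watts

print(f"human: {human_watts:.1f} W, machine/human ratio: {ratio:.0f}")
```

On these assumptions 170 kcal over two hours averages just under 100 W for the whole body, so the wattage figures in the talk are best treated as rough illustrations; the conclusion that the machine drew far more power than the human player is unchanged.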

Now some people think we can make human equivalent AI by simulating the human brain. But the most complex animal brain so far simulated is that of C. elegans – the nematode worm. It has 302 neurons and about 5000 synapses - these are the connections between neurons. A couple of years ago I worked out that simulating a neural network for a simple robot with only a tenth the number of neurons of C. elegans costs 2000 times more energy than the whole worm.

In a new paper that came out just a few days ago we have for the first time estimates of the carbon cost of training large AI models for natural language processing such as machine translation [1]. The carbon cost of simple models is quite modest, but with tuning and experimentation the carbon cost leaps to 7 times the carbon footprint of an average human in one year (or 2 times if you're an American).

And the energy cost of optimising the biggest model is a staggering 5 times the carbon cost of a car over its whole lifetime, including manufacturing it in the first place. The dollar cost of that amount of energy is estimated at between US$1 million and US$3 million. (Something that only companies with very deep pockets can afford.)

These energy costs seem completely at odds with the urgent need to halve carbon dioxide emissions by 2030. At the very least AI companies need to be honest about the huge energy costs of machine learning.

Now I want to turn to the human cost of AI. It is often said that one of the biggest fears around AI is the loss of jobs. In fact the opposite is happening. Many new jobs are being created, but the tragedy is that they are not great jobs, to say the least. Let me introduce you to two of these new kinds of jobs.

The first is AI tagging. This is manually labelling objects in images to, for instance, generate training data sets for driverless car AIs. Better (and safer) AI needs huge training data sets and a whole new outsourced industry has sprung up all over the world to meet this need. Here [slide 9] is an AI tagging factory in China.

Conversational AI or chat bots also need human help. Amazon for instance employs thousands of both full-time employees and contract workers to listen to and annotate speech. The tagged speech is then fed back to Alexa to improve its comprehension. And last month the Guardian reported that Google employs around 100,000 temps, vendors and contractors: literally an army of linguists working in "white collar sweatshops" to create the handcrafted data sets required for Google Translate to learn dozens of languages. Not surprisingly there is a huge disparity between the wages and working conditions of these workers and Google's full-time employees.

AI tagging jobs are dull, repetitive and in the case of the linguists highly skilled. But by far the worst kind of new white collar job in the AI industry is content moderation.

These tens of thousands of people, employed by third-party contractors, are required to watch and vet offensive content: hate speech, violent pornography, cruelty and sometimes murder of both animals and humans for Facebook, YouTube and other media platforms [2]. These jobs are not just dull and repetitive; they are positively dangerous. Harrowing reports tell of PTSD-like trauma symptoms, panic attacks and burnout after one year, alongside micromanagement, poor working conditions and ineffective counselling. And very poor pay - typically $28,800 a year. Compare this with average annual salaries at Facebook of ~$240,000.

The big revelation to me over the past few months is the extent to which AI has a human supply chain, and I am an AI insider! The genius designers of this amazing tech rely on both huge amounts of energy and a hidden army of what Mary Gray and Siddharth Suri call Ghost Workers.

I would like to leave you with a question: how can we, as ethical consumers, justify continuing to make use of unsustainable and unethical AI technologies?

References:

[1] Emma Strubell, Ananya Ganesh, Andrew McCallum (2019) Energy and Policy Considerations for Deep Learning in NLP, arXiv:1906.02243

[2] Sarah Roberts (2016) Digital Refuse: Canadian Garbage, Commercial Content Moderation and the Global Circulation of Social Media’s Waste, Media Studies Publications. 14.

Published on June 28, 2019 04:03

May 29, 2019

My top three policy and governance issues in AI/ML

1. For me the biggest governance issue facing AI/ML ethics is the gap between principles and practice. The hard problem the industry faces is turning good intentions into demonstrably good behaviour. In the last 2.5 years there has been a gold rush of new ethical principles in AI. Since Jan 2017 at least 22 sets of ethical principles have been published, including principles from Google, IBM, Microsoft and Intel. Yet any evidence that these principles are making a difference within those companies is hard to find – leading to a justifiable accusation of ethics-washing – and if anything the reputations of some leading AI companies are looking increasingly tarnished.

2. Like others I am deeply concerned by the acute gender imbalance in AI (estimates of the proportion of women in AI vary between ~12% and ~22%). This is not just unfair, I believe it to be positively dangerous, since it is resulting in AI products and services that reflect the values and ambitions of (young, predominantly white) men. This makes it a governance issue. I cannot help wondering if the deeply troubling rise of surveillance capitalism is not, at least in part, a consequence of male values.

3. A major policy concern is the apparently very poor quality of many of the jobs created by the large AI/ML companies. Of course the AI/ML engineers are paid exceptionally well, but it seems that there is a very large number of very poorly paid workers who, in effect, compensate for the fact that AI is not (yet) capable of identifying offensive content, nor is it able to learn without training data generated from large quantities of manually tagged objects in images, nor can conversational AI manage all queries that might be presented to it. This hidden army of piece workers, employed in developing countries by third-party subcontractors and paid very poorly, is undertaking work that is at best extremely tedious (you might say robotic) and at worst psychologically very harmful; this has been called AI’s dirty little secret and should not – in my view – go unaddressed.

Published on May 29, 2019 16:13

April 18, 2019

An Updated Round Up of Ethical Principles of Robotics and AI

This blogpost is an updated round up of the various sets of ethical principles of robotics and AI that have been proposed to date, ordered by date of first publication. I previously listed principles published before December 2017 here; this blogpost appends those principles drafted since January 2018. The principles are listed here (in full or abridged) with notes and references but without critique.

Scroll down to the next horizontal line for the updates.

If there are any (prominent) ones I've missed please let me know.

Asimov's three laws of Robotics (1950)

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.
3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

I have included these to explicitly acknowledge, firstly, that Asimov undoubtedly established the principle that robots (and by extension AIs) should be governed by principles, and secondly that many subsequent principles have been drafted as a direct response. The three laws first appeared in Asimov's short story Runaround [1]. This wikipedia article provides a very good account of the three laws and their many (fictional) extensions.

Murphy and Wood's three laws of Responsible Robotics (2009)

1. A human may not deploy a robot without the human-robot work system meeting the highest legal and professional standards of safety and ethics.
2. A robot must respond to humans as appropriate for their roles.
3. A robot must be endowed with sufficient situated autonomy to protect its own existence as long as such protection provides smooth transfer of control which does not conflict with the First and Second Laws.

These were proposed in Robin Murphy and David Wood's paper Beyond Asimov: The Three Laws of Responsible Robotics [2].

EPSRC Principles of Robotics (2010)

1. Robots are multi-use tools. Robots should not be designed solely or primarily to kill or harm humans, except in the interests of national security.
2. Humans, not Robots, are responsible agents. Robots should be designed and operated as far as practicable to comply with existing laws, fundamental rights and freedoms, including privacy.
3. Robots are products. They should be designed using processes which assure their safety and security.
4. Robots are manufactured artefacts. They should not be designed in a deceptive way to exploit vulnerable users; instead their machine nature should be transparent.
5. The person with legal responsibility for a robot should be attributed.

These principles were drafted in 2010 and published online in 2011, but not formally published until 2017 [3] as part of a two-part special issue of Connection Science on the principles, edited by Tony Prescott & Michael Szollosy [4]. An accessible introduction to the EPSRC principles was published in New Scientist in 2011.

Future of Life Institute Asilomar principles for beneficial AI (Jan 2017)

I will not list all 23 principles but extract just a few to compare and contrast with the others listed here:

The ACM US Public Policy Council Principles for Algorithmic Transparency and Accountability (Jan 2017)

1. Awareness: Owners, designers, builders, users, and other stakeholders of analytic systems should be aware of the possible biases involved in their design, implementation, and use and the potential harm that biases can cause to individuals and society.
2. Access and redress: Regulators should encourage the adoption of mechanisms that enable questioning and redress for individuals and groups that are adversely affected by algorithmically informed decisions.
3. Accountability: Institutions should be held responsible for decisions made by the algorithms that they use, even if it is not feasible to explain in detail how the algorithms produce their results.
4. Explanation: Systems and institutions that use algorithmic decision-making are encouraged to produce explanations regarding both the procedures followed by the algorithm and the specific decisions that are made. This is particularly important in public policy contexts.
5. Data Provenance: A description of the way in which the training data was collected should be maintained by the builders of the algorithms, accompanied by an exploration of the potential biases induced by the human or algorithmic data-gathering process.
6. Auditability: Models, algorithms, data, and decisions should be recorded so that they can be audited in cases where harm is suspected.
7. Validation and Testing: Institutions should use rigorous methods to validate their models and document those methods and results.

See the ACM announcement of these principles here. The principles form part of the ACM's updated code of ethics.

Japanese Society for Artificial Intelligence (JSAI) Ethical Guidelines (Feb 2017)

1. Contribution to humanity: Members of the JSAI will contribute to the peace, safety, welfare, and public interest of humanity.
2. Abidance of laws and regulations: Members of the JSAI must respect laws and regulations relating to research and development, intellectual property, as well as any other relevant contractual agreements. Members of the JSAI must not use AI with the intention of harming others, be it directly or indirectly.
3. Respect for the privacy of others: Members of the JSAI will respect the privacy of others with regards to their research and development of AI. Members of the JSAI have the duty to treat personal information appropriately and in accordance with relevant laws and regulations.
4. Fairness: Members of the JSAI will always be fair. Members of the JSAI will acknowledge that the use of AI may bring about additional inequality and discrimination in society which did not exist before, and will not be biased when developing AI.
5. Security: As specialists, members of the JSAI shall recognize the need for AI to be safe and acknowledge their responsibility in keeping AI under control.
6. Act with integrity: Members of the JSAI are to acknowledge the significant impact which AI can have on society.
7. Accountability and Social Responsibility: Members of the JSAI must verify the performance and resulting impact of AI technologies they have researched and developed.
8. Communication with society and self-development: Members of the JSAI must aim to improve and enhance society’s understanding of AI.
9. Abidance of ethics guidelines by AI: AI must abide by the policies described above in the same manner as the members of the JSAI in order to become a member or a quasi-member of society.

An explanation of the background and aims of these ethical guidelines can be found here, together with a link to the full principles (which are shown abridged above).

Draft principles of The Future Society's Science, Law and Society Initiative (Oct 2017)

1. AI should advance the well-being of humanity, its societies, and its natural environment.
2. AI should be transparent.
3. Manufacturers and operators of AI should be accountable.
4. AI’s effectiveness should be measurable in the real-world applications for which it is intended.
5. Operators of AI systems should have appropriate competencies.
6. The norms of delegation of decisions to AI systems should be codified through thoughtful, inclusive dialogue with civil society.

This article by Nicolas Economou explains the 6 principles with a full commentary on each one.

Montréal Declaration for Responsible AI draft principles (Nov 2017)

1. Well-being: The development of AI should ultimately promote the well-being of all sentient creatures.
2. Autonomy: The development of AI should promote the autonomy of all human beings and control, in a responsible way, the autonomy of computer systems.
3. Justice: The development of AI should promote justice and seek to eliminate all types of discrimination, notably those linked to gender, age, mental / physical abilities, sexual orientation, ethnic/social origins and religious beliefs.
4. Privacy: The development of AI should offer guarantees respecting personal privacy and allowing people who use it to access their personal data as well as the kinds of information that any algorithm might use.
5. Knowledge: The development of AI should promote critical thinking and protect us from propaganda and manipulation.
6. Democracy: The development of AI should promote informed participation in public life, cooperation and democratic debate.
7. Responsibility: The various players in the development of AI should assume their responsibility by working against the risks arising from their technological innovations.

The Montréal Declaration for Responsible AI proposes the 7 values and draft principles above (here in full with preamble, questions and definitions).

IEEE General Principles of Ethical Autonomous and Intelligent Systems (Dec 2017)

1. How can we ensure that A/IS do not infringe human rights?
2. Traditional metrics of prosperity do not take into account the full effect of A/IS technologies on human well-being.
3. How can we assure that designers, manufacturers, owners and operators of A/IS are responsible and accountable?
4. How can we ensure that A/IS are transparent?
5. How can we extend the benefits and minimize the risks of AI/AS technology being misused?

These 5 general principles appear in Ethically Aligned Design v2, a discussion document drafted and published by the IEEE Standards Association Global Initiative on Ethics of Autonomous and Intelligent Systems. The principles are expressed not as rules but instead as questions, or concerns, together with background and candidate recommendations.

A short article co-authored with IEEE general principles co-chair Mark Halverson, Why Principles Matter, explains the link between principles and standards, together with further commentary and references.

Note that these principles were revised and extended in March 2019 (see below).

UNI Global Union Top 10 Principles for Ethical AI (Dec 2017)

1. Demand That AI Systems Are Transparent
2. Equip AI Systems With an “Ethical Black Box”
3. Make AI Serve People and Planet
4. Adopt a Human-In-Command Approach
5. Ensure a Genderless, Unbiased AI
6. Share the Benefits of AI Systems
7. Secure a Just Transition and Ensuring Support for Fundamental Freedoms and Rights
8. Establish Global Governance Mechanisms
9. Ban the Attribution of Responsibility to Robots
10. Ban AI Arms Race

Drafted by UNI Global Union's Future World of Work, these 10 principles for Ethical AI (set out here with full commentary) “provide unions, shop stewards and workers with a set of concrete demands to the transparency, and application of AI”.

Updated principles...

Lords Select Committee 5 core principles to keep AI ethical (Apr 2018)

1. Artificial intelligence should be developed for the common good and benefit of humanity.
2. Artificial intelligence should operate on principles of intelligibility and fairness.
3. Artificial intelligence should not be used to diminish the data rights or privacy of individuals, families or communities.
4. All citizens have the right to be educated to enable them to flourish mentally, emotionally and economically alongside artificial intelligence.
5. The autonomous power to hurt, destroy or deceive human beings should never be vested in artificial intelligence.

These principles appear in the UK House of Lords Select Committee on Artificial Intelligence report AI in the UK: ready, willing and able? published in April 2018. The WEF published a summary and commentary here.

AI UX: 7 Principles of Designing Good AI Products (Apr 2018)

1. Differentiate AI content visually - let people know if an algorithm has generated a piece of content so they can decide for themselves whether to trust it or not.
2. Explain how machines think - helping people understand how machines work so they can use them better.
3. Set the right expectations - set the right expectations, especially in a world full of sensational, superficial news about new AI technologies.
4. Find and handle weird edge cases - spend more time testing and finding weird, funny, or even disturbing or unpleasant edge cases.
5. User testing for AI products (default methods won’t work here).
6. Provide an opportunity to give feedback.

These principles, focussed on the design of the User Interface (UI) and User Experience (UX), are from Budapest based company UX Studio.

Google AI Principles (Jun 2018)

1. Be socially beneficial.
2. Avoid creating or reinforcing unfair bias.
3. Be built and tested for safety.
4. Be accountable to people.
5. Incorporate privacy design principles.
6. Uphold high standards of scientific excellence.
7. Be made available for uses that accord with these principles.

These principles were launched with a blog post and commentary by Google CEO Sundar Pichai here.

Microsoft Responsible bots: 10 guidelines for developers of conversational AI (Nov 2018)

1. Articulate the purpose of your bot and take special care if your bot will support consequential use cases.
2. Be transparent about the fact that you use bots as part of your product or service.
3. Ensure a seamless hand-off to a human where the human-bot exchange leads to interactions that exceed the bot’s competence.
4. Design your bot so that it respects relevant cultural norms and guards against misuse.
5. Ensure your bot is reliable.
6. Ensure your bot treats people fairly.
7. Ensure your bot respects user privacy.
8. Ensure your bot handles data securely.
9. Ensure your bot is accessible.
10. Accept responsibility.

Microsoft's guidelines for the ethical design of 'bots' (chatbots or conversational AIs) are fully described here.

Summary – with links – of ethical AI principles from IBM, Google, Intel and Microsoft, Nov 2018 https://vitalflux.com/ethical-ai-principles-ibm-google-intel/

CEPEJ European Ethical Charter on the use of artificial intelligence (AI) in judicial systems and their environment, 5 principles (Feb 2019)

1. Principle of respect of fundamental rights: ensuring that the design and implementation of artificial intelligence tools and services are compatible with fundamental rights.
2. Principle of non-discrimination: specifically preventing the development or intensification of any discrimination between individuals or groups of individuals.
3. Principle of quality and security: with regard to the processing of judicial decisions and data, using certified sources and intangible data with models conceived in a multi-disciplinary manner, in a secure technological environment.
4. Principle of transparency, impartiality and fairness: making data processing methods accessible and understandable, authorising external audits.
5. Principle “under user control”: precluding a prescriptive approach and ensuring that users are informed actors and in control of their choices.

The Council of Europe ethical charter principles are outlined here, with a link to the ethical charter itself.

Women Leading in AI (WLinAI) 10 recommendations (Feb 2019)

1. Introduce a regulatory approach governing the deployment of AI which mirrors that used for the pharmaceutical sector.
2. Establish an AI regulatory function working alongside the Information Commissioner’s Office and Centre for Data Ethics – to audit algorithms, investigate complaints by individuals, issue notices and fines for breaches of GDPR and equality and human rights law, give wider guidance, spread best practice and ensure algorithms must be fully explained to users and open to public scrutiny.
3. Introduce a new Certificate of Fairness for AI systems alongside a ‘kite mark’ type scheme to display it. Criteria to be defined at industry level, similarly to food labelling regulations.
4. Introduce mandatory AIAs (Algorithm Impact Assessments) for organisations employing AI systems that have a significant effect on individuals.
5. Introduce a mandatory requirement for public sector organisations using AI for particular purposes to inform citizens that decisions are made by machines, explain how the decision is reached and what would need to change for individuals to get a different outcome.
6. Introduce a ‘reduced liability’ incentive for companies that have obtained a Certificate of Fairness to foster innovation and competitiveness.
7. To compel companies and other organisations to bring their workforce with them – by publishing the impact of AI on their workforce and offering retraining programmes for employees whose jobs are being automated.
8. Where no redeployment is possible, to compel companies to make a contribution towards a digital skills fund for those employees.
9. To carry out a skills audit to identify the wide range of skills required to embrace the AI revolution.
10. To establish an education and training programme to meet the needs identified by the skills audit, including content on data ethics and social responsibility. As part of that, we recommend the set up of a solid, courageous and rigorous programme to encourage young women and other underrepresented groups into technology.

These recommendations were presented by the Women Leading in AI group at a meeting in parliament in February 2019; this report in Forbes by Noel Sharkey outlines the group, their recommendations, and the meeting.

The NHS’s 10 Principles for AI + Data (Feb 2019)

1. Understand users, their needs and the context
2. Define the outcome and how the technology will contribute to it
3. Use data that is in line with appropriate guidelines for the purpose for which it is being used
4. Be fair, transparent and accountable about what data is being used
5. Make use of open standards
6. Be transparent about the limitations of the data used and algorithms deployed
7. Show what type of algorithm is being developed or deployed, the ethical examination of how the data is used, how its performance will be validated and how it will be integrated into health and care provision
8. Generate evidence of effectiveness for the intended use and value for money
9. Make security integral to the design
10. Define the commercial strategy

These principles are set out with full commentary and elaboration on Artificial Lawyer here.

IEEE General Principles of Ethical Autonomous and Intelligent Systems (A/IS) (Mar 2019)

1. Human Rights: A/IS shall be created and operated to respect, promote, and protect internationally recognized human rights.
2. Well-being: A/IS creators shall adopt increased human well-being as a primary success criterion for development.
3. Data Agency: A/IS creators shall empower individuals with the ability to access and securely share their data to maintain people’s capacity to have control over their identity.
4. Effectiveness: A/IS creators and operators shall provide evidence of the effectiveness and fitness for purpose of A/IS.
5. Transparency: the basis of a particular A/IS decision should always be discoverable.
6. Accountability: A/IS shall be created and operated to provide an unambiguous rationale for all decisions made.
7. Awareness of Misuse: A/IS creators shall guard against all potential misuses and risks of A/IS in operation.
8. Competence: A/IS creators shall specify and operators shall adhere to the knowledge and skill required for safe and effective operation.

These amended and extended general principles form part of Ethically Aligned Design 1st edition, published in March 2019. For an overview see pdf here.

Ethical issues arising from the police use of live facial recognition technology (Mar 2019)

The 9 ethical principles relate to: public interest; effectiveness; the avoidance of bias and algorithmic injustice; impartiality and deployment; necessity; proportionality; impartiality, accountability, oversight, and the construction of watchlists; public trust; and cost effectiveness.

As reported here, the UK government’s independent Biometrics and Forensics Ethics Group (BFEG) published an interim report outlining nine ethical principles forming a framework to guide policy on police facial recognition systems.

Floridi and Clement Jones' five principles key to any ethical framework for AI (Mar 2019)

1. AI must be beneficial to humanity.
2. AI must also not infringe on privacy or undermine security.
3. AI must protect and enhance our autonomy and ability to take decisions and choose between alternatives.
4. AI must promote prosperity and solidarity, in a fight against inequality, discrimination, and unfairness.
5. We cannot achieve all this unless we have AI systems that are understandable in terms of how they work (transparency) and explainable in terms of how and why they reach the conclusions they do (accountability).

Luciano Floridi and Lord Tim Clement Jones set out, in the New Statesman, these 5 general ethical principles for AI, with additional commentary.

References

[1] Asimov, Isaac (1950): Runaround, in I, Robot, (The Isaac Asimov Collection ed.) Doubleday. ISBN 0-385-42304-7.

[2] Murphy, Robin; Woods, David D. (2009): Beyond Asimov: The Three Laws of Responsible Robotics. IEEE Intelligent Systems. 24 (4): 14–20.

[3] Margaret Boden et al (2017): Principles of robotics: regulating robots in the real world, Connection Science. 29 (2): 124–129.

[4] Tony Prescott and Michael Szollosy (eds.) (2017): Ethical Principles of Robotics, Connection Science. 29 (2) and 29 (3).

Scroll down to the next horizontal line for the updates.

If there are any (prominent) ones I've missed please let me know.

Asimov's three laws of Robotics (1950)

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.
3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

I have included these to explicitly acknowledge, firstly, that Asimov undoubtedly established the principle that robots (and by extension AIs) should be governed by principles, and secondly that many subsequent principles have been drafted as a direct response. The three laws first appeared in Asimov's short story Runaround [1]. This Wikipedia article provides a very good account of the three laws and their many (fictional) extensions.

Murphy and Woods' three laws of Responsible Robotics (2009)