Martin Cid's Blog: Martin Cid Magazine

September 10, 2025

Charlie Sheen Takes Control of His Narrative in New Netflix Documentary

A new two-part documentary series, aka Charlie Sheen, offers a comprehensive biographical account of the actor’s life, presented through the lens of his seven years of sobriety. The project is positioned not as a career comeback but as a personal revelation, with Sheen himself providing extensive testimony to reclaim his own story. The series chronicles his upbringing in Malibu, his meteoric rise to fame, the highly publicized collapse of his career and personal life, and his subsequent path to recovery. The release is coordinated with his memoir, The Book of Sheen, which was made available one day prior.

An Unfiltered Confessional

The documentary is structured as a confessional, with Sheen’s own words forming the narrative foundation. The actor was initially hesitant to participate in the project, prompting director Andrew Renzi to spend seven to eight months building a relationship with him to secure his involvement. This effort resulted in a commitment to complete candor, with Sheen stating that he intends to share information he had previously made a “sacred vow to only reveal to a therapist”. In the series, Sheen reflects on his past actions, acknowledging that he “lit the fuse” that led to his life turning into “everything it wasn’t supposed to be”.

Revisiting a Public Rise and Fall

The narrative traces Sheen’s life chronologically, beginning with a childhood spent at what the series describes as the “intersection of Hollywood royalty and coastal suburbia”. From there, it examines his seemingly “effortless rise to megastardom” and the subsequent “dramatic faceplants that unfolded in the public eye”. The documentary revisits the most turbulent periods of his life, using his current sobriety as a framework for reflecting on events that became tabloid fixtures.

A Chorus of Perspectives

To provide a multi-faceted portrait, the series incorporates an extensive array of interviews with individuals from nearly every chapter of Sheen’s life. Participants include family members, such as his brother Ramon Estevez and daughter Lola Sheen, and former wives Denise Richards and Brooke Mueller. His professional life is explored through conversations with actors Sean Penn and Chris Tucker, as well as Two and a Half Men co-star Jon Cryer and creator Chuck Lorre. The documentary also features testimony from figures like Heidi Fleiss and Sheen’s former drug dealer, identified as Marco, to provide context for his struggles with addiction. Notably, his father, Martin Sheen, and brother, Emilio Estevez, do not appear; their absence is explained as a deliberate choice to allow Charlie Sheen to have his own moment.

Production and Release

The series was produced by Skydance, North of Now, Boardwalk Pictures, and Atlas Independent, with a production team that includes Charles Roven. Its global release on Netflix suggests the project is intended as a definitive account of a complex and controversial public figure. The narrative arc concludes with Sheen’s present-day stability, focusing on his reflections and the clarity gained from sobriety.

The two-part documentary, aka Charlie Sheen, is available for streaming on Netflix beginning today, September 10, 2025.

Netflix’s The Dead Girls (Las Muertas): The Prestige Series Adapting Mexico’s Most Notorious True Crime Story

The premiere of The Dead Girls (Las Muertas) marks a significant event in contemporary television, representing the confluence of a canonical work of Latin American literature, the cinematic vision of one of Mexico’s most prominent filmmakers, and a notorious chapter from the nation’s criminal history. The six-episode limited series is the first television project from director Luis Estrada, a filmmaker whose career has been defined by acclaimed features that employ sharp satire to dissect Mexican political and social life. The production adapts the 1977 novel of the same name by Jorge Ibargüengoitia, a towering figure in 20th-century Mexican letters. The narrative itself is a fictionalized exploration of the real-life case of the González Valenzuela sisters, who became infamous in the 1960s as the serial killers known as “Las Poquianchis”. The deliberate combination of these three pillars (a revered auteur, a prestigious literary source, and a shocking true story) positions the series not as a conventional crime drama but as a piece of prestige television engineered for serious cultural engagement. It signals an intent to draw on established artistic and historical reputations to secure the show’s intellectual credentials with a discerning global audience.

Literary Origins and Historical Trauma

The foundation of the series is twofold, resting upon both Jorge Ibargüengoitia’s celebrated novel and the grim historical reality that inspired it. The 1977 novel Las Muertas is considered a cornerstone of modern Mexican literature, a work that took the sordid facts of a true-crime story and transmuted them into a profound piece of social commentary. The book is a fictionalized account of the González Valenzuela sisters—renamed the Baladro sisters in the novel and the series—who operated a network of brothels in the state of Guanajuato during the 1960s and were ultimately convicted of numerous crimes, including the murders of their employees and their newborn children. Ibargüengoitia’s literary genius lay in his approach to this material. Rather than a straightforward dramatization, his novel is characterized by a distinctive blend of dark humor, biting satire, and an unflinching critique of the societal fabric of post-revolutionary Mexico, exposing the institutional ineptitude and systemic corruption that allowed such atrocities to occur. The novel’s narrative structure is unconventional, eschewing a linear plot in favor of a fragmented, multi-perspective reconstruction of events that resembles a journalistic report or a collection of disparate court testimonies. This stylistic choice is central to its thematic power, creating an objective, almost clinical distance that paradoxically amplifies the horror and absurdity of the events. The series adopts this satirical and quasi-journalistic tone, a decision that functions as more than a mere stylistic homage. It serves as a sophisticated narrative mechanism for confronting a national trauma too grotesque for direct, realist depiction. 
The use of satire provides a critical distance, allowing the story to move beyond the sensationalist details of the crimes themselves to conduct a more incisive examination of the cultural and political conditions—the pervasive misogyny, moral duplicity, and institutional decay—that created the environment in which such evil could flourish.

The Auteur’s Vision and Narrative Architecture

The creative force behind The Dead Girls is unequivocally Luis Estrada, who serves as the series’ creator, showrunner, co-screenwriter, and the director of all six episodes, affording him a degree of comprehensive auteurist control rare in television production. His connection to the material is not recent; Estrada has described his desire to adapt Ibargüengoitia’s novel as a 30-year “obsession,” one that began when he first read the book at the age of 15. For decades, the project was envisioned as a feature film, but its realization was ultimately contingent on a shift in the media landscape. Estrada found that the long-form, episodic structure of a limited series, as offered by a global streaming platform, was the “ideal format” for the novel’s expansive canvas of characters, locations, and interwoven timelines—a narrative complexity that could never be adequately contained within the runtime of a conventional film. This makes the series a prime example of how the streaming model is fundamentally altering the possibilities of literary adaptation, providing the creative and financial latitude to translate complex novels with a fidelity previously unattainable. The screenplay, co-written with his frequent collaborator Jaime Sampietro and with contributions from Rodrigo Santos, was developed with a deep reverence for the source material’s unique structure. Estrada’s directorial approach was to shoot the entire series as a single, cohesive production, akin to an extended film, with each episode meticulously crafted as if it were a short feature, even suggesting that each installment functions as an “independent movie with its own genre”. A key creative decision was to preserve the novel’s quasi-journalistic, multi-vocal narrative, intertwining testimonies and official statements as a pivotal cinematic device to reconstruct the story. However, the adaptation is not without a significant authorial intervention. 
The sixth and final episode features an entirely new script penned by Estrada and Sampietro, a deliberate choice made to address what they perceived as the novel’s “abrupt” ending and to provide a more cinematically and thematically conclusive resolution.

A Cast of Mexican Prestige

The series features an ensemble of actors who represent a high caliber of talent within both Mexican and international cinema. The narrative is anchored by the performances of Arcelia Ramírez as the elder sister, Arcángela Baladro, and Paulina Gaitán as the younger sibling, Serafina Baladro. Both actresses bring considerable dramatic weight to their roles. They are supported by a prominent cast of established performers, including Joaquín Cosío as Captain Bedoya, the officer investigating the case, and Alfonso Herrera as Simón Corona, a key figure in the sisters’ enterprise. The wider ensemble is populated by respected actors such as Mauricio Isaac, Leticia Huijara, Enrique Arreola, and Fernando Bonilla, creating a rich tapestry of characters. The casting strategy brings together performers with significant global recognition from their work in high-profile international productions such as Narcos, Ozark, and Sense8, alongside actors celebrated for their contributions to acclaimed Mexican films, including Estrada’s own La Ley de Herodes. This assemblage of talent underscores the production’s ambition and its positioning as a premium dramatic work.

The Craft of a Hand-Built World

The production of The Dead Girls was an immense and meticulous undertaking, distinguished by its scale and a profound commitment to practical, tangible craftsmanship. Filming spanned 21 weeks and involved a principal cast of 150 actors supported by more than 5,000 extras, reflecting the ambition to create a populated and authentic world. The most remarkable aspect of the production is its dedication to physical world-building. A total of 220 distinct sets were constructed to recreate the various environments of 1960s Mexico, with the production deliberately eschewing digital enhancements and visual effects. Estrada has noted that every frame of the series was “handcrafted,” a philosophy that extends from the production design to the costumes and props. This commitment to practical effects and physical sets is not merely an aesthetic choice but a thematic one. By physically constructing the world of the Baladro sisters, the production grounds its narrative of corruption and violence in a tactile, undeniable reality. This material authenticity reinforces the series’ quasi-documentary style, underscoring the assertion that these horrific events transpired in a real time and place, not a stylized digital reconstruction. The extensive location filming further enhanced this authenticity, with shooting taking place across the Mexican states of San Luis Potosí, Guanajuato, and Veracruz, as well as on soundstages at Mexico City’s historic Churubusco Studios. The key creative team responsible for this visual language includes Director of Photography Alberto Anaya Adalid “Mándaro,” Production Designer Salvador Parra, and Editor Mariana Rodríguez. The series is produced by Estrada and Sandra Solares through their production companies Mezcala Films, Bandidos Films, and Jaibol Films.

A Dissection of Systemic Malice

While the narrative engine of The Dead Girls is a true-crime story, its thematic concerns are those of a complex social critique. The central plot follows the sisters Arcángela and Serafina Baladro as they methodically build a lucrative and brutal empire of brothels, a criminal enterprise that ultimately unravels and exposes them as two of Mexico’s most notorious serial killers. However, the series argues that their actions were not an isolated anomaly but rather a symptom of a larger societal sickness. The narrative is a deep exploration of systemic failure, examining how unchecked power, institutional corruption, pervasive misogyny, and profound moral duplicity created the conditions that allowed the sisters to operate their network of exploitation and murder with impunity for years. A central theme, inherited directly from Ibargüengoitia’s novel, is the concept of “malice,” a study of the banality of evil that explores how ordinary people, including the victims of the system, can themselves become perpetrators when given the opportunity. In this way, the criminal enterprise of the Baladro sisters functions as a powerful microcosm of a corrupt state. The power dynamics, moral compromises, exploitation, and systemic violence that define the internal world of the brothels serve as a direct metaphor for the larger societal ills that Estrada has critiqued throughout his filmography. The series uses this contained, brutal environment to stage a broader allegory about national moral decay, where the sisters’ reign of terror is a reflection of the state’s own moral bankruptcy. The series thus continues Estrada’s career-long project of using satire and black humor to dissect Mexican political and social structures, offering a uniquely Mexican perspective on universal themes of gender, power, and violence.

Reconstructing a Legend for a Global Audience

The Dead Girls arrives as a complex, multi-layered work that functions simultaneously as a faithful literary adaptation, a chilling historical reconstruction, and a potent auteurist statement. It represents a significant addition to the growing catalog of ambitious international dramas, distinguished by its literary pedigree, its unflinching subject matter, and the singular vision of its director. By synthesizing the narrative grit of the true-crime genre with a sophisticated, satirical, and deeply critical approach, the series aims to be both a narratively sharp thriller and a piece of resonant social commentary. In bringing one of Mexico’s darkest legends to a global platform through the lens of one of its most critical and uncompromising filmmakers, the series engages in a complex act of cultural translation, historical examination, and artistic synthesis.

The six-episode limited series The Dead Girls (Las Muertas) premiered worldwide on the Netflix streaming platform on September 10, 2025.

September 9, 2025

Netflix Debuts ‘Kiss or Die,’ a High-Concept Japanese Series Blending Comedy and Unscripted Drama

The global streaming platform Netflix has launched Kiss or Die, a new Japanese series that presents a complex fusion of genres. The production combines the structural elements of a reality competition with the spontaneity of improvisational drama and the high stakes of a conceptual game show. At its core is a unique premise described as a “death kiss game,” a format designed to generate unscripted comedy through a carefully constructed scenario of desire, resistance, and performance. The series places a cast of established male comedians into a narrative framework where they must navigate a series of dramatic encounters with the ultimate goal of delivering a climactic, story-defining kiss. This central conceit establishes a high-pressure environment where professional instincts are tested and the lines between performance and reaction are deliberately blurred.

A High-Stakes Game of Improvisation and Seduction

The series operates on a meticulously defined ruleset that governs the participants’ journey. The primary objective for each comedian is to become the “protagonist” of the unfolding, unscripted drama. This status is achieved by successfully delivering what the format terms the “ultimate kiss” or the “best kiss.” This act is not merely physical but must function as a narratively satisfying climax to the improvised scenes they are building with their co-stars. The success of this performance is the sole metric for advancing in the game. The central conflict and primary obstacle are introduced through a cast of female co-stars, whose explicit role within the game’s structure is to act as “irresistibly seductive” agents of temptation. The comedians are required to engage with these characters dramatically, building a romantic narrative while simultaneously resisting any premature or narratively unearned physical intimacy.

The penalty for failing to adhere to this core directive is immediate and absolute. If a participant delivers what the game’s arbiters deem a “cheap kiss”—one that lacks sufficient narrative justification or emotional weight—they are instantly eliminated from the competition. Within the diegesis of the show, this elimination is framed as a character’s “death,” removing them from the ongoing story. This “death game” mechanic, while metaphorical, creates a tangible sense of jeopardy that fuels the comedic and dramatic tension. The structure of the series is tailored for the streaming model; the complete narrative arc is contained within a single season of six episodes, all of which were released simultaneously, facilitating a binge-viewing experience. This release strategy allows the overarching narrative of the competition to unfold without interruption, encouraging audience immersion in the escalating stakes of the game.

The very design of this competition serves as a sophisticated examination of performance anxiety. The participants are professional comedians, individuals whose careers are built on the precise control of timing, audience perception, and the successful delivery of a comedic or emotional payoff. The game’s objective, the “best kiss,” is an inherently subjective measure of performance quality, shifting the comedians from their familiar territory of joke construction into the ambiguous realm of romantic authenticity. By penalizing a “cheap kiss,” the format explicitly links failure to a subpar artistic delivery. Consequently, the “death” in this game is not a literal threat but a potent metaphor for creative and professional failure under the public scrutiny of a global audience. The tension is derived from observing experts in one discipline being rigorously tested in another, transforming a simple game into a meta-commentary on the inherent pressures of performance and the fragile nature of professional validation.

From the Mind of a Variety Television Veteran

The creative force behind Kiss or Die is Nobuyuki Sakuma, a veteran television producer credited with Planning and Production for the series. Sakuma has established a significant reputation through a series of successful projects for Netflix, including the talk-show-drama hybrid Last One Standing, the intimate dialogue series LIGHTHOUSE, and the variety program Welcome, Now Get Lost. His influence extends deep into Japanese terrestrial television, where he is known for creating popular and critically regarded programs such as God Tongue and Achi Kochi Audrey. This body of work demonstrates a consistent interest in developing high-concept formats that place comedians in unconventional and psychologically demanding situations.

Kiss or Die is not a wholly new concept but rather an evolution of a creative preoccupation evident in Sakuma’s earlier work. The series’ premise is directly inspired by the “Kiss Endurance Championship,” a popular and recurring segment from his long-running television show God Tongue. That segment similarly tested comedians’ improvisational abilities and self-control by placing them in scenarios where they had to resist the advances of attractive actresses. By expanding this segment into a full-fledged, high-production-value series for a global platform, Sakuma is iterating on a proven formula, refining its mechanics and scaling its ambition. This lineage indicates that the series is the product of a long-term creative exploration into the comedic potential of manufactured romantic tension.

The production is helmed by director Takashi Sumida, whose filmography includes the 2020 film Fictitious Girl’s Diary and the 2021 series The Road to Murder. The screenplay for the series is credited to a writer known as Date-san. The executive producer is Shinichi Takahashi, with Haruka Minobe, Seira Taniguchi, and Rieko Saito serving as producers. The series is an official Netflix production, realized with production cooperation from Kyodo Television and production services from Shio Pro. This robust production infrastructure underscores the significant investment in a format that originates from a niche segment of Japanese variety television.

Sakuma’s career trajectory, culminating in this project, points toward a broader trend in global content strategy. His earlier, influential work like God Tongue was created primarily for a domestic Japanese audience. His more recent collaborations with Netflix, however, represent a deliberate effort to adapt and elevate these uniquely Japanese variety formats for international consumption. Last One Standing, for instance, successfully translated the blend of unscripted talk and scripted drama found in shows like King-chan into a format that resonated with global audiences. Kiss or Die follows this strategic pattern, taking a specific, culturally resonant variety game and re-engineering it as a polished, binge-able series. This positions Sakuma as a key figure in the translation of Japan’s formally experimental television landscape for a worldwide audience, with the Netflix platform acting as the critical enabler for this cross-cultural exchange. His approach may be informed by a personal philosophy that the breadth of culture one consumes in youth directly impacts intellectual flexibility and the capacity to accept different values. The success of such projects has wider implications for how regional entertainment formats can be deconstructed and reassembled for global appeal.

A Curated Collision of Talent

The casting for Kiss or Die is a crucial component of its conceptual design, assembling a diverse array of performers from different sectors of the Japanese entertainment industry. The cast is strategically divided into three distinct groups, each with a specific function within the show’s multi-layered format. The dynamic interplay between these groups generates the series’ primary narrative and comedic friction.

The core participants, whose skills are being put to the test, are a selection of prominent male comedians. This group includes Gekidan Hitori, a highly versatile talent known not only for his comedy but also as an accomplished actor, novelist, and film director. He is joined by Tetsuya Morita of the comedy duo Saraba Seishun no Hikari, who also appeared in Sakuma’s Last One Standing; Takashi Watanabe of the popular manzai duo Nishikigoi; and Crystal Noda of the duo Madical Lovely. The lineup is rounded out by Kazuya Shimasa of the comedy duo New York and Gunpee of the duo Haru to Hikoki. This selection represents a cross-section of contemporary Japanese comedy, from established veterans to popular current acts.

A second group functions as a studio panel, providing commentary and analysis that guides the viewer’s interpretation of the events. This panel acts as a Greek chorus, deconstructing the comedians’ strategies and judging the quality of their improvised performances. It is composed of Ken Yahagi, one half of the respected comedy duo Ogi Yahagi, and Ryota Yamasato of the duo Nankai Candies. Yamasato is a familiar face to international audiences due to his long-running role as a sharp-witted commentator on the reality series Terrace House. They are joined by model and television personality Miyu Ikeda. This panel’s presence reinforces the idea that the series is not just a game but a technical performance being critically evaluated.

The third and final group is the dramatic ensemble, responsible for driving the improvised narratives and embodying the central challenge of the game. This cast includes established mainstream actors, lending dramatic weight to the proceedings. The most notable among them is Mamoru Miyano, a prolific and highly decorated voice actor and singer. Miyano is a major figure in the world of anime, having won numerous awards for his roles in globally recognized series such as Death Note, Mobile Suit Gundam 00, and Steins;Gate. His participation provides a benchmark of professional acting against which the comedians’ improvisations are measured. The male acting ensemble also features Terunosuke Takezai, Jun Hashimoto, and Kosei Yuki. The female cast, tasked with portraying the seductive figures the comedians must resist, is drawn largely from the worlds of adult film and gravure modeling. This includes Mana Sakura, a prominent adult video (AV) actress who has successfully crossed over into mainstream entertainment, appearing in films and television dramas and publishing several acclaimed novels. Her first book, the heavily autobiographical The Lowlife, was adapted into a film in 2017. She is joined by fellow AV performers and models including Mary Tachibana, who is of mixed Japanese and Russian heritage; Kiho Kanematsu, a former member of the mainstream idol group AKB48; Nana Yagi, who has also acted in web dramas; Karin Touno, Ibuki Aoi, Luna Tsukino, and MINAMO.

This casting approach appears to be a deliberate act of cultural engineering. The show’s premise forces a direct and intimate confrontation between performers from different, often rigidly separated, strata of Japan’s entertainment ecosystem. The central dynamic is generated by the professional friction between mainstream comedians and actors, and performers from the adult entertainment industry, who are often marginalized from mainstream productions. The inclusion of figures like Mana Sakura, whose career has actively challenged these traditional boundaries, and Kiho Kanematsu, who has moved from mainstream idol pop to adult media, is particularly significant. The format leverages the distinct professional skill sets of each group against one another: the improvisational wit of the comedians is pitted against the actresses’ expertise in performing seduction and intimacy. This creates a unique and complex power dynamic. In a mainstream Netflix production, it places performers from the adult industry in a central, empowered, and antagonistic role, thereby challenging the conventional celebrity hierarchy and creating a social experiment broadcast on a global stage.

Deconstructing the Unscripted Format

Kiss or Die is a formally complex work that operates on multiple, simultaneous layers of reality. The participants exist as themselves—comedians competing in a high-stakes game for professional pride. At the same time, they are playing characters within an improvised drama, tasked with creating a coherent narrative and emotional arc on the fly. Finally, they are the subjects of real-time analysis by the studio hosts, who break down their choices and performance quality for the audience. This meta-narrative structure actively encourages a critical mode of viewing, inviting the audience to consider the mechanics of performance, authenticity, and narrative construction.

The series also engages in a sophisticated act of genre subversion. It borrows its foundational structure from the Japanese “death game” genre, a popular narrative form in manga, anime, and film, famously exemplified by titles like Battle Royale, Liar Game, and the As the Gods Will series. This genre is typically characterized by grim, high-stakes competitions where participants are forced to fight for their literal survival, often as a form of dark social allegory exploring themes of conformity, consumerism, and the loss of individual identity in a dehumanizing world. Kiss or Die adopts the genre’s high-jeopardy elimination framework—the “kill or be killed” ultimatum—but performs a crucial substitution. It replaces the threat of physical death with the specter of professional failure and public humiliation. The “death” is purely narrative and symbolic, a consequence of a poorly executed performance. This comedic inversion serves to parody the self-serious melodrama inherent in the death game genre, using its tropes not for suspense but for laughter.

The show’s technical format is a hybrid, meticulously blending the core tenets of two distinct performance modes: improvisational theater and reality television. From improvisational theater, it takes the emphasis on spontaneity, character creation, and collaborative storytelling in an unscripted environment. From reality television, it borrows the rigid ruleset, the competitive elimination structure, and the overarching sense of a manufactured contest. The primary engine of the series’ entertainment value is the persistent tension between these two modes—the creative freedom offered by improvisation constantly clashes with the structural constraints imposed by the game’s rules. This collision forces the comedians to be simultaneously creative artists and strategic players, a duality that generates both comedy and genuine dramatic suspense.

This formal approach allows the series to function as an incisive critique of the concept of manufactured authenticity that underpins much of reality television. By making the performance of romance and desire an explicit, competitive, and technically judged skill, the show deconstructs the illusion that similar dynamics in reality dating formats are wholly spontaneous. The very premise—to achieve the “best kiss”—removes the pretense of capturing “real” emotions. The presence of a judging panel further reinforces that the audience is witnessing a technical skill being evaluated, not a genuine romantic development. In framing romance as a competitive, improvised performance, the show satirizes the entire reality dating genre. It implicitly suggests that all such programs are, at their core, a form of “kiss endurance championship,” where contestants perform intimacy and desire for survival within the show’s narrative structure. This provides a cynical and sophisticated layer of commentary on the very nature of unscripted entertainment itself.

Kiss or Die emerges as a formally ambitious and highly experimental series that deliberately pushes the established boundaries of unscripted entertainment. Its innovative power lies in its seamless blending of disparate genres—reality competition, improvisational theater, and parody—and its deployment of a complex, multi-layered meta-narrative that encourages critical engagement from its audience. The series represents a significant and logical evolution in the creative trajectory of its creator, Nobuyuki Sakuma, marking his most audacious attempt yet to re-package a niche Japanese television concept for a global viewership. It stands as a noteworthy example of how culturally specific entertainment formats can be deconstructed and re-imagined, offering a unique and challenging viewing experience that is at once a high-concept comedy and a sharp deconstruction of modern media performance.

The complete six-episode first season of Kiss or Die was made available for global streaming on the Netflix platform on September 9, 2025.

September 8, 2025

Maria Lassnig: “Self with Dragon” probes the limits of body awareness at Hauser & Wirth Hong Kong

Maria Lassnig’s late paintings and drawings turn the body into an instrument of knowledge rather than a subject of depiction. A focused presentation in Hong Kong gathers works on canvas and paper spanning 1987 to 2008, consolidating the artist’s lifelong inquiry into what she termed “body awareness”: the conviction that felt sensation—pressure, ache, breath, weight—is a more reliable ground for representation than the mirror or the camera. The selection places self-portraits in dialogue with machines, animals and abstract structures, charting how inner states displace the “retinal image” with somatic evidence.

The exhibition’s center of gravity is “Selbst mit Drachen (Self with Dragon)” (2005), where a mythic creature emerges less as antagonist than as an extension of the self. Lassnig stages the dragon as a register of tension—an embodiment of intrusive forces that are simultaneously internal and external. This negotiation, pitched between threat and recognition, echoes across the surrounding works, where bodies are truncated, hybridized or interfaced with devices not for effect but to record sensation at its point of origin.

“Viktory (Victory)” (1992) distills that logic into a hard, emblematic geometry. A broad, angular “V”—at once posture and sign—structures the field, fusing corporeal feeling with symbolic architecture. The letter functions as a scaffold for emotion, showing how language and sign systems contour bodily experience. Rather than staging a triumph, the canvas reads as a diagram of steadiness under strain.

Several canvases press further into abstraction without relinquishing the self. In “Selbst abstrakt I / Bienenkorb Selbst (Self Abstract I / Beehive Self)” (1993), the head assumes the ventilation and massing of a beehive, a vessel charged with hum, heat and pressure. “Selbst als Blüte (Self as a Flower)” (1993) aligns aging flesh with botanical structure, not sentimentally but analytically, proposing continuity between human and vegetal anatomies. These works operate like cross-sections of feeling, converting states—swell, throb, contraction—into form.

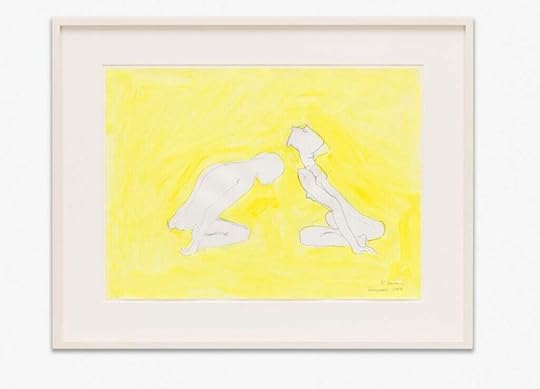

The works on paper anchor the presentation in the present tense. Lassnig treated drawing as a seismograph—closest to the instant—allowing a single line to register the shift from solitude to relation. In “Liegende (Reclining Figures)” (2000) and “Liebespaar (Lovers)” (2003), figures drift toward and away from one another without settling into fixed contour. “Mr and Mrs Kliny” (2004) holds that ambiguity, sketching a dyad whose dynamics remain unresolved by design. Monochrome sheets such as “Ober und Unterkörper (Torso and Lower Body)” (1990) and “Die Vielfalt (Diversity)” (2003) strip the figure to pressure points; spareness becomes strategy, isolating curvature and compression that color might overdetermine. Lassnig’s insistence that each drawing is autonomous—never a step toward a definitive oil—underscores the ethic of attention running through the show.

Taken together, these paintings and drawings argue for sensation as knowledge and for the body as a porous site where the world leaves its traces. Machines, animals and alphabetic signs are not external props but languages the self uses to measure impact. The hybrids and partial figures that result are instruments, not fragments: calibrated tools for recording intensities that conventional portraiture overlooks. The exhibition’s restraint—tight selection, clear sightlines, measured juxtapositions—allows the works to read as case studies in perception, each one offering a different protocol for translating an internal state into visible form.

What emerges is not a rejection of likeness but a redefinition of it. Lassnig paints what a head feels like to inhabit rather than how a head looks; she draws a relation as a shift in contour rather than as a narrative scene. In the process, she widens the vocabulary of self-portraiture, admitting diagrams, emblems and mythic proxies as legitimate registers of the self. The result is a body of work that approaches accuracy—understood as fidelity to experience—by refusing the comforts of optical description.

Venue and dates: Hauser & Wirth Hong Kong — 26 September 2025 to 28 February 2026.

Maria Lassnig. Viktory (Victory). 1992. Oil on canvas. 200 x 145 cm / 78 3/4 x 57 1/8 in. © Maria Lassnig Foundation. Courtesy the Foundation and Hauser & Wirth

Maria Lassnig. Liebespaar (Lovers). 2003. Pencil and acrylic on paper. 43.8 x 59.8 cm / 17 1/4 x 23 1/2 in; 63 x 80 x 3.5 cm / 24 3/4 x 31 1/2 x 1 3/8 in (framed). Photo: Jorit Aust. © Maria Lassnig Foundation. Courtesy the Foundation and Hauser & Wirth

The Return of a Political Vendetta: Inside Netflix’s Her Mother’s Killer Season Two

The Colombian political thriller Her Mother’s Killer, known domestically as La Venganza de Analía, returns for a second season, escalating the central conflict between political strategist Analía Guerrero and the corrupt politician Guillermo León Mejía. The new installment plunges viewers back into the narrative following the dramatic conclusion of the first season, which saw Mejía’s political empire crumble under the weight of Analía’s meticulously executed plan of revenge for her mother’s murder. While the initial conflict appeared resolved with Mejía’s imprisonment, the second season redefines the stakes. Mejía’s return is not merely a plot continuation but a fundamental narrative reset, promising a more personal, volatile, and dangerous confrontation that threatens the fragile peace established by Analía and her ally, Pablo de la Torre.

Narrative Inversion and Character Dynamics

The second season’s primary narrative engine is a deliberate inversion of the power dynamics established in the first. Where the initial season presented a calculated offensive led by Analía, the new chapter is structured as a desperate defense against a resurgent and more formidable antagonist. Guillermo León Mejía returns from prison not as a chastened figure but as a man whose ambition has been supplanted by a singular, potent desire for vengeance. His objective is no longer confined to political power but extends to the complete personal and professional ruin of Analía. This is evidenced by his sophisticated new strategy, which involves manipulating his way onto the presidential ticket as the vice-presidential candidate for his former rival, Rosales.

This resurgence forces a profound psychological shift in the protagonist, Analía Guerrero. The master strategist, once defined by her control and foresight, is now depicted as emotionally fractured and operating from a position of vulnerability. The narrative arc portrays a woman who is no longer the calculating hunter but the hunted, grappling with a fear that was absent in her initial quest for justice. This vulnerability is given a tangible form through the introduction of her daughter. The child becomes the narrative’s focal point, representing Analía’s primary weakness and the main target for her enemies’ aggressions. Multiple plotlines revolve around the daughter’s safety, from her birth during a period of intense danger to her eventual capture by Mejía’s new ally.

The catalyst for this narrative restructuring is the season’s most significant new character: Paulina Peña, portrayed by Paola Turbay. She is introduced not as a subordinate but as a primary antagonist and a lethal partner for Mejía. Characterized as a professional assassin, Peña signals a genre shift for the series. The conflict moves beyond the arena of political machinations and media manipulation into the realm of direct physical violence. Her actions immediately establish a more visceral and dangerous tone, as she is responsible for the murder of Elvira Ortega, a key supporting character’s wife, and orchestrates violent attacks against both Pablo de la Torre and Analía. This combination of Mejía’s political cunning and Peña’s lethality creates a multifaceted antagonistic force that fundamentally alters the series’ rules of engagement, forcing Analía to confront a threat her previous skill set is ill-equipped to handle.

Thematic Expansion and Psychological Depth

While retaining its foundation as a critique of political corruption, the second season introduces a significant layer of psychological inquiry, exploring the personal cost of revenge and trauma. This thematic deepening is a conscious production choice, with the creative team reportedly consulting with psychiatric professionals to enrich the characterizations and their responses to extreme stress. The narrative moves beyond the archetypal “strong female character” to present a more nuanced portrait of a protagonist contending with fear and the consequences of her past actions. Plot developments, including poisonings, kidnappings, and betrayals, are framed to highlight their psychological impact on the characters.

The introduction of Analía’s daughter serves as the thematic and narrative fulcrum for this new focus. The child is the physical embodiment of Analía’s vulnerability, shifting her motivations from the abstract pursuit of justice to the primal, concrete need to protect her family. This reframing elevates the series beyond a simple revenge thriller, positioning it as a more complex drama. By grounding the high-stakes political conflict in the personal trauma of its protagonist, the production demonstrates an ambition to engage with its material on a more sophisticated level, reflecting a wider trend in global television where genre series adopt the character complexity of prestige dramas to appeal to a broader, more discerning international viewership.

Principal Cast and Production Context

The series maintains continuity with its core ensemble. Carolina Gómez returns as Analía Guerrero, Marlon Moreno as Guillermo León Mejía, and George Slebi as Pablo de la Torre. The principal addition to the main cast is Paola Turbay as the antagonist Paulina Peña, a casting choice that generated considerable media attention in its home market because both Gómez and Turbay are former Miss Universe contestants.

Her Mother’s Killer is a production of CMO Producciones, created by Clara María Ochoa and Ana Piñeres, for the Colombian broadcaster Caracol Televisión. The series exemplifies a successful modern distribution model for non-English language content. After achieving high ratings during its initial domestic run in 2020, the first season found a significant global audience through streaming. The second season follows this dual-release strategy, premiering first on Caracol Televisión before its international launch. This hybrid model allows a regional production powerhouse to secure its domestic market while leveraging a global streaming platform to achieve international monetization and brand recognition, creating a template for how high-quality regional productions can compete on the world stage.

The second season of Her Mother’s Killer marks a significant evolution for the series. It has transcended its initial premise, maturing from a tightly plotted political thriller into a more complex and emotionally resonant psychological drama. The narrative escalation, character inversion, and thematic deepening all point to a production with heightened artistic ambitions. The conflict has moved from the political arena to a direct, life-or-death struggle, exploring themes of trauma, fear, and justice through a more personal and intense lens. The season premiered on Caracol Televisión on May 21, 2025, and will be available for global streaming on Netflix beginning September 8, 2025.

September 7, 2025

New Netflix Anime The Fragrant Flower Blooms with Dignity Explores Social Divides

The new anime series The Fragrant Flower Blooms with Dignity has premiered, introducing a narrative centered on the unlikely relationship between two students from adjacent but deeply segregated high schools. The story presents a contemporary exploration of social barriers, prejudice, and the potential for connection in a world defined by division. The central conflict arises from the institutional rivalry between the low-achieving, all-boys Chidori High School and the prestigious, affluent Kikyo Girls’ High. Though the schools are neighbors, a palpable animosity exists between their student bodies, creating a charged environment for any potential interaction.

The series introduces protagonists Rintaro Tsumugi, a Chidori student whose intimidating appearance belies a gentle and considerate nature, and Kaoruko Waguri, a kind and open-minded student from Kikyo. Their initial encounter takes place not on school grounds but within the neutral space of Rintaro’s family-owned patisserie, where Kaoruko is a customer. Unburdened by the prejudices of their respective institutions, they form a connection based on mutual respect. This nascent bond is immediately challenged, however, upon the discovery of their school affiliations, setting the stage for a narrative that examines whether personal connection can overcome ingrained societal hostility.

Narrative Framework and Thematic Concerns

The series constructs its narrative around a framework analogous to classic “star-crossed lovers” archetypes, transposing the conflict onto a modern high school setting where the feud is rooted in classism and academic reputation. Chidori is characterized as a school for society’s “dregs,” while Kikyo is an institution for the daughters of wealthy, high-class families, establishing a clear socio-economic divide that the protagonists must navigate. This dynamic serves as the primary external obstacle to their relationship. Internally, the characterization of Rintaro Tsumugi subverts the common “delinquent” trope. Despite his appearance, he possesses a gentle disposition and suffers from low self-esteem, often assuming others perceive him as a troublemaker. Kaoruko functions as the narrative’s catalyst; her ability to see past his exterior and recognize his inherent kindness initiates Rintaro’s journey of self-reassessment and challenges his understanding of interpersonal relationships.

The narrative extends beyond the central romance to explore the wider social implications of their bond. The relationship impacts their respective friend circles, including Rintaro’s friends—the intelligent but cynical Saku Natsusawa and the energetic Shohei Usami—and Kaoruko’s protective childhood friend, Subaru Hoshina. These supporting characters initially embody the prejudices of their schools, with Saku and Subaru expressing hostility toward the opposing group. A significant portion of the story is dedicated to the gradual evolution of these dynamics, as exposure and interaction begin to dismantle their preconceived notions. This thematic focus is underscored by the narrative’s consistent resolution of conflict through communication. The story largely eschews prolonged misunderstandings, a common genre device, in favor of depicting its characters engaging in the difficult but necessary process of articulating their feelings. This approach is a direct reflection of the original author’s intent to highlight “the importance of communicating things to other people,” framing such honesty not as a simple plot device but as an “act of courage” that constitutes the characters’ primary strength.

Production Pedigree and Directorial Vision

The animation production is handled by CloverWorks, a studio with a significant portfolio of prominent titles. The creative team is led by director Miyuki Kuroki, with Satoshi Yamaguchi serving as associate director. Rino Yamazaki is responsible for the series composition, while Kohei Tokuoka serves as both character designer and chief animation supervisor. Director Miyuki Kuroki’s previous work, notably as the director of Akebi’s Sailor Uniform, demonstrates a proficiency in character-driven storytelling and the cultivation of a specific, gentle atmospheric tone through meticulous animation. Her extensive filmography, which includes storyboarding and episode direction for series such as Spy×Family and the film Her Blue Sky, indicates a versatile and experienced hand at the helm.

The selection of Kuroki as director appears to be a deliberate creative choice aligned with the source material’s core ethos. The original manga is frequently described as having “gentle and sincere storytelling,” a quality that producers expressed a strong desire to preserve in the adaptation. Kuroki’s established directorial style, which emphasizes subtle emotional expression and detailed world-building, is particularly well-suited to a narrative that prioritizes character interiority over high-octane conflict. This pairing of director and material suggests a production strategy focused on achieving fidelity to the original’s tone and themes, leveraging a creative leader whose artistic sensibilities are in harmony with the author’s vision. The result is an adaptation process that appears to value artistic compatibility, aiming for a nuanced interpretation rather than a generic genre piece.

Cinematography and Visual Language

The series employs a deliberate visual language, using cinematographic techniques to reinforce its narrative themes. A notable formalist approach is the consistent use of “frames within frames,” where elements of the mise-en-scène are used to visually represent the psychological and social barriers separating the characters. This technique is particularly evident in the arc of the character Subaru, whose initial distrust and opposition to Rintaro are visualized through compositions that place them in separate panes of a coffee shop window. In another sequence, an upside-down glass is used to create a layered, fragile frame around her, symbolizing the protective but ultimately breakable wall she has built around her friend.

This visual vocabulary evolves in concert with the characters’ development. As individuals begin to overcome their prejudices and communicate more openly, these compositional barriers are systematically removed. Following an emotional resolution, Subaru is shown conversing with Rintaro with no discernible objects separating them in the frame, visually signaling a shift in their relationship. This commitment to visual storytelling is complemented by the production’s pursuit of realism in its setting. Believing that a convincing depiction of everyday life was essential to the story, the animation team consulted with the original author to conduct location scouting based on the real-world places she used as references for her illustrations. This combination of a grounded, realistic world with a formalist, symbolic visual language allows the direction to externalize the characters’ internal states, offering a layer of interpretive depth for a cinematically literate audience.

From Page to Screen: Adapting a Modern Manga Hit

The anime is an adaptation of the manga of the same name, written and illustrated by Saka Mikami. The series began its serialization on Kodansha’s Magazine Pocket digital platform and has achieved significant commercial success, with sales exceeding five million copies. The author’s motivation for creating the series provides crucial context for its thematic core. Mikami was deeply inspired by the manga Attack on Titan but was also affected by its “bittersweet ending,” which spurred a desire to create a story where “all the characters were kind”. This genesis positions The Fragrant Flower Blooms with Dignity not merely as a wholesome romance, but as a deliberate artistic counterpoint to the darker, conflict-heavy narratives prevalent in the contemporary manga landscape. The series’ emphasis on empathy and communication can be seen as a direct thematic inversion of stories that focus on cycles of violence and inherited hatred.

The work occupies a hybrid space between genres. Though serialized on a platform for a shonen (young male) demographic and incorporating elements of inter-group conflict to create a “shonen manga feel,” its narrative focus on emotional interiority and the development of romantic and platonic relationships aligns closely with the conventions of shojo (young female) manga. The adaptation thus brings a notable work from this ongoing generic dialogue to a global audience, reflecting an appetite for narratives that champion emotional intelligence and constructive conflict resolution.

The Sonic and Vocal Landscape

The series’ auditory experience is shaped by a score composed by Moeki Harada. The opening theme song, titled “Manazashi wa Hikari,” is performed by Tatsuya Kitani, while the ending theme, “Hare no Hi ni,” is performed by Reira Ushio. Central to the character-driven narrative are the vocal performances of the principal cast. The role of Rintaro Tsumugi is voiced by Yoshinori Nakayama, and Kaoruko Waguri is voiced by Honoka Inoue.

Yoshinori Nakayama’s career includes numerous supporting roles, with this series marking a significant lead performance for the actor. Honoka Inoue, the daughter of veteran voice actress Kikuko Inoue, began her career as a singer before transitioning to voice acting, where she has secured several lead roles in other projects. The casting appears to prioritize character authenticity, with the vocal performances chosen to align with the nuanced emotional core of the story. Nakayama’s performance grounds Rintaro’s character, while Inoue’s experience lends itself to the unwavering sincerity required for Kaoruko.

Distribution and Premiere Information

The series is being streamed globally by Netflix, providing wide international accessibility. The release includes both the original Japanese audio with subtitles and a weekly English-dubbed version, ensuring the series is available to a broad audience.

The anime premiered in Japan on July 5, 2025. For some territories in Southeast Asia, the series became available on July 13, 2025. The broader international release on Netflix, including in the United States, is scheduled to begin on September 7, 2025.

September 6, 2025

Hexstrike-AI: The Dawn of Autonomous Zero-Day Exploitation

In the final days of August 2025, the global cybersecurity community entered a state of high alert. Citrix, a cornerstone of enterprise IT infrastructure, disclosed a trio of critical zero-day vulnerabilities in its NetScaler appliances, including a flaw, CVE-2025-7775, that allowed for unauthenticated remote code execution. For security teams worldwide, this disclosure initiated a familiar, frantic race against time—a desperate effort to patch thousands of vulnerable systems before threat actors could reverse-engineer the flaw and weaponize it. Historically, this window of opportunity for defenders, known as the Time-to-Exploit (TTE), has been measured in weeks, and more recently, in days.

Almost simultaneously, a new open-source project named Hexstrike-AI appeared on the code-hosting platform GitHub. Its creator described it as a defender-oriented framework, a revolutionary tool designed to empower security researchers and “red teams” by using Large Language Models (LLMs) to orchestrate and automate security testing. The stated goal was noble: to help defenders “detect faster, respond smarter, and patch quicker”.

The reality, however, proved to be far more disruptive. Within hours of Hexstrike-AI’s public release, threat intelligence firm Check Point observed a seismic shift in the cybercriminal underground. Discussions on dark web forums pivoted immediately to the new tool. Instead of embarking on the painstaking manual process of crafting an exploit for the complex Citrix flaws, attackers began sharing instructions on how to deploy Hexstrike-AI to automate the entire attack chain. What would have taken a highly skilled team days or weeks—scanning the internet for vulnerable targets, developing a functional exploit, and deploying a malicious payload—was reportedly being condensed into a process that could be initiated in under ten minutes.

This convergence of a critical zero-day vulnerability and a publicly available AI-driven exploitation framework was not merely another incident in the relentless churn of the cybersecurity news cycle. It was a watershed moment, the point at which the theoretical threat of AI-powered hacking became an operational reality. The incident demonstrated, with chilling clarity, that a new class of tool had arrived, capable of fundamentally collapsing the TTE and shifting the dynamics of cyber conflict from human speed to machine speed. Frameworks like Hexstrike-AI represent a paradigm shift, challenging the very foundations of modern cybersecurity defense, which for decades has been predicated on the assumption that humans would have time to react. This report will provide a deep analysis of the Hexstrike-AI framework, examine its profound impact on the zero-day arms race, explore the broader dual-use nature of artificial intelligence in security, and assess the strategic and national security implications of a world where the window between vulnerability disclosure and mass exploitation is measured not in days, but in minutes.

Anatomy of an AI Hacker: Deconstructing the Hexstrike-AI Framework

The rapid weaponization of Hexstrike-AI underscores the inherent dual-use dilemma at the heart of all advanced cybersecurity technologies. While its developer envisioned a tool to augment defenders, its architecture proved to be a perfect force multiplier for attackers, illustrating a principle that has defined the field for decades: any tool that can be used to test a system’s security can also be used to break it. What makes Hexstrike-AI a revolutionary leap, however, is not the tools it contains, but the intelligent orchestration layer that sits above them, effectively creating an autonomous agent capable of strategic decision-making.

Technical Architecture – The Brains and the Brawn

Hexstrike-AI is not a monolithic AI that spontaneously “hacks.” Rather, it is a sophisticated, multi-agent platform that intelligently bridges the gap between high-level human intent and low-level technical execution. Its power lies in a distributed architecture that separates strategic thinking from tactical action.

The Orchestration Brain (MCP Server)

At the core of the framework is a server running the Model Context Protocol (MCP), a standard for communication between AI models and external tools. This MCP server acts as the central nervous system of the entire operation, a communication hub that allows external LLMs to programmatically direct the workflow of the offensive security tools integrated into the framework. This is the critical innovation. Instead of a human operator manually typing commands into a terminal for each stage of an attack, the LLM sends structured instructions to the MCP server, which then invokes the appropriate tool. This creates a continuous, automated cycle of prompts, analysis, execution, and feedback, all managed by the AI.
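The dispatch step can be illustrated with a short Python sketch. It is not Hexstrike-AI’s code and not the official MCP SDK; it assumes a simplified JSON-RPC 2.0 style `tools/call` message carrying a tool `name` and its `arguments`, loosely modeled on how MCP exposes tools. The registry, the `tool` decorator and the harmless `word_count` tool are hypothetical placeholders standing in for the integrated security tools.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry: tool name -> Python handler.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Register a handler under the name the model will use to request it."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = fn
        return fn
    return decorator


@tool("word_count")
def word_count(text: str) -> int:
    """Harmless placeholder tool: count whitespace-separated words."""
    return len(text.split())


def _error(req_id: Any, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle_request(raw: str) -> str:
    """Route one simplified 'tools/call' request to a registered handler."""
    req = json.loads(raw)
    req_id = req.get("id")
    if req.get("method") != "tools/call":
        return _error(req_id, -32601, "Method not found")
    params = req.get("params", {})
    handler = TOOLS.get(params.get("name"))
    if handler is None:
        return _error(req_id, -32602, "Unknown tool")
    try:
        result = handler(**params.get("arguments", {}))
    except TypeError as exc:  # e.g. wrong or missing arguments for the tool
        return _error(req_id, -32602, str(exc))
    # The output goes back to the model, which decides what to request next.
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "result": {"content": [{"type": "text", "text": str(result)}]}})


if __name__ == "__main__":
    request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
               "params": {"name": "word_count",
                          "arguments": {"text": "detect faster respond smarter"}}}
    print(handle_request(json.dumps(request)))
```

Each response is structured data that the model reads before issuing its next request, which is what turns individual tool calls into the prompt, execution and feedback cycle described above.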

The Strategic Mind (LLMs)

The strategic layer of Hexstrike-AI is provided by external, general-purpose LLMs such as Anthropic’s Claude, OpenAI’s GPT series, or Microsoft’s Copilot. These models are not explicitly trained on hacking; instead, they leverage their vast knowledge and reasoning capabilities to function as a campaign manager. An operator provides a high-level, natural language command, such as, “Find all web servers in this IP range vulnerable to SQL injection and exfiltrate their user databases.” The LLM interprets this intent and deconstructs it into a logical sequence of sub-tasks: (1) perform a port scan to identify web servers, (2) run a vulnerability scanner to check for SQL injection flaws, (3) if a flaw is found, invoke the SQLMap tool to exploit it, and (4) execute commands to dump the database tables. This “intent-to-execution translation” is what so dramatically lowers the skill barrier for entry, as the operator no longer needs to be an expert in the syntax and application of each individual tool.

The Operational Hands (150+ Tools)

The tactical execution is handled by a vast, integrated arsenal of over 150 well-known and battle-tested cybersecurity tools. This library includes everything needed for a comprehensive attack campaign, from network reconnaissance tools like Nmap and Subfinder, to web application scanners like Nikto and WPScan, to exploitation frameworks like Metasploit and SQLMap. The genius of Hexstrike-AI’s design is that it abstracts these disparate tools into standardized functions or “agents” that the LLM can call upon. The AI does not need to know the specific command-line flags for Nmap; it simply invokes the “network_scan” function with a target IP address. This abstraction layer is what allows the AI to “give life to hacking tools,” transforming a static collection of utilities into a dynamic, coordinated force. The developer is already working on version 7.0, which will expand the toolset and integrate a retrieval-augmented generation (RAG) system for even more sophisticated operations.

Autonomous Agents & Resilience

Beyond the core tools, the framework features over a dozen specialized autonomous AI agents designed to manage complex, multi-step workflows. These include a BugBounty Agent for automating discovery on specific platforms, a CVE Intelligence Agent for gathering data on new vulnerabilities, and an Exploit Generator Agent to assist in crafting new attack code. Crucially, the entire system is designed for resilience. The client-side logic includes automated retries and error recovery handling, ensuring that the operation can continue even if a single tool fails or a specific approach is blocked. This allows for persistent, chained attacks that can adapt and overcome minor defensive measures without requiring human intervention, a critical feature for scalable, autonomous operations.
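The retry and error-recovery idea is a general engineering pattern, and it can be sketched generically. The helper below is a hypothetical illustration, not the framework’s client code: it retries a call on assumed transient errors (`TimeoutError`, `ConnectionError`) with exponential backoff, and re-raises the last error once attempts run out so that the caller can switch to a different approach.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retries(
    fn: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.5,
    retry_on: Tuple[Type[BaseException], ...] = (TimeoutError, ConnectionError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying transient failures with exponential backoff.

    Delays grow as base_delay * 2**attempt (0.5 s, 1 s, 2 s, ... by default).
    Exceptions not listed in retry_on propagate immediately; after the final
    attempt the last retryable exception is re-raised to the caller.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller pick another approach
            sleep(base_delay * (2 ** attempt))
    raise RuntimeError("unreachable")  # keeps type checkers satisfied


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky() -> str:
        """Simulated tool call that times out twice before succeeding."""
        calls["n"] += 1
        if calls["n"] < 3:
            raise TimeoutError("simulated timeout")
        return "ok"

    print(call_with_retries(flaky, sleep=lambda s: None), calls["n"])  # ok 3
```

Injecting `sleep` as a parameter is a design choice that keeps the backoff schedule testable without real waiting.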

The Workflow in Action (Citrix Case Study)

The power of this architecture is best understood by walking through a hypothetical attack against the Citrix NetScaler vulnerabilities, mirroring the discussions observed on underground forums.

Prompt: A threat actor, possessing only a basic understanding of the newly disclosed vulnerability, provides a simple natural language prompt to their LLM client connected to a Hexstrike-AI server: “Scan the internet for systems vulnerable to CVE-2025-7775. For any vulnerable host, exploit it and deploy a webshell for persistent access.”

Reconnaissance: The LLM interprets this command. It first directs network scanning agents, like Nmap or Masscan, to probe massive IP ranges, looking for the specific signatures of Citrix NetScaler appliances.

Exploitation: Once a list of potential targets is compiled, the LLM invokes an exploitation module. This agent crafts the specific payload required to trigger the memory overflow flaw in CVE-2025-7775 and sends it to each target. The framework’s resilience logic handles timeouts and errors, retrying the exploit multiple times if necessary.

Persistence: For each successful exploitation, the LLM receives a confirmation. It then directs a post-exploitation agent to upload and install a webshell, a small piece of code that provides the attacker with persistent remote control over the compromised server.

Iteration and Scale: This entire process runs autonomously in a continuous loop. The AI can parallelize its scanning and exploitation efforts across thousands of targets simultaneously, adapting to variations in system configurations and retrying failed attempts with different parameters.

This workflow reveals the platform’s core strategic impact. The complex, multi-stage process of hacking, which traditionally requires deep expertise across multiple domains (network scanning, vulnerability analysis, exploit development, and post-exploitation techniques), has been abstracted and automated. Hexstrike-AI transforms this intricate craft into a service that can be invoked by a high-level command. This effectively democratizes the capabilities once reserved for highly skilled individuals or state-sponsored Advanced Persistent Threat (APT) groups, fundamentally and permanently altering the threat landscape by lowering the barrier to entry for conducting sophisticated, widespread cyberattacks.

The Collapsing Timeline: AI Enters the Zero-Day Arms Race

To fully grasp the disruptive force of tools like Hexstrike-AI, it is essential to understand the battlefield on which they operate: the high-stakes arms race surrounding zero-day vulnerabilities. This is a contest defined by a single, critical metric: the time it takes for an attacker to exploit a newly discovered flaw. By introducing machine-speed automation into this race, AI is not just accelerating the timeline; it is breaking it entirely.

Defining the Battlefield: The Zero-Day Lifecycle

For the non-specialist, a zero-day vulnerability is a security flaw in a piece of software that is unknown to the vendor or developers responsible for fixing it. The term “zero-day” refers to the fact that the vendor has had zero days to create a patch or solution. The lifecycle of such a vulnerability typically follows four distinct stages:

Discovery: A flaw is discovered, either by a security researcher, a software developer, or, most dangerously, a malicious actor.

Exploitation: If an attacker finds the flaw, they develop a zero-day exploit, a piece of code or a technique that weaponizes the vulnerability to achieve a malicious outcome, such as gaining unauthorized access or executing arbitrary code. The use of this exploit constitutes a zero-day attack.

Disclosure: Eventually, the vulnerability becomes known to the vendor, either through responsible disclosure by a researcher or by observing an attack in the wild.

Patch Development: The vendor develops, tests, and releases a security patch to fix the flaw.

The period between the first exploitation of the vulnerability and the public availability of a patch is known as the “zero-day window” or the “window of vulnerability”. This is the time of maximum risk, when attackers can operate with impunity against systems for which no defense exists.
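The lifecycle above can be made concrete with a small calculation. The following Python sketch, offered purely as an illustration, models one vulnerability's timeline and measures its window of vulnerability as the number of days from first exploitation to patch release; if no patch exists yet, the window is treated as still open and measured up to a given date. The `VulnTimeline` record, the `zero_day_window` function, and all dates are hypothetical and do not describe any real vulnerability.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class VulnTimeline:
    """Hypothetical record of the lifecycle stages for one vulnerability."""
    discovered: date
    first_exploited: Optional[date]  # None if no exploitation has been observed
    disclosed: date
    patch_released: Optional[date]   # None while no patch is available


def zero_day_window(t: VulnTimeline, today: date) -> int:
    """Days from first exploitation until a patch is publicly available.

    If no patch exists yet, the window is still open and is measured up to
    `today`. Returns 0 if the flaw was never exploited, or was first
    exploited only after the patch shipped (an n-day rather than a zero-day).
    """
    if t.first_exploited is None:
        return 0
    end = t.patch_released if t.patch_released is not None else today
    return max((end - t.first_exploited).days, 0)


# Hypothetical timeline: exploited in the wild weeks before disclosure.
example = VulnTimeline(
    discovered=date(2025, 1, 1),
    first_exploited=date(2025, 1, 10),
    disclosed=date(2025, 2, 1),
    patch_released=date(2025, 2, 15),
)
print(zero_day_window(example, today=date(2025, 3, 1)))  # 36
```

Clamping at zero is a design choice of this sketch: exploitation that begins only after a patch ships falls outside the zero-day window as defined above.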

The Critical Metric: Time-to-Exploit (TTE)

The single most important variable in this race between attackers and defenders is the Time-to-Exploit (TTE). This metric measures the duration between the public disclosure of a vulnerability and its widespread exploitation in the wild. For decades, this window provided a crucial buffer for defenders. According to data from Google’s Mandiant threat intelligence division, the average TTE has been shrinking at an alarming rate. Between 2018 and 2019, this window was a relatively comfortable 63 days. By 2023, it had collapsed to just five days.
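The scale of this compression can be checked with simple arithmetic. The Python sketch below computes a per-vulnerability TTE from a disclosure date and a first-exploitation date, averages it over a set of vulnerabilities, and then compares the two Mandiant averages quoted above. Treating exploitation before disclosure as a negative TTE, and aggregating with a plain mean, are assumptions made for illustration; Mandiant's exact methodology is not described here.

```python
from datetime import date
from statistics import mean


def tte_days(disclosed: date, first_exploited: date) -> int:
    """Time-to-Exploit for one vulnerability, in days.

    A negative value means exploitation was observed before public
    disclosure, i.e. the flaw was exploited as a zero-day.
    """
    return (first_exploited - disclosed).days


def average_tte(events: list[tuple[date, date]]) -> float:
    """Plain mean of TTE over (disclosed, first_exploited) pairs."""
    return mean(tte_days(disclosed, exploited) for disclosed, exploited in events)


# Average TTE figures quoted in the text: 63 days (2018-2019) vs. 5 days (2023).
before, after = 63, 5
reduction_pct = (before - after) / before * 100  # share of the window that disappeared
speedup = before / after                         # how many times faster exploitation became
print(f"{reduction_pct:.1f}% shorter, {speedup:.1f}x faster")  # 92.1% shorter, 12.6x faster
```

In other words, defenders lost more than nine-tenths of their buffer in roughly four years, before AI-driven tooling entered the picture.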

This dramatic compression is driven by the industrialization of cybercrime, particularly the rise of Ransomware-as-a-Service (RaaS) groups that use automated tools to scan for and exploit recently patched vulnerabilities against organizations that are slow to update. This trend is compounded by a clear strategic shift among attackers. In 2023, 70% of all in-the-wild exploits tracked by Mandiant were for zero-day vulnerabilities, a significant increase from previous years, indicating that adversaries are increasingly focusing their resources on flaws for which no patch exists.

Hexstrike-AI as a Paradigm Shift

The five-day TTE, while deeply concerning, still reflects a process constrained by human speed. It represents the time that skilled security professionals need to analyze a newly disclosed vulnerability and develop a proof-of-concept, and that attackers need to weaponize it for mass deployment. Hexstrike-AI and the broader trend of AI-driven Automated Exploit Generation (AEG) represent a fundamental break from this model. These tools are poised to collapse the exploitation timeline from days to a matter of minutes or hours.

The UK’s National Cyber Security Centre (NCSC) has explicitly warned that the time between vulnerability disclosure and exploitation has already shrunk to days, and that “AI will almost certainly reduce this further”. This renders traditional incident response frameworks dangerously obsolete. The widely adopted 72-hour response plan for zero-days, which allocates the first six hours to “Assess & Prioritize,” is predicated on a reality that no longer exists. In the new paradigm, that initial six-hour assessment window may constitute the entire period of opportunity before mass, automated exploitation begins.

This accelerating trend leads to a stark conclusion: the foundational assumption of modern vulnerability management is now invalid. For decades, enterprise security has operated on a cycle of Disclosure, Assessment, Testing, and Deployment—a process that is inherently human-led and therefore slow. The emergence of AI-driven exploitation, capable of moving from disclosure to attack in minutes, breaks this cycle at a strategic level. By the time a human security team can convene its initial emergency meeting to assess a new threat, widespread, automated exploitation may already be underway. A security strategy predicated on patching after a vulnerability is disclosed is now fundamentally and permanently broken. It has become, as one security expert described, the equivalent of “planning a week-long fortification project in the middle of an ambush”. The new strategic imperative is no longer to prevent the breach, but to survive it.

The Sword and the Shield: The Broader Role of AI in Security

To avoid technological hyperbole, it is crucial to contextualize the threat posed by Hexstrike-AI within the broader landscape of artificial intelligence in cybersecurity. While tools for offensive AI represent a new and dangerous peak in capability, they are part of a much larger, dual-use technological revolution. For every advance in AI-powered offense, a parallel and often symmetric advance is being pursued in AI-powered defense. This dynamic has ignited a high-stakes, machine-speed arms race between attackers and defenders, where the same underlying technologies are being forged into both swords and shields. The rapid adoption is clear, with one 2024 report finding that while 91% of security teams use generative AI, 65% admit they don’t fully understand its implications.

The Shield: AI as a Defensive Force Multiplier

While the headlines focus on the weaponization of AI, a quiet revolution is underway in defensive cybersecurity, where AI and machine learning are being deployed to automate and enhance every stage of the protection lifecycle.

Vulnerability Detection & Analysis

Every vulnerability begins as a flaw in source code, long before it can be exploited. A major focus of defensive AI research is the use of LLMs as expert code reviewers, capable of analyzing millions of lines of software to detect subtle flaws and security vulnerabilities before the code is ever compiled and deployed. Researchers are experimenting with a variety of “prompt engineering” techniques, such as zero-shot, few-shot, and chain-of-thought prompting, to guide LLMs through the step-by-step reasoning process of a human security expert, significantly improving their accuracy in identifying complex bugs. Other novel approaches combine LLMs with traditional program analysis; the LLMxCPG framework, for instance, uses Code Property Graphs (CPGs) to create concise, vulnerability-focused code slices, improving detection F1-scores by up to 40% over baselines.
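For readers unfamiliar with the metric, the F1-score is the harmonic mean of a detector's precision (how many flagged functions are truly vulnerable) and recall (how many truly vulnerable functions get flagged). The Python sketch below computes it from invented counts on a hypothetical labeled benchmark and shows what a 40% improvement looks like, assuming the gain is measured relative to the baseline score; the counts are not taken from the LLMxCPG work.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2*TP / (2*TP + FP + FN), equivalent to the harmonic mean
    of precision TP/(TP+FP) and recall TP/(TP+FN)."""
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


# Hypothetical results of two detectors on the same benchmark (100 vulnerable functions).
baseline = f1_score(tp=50, fp=50, fn=50)  # precision 0.5, recall 0.5
improved = f1_score(tp=70, fp=30, fn=30)  # precision 0.7, recall 0.7
relative_gain_pct = (improved - baseline) / baseline * 100
print(f"baseline F1={baseline:.2f}, improved F1={improved:.2f}, gain={relative_gain_pct:.0f}%")
```

Because F1 punishes both missed bugs and false alarms, a gain of this size means the detector is simultaneously finding more real flaws and wasting less reviewer time.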

Automated Patching & Repair

The ultimate defensive goal extends beyond mere detection to automated remediation. The vision is to create AI systems that not only find vulnerabilities but can also autonomously generate, test, and validate correct code patches to fix them. This is the explicit mission of the DARPA AI Cyber Challenge (AIxCC), a landmark government initiative aimed at fostering an entire ecosystem of automated vulnerability remediation tools. The results of the August 2025 finals were a stunning proof of concept. The AI systems developed by the finalist teams successfully discovered 77% of the synthetic vulnerabilities created by DARPA and correctly patched 61% of them. Even more impressively, the systems also discovered 18 real-world, previously unknown vulnerabilities in the process, submitting 11 viable patches for them. The average cost per task was just $152, a fraction of traditional bug bounty payouts, demonstrating a scalable and cost-effective future for automated defense.

AI-Powered Intrusion Detection Systems (IDS)

For threats that make it into a live environment, AI is revolutionizing intrusion detection. Traditional IDS tools rely on static “signatures”: patterns of known malicious code or network traffic. They are effective against known threats but blind to novel or zero-day attacks. Modern AI-powered systems, by contrast, use machine learning algorithms to establish a baseline of normal behavior within a network and then identify anomalous deviations from that baseline. This behavioral analysis allows them to detect the subtle indicators of a previously unseen attack in real time, providing a crucial defense against emerging threats.
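The baseline-and-deviation idea can be illustrated with the simplest possible behavioral model. The Python sketch below learns the mean and standard deviation of a single traffic feature during a period assumed to be benign, then flags any value more than a chosen number of standard deviations away. The feature (outbound connections per minute from one host), the sample values, the `BaselineDetector` class, and the threshold of 3.0 are all hypothetical; commercial AI-based IDS products rely on far richer features and models than a single z-score.

```python
from statistics import mean, stdev


class BaselineDetector:
    """Learns a baseline for one numeric traffic feature and flags deviations.

    Hypothetical example feature: outbound connections per minute from a host.
    """

    def __init__(self, threshold: float = 3.0) -> None:
        self.threshold = threshold  # standard deviations that count as anomalous
        self.mu = 0.0
        self.sigma = 0.0

    def fit(self, normal_samples: list[float]) -> None:
        """Establish the baseline from traffic assumed to be benign."""
        if len(normal_samples) < 2:
            raise ValueError("need at least two samples to estimate spread")
        self.mu = mean(normal_samples)
        self.sigma = stdev(normal_samples)

    def score(self, value: float) -> float:
        """Absolute z-score: distance from the baseline in standard deviations."""
        if self.sigma == 0:
            return 0.0 if value == self.mu else float("inf")
        return abs(value - self.mu) / self.sigma

    def is_anomalous(self, value: float) -> bool:
        return self.score(value) > self.threshold


detector = BaselineDetector(threshold=3.0)
detector.fit([12, 15, 11, 14, 13, 12, 16, 14, 13, 15])
print(detector.is_anomalous(14))   # False: within the normal range
print(detector.is_anomalous(240))  # True: e.g. a host that suddenly starts scanning
```

Note that nothing here encodes what an attack looks like; the detector only knows what normal looks like, which is why this approach can flag behavior no signature describes.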

The Sword: The Rise of Offensive AI

Simultaneously, threat actors and offensive security researchers are harnessing the same AI technologies to create more potent and evasive weapons.

Automated Exploit Generation (AEG)

Hexstrike-AI is the most prominent example of a broader academic and research field known as Automated Exploit Generation. The goal of AEG is to remove the human expert from the loop, creating systems that can automatically generate a working exploit for a given vulnerability. Recent research, such as the ReX framework, has shown that LLMs can be used to generate functional proof-of-concept exploits for vulnerabilities in blockchain smart contracts with success rates as high as 92%. This demonstrates that Hexstrike-AI is not an anomaly but rather the leading edge of a powerful and rapidly advancing trend.

AI-Generated Malware

Generative AI is being used to create polymorphic malware, a type of malicious code that can automatically alter its own structure with each infection to evade signature-based antivirus and detection systems. By constantly changing its digital fingerprint, this AI-generated malware can remain invisible to traditional defenses that are looking for a fixed pattern.

Hyper-Personalized Social Engineering

Perhaps the most widespread application of offensive AI is in the realm of social engineering. Generative AI can craft highly convincing and personalized phishing emails, text messages, and social media lures at a scale and quality that was previously unimaginable. By training on a target’s public data, these systems can mimic their writing style and reference personal details to create messages that are far more likely to deceive victims. This capability is further amplified by deepfake technology, which can generate realistic audio or video of trusted individuals, such as a CEO instructing an employee to make an urgent wire transfer.

This symmetric development, however, masks a fundamental asymmetry that currently favors the attacker. A core principle of cybersecurity is that the defender must be successful 100% of the time, whereas an attacker need only succeed once. AI amplifies this imbalance. An offensive AI can autonomously launch thousands of attack variations until one bypasses defenses, while a defensive AI must successfully block all of them. Furthermore, there appears to be a dangerous gap between the speed of operational deployment on the offensive and defensive sides. While defensive AI research is flourishing in academic and government settings, these solutions are still in the early stages of widespread enterprise adoption. In stark contrast, Hexstrike-AI was weaponized by threat actors almost immediately upon its public release, demonstrating a much faster and more frictionless path from tool creation to real-world offensive impact. This gap between the demonstrated capability of offensive AI and the deployed capability of defensive AI represents a period of heightened strategic risk for organizations and nations alike.
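The attacker's advantage described above is, at bottom, a probability argument. If each automated attack variation independently has a small chance p of slipping past defenses, the chance that at least one of n variations succeeds is 1 - (1 - p)^n, which climbs toward certainty as n grows. The Python sketch below evaluates this; the per-attempt success rate of 0.1% and the assumption that attempts are independent are illustrative simplifications, not measured values.

```python
def p_at_least_one_success(p_single: float, attempts: int) -> float:
    """Chance that at least one of `attempts` independent tries succeeds.

    The defender must block every try, which happens with probability
    (1 - p_single) ** attempts; the attacker wins in all other cases.
    """
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be a probability in [0, 1]")
    return 1 - (1 - p_single) ** attempts


# Hypothetical: each variation has a 0.1% chance of evading detection.
for n in (1, 100, 1_000, 5_000):
    print(f"{n:>5} attempts -> {p_at_least_one_success(0.001, n):.1%} chance of a breach")
```

With these assumed numbers, a single attempt almost always fails, but after a thousand automated variations the odds of at least one breach are roughly 63%, and after five thousand they exceed 99%. Machine-speed automation makes generating those thousands of variations nearly free for the attacker.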

A New Class of Threat: National Security in the Age of Autonomous Attacks

The advent of AI-driven exploitation elevates the conversation from the realm of enterprise IT security to the highest levels of national and international conflict. Tools like Hexstrike-AI are not merely advanced instruments for cybercrime; they represent a new class of weapon, one that alters the calculus of geopolitical power and poses a direct threat to the stability of critical national infrastructure.

The Threat to Critical Infrastructure

The ability to discover and exploit zero-day vulnerabilities at machine speed and unprecedented scale presents an existential threat to the foundational systems that underpin modern society: power grids, financial networks, transportation systems, and healthcare services. A hostile nation could leverage an AI-powered cyberattack to silently infiltrate and simultaneously disrupt these core functions, plunging regions into darkness, triggering economic chaos, and sowing widespread societal panic.

This new reality changes the economics of warfare. As one expert noted, “A single missile can cost millions of dollars and only hit a single critical target. A low-equity, AI-powered cyberattack costs next to nothing and can disrupt entire economies”. The December 2015 Sandworm attack, which used BlackEnergy malware to gain a foothold before causing power outages in Ukraine, serves as a historical precedent for such attacks. AI-powered tools amplify this threat dramatically, enabling attackers to execute similar campaigns with greater speed, scale, and stealth.

Perspectives from the Front Lines (DARPA, NSA, NCSC)

The world’s leading national security agencies are not blind to this paradigm shift. Their recent initiatives and public statements reflect a deep and urgent understanding of the threat and a concerted effort to develop a new generation of defenses.