Michael J. Behe's Blog, page 496

April 14, 2019

Artificial intelligence is not dangerous. Only natural intelligence is dangerous

Natural intelligence, for example, seeks to dominate by abolishing privacy:

AI will not become conscious. We don’t even know what consciousness is, in any scientific sense, let alone how to produce it. Nor will AI take over the world. Lacking a conscious self, an entity could not have a personal goal at all, let alone a grandiose one.

So now we come to the true threat posed by AI: it greatly reduces the costs and risks of mass surveillance and manipulation. Some human beings are quite sure that the world would be a better place if they knew more about our business and policed it better. Mass snooping creeps up unnoticed and becomes a way of life. Then it explodes. Denyse O’Leary, “Big Artificial Brother” at Salvo 48

There is a huge media pundit industry anxious to persuade us that machines will come to think like people when the actual concern should be quite the opposite… people will come to think like machines and won’t see through their pretensions. See, for example, A Short Argument Against the Materialist Account of the Mind.

Follow UD News at Twitter!

See also: Your phone is selling your secrets: You’d be shocked to know what it tells people who want your money

and

Your phone knows everything now. And it is talking.

Copyright © 2019 Uncommon Descent . This Feed is for personal non-commercial use only. If you are not reading this material in your news aggregator, the site you are looking at is guilty of copyright infringement UNLESS EXPLICIT PERMISSION OTHERWISE HAS BEEN GIVEN. Please contact legal@uncommondescent.com so we can take legal action immediately.

Plugin by Taragana

From The Poached Egg: How Darwinism dumbs us down

From Nancy Pearcey:

To understand how Darwinism undercuts the very concept of rationality, we can think back to the late nineteenth century when the theory first arrived on American shores. Almost immediately, it was welcomed by a group of thinkers who began to work out its implications far beyond science. They realized that Darwinism implies a broader philosophy of naturalism (i.e., that nature is all that exists, and that natural causes are adequate to explain all phenomena). Thus they began applying a naturalistic worldview across the board–in philosophy, psychology, the law, education, and the arts…

In a famous essay called “The Influence of Darwin on Philosophy,” Dewey said Darwinism leads to a “new logic to apply to mind and morals and life.” In this new evolutionary logic, ideas are not judged by a transcendent standard of Truth, but by how they work in getting us what we want. Ideas do not “reflect reality” but only serve human interests. Nancy Pearcey, “How Darwinism Dumbs Us Down” at The Poached Egg

Indeed. And then it falls apart because, obviously, those are ideas too. Then we can’t be sure that we are conscious but our coffee mug is not.

See also: Panpsychism: You are conscious but so is your coffee mug

and

How can consciousness be a material thing? A surprising implication of Darwinism and materialism. Materialist philosophers face starkly limited choices in how to view consciousness. In analytical philosopher Galen Strawson’s opinion, our childhood memories of pancakes on Saturday, for example, are, and must be, “wholly physical.”

Video: How Quantum Mechanics and Consciousness Correlate

From Philip Cunningham:

In this present video I would like to further refine and expand on the argument that I made in the “Albert Einstein vs. Quantum Mechanics and His Own Mind” video with more recent experimental evidence from quantum mechanics, and thus to further strengthen the case that the present experimental evidence that we now have from quantum mechanics strongly supports a Mind First and/or a Theistic view of reality, and even, when combined with other scientific evidences that we now have, strongly supports the Christian view of reality in particular.

In order to accomplish this task I must first define some properties of immaterial mind which are irreconcilable with the reductive materialistic view of mind that Darwinists hold to be true.

Dr. Michael Egnor, who is a neurosurgeon as well as professor of neurosurgery at the State University of New York, Stony Brook, states six properties of immaterial mind that are irreconcilable to the view that the mind is just the material brain. Those six properties are, “Intentionality,,, Qualia,,, Persistence of Self-Identity,,, Restricted Access,,, Incorrigibility,,, Free Will,,,” More.

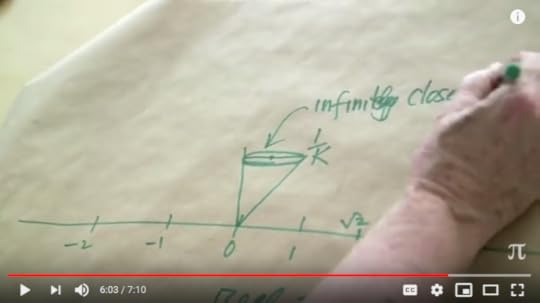

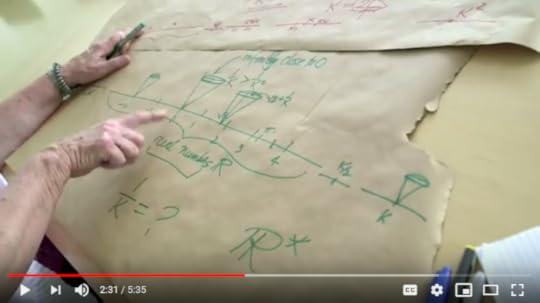

Logic & First Principles, 17: Pondering the Hyperreals *R with Prof Carol Wood (including Infinitesimals)

Dr Carol Wood of Wesleyan University (a student of Abraham Robinson who pioneered non-standard analysis 50+ years ago) has discussed the hyperreals in two Numberphile videos:

Wenmackers may also be helpful:

In effect, using Model Theory (thus a fair amount of protective hedging!) or other approaches, one may propose an “extension” of the Naturals and the Reals, often N* or R* — but we will use *N and *R as that is more conveniently “hyper-“.

Such a new logic model world, the hyperreals, gives us a way to handle transfinites in a way that is intimately connected to the Reals (with Naturals as regular “mileposts”). As one effect, by extending the zone we here circumvent the question of whether there are infinitely many naturals, all of which are finite. So now, which version of the counting numbers are we “really” speaking of, N or *N? In the latter, we may freely discuss a K that exceeds any particular k in N reached stepwise from 0. That is, any such k = 1 + 1 + . . . + 1, with k ones added, and is followed by k+1 etc.

And BTW, the question of what a countable infinite means thus surfaces: beyond any finite bound. That is, for any finite k we represent in N, no matter how large, we may succeed it, k+1, k+2 etc, and in effect we may shift a tape starting 0,1,2 etc up to k, k+1, k+2 etc and see that the two “tapes” continue without end in 1:1 correspondence:
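The shifted-tape correspondence just described can be written out explicitly. This is only a minimal sketch in standard notation; the name s_k for the shift map is an assumption introduced here for illustration and is not notation from the original discussion.

```latex
% s_k is a hypothetical name for the "tape shift" by a fixed finite k in N.
\[
  s_k : \{0, 1, 2, \dots\} \to \{k, k+1, k+2, \dots\}, \qquad s_k(n) = n + k .
\]
% The two tapes, paired entry by entry:
\[
  \begin{array}{ccccc}
    0 & 1 & 2 & \cdots & n \;\cdots \\
    \updownarrow & \updownarrow & \updownarrow & & \updownarrow \\
    k & k+1 & k+2 & \cdots & k+n \;\cdots
  \end{array}
\]
% s_k is injective (n + k = n' + k forces n = n') and surjective onto the
% shifted tape (every j >= k equals s_k(j - k)), so it is a 1:1 correspondence.
```

However large the finite k is chosen, the pairing never runs out on either tape, which is the sense of "beyond any finite bound" used here.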

Extending, in a quasi-Peano universe, we can identify some K > any n in N approached by stepwise progression from 0. Where of course Q and R are interwoven with N, giving us the familiar reals as a continuum. What we will do is summarise how we may extend the idea of a continuum in interesting and useful ways — especially once we get to infinitesimals.

Clipping from the videos:

Pausing, let us refresh our memory on the structure and meaning of N considered as ordinals, courtesy the von Neumann construction:

{} –> 0

{0} –> 1

{0,1} –> 2

. . .

{0,1,2 . . . } –> w, omega, the first transfinite ordinal

Popping over to the surreals for a moment, we may see how to build a whole interconnected world of numbers great and small (and yes the hyperreals fit in there . . . from Ehrlich, as Wiki summarises: “[i]t has also been shown (in Von Neumann–Bernays–Gödel set theory) that the maximal class hyperreal field is isomorphic to the maximal class surreal field”):

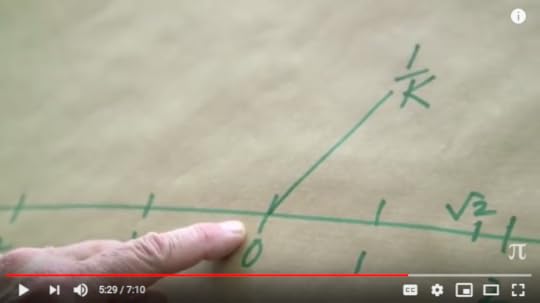

Now, we may also see in the extended R, *R, that 1/K = m, a newly minted number closer to zero than we can get by inverting any k in N (k ≥ 1) or any positive r in R; that is, m is less than 1/k for any such k in N and is less than 1/r for any positive r in R (as the reals are interwoven with the naturals):

and that as we have a range around K, K+1 etc and K-1 etc, even K/2 (an integer if K is even) m has company, forming an ultra-near cloud of similar infinitesimals in the extra-near neighbourhood of 0.

Of course, the reciprocal function is here serving as a catapult, capable of leaping over the bulk of the reals in *R, back and forth between transfinite hyperreals such as K and kin and infinitesimals such as m and kin ultra-near to 0.

Using the additive inverse approach, this extends to the negative side also.

Further, by extending addition, 0 plus its cloud (often called a Monad) can be added to any r in R or indeed K in *R, i.e. we may vector-shift the cloud anywhere in *R. That is, every number on the extended line has its own ultra-near cloud.
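As a worked illustration of how the catapult and the clouds are used, here is a sketch in the usual language of non-standard analysis. K and m are as above. The symbol st (the "standard part", which sends a finite hyperreal to the one real in whose cloud it lies), the name monad(r) for the cloud around r, and the choice of f(x) = x^2 as the example are assumptions introduced here for illustration.

```latex
% K: a positive transfinite hyperreal; m = 1/K is its reciprocal.
\[
  m = \frac{1}{K}, \qquad 0 < m < \frac{1}{k} \quad \text{for every } k \in \mathbb{N},\ k \ge 1 .
\]
% Hierarchy of orders: since 0 < m < 1, multiplying through by m gives
\[
  0 < m^2 < m ,
\]
% and the ratio m^2 / m = m is itself infinitesimal.
% The cloud (monad) around any real r, obtained by shifting the cloud at 0:
\[
  \operatorname{monad}(r) = \{\, r + \varepsilon : \varepsilon \text{ infinitesimal} \,\} .
\]
% Slope of f(x) = x^2 at a real x, using the infinitesimal increment m:
\[
  \frac{f(x+m) - f(x)}{m} = \frac{(x+m)^2 - x^2}{m} = \frac{2xm + m^2}{m} = 2x + m .
\]
% 2x + m lies in the cloud of the real number 2x, so taking the standard part
\[
  f'(x) = \operatorname{st}(2x + m) = 2x .
\]
```

The division by m is legitimate because m is not zero; the leftover m is discarded only at the last step, by passing to the nearest real.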

Where does all of this lead? First, to Calculus foundations, then to implications of a transfinite zone with stepwise succession, among other things; besides, we need concept space to think and reason about matters of relevance to ID, however remotely (or not so remotely). So, let me now clip a comment I made in the ongoing calculus notation thread of discussion:

KF, 86: >>Money shot comment by JB:

JB, 74: we have Arbogast’s D() notation that we could use, but we don’t. Why not? Because we want people to look at this like a fraction. If we didn’t, there are a ton of other ways to write the derivative. That we do it as a fraction is hugely suggestive, especially, as I mentioned, there exists a correct way to write it as a fraction.

This is pivotal: WHY do we want that ratio, that fraction?

WHY do we think in terms of a function y = f(x), which is continuous and “smooth” in a relevant domain, then take some h that somehow trends to 0 but never quite gets there (we cannot divide by zero), and evaluate:

dy/dx = lim h –> 0 of [f(x + h) – f(x)]/[(x + h) – x]

. . . save that, we are looking at the tangent value for the angle the tangent-line of the f(x) curve makes with the horizontal, taken as itself a function of x, f'(x) in Lagrange’s prime notation.

We may then consider f-prime, f'(x) as itself a function and seek its tangent-angle behaviour, getting to f''(x), the second order flow function. Then onwards.

But in all of this, we are spewing forth a veritable spate of infinitesimals and higher order infinitesimals, thus we need something that allows us to responsibly and reliably conceive of and handle them.

I suspect, the epsilon delta limits concept is more of a kludge work-around than we like to admit, a scaffolding that keeps us on safe grounds among the reals. After all, there is no one closest real to any given real, i.e. there is a continuum.

But then, is that not in turn something that implies infinitesimal, all but zero differences? Thus, numbers that are all but zero different from zero itself considered as a real? Or, should we be going all vector and considering a ring of the close in C?

In that context, I can see that it makes sense to consider some K that somehow “continues on” from the finite specific reals we can represent, let’s use lower case k, and confine ourselves to the counting numbers as mileposts on the line:

0 – 1 – 2 . . . k – k+1 – k+2 – . . . . – K – K+1 – K+2 . . .

{I used the four dot ellipsis to indicate specifically transfinite span}

We may then postulate a catapult function so 1/K –> m, where m is closer to 0 than the reciprocal 1/k of ANY finite real or natural k we can represent.

Notice, K is preceded by a dash, meaning there is a continuum back to say K/2 (–> considering K as even) and beyond, descending and passing mileposts as we go: K –> K-1 –> K-2 . . . K/2 – [K/2 – 1] etc,

but we cannot in finite successive steps bridge down to k thence to 1 and 0.

Where, of course, we can reflect in the 0 point, through posing additive inverses and we may do the rotation i*[k] to get the complex span.

Of course, all of this is to be hedged about with the usual non standard restrictions, but here is a rough first pass look at the hyperreals, with catapult between the transfinite and the infinitesimals that are all but zero. Where the latter clearly have a hierarchy such that m^2 is far closer to 0 than m.

And, this is also very close to the surreals pincer game, where after w steps we can constrict a continuum through in effect implying that a real is a power series sum that converges to a particular value, pi or e etc. then, go beyond, we are already in the domain of supertasks so just continue the logic to the transfinitely large domain, ending up with that grand class.

Coming back, DS we are here revisiting issues of three years past was it: step along mile posts back to the singularity as the zeroth stage, then beyond as conceived as a quasi-physical temporal causal domain with prior stages giving rise to successors. We may succeed in finite steps from any finitely remote -k to -1 to 0 and to some now n, but we have no warrant for descent from some [transfinite] hyperreal remote past stage – K as the descent in finite steps, unit steps, from there will never span to -k. That is, there is no warrant for a proposed transfinite quasi-physical, causal-temporal successive past of our observed cosmos and its causal antecedents.

Going back to the focus, if 0 is surrounded by an infinitesimal cloud closer than any k in R can give by taking 1/k, but which we may attain to by taking 1/K in *R, the hyperreals, then by simple vector transfer along the line, any real, r, will be similarly surrounded by such a cloud. For, (r + m) is in the extended continuum, but is closer than any (r + 1/k) can give where k is in R.

The concept, continuum is strange indeed, stranger than we can conceive of.

So, now, we may come back up to ponder the derivative.

If a valid, all but zero number or quantity exists, then — I am here exploring the logic of structure and quantity, I am not decreeing some imagined absolute conclusion as though I were omniscient and free of possibility of error — we may conceive of taking a ratio of two such quantities, called dy and dx, where this further implies an operation of approach to zero increment. The ratio dy/dx then is much as conceived and h = [(x +h) – x] is numerically dx.

But dx is at the same time a matter of an operation of difference as difference trends to zero, so it is not conceptually identical to h.

Going to the numerator, with f(x), the difference dy is again an operation but is constrained by being bound to x, we must take the increment h in x to identify the increment in f(x), i.e. the functional relationship is thus bound into the expression. This is not a free procedure.

Going to a yet higher operation, we have now identified that a flow-function f'(x) is bound to the function f(x) and to x, all playing continuum games as we move in and out by some infinitesimal order increment h as h trends to zero. Obviously, f'(x) and f''(x) can and do take definite values as f(x) also does, when x varies. So, we see operations as one aspect and we see functions as another, all bound together.

And of course the D-notation as extended also allows us to remember that operations accept pre-image functions and yield image functions. Down that road lies a different perspective on arithmetical, algebraic, analytical and many other operations including of course the vector-differential operations and energy-potential operations [Hamiltonian] that are so powerful in electromagnetism, fluid dynamics, q-mech etc.

Coming back, JB seems to be suggesting, that under x, y and other quasi-spatial variables lies another, tied to the temporal-causal domain, time. Classically, viewed as flowing somehow uniformly at a steady rate accessible all at once everywhere. dt/dt = 1 by definition. From this, we may conceive of a state space trajectory for some entity of interest p, p(x,y,z . . . t). At any given locus in the domain, we have a state and as t varies there is a trajectory. x and y etc are now dependent.

This brings out the force of JB’s onward remark to H:

if x *is* the independent variable, and there is no possibility of x being dependent on something else, then d^2x (i.e., d(d(x))) IS zero

Our simple picture breaks if x is no longer lord of all it surveys.

Ooooopsie . . .

Trouble.

As, going further, we now must reckon with spacetime and with warped spacetime due to presence of massive objects, indeed up to outright tearing the fabric at the event horizon of a black hole. Spacetime is complicated.

A space variable is now locked into a cluster of very hairy issues, with a classical limiting case. >>

The world just got a lot more complicated than hitherto we may have thought. END

PS: As one implication, let us go to Davies and Walker:

In physics, particularly in statistical mechanics, we base many of our calculations on the assumption of metric transitivity, which asserts that a system’s trajectory will eventually [–> given “enough time and search resources”] explore the entirety of its state space – thus everything that is physically possible will eventually happen. It should then be trivially true that one could choose an arbitrary “final state” (e.g., a living organism) and “explain” it by evolving the system backwards in time choosing an appropriate state at some ‘start’ time t_0 (fine-tuning the initial state). In the case of a chaotic system the initial state must be specified to arbitrarily high precision. But this account amounts to no more than saying that the world is as it is because it was as it was, and our current narrative therefore scarcely constitutes an explanation in the true scientific sense. We are left in a bit of a conundrum with respect to the problem of specifying the initial conditions necessary to explain our world. A key point is that if we require specialness in our initial state (such that we observe the current state of the world and not any other state) metric transitivity cannot hold true, as it blurs any dependency on initial conditions – that is, it makes little sense for us to single out any particular state as special by calling it the ‘initial’ state. If we instead relax the assumption of metric transitivity (which seems more realistic for many real world physical systems – including life), then our phase space will consist of isolated pocket regions and it is not necessarily possible to get to any other physically possible state (see e.g. Fig. 1 for a cellular automata example).

[–> or, there may not be “enough” time and/or resources for the relevant exploration, i.e. we see the 500 – 1,000 bit complexity threshold at work vs 10^57 – 10^80 atoms with fast rxn rates at about 10^-13 to 10^-15 s leading to inability to explore more than a vanishingly small fraction on the gamut of Sol system or observed cosmos . . . the only actually, credibly observed cosmos]

Thus the initial state must be tuned to be in the region of phase space in which we find ourselves [–> notice, fine tuning], and there are regions of the configuration space our physical universe would be excluded from accessing, even if those states may be equally consistent and permissible under the microscopic laws of physics (starting from a different initial state). Thus according to the standard picture, we require special initial conditions to explain the complexity of the world, but also have a sense that we should not be on a particularly special trajectory to get here (or anywhere else) as it would be a sign of fine-tuning of the initial conditions. [ –> notice, the “loading”] Stated most simply, a potential problem with the way we currently formulate physics is that you can’t necessarily get everywhere from anywhere (see Walker [31] for discussion).

[“The “Hard Problem” of Life,” June 23, 2016, a discussion by Sara Imari Walker and Paul C.W. Davies at Arxiv.]

Yes, cosmological fine-tuning lurks under these considerations, given where statistical mechanics points.
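The bracketed remark on search resources in the quotation above can be checked with rough arithmetic. This is only an order-of-magnitude sketch. The atom count (10^80) and fastest reaction time (10^-15 s) are the upper ends of the ranges given in that remark; the elapsed time of 10^17 s (roughly the order of the age of the observed cosmos) and the symbol N_max are assumptions added here for illustration.

```latex
% Upper bound on atomic-scale events: atoms x events per second x seconds.
% The 10^{17} s duration is an assumed round figure, not from the source.
\[
  N_{\max} \approx 10^{80} \times 10^{15}\,\mathrm{s^{-1}} \times 10^{17}\,\mathrm{s} = 10^{112} .
\]
% Size of the configuration space at the two complexity thresholds:
\[
  2^{500} \approx 3.3 \times 10^{150}, \qquad 2^{1000} \approx 1.1 \times 10^{301} .
\]
% Fraction of a 500-bit space that could be sampled under these assumptions:
\[
  \frac{N_{\max}}{2^{500}} \approx 3 \times 10^{-39} .
\]
```

Under these assumptions even the lower, 500-bit threshold leaves all but a vanishingly small fraction of the space unexplored, which is the point the bracketed remark is making.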

April 13, 2019

Why do some biologists hate theism more than physicists do?

British physicist John Polkinghorne thinks that biologists see a more disorderly universe:

I think two effects produce this hostility. One is that biologists see a much more perplexing, disorderly, and painful view of reality than is presented by the austere and beautiful order of fundamental physics. . . . There is, however, a second effect at work of much less intellectual respectability. Biology, through the unravelling of the molecular basis of genetics, has scored an impressive victory, comparable to physics’ earlier elucidation of the motions of the solar system through the operation of universal gravity. The post-Newtonian generation was intoxicated with the apparent success of universal mechanism and wrote books boldly proclaiming that man is a machine. Dan Peterson, “Why does hostility to theism tend to be more pronounced among some biologists than among physicists?” at Patheos

He thinks they’ve got another think coming. But to what extent do some biologists hate a lot of things generally? Consider, for example, “PZ Myers blows off attack on Bret Weinstein” or “Priceless: A Plea To Son With Down Syndrome To Be Tolerant Of Dawkins.” These guys’ world probably isn’t that much messier than ours; it’s more a question of focus in some cases.

The top of the comment stream features Michael Behe.

New free speech rules won’t change much at US Universities, says campus politics expert

First Amendment defenders will welcome the new rules that tie government money to academic freedom but anyone ringing a cowbell can drown out a speaker and craven administrators would have little incentive to take the risk of stopping them:

We can encourage our children and grandchildren to stand up against the stifling political conformity and let them know it’s okay if they’re penalized for their principled stand.

As I told my own daughter in a letter when she started college, “Most of your professors will be excellent and you will gain a priceless education from attending college. But a few of your professors will be militant, intolerant disasters, yet they will be ostensibly intelligent and far more articulate than you. So what should you do? …

That’s okay, I told her: “Your mother and I will accept, with pride, your D if it was given as political punishment by a bad apple because you remained principled.” Ronald L. Trowbridge, “Taking a ‘D’ in the Fight for Campus Speech” at Independent Institute

Well, that’s fine if it costs the prof’s department a huge financial penalty to behave that way. Doubtless, he’d be happy to teach for less, without the taxpayers’ money. Otherwise, it’s not clear how the expert’s approach will help.

But if it weren’t for new rules, no one would even be talking about it.

See also: New US Free Speech Policy For Universities Miffs Boffins At Nature

and

US Prez Trump Vows To Tie Federal Funding To Campus Free Speech

New England Journal of Medicine, seeking new editor, urged to get woke

Or something. Back in October 2018, the oldest and one of the most prestigious medical journals was looking for a new editor-in-chief sometime within the next year, as the current one announced that he was planning to retire. The journal has received, as one might expect, a lot of advice, including

Dr. Richard Smith, former editor of the BMJ, offered this advice: Change the raison d’etre…

Looking ahead, Smith said, as science moves away from journals as the most important medium for delivering information, the next editor of NEJM will have to adapt.

“The main job of journals will not be to disseminate science but to ‘speak truth to power,’ encourage debate, campaign, investigate and agenda-set, the same job as the mass media. The NEJM needs to strengthen that side of the journal.” (Smith may have a model in mind. Fiona Godlee, the current editor of the BMJ, has called her publication an outlet for “campaigning” against what she sees as flaws in the biomedical edifice.) Adam Marcus and Ivan Oransky, “At NEJM, a change at the top offers a chance to reshape the world’s oldest medical journal” at STAT

In short, Smith

1) sees mass media journalism as a “campaign” with an “agenda” Sadly, that’s true today. (Cue further mass media layoffs.) Mass media were more useful (and better read) back when they told us things that the elite of none of the parties in power wanted us to know. Now that they are so often just political operatives with bylines, it is less of a timewaster to follow the politicians directly.

and

2) makes clear that science contributes only a little to NEJM’s perspective in the pursuit of that goal.

Something like this has happened to The Lancet too.

Overall, we’re gonna hear some remarkable stuff touted as medicine in years to come.

See also: Lancet: Why has a historic medical publication gone weird?

and

Was Thomas Kuhn not so “evil” after all? Philosopher of science: If Errol or Kripke or anyone can tell me something absolutely objective and unchanging about what’s out there in the natural world, I sincerely want to hear and believe that. Maybe I should (re)turn to Jesus.

Was Thomas Kuhn not so “evil” after all?

John Horgan offers a defense, written by James McClellan, of the philosopher of science who made “paradigm shift” an everyday term. McClellan was an early Seventies student of Kuhn’s, as was Errol Morris, the one at whom Kuhn flung an ashtray back then:

Back then science was seen as having a distinct method and as the triumphant and seamless layering of one secure brick of knowledge on top of another. Whatever else we may think of Kuhn’s Structure of 1962, he killed Whiggism. He showed once and for all that the history of science has been marked by fundamental discontinuities (revolutions), and he was supremely creative in outlining processes involved in scientific change. These are major and incontrovertible contributions to our understanding of science and its history…

Of course, all that came crashing down, Errol and Steven Weinberg notwithstanding, when it became clear that science is fundamentally a social activity and scientific claims are socially constructed by practitioners, admittedly trying their best to say something solid about the natural world around us. Kuhn’s views of 1962 had to be fitted in to and defended in this radically new intellectual context. He did his best despite the disdain of establishment philosophers, who never accepted him as a legitimate voice. …

If Errol or Kripke or anyone can tell me something absolutely objective and unchanging about what’s out there in the natural world, I sincerely want to hear and believe that. Maybe I should (re)turn to Jesus. John Horgan, “Thomas Kuhn Wasn’t So Bad” at Scientific American

Hold that last thought (?). Maybe. McClellan could otherwise end up arguing whether he is more conscious than his coffee mug. That is the trajectory; the question is, what role did Kuhn really play?

Flung an ashtray? See: Was philosopher of science Thomas Kuhn “evil”?

See also:

Darwinism’s Influence On Philosopher Of Science Thomas Kuhn (a friend thinks it’s relevant)

and

Is there life Post-Truth?

Logic & First Principles, 16: The problem of playing God (when we don’t — cannot — know how)

In discussing the attempted brain hacking of monkeys, I made a comment about refraining from playing God. This sparked a sharp reaction, then led to an onward exchange. This puts on the table the captioned issue . . . which it seems to me is properly part of our ongoing logic and first principles reflections. Here, we meet the other big piece of axiology (the study of the valuable), ethics, with side-orders of limitations in epistemology. So, kindly allow me to headline:

KF, 10: >>It is interesting what sparked the sharpness of exchange above:

KF: Playing God without his knowledge base, wisdom and benevolence is asking for trouble.

A78 is right:

all I’m saying is proceed with caution we shouldn’t play God because we don’t know how.

Some humility, some prudence, some caution — thus, a least regrets decision principle — is therefore well advised.

A song on Poker as a metaphor for life, once put this . . . I cite as heard on scratchy AM radio decades ago:

know when to hold

know when to fold

know when to walk away

know when to run

you never count your money

sitting at the table

there’ll be time enough

for counting

when the dealing’s

done

[See: https://www.lyricsfreak.com/k/kenny+rogers/the+gambler_20077886.html ]

I add a vid:

Let us again cite his cautions:

A78: I find this comment to be in poor taste “Should we tell the people that you’re going to die because we shouldn’t play God”

It really doesn’t justify or prove the point of using or doing this type of research.

It’s more of a manipulative comment trying to force somebody into a moral dilemma (obviously most people are not going to deny someone life saving medication) but it certainly doesn’t prove any point. As an opposite and equal extreme, one can say the same for the opposite prescriptive. IE “We shouldn’t play God with other living creatures’ genomes because we could accidentally create a fatal mutation in the Species which caused its extinction”

Simple slip ups in this field can cause the deaths of millions of people if not billions with a virus that was genetically engineered or something that came naturally that sprouted off of our genetic tampering.

The other scenario could happen after 100 years of genetic manipulations of the human species, a new virus or other new microbes now sharing the new genes that we created, come into existence some of which we might not be able to fight off. The other possibility is permanent genetic defects that show up way later in a generation because of our meddling.

In a way, when we mess with the genome, it is similar to introducing a new species to an ecosystem that is not ready to support it. We do not know the impact or the effects that could happen down the line but they can be devastating, and often what preceded these was good intentions. Hence “the road to hell is paved in good intentions”

Wisdom requires due humility to recognise the potential for unintended havoc.

We may turn our further thoughts on a premise, that BB intends to persuade us towards the truth and the right as he perceives it. That is, he implies a thesis I have often highlighted here at UD in recent times: our thought-volitional inner life is morally governed. So governed, by KNOWN, repeat, KNOWN (and undeniable), duties to truth, right reason, prudence, fairness and justice, etc. This, on pain of patent absurdity on the attempted denial (we do not operate on the global premise of who is the most effective manipulative liar). Where, such is attested by the witness-voice of conscience. Which cannot be its grounds, its authentic roots.

So, what is?

We here find ourselves facing the IS-OUGHT gap, and post Hume there is only one place it can be bridged on pain of ungrounded ought: the root of reality. We need an inherently good, sound, sufficient root for reality in order for our whole inner life to make sense, an inner life we cannot set aside as inconvenient. Where, it is easy to see that for such a root, there is but one serious candidate. (As this is a philosophical exercise [and not an undue imposition], if you dispute this, simply provide and justify a serious alternative: _________ , addressing comparative difficulties: _________ )

The candidate is: the inherently good and utterly wise creator God, a necessary and maximally great being, worthy of our loyalty and of the responsible, reasonable service of doing the good that accords with our manifest nature.

And, it will be readily apparent that when one deals with powerful, potentially destructive ill-understood domains fraught with moral hazards, a due recognition that we are not omnipotent, we are not omniscient, we are not omnibenevolent, would be appropriate. As Cicero summed up, conscience is a law, prudence is a law.

So, for one, instead of playing heedlessly with gene engineering fire, we should learn from the history of damaging industrial development, spewing all sorts of chemicals into the environment (and into our foods), importing invasive species and the challenges of nuclear technology and should proceed with humility, prudence, soundness. How many times have we been promised sci-tech, technocratic utopias that failed? Failed, with awful consequences and costs?

It is not for nothing that Hippocrates and others taught us to ponder the duties of the learned professional in society (and environment).

First, do no harm.

Second,

art is long,

life is short,

opportunity fleeting, [experience treacherous,]

[Thanks SM]

judgement difficult.

The professional must therefore act with due humility and prudence, understanding the doctrine of unintended consequences in a deeply interconnected world. Where, a properly cultivated conscience is a part of the picture. Where, part of that education is and should be, the lessons of our civilisation’s tradition of ethical theism. For, we need to recognise that we are not God.

Something, that rage at our creator, the source of reality, wisdom and sound moral government, is liable to forget.

Anger, is a blinding emotion.>>

Food for thought. END


April 12, 2019

Is there a fixed time limit for recovery after mass extinctions?

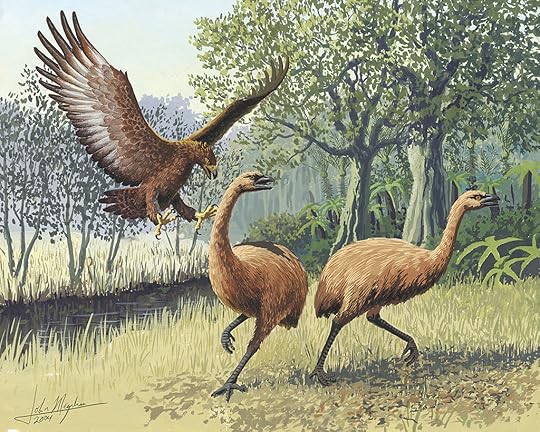

Haast’s eagle and moas (both extinct), New Zealand/ John Megahan (CC BY 2.5 )

From ScienceDaily:

It takes at least 10 million years for life to fully recover after a mass extinction, a speed limit for the recovery of species diversity that is well known among scientists. Explanations for this apparent rule have usually invoked environmental factors, but research led by The University of Texas at Austin links the lag to something different: evolution.

The recovery speed limit has been observed across the fossil record, from the “Great Dying” that wiped out nearly all ocean life 252 million years ago to the massive asteroid strike that killed all nonavian dinosaurs. The study, published April 8 in the journal Nature Ecology & Evolution, focused on the later example. It looks at how life recovered after Earth’s most recent mass extinction, which snuffed out most dinosaurs 66 million years ago. The asteroid impact that triggered the extinction is the only event in Earth’s history that brought about global change faster than present-day climate change, so the authors said the study could offer important insight on recovery from ongoing, human-caused extinction events…

In other words, mass extinctions wipe out a storehouse of evolutionary innovations from eons past. The speed limit is related to the time it takes to build up a new inventory of traits that can produce new species at a rate comparable to before the extinction event. Paper. (paywall) – Christopher M. Lowery, Andrew J. Fraass. Morphospace expansion paces taxonomic diversification after end Cretaceous mass extinction. Nature Ecology & Evolution, 2019; DOI: 10.1038/s41559-019-0835-0 More.

While this may turn out to be true, the apparently fixed rate of change implies a more regulated system than the random developments that we are used to associating with evolution.

One interpretation of the claim cites the concept of morphospace:

Morphospace, short for “morphological space”, is a way for scientists to visualise the possible shape and structure of organisms, the physical phenotype. A morphospace can show all possible forms, and how many of those have been taken on by organisms in the real world. …

What Lowery and Fraass discovered is that the fossil record for marine plankton shows that increasingly complex morphologies are linked to episodes of taxonomic diversification, with the latter being dependent on the former. Newly occupied morphospaces opened up room for increasing radiation, just as the morphospace reconstruction hypothesis predicted.

Importantly, their work indicates that the constraints of morphospace colonisation might impose a “speed limit” on post-extinction taxonomic diversification. This is a sobering reminder that if we are in the midst of an anthropogenic extinction event, the biosphere will take millions of years to get over it. Stephen Fleischfresser, “Mass extinction recovery governed by ‘morphospace’” at Cosmos

One can really only test this thesis for the distant past, but structuralists, who think evolution is governed by fixed laws, should find it of interest.

See also: Learning more about the asteroid that doomed the dinosaurs

Insectologists swat “insects are doomed” paper

and

Giant tortoise, thought extinct, turns up again

