Michael J. Behe's Blog, page 42

September 3, 2022

Natural Sources of Information?

Excerpted from Canceled Science, by Eric Hedin:

The Environment

In systems which are far from thermodynamic equilibrium, differences or gradients in various thermodynamic variables may exist within the system and between the system and the environment. It has sometimes been mistakenly assumed that these gradients could generate the information found in living systems.[i] However, while thermodynamic gradients may produce complexity, they do not generate information. The foam and froth at the bottom of a waterfall, or the clouds of ash erupting out of a volcano, represent a high level of complexity due to the thermodynamic gradients driving their production, but for information to arise, specificity must be coupled with the complexity. Biological systems are information-rich because they contain a high level of specified complexity, which thermodynamic gradients, or any other natural processes, act to destroy rather than to create.

During a non-equilibrium process, statistical fluctuations become negligibly small for systems with more than even ten particles, a condition easily met by any system relevant to the origin and development of life.[ii] Charles Kittel, writing on thermodynamics, considers a system containing about as many particles as a gram of carbon. This amount is relevant to origin-of-life scenarios because physical constraints require the raw ingredients leading to life to be localized, so considering larger amounts of carbon-based ingredients would not affect the outcome of this argument. Kittel emphasizes that even small statistical fluctuations from the most probable configuration of such a system (with its particles randomly mixed) will never occur in a time frame as short as the entire history of our universe.[iii] This means that any appeal to statistical fluctuations as the source of new biological information flatly contradicts the physics of statistical mechanics. It is therefore not possible to have “an accumulation of information as the result of a series of discrete and incremental steps,” as has been postulated.[iv] Again, for systems with as many constituent atoms as biomolecules have, the information content will decrease with time, and never increase.[v]
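The sketch below puts Kittel’s example into numbers. It counts the atoms in one gram of carbon and applies the standard rule of thumb that the typical relative size of a random fluctuation among N independent particles scales as 1/√N. The scaling is a generic textbook estimate assumed here for illustration. It is not Kittel’s own calculation, and the constant and function names are hypothetical.

```python
import math

AVOGADRO = 6.02214076e23    # particles per mole (exact SI value)
CARBON_MOLAR_MASS = 12.011  # grams per mole

def particle_count(grams: float, molar_mass: float) -> float:
    """Number of atoms in a sample of the given mass."""
    return grams / molar_mass * AVOGADRO

def relative_fluctuation(n: float) -> float:
    """Assumed rule of thumb: typical relative fluctuation ~ 1/sqrt(N)."""
    return 1.0 / math.sqrt(n)

n_carbon = particle_count(1.0, CARBON_MOLAR_MASS)
print(f"atoms in 1 g of carbon: {n_carbon:.2e}")
print(f"typical relative fluctuation: {relative_fluctuation(n_carbon):.1e}")
```

On this estimate, a gram of carbon holds about 5 × 10^22 atoms, so typical fluctuations are a few parts in a trillion.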

Nonetheless, others have tried to suggest that certain natural processes can, in fact, generate new biological information. At times this opinion rests on misidentifying increasing information with decreasing thermodynamic entropy.[vi] Decreasing thermodynamic entropy can only be leveraged into information if a design template and the mechanism to employ it already exist. In this case, the desired information is not being created by the action of the low-entropy energy source; it is merely being transferred from the template to an output product. An example of such a system is a printing press: it takes energy to make it run, entropy increases during the process, and information is printed. But the important point to understand is that the whole process produces no information beyond what pre-exists in the typeset template of the printing press mechanism.

Our sun is a low-entropy source of thermal energy that the Earth receives via electromagnetic radiation. This thermal energy is useful energy in the thermodynamic sense because it can be used to do work. The same is true of the energy released when gravitational potential energy is converted into kinetic energy or heat. Waterfalls and solar collectors can produce energy for useful work, but they are sterile with respect to generating information.

In fact, sources of natural energy (sunlight, fire, earthquakes, hurricanes, etc.) universally destroy complex specified information, and never create it. What will happen to a painting if left outside in the elements? What happens to a note tossed into a mulch pile? They degrade by the actions of nature, until all traces of information disappear. Or consider an unfortunate opossum killed on a country road. Will its internal, complex biochemistry increase or decrease with time due to the effects of natural forces? We all know the answer. If not eaten by scavengers, it eventually turns to a pile of dirt.

[i] Jonathan Lunine, Earth: Evolution of a Habitable World, 2nd ed. (New York: Cambridge University Press, 2013), 151.

[ii] Hobson, Concepts in Statistical Mechanics, 143.

[iii] Charles Kittel, Thermal Physics (New York: John Wiley & Sons, 1969), 44–45.

[iv] Robert O’Connor, “The Design Inference: Old Wine in New Wineskins,” in God and Design: The Teleological Argument and Modern Science, ed. Neil A. Manson (Abingdon: Routledge, 2003).

[v] Hobson, Concepts in Statistical Mechanics, 153.

[vi] Brian Greene, The Fabric of the Cosmos: Space, Time, and the Texture of Reality (New York: Vintage Books, 2004), 175; Franklin M. Harold, The Way of the Cell: Molecules, Organisms and the Order of Life (Oxford: Oxford University Press, 2001), 228–229.

Copyright © 2022 Uncommon Descent . This Feed is for personal non-commercial use only. If you are not reading this material in your news aggregator, the site you are looking at is guilty of copyright infringement UNLESS EXPLICIT PERMISSION OTHERWISE HAS BEEN GIVEN. Please contact legal@uncommondescent.com so we can take legal action immediately.Plugin by Taragana

September 2, 2022

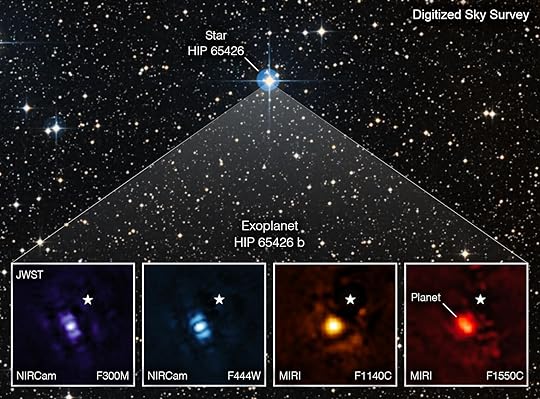

At SciTech Daily: “Space Treasure” – Webb Captures Its First-Ever Direct Image of a Distant World

Elizabeth Landau (NASA) writes:

For the first time ever, astronomers used NASA’s James Webb Space Telescope to take a direct image of a planet outside our solar system. The exoplanet, called HIP 65426 b, is a gas giant. This means it has no rocky surface and could not be habitable.

This image shows the exoplanet HIP 65426 b in different bands of infrared light, as seen from the James Webb Space Telescope: purple shows the NIRCam instrument’s view at 3.00 micrometers, blue shows the NIRCam instrument’s view at 4.44 micrometers, yellow shows the MIRI instrument’s view at 11.4 micrometers, and red shows the MIRI instrument’s view at 15.5 micrometers. Credit: NASA/ESA/CSA

As seen through four different light filters, the image shows how Webb’s powerful infrared vision can easily capture worlds beyond our solar system. It paves the way for future observations that will reveal more information than ever before about exoplanets.

“This is a transformative moment, not only for Webb but also for astronomy generally,” said Sasha Hinkley, associate professor of physics and astronomy at the University of Exeter in the United Kingdom, who led these observations with a large international collaboration. An international mission, the James Webb Space Telescope is led by NASA in collaboration with its partners, ESA (European Space Agency) and CSA (Canadian Space Agency).

The exoplanet in Webb’s image, HIP 65426 b, is about six to 12 times the mass of Jupiter. These observations could help astronomers narrow that range even further. It is young as far as planets go: about 15 to 20 million years old, compared to our 4.5-billion-year-old Earth.

Because HIP 65426 b is about 100 times farther from its host star than Earth is from the Sun, it is sufficiently distant from the star that Webb can easily separate the planet from the star in the image.

Webb’s Near-Infrared Camera (NIRCam) and Mid-Infrared Instrument (MIRI) are both equipped with coronagraphs. These are sets of tiny masks that block out starlight, enabling Webb to take direct images of certain exoplanets like this one.

“It was really impressive how well the Webb coronagraphs worked to suppress the light of the host star,” Hinkley said.

Because stars are so much brighter than planets, taking direct images of exoplanets is challenging. In fact, the HIP 65426 b planet is more than 10,000 times fainter than its host star in the near-infrared, and a few thousand times fainter in the mid-infrared.

In each of the filtered images, the planet appears as a slightly differently shaped blob of light. That’s because of the particulars of Webb’s optical system and how it translates light through the different optics.

“Obtaining this image felt like digging for space treasure,” said Aarynn Carter. He is a postdoctoral researcher at the University of California, Santa Cruz, and led the analysis of the images. “At first all I could see was light from the star, but with careful image processing I was able to remove that light and uncover the planet.”

Although this is not the first direct image of an exoplanet taken from space – the Hubble Space Telescope has captured direct exoplanet images previously – HIP 65426 b points the way forward for Webb’s exoplanet exploration.


“I think what’s most exciting is that we’ve only just begun,” Carter said. “There are many more images of exoplanets to come that will shape our overall understanding of their physics, chemistry, and formation. We may even discover previously unknown planets, too.”

SciTech Daily


September 1, 2022

At Phys.org: We’re heading to the moon and maybe Mars. So who owns them?

Humanity is set to make a return to the moon with the Artemis program, in what NASA says is a first step to Mars. So, who gets first dibs?

NASA’s Space Launch System (SLS) rocket with the Orion spacecraft aboard is seen atop a mobile launcher at Launch Pad 39B. Credit: NASA

Dr. Aaron Boley, a professor in UBC’s department of physics and astronomy, discusses the mission’s plans and why we need to sort out access and resource rights before we return to the lunar surface.

What’s going on with the moon right now?

There’s a push by many players, including the United States, China and Russia, to establish a sustained presence on the Moon. One such effort is NASA’s Artemis program, a three-part mission that begins with the upcoming uncrewed Artemis 1 launch and culminates in returning people to the lunar surface.

We have decades of experience operating in low Earth orbit, but lunar operations are vastly different. And while there is experience from the Apollo missions 50 years ago, the goal now is to build a sustained human presence with critical support infrastructure. An orbiting station will provide a connection between Earth and the Moon for more complex operations. This program will also allow humans to learn how to extract and use resources in space, a requirement for a sustained human presence off Earth. For example, you could harvest lunar ice and use it for radiation shielding, life support and fuel.

A lunar presence will also open up science opportunities in orbit and on the surface, including detailed lunar chronology using surface samples and sites, which could provide information about the Earth’s history in the Solar System.

Does it matter who owns space?

Putting a flag on the Moon or in space doesn’t mean you own it. However, the question then becomes, if you take something from space do you then own it? If someone picks up a moon rock, ice, or other resource, does that rock, resource, or even the information you learn from it, belong to that person? Having multilateral agreements that address these questions is crucial, preferably before we go. We also need to have conflict resolution protocols in place should two powerful groups want the same resource, or if a company discovers important scientific information that should be available publicly. And how are we going to address cultural and natural heritage?

The current corpus of international law applied to space, including the 1967 Outer Space Treaty, provides an important foundation, but is not able to answer these questions alone. The United States has been promoting the Artemis Accords, a political commitment toward a particular vision for cooperation on the Moon—Canada is a signatory. But these have not been negotiated multilaterally. In contrast, a working group has been established at the UN Committee on the Peaceful Uses of Outer Space to reach an international understanding of resource utilization on celestial bodies. But such work takes time, and countries are racing to establish practices that might influence the outcome of that process.


Why are these agreements important?

What we may see without agreements is countries testing each other’s limits in space, similar to the testing of claims of sovereignty in, say, seas on Earth. For example, a rover may be driven close to another country’s activities just to make a point concerning free access. We also want to avoid the loss of scientific information or opportunities due to a lack of data sharing or reckless activities. And we need to recognize that the path should not be decided by just a handful of states.

To explore the Moon is to ask questions about the origin of Earth and humanity. Historically, space has been a stabilizing influence for humanity because you have to work together in such a harsh environment; otherwise, things go wrong pretty quickly. The International Space Station and Cospas-Sarsat are testaments to this.

Phys.org


At Evolution News: Rosenhouse’s Whoppers: The Environment as a Source of Information

William Dembski writes:

I am responding again to Jason Rosenhouse about his book The Failures of Mathematical Anti-Evolutionism. See my earlier posts here and here.

In Rosenhouse’s book, he claims that “natural selection serves as a conduit for transmitting environmental information into the genomes of organisms.” (p. 215) I addressed this claim briefly in my review, indicating that conservation of information shows it to be incomplete and inadequate, but essentially I referred him to technical work by me and colleagues on the topic. In his reply, he remains, as always, unpersuaded. So let me here give another go at explaining the role of the environment as a source of information for Darwinian evolution. As throughout this response, I’m addressing the unwashed middle.

So What’s the Problem?

Darwinian evolution depends on selection, variation, and replication working within an environment. How selection, variation, and replication play out, however, depends on the particulars of the environment. Take a simple example, one that Rosenhouse finds deeply convincing and emblematic for biological evolution, namely, Richard Dawkins’s famous METHINKS IT IS LIKE A WEASEL simulation (pp. 192–194 of Rosenhouse’s book). Dawkins imagines an environment consisting of sequences of 28 letters and spaces, random variations of those letters, and a fitness function that rewards sequences to the degree that they are close to (i.e., share letters with) the target sequence METHINKS IT IS LIKE A WEASEL.
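The simulation described above can be sketched as follows. Dawkins fixed the target phrase and the rule that, in each generation, the offspring most resembling the target becomes the next parent. The other details are illustrative assumptions, not values from Dawkins or Rosenhouse: 100 offspring per generation, a 5% per-character mutation rate, and all function names.

```python
import random
import string

TARGET = "METHINKS IT IS LIKE A WEASEL"
ALPHABET = string.ascii_uppercase + " "  # 26 capital letters plus the space: 27 symbols

def fitness(candidate: str) -> int:
    """Score a candidate by how many positions already match TARGET."""
    return sum(c == t for c, t in zip(candidate, TARGET))

def mutate(parent: str, rate: float, rng: random.Random) -> str:
    """Copy the parent, redrawing each character at random with probability `rate`."""
    return "".join(rng.choice(ALPHABET) if rng.random() < rate else c for c in parent)

def weasel(offspring: int = 100, rate: float = 0.05, seed: int = 0) -> int:
    """Run until TARGET is reached and return the number of generations it took."""
    rng = random.Random(seed)
    parent = "".join(rng.choice(ALPHABET) for _ in TARGET)  # random starting phrase
    generation = 0
    while parent != TARGET:
        generation += 1
        brood = [mutate(parent, rate, rng) for _ in range(offspring)]
        parent = max(brood, key=fitness)  # the offspring most like TARGET breeds next
    return generation

print(f"reached the target after {weasel()} generations")
```

Note that `TARGET` appears inside `fitness` itself. The phrase the search converges on is supplied by the fitness function, which is exactly the choice Dembski goes on to question.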

The problem is not with the letter sequences, their randomization, or even the activity of a fitness function in guiding such an evolutionary process, but the very choice of fitness function. Why did the environment happen to fixate on METHINKS IT IS LIKE A WEASEL and make evolution drive toward that sequence? Why not a totally random sequence? The whole point of this example is to suggest that evolution can produce something design-like (a meaningful phrase, in this case, from Shakespeare’s Hamlet) without the need for actual design. But most fitness functions would evolve toward random sequences of letters and spaces. So what’s the difference maker in the choice of fitness? If you will, what selects the fitness function that then selects for fitness in the evolutionary process? Well, leaving aside some sort of interventional design (and not all design needs to be interventional), it’s got to be the environment.

But that’s the problem. What renders one environment an interesting source of evolutionary change given selection, variation, and replication but others uninteresting? Most environments, in fact, don’t lead to any interesting form of evolution. Consider Sol Spiegelman’s work on the evolution of polynucleotides in a replicase environment. One thing that makes real world biological evolution interesting, assuming it actually happens, is that it increases information in the items that are undergoing evolution. Yet Spiegelman demonstrated that even with selection, variation, and replication in play, information steadily decreased over the course of his experiment. Brian Goodwin, in his summary of Spiegelman’s work, highlights this point (How the Leopard Changed Its Spots, pp. 35–36):

In a classic experiment, Spiegelman in 1967 showed what happens to a molecular replicating system in a test tube, without any cellular organization around it. The replicating molecules (the nucleic acid templates) require an energy source, building blocks (i.e., nucleotide bases), and an enzyme to help the polymerization process that is involved in self-copying of the templates. Then away it goes, making more copies of the specific nucleotide sequences that define the initial templates. But the interesting result was that these initial templates did not stay the same; they were not accurately copied. They got shorter and shorter until they reached the minimal size compatible with the sequence retaining self-copying properties. And as they got shorter, the copying process went faster. So what happened with natural selection in a test tube: the shorter templates that copied themselves faster became more numerous, while the larger ones were gradually eliminated. This looks like Darwinian evolution in a test tube. But the interesting result was that this evolution went one way: toward greater simplicity.

Simple and Yet Profound

At issue here is a simple and yet profound point of logic that continually seems to elude Darwinists as they are urged to come to terms with how it can be that the environment is able to bring about the information that leads to any interesting form of evolution. And just to be clear, what makes evolution interesting is that it purports to build all the nifty biological systems that we see around us. But most forms of evolution, whether in a biology lab or on a computer mainframe, build nothing interesting.

The logical point at issue here is one the philosopher John Stuart Mill described back in the 19th century. He called it the “method of difference” and laid it out in his System of Logic. According to this method, to discover which of a set of circumstances is responsible for an observed difference in outcomes requires identifying a circumstance that is present when the outcome occurs and absent when it doesn’t occur. An immediate corollary of this method is that common circumstances cannot explain a difference in outcomes.

So if selection, variation, and replication operating within an environment can produce wildly different types of evolution (information-increasing, information-decreasing, interesting, uninteresting, engineering-like, organismic-like, etc.), then something else besides these factors needs to be in play. Conservation of information says that the difference maker is information built into the environment.

In any case, the method of difference shows that such information cannot be reducible to Darwinian processes, which is to say, to selection, variation, and replication (because these are common to all forms of Darwinian evolution). Darwinists, needless to say, don’t like that conclusion. But they are nonetheless stuck with it. The logic is airtight and it means that their theory is fundamentally incomplete. For more on this, see my article with Bob Marks titled “Life’s Conservation Law” (especially section 8).

Evolution News

Dembski’s conclusions are consistent with expectations from information theory and the generalized 2nd law of thermodynamics; namely, that natural processes cause a system to lose information over the passage of time. If an increase in information is seen in any system (such as life from non-life, or the appearance of novel, functional body plans or physiological systems), then natural processes cannot have been the cause. If not natural, then the increase in information must have come from an intelligent agent (the only known source of functional information).


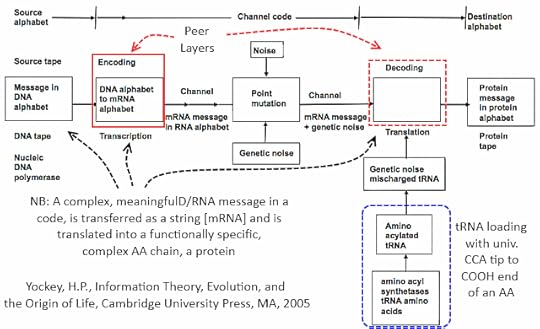

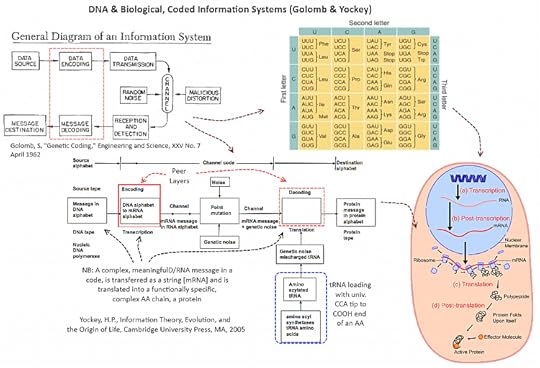

Protein Synthesis . . . what frequent objector AF cannot acknowledge

Let us use a handy diagram of protein synthesis:

Protein Synthesis (HT: Wiki Media)

[U/D, Sep 2:] Where, to clarify key terms, let us note a key, classic text, Lehninger, 8th edn:

“The information in DNA is encoded in its linear (one-dimensional) sequence of deoxyribonucleotide subunits . . . . A linear sequence of deoxyribonucleotides in DNA codes (through an intermediary, RNA) for the production of a protein with a corresponding linear sequence of amino acids . . . Although the final shape of the folded protein is dictated by its amino acid sequence, the folding of many proteins is aided by ‘molecular chaperones’ . . . The precise three-dimensional structure, or native conformation, of the protein is crucial to its function.” [Principles of Biochemistry, 8th Edn, 2021, pp. 194–5. Now authored by Nelson, Cox et al., Lehninger having passed on in 1986. Attempts to rhetorically pretend, on claimed superior knowledge of biochemistry, that D/RNA does not contain coded information expressing algorithms using string data structures collapse. We now have to address the implications of language, goal-directed stepwise processes, and underlying sophisticated polymer chemistry and molecular nanotech in the heart of cellular metabolism and replication.]
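The sketch below is a concrete illustration of the DNA-to-RNA-to-protein mapping Lehninger describes. It transcribes a short, made-up DNA coding strand and translates it using a small subset of the standard genetic code. It deliberately ignores the cellular machinery (RNA polymerase, ribosomes, tRNAs, chaperones) and lists only the codons the example needs. The sequence and function names are hypothetical.

```python
# A small subset of the standard genetic code (mRNA codon -> amino acid).
# Only the codons used below are listed; the full table has 64 entries.
CODON_TABLE = {
    "AUG": "Met",  # methionine, also the usual start codon
    "UUU": "Phe",
    "GGC": "Gly",
    "AAA": "Lys",
    "UGG": "Trp",
    "UAA": "STOP",
    "UAG": "STOP",
    "UGA": "STOP",
}

def transcribe(dna_coding_strand: str) -> str:
    """mRNA carries the coding-strand sequence with U in place of T."""
    return dna_coding_strand.upper().replace("T", "U")

def translate(mrna: str) -> list[str]:
    """Read codons three bases at a time from the first AUG until a stop codon."""
    start = mrna.find("AUG")
    if start == -1:
        return []
    protein = []
    for i in range(start, len(mrna) - 2, 3):
        residue = CODON_TABLE[mrna[i:i + 3]]  # KeyError for codons outside this subset
        if residue == "STOP":
            break
        protein.append(residue)
    return protein

mrna = transcribe("ATGTTTGGCAAATGGTAA")
print(mrna)             # AUGUUUGGCAAAUGGUAA
print(translate(mrna))  # ['Met', 'Phe', 'Gly', 'Lys', 'Trp']
```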

This is a corner of the general cell metabolism framework:

Where, Yockey observes (highlighted and annotated):

Yockey’s analysis of protein synthesis as a code-based communication process

Where, too, the genetic code is, and its context of application is:

Given, say, Crick:

Crick’s letter

. . . we need to ask, why. END

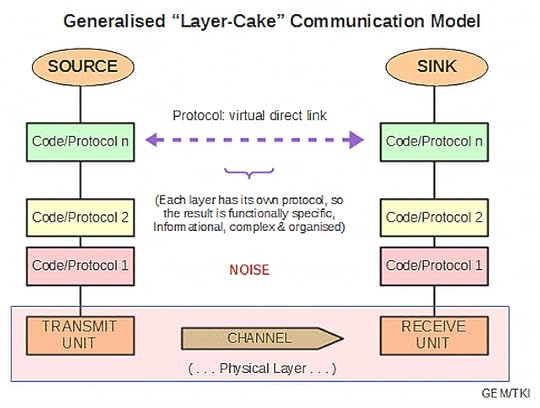

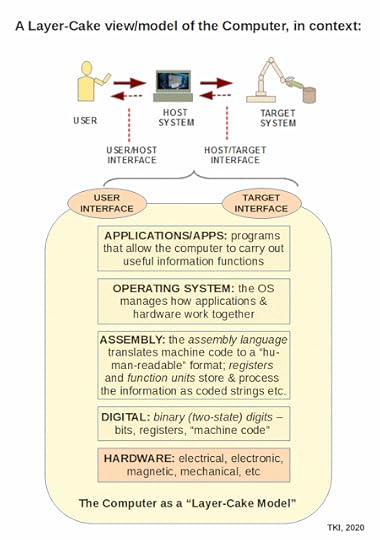

F/N1: It seems advisable to highlight the layer-cake architecture of communication systems . . . and of computers, following Tanenbaum:

Clearly, the communication framework does not reduce to the physics of the hardware involved.

F/N2: Dawkins admits

He tries to deflect the force by appeal to “natural selection,” but protein synthesis and linked metabolic processes are causally antecedent to self-replication and therefore pose a chicken-and-egg challenge, especially for the origin of life.


August 31, 2022

At Science Daily: Seeing universe’s most massive known star

By harnessing the capabilities of the Gemini South telescope in Chile, astronomers have obtained the sharpest image ever of the star R136a1, the most massive known star in the universe. Their research challenges our understanding of the most massive stars and suggests that they may not be as massive as previously thought.

Astronomers have yet to fully understand how the most massive stars — those more than 100 times the mass of the Sun — are formed. One particularly challenging piece of this puzzle is obtaining observations of these giants, which typically dwell in the densely populated hearts of dust-shrouded star clusters. Giant stars also live fast and die young, burning through their fuel reserves in only a few million years. In comparison, our Sun is less than halfway through its 10 billion year lifespan. The combination of densely packed stars, relatively short lifetimes, and vast astronomical distances makes distinguishing individual massive stars in clusters a daunting technical challenge.

This colossal star is a member of the R136 star cluster, which lies about 160,000 light-years from Earth in the center of the Tarantula Nebula in the Large Magellanic Cloud, a dwarf companion galaxy of the Milky Way.

Previous observations suggested that R136a1 had a mass somewhere between 250 and 320 times the mass of the Sun. The new Zorro observations, however, indicate that this giant star may be only 170 to 230 times the mass of the Sun. Even with this lower estimate, R136a1 still qualifies as the most massive known star.

“Our results show us that the most massive star we currently know is not as massive as we had previously thought,” explained Kalari, lead author of the paper announcing this result. “This suggests that the upper limit on stellar masses may also be smaller than previously thought.”

This result also has implications for the origin of elements heavier than helium in the Universe. These elements are created during the cataclysmically explosive death of stars more than 150 times the mass of the Sun in events that astronomers refer to as pair-instability supernovae. If R136a1 is less massive than previously thought, the same could be true of other massive stars, and consequently pair-instability supernovae may be rarer than expected.

Gemini South’s Zorro instrument was able to surpass the resolution of previous observations by using a technique known as speckle imaging, which enables ground-based telescopes to overcome much of the blurring effect of Earth’s atmosphere [1]. By taking many thousands of short-exposure images of a bright object and carefully processing the data, it is possible to cancel out almost all this blurring [2]. This approach, as well as the use of adaptive optics, can dramatically increase the resolution of ground-based telescopes, as shown by the team’s sharp new Zorro observations of R136a1 [3].
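A toy simulation can illustrate the idea of freezing the atmosphere with very short exposures. The notes below give the real numbers: 40,000 exposures of 60 milliseconds each add up to 2,400 seconds, which matches the 40 minutes quoted. The sketch uses shift-and-add, a simpler cousin of speckle imaging. It works on a hypothetical two-star scene and models the atmosphere as nothing more than a random shift of each frame. It is not the Zorro reconstruction, which works with the full speckle pattern rather than a single shift per frame.

```python
import numpy as np

rng = np.random.default_rng(0)
SIZE = 64

# Hypothetical "true" scene: a bright star and a fainter companion 3 pixels away.
scene = np.zeros((SIZE, SIZE))
scene[32, 32] = 1.0
scene[32, 35] = 0.4

def snapshot(max_shift: int = 6) -> np.ndarray:
    """One short exposure: the scene jittered by a random tip-tilt shift, plus noise."""
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    frame = np.roll(scene, (dy, dx), axis=(0, 1))
    return frame + rng.normal(0.0, 0.05, frame.shape)

def shift_and_add(frames: list[np.ndarray]) -> np.ndarray:
    """Re-center every frame on its brightest pixel, then average the frames."""
    out = np.zeros((SIZE, SIZE))
    for f in frames:
        y, x = np.unravel_index(np.argmax(f), f.shape)
        out += np.roll(f, (SIZE // 2 - y, SIZE // 2 - x), axis=(0, 1))
    return out / len(frames)

frames = [snapshot() for _ in range(500)]
long_exposure = np.mean(frames, axis=0)  # what one long, blurred exposure would record
sharp = shift_and_add(frames)

print(f"peak of long exposure:    {long_exposure.max():.3f}")
print(f"peak after shift-and-add: {sharp.max():.3f}")
```

Averaging the frames without re-centering smears the star over every position the jitter visits. Re-centering each frame first restores both the bright star and the faint companion at their true separation.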

Notes

[1] The blurring effect of the atmosphere is what makes stars twinkle at night, and astronomers and engineers have devised a variety of approaches to dealing with atmospheric turbulence. As well as placing observatories at high, dry sites with stable skies, astronomers have equipped a handful of telescopes with adaptive optics systems, assemblies of computer-controlled deformable mirrors and laser guide stars that can correct for atmospheric distortion. In addition to speckle imaging, Gemini South is able to use its Gemini Multi-Conjugate Adaptive Optics System to counteract the blurring of the atmosphere.

[2] The individual observations captured by Zorro had exposure times of just 60 milliseconds, and 40,000 of these individual observations of the R136 cluster were captured over the course of 40 minutes. Each of these snapshots is so short that the atmosphere didn’t have time to blur any individual exposure, and by carefully combining all 40,000 exposures the team could build up a sharp image of the cluster.

[3] When observing in the red part of the visible electromagnetic spectrum (about 832 nanometers), the Zorro instrument on Gemini South has an image resolution of about 30 milliarcseconds. This is slightly better than the resolution of NASA/ESA/CSA’s James Webb Space Telescope and about three times sharper than the resolution achieved by the Hubble Space Telescope at the same wavelength.
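The sketch below gives a rough sense of where the 30-milliarcsecond figure sits. It evaluates the Rayleigh diffraction limit, θ ≈ 1.22 λ/D, at the 832-nanometer wavelength given in note [3]. The mirror diameters (8.1 m for Gemini South, 6.5 m for Webb, 2.4 m for Hubble) are assumed from the telescopes’ published specifications rather than taken from the article. Real instruments rarely reach this ideal limit exactly.

```python
import math

WAVELENGTH_M = 832e-9                     # observing wavelength from note [3]
RAD_TO_MAS = 180 / math.pi * 3600 * 1000  # radians -> milliarcseconds

# Assumed primary-mirror diameters in metres (public specifications).
APERTURES_M = {"Gemini South": 8.1, "JWST": 6.5, "Hubble": 2.4}

def rayleigh_limit_mas(diameter_m: float, wavelength_m: float = WAVELENGTH_M) -> float:
    """Diffraction-limited angular resolution, theta = 1.22 * lambda / D, in mas."""
    return 1.22 * wavelength_m / diameter_m * RAD_TO_MAS

for name, d in APERTURES_M.items():
    print(f"{name:12s} {rayleigh_limit_mas(d):5.1f} mas")
```

The ideal limits come out at roughly 26, 32, and 87 milliarcseconds. This fits note [3]: Zorro’s 30 milliarcseconds is slightly finer than Webb’s limit at this wavelength and roughly three times finer than Hubble’s.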

Full article at Science Daily.

While massive stars are relatively rare in the galaxy, they are essential for life. Their end-stage supernova explosions helped produce and distribute throughout space most of the elements heavier than helium (produced by stars more than about 10 times the mass of the sun, not just in the “explosive death of stars more than 150 times the mass of the Sun in events that astronomers refer to as pair-instability supernovae”, as reported in this article). Mass is the main parameter of a star that determines its properties throughout its life cycle. Stellar lifespans diminish strongly with increasing stellar mass. If our sun were just 40% more massive than it is, its life would already be finished, and so would all life on Earth. [1]
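The 40% figure can be checked with a common textbook approximation. Because luminosity rises steeply with mass, main-sequence lifetime scales roughly as t ≈ 10 Gyr × (M/M☉)^−2.5. The exponent and the Sun’s present age of about 4.6 billion years are assumptions added here. Real lifetimes also depend on composition and other factors, so treat this as a rough check, not a stellar-evolution model.

```python
SUN_LIFETIME_GYR = 10.0  # the Sun's total lifespan quoted earlier in the article
SUN_AGE_GYR = 4.6        # assumed: approximate present age of the Sun
EXPONENT = 2.5           # assumed: textbook scaling t ~ M**-2.5 (from L ~ M**3.5)

def lifetime_gyr(mass_solar: float) -> float:
    """Rough main-sequence lifetime, in billions of years, for a mass in solar units."""
    return SUN_LIFETIME_GYR * mass_solar ** -EXPONENT

t = lifetime_gyr(1.4)
print(f"1.4 solar masses: about {t:.1f} Gyr, versus the Sun's present age of about {SUN_AGE_GYR} Gyr")
```

Under these assumptions, a star 40% more massive than the Sun lasts only about 4.3 billion years, which is less than the Sun’s present age.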

[1] Eric Hedin, Canceled Science: What Some Atheists Don’t Want You to See (Seattle: Discovery Institute Press, 2021), 129.


At Evolution News: Behe Debates the Limits of Darwinian Evolution

Connecting with an earlier post at UD, Michael Behe speaks to the limits of naturalism and when a “designing intelligence” is needed.


A new ID the Future episode wraps up a debate over evolution and intelligent design between Lehigh University biologist Michael Behe and Benedictine College theologian Matthew Ramage. Both Behe and Ramage are Catholic, and they carry on their conversation in the context of Catholic thinking about nature and creation, in particular the work of Thomas Aquinas and contemporary Thomist philosophers. Ramage seeks to integrate his Thomistic/personalist framework with modern evolutionary theory’s commitment to macroevolution and common descent. Behe doesn’t discount the possibility of common descent, but he lays out a case that any evolution beyond the level of genus (for instance, the separate families containing cats and dogs) cannot be achieved through mindless Darwinian mechanisms and would instead require the contributions of a designing intelligence. Behe summarizes both the negative evidence against the Darwinian mechanism of change and the positive evidence in nature for intelligent design. This debate was hosted by Pat Flynn on his Philosophy for the People podcast. Download the episode or listen to it here.

Evolution News


August 30, 2022

At Phys.org: Study reveals flaws in popular genetic method

The most common analytical method within population genetics is deeply flawed, according to a new study from Lund University in Sweden. This may have led to incorrect results and misconceptions about ethnicity and genetic relationships. The method has been used in hundreds of thousands of studies, affecting results within medical genetics and even commercial ancestry tests. The study is published in Scientific Reports.

The rate at which scientific data can be collected is rising exponentially, leading to massive and highly complex datasets, dubbed the “Big Data revolution.” To make these data more manageable, researchers use statistical methods that aim to compact and simplify the data while still retaining most of the key information. Perhaps the most widely used method is called PCA (principal component analysis). By analogy, think of PCA as an oven with flour, sugar and eggs as the data input. The oven may always do the same thing, but the outcome, a cake, critically depends on the ingredients’ ratios and how they are combined.
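As a rough illustration of the "compact and simplify" step, here is a minimal textbook PCA in Python with NumPy, computed through a singular value decomposition. The data are synthetic and hypothetical (100 "individuals" by 50 "markers"); this is a generic sketch, not the pipeline used in Elhaik's study or by any ancestry company. It shows that two principal components can retain most of the variance of a 50-column dataset when that variation really lies along a few directions.

```python
import numpy as np


def pca(data: np.ndarray, n_components: int):
    """Project rows of `data` (samples x features) onto the top principal components.

    Returns (scores, components, explained_variance_ratio).
    """
    # Center each feature (column) so the components describe variation around the mean.
    centered = data - data.mean(axis=0)
    # Thin SVD: centered = U @ diag(s) @ Vt; the rows of Vt are the principal axes,
    # ordered from largest to smallest singular value.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    # Coordinates of each sample in the reduced space.
    scores = centered @ components.T
    # Share of the total variance captured by each retained component.
    variance = s ** 2
    explained = variance[:n_components] / variance.sum()
    return scores, components, explained


# Hypothetical toy data: 100 "individuals" x 50 "markers", built so that most of
# the variation lies along two hidden directions, plus a little noise.
rng = np.random.default_rng(0)
hidden = rng.normal(size=(100, 2))
mixing = rng.normal(size=(2, 50))
data = hidden @ mixing + 0.1 * rng.normal(size=(100, 50))

scores, components, explained = pca(data, n_components=2)
print(scores.shape)      # (100, 2)
print(explained.sum())   # close to 1.0 for this low-noise toy data
```

The explained-variance ratio is what tells you how much the two-column summary has thrown away; how much is retained depends entirely on the data fed in, which is the point of the oven analogy.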

“It is expected that this method will give correct results because it is so frequently used. But it is neither a guarantee of reliability nor produces statistically robust conclusions,” says Dr. Eran Elhaik, Associate Professor in molecular cell biology at Lund University.

According to Elhaik, the method helped create old perceptions about race and ethnicity. It plays a role in how both the scientific community and commercial ancestry companies manufacture historical tales of who people are and where they come from. A famous example is when a prominent American politician took an ancestry test before the 2020 presidential campaign to support their ancestral claims. Another example is the PCA-driven misconception of Ashkenazic Jews as a race or an isolated group.

“This study demonstrates that those results were unreliable,” says Eran Elhaik.

PCA is used across many scientific fields, but Elhaik’s study focuses on its use in population genetics, where the explosion in dataset sizes, driven by the falling cost of DNA sequencing, is particularly acute.

The field of paleogenomics, which seeks to learn about ancient peoples and individuals such as Copper Age Europeans, relies heavily on PCA. PCA is used to create a genetic map that positions the unknown sample alongside known reference samples. Thus far, the unknown samples have been assumed to be related to whichever reference population they overlap or lie closest to on the map.

However, Elhaik discovered that the unknown sample could be made to lie close to virtually any reference population just by changing the numbers and types of the reference samples, generating practically endless historical versions, all mathematically “correct,” but only one may be biologically correct.
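The mechanism described here can be illustrated with a deliberately tiny, hypothetical example. The sketch below (Python with NumPy; the population names, coordinates, and panel sizes are all invented for illustration, and it is not Elhaik's code or data) fits PCA on a reference panel only, projects one fixed test individual onto the first component, and reports the reference population closest to it. Changing nothing but how many samples each population contributes to the panel changes the answer.

```python
import numpy as np

# Hypothetical population centroids in a toy two-marker "genotype" space.
CENTROIDS = {
    "West": np.array([-2.0, 0.0]),
    "East": np.array([2.0, 0.0]),
    "North": np.array([0.0, 2.0]),
    "South": np.array([0.0, -2.0]),
}


def build_panel(counts):
    """Stack counts[name] copies of each centroid into a reference panel."""
    rows, labels = [], []
    for name, n in counts.items():
        rows.extend([CENTROIDS[name]] * n)
        labels.extend([name] * n)
    return np.array(rows), labels


def nearest_reference_on_pc1(panel, labels, sample):
    """Fit PCA on the reference panel only, project the sample onto PC1,
    and return the population whose mean PC1 score is closest to it."""
    mean = panel.mean(axis=0)
    _, _, vt = np.linalg.svd(panel - mean, full_matrices=False)
    pc1 = vt[0]  # its sign is arbitrary, but it is applied to every point alike
    ref_scores = (panel - mean) @ pc1
    sample_score = (sample - mean) @ pc1
    label_arr = np.array(labels)
    pop_means = {name: ref_scores[label_arr == name].mean()
                 for name in dict.fromkeys(labels)}
    return min(pop_means, key=lambda name: abs(pop_means[name] - sample_score))


test_sample = np.array([1.4, 1.6])  # the same individual in both analyses

# Panel A over-represents West/East; panel B over-represents North/South.
panel_a, labels_a = build_panel({"West": 5, "East": 5, "North": 1, "South": 1})
panel_b, labels_b = build_panel({"West": 1, "East": 1, "North": 5, "South": 5})

print(nearest_reference_on_pc1(panel_a, labels_a, test_sample))  # East
print(nearest_reference_on_pc1(panel_b, labels_b, test_sample))  # North
```

In the full two-marker space this individual is actually closest to North (distance about 1.46, versus about 1.71 for East), so panel A's answer comes from the axis that the over-represented populations impose on the projection. Real analyses use far more markers and usually more than one component, but the axes still depend on which reference samples are included and in what proportions.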

In the study, Elhaik has examined the twelve most common population genetic applications of PCA. He has used both simulated and real genetic data to show just how flexible PCA results can be. According to Elhaik, this flexibility means that conclusions based on PCA cannot be trusted since any change to the reference or test samples will produce different results.

Between 32,000 and 216,000 scientific articles in genetics alone have employed PCA for exploring and visualizing similarities and differences between individuals and populations and based their conclusions on these results.

“I believe these results must be re-evaluated,” says Elhaik.

“Techniques that offer such flexibility encourage bad science and are particularly dangerous in a world where there is intense pressure to publish. If a researcher runs PCA several times, the temptation will always be to select the output that makes the best story,” adds Prof. William Amos, from the University of Cambridge, who was not involved in the study.

Phys.org

How much does the “intense pressure to publish” research skew scientific articles away from objectivity towards attempts to show confirmation of acceptable, popular results?

At Evolution News: The Positive Case for Intelligent Design (series)

Although these posts at Evolution News may have been referenced previously, it seems timely to provide reminders of how ID serves as a “fruitful scientific paradigm.”

Science Stopper? Intelligent Design as a Fruitful Scientific Paradigm

When critics claim that research is not permitted to detect design because that would stop science, it is they who hold science back.

Does Intelligent Design Make Predictions or Retrodictions?

Another potential objection to the positive case for intelligent design, outlined in this series, might be that we’re not making positive predictions for design, but rather looking backwards to make after-the-fact retrodictions. With junk DNA, this is clearly not the case: ID proponents were predicting function years before biologists discovered those functions. The same could be said for the discovery of finely tuned CSI-rich biological sequences, something that design theory inspired scientists like Douglas Axe and Ann Gauger to investigate, and which they indeed have found (ref. 1). Winston Ewert’s dependency-graph model promises that ID can bear good fruit as we learn more about the gene sequences of organisms. Indeed, as we’ll see in an upcoming post, ID makes useful predictions that can guide future research in many scientific fields.

Does Darwinian Theory Make the Same Predictions as Intelligent Design?

One potential objection to the positive case for intelligent design, developed in this series, is that Darwinian evolution might make some of the same predictions as ID, making it difficult to tell which theory has better explanatory power. For example, in systematics, ID predicted reuse of parts in different organisms, but neo-Darwinism also predicts that different species may share similar traits, whether through inheritance from a common ancestor, convergent evolution, or loss of function. Likewise, in genetics, ID predicted functionality for junk DNA, but evolutionists might argue that noncoding DNA could evolve useful functions by mutation and selection. If neo-Darwinism makes the same predictions as ID, can we still make a positive argument for design? The answer is yes, and there are multiple responses to these objections.

First, not all the predictions generated by positive arguments for design are also made by Darwinian theory. For example, Michael Behe explains that irreducible complexity is predicted under design but predicted not to exist by Darwinism.

Using the Positive Case for Intelligent Design to Answer Common Objections to ID

ID’s positive arguments are based precisely upon what we have learned from studies of nature about the origin of certain types of information, such as CSI-rich structures. In our experience, high CSI or irreducible complexity derives from a mind. If we did not have these observations, we could not infer intelligent design. We can then go out into nature and empirically test for high CSI or irreducible complexity, and when we find these types of information, we can justifiably infer that an intelligent agent was at work.

Thus, ID is not based upon what we don’t know — an argument from ignorance or gaps in our knowledge — but rather, is based upon what we do know about the origin of information-rich structures, as testified to by the observed information-generative powers of intelligent agents.

The Positive Case for Intelligent Design in Physics

Observation (from previous studies): Intelligent agents can quickly find extremely rare or highly unlikely solutions to complex problems:

“Agents can arrange matter with distant goals in mind. In their use of language, they routinely ‘find’ highly isolated and improbable functional sequences amid vast spaces of combinatorial possibilities.” (ref. 1)

“Intelligent agents have foresight. Such agents can determine or select functional goals before they are physically instantiated. They can devise or select material means to accomplish those ends from among an array of possibilities. They can then actualize those goals in accord with a preconceived design plan or set of functional requirements. Rational agents can constrain combinatorial space with distant information-rich outcomes in mind.” (ref. 2)

Hypothesis (prediction): The physical laws and constants of physics will take on rare values that match what is necessary for life to exist (i.e., fine-tuning).

Experiment (data): Multiple physical laws and constants must be finely tuned for the universe to be inhabited by advanced forms of life. These include the strength of gravity (gravitational constant), which must be fine-tuned to 1 part in 10^35 (ref. 3); the gravitational force compared to the electromagnetic force, which must be fine-tuned to 1 part in 10^40 (ref. 4); the expansion rate of the universe, which must be fine-tuned to 1 part in 10^55 (ref. 5); the cosmic mass density at Planck time, which must be fine-tuned to 1 part in 10^60 (ref. 6); the cosmological constant, which must be fine-tuned to 1 part in 10^120 (ref. 7); and the initial entropy of the universe, which must be fine-tuned to 1 part in 10^(10^123) (ref. 8). The Nobel Prize-winning physicist Charles Townes observed:

Intelligent design, as one sees it from a scientific point of view, seems to be quite real. This is a very special universe: it’s remarkable that it came out just this way. If the laws of physics weren’t just the way they are, we couldn’t be here at all. (ref. 9)

Conclusion: The cosmic architecture of the universe was designed.

The evidence points strongly to design in the five scientific fields we have discussed: biochemistry, paleontology, systematics (the relationships between organisms), genetics, and physics. Even so, like the conclusions of all scientific theories, the conclusion of design is always held tentatively, subject to future scientific discoveries.

Notes

1. Meyer, “The Cambrian Information Explosion.”
2. Meyer, Darwin’s Doubt, 362-363.
3. Geraint Lewis and Luke Barnes, A Fortunate Universe: Life in a Finely Tuned Cosmos (Cambridge, UK: Cambridge University Press, 2016), 109.
4. John Leslie, Universes (London, UK: Routledge, 1989), 37, 51.
5. Alan Guth, “Inflationary Universe: a possible solution to the horizon and flatness problems,” Physical Review D 23 (1981), 347-356; Leslie, Universes, 3, 29.
6. Paul Davies, The Accidental Universe (Cambridge, UK: Cambridge University Press, 1982), 89; Leslie, Universes, 29.
7. Leslie, Universes, 5, 31.
8. Roger Penrose and Martin Gardner, The Emperor’s New Mind: Concerning Computers, Minds, and the Laws of Physics (Oxford, UK: Oxford University Press, 2002), 444-445; Leslie, Universes, 28.
9. Charles Townes as quoted in Bonnie Azab Powell, “‘Explore as much as we can’: Nobel Prize winner Charles Townes on evolution, intelligent design, and the meaning of life,” UC Berkeley NewsCenter (June 17, 2005), https://www.berkeley.edu/news/media/r... (accessed October 26, 2020).

Several other articles that present the positive case for Intelligent Design are available at Evolution News.

August 29, 2022

At Sci News: Transits through Milky Way’s Spiral Arms Helped Form Early Earth’s Continental Crust, Study Says

New research led by Curtin University geologists suggests that regions of space with dense interstellar clouds may send more high-energy comets crashing to the surface of the Earth, seeding enhanced production of continental crust. The findings challenge the existing theory that Earth’s continental crust was solely formed by processes inside our planet.

Earth is unique among the known planets in having continents, whose formation has fundamentally influenced the composition of the mantle, hydrosphere, atmosphere, and biosphere.

Cycles in the production of continental crust have long been recognized and generally ascribed to the periodic aggregation and dispersal of Earth’s continental crust as part of the supercontinent cycle.

However, such cyclicity is also evident in some of Earth’s most ancient rocks that formed during the Hadean (over 4 billion years ago) and Archean (4-2.5 billion years ago) eons.

Frequencies of many geological processes become more challenging to decipher in the early Earth given that the rock record becomes increasingly fragmentary with age.

“As geologists, we normally think about processes internal to the Earth being really important for how our planet has evolved,” said lead author Professor Chris Kirkland, a researcher in the School of Earth and Planetary Sciences at Curtin University.

“But we can also think about the much larger scale and look at extraterrestrial processes and where we fit in the Galactic environment.”

Professor Kirkland and colleagues investigated the cyclicity in the addition of new crust and its subsequent reworking through the hafnium isotopic record of dated zircon grains from the North Atlantic Craton in Greenland and the Pilbara Craton in Western Australia.

Using mathematical analysis, they uncovered a longer-period pattern corresponding to the ‘galactic year.’

They observed a similar pattern when looking at oxygen isotopes, bolstering their results.

“Studying minerals in the Earth’s crust revealed a rhythm of crust production every 200 million years or so that matched our Solar System’s transit through areas of the Milky Way with a higher density of stars,” Professor Kirkland said.

According to the team, our Solar System and the spiral arms of the Milky Way are both spinning around the Galaxy’s center, but they are moving at different speeds.

While the spiral arms orbit at 210 km/second, the Sun is cruising along at 240 km/second, meaning it is surfing into and out of spiral arms over time.

At the outer reaches of our Solar System, there is a cloud of icy planetesimals, the Oort cloud, orbiting the Sun at a distance of between 0.03 and 3.2 light-years.

As the Solar System moves into a spiral arm, interaction between the Oort cloud and the denser material of the spiral arms could send more icy material from the Oort cloud hurtling toward Earth.

While Earth experiences more regular impacts from the rocky bodies of the asteroid belt, comets ejected from the Oort cloud arrive with much more energy.

“That’s important because more energy will result in more melting,” Professor Kirkland said.

“When it hits, it causes larger amounts of decompression melting, creating a larger uplift of material, creating a larger crustal seat.”

Spherule beds — rock formations produced by meteorite impacts — are another key piece of evidence linking periods of increased crust generation to comet impacts.

The researchers observed that the ages of spherule beds are well-correlated with the Solar System’s movement into spiral arms around 3.25 and 3.45 billion years ago.

“The findings challenged the existing theory that crust production was entirely related to processes internal to the Earth,” Professor Kirkland said.

“Our study reveals an exciting link between geological processes on Earth and the movement of the Solar System in our Galaxy.”

“Linking the formation of continents, the landmasses on which we all live and where we find the majority of our mineral resources, to the passage of the Solar System through the Milky Way casts a whole new light on the formative history of our planet and its place in the cosmos.”

The study is published in the journal Geology.

Sci.News
