Michael J. Behe's Blog, page 211

April 16, 2021

Physicist and philosopher Bruce Gordon on panpsychism

Idealism says everything is an idea in the mind of God. Panpsychism says everything participates in consciousness (thus is not just an idea). From Bruce Gordon’s dialogue with Michael Egnor:

Michael Egnor: To just sort of backtrack a little bit, when we make the assertion that the fundamental reality of the universe is mental rather than physical… what is mental? That is, we have a sense of what physical things are. They have extension in space. They’re heavy. They have inertia, things like that. But what is a mental “thing”? And can we define mental things except by what they’re not?

Bruce Gordon: Well, of course a panpsychist would deny this, but I would say the distinction between mental things and physical things is that for mental things, there is something that it’s like to be that thing. Whereas for physical things, there’s nothing that it’s like to be that thing.

Note: This distinction was originally made by philosopher Thomas Nagel in an essay, “What Is It Like to Be a Bat?” (1974), exploring the factors that distinguish consciousness from life as such. One might ask, “Is there something that it ‘is like’ to be a fern or a virus? Or an electron?”

Bruce Gordon: Of course, the panpsychist says that there’s something to be like everything. Right down to the most fundamental constituents of reality that we would, from a different philosophical perspective, regard as entirely impersonal.

News, “A physicist and philosopher examines panpsychism” at Mind Matters News

The main reason that interest in panpsychism is growing is probably the inability of materialism to provide a coherent account of consciousness.

Note: Readers may remember Bruce Gordon from books like The Nature of Nature, which he co-edited with design theorist William Dembski.

Takehome: Bruce Gordon thinks that, for a thing to be conscious, there must be something that it “is like” to be that thing. Can panpsychism demonstrate that?

Copyright © 2021 Uncommon Descent . This Feed is for personal non-commercial use only. If you are not reading this material in your news aggregator, the site you are looking at is guilty of copyright infringement UNLESS EXPLICIT PERMISSION OTHERWISE HAS BEEN GIVEN. Please contact legal@uncommondescent.com so we can take legal action immediately.Plugin by Taragana

Common Descent, Common Design, and ID

Mung had asked me to do a thread on common descent and common design. So, anyway, I’ll get things started by stating my own thoughts on these ideas. I intend this to be an open discussion, but I also find having a starting idea tends to help get things started.

So, as I have maintained for the last decade, I believe there is no fundamental conflict between ID and common descent. That is, it is fully possible to hold to both at the same time.

In fact, I would say that ID *potentially solves* many problems that common descent would bring. For instance, if you have gaps that are unbridgeable by a traditional Darwinian mechanism, you could posit that there was a prior source of information that the organism used to bridge the gap.

That being said, I don’t myself hold to common descent. But if one rejects common descent, how does one explain the commonalities of organisms? The usual explanation is “common design”. That is, since the same designer was designing these organisms, we should expect repeated motifs to be used to solve various problems, various design ideas presented with variation, etc. The question is, is there a way to distinguish common design from common descent?

Well, to start with, common descent can actually be a mechanism for implementing common design. Therefore, inferring common design doesn’t necessarily tell you much about common descent.

Additionally problematic is that common descent actually depends on having a specific model of the origin of life. To understand this, let us say that instead of being rare, life comes about rather easily, but ALWAYS in the SAME WAY (i.e., builds the same starting organism, which we’ll call X). Therefore, if you find two X’s, there is NO WAY to tell if they are related by ancestry, despite the fact that they are identical! Let us then say that X is likely to branch to Y and then Z. Again, we could easily have two Zs that share NO common ancestors!

As you can see, inferring common descent as a definite conclusion requires a number of auxiliary hypotheses. These may or may not be reasonable, but they are there. However, *rejecting* common descent can be done with fewer.

Rejecting common descent can be done in one of two ways that I can think of:

1) Have a model that better fits the character space of organisms, or

2) Show that certain gaps are unbridgeable genetically.

#1 I believe has been done (at least on an initial basis) by Winston Ewert, with his dependency graph model. #2 is harder because, as I mentioned, in theory there could be repositories of information that help you close the gap. However, you could show that #2 *requires* information to close the gap, and then look and see if any such information repositories are known. Obviously, we might find information repositories in the future, but the question is always what we can do with the knowledge we do have, without worrying overly about the knowledge we don’t have.

Anyway, along these lines, I think that Stephen Meyer has done a fairly good job with #2 in Darwin’s Doubt, where he discusses the origin of the phyla.

In short, ID can work inside or outside a Common Descent framework. However, ID also allows more degrees of freedom in biological thought, and many in the ID movement feel that the evidence against common descent outweighs the evidence for it. That means that many of the similarities in organisms are the results of common design and not common descent, but there is no logical contradiction in asserting both.

Those are my thoughts – what are yours?

April 15, 2021

A contradiction in Charles Marshall’s “white smoker” origin-of-life argument?

Readers will recall Australian paleontologist Charles Marshall’s argument for horizontal gene transfer between protocells as sparking the origin of life. Anyway, here’s the contradiction, as it appears to Paul Nelson:

Marshall argues (40:10) that the universality of the genetic code, the protein translation machinery (the ribosome, etc.), and other apparently universally shared features of cells, support a scenario in which these features arose within “the same chimney” in an alkaline hydrothermal vent. In other words, we’re back to the single theater model of OOL.

But if the main explanatory attraction of the alkaline vent story is the “massively parallel process” of “hundreds of thousands or millions” (44:00 and following) of OOL theaters in which the RNA World began independently in various localities, then one sacrifices all that probabilistic advantage, and the benefits of horizontal transfer, by reverting to a single chimney to explain the genetic code. “We don’t have to rely on a ‘freak environment’” Marshall says (44:45) “to explain the origin of life.”

Here’s an imaginary dialogue:

Marshall: The universality of the genetic code points unmistakably to a single chimney — one locality where the code first arose.

Skeptic: Why a single chimney?

Marshall: The 64 codon assignments are “ridiculous details” (40:09) — chemically arbitrary.

Skeptic: So these codon assignments would not have happened more than once? Looks like one chimney got lucky.

Thus, unless I misunderstand (always a live possibility), we’re back to postulating a “freak environment,” meaning the OOL explanation is a one-off event after all. The chemical determinism of hundreds of thousands, or millions of alkaline chimneys operating in parallel disappears, and we’re back to one very lucky setting.

Paul Nelson, “Charles Marshall: Origin of Life Could Have Happened ‘Millions of Times’” at Evolution News and Science Today

Horizontal gene transfer will do a lot but not everything. 64 codons? A lot of freak in that environment.

Meanwhile, the smokers again:

See also: New origin of life thesis: Last Universal Common Ancestor (LUCA) wasn’t actually a cell. Marshall favors horizontal gene transfer as a key method of early development because ancestor–descendant evolution is a “very slow” (42:25) evolutionary process. HGT among multiple independent lineages, by contrast, allows a “vast exchange of information,” thus sharing innovations and leading to faster development. Okay. And in the midst of all that, Dawkins’s Selfish Gene got lost in a crowd somewhere and was never heard from again.

Researchers: There were billions of T Rexes

That sounds odd if they were apex predators but here is the reasoning:

With fossils few and far between, paleontologists have shied away from estimating the size of extinct populations. But UC Berkeley scientists decided to try, focusing on the North American predator T. rex. Using data from the latest fossil analyses, they concluded that some 20,000 adults likely roamed the continent at any one time, from Mexico to Canada. The species survived for perhaps 2.5 million years, which means that about 2.5 billion lived and died overall.

University of California – Berkeley, “How many T. rexes were there? Billions” at ScienceDaily

Give or take “a factor of 10,” we are told.

The UC Berkeley scientists mined the scientific literature and the expertise of colleagues for data they used to estimate that the likely age at sexual maturity of a T. rex was 15.5 years; its maximum lifespan was probably into its late 20s; and its average body mass as an adult — its so-called ecological body mass — was about 5,200 kilograms, or 5.2 tons. They also used data on how quickly T. rexes grew over their life span: They had a growth spurt around sexual maturity and could grow to weigh about 7,000 kilograms, or 7 tons.

From these estimates, they also calculated that each generation lasted about 19 years, and that the average population density was about one dinosaur for every 100 square kilometers.

Then, estimating that the total geographic range of T. rex was about 2.3 million square kilometers, and that the species survived for roughly 2 1/2 million years, they calculated a standing population size of 20,000. Over a total of about 127,000 generations that the species lived, that translates to about 2.5 billion individuals overall.

With such a large number of post-juvenile dinosaurs over the history of the species, not to mention the juveniles that were presumably more numerous, where did all those bones go? What proportion of these individuals have been discovered by paleontologists? To date, fewer than 100 T. rex individuals have been found, many represented by a single fossilized bone.

“There are about 32 relatively well-preserved, post-juvenile T. rexes in public museums today,” he said. “Of all the post-juvenile adults that ever lived, this means we have about one in 80 million of them.”

University of California – Berkeley, “How many T. rexes were there? Billions” at ScienceDaily
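For readers who want to check the arithmetic in the quoted passages, here is a rough back-of-envelope sketch in Python. It uses only the rounded figures given in the ScienceDaily summary; the variable and function names are made up for illustration and are not taken from the paper, which presumably works with unrounded inputs and uncertainty ranges.

```python
# Back-of-envelope check of the T. rex figures quoted above.
# Inputs are the rounded numbers from the news summary, not the paper's
# own data; all names here are illustrative assumptions.

RANGE_KM2 = 2_300_000          # estimated geographic range, square kilometers
KM2_PER_ANIMAL = 100           # about one animal per 100 square kilometers
STANDING_POPULATION = 20_000   # standing population quoted in the summary
GENERATIONS = 127_000          # number of generations quoted in the summary
MUSEUM_SPECIMENS = 32          # well-preserved post-juvenile specimens


def density_estimate(range_km2: int, km2_per_animal: int) -> int:
    """Animals alive at one time, implied by range and density alone."""
    return range_km2 // km2_per_animal


def total_individuals(standing: int, generations: int) -> int:
    """Total post-juvenile animals over the lifetime of the species."""
    return standing * generations


def one_in_n(total: int, found: int) -> float:
    """How many animals lived for each specimen now in a museum."""
    return total / found


if __name__ == "__main__":
    total = total_individuals(STANDING_POPULATION, GENERATIONS)
    print(density_estimate(RANGE_KM2, KM2_PER_ANIMAL))  # 23000
    print(total)                                        # 2540000000
    print(one_in_n(total, MUSEUM_SPECIMENS))            # 79375000.0
```

The range-times-density product comes out at 23,000, which the summary rounds to 20,000. The total of about 2.5 billion and the "one in 80 million" figure both follow from the quoted numbers, well inside the stated "factor of 10" uncertainty.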

It’s anyone’s guess how much of this will hold up, but positing some numbers to dispute is a worthwhile effort at sleuthing.

The paper is open access.

Dino tales:

Bill Dembski on why Erik Larson says there will be no AI overlords

One reason is “inference to the best explanation.” Computers, Erik J. Larson shows in his new book, The Myth of Artificial Intelligence: Why Computers Can’t Think the Way We Do (2021), can’t do some things by their very nature. A big gap is with “abduction,” also known as “inference to the best explanation.”

From Dembski:

With regard to inference, he shows that a form of reasoning known as abductive inference, or inference to the best explanation, is for now without any adequate computational representation or implementation. To be sure, computer scientists are aware of their need to corral abductive inference if they are to succeed in producing an artificial general intelligence.

True, they’ve made some stabs at it, but those stabs come from forming a hybrid of deductive and inductive inference. Yet as Larson shows, the problem is that neither deduction, nor induction, nor their combination are adequate to reconstruct abduction. Abductive inference requires identifying hypotheses that explain certain facts of states of affairs in need of explanation. The problem with such hypothetical or conjectural reasoning is that that range of hypotheses is virtually infinite. Human intelligence can, somehow, sift through these hypotheses and identify those that are relevant. Larson’s point, and one he convincingly establishes, is that we don’t have a clue how to do this computationally.

News, “No AI overlords?: What is Larson arguing and why does it matter?” at Mind Matters News

Abductive reasoning is part of design theory. Interesting that computers can’t do it.

See also: New book massively debunks our “AI overlords”: Ain’t gonna happen. AI researcher and tech entrepreneur Erik J. Larson expertly dissects the AI doomsday scenarios. Many thinkers have tried to stem the tide of hype but, as an information theorist points out, no one has done it so well.

and

Why Richard Dawkins thinks AI may replace us. He likes the idea because it is consistent with his naturalist philosophy. Dawkins does not advance an argument for why “anything that a human brain can do can be replicated in silicon,” apart from the fact that he is “committed to the view that there’s nothing in our brains that violates the laws of physics.”

Bruce Gordon and Michael Egnor: Why idealism is actually a practical philosophy

George Berkeley (1685–1753)

Not what you heard? Philosopher of science — and pianist — Bruce Gordon says, think again.

Michael Egnor: Is reality fundamentally more like a mind than a physical object?

Many are sure of the answer without understanding the question.

News, “Why idealism is actually a practical philosophy” at Mind Matters News

Basically, it’s the idea that material substances, as substantial entities, do not exist and are not the cause of our perceptions. They do not mediate our experience of the world. Rather, what constitutes what we would call the physical realm are ideas that exist solely in the mind of God, who, as an unlimited and uncreated immaterial being, is the ultimate cause of the sensations and ideas that we, as finite spiritual beings, experience intersubjectively and subjectively as the material universe.

Note: Philosopher George Berkeley (1685–1753) was a Church of England bishop in Ireland. Among his other accomplishments were his studies of human vision: “Berkeley’s empirical theory of vision challenged the then-standard account of distance vision, an account which requires tacit geometrical calculations. His alternative account focuses on visual and tactual objects. Berkeley argues that the visual perception of distance is explained by the correlation of ideas of sight and touch. This associative approach does away with appeals to geometrical calculation while explaining monocular vision and the moon illusion, anomalies that had plagued the geometric account.” – Internet Encyclopedia of Philosophy

About his idealism: “Berkeley’s system, while it strikes many as counter-intuitive, is strong and flexible enough to counter most objections.” – Stanford Encyclopedia of Philosophy

“All the choir of heaven and furniture of earth — in a word, all those bodies which compose the frame of the world — have not any subsistence without a mind.” ~ George Berkeley

Bruce Gordon: So we are, in effect, living our lives in the mind of God. And he is a mediator of our experience and of our inner subjectivity, rather than some sort of neutral material realm that serves as a third thing between us and the mind of God, so to speak.

Gordon thinks idealism (= reality is first and foremost a mental phenomenon) is defensible, reasonable, and too easily discarded.

(We enjoy setting the cat among the pigeons. But remember, the cat is serious.)

See also: Bill Dembski on how a new book expertly dissects AI doomsday scenarios

L&FP 40: Thoughts on [neo-?] Reidian Common Sense Realism

We live in a civilisation haunted by doubt and by hyperskepticism. One, where skepticism is deemed a virtue, inviting hyper forms in as champions of intellect. The result has gradually led to selective hyperskepticism that often uncritically takes the word of champions or publicists for Big-S Science, while doubting well founded but unfashionable analyses or even self-evident truths.

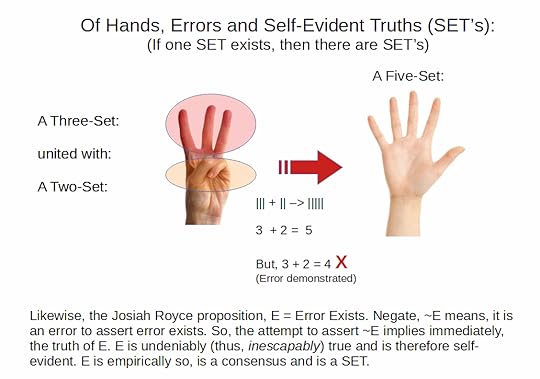

H’mm, just in case someone is unclear about or doubts that Self-Evident Truths exist, here is one . . . with an extra one for good measure:

(Of course, I also have argued that there are self-evident truths regarding duty; particularly, inescapable first duties of reason that actually govern responsible reason, argument and discussion, starting with duties to truth, right reason, warrant and wider prudence, etc. Thus, that there are MORAL SETs. For example, not even the most ardent objector can avoid appealing to these principles to try to give his arguments rhetorical/persuasive traction. Inescapable, so true and self-evident. I just note that for the moment.)

How, then, can we exorcise the ghosts of acid doubt and restore a better balance regarding knowledge claims?

I think Thomas Reid and other champions of “[refined] common sense” have some sound counsel. That is, I wish to champion a principle of responsible, common sense guided credulity:

PRINCIPLE OF “MODERATE” CREDULITY: It makes good sense to accept that our conscious self-awareness, sense of rational, responsible freedom (with first duties of reason) — “common sense,” so-called — and sense of being embodied as creatures in an objectively real physical world are generally warranted, though they may err or have limitations or oddities in detail.

Magenta (used in CMYK printing), violet and purple. Notice, Magenta seems a modified pinkish Red, Violet a modified Blue, Purple a reddened modified Blue

A case in point helps to clarify. Here, colour vision. There are two related but somehow distinct colours, violet and purple. The former is spectral [i.e. a “pure” colour coming from certain wavelengths of light], the latter is not. [Generally, purple is seen as a mix of red and blue, e.g. the Line of Purples on the CIE tongue of colour framework.] Why, then, the similarity, despite the difference?

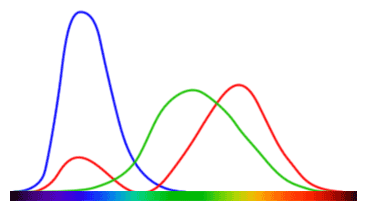

The answer turns out to depend on our colour sensors and onward processing in our eyes and visual system. Simplifying, it turns out that our Red response system has a secondary peak towards the high frequency end of the visible spectrum, near Blue:

Colour response of our visual system, as modelled. Notice the secondary peak for “Red”

Blue Jeans are Indigo

The result is that at the Blue, short wavelength end, we distinguish Blue-Green [e.g. Cyan], Blue, Indigo [cf. dark Blue Jeans], Violet. And the relationship with Purple becomes obvious: Purple superposes Red and Blue colours, which is typically going to be significantly redder than Violet.

So, we see here how our perception of colours is shaped by our embodiment and specifics of our bodily tissues and cells, but corresponds to objective phenomena. Indeed, the colour screen you are most likely using to view this on, works by superposing tiny pixels with Red, Green and Blue. If you were to print off on a modern colour printer, it will most likely blend dots of Cyan, Magenta, Yellow and Black, with the paper providing White.

I add, on metamerism, so we can see how two closely similar colours can be composed in quite distinct ways:

Here we see two ways to a brassy amberish colour. One is spectral, with a suitably low light level that excites our LMS cones in a certain pattern. The other uses Red and Green light sources that yield a similar stimulation. So, a simple look at the objects might not tell the difference. This is of course part of how RGB displays work [HT Wikipedia]

There is no good reason to airily sweep such away as being beyond some ugly, impassable gulch between what we can access internally through consciousness and a dubious external world of appearances. That is why we can take the principle that yes, we may err on particular points or details but on the whole there is no good reason to dismiss our conscious awareness — we symbolise C:( ) — and what it immediately presents, the self [= I] embedded in the world [We].

Let us symbolise:

C:(I UNION We)

So, we notice that it is our consciousness that carries everything else and instantly presents us with our sense of ourselves embedded in the world beyond our bodies. Our bodies, of course, are part of the physical world. Where, our reasonings are part of that self-awareness and are inextricably entangled with perceptions and language describing what we are aware of and perceive. The union is used as our bodies are embedded in the world and we are somehow present within it.

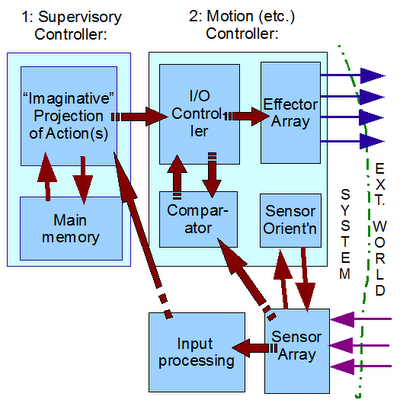

I have often pointed to Eng Derek Smith’s two-tier controller, cybernetic loop model as a context for discussing how that can be, esp. with quantum influence:

The Eng Derek Smith Cybernetic Model

In this light, the Plato’s Cave type shadow-show world of grand doubts or delusions can be set aside as self-defeating:

Plato’s Cave of shadow shows projected before life-long prisoners and confused for reality. Once the concept of general delusion is introduced, it raises the question of an infinite regress of delusions. The sensible response is to see that this should lead us to doubt the doubter and insist that our senses be viewed as generally reliable unless they are specifically shown defective. (Source: University of Fort Hare, SA, Phil. Dept.)

For, there is no natural firewall, so to give a general challenge to our consciousness, self awareness, sense of the self or perception of the world is to undermine the whole process. That is as opposed to having errors in detail or to recognising processes and limitations of sensing, neural network computation etc and the quantum physical substructure associated with that awareness. As, Violet vs Purple indicates.

In short, the point is to recognise limitations without falling into hyperskepticism or reductionism. This is of course a part of the old philosophical problem of the one and the many. In a sense, there is nothing new under the Sun.

In this context, I find Michael Davidson helpful as he discusses what he terms Reid’s Razor, in effect a manifesto of defeasible but heuristically generally effective common good sense reasoning:

[Reidian Common good sense as definition and razor, 1785:] “that degree of judgement which is common to men with whom we can converse and transact business”

Davidson shrewdly points out, how the Razor shaves:

Take a philosophical or scientific principle that is being applied to a particular situation: ask yourself whether you would be able to converse rationally and transact business with that person assuming that principle governed the situation or persons involved. If not dismiss the principle as erroneous or at least deeply suspicious. For example, suppose someone proposes that things-as-they-appear-to-be are not things-as-they-really-are. I do not think I would buy a used car from this man.

That seems a fair enough test of habitual adherence to first duties of reason — or otherwise. Y’know: to truth, right reason, prudence, sound conscience, neighbour, fairness and justice, etc.

In that context, he abstracts from Thomas Reid, a list of defeatable, default rules of thumb for credulity vs skepticism:

REID’S RULES OF COMMON SENSE REALISM

1) Everything of which I am conscious really exists [–> at minimum as an object of conscious awareness, and often as a particular or abstract entity, the presumption is, if I perceive a world with entities, it is by and large real]

2) The thoughts of which I am conscious are the thoughts of a being which I call myself, my mind, my person.

3) Events that I clearly remember really did happen.

4) Our personal identity and continued existence extends as far back in time as we remember anything clearly.

5) Those things that we clearly perceive by our senses really exist and really are what we perceive them to be.

6) We have some power over our actions and over the decisions of our will.

7) The natural faculties by which we distinguish truth from error are not deceptive.

8) There is life and thought in our fellow-men with whom we converse.

9) Certain features of the face, tones of voice, and physical gestures indicate certain thoughts and dispositions of mind.

10) A certain respect should be accorded to human testimony in matters of fact, and even to human authority in matters of opinion.

11) For many outcomes that will depend on the will of man, there is a self-evident probability, greater or less according to circumstances.

12) In the phenomena of Nature, what happens will probably be like what has happened in similar circumstances.

Davidson comments:

According to Reid, anyone who doubts these principles will be incapable of rational discourse and those philosophers who profess to doubt them cannot do so sincerely and consistently. Each of these principles, if denied, can be turned back on the denier. For example, although it is not possible to justify the validity of memory (3) without reference to premises that rest on memory, to dispense with memory as usually unreliable is just not philosophically possible. Reid qualifies some of these principles as not applying in all cases, or as the assumptions that we presume to hold when we converse, which may be contradicted by subsequent experience. For instance with regard to (10) Reid believes that most men are more apt to over-rate testimony and authority than to under-rate them; which suggests to Reid that this principle retains some force even when it could be replaced by reasoning.

I endorse Reid’s principles as normally true and what we must assume to be true to engage in argument and discussion. But, as Reid acknowledges, not all may be true all the time. I thus see Reid’s principles as epistemological rather than metaphysical. Psychologists might point to such things as optical illusions, false memory, attentional blink, hallucinations and various other interesting phenomena which might throw some doubt over some of Reid’s assertions. But these are nonessential modifiers that if entertained as falsifications of these principles would lead to the collapse of all knowledge. Very few philosophers have not acknowledged that the senses can deceive us or that reason is fallible, but to say the senses consistently deceive or that reason is impotent is too big a sacrifice. That the senses can deceive and reason is fallible is good reason to be cautious in our conclusions but not a good reason to dispense with observation and reason all together.

That seems to me to be a useful backgrounder and 101, if not quite a Manifesto. I think it deserves a place in the ongoing UD series on Logic and First Principles of Reason. END

April 14, 2021

Rob Sheldon on Larry Moran and the junk DNA

No, not a tale, rather a reflection.

Recently, we learned that biochemist emeritus and (at one time) Uncommon Descent commenter Larry Moran is writing a book (2022) arguing that human DNA is 90% junk. Non-functional junk.

Our physics commentator Rob Sheldon writes to reflect on the many meanings of the word “function” in biology:

If I recall correctly, the original definition of “functional” was whether that piece of DNA was turned into a protein, which depended on finding a “start” and a “stop” codon. The Human Genome Project reported that some 90% of the human genome didn’t have these “start/stop” features, and hence was “non-functional”.

a) However, there was extensive editing of RNA in the “spliceosome” with the removal of introns and customization of proteins such that one “start/stop” strand of DNA could make 100 distinct proteins. So the first piece of news is that the mapping is “one to many”.

b) Since the function of start/stop was to tell the DNA polymerase where to start/stop reading, then the fact that the “spliceosome” is spitting out chunks of RNA totally indiscriminately of start/stop codons, means that start/stop is not a good way to characterize functional DNA. Some pieces were still being transcribed into protein without these markers.

So that led to the second method of defining “functional”. Let’s cut out that piece of DNA using CRISPR (or more likely, blocking it with a small anti-sense RNA fragment). Is the edited critter still viable after that DNA is rendered inoperable?

c) Again, a person is “still viable” without legs, just not very competitive. So what exactly does viable mean? If a bacterium lives for 2 weeks but doesn’t reproduce, is it viable? If a bacterium lives indefinitely in a Petri dish but dies when injected into a host, is that viable? If a bacterium lives in a host but dies when the host runs a fever, is that viable?

So we are back to not really knowing when something is functional or not.

So that led to a third method of defining “functional”. Let’s see if there are any protein strands in the organism that are derived from that piece of DNA.

d) But then we discovered that RNA does a million other things — it builds the ribosome, it brings in marked amino acids, it regulates transcription, it carries information outside the cell. Just because a piece of DNA isn’t converted to protein doesn’t mean that it has no function.

So we are up to our fourth method of defining “functional”. If that DNA is turned into RNA then it is functional.

e) That’s where ENCODE comes in and says that 80% and more of the DNA is converted to RNA, which is where Dan Graur loses it and starts ranting about creationists and TV sets. He builds a toy population genetics model and says that 80% destroys his model, and therefore the data is wrong. (No, he isn’t unique, all theorists harbor dark thoughts about experimentalists.)

f) But as experimentalists showed all the new things RNA does, Dan’s model gets less and less compelling. For one example, a piece of “junk DNA” was found to regulate cancer. When that junk DNA was removed, the organism died early of cancer. It was viable, just not competitive.

My analogy is that DNA is like a tool box. Just because we don’t have a hammer in our hand all day, doesn’t mean that the hammer is junk. When you need a hammer, a screwdriver just won’t do. I’ve used pipe wrenches as a hammer in a pinch, but I’ll tell you, it was ugly. My brother spent ten years as a truck mechanic, and as he would gladly tell you, he was often hired because of his toolbox.

So why should the genome be any different? Shouldn’t the default be that if some item is found in his toolbox, it has a function? Why is Dan Graur so adamant to tell my brother that his toolbox is full of junk? Whose reputation is he spitting on anyway?

Dan Graur had announced in 2014 that he didn’t “do politeness” on this topic so maybe forewarned is forearmed.

Rob Sheldon is the author of Genesis: The Long Ascent and The Long Ascent, Volume II.

See also: Larry Moran to write new book: Claims genome is 90% junk. If he wants to pick a fight with ENCODE, grab a seat.

Mini VW Beetle spotted in Cleveland Museum of Natural History’s beetle collection

A suitable final resting place, for sure.

Wolf-Ekkehard Lönnig offers ten reasons birds are not living dinosaurs

Here. Lönnig is reviewing a book by Alan Feduccia, Romancing the Birds and Dinosaurs: Forays in Postmodern Paleontology (2020).

From the book’s blurb:

Evolutionary biologist Alan Feduccia is S. K. Heninger Distinguished Professor Emeritus at the University of North Carolina, Chapel Hill, where as Chair of Biology he pioneered the UNC Genome Sciences Building, dedicated in 2012. In the mid-1970s he was the first to propose an explosive evolutionary model for birds following the Cretaceous extinction event, now known as bird evolution’s Big Bang, and confirmed by whole genome analyses. He was also first to discover a vestigial first digit (thumb) in an avian embryo, a problem dating to the 1820s. Feduccia is the author of eight books, including notably the popular The Age of Birds (Harvard, 1980), and the award-winning The Origin and Evolution of Birds (Yale, 1996, 1999), as well as numerous research papers, and is a popular lecturer. In 2014 UNC established the Alan Feduccia Distinguished Professorship.

Life would be simpler and more emotionally satisfying if birds were surviving dinosaurs but maybe they aren’t.

Readers may remember Wolf-Ekkehard Lönnig from the time that carnivorous plant tried to eat Nick Matzke.

See also: Remember that Darwin-eating plant? Now threatening to eat Nick Matzke …

Carnivorous plants: After eating Darwin, they couldn’t resist further culinary adventures

The plants that eat vertebrate animals

Carnivorous plants: The 200-year headache.
