Paul Gilster's Blog, page 243

August 17, 2012

Are ‘Waterworlds’ Planets in Transition?

Ponder how our planet got its water. The current view is that objects beyond the ‘snow line,’ where water ice is available in the protoplanetary disk, were eventually pushed into highly eccentric orbits by their encounters with massive young planets like Jupiter. Eventually some of these water-bearing objects would have impacted the Earth. The same analysis works for exoplanetary systems, but the amount of water delivered to a potentially habitable planet depends, in this scenario, on the presence of giant planets and their orbits.

Dorian Abbot (University of Chicago) and colleagues Nicolas Cowan (Northwestern University) and Fred Ciesla (University of Chicago) note the consequences of this theory of water delivery. One is that because low-mass stars are thought to have low-mass disks, they would have fewer gas giants and would produce less gravitational scattering. In other words, we may find that small planets around M-dwarfs are dry. On the other hand, solar-mass stars and above could easily have habitable planets with amounts of water similar to the Earth.

Waterworlds and their Future

‘Waterworlds’ are planets that may have formed outside the snow line and then migrated to a position in the habitable zone. A planet like this could be completely covered in ocean. In any case, we can expect habitable zone planets could have a wide range of water mass fractions; i.e., the amount of water vs. the amount of land. The Abbot paper studies how variable land surfaces could influence planetary habitability, and the authors attack the question using a computer model for weathering and global climate, assuming an Earth-like planet with silicate rocks, a large reservoir of carbon in carbonate rocks, and at least some surface ocean.

Image: A waterworld may be a planet in transition, moving from all ocean to a mixture of land and sea. Credit: ESA – AOES Medialab.

Interestingly, the researchers found that partially ocean-covered planets like the Earth are not dependent upon a particular fraction of land coverage as long as the land fraction is greater than about 0.01:

We will find that the weathering behavior is fairly insensitive to land fraction when there is partial ocean coverage. For example, we will find that weathering feedbacks function similarly, yielding a habitable zone of similar width, if a planet has a land fraction of 0.3 (like modern Earth) or 0.01 (equivalent to the combined size of Greenland and Mexico). In contrast, we will find that the weathering behavior of a waterworld is drastically different from a planet with partial ocean coverage.

What that means is that planets with some continent and some ocean should have habitable zones of about the same width, no matter what the percentage of land to water. The conclusion is based upon the fact that silicate weathering feedback helped to maintain habitable conditions through Earth’s own history. The weathering of surface silicate rocks is the main removal process for carbon dioxide from the atmosphere, and it is temperature dependent, thus helping to buffer climate changes and expanding the size of the habitable zone around a star.

Seafloor weathering also occurs, but the authors point out that it is thought to be weaker than continental weathering and to depend on ocean chemistry and seawater circulation more than surface climate. That would mean carbon dioxide would be removed less efficiently from the atmosphere of a waterworld, which would produce higher CO2 levels and a warmer climate. A planet like this would be less able to buffer any changes in received solar radiation (insolation) and would thus have a smaller habitable zone.

Planetary Evolution at Work

All this is leading up to an absorbing conclusion about waterworlds. Assuming that seafloor weathering does not depend on surface temperature, planets that are completely covered by water can have no climate-weathering feedback. It follows that a waterworld has a smaller habitable zone than a planet with even a few small continents. But a waterworld may be, depending on its position in its solar system, a planet in a state of transition. Abbot and company posit a mechanism that would put a waterworld through a ‘moist greenhouse’ stage which would turn it into a planet with only partial ocean coverage, much like the Earth. Here what would have been complete loss of water is stopped by the exposure of even a small amount of land:

We find… that weathering could operate quickly enough that a waterworld could “self-arrest” while undergoing a moist greenhouse and the planet would be left with partial ocean coverage and a clement climate. If this result holds up to more detailed kinetic weathering modeling, it would be profound, because it implies that waterworlds that form in the habitable zone have a pathway to evolve into a planet with partial ocean coverage that is more resistant to changes in stellar luminosity.

A waterworld thus becomes an Earth-like planet after going through a ‘moist greenhouse’ phase — this occurs when a planet gets hot enough that large amounts of water are lost by photolysis in the atmosphere and hydrogen escapes into space. As water is lost and land begins to be exposed, the moist greenhouse phase can then be stopped by reducing the carbon dioxide through silicate weathering. This is the process the authors call ‘waterworld self-arrest.’

Although we have not performed a full analysis of the kinetic (non-equilibrium) effects, the order-of-magnitude analysis we have done indicates that a habitable zone waterworld could stop a moist greenhouse through weathering and become a habitable partially ocean-covered planet. We note that this process would not occur if the initial water complement of the planet is so large that continent is not exposed even after billions of years in the moist greenhouse state…

It’s also true that waterworlds at the outer edge of the habitable zone would not be in a moist greenhouse state in the first place. We’re likely to find waterworlds, then, but some of them may be in the process of transformation, becoming planets of continents and oceans. And any Earth-sized planet discovered near the habitable zone would be a good candidate to have a wide habitable zone and a stable climate if it has at least a small area of exposed land. That makes discovering the land fraction of any Earth-class planet we observe through future planet-finder missions a priority. The authors believe that missions of the Terrestrial Planet Finder class should be able to determine the land fraction by measuring reflected visible light.

The paper is Abbot et al., “Indication of insensitivity of planetary weathering behavior and habitable zone to surface land fraction,” accepted at The Astrophysical Journal (preprint). Thanks to Andrew Tribick for the pointer.

August 16, 2012

Barnard’s Star: No Sign of Planets

Barnard’s Star has always gotten its share of attention, and deservedly so. It was in 1916 that this M-class dwarf in Ophiuchus was measured by the American astronomer Edward Emerson Barnard, who found its proper motion to be the largest of any star relative to the Sun. That meant the star soon to be named for him was close to us, and unless we’re surprised by a hitherto unobserved brown dwarf, Barnard’s Star remains the closest star to our Sun after the Alpha Centauri triple system. Stick around long enough and Barnard’s Star will close to within 3.75 light years, but even if you make it to 10,000 AD or so, the star will still be too faint to be a naked eye object.

Image: Barnard’s Star, with proper motion demonstrated, part of an ongoing project to track the star. This image shows motion between 2004 and 2008. Credit: Paul Mortfield & Stefano Cancelli/The Backyard Astronomer.

Peter van de Kamp, working at Swarthmore College, had been looking for wobbles in the position of Barnard’s Star going all the way back to 1938, and for a time his results indicated at least one Jupiter-class planet there and possibly two. But other astronomers failed to find evidence for planets, and later work raised the likelihood that the changes in the star fields van de Kamp was looking at were caused by issues related to the refractor he was using. Now we have a new paper from Jieun Choi (UC Berkeley), whose team went to work on Doppler monitoring of Barnard’s Star and concluded that van de Kamp’s findings were erroneous.

The paper, however, gives a generous nod to van de Kamp’s work:

The two planets claimed by Peter van de Kamp are extremely unlikely by these 25 years of precise RVs. We frankly pursued this quarter-century program of precise RVs for Barnard’s Star with the goal of examining anew the existence of these historic planets. Indeed, Peter van de Kamp remains one of the most respected astrometrists of all time for his observational care, persistence, and ingenuity. But there can be little doubt now that van de Kamp’s two putative planets do not exist.

The one-planet model fails to fit as well when studied with radial velocity data from both Lick and Keck:

Even van de Kamp’s model of a single-planet having 1.6 MJup orbiting at 4.4 AU (van de Kamp 1963) can be securely ruled out. The RVs from the Lick and Keck Observatories that impose limits on the stellar reflex velocity of only a few meters per second simply leave no possibility of Jupiter-mass planets within 5 AU, save for unlikely face-on orbits.
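To get a feel for why limits of a few meters per second are so decisive, here is a rough back-of-the-envelope sketch of the reflex velocity van de Kamp's single planet would induce. It assumes a circular orbit and a stellar mass of about 0.16 solar masses for Barnard's Star; that mass, the physical constants and the function name are my own illustrative choices, not figures taken from the Choi paper.

```python
from math import sqrt, sin, radians

# Approximate physical constants (SI units).
G = 6.674e-11      # gravitational constant
M_SUN = 1.989e30   # solar mass, kg
M_JUP = 1.898e27   # Jupiter mass, kg
AU = 1.496e11      # astronomical unit, m

def rv_semi_amplitude(planet_kg, star_kg, a_m, inclination_deg=90.0):
    """Stellar reflex velocity (m/s) for a circular orbit.

    inclination_deg = 90 is edge-on (full signal); 0 is face-on (no signal).
    """
    total = planet_kg + star_kg
    relative_speed = sqrt(G * total / a_m)        # planet speed relative to star
    star_speed = planet_kg / total * relative_speed
    return star_speed * sin(radians(inclination_deg))

# ASSUMPTION: Barnard's Star taken as 0.16 solar masses (not from the source).
STAR = 0.16 * M_SUN
k_edge_on = rv_semi_amplitude(1.6 * M_JUP, STAR, 4.4 * AU)
k_near_face_on = rv_semi_amplitude(1.6 * M_JUP, STAR, 4.4 * AU, inclination_deg=5.0)
print(f"edge-on: {k_edge_on:.0f} m/s, 5 degrees from face-on: {k_near_face_on:.1f} m/s")
```

Under these assumptions the edge-on signal comes out at tens of meters per second, far above the limits quoted, and it only sinks toward the few-meters-per-second level when the orbit is tilted nearly face-on. That is the loophole the authors mention.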

The paper goes on to drill down to planets of roughly Earth mass, finding no evidence for such worlds. The result is interesting on a number of levels. We’re finding smaller planets with radii 2 to 4 times that of Earth around M-dwarfs regularly in data from the Kepler mission, in an area close in to the star where this new study of Barnard’s Star is most sensitive to Earth-mass planets. The transit data back up radial velocity data on M-dwarfs from the HARPS spectrograph, which have shown numerous planets with mass a few times larger than Earth’s around M-dwarfs. A 2011 study found the occurrence of super-Earths in the habitable zone is in the area of 41 percent for M-dwarfs, leading to what Jieun Choi and colleagues describe as ‘a lovely moment in science.’

After all, our two major planet-hunting techniques — Doppler measurements to detect planets by their effect on the host star, and brightness measurements for transit detection — both indicate that small planets are apparently common around M-dwarfs. By contrast:

…the non-detection of planets above a few Earth masses around Barnard’s Star remains remarkable as the detection limits here are as tight or tighter than was possible for the Kepler and HARPS surveys. The lack of planetary companions around Barnard’s Star is interesting because of its low metallicity. This non-detection of nearly Earth-mass planets around Barnard’s Star is surely unfortunate, as its distance of only 1.8 parsecs would render any Earth-size planets valuable targets for imaging and spectroscopy, as well as compelling destinations for robotic probes by the end of the century.

Let’s not forget that the early work of Peter van de Kamp had energized speculation about missions to Barnard’s Star. The British Interplanetary Society’s Project Daedalus chose it as a destination largely because of its supposed planetary system even though Alpha Centauri was considerably closer (4.3 light years vs. 6). Robert Forward toyed with Barnard’s Star in his fiction, writing Flight of the Dragonfly, later expanded as Rocheworld, to depict both the planetary system there and the technology needed to reach it.

Now we have 248 precise Doppler measurements of Barnard’s Star from the Lick and Keck Observatories saying that the habitable zone of this conveniently nearby star appears to be empty of planets of roughly Earth mass or larger. Let’s hope the Alpha Centauri stars yield a better result. The paper is Choi et al., “Precise Doppler Monitoring of Barnard’s Star,” available online.

August 15, 2012

100 Year Starship Public Symposium

“The future never just happened, it was created.” The quote is from Will and Ariel Durant, the husband and wife team who collaborated on an eleven-volume history of civilization that always used to be included in Book of the Month deals, which is how many of us got our copies. I’m glad to see the Durants’ quotation brought into play by the 100 Year Starship organization in the service of energizing space exploration. It’s a call to create, to work, to push our ideas.

100 Year Starship (100YSS) puts the Durants’ thinking into practice at the second 100 Year Starship Public Symposium, September 13-16 at the Hyatt Regency in Houston. The event promises academic presentations, science fiction panels, workshops, classes and networking possibilities for those in the aerospace community and the public at large. My hope is that the gathering will kindle some of the same enthusiasm we saw last October in Orlando, when the grant from DARPA that created the 100 Year Starship had yet to be assigned and the halls of the Orlando Hilton filled up with starship aficionados. For more on the event, check the 100YSS symposium page.

Image: The track chair panel from last year’s symposium in Orlando. Credit: 100YSS.

DARPA (the Defense Advanced Research Projects Agency) provided the seed money, but 100 Year Starship is now in the hands of Mae Jemison, whose Dorothy Jemison Foundation for Excellence will develop the idea with partners Icarus Interstellar (the people behind Project Icarus, the re-envisioning of the Project Daedalus starship) and the Foundation for Enterprise Development. And as we’ve discussed before, the 100 Year Starship refers not to a century-long star mission but to an organization that can survive for a century to nurture the starship idea, the thinking being that a century from now we will have made major progress on the interstellar front. It’s a gutsy and optimistic time frame and I hope it’s proven right.

The upcoming symposium will take place the week of the 50th anniversary of President Kennedy’s famous speech at Rice University exhorting Americans to land on the Moon, so it’s fitting that former president Bill Clinton has agreed to serve as honorary chair for the event. “This important effort helps advance the knowledge and technologies required to explore space,” said Clinton, “all while generating the necessary tools that enhance our quality of life on Earth.” 100YSS is collaborating with Rice University to integrate activities, which will include a salute to fifty years of human space flight at the Johnson Space Center.

The goal is for a multidisciplinary gathering, as a 100YSS news release makes clear:

100 Year Starship will bring in experts from myriad fields to help achieve its goal — utilizing not only scientists, engineers, doctors, technologists, researchers, sociologists and computer experts, but also architects, writers, artists, entertainers and leaders in government, business, economics, ethics and public policy. 100YSS will also collaborate with existing space exploration and advocacy efforts from both private enterprise and the government. In addition, 100YSS will establish a scientific research institute, The Way, whose major emphasis will be speculative, long-term science and technology.

The 2012 symposium is titled ‘Transition to Transformation…The Journey Begins.’ According to the organization, the goals for the gathering include:

Identifying research directions and priorities

Understanding methods to assess, transform and deploy space-related technologies to improve daily life

Fostering ways to identify and integrate partnerships and partnering opportunities, social structures, cultural awareness and global momentum essential to the 100 year challenge

I see that three track chairs have already been announced. David Alexander (Rice University) will be in charge of a special session on Future Visions, while Eric Davis (Institute for Advanced Studies – Austin) is to be track chair for Time-Distance Solutions. Amy Millman (Springboard Enterprises) chairs a special session called Interstellar Aspiration – Commercial Perspiration. More news on the other track chairs and session topics and papers as it becomes available.

The opportunity before us is to keep the Durants’ quotation in mind: “The future never just happened, it was created.” It’s true on the level of civilizations and on the level of individuals. I’m hoping you can create the opportunity to make it to Houston to see how 100 Year Starship is evolving and to join the scientists, engineers, public policy experts, entertainers and the rest who will be focusing on the issues of interstellar flight. These go well beyond propulsion to include life support, robotics, economics, intelligent systems, communications and more. If it’s anything like last year’s event in Orlando, this second symposium should help move interstellar studies forward.

August 14, 2012

Starships: ‘Skylark’ vs. the Long Haul

Centauri Dreams readers will remember Adam Frank’s recent op-ed Alone in the Void in the New York Times arguing that given the difficulty involved in traveling to the stars, humans had better get used to living on and improving this planet. ‘We will have no other choice,’ wrote Frank. ‘There will be nowhere else to go for a very long time.’ I responded to Frank’s essay in Defending the Interstellar Vision, to which Frank replied on his NPR blog.

Dr. Frank is an astrophysicist at the University of Rochester and author of the highly regarded About Time: Cosmology and Culture at the Twilight of the Big Bang (Free Press, 2011), a study of our changing conception of time that is now nearing the top of my reading stack. In his short NPR post, he makes a compelling point:

Even if we could get a starship up to 10% of light speed (which would be an epoch-making achievement) then the round trip to the nearest known star with a planet would still take 300 years (it’s Gliese 876d for all you exoplanet fans). It’s hard to imagine a culture driving significant changes in a significant fraction of humanity based on three-century long shipping delays! As much as I support moving forward in interstellar research, I can’t escape the conclusion that the theater of our future — for at least a few thousand years — will be here in the solar system. Not forever, perhaps, but millennia at least. And that is a long, long time.

On balance, what we are really disagreeing about is time, my own view being that interstellar flight may be a matter of several centuries away, while Frank takes a longer view. In any case, it’s interesting to speculate on what a society would look like if that 10 percent of lightspeed turned out to be attainable but remained more or less a maximum for space travel. We’ll doubtless find closer worlds than those around Gl 876 (even now the evidence for at least one planet around Epsilon Eridani seems strong, and we’ll see about Alpha Centauri). But a nearby world around Alpha Centauri B is still forty-plus years away at 10 percent of c.
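The arithmetic behind these figures is simple enough to sketch. The snippet below assumes a constant cruise speed with no time spent accelerating or braking, takes Alpha Centauri at the 4.3 light years used elsewhere on this site, and uses a round 15 light years for Gliese 876, a value I have chosen to match Frank's 300-year round trip. The function name is purely illustrative.

```python
def travel_years(distance_ly: float, speed_fraction_of_c: float) -> float:
    """Years to cover distance_ly light years at a constant fraction of c.

    Ignores acceleration, deceleration and relativistic time dilation,
    which is a small correction at 10 percent of lightspeed.
    """
    if speed_fraction_of_c <= 0:
        raise ValueError("speed must be a positive fraction of c")
    return distance_ly / speed_fraction_of_c

# ASSUMED distances in light years (approximate).
ALPHA_CENTAURI_LY = 4.3
GLIESE_876_LY = 15.0

one_way_alpha_cen = travel_years(ALPHA_CENTAURI_LY, 0.10)
round_trip_gl876 = 2 * travel_years(GLIESE_876_LY, 0.10)
print(f"Alpha Centauri, one way: {one_way_alpha_cen:.0f} years")
print(f"Gliese 876, round trip:  {round_trip_gl876:.0f} years")
```

Any realistic mission would take longer than this, since the ship has to spend part of the journey getting up to speed and, if it is to stop, slowing down again.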

Making Starflight Too Easy

From a cultural perspective, the discussion of interstellar flight has suffered from extremes. I think about something Geoff Landis once told me while he, Marc Millis and I were having lunch at an Indian restaurant near Glenn Research Center in Cleveland. We had come over from GRC after I interviewed the two there and we spent an enjoyable meal talking lightsails and Bussard ramjets and science fiction. A skilled science fiction writer himself, Landis felt that the genre hadn’t always been kind to the serious study of interstellar topics. I quoted him on this in my Centauri Dreams book:

“Science fiction has made work on interstellar flight harder to sell because in the stories, it’s always so easy… Somebody comes up with a breakthrough and you can make interstellar ships that are just like passenger liners. In a way, that spoiled people, because they don’t understand how much work is going to be involved in traveling to the stars. It’s going to be hard. And it’s going to take a long time.”

In Astounding Wonder (Univ. of Pennsylvania Press, 2012), John Cheng’s superb study of science fiction in the era between World Wars I and II, the author introduces Hugo Gernsback’s strategy of mingling science fact with fiction as a way into wonders that went beyond conventional physics. A case in point was Edward E. Smith’s ‘Skylark of Space’ series, published in Gernsback’s Amazing Stories in three installments in 1928. The tale, soon followed by popular sequels like ‘Skylark Three’ and ‘The Skylark of Valeron,’ went well beyond the science fiction of the period in its scale, invoking fast interstellar travel and a coming galactic civilization. Star travel mingled with romance and adventure to form ‘space opera.’

Image: The August, 1930 cover of Amazing Stories, containing the opening installment of Edward E. Smith’s ‘Skylark Three.’

Here we can see Landis’ point at work, just as we can see it on the bridge of the Enterprise in the Star Trek franchise. Gernsback wanted to keep science in the forefront but he accepted a vehicle — the starship — that defied all understanding of physics at the time. To do this, Skylark author Smith had to come up with an explanation for how his ships flew, and Gernsback’s readers critiqued it even though the device in question was pure fantasy.

Cheng explains:

…Smith provided a detailed discussion in his original story about the ‘intra-atomic energy’ within copper that powered the Skylark. Nonetheless attempts at more contemporary or realistic extrapolations of science were not distinctive features of his narratives. Their operatic sensibilities came from their adventure, the immense scale of their universe, and their moral and ethical consideration, drawing on their scope and perspective, of universal value. In the broader context of achieving universal civilization throughout the galaxy, full knowledge of the details of Einstein’s special theory of relativity might have seemed relatively unimportant. Readers, however, criticized Smith’s stories for their improper science or lack of scientific consideration in purely imagined devices, however small, but not for their grandiose social extrapolations.

The Starship as Plot Device

If Smith’s science didn’t stand up, readers like future science fiction critic P. Schuyler Miller (who explained the Lorentz-Fitzgerald equations in Amazing’s letter column as a way of critiquing Smith) agreed that the tale was a thundering good read. In the same way, James T. Kirk and his descendants gave television viewers a feel for interstellar flight at warp speed, even though many of those enjoying the ride believed that such journeys could be nothing more than plot devices. It was only in the mid-20th Century, and really not until the 1960s, that a case began to be made that actual interstellar flight might be something more than a fantasy.

Of course, the kind of travel the early interstellar pioneers were talking about was nothing like Smith’s or Gene Roddenberry’s. I think Landis’ point stands up well — the reflex is to dismiss interstellar travel because our cultural representations of it have made it look absurdly easy. We’ve now learned that it is possible in principle to send a payload to another star within a human lifetime, but we also see that such journeys would take huge amounts of power and decades of time. Adam Frank is surely right that a society making this kind of journey wouldn’t be working with a cohesive set of colonies with convenient re-supply, but with outposts of humans that would be completely self-sustaining, a new and deeply isolated branch of the human family.

It’s an open question whether our species will choose to make journeys at 10 percent of c, or whether we’ll find ways to ramp up the speed to shorten travel times. Another open question is whether, if we really do find that a low percentage of lightspeed is the best we can attain, humans rather than artificial intelligence (‘artilects’) would be the likely crew of such vessels. One thing that is happening in the public perception of interstellar flight, though, is that the gradually more visible study of these topics is opening people’s eyes to the possibilities, and the difficulties, of interstellar journeys. A science fictional movie treatment of just how challenging a journey at 10 percent of lightspeed would be is a project worth exploring.

August 13, 2012

Into the Uncanny Valley

After our recent exchange of ideas on SETI, Michael Chorost went out and read the Strugatsky brothers’ novel Roadside Picnic, a book I had cited as an example of contact with extraterrestrials that turns out to be enigmatic and far beyond human understanding. I’ve enjoyed the back and forth with Michael between Centauri Dreams and his World Wide Mind blog because I learn something new from him each time. In his latest post, Michael explains why incomprehensible technology isn’t really his thing.

A Sense of the Weird

Why? Michael grants the possibility that extraterrestrial intelligence may be far beyond our understanding. But in terms of science fiction and speculation in general, he favors what he calls ‘the uncanny valley,’ the sense of weirdness we get from a technology that is halfway between the incomprehensible and the known. A case in point is Piers Anthony’s novel Macroscope, in which an alien message overwhelms the minds of those who can understand it (people with IQs in the 150 range), causing some to go into a coma, some to die. Those of average intellect, unable to understand the message, remain unharmed by it.

Chorost explains:

The idea of a mind-destroying concept falls into the uncanny valley. It’s analogous to something we have, but it’s qualitatively, ungraspably better. It echoes Godel’s Theorem, which proves that it, the theorem itself, is unprovable. (I’m oversimplifying here.) A theorem that proves its own unprovability is a fascinating, mindbending thing. It upended mathematics when Godel published it in 1931. I have never understood it myself in a whole and complete moment of insight, and there’s a reason for that; it is fundamentally paradoxical. I can understand the pieces one at a time, but not the pieces put together. It gives me the feeling that if I ever did fully grasp all of it, my mind would be both much smarter, and broken. (Godel in fact went insane toward the end of his life.) The point is, we already know of ideas that probably exceed the mental capacity of most human beings. Macroscope invites us to consider the possibility that even higher-octane ideas would break our minds.

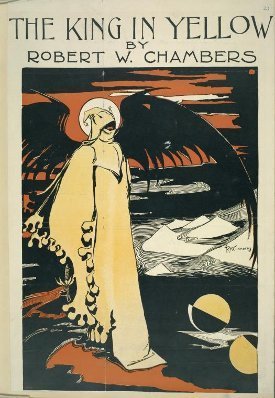

All of which brings to my mind Robert W. Chambers’ 1895 book The King in Yellow. Chambers was an artist and writer of considerable power. Indeed, Lovecraft biographer S. T. Joshi described The King in Yellow as a classic of supernatural literature, a sentiment echoed by science fiction bibliographer E. F. Bleiler. The book is a collection of unusual tales named after a play that becomes a theme in some, but not all of the stories. With Chambers you have truly entered into the uncanny valley, for when characters in his tales read the fictional play called The King in Yellow, they often go mad. The narrator reads the first act, throws the book into his fireplace and then, seeing the opening words of the second act, snatches it back and reads the entire volume, becoming possessed by its bizarre imagery.

When the French Government seized the translated copies which had just arrived in Paris, London, of course, became eager to read it. It is well known how the book spread like an infectious disease, from city to city, from continent to continent, barred out here, confiscated there, denounced by press and pulpit, censured even by the most advanced of literary anarchists. No definite principles had been violated in those wicked pages, no doctrine promulgated, no convictions outraged. It could not be judged by any known standard, yet, although it was acknowledged that the supreme note of art had been struck in The King in Yellow, all felt that human nature could not bear the strain, nor thrive on words in which the essence of purest poison lurked. The very banality and innocence of the first act only allowed the blow to fall afterward with more awful effect.

Image (above): A print by Robert Chambers illustrating his book The King in Yellow. Credit: New York Public Library/Art and Architecture Collection, Miriam and Ira D. Wallach Division of Art, Prints and Photographs.

Edgar Allan Poe’s ‘The Masque of the Red Death,’ with its decadent masquerade and spreading plague, was surely in Chambers’ mind when he wrote this. It’s chilling stuff, made all the more mysterious because the reader is left to fit the more prosaic tales in the volume into the larger themes of contact with a mighty force that can destroy the intellect. Not all the stories can be described as macabre but the feeling of strangeness persists, one that influenced H. P. Lovecraft and numerous later writers from James Blish, who set about writing a text for the mysterious play, to Raymond Chandler, who wrote a story of the same title using a narrator familiar with Chambers’ work.

Snaring the Mind in Language

But back to the extraterrestrial question, and the idea that contact may involve a deeply imperfect understanding of alien ideas that could be so powerful as to overwhelm our minds. What is it that could disrupt a human intellect? Michael Chorost talks about Shannon entropy as a way of working out the complexity of a message. That has me thinking about a Stephen Baxter story called “Turing’s Apples,” in which a signal has been received from the Eagle Nebula. We often think of such a signal being made as simple as possible so the beings behind it can communicate, but would they necessarily be communicating with us? What if we don’t get something simple, like a string of prime numbers, but an intercepted message intended for minds greater than our own?

In Baxter’s story, a SETI team has six years’ worth of data to work with. The signal technique is similar to terrestrial wavelength division multiplexing, with the signal divided into sections each roughly a kilohertz wide. Information theory says it is far more than just noise, but it defeats analysis. One character describes it as ‘More like a garden growing on fast-forward than any human data stream.’ The team has applied Shannon entropy analysis, which looks for relationships between signal elements. The method is straightforward:

You work out conditional probabilities: Given pairs of elements, how likely is it that you’ll see U following Q? Then you go on to higher-order ‘entropy levels,’ in the jargon, starting with triples: How likely is it to find G following I and N?

We can use mathematics, in other words, to work out the complexity of a language even if we can’t understand the language itself. That makes it possible to peg the languages dolphins use at third- or fourth-order entropy, whereas human language gets up to about nine. In Baxter’s story, the mind-boggling SETI message weighs in with an entropy level around order thirty.
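For readers who want to see what those conditional probabilities look like in practice, here is a minimal sketch of the calculation applied to a string of characters. It is my own illustration of n-gram conditional entropy, not the procedure used in Baxter's story or by any SETI or dolphin researcher, and the function name and sample text are invented for the purpose.

```python
from collections import Counter
from math import log2

def conditional_entropy(text: str, order: int) -> float:
    """Entropy in bits of a symbol given the (order - 1) symbols before it.

    order=1 is plain single-symbol entropy; order=2 asks how predictable a
    symbol is from its predecessor (the "U following Q" case); order=3 uses
    the two preceding symbols, and so on.
    """
    if order < 1 or len(text) < order:
        raise ValueError("need order >= 1 and at least `order` symbols")
    # Count every run of `order` consecutive symbols.
    ngrams = Counter(text[i:i + order] for i in range(len(text) - order + 1))
    total = sum(ngrams.values())
    # Count how often each (order - 1)-symbol context occurs in those runs.
    contexts = Counter()
    for gram, count in ngrams.items():
        contexts[gram[:-1]] += count
    entropy = 0.0
    for gram, count in ngrams.items():
        p_gram = count / total                       # P(context, next symbol)
        p_next = count / contexts[gram[:-1]]         # P(next symbol | context)
        entropy -= p_gram * log2(p_next)
    return entropy

# Illustrative sample only; a real analysis needs far more data.
sample = "the quality of the queen's quiet question was quite unequalled"
for n in (1, 2, 3):
    print(n, round(conditional_entropy(sample, n), 3))
```

The more a message's structure constrains what comes next, the further the entropy drops as the order rises. The catch is that short samples make high orders look artificially predictable, so a figure like 'order thirty' would demand an enormous amount of signal to establish.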

“It is information, but much more complex than any human language. It might be like English sentences with a fantastically convoluted structure — triple or quadruple negatives, overlapping clauses, tense changes.” He grinned. “Or triple entendres. Or quadruples.”

“They’re smarter than us.”

“Oh, yes. And this is proof, if we needed it, that the message isn’t meant specifically for us…. [T]he Eaglets are a new category of being for us. This isn’t like the Incas meeting the Spaniards, a mere technological gap. They had a basic humanity in common. We may find the gap between us and the Eaglets is forever unbridgeable…”

Chorost mentions Robert Sawyer’s WWW: Wake as containing a good discussion of Shannon entropy, which is why I’ve just acquired a copy. Meanwhile, the idea of a SETI message being so layered with meaning that we couldn’t possibly understand it does indeed put the chill down the spine that Chorost talks about, the same chill that Robert Chambers so effectively summons up in The King in Yellow, where language can suggest complexities that entangle the mind until merely human intellect overloads.

August 10, 2012

‘Deep Space Propulsion’: A Review

What I have in mind today is a book review, but I’ll start with a bit of news. The word from Houston is that Ad Astra Rocket Co., which has been developing the VASIMR concept from its headquarters not far from Johnson Space Center in Texas, has been making progress with its 200-kW plasma rocket engine prototype. VASIMR (Variable Specific Impulse Magnetoplasma Rocket) offers constant power throttling (CPT), which would allow it to vary its exhaust for thrust and specific impulse while maintaining a constant power level. CPT was demonstrated in a June test, as reported at the recent AIAA Joint Propulsion Conference in Atlanta and in trade publications like Aviation Week, a useful step forward for the program.

VASIMR in Deep Space

What to make of VASIMR’s chances? When assessing something like this, I turn to my reference library, and because I’ve recently been reading Kelvin Long’s Deep Space Propulsion, I wanted to see what he said about VASIMR. Long treats the subject in a chapter on electric propulsion, a form of propulsion in which a fuel is heated electrically, after which its charged particles are accelerated by electric and/or magnetic fields to provide thrust. The VASIMR wrinkle on electric propulsion is to use a fully ionized gas that has been heated enough to become a plasma, reaching temperatures higher than the norm for most electric systems.

Long’s explanation refreshed my memory on how all this works:

The engine is unique in that the specific impulse can be varied depending upon the mission requirement. It bridges the gap between high thrust low-specific impulse technology [and low thrust high-specific impulse technology] (e.g., like electric engines) and can function in either mode. The company who designs the VASIMR engine talks about possible 600 ton manned missions to Mars powered by a multi-MW nuclear electric generated VASIMR engine, reaching Mars in less than 2 months.

The proof is in the performance, of course, and we’ll see how VASIMR does in actual flight conditions. A 2015 flight prototype is in the works thanks to Ad Astra’s agreement with NASA. But Long’s book bears the subtitle “A Roadmap to Interstellar Flight,” so it’s intriguing to look at long-range developments with this technology. In theory, writes Long, a VASIMR drive could reach an exhaust velocity of up to 500 kilometers per second, corresponding to a specific impulse of 50,000 seconds, which translates into an Alpha Centauri crossing in 2200 years.

Now 2200 years may be progress — after all, Voyager-class speeds take 75,000 years to travel a similar distance — but if we’re talking about practical missions, VASIMR looks to be more valuable in the Solar System, assuming the technology lives up to Ad Astra’s expectations. But Long points something else out. A VASIMR engine could be considered a scaled down fusion development engine. The components that are similar to a fusion engine include the use of electromagnets to create a magnetic nozzle and the storage of low-mass hydrogen isotopes, along with techniques for energizing and ionizing a gas. Long sees VASIMR development as a way forward for understanding how to control plasmas in an engine for deep space.
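The relationship between exhaust velocity and specific impulse, and the scale of a Voyager-class crossing, can be checked with a short sketch. The distance to Alpha Centauri (4.37 light years) and the Voyager-class speed (17 km/s) are assumptions supplied here, not figures from Long's book. The cruise-time function assumes constant speed and no acceleration phase, so it does not try to reproduce Long's 2200-year estimate, which depends on mission assumptions not given here.

```python
G0 = 9.80665                      # standard gravity, m/s^2
LIGHT_YEAR_KM = 9.4607e12         # kilometers in one light year
SECONDS_PER_YEAR = 365.25 * 86400


def specific_impulse_s(exhaust_velocity_km_s):
    """Specific impulse in seconds: exhaust velocity divided by standard gravity."""
    return exhaust_velocity_km_s * 1000.0 / G0


def cruise_time_years(distance_ly, speed_km_s):
    """Years needed to cover distance_ly at a constant speed_km_s."""
    return distance_ly * LIGHT_YEAR_KM / speed_km_s / SECONDS_PER_YEAR


if __name__ == "__main__":
    # 500 km/s exhaust velocity, the upper figure quoted from Long
    print(round(specific_impulse_s(500.0)))
    # Assumed values: 4.37 light years at an assumed 17 km/s
    print(round(cruise_time_years(4.37, 17.0)))
```

The first result lands near the 50,000 seconds quoted above, and the second comes out in the same range as the 75,000-year figure for Voyager-class speeds.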

A Survey of Interstellar Concepts

Insights like that make Deep Space Propulsion an instructive read. I find myself going back to specific sections and finding things I missed. The book has textbook aspects, containing practice exercises after each chapter, but it’s also enlivened by its author’s passion for finding the right tools to make star missions a reality. Long thus works his way through the major options, as we’ve seen in various recent Centauri Dreams articles where I’ve quoted him. Solar sails and their beamed power ‘lightsail’ cousins make their appearance, and so do futuristic concepts like the Bussard ramjet and its numerous variants. Just keeping up with the ramjet idea and how it has mutated over the years is an exercise in itself, but Long also covers antimatter, nuclear pulse (Orion) and exotic ideas like Johndale Solem’s Medusa.

It’s fascinating to work through these chapters and see how various physicists and engineers have tackled the interstellar challenge, spinning out a concept that is seized upon by others, modified, hybridized, and re-purposed as problems emerge and others solve them. Long was the guiding force behind the launch of Project Icarus, which is a re-examination of the British Interplanetary Society’s classic starship design of the 1970s. It makes sense, then, that fusion, which powered Daedalus, should be a major concern and a key element of the book.

Here it’s easy to get lost in the kind of details that, for me, make interstellar theorizing so endlessly fascinating. The BIS engineers knew their spacecraft would be vast to accommodate the propulsion needs of a vehicle designed to make the 5.9 light year crossing to Barnard’s Star, then thought to have planets. Work at Los Alamos and Lawrence Livermore National Laboratory had developed the idea of pulsed micro-explosions of small pellets using laser or electron beams to produce the needed fusion reaction. Long notes the contribution of Friedwardt Winterberg, whose study of electron-driven ignition supplied the core ideas of the Daedalus engine.

Winterberg is still, thankfully, with us, producing interstellar work that we’ve talked about here on Centauri Dreams, a remarkable link to a classic era of discovery considering that his PhD advisor was Werner Heisenberg. Long goes through Winterberg’s contribution and its adoption by Daedalus, work which continues to inspire the Icarus team as they develop and extend the Daedalus design. What the BIS came up with in the ’70s was gigantic:

The propulsion system for Daedalus used electron beams to detonate 250 ICF [inertial confinement fusion] pellets per second containing a mixture of D/He3 fuel. The fusion products would produce He4 and protons, both of which could be directed for thrust using a magnetic nozzle. The D/He3 pellets would be injected into the chamber by use of magnetic acceleration, enabled by use of a micron-sized 15 Tesla superconducting shell around the pellet. The complete vehicle was to require 3 × 10¹⁰ pellets, which if manufactured over 1 year would require a production rate of 1,000 pellets per second.

Not only do you have extraordinary rates of production but you have the problem of finding the helium-3, which the Daedalus team addressed by considering a 20-year mining operation in the atmosphere of Jupiter. We’ll see how the Icarus designers solve the helium-3 issue, but it’s clear that the kind of nuclear starship envisioned by Daedalus would require an infrastructure throughout the Solar System that could reliably maintain large human crews in deep space and move industrial processes and products between the planets at will. There’s that ‘roadmap’ idea Long is talking about, as one developmental step builds upon another to make more advances possible. We can also hope that such advances teach us how best to contain our costs.
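The pellet figures Long quotes can be sanity-checked with simple arithmetic. The sketch below uses only the numbers in the passage (3 × 10¹⁰ pellets, one year of manufacturing, 250 detonations per second). The burn-duration figure is derived here on the assumption that pellets are consumed at a steady 250 per second throughout, which is an illustrative simplification.

```python
SECONDS_PER_YEAR = 365.25 * 86400

TOTAL_PELLETS = 3e10          # pellets required by the complete vehicle
DETONATIONS_PER_SECOND = 250  # quoted ignition rate


def production_rate_per_second(total_pellets, years):
    """Pellets per second needed to manufacture total_pellets in the given time."""
    return total_pellets / (years * SECONDS_PER_YEAR)


def burn_duration_years(total_pellets, pellets_per_second):
    """Years of continuous firing at a constant rate (an assumed simplification)."""
    return total_pellets / pellets_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    print(round(production_rate_per_second(TOTAL_PELLETS, 1.0)))
    print(round(burn_duration_years(TOTAL_PELLETS, DETONATIONS_PER_SECOND), 2))
```

The production rate comes out a little under the rounded 1,000 pellets per second in the quotation, and the same pellet supply would last a few years of continuous firing.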

Making the Case for Star Missions

Like Gregory Matloff and Eugene Mallove, whose 1989 book The Starflight Handbook reviewed all the interstellar options then at work in the literature, Long’s Deep Space Propulsion offers a mathematical treatment of certain key ideas, especially useful for those coming up to speed on fundamentals like the rocket equation. In addition to the math, Long throws in a good dose of interstellar advocacy. He’s keen on seeing design studies like Icarus continued around other possible technologies, so that we have constantly developing iterations of everything from nuclear rockets (NERVA) to microwave-beamed sails (Starwisp), a basis upon which future teams will finally build a starship. Along the way, generations of starship engineers learn and master their trade.

Could contests like the Ansari X-Prize be adapted for deep space missions? The book goes into some detail on how this model might work as a way of increasing the technological readiness of different propulsion schemes. But the process is lengthy:

…other authors have estimated the launch of the first interstellar probe will occur by around the year 2200 AD. This includes one author who looked at velocity trends since the 1800s. Factoring the likely uncertainties associated with the assumptions of these sorts of studies, particularly in relation to assuming linear technological progression, it is likely that the first interstellar probe mission will occur sometime between the year 2100 and 2200. To achieve this will require a significant advance in our knowledge of science or an improvement in the next generation propulsion technology. Given the tremendous scientific advances made in the twentieth century, it at least does not seem unreasonable to think that such a technology leap may in fact occur.

Creation of an Institute

Toward that end, Long has recently announced the formation of what he has called ‘the world’s first dedicated Institute for Interstellar Studies,’ whose logo you see here. The Institute is currently building a website and in a recent brochure states an accelerated goal for an interstellar mission:

“Our mission is to conduct activities or research relating to the challenges of achieving robotic and human interstellar flight. We will address the scientific, technological, political and social and cultural issues. We will seed high-risk high-gain initiatives, and foster the breakthroughs where they are required. We will work with anyone co-operatively from the global community who desires to invest their time, energy and resources towards catalyzing an interstellar civilization. Our goal is to create the conditions on Earth and in space so that starflight becomes possible by the end of the twenty first century or sooner by helping to create an interplanetary and then an interstellar explorer species. We will seek out evidence of life beyond the Earth, wherever it is to be found. We will achieve this by harnessing knowledge, new technologies, imagination and intellectual value to create innovative design and development concepts, defined and targeted public outreach events as well as cutting edge entrepreneurial and educational programs.”

We’ll track this as it develops — for more information, contact Interstellarinstitute@gmail.com. Meanwhile, those wanting to keep up with the primary interstellar concepts should keep a copy of Deep Space Propulsion at hand. I started this post with a look at VASIMR because the whole range of electric propulsion concepts is intricate and in many ways confusing. Long’s chapter on this goes into the major divisions between the thruster types and untangles the issues around Hall Effect thrusters, MPD thrusters, pulsed plasma and VASIMR in ways I could understand. This is a book that will get plenty of use, and my copy is already filling with penciled-in notes.

August 9, 2012

Hit by a Falling Star

About a year ago a French couple by the name of Comette returned to their home to find that a meteorite had struck their house while they were away on holiday. It could be said that the Comettes already had a celestial connection — if in name only — but now the heavens impinged upon their lives again, a fact they didn’t realize until their roof began to leak. Living in Draveil, about 12 miles south of Paris, the couple discovered that the space rock had blown right through a thick tile and wedged itself in glass wool insulation. It turns out to be an iron-rich chondrite some 4.57 billion years old.

France, according to this article in The Telegraph, receives the highest number of meteorites per capita in the world, and the Comettes have no intention of parting with this one. The story reminded me of 14-year-old Gerrit Blank, who was hit on the hand by a red-hot piece of rock about the size of a pea that went on to create a foot-wide crater in the ground. This was back in 2009 in Essen, Germany, and young Gerrit is doing just fine. I have no idea what the odds of being hit in a meteor strike are, much less of surviving one, but Gerrit now sports a three-inch scar on his hand that will be fodder for countless stories down the line.

Image: A meteor burning up in the atmosphere during the annual Perseid meteor shower, as seen by astronaut Ron Garan aboard the ISS in 2011. Credit: Ron Garan/NASA.

Celestial objects that hit our planet have also been on the mind of students at the University of Leicester, who have gone to work on the 1998 film Armageddon, in which Bruce Willis drills into an Earth-bound asteroid and detonates a nuclear device that splits the object in half. The planet is saved from destruction by this act as the remaining fragments are diverted. What the students were able to demonstrate was that Willis’ method wouldn’t have worked, not unless he had a bomb about a billion times stronger than anything ever detonated on Earth.

The Soviet Union’s ‘Big Ivan,’ says this University of Leicester news release, wouldn’t have stood any chance of splitting an asteroid with the properties described in the film. It turns out that 800 trillion terajoules of energy are needed to split the asteroid and drive its pieces away from our planet, while the total energy output of the Soviet blockbuster was 418,000 terajoules.
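The gap between the two energy figures is easy to verify. This small sketch uses only the numbers quoted from the news release; the function name is hypothetical.

```python
ENERGY_NEEDED_TJ = 800e12    # 800 trillion terajoules to split and divert the asteroid
BIG_IVAN_YIELD_TJ = 418_000  # total energy output of 'Big Ivan', in terajoules


def shortfall_factor(required_tj, available_tj):
    """How many times larger the required energy is than the available energy."""
    return required_tj / available_tj


if __name__ == "__main__":
    factor = shortfall_factor(ENERGY_NEEDED_TJ, BIG_IVAN_YIELD_TJ)
    print(f"{factor:.2e}")
```

The ratio comes out at roughly two billion, consistent in order of magnitude with the 'about a billion times stronger' description above.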

So much for Bruce Willis. Moreover, the students found that the asteroid would have had to be split very early in the process, almost immediately after it could have been detected. Now that’s a scenario I can work with — early detection is crucial because you have to allow time to get to the object in question, not to mention deploying whatever threat mitigation tools you bring with you. In the case of Armageddon, the depicted asteroid would surely have struck our planet because we lack the ability to see it soon enough or get to it fast enough.

The papers on this work were published in the University of Leicester Journal of Special Physics Topics and are accessible here. The journal comes out once a year and contains papers written in the final year of the students’ Master of Physics degree, so it serves as training for those planning to become actively involved in scientific publishing. In this case, the idea of taking a popular film and asking whether its science is valid is an excellent corrective. After all, movies like Armageddon reach huge audiences, but all too often contain scientific errors that compromise the story, even if a forgiving audience is ready to overlook them.

But back to smaller celestial debris. Back in 1954, a fragment the size of a grapefruit blasted through the roof of a house in Sylacauga, Alabama, eventually landing — after bouncing off a console radio — upon one Ann Elizabeth Hodges, who was asleep on her living room sofa. Until Gerrit Blank came on the scene, I’m aware of no one else being struck by a meteorite. Remarkably, the Hodges’ rented white-frame house was across the road from the Comet Drive-In Theater, which featured a neon sign showing a comet streaking through the heavens. The meteorite fragment is now found at the Alabama Museum of Natural History in Tuscaloosa.

All of which leads me to note that the Perseid meteors should be turning up late Saturday night and early Sunday morning (August 11-12). Alan MacRobert, a senior editor for Sky & Telescope, says that while the Perseids seem to emanate from the constellation Perseus, they can flash into view almost anywhere as long as the constellation is above the horizon. “So,” says MacRobert, “the best part [of] the sky to watch is wherever is darkest, probably straight up.” And don’t be too concerned about becoming the next Gerrit Blank — the Perseids are pieces of Comet Swift-Tuttle and are more like clumps of dust than lumps of rock and iron.

August 8, 2012

SETI: Contact and Enigma

I’m not surprised that Michael Chorost continues to stimulate and enliven the SETI discussion. In his most recent book World Wide Mind (Free Press, 2011), Michael looked at the coming interface between humans and machines that will take us into an enriched world, one where implants both biological and digital will enhance our experience of ourselves and each other. You’ll recall that it was a cochlear implant that restored hearing to this author, and doubtless propelled the thinking that led to this latest book. And it was the issue of hearing and communication that we looked at in an earlier discussion of Chorost’s views on SETI.

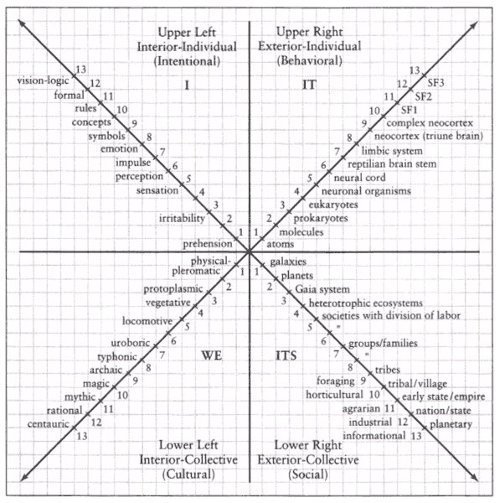

That conversation has continued in Michael’s World Wide Mind blog, as he ponders some of the comments his earlier ideas provoked on Centauri Dreams. In particular, how would we ever come to understand an extraterrestrial civilization if it differed fundamentally from us? Chorost thinks the problem is not biological, that no matter how aliens might look, we could study them to understand how they function. What would be far more tricky are questions of technology and culture, and here he brings into play Ken Wilber’s ideas about evolutionary development, notions that can be summarized in the chart immediately below.

Image: Based on Ken Wilber’s ideas of an ‘all-quadrants, all-levels’ model of evolutionary development, this chart shows four axes that trace the development of a society. Credit: Ken Wilber via Michael Chorost.

If you browse through the diagram, you’ll note the likelihood that a society at level 10 on one axis will be at level 10 on the other three. Chorost explains:

For example, level 10 in the upper-left quadrant is conceptual thinking. It’s aligned with level 10 in the other quadrants, which are the complex neocortex, the tribe/village, and magical modes of thought. When brains developed complex neocortexes, that was when they were able to sustain the social structures of tribes and villages, along with rituals of propitiation. One could also put it the other way around: tribal structures facilitated the development of the modern neocortex. These are all intimately related and mutually constituting. Each level on each axis is dependent upon, and enables, the others.

All of which seems to make sense, but what we should be pondering are the implications of extending each axis indefinitely. An advanced extraterrestrial species could easily register well beyond 10 on the chart along all four axes, making our need to relate human experience to what we encounter that much more difficult. We may, in fact, lack the neural structures to conceptualize and analogize these advanced levels because an alien culture would have developed modes of thought based on its own experience that are too remote from our more limited grid.

It’s always fascinating to portray encounters with aliens and always a bit aggravating when they show up in Hollywood as clearly human with a few tweaks to make them seem different. But even as we fuss with the producers of such shows for their lack of imagination (or budget), we’re missing the bigger picture. The problem is that we may find our galactic neighbors to be incomprehensible on every level. Here I think, as I often do, of the Strugatsky brothers’ novel Roadside Picnic, in which alien visitors have no apparent interest in humans at all, leaving behind them artifacts that no one can figure out. Their presence tells us that we are not alone, but their departure leaves us with questions of intent — what was their purpose here, and what are the ‘empties’ (the book’s term for artifacts) that the aliens have abandoned?

In fact, Roadside Picnic gets across the sense of the inexplicably alien better than almost any novel I have ever read — it should definitely be on the short-list for Centauri Dreams readers. The so-called ‘Visitation’ in the novel involves six different places where the aliens appeared, though they were never actually seen by people living nearby. The ‘zones’ of visitation are filled with unusual phenomena and bizarre items like the ‘pins’ found by the protagonist, who is himself a ‘stalker’ who finds and sells alien oddities like these:

In the electric light the pins looked shot with blue and would on rare occasion burst into pure spectral colors — red, yellow, green. He picked up one pin and, being careful not to prick himself, squeezed it between his finger and thumb. He turned off the light and waited a little, getting used to the dark. But the pin was silent. He put it aside, groped for another one, and also squeezed it between his fingers. Nothing. He squeezed harder, risking a prick, and the pin started talking: weak reddish sparks ran along it and changed all at once to rarer green ones. For a couple of seconds Redrick admired this strange light show, which, as he learned from the Reports, had to mean something, possibly something very significant…

But what? The novel is shot through with ambiguity and mystery. It’s the mystery of Wilber’s diagram extended indefinitely in all four directions, assuming structures of thinking that may be so far beyond our experience as to defeat our every inquiry. In human terms, we can imagine the difficulty in trying to explain sunset colors to a color-blind person. Chorost uses a much better example: The difference between a non-literate society and a literate one. Could science develop in the absence of a written language in which to couch its arguments and record its findings? And just how would you explain these questions, and the need to perform these functions with language, to someone who had never experienced reading or writing?

It’s possible to see ways around these problems, as Chorost explains:

I’d like to be optimistic. I’d like to think we’d be better off than preliterates puzzling over Wikipedia on an iPad. In his book The Beginning of Infinity, David Deutsch argues that humans crossed a crucial threshold with the scientific method. We now know that everything is explainable in principle, if we make the effort to understand it. Arthur C. Clarke famously said that any sufficiently advanced technology will seem like magic. This may be true, but we will not mistake it for magic. We have a postmodern openness to difference, a future-oriented culture, and well-established methodologies for studying the unknown. Our relative horizons are much larger than our ancient ancestors’ were.

I’m a bit more ambivalent. Yes, we would make every effort to explain an extraterrestrial culture. But I think that even our best methodologies will have trouble untangling motives and intent if confronted with a civilization substantially older than our own. The solution may not be in our hands but theirs (if they have hands). How interested will they be in establishing a relationship with us? In the Strugatskys’ novel, the aliens have come and gone, leaving behind them little more than enigma. Roadside Picnic gives us a glimpse of what an extraterrestrial encounter may be like unless the culture we meet finds us worth the effort to introduce itself.

August 7, 2012

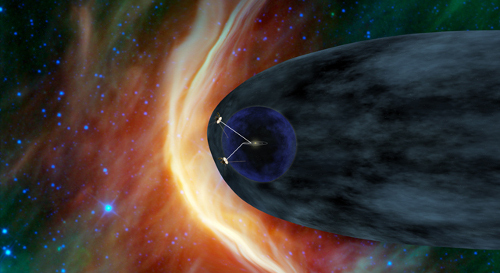

Voyager Update: Still in Choppy Waters

The continued explorations of our two Voyagers have earned these tough spacecraft the right to be considered an interstellar mission, which is how NASA now describes their journeys. Neither will come anywhere near another star for tens of thousands of years, but in this context ‘interstellar’ means putting a payload with data return into true interstellar space. Right now the Voyagers are still within the heliosphere, that great bubble opened out around our system by the solar wind, and the signs are multiplying that a transition is soon to occur.

Three measurements are going to mark the boundary crossing, and we’re seeing that two out of the three are in a state of rapid change. This JPL news release points out that on July 28, Voyager 1’s cosmic ray instrument showed a jump of five percent in the level of galactic cosmic rays the craft was encountering. In the second half of that same day, the level of lower-energy particles flowing from inside the Solar System dropped by half. Both measurements had recovered to their former state within three days, but you can see that Voyager 1 is moving through the chop and froth that marks a boundary somewhere up ahead.

Image: The Voyager interstellar mission, pushing up against the edge of the Solar System. Credit: NASA.

The third factor is the direction of the magnetic field, which researchers expect to change when true interstellar space is encountered. We should have an early analysis of the latest magnetic field readings some time in the next month. At some point, all three indicators are going to switch over to a more definitive state, but even then we’ll have to see how long the back-and-forth continues in what could be a ragged boundary area.

Noting the gradual increase of high-energy cosmic rays over a period of years and the corresponding drop in lower-energy particles, Voyager project scientist Ed Stone can only say: “The increase and the decrease are sharper than we’ve seen before, but that’s also what we said about the May data. The data are changing in ways that we didn’t expect, but Voyager has always surprised us with new discoveries.” In any case, the flow of lower-energy particles is expected to drop close to zero when the final transition occurs.

As of this morning, Voyager 1, the more distant craft, is 16 hours 46 minutes and 28 seconds light-travel time from Earth, corresponding to 121.479 AU. We’re used to thinking of today’s spacecraft as being far more complex than those of previous decades, but bear in mind that the two Voyagers each contain some 65,000 individual parts, their continued functioning a testament to the skill of the scientists and engineers who designed them. What will eventually silence them is a lack of power as their radioisotope thermoelectric generators lose their punch.
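A light-travel time can be turned into a distance with one multiplication. The sketch below uses the defined values of the speed of light and the astronomical unit; the function name is hypothetical.

```python
AU_KM = 149_597_870.7   # one astronomical unit in kilometers
C_KM_S = 299_792.458    # speed of light in kilometers per second


def light_time_to_au(hours, minutes, seconds):
    """Distance in AU that light covers in the given travel time."""
    total_seconds = hours * 3600 + minutes * 60 + seconds
    return total_seconds * C_KM_S / AU_KM


if __name__ == "__main__":
    # 16 hours 46 minutes 28 seconds, the light-travel time quoted above
    print(round(light_time_to_au(16, 46, 28), 3))
```

The result is roughly 121.0 AU, slightly less than the 121.479 AU given above. One possible explanation, offered here only as an assumption, is that the light-travel time is measured from Earth while the distance figure is measured from the Sun.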

Looking forward, the ultraviolet spectrometer is expected to function until mid-2013, when it will be turned off to save power. But as long as the spacecraft are still operational, the cosmic ray subsystem, the low-energy charged particle instrument, magnetometer, plasma subsystem, plasma wave subsystem and planetary radio astronomy instrument should continue to operate. We’ve got years of data return ahead and can hope for a window between the crossing into interstellar space and the loss of power around 2020 in which to see what surprises Voyager may yet spring about the environment future interstellar craft will have to move through.

August 6, 2012

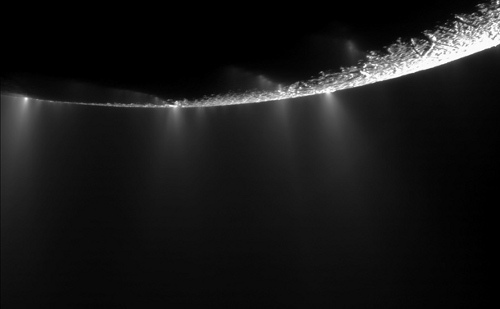

After Curiosity (whew!), Thoughts on Enceladus

At $2.5 billion, NASA’s Curiosity rover didn’t cost quite as much as Cassini ($3 billion), but what a relief to Solar System exploration both near and far to have it safely down at Gale Crater. This Reuters story tells me that 79 different pyrotechnic detonations were needed to release ballast weights, open the parachute, separate the heat shield, detach the craft’s back shell and perform the rest of the functions needed to make this hair-raising landing a success. All of this with a 14-minute one-way radio delay that left mission engineers as no more than bystanders.

Congratulations to the entire Curiosity team on this triumphant event! As we now move into the next several weeks checking the six-wheeled rover and its instruments out for exploration, let’s ponder future targets beyond the Red Planet. For at some point, no matter what we find on Mars, we’re going to want to push on to the outer planets, where intriguing moons like Titan, Europa and Enceladus await. The latter’s stock seems to be rising, as witness this recent article in The Guardian forwarded by Andy Tribick. Although they face major challenges, astrobiological missions to Enceladus offer rich prospects indeed. Two are being studied, and it’s easy to see why.

Increasingly, Enceladus seems to be a natural for astrobiology. Cassini has already shown us that the geysers spewing out of the Saturnian moon’s south pole contain complex organic compounds, and I like what NASA astrobiologist Chris McKay has to say about the place:

“It just about ticks every box you have when it comes to looking for life on another world. It has got liquid water, organic material and a source of heat. It is hard to think of anything more enticing short of receiving a radio signal from aliens on Enceladus telling us to come and get them.”

A subterranean ocean with complex organic chemicals of the sort suggested by the Cassini findings should be an interesting place indeed, especially since it seems to rise close to the surface at the south pole, accounting for the material being vented into space along the ‘tiger stripes,’ long cracks in the crust. All this material is feeding Saturn’s E-ring which, if Enceladus were suddenly to switch off, would likely disappear. McKay calls the venting of water and organics into space ‘an open invitation to go there.’

Image: Geysers at the south pole of Enceladus, as seen by Cassini in a November, 2009 flyby. Credit: NASA.

Answering the invitation would be the Enceladus Sample Return mission, a concept NASA scientists including McKay are putting together that would involve another Saturn orbiter, one that would make periodic flybys of Enceladus to collect plume samples that would eventually be returned to Earth. With Enceladus already pumping sub-surface material into space, a landing there becomes unnecessary. The Enceladus Sample Return mission builds on missions like Stardust, from which we gained expertise in retrieving sample materials from a comet’s tail. The mission is being designed to fit within the parameters of NASA’s Discovery program, which keeps the cost (without launch) at $500 million or below, about a fifth the price tag for Curiosity.

But not everyone agrees that a landing on Enceladus isn’t necessary. The German Aerospace Center (DLR) has been exploring concepts involving landing at the south pole and drilling through the ice. Its Enceladus Explorer would use an ice drill probe that would melt its way down to a depth of 100 to 200 meters to reach a water-bearing crevasse, sampling the liquid found there for microorganisms. A prototype of the device DLR is calling an IceMole has been used at the Morteratsch glacier in Switzerland and is soon to be tested in the Antarctic.

The complicated landing and drilling operation — not to mention the navigation issues faced by the IceMole as it moves through sub-surface ice — make operating the Enceladus Explorer look as risky as Curiosity’s landing on Mars. This excerpt from its project description online explains why the German team is anxious to put instrumentation on the moon’s surface and below:

…water rises to the surface through crevasses and fissures in the ice where it evaporates explosively and freezes instantly. The resulting ice fountains can shoot up to altitudes of several hundred kilometres before the ice particles slowly fall back to the moon’s surface. The microorganisms that could have evolved in the hypothetical ocean of liquid salt water under Enceladus’ icy crust, and have been swept away by the water spouting through the crevasses in the ice, would be extremely unlikely to survive; they would explode at the surface, and all that would remain are the organic compounds whose existence was verified by the Cassini spacecraft.

In other words, forget about microorganisms once they are exposed to the vacuum — all you will see are organic compounds. DLR’s IceMole, in contrast, would examine its samples in situ, sending results back to a base station on the surface that would also serve as the power source for the probe.

Would the chance to study actual living organisms give the edge to DLR’s proposal, or is a flyby the safer and cheaper alternative and the one we’re most likely to see achieved? Ideally we wind up with both missions funded, but no one would be so rash as to predict the mission choices likely from both NASA and ESA in a time of drastically reduced budgets. Let’s just say that Enceladus is staying in the news and that ingenious proposals are emerging for its study.

And it’s interesting to speculate on whether the IceMole technology being examined for DLR’s Enceladus Explorer might be adaptable to other interesting moons like Europa, Callisto or Ganymede. Each presents more problems than Enceladus, but a first-generation IceMole might some day grow into a far more powerful probe that could get a look at Europa’s deep ocean.
