Paul Gilster's Blog, page 226

May 7, 2013

Update on Starship Century Symposium

We had a successful launch last night of the ESTCube-1 satellite from Kourou, about which more tomorrow when I’ll be talking about electric sails and their uses both interplanetary and interstellar. But this morning, with the Starship Century Symposium rapidly approaching, I wanted to run this overview, which corrects and updates several things in the post I published a couple of weeks ago. Seats are still available for those of you in range. Thanks to Jim Benford for the following:

The Starship Century Symposium is the inaugural event at the new Arthur C. Clarke Center for Human Imagination at UC San Diego, Tuesday and Wednesday, May 21–22. The program is located here. The symposium celebrates the publication of the Benfords’ anthology, Starship Century. Jon Lomberg, the artist who collaborated extensively with Carl Sagan, has read the book and has this comment:

Starship Century is the definitive document of this moment in humanity’s long climb to the stars. Here you can find the physics, the astronomy, the engineering, and the vision that provides the surest guideposts to our future and destiny.

A number of luminaries will discuss a wide variety of starship–related topics derived from the book. The gathering features thinkers from a variety of disciplines including scientists, futurists, space advocates and science fiction writers. The program includes Freeman Dyson, Paul Davies, Robert Zubrin, Peter Schwartz, Geoffrey Landis, Ian Crawford, James Benford and John Cramer. Science fiction writers included are Neal Stephenson, Gregory Benford, Allen Steele, Joe Haldeman and David Brin. Other writers attending are Jerry Pournelle, Larry Niven and Vernor Vinge.

The book will be available for sale for the first time on Tuesday the 21st at a book signing immediately following the first day of the Symposium. Many of the authors in the anthology will be available there for signing. Following the first day of the Symposium there will be a reception featuring an exhibition of Arthur C. Clarke artifacts in the Geisel Library of UCSD.

In addition to the speakers, there are a number of panels. One, about the development of the Solar System, is ‘The Future of New Space’. Another is a panel on ‘Getting to the Target Stars,’ moderated by SETI celebrity Jill Tarter. The conclusion is a science fiction writers panel, ‘Envisioning the Starship Era,’ moderated by Gregory Benford and featuring Joe Haldeman, David Brin, Vernor Vinge and Jon Lomberg. At the conclusion of the Symposium there will be a book signing for other books of the authors present. There will also be a later book signing at Mysterious Galaxy bookstore a few miles from the University. It will feature Starship Century and the works of the other writers present.

The Symposium will be webcast and then archived. The webcast, which activates at the time of the event, is here.

The Benfords will donate the profits from sale of the book to interstellar research activities. They are currently working to establish a research committee that will award research contracts. The edition available at the symposium will be unique, a collector’s item. The book will then go into general distribution in the summer. The Benfords recommend purchasing through a link that will soon appear on the Starship Century website.

This route is optimal because it maximizes the percentage profit, thus maximizing the money available for research. As we all know, research dollars have been greatly lacking in the interstellar area, which is one reason why the interstellar organizations such as Icarus Interstellar, Tau Zero and the Institute for Interstellar Studies are volunteer organizations. The Benfords are planning a second symposium to be held in London in the fall.

May 6, 2013

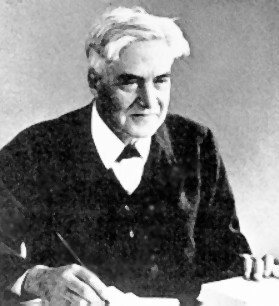

Robert Goddard’s Interstellar Migration

Astronautics pioneer Robert H. Goddard is usually thought of in connection with liquid fuel rockets. It was his test flight of such a rocket in March of 1926 that demonstrated a principle he had been working on since patenting two concepts for future engines, one a liquid fuel design, the other a staged rocket using solid fuels. “A Method of Reaching Extreme Altitudes,” issued by the Smithsonian in 1920, was a treatise that developed the mathematics behind rocket flight and discussed the possibility of a rocket reaching the Moon.

While Goddard’s work could be said to have anticipated many technologies subsequently developed by later engineers, the man was not without a visionary streak that went well beyond the near-term, expressing itself on at least one occasion on the subject of interstellar flight. Written in January of 1918, “The Ultimate Migration” was not a scientific paper but merely a set of notes, one that Goddard carefully tucked away from view, as seen in this excerpt from his later document “Material for an Autobiography” (1927):

“A manuscript I wrote on January 14, 1918 … and deposited in a friend’s safe … speculated as to the last migration of the human race, as consisting of a number of expeditions sent out into the regions of thickly distributed stars, taking in a condensed form all the knowledge of the race, using either atomic energy or hydrogen, oxygen and solar energy… [It] was contained in an inner envelope which suggested that the writing inside should be read only by an optimist.”

Optimism is, of course, standard currency in these pages, so it seems natural to reconsider Goddard’s ideas here. As to his caution, we might remember that the idea of a lunar mission discussed in “A Method of Reaching Extreme Altitudes” not long after would bring him ridicule from some elements in the press, who lectured him on the infeasibility of a rocket engine functioning in space without air to push against. It was Goddard, of course, who was right, but he was ever a cautious man, and his dislike of the press was, I suspect, not so much born out of this incident but simply confirmed by it.

In the event, Goddard’s manuscript remained sealed and was not published until 1972. What I hadn’t realized was that Goddard, on the same day he wrote the original manuscript, also wrote a condensed version that David Baker recently published for the British Interplanetary Society. It’s an interesting distillation of the rocket scientist’s thoughts that speculates on how we might use an asteroid or a small moon as the vehicle for a journey to another star. The ideal propulsion method would, in Goddard’s view, be through the control of what he called ‘intra-atomic energy.’

Image: Rocket pioneer Robert H. Goddard, whose notes on an interstellar future discuss human migration to the stars.

Atomic propulsion would allow journeys to the stars lasting thousands of years with the passengers living inside a generation ship, one in which, he noted, “the characteristics and natures of the passengers might change, with the succeeding generations.” We’ve made the same speculation here, wondering whether a crew living and dying inside an artificial world wouldn’t so adapt to the environment that it would eventually choose not to live on a planetary surface, no matter what it found in the destination solar system.

And if atomic energy could not be harnessed? In that case, Goddard speculated that humans could be placed in what we today would think of as suspended animation, the crew awakened at intervals of 10,000 years for a passage to the nearest stars, and intervals of a million years for greater distances. Goddard speculates on how an accurate clock could be built to ensure awakening, which he thought would be necessary for human intervention to steer the spacecraft if it came to be off its course. Suspended animation would involve huge changes to the body:

…will it be possible to reduce the protoplasm in the human body to the granular state, so that it can withstand the intense cold of interstellar space? It would probably be necessary to dessicate the body, more or less, before this state could be produced. Awakening may have to be done very slowly. It might be necessary to have people evolve, through a number of generations, for this purpose.

As to destinations, Goddard saw the ideal as a star like the Sun or, interestingly, a binary system with two suns like ours — perhaps he was thinking of the Alpha Centauri stars here. But that was only the beginning, for Goddard thought in terms of migration, not just exploration. His notes tell us that expeditions should be sent to all parts of the Milky Way, wherever new stars are thickly clustered. Each expedition should include “…all the knowledge, literature, art (in a condensed form), and description of tools, appliances, and processes, in as condensed, light, and indestructible a form as possible, so that a new civilisation could begin where the old ended.”

The notes end with the thought that if neither of these scenarios develops, it might still be possible to spread our species to the stars by sending human protoplasm, “…this protoplasm being of such a nature as to produce human beings eventually, by evolution.” Given that Goddard locked his manuscript away, it could have had no influence on Konstantin Tsiolkovsky’s essay “The Future of Earth and Mankind,” which in 1928 speculated that humans might travel on millennial voyages to the stars aboard the future equivalent of a Noah’s Ark.

Interstellar voyages lasting thousands of years would become a familiar trope of science fiction in the ensuing decades, but it is interesting to see how, at the dawn of liquid fuel rocketry, rocket pioneers were already thinking ahead to far-future implications of the technology. Goddard was writing at a time when estimates of the Sun’s lifetime gave our species just millions of years before its demise — a cooling Sun was a reason for future migration. We would later learn the Sun’s lifetime was much longer, but the migration of humans to the stars would retain its fascination for those who contemplate not only worldships but much faster journeys.

May 2, 2013

Starship Musings: Warping to the Stars

by Kelvin F. Long

The executive director of the Institute for Interstellar Studies here gives us his thoughts on Star Trek and the designing of starships, with special reference to Enrico Fermi. Kelvin is also Chief Editor for the Journal of the British Interplanetary Society, whose latest conference is coming up. You’ll find a poster for the Philosophy of the Starship conference at the end of this post.

Like many, I have been inspired and thrilled by the stories of Star Trek. The creation of Gene Roddenberry was a wonderful contribution to our society and culture. I recently came across an old book in the shop window of a store and purchased it straight away. The book was titled The Making of Star Trek, The book on how to write for TV!, by Stephen E. Whitfield and Gene Roddenberry. It was published by Ballantine Books in 1968 – the same year that the Stanley Kubrick and Arthur C. Clarke film 2001: A Space Odyssey came out. What with all this and Project Apollo happening, the late 1960s was a time to have witnessed history. Pity I wasn’t born until the early 1970s when the lunar program was winding down. I digress…

In this book, one finds the story of how Roddenberry tried to market his idea for a new type of television science fiction show. It is clear from reading it that Roddenberry was very much concerned for humankind and, in the spirit of Clarke’s positive optimism, he was trying to steer us down a different path. In this book we find out many wonderful things about the origins of Star Trek, including that the U.S.S. Enterprise was originally called the U.S.S. Yorktown and that Captain James T. Kirk was originally Captain Robert T. April. He was described as being “mid-thirties, an unusually strong and colourful personality, the commander of the cruiser”.

The time period in which Star Trek was said to be set was sometime between 1995 and 2995, close enough to our times for our continuing cast to be people like us, but far enough into the future for galaxy travel to be fully established. The Starship specifications were given as cruiser class, gross mass 190,000 tons, crew complement 203 persons, propulsion drive space warp, range 18 years at light-year velocity, registry Earth United Spaceship. The nature of the mission was galactic exploration and investigation and the mission duration was around 5 years. Reading these words today, we see that what Roddenberry was doing was laying the foundations for many future visions of what starships would be like.

To Craft a Starship

What I found absolutely fascinating about reading this book, however, was the process by which Roddenberry and team actually came up with the U.S.S. Enterprise design. Roddenberry met with the art department and in the summer of 1964 the design of the starship was finalised. The art directors included Pato Guzman and Matt Jefferies. Roddenberry’s instructions to the team on how to design the U.S.S. Enterprise were clear:

“We’re a hundred and fifty or maybe two hundred years from now. Out in deep space, on the equivalent of a cruise-size spaceship. We don’t know what the motive power is, but I don’t want to see any trails or fire. No streaks of smoke, no jet intakes, rocket exhaust, or anything like that. We’re not going to Mars, or any of that sort of limited thing. It will be like a deep-space exploration vessel, operating throughout our galaxy. We’ll be going to stars and planets that nobody has named yet”. He then got up and, as he started for the door, turned and said, “I don’t care how you do it, but make it look like it’s got power”.

According to Jefferies, the Enterprise design was arrived at by a process of elimination and the design even involved the sales department, production office and Harvey Lynn from the Rand Corporation. The various iterations produced many sheets of drawings – I wonder what happened to those treasures? The book shows some of the earlier concepts the team came up with.

Today, many in the general public take interstellar travel for granted, because Star Trek makes it look so easy with its warp drives and antimatter powered reactions. But for those of us who try to compute the problem of real starship design, we know the truth – that it is in fact extremely difficult. Whether you are sending a probe via fusion propulsion, laser driven sails or other means, the velocities, powers, energies are unreasonably high from the standpoint of today’s technology. But it is the dream of travelling to other stars through programs like Star Trek that keeps our candles burning late into the night as we calculate away at the problems. In time, I am sure we will prevail.

Fermi’s Enterprise?

There is an element of developing warp drive theory, however, that is usually neglected and I think it is now time to raise it – the implications for the Fermi Paradox. This is the calculation performed by the Italian physicist Enrico Fermi around 1950 that given the number of stars in the galaxy, their average distance, spectral type, age and how long it takes for a civilization to grow – intelligent extraterrestrials should be here by now, yet we don’t see any. Over the years there have been many proposed solutions to the Fermi paradox. In 2002 Stephen Webb published a collection of them in his book If the Universe is Teeming with Aliens…Where is Everybody? Fifty Solutions to the Fermi Paradox and the Problem of Extraterrestrial Life, published by Praxis.

One of the ways to address this is to ask if interstellar travel was even feasible in theory, and as discussed in my recent Centauri Dreams post on the British Interplanetary Society, Project Daedalus proved that it was. If you can design on paper a machine like Daedalus at the outset of the space age, what could you do in two or three centuries from now?

But even then travel times across the galaxy would be quite slow. The average distance between stars is around 5 light years, the Milky Way is 1,000 light years thick and 100,000 light years in diameter. Travelling at around ten percent of the speed of light the transit times for these distances would be 50 years, 10,000 years and 1 million years respectively. These are still quite long journeys and the probability of encountering another intelligent species from one of the 100-400 billion stars in our galaxy may be low. But what if you have a warp drive?
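The three transit times quoted above come from dividing each distance in light years by the cruise speed expressed as a fraction of the speed of light. A minimal sketch of that arithmetic follows; it assumes a constant cruise speed with no acceleration or deceleration phases, ignores relativistic effects (small at ten percent of light speed), and the function and variable names are illustrative only.

```python
def transit_time_years(distance_ly: float, speed_fraction_c: float) -> float:
    """Years needed to cover distance_ly at a constant fraction of light speed."""
    if speed_fraction_c <= 0:
        raise ValueError("speed must be a positive fraction of c")
    # One light year is covered in one year at c, so time scales as 1 / fraction.
    return distance_ly / speed_fraction_c


# Distances taken from the paragraph above, in light years.
DISTANCES_LY = {
    "average distance between stars": 5,
    "thickness of the Milky Way": 1_000,
    "diameter of the Milky Way": 100_000,
}

# Cruise speed of ten percent of the speed of light, as in the text.
TRANSIT_YEARS = {
    name: transit_time_years(distance, 0.1)
    for name, distance in DISTANCES_LY.items()
}

for name, years in TRANSIT_YEARS.items():
    print(f"{name}: about {years:,.0f} years")
```

Changing the speed fraction shows how quickly the picture shifts: at one percent of light speed every figure grows tenfold.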

The warp drive would permit effective speeds equivalent to arbitrarily large multiples of the speed of light, so that you could cover galactic distances fairly quickly. Just as Project Daedalus had to address whether interstellar travel was feasible as an attack on the Fermi Paradox problem, so the warp drive raises yet another question – are arbitrarily large speeds possible, exceeding even the speed of light?

If so, then our neighbourhood should be crowded by alien equivalents of the first Vulcan mission that landed on Earth in the Star Trek universe. To my mind, if we can show in the laboratory that warp drive is feasible in theory as a proof of principle, and yet we don’t discover intelligent species outside of the Earth’s biosphere, then of the many solutions to the Fermi paradox, perhaps there are only two remaining. The first would be some variation on the Zoo hypothesis, and the second is that we are indeed alone on this pale blue dot called Earth. Take your pick what sort of a Universe you would rather exist in.

May 1, 2013

Stars for JWST

Red dwarfs or brown? The question relates to finding targets as the James Webb Space Telescope gets closer to launch. We’re going to want to have a well defined target list so that the JWST can be put to work right away, and part of that effort means finding candidate planets the telescope can probe. Yesterday’s white paper on a proposed search for brown dwarfs using the Spitzer Space Telescope lined up a number of reasons why these objects are good choices:

* for a given planetary equilibrium temperature, the orbit gets shorter with decreasing primary mass, increasing the probability of transit and providing 50+ occultations per year (and 50+ transits);

* the planet to brown dwarf size ratio means transiting rocky planets produce deep transits and permit the detection of planets down to Mars’ size in a single transit event when using Spitzer;

* the reliability of the detection is helped by the absence of known false astrophysical positives: brown dwarfs have very peculiar colors, small sizes, and being nearby, have a high proper motion allowing to check what is within their glare

All this is in addition to the fact that the fainter the star, the greater the contrast between the primary and the planet. But interest in red dwarfs remains high as well. Here again we are dealing with small stars where the habitable zone can be closer than the distance between Mercury and the Sun, making for easier transit detections than with G or K-class stars. Daniel Angerhausen (Rensselaer Polytechnic Institute) and team are thus proposing a project of their own called HABEBEE, for “Exploring the Habitability of Eyeball Exo-Earths.”

Eyeball? This online feature in Astrobiology Magazine lays out the background. Angerhausen knows that tidal lock will set in on a closely orbiting planet, with the night side likely covered in ice while the day side could offer, at the right orbital distance, clement conditions for life. The article cites the disputed candidate planet Gliese 581g as a possible ‘eyeball’ world, but there seems to be little need to single out such a controversial object. Planets meeting this description should be relatively common given that M-dwarfs make up 70-80 percent of all stars in the galaxy, so that it’s possible they are the most abundant locations of life.

“A little bit closer to the star — that is, hotter — they would completely thaw and become waterworlds,” Angerhausen tells Astrobiology Magazine’s Charles Choi; “[A] little bit further out in the habitable zone — that is, colder — they would become total iceballs just like Europa, but with a potential for life under the ice crust. These planets — water, eyeball or snowball — will most probably be the first habitable planets we will find and be able to characterize remotely. That’s why it is so important to study them now.”

Image: This artist’s impression shows a sunset seen from the super-Earth Gliese 667 Cc. The brightest star in the sky is the red dwarf Gliese 667 C, which is part of a triple star system. The other two more distant stars, Gliese 667 A and B appear in the sky also to the right. Finding planets in the habitable zones of red dwarfs and characterizing their atmospheres will be a major component in our search for life in the universe. Credit: ESO/L. Calçada.

There’s plenty to work with, especially given the flare situation on younger M-dwarfs, which can cause ultraviolet radiation spikes of up to 10,000 times normal levels. We have copious information about M-dwarf flares that has been gathered by observers over the years, while new observations of likely JWST candidate stars should help us characterize those more likely to host habitable planets. Radiation experiments involving the Brazilian National Synchrotron Light Source at Campinas will help the team understand the effects of radiation on ice.

The plan is to put together various models for red dwarf planets in the habitable zone that will help astronomers predict how well existing and future telescope surveys can find them. The team also hopes to travel to Antarctica to gather microbes in places that are transition zones between ice and water. They’ll use a planetary simulation chamber that was originally designed at the Brazilian Astrobiology Laboratory to mimic conditions on Mars. There the Antarctic microbes can be tested under various conditions of radiation and atmosphere to simulate M-dwarf possibilities.

So many unknowns, no matter what kind of star we home in on. I suspect that Amaury H.M.J. Triaud (Kavli Institute for Astrophysics & Space Research), who heads up the brown dwarf team, and Angerhausen himself would agree that we can’t be doctrinaire about where we look for life. Their proposals focus on brown or red dwarfs respectively not because they think these are the only possibilities for life, but because a case can be made that finding rocky worlds in the habitable zones of such stars will be quicker and the planets more easily characterized than the alternatives. The more we learn now, the better we’ll be able to use our future instruments.

April 30, 2013

Hunting for Brown Dwarf Planets

Brown dwarfs fascinate me because they’re the newest addition to the celestial menagerie, exotic objects about which we know all too little. The evidence suggests that brown dwarfs can form planets, but so far we’ve found only a few. Two gravitational microlensing detections on low mass stars have been reported, one of which is a 3.2 Earth-mass object orbiting a primary with mass of 0.084 that of the Sun, putting it into the territory between brown dwarfs and stars. The MEarth project has uncovered a planet 6.6 times the mass of the Earth orbiting a 0.16 solar mass star.

Now a new proposal to use the Spitzer Space Telescope to hunt for brown dwarf planets is available on the Net, one that digs into what we’ve found so far, with reference to the discoveries I just mentioned:

Accounting for their low probabilities, such detections indicate the presence of a large, mostly untapped, population of low mass planets around very low mass stars (see also Dressing & Charbonneau (2013)). Arguably the most compelling discovery is that of the Kepler Object of Interest 961, a 0.13 [solar mass] star, orbited by a 0.7, a 0.8 and a 0.6 R⊕ on periods shorter than two days (Muirhead et al. 2012). The KOI-961 system, remarkably, appears like a scaled-up version of the Jovian satellite system. This is precisely what we are looking for.

The plan is to use the Spitzer instrument to discover rocky planets orbiting nearby brown dwarfs, the idea being that the upcoming mission of the James Webb Space Telescope will need a suitable target list, and soon, for it to be put to work on probing the atmospheres of exoplanets. A 5400 hour campaign is the objective, the goal being to detect a small number of planetary systems with planets as small as Mars. Interestingly, the team is advocating a rapid release of all survey data to up the pace of exoplanet research and compile a database for further brown dwarf studies.

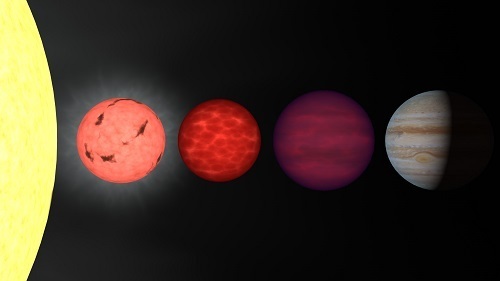

Image: The stellar menagerie: Sun to Jupiter, via brown dwarfs. Credit: Space Telescope Science Institute.

Brown dwarfs turn out to be excellent targets as we try to learn more about rocky planets around other stars. Studying the photons emitted by an atmosphere during an occultation requires relatively close targets, and as the paper on this work points out, the fainter the primary, the better the contrast between the central object and the planet. And around brown dwarfs we can expect deep transits that allow us to detect objects down to Mars size with Spitzer’s equipment. The paper also notes that brown dwarfs older than half a billion years show a near constant radius over their mass range, making it easier to estimate the size of detected planets.
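The point about deep transits can be made concrete with the standard first-order approximation that the fractional dimming during a transit is the square of the planet-to-primary radius ratio. The sketch below is an illustration under stated assumptions, not a calculation from the white paper: it takes the brown dwarf's radius to be roughly that of Jupiter, uses rounded reference radii, and ignores limb darkening and the brown dwarf's own variability.

```python
# Rounded mean radii in kilometres (reference values, not taken from the white paper).
R_SUN_KM = 695_700.0
R_JUPITER_KM = 69_911.0
R_MARS_KM = 3_390.0

# Assumption for illustration: an old brown dwarf is about the size of Jupiter.
ASSUMED_BROWN_DWARF_RADIUS_KM = R_JUPITER_KM


def transit_depth(planet_radius_km: float, primary_radius_km: float) -> float:
    """Fraction of the primary's light blocked when the planet crosses its disc."""
    return (planet_radius_km / primary_radius_km) ** 2


# The same Mars-sized planet in front of two very different primaries.
MARS_OVER_BROWN_DWARF = transit_depth(R_MARS_KM, ASSUMED_BROWN_DWARF_RADIUS_KM)
MARS_OVER_SUN = transit_depth(R_MARS_KM, R_SUN_KM)

print(f"Mars-sized planet, brown dwarf primary: {MARS_OVER_BROWN_DWARF:.3%}")
print(f"Mars-sized planet, Sun-sized primary:   {MARS_OVER_SUN:.5%}")
print(f"Depth ratio: {MARS_OVER_BROWN_DWARF / MARS_OVER_SUN:.0f}x")
```

Under these assumptions a Mars-sized planet dims the brown dwarf by a little over 0.2 percent, roughly a hundred times the signal the same planet would produce in front of a Sun-sized star. Because the assumed radius barely changes across the brown dwarf mass range, a measured depth translates fairly directly into a planet size.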

Spitzer is the only facility that can survey a sufficient number of brown dwarfs, long enough, with the precision and the stability required to credibly be able to detect rocky planets down to the size of Mars, in time for JWST. We estimate that about 8 months of observations would be needed to complete the survey. Once candidates are detected, large ground-based facilities will confirm the transits, find the period (if only one event was captured by Spitzer) and check for the presence of additional companions. This program will rapidly advance the search for potentially habitable planets in the solar neighborhood and transmit to JWST a handful of characterizable rocky planet atmospheres.

This is a survey that not only probes a fascinating kind of object but one that should offer what the paper calls “the fastest and most convenient route to the detection and to the study of the atmospheres of terrestrial extrasolar planets.” It goes public at a time when 76 new brown dwarfs have been discovered by the UKIRT Infrared Deep Sky Survey, including two potentially useful ‘benchmark’ systems. The authors of the Spitzer proposal argue that observing the atmospheres of Earth-sized transiting worlds around M-Dwarfs with JWST will be much more challenging than equivalent work using brown dwarfs, assuming we get to work identifying the best targets.

The white paper is Triaud et al., “A search for rocky planets transiting brown dwarfs,” available online. The UKIRT Infrared Deep Sky Survey paper is Burningham et al., “Seventy six T dwarfs from the UKIDSS LAS: benchmarks, kinematics and an updated space density,” accepted at Monthly Notices of the Royal Astronomical Society (abstract).

April 29, 2013

Starship Congress Registration Opens

Our friends at Icarus Interstellar continue working on this summer’s conference. Just in from my son Miles is news about the opening of registration for the Dallas event.

Registration for the 2013 Starship Congress, hosted by Icarus Interstellar, is now open. The registration fee is $100; however, the first 25 paid registrations receive a $25 discount. This discount is also available to individuals who sign up by May 2nd, 2013. Students can register for a reduced rate of $50. Students must present a valid student I.D. at the Starship Congress to take advantage of the student rate. The $25 discount does not apply to student registrations. Group rates are also available. An optional lunch is offered for August 15, 16 and 17 for $25.

The Starship Congress will be held August 15-18 at the Hilton Anatole in Dallas, Texas. A discounted rate for Starship Congress attendees is available at the Hilton Anatole from August 12-20. To book a room at the special rate, click here.

Richard Obousy, President and Senior Scientist for Icarus Interstellar, provided Centauri Dreams readers with a preview of the Starship Congress, which you can read here. For any questions, contact registration@icarusinterstellar.org.

April 26, 2013

Robotic Replicators

Centauri Dreams regular Keith Cooper gives us a look at self-replication and the consequences of autonomous probes for intelligent cultures spreading into the universe. Is the Fermi paradox explained by the lack of such civilizations in the galaxy, or is there a far more subtle reason? Keith has been thinking about these matters for some time as editor of both Astronomy Now and Principium, which has just published its fourth issue in its role as the newsletter of the Institute for Interstellar Studies. Intelligent robotic probes, as it turns out, may be achievable sooner than we have thought.

by Keith Cooper

There’s a folk tale that you’ll sometimes hear told around the SETI or physics communities. Back in the 1940s and 50s, at the Los Alamos National Labs, where the first nuclear weapons were built, many physicists of Hungarian extraction worked. These included such luminaries in the field as Leó Szilárd, Eugene Wigner, Edward Teller and John Von Neumann. When in 1951 their colleague, the Italian physicist Enrico Fermi, proposed his famous rhetorical paradox – if intelligent extraterrestrial life exists, why do we not see any evidence for them? – the Hungarian contingent responded by standing up and saying, “We are right here, and we call ourselves Hungarians!”

It turns out that the story is apocryphal, started by Philip Morrison, one of the fathers of modern SETI [1]. But there is a neat twist. You see, one of those Hungarians, John Von Neumann, developed the idea of self-replicating automata, which he presented in 1948. Twelve years later astronomer Ronald Bracewell proposed that advanced civilisations may send sophisticated probes carrying artificial intelligence to the stars in order to seek out life and contact it. Bracewell did not stipulate that these probes had to be self replicating – i.e. able to build replicas of themselves from raw materials – but the two concepts were a happy marriage. A probe could fly to a star system, build versions of itself from the raw materials that it finds there, and then each daughter probe could continue on to another star, where more probes are built, and so on until the entire Galaxy has been visited for the cost of just one probe.
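The reason one probe can be enough is that the number of probes grows geometrically with each round of replication. As a rough sketch, assume every probe builds a fixed number of daughters at each stop and that no star is ever visited twice; both are idealisations introduced here, as are the function name and the star count of 400 billion used as an upper estimate for the Galaxy. The code counts replication rounds only and says nothing about travel or construction time.

```python
def generations_to_cover(star_count: int, daughters_per_probe: int = 2) -> int:
    """Replication rounds needed before every star has received one probe.

    Idealised model: the first probe visits one star, then each probe in the
    newest generation builds daughters_per_probe copies that each travel to a
    star no other probe has visited.
    """
    if star_count < 1:
        raise ValueError("star_count must be at least 1")
    if daughters_per_probe < 2:
        raise ValueError("each probe must build at least two daughters to spread")

    visited = 1      # stars reached so far (the original probe's first stop)
    newest = 1       # probes in the most recent generation
    generations = 0
    while visited < star_count:
        newest *= daughters_per_probe
        visited += newest
        generations += 1
    return generations


# Assumed upper estimate for the number of stars in the Galaxy.
STARS_IN_GALAXY = 400_000_000_000

for daughters in (2, 10):
    rounds = generations_to_cover(STARS_IN_GALAXY, daughters)
    print(f"{daughters} daughters per probe: {rounds} replication rounds")
```

Even with only two daughters per stop, a few dozen rounds suffice in this toy model, which is why the time to cross the Galaxy is set by travel and construction time rather than by the number of probes.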

The combination of Von Neumann machines and Bracewell’s probes made Fermi’s Paradox all the more puzzling. There has been more than enough time throughout cosmic history for one or more civilisations to send out an army of self-replicating probes that could colonise the Galaxy in anywhere between three million and 300 million years [2] [3]. By all rights, if intelligent life elsewhere in the Universe does exist, then they should have colonised the Solar System long before humans arrived on the scene – the essence of Fermi’s Paradox. The conundrum is about to be compounded further, because human civilisation will have its own Von Neumann probes within the next two to three decades, tops. And if we can do it, so can the aliens, so where are they?

To Build a Replicator

A self-replicator requires four fundamental components: a ‘factory’, a ‘duplicator’, a ‘controller’ and an instruction program. The latter is easy – digital blueprints that can be stored on computer and which direct the factory in how to manufacture the replica. The duplicator facilitates the copying of the blueprint, while the controller is linked to both the factory and the duplicator, first initiating the duplicator with the program input, then the factory with the output, before finally copying the program and uploading it to the new daughter probe, so it too can produce offspring in the future.
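The division of labour among the four components can be sketched as a toy model. Everything in it is a hypothetical illustration rather than a real design: the `Probe` class, its method names and the blueprint layout are invented here, and the "factory" simply reads a parts list where a real one would mine, smelt and assemble.

```python
import copy
from dataclasses import dataclass


@dataclass
class Probe:
    """Toy self-replicator: the blueprint is the instruction program."""
    blueprint: dict          # instruction program (hypothetical layout)
    parts: tuple = ()        # hardware the probe is made of
    generation: int = 0

    def duplicate(self) -> dict:
        """Duplicator: produce an independent copy of the instruction program."""
        return copy.deepcopy(self.blueprint)

    def build(self, program: dict) -> "Probe":
        """Factory: construct daughter hardware from a program, with no program loaded."""
        return Probe(blueprint={}, parts=tuple(program["parts"]),
                     generation=self.generation + 1)

    def replicate(self) -> "Probe":
        """Controller: sequence the duplicator, the factory and the final upload."""
        working_copy = self.duplicate()        # duplicator runs on the program input
        daughter = self.build(working_copy)    # factory runs on the duplicator's output
        daughter.blueprint = self.duplicate()  # fresh copy uploaded to the daughter
        return daughter


SEED = Probe(blueprint={"parts": ["antenna", "drive", "printer"]},
             parts=("antenna", "drive", "printer"))
DAUGHTER = SEED.replicate()
GRANDDAUGHTER = DAUGHTER.replicate()

print(DAUGHTER.parts, DAUGHTER.generation)
print(GRANDDAUGHTER.generation)
```

The final upload is the step that matters: without its own copy of the program the daughter would be a finished machine that could not produce offspring of its own.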

‘Duplicator’, ‘controller’, ‘factory’; these are just words. What are they in real life? In biology, DNA permits replication by following these very steps. DNA’s factory is found in the form of ribosomes, where proteins are synthesised. The duplicators are RNA enzymes and polymerase, while the controllers are the repressor molecules that can control the conveyance of genetic information from the DNA to the ribosomes by ‘messenger RNA’ created by the RNA polymerase. The program itself is encoded into the RNA and DNA, which dictates the whole process.

That’s fine for biological cells; how on earth can a single space probe take the raw materials of an asteroid and turn it into another identical space probe? The factory itself would be machinery to do the mining and smelting, but beyond this something needs to do the job of constructing the daughter probe down to the finest detail. Previously, we had assumed that nanotechnology would do the duplicating, reassembling the asteroidal material into metal paneling, computer circuits and propulsion drives. However, nanotechnology is far from reaching the level of autonomy and maturity where it is able to do this.

Perhaps there is another way, a technology whose potential we are only now beginning to see. Additive manufacturing or, as it is more popularly known, 3D printing, is being utilised in more and more areas of technology and construction. Additive manufacturing takes a digital design (the instruction program) and is able to build it up layer by layer, each 0.1mm thick. The factory, in this sense, is then the 3D printer as a whole. The duplicator is the part that lays down the layers while the controller is the computer. It’s not a pure replica in the Star Trek sense, but it can build practically anything, including moving parts, that can otherwise only be manufactured in a real factory.

Gathering Space Resources

3D printing is not the technology of tomorrow; it’s the technology of today. It’s not a suddenly disruptive technology either (well, not in the sense of how it has gradually evolved), having been around in its most basic form since the 1970s and in its current form since 1995. Rather, it is a transformative technology. The reason it is gaining traction in modern society now is because it is becoming affordable, with small 3D printers now costing under $2,000. Within a decade or so, we’ll all have one; they’ll be as ubiquitous as a VCR, cell phone or a microwave. This will have huge consequences for manufacturing, jobs and the economy, potentially destroying large swathes of the supply chains from manufacturing to the purchaser, but, whereas the factory production lines on Earth may dry up, in space new economic opportunities will open up.

As spaceflight transitions from the domain of national space agencies to a wider field of private corporations, economic opportunities in space are already being sought after, including the mineral riches of the asteroids. One company in particular, Deep Space Industries, has already patented a 3D printer that will work in the microgravity of space [4] and they intend to use additive manufacturing to construct communication and energy platforms, space habitats, rocket fuel stations and probes from material mined from asteroids and brought into Earth orbit. For now, they envisage factory facilities in orbit and the asteroids mined will be those that come close to Earth [5]. Nevertheless, it has already been mooted that astronauts on a mission to Mars will be able to take 3D printers with them and, as we utilise asteroids further afield, we’ll start to bundle in the 3D printers with automated probes, creating an industrial infrastructure in space, first across the inner Solar System and then expanding into the outer realms.

Image: A ‘fuel harvestor’ concept as developed by Deep Space Industries. Credit: DSI.

Here’s the key: these 3D printers, which will sit in orbit and are designed to build habitats or communication platforms, could easily become part of a large probe and be programmed to just build more probes. All of a sudden, we’d have a population of Von Neumann probes on our hands.

Without artificial intelligence, the probes would just be programmed automatons. They’d spend their time flitting from asteroid to asteroid, following the simple programming we have given them, but one day someone is inevitably going to direct them towards the stars. This raises two vital points. One is that if we can build Von Neumann probes, then a technological alien intelligence could surely do the same and their absence is therefore troubling. And two, Von Neumann probes will soon no longer be a theoretical concept and we are going to have to start to decide what we want them to be: explorers, or scavengers.

A Future Beyond Consumption

It seems clear that self-replicating probes will first be used for resource gathering in our own Solar System. Gradually their sphere of influence will begin to edge out into the Kuiper Belt and then the Oort Cloud, halfway to the nearest stars. That may not be for some time, given the distances involved, but when we start sending them to other stars, do we really want them rampaging through another planetary system, consuming everything like a horde of locusts? How would we feel if someone else’s Von Neumann probes entered our Solar System to do the same? Once they are let loose, we need to take responsibility for their behaviour, lest we be considered bad parents for not supervising our creations. That would not be the ‘first contact’ situation we’ve been dreaming of.

On the other hand, Bracewell’s probes were designed for contact, for communication, for the storage and conveyance of information – a far more civilised task. But standards, however low, can be set early. If our Von Neumann probes are only ever used for mining, will we be wise enough to have the vision in the future to appropriate them for other means too? It seems we need to think about how we are going to operate them now, rather than later after the horse has bolted.

And perhaps there lies the answer to Fermi’s Paradox. Maybe intelligent extraterrestrials are more interested in making a good first impression than in the incessant consumption of resources. Perhaps that is why the Solar System wasn’t scoured by a wave of Von Neumann probes long ago. The folly of our assumption is that we see all before us as resources to be utilised, but why should intelligent extraterrestrial life share that outlook? Maybe they are more interested in contact than consumption – a criticism that can be levelled at other ideas in SETI, such as Kardashev civilisations and Dyson spheres that have been discussed recently on Centauri Dreams. Perhaps instead there is a Bracewell probe already here, lurking in a Lagrange point, or in the shadow of an asteroid, watching and waiting to be discovered. If that’s the case, it may be one of our own Von Neumann probes that first encounters it – and we want to make sure that we make the right impression with our own probe the day that happens.

References

[1] H. Paul Schuch’s edited collection of SETI essays, SETI: Past, Present and Future, published by Springer, 2011.

[2] Birkbeck College’s Ian Crawford has calculated that the time to colonise the Galaxy could be as little as 3.75 million years, as described in an article in the July 2000 issue of Scientific American.

[3] Frank Tipler’s estimate for the time to colonise the Galaxy was 300 million years, as written in his famous 1980 paper “Extraterrestrial Intelligent Beings Do Not Exist,” that appeared in the Royal Astronomical Society’s Quarterly Journal.

[4] Deep Space Industries 22 January 2013 press announcement.

[5] Private correspondence with Deep Space Industries’ CEO, David Gump.

April 25, 2013

The Alpha Centauri Angle

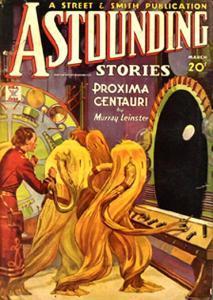

Apropos of yesterday’s article on the discovery of Proxima Centauri, it’s worth noting that Murray Leinster’s story “Proxima Centauri,” which ran in Astounding Stories in March of 1935, was published just seven years after H. A. Alden’s parallax findings demonstrated beyond all doubt that Proxima was the closest star to the Sun, vindicating both Robert Innes and J. G. E. G. Voûte. Leinster’s mile-wide starship makes the first interstellar crossing only to encounter a race of intelligent plants, the first science fiction story I know of to tackle the voyage to this star.

The work surrounding Proxima Centauri was intensive, but another fast-moving star called Gamma Draconis in Draco, now known to be about 154 light years from Earth thanks to the precision measurements of the Hipparcos astrometry satellite, might have superseded it. About 70 percent more massive than the Sun, Gamma Draconis has an optical companion that may be an M-dwarf at about 1000 AU from the parent. Its bid for history came from the work of an astronomer named James Bradley, who tried without success to measure its parallax. Bradley was working in the early 18th Century on the problem and found no apparent motion.

Stellar parallax turned out to be too small an effect for Bradley’s instruments to measure. Most Centauri Dreams readers will be familiar with the notion of observing the same object from first one, then the other side of the Earth’s orbit, looking to determine from the angles thus presented the distance to the object. It’s no wonder that such measurements were beyond the efforts of early astronomers and the apparent lack of parallax served as an argument against heliocentrism. A lack of parallax implied a far greater distance to the stars than was then thought possible, and what seemed to be an unreasonable void between the planets and the stars.

It would fall to the German astronomer Friedrich Wilhelm Bessel to make the first successful measurement of stellar parallax, using a device called a heliometer, which was originally designed to measure the variation of the Sun’s diameter at different times of the year. As so often happens in these matters, Bessel was working at the same time that another astronomer — his friend Thomas Henderson — was trying to come up with a parallax reading for Alpha Centauri. Henderson had been tipped off by an observer on St. Helena who was charting star positions for the British East India Company that Alpha Centauri had a large proper motion.

Henderson was at that time observing at the Cape of Good Hope, using what turned out to be slightly defective equipment that may have contributed to his delays in getting the Alpha Centauri parallax into circulation. In any event, Bessel’s heliometer method proved superior to Henderson’s mural circle and Dollond transit (see this Astronomical Society of Southern Africa page for more on these instruments), and Bessel’s work was accepted by the Royal Astronomical Society in London in 1842, while Henderson’s own figures were questioned.

Bessel thus goes down as the first to demonstrate stellar parallax. Henderson went on to tighten up his own readings on Alpha Centauri, using measurements taken by his successor at the Royal Observatory at the Cape of Good Hope, but it took several decades for the modern value of the parallax to be established. But both astronomers were on to the essential fact that parallax was coming within the capabilities of the instruments of their time, and by the end of the 19th Century, about 60 stellar parallaxes had been obtained. The parallax of Proxima Centauri, for the record, is now known to be 0.7687 ± 0.0003 arcsec, the largest of any star yet found.
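For readers who want to see how a parallax angle turns into a distance, here is a minimal Python sketch. It assumes the standard small-angle relation (distance in parsecs is the reciprocal of the parallax in arcseconds) and a conversion of roughly 3.2616 light years per parsec; the function name is purely illustrative.

```python
LY_PER_PARSEC = 3.2616  # approximate number of light years in one parsec

def parallax_to_distance(parallax_arcsec):
    """Return (parsecs, light_years) for a parallax given in arcseconds."""
    if parallax_arcsec <= 0:
        raise ValueError("parallax must be positive")
    parsecs = 1.0 / parallax_arcsec
    return parsecs, parsecs * LY_PER_PARSEC

# Proxima Centauri's parallax as quoted in the text
pc, ly = parallax_to_distance(0.7687)
print(f"Proxima Centauri: {pc:.3f} pc, about {ly:.2f} light years")
```

The same function shows why early instruments struggled: even the largest stellar parallax is well under one arcsecond, and more distant stars shrink the angle in direct proportion.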

Image: A portrait of the German mathematician Friedrich Wilhelm Bessel by the Danish portrait painter Christian Albrecht Jensen. Credit: Wikimedia Commons.

While the Hipparcos satellite was able to extend the parallax method dramatically, it falls to the upcoming Gaia mission to measure parallax angles down to an accuracy of 10 microarcseconds, meaning we should be able to firm up distances to stars tens of thousands of light years from the Earth. Indeed, working with stars down to magnitude 20 (400,000 times fainter than can be seen with the naked eye), Gaia will be able to measure the distance of stars as far away as the galactic center to an accuracy of 20 percent. The Gaia mission’s planners aim to develop a catalog encompassing fully one billion stars, producing a three-dimensional star map that will not only contain newly discovered extrasolar planets but brown dwarfs and thousands of other objects useful in understanding the evolution of the Milky Way.
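The "400,000 times fainter" figure can be checked with the usual magnitude relation, in which five magnitudes correspond to a factor of 100 in brightness. The sketch below assumes a naked-eye limit of magnitude 6, a conventional dark-sky value that is not stated in the text, and the function name is hypothetical.

```python
def brightness_ratio(mag_faint, mag_bright):
    """How many times fainter mag_faint is than mag_bright (Pogson's relation)."""
    return 10 ** (0.4 * (mag_faint - mag_bright))

NAKED_EYE_LIMIT = 6.0  # assumed limiting magnitude under dark skies
GAIA_LIMIT = 20.0      # faint limit quoted in the text

ratio = brightness_ratio(GAIA_LIMIT, NAKED_EYE_LIMIT)
print(f"Magnitude 20 is roughly {ratio:,.0f} times fainter than magnitude 6")
```

With that assumed limit the ratio comes out close to 400,000, in line with the figure above.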

One can only imagine what the earliest reckoners of stellar distance would have made of all this. Archimedes followed the heliocentric astronomer Aristarchus in calculating that the distance to the stars, compared to the Sun, was proportionally as far away as the ratio of the radius of the Earth was to the distance to the Sun (thanks to Adam Crowl for this reference). Using the figures he was working with, that works out to a stellar distance of 100 million Earth radii, a figure then all but inconceivable. If we translated into our modern values for these parameters, the stars Aristarchus was charting would be 6.378 x 10^11 (637,800,000,000) kilometers away. The actual distance to Alpha Centauri is now known to be roughly 40 trillion (4 x 10^13) kilometers.
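The arithmetic behind that comparison is simple enough to sketch in a few lines of Python. The Earth radius of 6,378 km is the modern value implied by the figures in the paragraph above, and the variable names are only illustrative.

```python
EARTH_RADIUS_KM = 6378.0        # modern equatorial radius implied by the text
STELLAR_DISTANCE_RADII = 100e6  # Archimedes' figure: 100 million Earth radii
ALPHA_CEN_KM = 4e13             # rough modern distance quoted in the text

ancient_km = STELLAR_DISTANCE_RADII * EARTH_RADIUS_KM
shortfall = ALPHA_CEN_KM / ancient_km

print(f"Ancient estimate in modern units: {ancient_km:.3e} km")
print(f"Alpha Centauri is about {shortfall:.0f} times farther than that")
```

On these numbers the ancient estimate falls short of the real distance by a factor of roughly 60, remarkably close given the tools available.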

April 24, 2013

Finding Proxima Centauri

It’s fascinating to realize how recently our knowledge of the nearest stars has emerged. A little less than a century has gone by since Proxima Centauri was discovered by one Robert Thorburn Ayton Innes (1861-1933), a Scot who had moved to Australia and went on to work at the Union Observatory in Johannesburg. Innes used a blink comparator to examine a photographic plate showing an area of 60 square degrees around Alpha Centauri, comparing a 1910 plate with one taken in 1915. Forty hours of painstaking study revealed a star with a proper motion similar to Alpha Centauri (4.87” per year), and about two degrees away from it.

The question Innes faced was whether the new star was actually closer than Alpha Centauri, an issue that could be resolved only with better equipment. Ian Glass (South African Astronomical Observatory) tells the tale in a short paper written for the publication African Skies. Innes ordered a micrometer eyepiece that would be fitted to the observatory’s 9-inch telescope, an addition that was completed by May of 1916. In April of the following year he realized he was in a competition. A Dutch observer working at the Royal Observatory in Cape Town wrote to Innes to say that he had begun parallax studies on the new star in February of 1916.

Image: Robert Thorburn Ayton Innes, discoverer of Proxima Centauri. Credit: Wikimedia Commons.

Proxima did not yet have its name, of course, though there was suspicion that it was bound to Alpha Centauri and thus might be Centauri C (it’s worth noting that the question isn’t fully resolved even today, though the case for a bound Proxima seems strong). The Dutch observer, who bore the majestic name of Joan George Erardus Gijsbertus Voûte, published his results before Innes could get into print. There was not enough evidence to prove that the new star was closer than Alpha Centauri, but Voûte pointed out that its implied absolute magnitude made it the least luminous star then known. The soon to be christened Proxima was hardly a headliner.

According to Glass, Innes was concerned to establish his priority in the discovery — some things never change — and so he rushed his incomplete parallax results into a 1917 meeting of the South African Association for the Advancement of Science. Relying partly on Voûte’s data, Innes wound up with a parallax value of 0.759”. Says Glass: “…without a proper discussion of the probable error of the measurement, [Innes] drew the unjustifiable conclusion that his star was closer than α Cen and therefore must be the nearest star to the Solar System.”

Thus, based on as yet incomplete evidence, the new star was named, with Innes suggesting ‘Proxima Centaurus.’ It turns out that the preliminary value Voûte calculated for Proxima’s parallax in his paper was closer to the correct one than Innes’, but the latter would always be known for the discovery of Proxima. We didn’t really peg the accurate value of Proxima’s parallax until H. A. Alden did so at Yale Southern Station in Johannesburg in 1928. Innes, as it turned out, had made a lucky guess forced by circumstance, one that proved to be correct.

Voûte (1879-1953) turned out to be an interesting figure in his own right. Having decided at an early age to make astronomy his life’s work, he developed a keen interest in double stars and parallaxes and published on the subject over a span of some fifty years. Born to Dutch parents in Java, he returned there to found the Nederlandsch-Indische Sterrekundige Vereeniging, whose express object was to set up an astronomical observatory. Voûte would use the observatory at Lembang, 1300 meters up on the side of the Tangkoeban Prahoe volcano, to make numerous parallax determinations and photographic studies of variable stars, concentrating particularly on closely paired double stars. You can see where he got his interest in Alpha Centauri!

Image: From left to right: J.G.E.G. Voûte (the first director of Bosscha Observatory), K.A.R. Bosscha (the principal benefactor and chairman of the NISV), Ina Voûte (Voûte’s wife). Source: Private collection of Bambang Hidayat. Credit: Tri Laksmana Astraatmadja.

World War II destroyed Voûte’s health — he was imprisoned during the Japanese occupation of Java, and never fully recovered — but he remained interested in astronomy until his death. As to Innes (whom Glass mistakenly refers to as ‘Richard’ rather than ‘Robert’), his work on binary stars would lead to 1628 discoveries and the publication of the Southern Double Star Catalog in 1927. Innes was a self-taught amateur astronomer, a wine merchant in Sydney with a passion for the sky who, despite his lack of formal training, showed enough promise to be invited to the Cape Observatory by the astronomer royal Sir David Gill, moving on to Johannesburg in 1903. It seems appropriate that a small M-dwarf in Carina with a high proper motion — GJ 3618 — is today known as Innes’ Star after its discoverer.

For more, see Glass, “The Discovery of the Nearest Star,” African Skies Vol. 11 (2007), p. 39 (abstract).

April 23, 2013

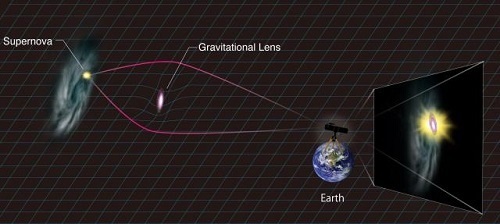

A Gravitationally Lensed Supernova?

I keep a close eye on gravitational lensing, not only because of the inherent fascination of the subject but also because the prospect of using the Sun’s own lensing to study distant astrophysical phenomena could lead to near-term missions to 550 AU and beyond. And because I’m also intrigued by ‘standard candles,’ those markers of celestial distance so important in the history of astronomy, I was drawn to a new paper on the apparent gravitational lensing of a Type Ia supernova (SNIa). This is the kind of supernova that led to the discovery of the accelerating expansion of the universe by giving us ways to measure the distance to these objects.

The point about Type Ia supernovae is that they are so much alike. We may not fully understand the mechanisms behind their explosions, but we have overwhelming evidence that these supernovae reach nearly standard peak luminosities. There is also a strong correlation between their luminosity and other observables like the shapes of their light-curves, so their value as standard candles — astronomical objects with a known luminosity — is clear. Our studies of dark energy depend crucially on Type Ia supernovae, putting a premium on learning more about them.

The supernova PS1-10afx turned up in data from the Panoramic Survey Telescope & Rapid Response System 1 (Pan-STARRS1). What drew particular attention to it was that it appeared to be extremely bright — with an inferred luminosity about 100 billion times greater than the Sun — but also extremely distant. The luminosity was not itself a problem, as it corresponded to the recently discovered category of superluminous supernovae (SLSNe). But the latter tend to be blue and show a brightness curve that changes slowly with time. Not so PS1-10afx, which was highly luminous, displayed the brightness changes of a normal supernova, and was red.

Ryan Chornock (Harvard–Smithsonian Center for Astrophysics) and colleagues reported on the new supernova’s unusual attributes in a recent paper in The Astrophysical Journal. But Robert Quimby (Kavli Institute for the Physics and Mathematics of the Universe in Tokyo) went to work on matching PS1-10afx to other supernovae. Quimby realized that after correcting for time dilation, the light curve of the new supernova was consistent with a Type Ia supernova despite the observed brightness of the object. Gravitational lensing provides an answer: The supernova is being lensed by an object between it and ourselves. The color and spectra of the supernova remain the same as does its lightcurve over time, but the lensing makes it brighter.

Image: One explanation of PS1-10afx. A massive object between us and the supernova bends light rays much as a glass lens can focus light. As more light rays are directed toward the observer than would be without the lens, the supernova appears magnified. (Credit: Kavli IPMU)

Thus we have the lightcurve of a typical SNIa but an object that is 30 times brighter. Although PS1-10afx would be the first Type Ia supernova found to be magnified by a gravitational lens, one of the co-authors of the paper on this work, Masamune Oguri, was lead author on a paper predicting several years ago that Pan-STARRS1 might discover such an object. The alternative is to assume a new kind of superluminous supernova, an idea that can only be ruled out by further observations. The Quimby paper notes that the magnification of PS1-10afx is independent of the supernova itself and should thus apply to the host environment:

This prediction of a persistent lensing source provides a test of our hypothesis. High spatial resolution images from HST may be able to resolve the Einstein ring from the magnified host galaxy. If the lens is a compact red galaxy then color information should distinguish it from the blue, star-forming galaxy in the background. If a foreground galaxy is not detected, then this would either suggest that more exotic lensing systems, such as free floating black holes, are required or it could support the hypothesis of C13 [referring to the Chornock paper] that a new class of superluminous supernovae is required to explain PS1-10afx.
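To put the factor of 30 mentioned earlier into the units astronomers usually work in, a flux magnification can be converted to a change in magnitude with the standard logarithmic relation. This is a minimal sketch, not a calculation taken from the Quimby paper, and the function name is hypothetical.

```python
import math

def magnification_to_magnitudes(mu):
    """Magnitude brightening produced by a flux magnification factor mu."""
    if mu <= 0:
        raise ValueError("magnification must be positive")
    return 2.5 * math.log10(mu)

delta_m = magnification_to_magnitudes(30)
print(f"A 30x magnification brightens the supernova by about {delta_m:.1f} magnitudes")
```

A shift of this size is enough to move an ordinary Type Ia supernova into the brightness range otherwise associated with the superluminous class, which is why the two explanations are hard to separate on luminosity alone.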

The beauty of the gravitational lensing explanation is that no new physics would be needed. The Quimby paper shows that discovering a gravitationally lensed Type Ia supernova is statistically plausible for Pan-STARRS1. The paper also makes the interesting case that some massive red galaxies coincident with ‘dark’ gamma-ray bursts may actually be foreground lenses for higher redshift events. We may, in other words, soon begin to discover more lensing transients like PS1-10afx as new surveys with tools like the Large Synoptic Survey Telescope begin. Such studies should be crucial in developing our ideas about the universe’s expansion.

But back to Quimby’s explanation of PS1-10afx, about which Ryan Chornock remains dubious. Chornock is quoted in a recent Scientific American article as saying he and his team studied the lensing possibility and dismissed it:

“This was a hypothesis that we actually considered prior to his paper…” But the team rejected it based on a number of factors, including the fact that no object has been found that fits the bill for a possible gravitational lens. “Based on our knowledge of the universe, which is of course imperfect, that kind of lensing is usually produced by clusters of galaxies. That’s clearly not the case here because there’s no cluster of galaxies,” he adds, noting that the explanation favored by Quimby and his colleagues “does require some sort of unexpected or unlikely alignment.”

Quimby is right to note that this is a testable result, and his team’s application for time on the Hubble Space Telescope could provide an answer between the two explanations. The Chornock paper is “PS1-10afx AT z = 1.388: PAN-STARRS1 Discovery of a New Type of Superluminous Supernova,” The Astrophysical Journal Vol. 767, No. 2 (2013), 162 (abstract). The Quimby paper is “Extraordinary Magnification of the Ordinary Type Ia Supernova PS1-10afx,” The Astrophysical Journal Letters Vol. 768, No. 1 (2013), L20 (abstract).
