Paul Gilster's Blog, page 188

December 9, 2014

Deep Space: Moving Toward Encounter Mode

No spacecraft has ever traveled farther to reach its primary target than New Horizons, now inbound to Pluto/Charon. From 4.6 billion kilometers away (a light travel time of four hours, 26 minutes), the spacecraft has confirmed that its much-anticipated wake-up call from ground controllers was a success. Since December 6, New Horizons has been in active mode, a state whose significance principal investigator Alan Stern explains:

“This is a watershed event that signals the end of New Horizons crossing of a vast ocean of space to the very frontier of our solar system, and the beginning of the mission’s primary objective: the exploration of Pluto and its many moons in 2015.”

Image: Pluto and Charon, in imagery taken by New Horizons in July of 2014. Covering almost one full rotation of Charon around Pluto, the 12 images that make up the movie were taken with the spacecraft’s best telescopic camera – the Long Range Reconnaissance Imager (LORRI) – at distances ranging from about 429 million to 422 million kilometers. Credit: NASA/Johns Hopkins University Applied Physics Laboratory/Southwest Research Institute.

Not that hibernation is an unusual event for the spacecraft. We’ve followed several cycles here on Centauri Dreams, but it’s startling to realize that New Horizons has gone through eighteen hibernation periods, spending two-thirds of its flight time in that state. A weekly beacon stayed alive during these periods, as did the onboard flight computer that broadcast its status tone, but much of the spacecraft was powered down to protect system components. For the occasion, English tenor Russell Watson recorded a special version of ‘Where My Heart Will Take Me,’ which was played in New Horizons mission operations upon wake-up confirmation.

Pluto observations begin on January 15, with closest approach on July 14, and by mid-May, we will begin receiving views of Pluto and its moons that are higher in quality than anything the Hubble Space Telescope has yet given us. More on the awakening of New Horizons on this JHU/APL page. Meanwhile, the private effort to upload a message from Earth to New Horizons following the end of its science mission continues. If NASA gives the go-ahead, Jon Lomberg’s team will crowdsource content for the One Earth New Horizons Message. A workshop on message methods and content concluded last week at Stanford.

The View from the Asteroid Belt

Speaking of images better than Hubble’s, we’ve certainly managed that with the Dawn mission, which brought us spectacular vistas from Vesta during its fourteen months in orbit around the asteroid. Now we can look forward to topping Hubble’s views of Ceres, the spacecraft’s next target. Below is an image that, while not yet better than what Hubble can manage, does give us an idea of the spherical shape of the asteroid. Dawn is now 1.2 million kilometers from its target, about three times the Earth-Moon distance.

Image credit: NASA/JPL-Caltech/UCLA/MPS/DLR/IDA.

Dawn will be captured into orbit around Ceres in March, but even before then, early in 2015, we’ll be seeing images at higher resolution than Hubble has yet provided. The approach phase begins on December 26, with the nine-pixel-wide image just released serving as a final calibration of the spacecraft’s science camera. With both missions, we are pushing into unknown territory, about to see things in greater detail than our best telescopes can offer. A bit of the old Voyager and Pioneer feeling has me in its grip, a confirmation of our human need to explore and a validation of all the hard work that got us here.

At left is Ceres in the best photo we currently have, a color Hubble image. These observations were made in both visible and ultraviolet light between December 2003 and January 2004, showing brighter and darker regions that may be impact features or simply different types of surface material. At 950 kilometers across, Ceres may have an interior differentiated into an inner core, an ice mantle, and a relatively thin outer crust.

Every time I see this image I remember Alfred Bester’s The Stars My Destination, a 1956 novel in which protagonist Gulliver Foyle takes the pseudonym Fourmyle of Ceres as part of the unfolding of his ingenious plan for revenge. The asteroid pops up in many science fiction tales (Larry Niven’s ‘Known Space’ stories come particularly to mind), but perhaps none as compelling as the one Dawn is about to tell us.

December 8, 2014

Young Planets, Young Stars

We’re going to be bringing both space- and ground-based assets to bear on the detection of rocky planets within the habitable zone in coming years. Cool M-class stars (red dwarfs) stand out in this regard because their habitable zones (in this case defined as where water can exist in liquid form on the surface) are relatively close to the parent star, making for increased likelihood of transits as well as a larger number of them in a given time period. Ground observatories like the Giant Magellan Telescope and the European Extremely Large Telescope should also be able to perform spectroscopic studies of planets around M-class stars.
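The geometry behind that advantage can be made concrete. For a circular orbit, the chance that a randomly oriented planet transits its star is roughly R*/a, and Kepler’s third law gives the orbital period. The sketch below uses illustrative, assumed values that do not come from the post: a hypothetical red dwarf of 0.2 solar radii and 0.2 solar masses with a habitable-zone planet at 0.1 AU, compared with a Sun-like star and a planet at 1 AU.

```python
import math

# Solar radius expressed in AU (R_sun ~ 6.957e5 km, 1 AU ~ 1.495978707e8 km)
R_SUN_AU = 6.957e5 / 1.495978707e8


def transit_probability(stellar_radius_rsun: float, semi_major_axis_au: float) -> float:
    """Geometric transit probability for a circular orbit: p ~ R_star / a."""
    return stellar_radius_rsun * R_SUN_AU / semi_major_axis_au


def orbital_period_days(semi_major_axis_au: float, stellar_mass_msun: float) -> float:
    """Kepler's third law in solar units: P[yr] = sqrt(a[AU]^3 / M[M_sun])."""
    return 365.25 * math.sqrt(semi_major_axis_au ** 3 / stellar_mass_msun)


# Hypothetical example systems: (stellar radius in R_sun, stellar mass in M_sun, a in AU)
systems = {
    "Sun-like star, planet at 1 AU": (1.0, 1.0, 1.0),
    "Red dwarf, planet at 0.1 AU": (0.2, 0.2, 0.1),
}

for name, (radius, mass, a) in systems.items():
    p = transit_probability(radius, a)
    period = orbital_period_days(a, mass)
    print(f"{name}: transit probability {p:.2%}, period {period:.0f} days, "
          f"~{365.25 / period:.0f} transits per year")
```

With these assumed numbers, the red dwarf planet is about twice as likely to transit and, with a period of roughly 26 days, does so about fourteen times a year instead of once.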

So consider this: we have a high probability of finding planets around these stars (see Ravi Kopparapu’s How Common Are Potential Habitable Worlds in Our Galaxy?). Now factor in what Ramses Ramirez and Lisa Kaltenegger point out: before they reach the main sequence, M-class stars go through a period of ‘infancy’ that can last up to 2.5 billion years. It’s an important period in the life of any planets around them because, as we saw last week in a paper by Rodrigo Luger and Rory Barnes, huge water loss can occur, and runaway greenhouse events can complicate a planet’s habitability.

But we need to keep digging at this issue because early M-dwarf planets are interesting targets for detection. In their new paper, Ramirez and Kaltenegger (Cornell University) point out that in the period before entering the main sequence, these stars have a luminosity much higher relative to their early main sequence values than F or G stars. In fact, an M8 star can be 180 times as bright during its contraction stage as it will be when entering the main sequence. Our G-class Sun, by contrast, was only twice as bright during this period.

What we get is a habitable zone located farther from the young star than we would otherwise expect, during a pre-main sequence period that, for some of the smallest stars, can last for over two billion years. That leaves time for life to develop on the surface, perhaps moving below ground as the star’s luminosity gradually declines. The paper computes the luminosities of stars in classes F through M as they go through the contracting phase and onto the main sequence, with clear effects on the orbital distance of the habitable zone around the star.

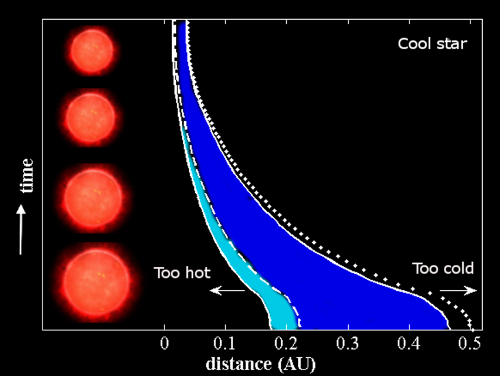

Image: Changes in the position of the habitable zone over time. Credit: L. Kaltenegger, R. Ramirez/Cornell University.

The coolest of these stars, an M8 dwarf, has an inner HZ edge at 0.16 AU that moves to 0.01 AU by the end of the 2.5 billion year pre-main sequence phase. In the same period, the outer edge of the HZ moves from 0.45 AU to 0.03 AU. Contrast that with our Sun, where the inner HZ edge moves from 1 AU to 0.6 AU by the end of the pre-main sequence stage, after a comparatively swift 50 million years, while the outer edge moves from 2.6 AU to 1.5 AU. Variations in planetary mass appear to have only a small effect on these results.
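A rough check shows why the M8 habitable zone shifts so much more than the Sun’s. If we assume each HZ edge sits at a fixed stellar-flux threshold, then because flux falls off as L/(4πd²), an edge’s distance scales with the square root of the star’s luminosity. The paper’s climate models also let those thresholds vary with stellar temperature, so this is only an order-of-magnitude sketch; the function names are hypothetical.

```python
import math


def hz_distance_scale(luminosity_ratio: float) -> float:
    """Factor by which an HZ edge moves when luminosity changes by luminosity_ratio.

    Assumes a fixed flux threshold: S = L / (4 * pi * d**2), hence d is proportional
    to sqrt(L). Ignores the temperature dependence of the real thresholds.
    """
    return math.sqrt(luminosity_ratio)


def edge_after_dimming(edge_au: float, luminosity_ratio: float) -> float:
    """HZ edge distance after the star dims by luminosity_ratio (early / later)."""
    return edge_au / hz_distance_scale(luminosity_ratio)


# M8 dwarf: up to ~180x brighter while contracting than on the main sequence (from the post)
print(f"M8 scale factor: {hz_distance_scale(180):.1f}")
print(f"M8 inner edge 0.16 AU -> ~{edge_after_dimming(0.16, 180):.3f} AU")

# Sun: only ~2x brighter during the same phase (from the post)
print(f"Sun scale factor: {hz_distance_scale(2):.2f}")
```

A 180-fold drop in luminosity pulls the HZ inward by a factor of about 13, taking a 0.16 AU edge to roughly 0.012 AU, close to the 0.01 AU figure above; a twofold change moves the Sun’s edges by only about 40 percent.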

The potential for water loss on the planets of young M-class stars is enormous, much higher than for other stellar types. Ramirez and Kaltenegger show that planets orbiting an M8 star at distances corresponding to the inner and outer edges of the main sequence habitable zone could lose up to 3800 and 225 Earth oceans of water, respectively. An M5 planet loses 350 and 15 oceans respectively, while an M1 world loses 25 and 0.5 oceans. If we look at K5 and G2-class stars, their planets at the inner and outer habitable zone lose 1.3 and 0.75 Earth oceans respectively. These figures, remember, are for planets that end up in the habitable zone later in the star’s life, when it is firmly established on the main sequence.

Is this water loss a fatal blow to the planet’s chances for habitability? Ramirez and Kaltenegger think not, a point that is worth emphasizing. From the paper:

Our results… suggest that planets later located in the MS HZ orbiting stars cooler than ~ a K5 may lose most of their initial water endowment. However M-dwarf MS HZ planets could acquire much more water during accretion than did Earth (Hansen, 2014), or water-rich material could be brought in after accretion, through an intense late heavy bombardment (LHB) period similar to that in our own Solar System (Hartmann 2000; Gomes et al. 2005). The second mechanism requires that both the gas disk has dissipated and the runaway greenhouse stage has ended.

We can also factor in possible planetary migration, bringing planets rich in volatiles into the habitable zone after the star has reached the main sequence. Bear in mind that the pre-main sequence period can last 195 million years for an M1 star and up to 2.42 billion years for a cool M8, so a planet that has lost its water during this ‘bright young star’ period could regain water and become habitable even before the star reaches the main sequence.

In terms of searching for small, rocky planets with the potential for life, then, we need to keep in mind how much farther out the habitable zone is during a young star’s pre-main sequence phase, for the larger separation between planet and star will make such planets easier to find with next-generation telescopes. This work shows that planets around stars of spectral type K5 and cooler (those that will later be located in the habitable zone during the star’s main sequence phase) can easily receive enough stellar flux to lose much or all of their water. They will need to accrete more water than Earth did or else rely on water delivered through later bombardment. They are prime candidates for remote observation and characterization.

Moreover, we learn here that planets outside the habitable zone during the star’s stay on the main sequence may once have had conditions right for life to develop, with the potential for continuing beneath the surface as the star cools. “In the search for planets like ours out there, we are certainly in for surprises,” says Kaltenegger, and it’s clear that young stars, especially M-dwarfs, offer rich targets for observation as we parse out what makes a planet habitable.

The paper is Ramirez and Kaltenegger, “The Habitable Zones of Pre-Main-Sequence Stars,” in press at Astrophysical Journal Letters (preprint). A Cornell University news release is also available.

December 5, 2014

The Interstellar Imperative

What trajectory will our civilization follow as we move beyond our first tentative steps into space? Nick Nielsen returns to Centauri Dreams with thoughts on multi-generational projects and their roots in the fundamental worldview of their time. As the inspiring monuments of the European Middle Ages attest, a civilizational imperative can express itself through the grandest of symbols. Perhaps our culture is building toward travel to the stars as the greatest expression of its own values and capabilities. Is the starship the ultimate monument of a technological civilization? In addition to continuing work in his blogs Grand Strategy: The View from Oregon and Grand Strategy Annex, Nielsen heads Project Astrolabe for Icarus Interstellar, described within the essay.

by J. N. Nielsen

If interstellar flight proves to be possible, it will be possible only in the context of what Heath Rezabek has called an Interstellar Earth: civilization developed on Earth to the degree that it is capable of launching an interstellar initiative. This is Earth, our familiar home, transformed into Earth, sub specie universalis, beginning to integrate its indigenous, terrestrial civilization into its cosmological context.

Already life on Earth is thoroughly integral with its cosmological context, in so far as astrobiology has demonstrated to us that the origins and processes of life on Earth cannot be fully understood in isolation from the Earth’s cosmic situation. The orbit, tilt, and wobble of the Earth as it circles the sun (Milankovitch cycles), the other bodies of the solar system that gravitationally interact with (and sometimes collide with) Earth, the life cycle of the sun and its changing energy output (faint young sun problem), the position of our solar system within the galactic habitable zone (GHZ), and even the solar system bobbing up and down through the galactic plane, subjecting Earth to a greater likelihood of collisions every 62 million years or so (galactic drift): all have contributed to shaping the unique, contingent history of terrestrial life.

What other distant unknowns shape the conditions of life here on Earth? We cannot yet say, but there may be strong bounds on the earliest development of complex life in the universe, other than the age of the universe itself. For example, recent research on gamma ray bursts suggests that the universe may be periodically sterilized of complex life, as argued in the paper On the role of GRBs on life extinction in the Universe by Tsvi Piran and Raul Jimenez (and cf. my comments on this), which was also the occasion of an article in The Economist, Bolts from the blue. Intelligent life throughout the universe may not be much older than intelligent life on Earth. This argument has been made many times prior to the recent work on gamma ray bursts, and as many times confuted. A full answer must await our exploration of the universe, but we can begin by narrowing the temporal boundaries of possible intelligence and civilization.

Image: Lodovico Cardi, also known as Cigoli, drawing of Brunelleschi’s Santa Maria del Fiore, 1613.

From the perspective of natural history, there is no reason that cognitively modern human-level intelligence might not have arisen on Earth a million years earlier than it did, or even ten million years earlier than it did. On the other hand, from the same perspective there is no reason that such intelligence might not have emerged for another million years, or even another ten million years, from now. Once biology becomes sufficiently complex, and brains supervening upon a limbic system become sufficiently complex, the particular time in history at which peer intelligence emerges is indifferent on scientific time scales, though from a human perspective a million years or ten million years makes an enormous difference. [1]

Once intelligence arose, again, it is arguably indifferent in terms of natural history when civilization emerges from intelligence; but for purely contingent factors, civilization might have arisen fifty thousand years earlier or fifty thousand years later. Hominids in one form or another have been walking the Earth for about five million years (even if cognitive modernity only dates to about 60 or 70 thousand years ago), so we know that hominids are compatible with stagnation measured on geological time scales. Nevertheless, the unique, contingent history of terrestrial life eventually did, in turn, give rise to the unique, contingent history of terrestrial civilization, the product of Earth-originating intelligence.

The sheer contingency of the world makes no concessions to the human mind or the needs of the human heart. Blaise Pascal was haunted by this contingency, which seems to annihilate the human sense of time: “When I consider the short duration of my life, swallowed up in the eternity before and after, the little space which I fill and even can see, engulfed in the infinite immensity of spaces of which I am ignorant and which know me not, I am frightened and am astonished at being here rather than there; for there is no reason why here rather than there, why now rather than then.” [2]

Given the inexorable role of contingency in the origins of Earth-originating intelligence and civilization, we might observe once again that, from the perspective of the natural history of civilization, it makes little difference whether the extraterrestrialization of humanity as a spacefaring species occurs at our present stage of development, or ten thousand years from now, or a hundred thousand years from now. [3] When civilization eventually does, however, follow the example of the life that preceded it, and integrates itself into the cosmological context from which it descended, it will be subject to a whole new order of contingencies and selection pressures that will shape spacefaring civilization on an order of magnitude beyond terrestrial civilization.

The transition to spacefaring civilization is no more inevitable than the emergence of intelligence capable of producing civilization, or indeed the emergence of life itself. If it should come to pass that terrestrial civilization transcends its terrestrial origins, it will be because that civilization seizes upon an imperative informing the entire trajectory of civilization that is integral with a vision of life in the cosmos – an astrobiological vision, as it were. We cannot know the precise form such a civilizational imperative might take. In an earlier Centauri Dreams post, I discussed cosmic loneliness as a motivation to reach out to the stars. This suggests a Weltanschauung of human longing and an awareness of ourselves as being isolated in the cosmos. But if we were to receive a SETI signal next week revealing to us a galactic civilization, our motivation to reach out to the stars may well take on a different form having nothing to do with our cosmic isolation.

At the first 100YSS symposium in 2011, I spoke on “The Moral Imperative of Human Spaceflight.” I realize now I might have made a distinction between moral imperatives and civilizational imperatives: a moral imperative might or might not be recognized by a civilization, and a civilizational imperative might be moral, amoral, or immoral. What are the imperatives of a civilization? By what great projects does a civilization stand or fall, and leave its legacy? With what trajectory of history does a civilization identify itself and, through its agency, become an integral part of this history? And, specifically in regard to the extraterrestrialization of humanity and a spacefaring civilization, what kind of a civilization would not only recognize this imperative, but would center itself on and integrate itself with an interstellar imperative?

Also at the first 100YSS symposium in 2011 there was repeated discussion of multi-generational projects and the comparison of interstellar flight to the building of cathedrals. [4] In this analogy, it should be noted that cathedrals were among the chief multi-generational projects and are counted as one of the central symbols of the European middle ages, a central period of agrarian-ecclesiastical civilization. For the analogy to be accurate, the building of starships would need to be among the central symbols of an age of interstellar flight, taking place in a central period of mature industrial-technological civilization capable of producing starships.

To take a particular example, the Duomo of Florence was begun in 1296 and completed structurally in 1436—after 140 years of building. When the structure was started, there was no known way to build the planned dome. (To construct the dome in the conventional manner would have required more timber than was available at that time.) The project went forward nevertheless. This would be roughly equivalent to building the shell of a starship and holding a competition for building the propulsion system 122 years after the project started. The dome itself required 16 years to construct according to Brunelleschi’s innovative double-shelled design of interlocking brickwork, which did not require scaffolding, the design being able to hold up its own weight as it progressed. [5]

It is astonishing that our ancestors, whose lives were so much shorter than ours, were able to undertake such a grand project with confidence, but this was possible given the civilizational context of the undertaking. The central imperative for agrarian-ecclesiastical civilization was to preserve unchanged a social order believed to reflect on Earth the eternal order of the cosmos, and, to this end, to erect monuments symbolic of this eternal order and to suppress any revolutionary change that would threaten this order. (A presumptively eternal order vulnerable to temporal mutability betrays its mundane origin.) And yet, in its maturity, agrarian-ecclesiastical civilization was shaken by a series of transformative revolutions—scientific, political, and industrial—that utterly swept aside the order agrarian-ecclesiastical civilization sought to preserve at any cost. [6]

The central imperative of industrial-technological civilization is the propagation of the STEM cycle, the feedback loop of science producing technologies engineered into industries that produce better instruments for science, which then in turn further scientific discovery (which, almost as an afterthought, produces economic growth and rising standards of living). [7] One cannot help but wonder if this central imperative of industrial-technological civilization will ultimately go the way of agrarian-ecclesiastical civilization, fostering the very conditions it seeks to hold in abeyance. In any case, this imperative of industrial-technological civilization is likely even less well understood today than the elites of agrarian-ecclesiastical civilization understood their own imperative: to suppress change in order to preserve unchanged their civilization’s relation to the eternal.

It may yet come about that the building of an interstellar civilization becomes a central multi-generational project of industrial-technological civilization, as it comes into its full maturity, as a project of this magnitude and ambition would be required in order to sustain the STEM cycle over long-term historical time (what Fernand Braudel called la longue durée). We must recall that industrial-technological civilization is very young—only about two hundred years old—so that it is still far short of maturity, and the greater part of its development is still to come. Agrarian-ecclesiastical civilization was in no position to raise majestic gothic cathedrals for its first several hundred years of existence. Indeed, the early middle ages, a time of great instability, are notable for their lack of surviving architectural monuments. The Florence Duomo was not started until this civilization was several hundred years old, having attained a kind of maturity.

The STEM cycle draws its inspiration from the great scientific, technical, and engineering challenges of industrial-technological civilization. A short list of the great engineering problems of our time might include hypersonic flight, nuclear fusion, and machine consciousness. [8] The recent crash of Virgin Galactic’s SpaceShipTwo demonstrates the ongoing difficulty of mastering hypersonic atmospheric flight. We can say that we have mastered supersonic atmospheric flight, as military jets fly every day and only rarely crash, and we can dependably reach escape velocity with chemical rockets, but building a true spacecraft (whether HOTOL or SSTO) requires mastering hypersonic atmospheric flight as well as the transition to exoatmospheric flight, and this continues to be a demanding engineering challenge.

Similarly, nuclear fusion power generation has proved to be a more difficult challenge than initially anticipated. I recently wrote in One Hundred Years of Fusion that by the time we have mastered nuclear fusion as a power source, we will have been working on fusion for a hundred years at least, making fusion power perhaps the first truly multi-generational engineering challenge of industrial-technological civilization—our very own Florentine Duomo, as it were.

With machine consciousness, if we are honest we will admit that we do not even know where to begin. AI is the more tractable problem, and the challenge that attracts research interest and investment dollars, and increasingly sophisticated AI is likely to be an integral feature of industrial-technological civilization, regardless of our ability to produce artificial consciousness.

Once these demanding engineering problems are resolved, industrial-technological civilization will need to look further afield in its maturity for multi-generational projects that can continue to stimulate the STEM cycle and thus maintain that civilization in a vital, vigorous, and robust form. A starship would be the ultimate scientific instrument produced by technological civilization, constituting both a demanding engineering challenge to build and offering the possibility of greatly expanding the scope of scientific knowledge by studying up close the stars and worlds of our universe, as well as any life and civilization these worlds may harbor. This next great challenge of our civilization, being a challenge so demanding and ambitious that it could come to be the central motif of industrial-technological civilization in its maturity, could be called the interstellar imperative, and we ourselves may be the Axial Age of industrial-technological civilization that first brings this vision into focus.

Even if the closest stars prove to be within human reach in the foreseeable future, the universe is very large, and there will always be further goals for our ambition, as we seek to explore the Milky Way, to reach other galaxies, and to explore our own Laniakea supercluster. This would constitute a civilizational imperative that could not be soon exhausted, and the civilization that sets itself this imperative as its central organizing principle would not lack for inspiration. It is the task of Project Astrolabe at Icarus Interstellar to study just these large-scale concerns of civilization, and we invite interested researchers to join us in this undertaking.

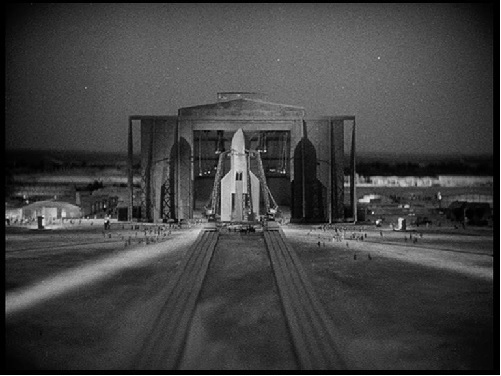

Image: Frau im Mond, Fritz Lang, 1929.

—

Jacob Shively, Heath Rezabek, and Michel Lamontagne read an earlier version of this essay and their comments helped me to improve it.

—

Notes

[1] I have specified intelligence emergent from a brain that supervenes upon a limbic system because in this context I am concerned with peer intelligence. This is not to deny that other forms of intelligence and consciousness are possible; not only are they possible, they are almost certainly exemplified by other species with whom we share our planet, but whatever intelligence or consciousness they possess is recognizable as such to us only with difficulty. Our immediate rapport with other mammals is a sign of our shared brain architecture.

[2] No. 205 in the Brunschvicg ed., and Pascal again: “I see those frightful spaces of the universe which surround me, and I find myself tied to one corner of this vast expanse, without knowing why I am put in this place rather than in another, nor why the short time which is given me to live is assigned to me at this point rather than at another of the whole eternity which was before me or which shall come after me. I see nothing but infinites on all sides, which surround me as an atom and as a shadow which endures only for an instant and returns no more.” (No. 194)

[3] Or our extraterrestrialization might have happened much earlier in human civilization, as Carl Sagan imagined in his Cosmos: “What if the scientific tradition of the ancient Ionian Greeks had survived and flourished? …what if that light that dawned in the eastern Mediterranean 2,500 years ago had not flickered out? … What if science and the experimental method and the dignity of crafts and mechanical arts had been vigorously pursued 2,000 years before the Industrial Revolution? If the Ionian spirit had won, I think we—a different ‘we,’ of course—might by now be venturing to the stars. Our first survey ships to Alpha Centauri and Barnard’s Star, Sirius and Tau Ceti would have returned long ago. Great fleets of interstellar transports would be under construction in Earth orbit—unmanned survey ships, liners for immigrants, immense trading ships to plow the seas of space.” (Cosmos, Chapter VIII, “Travels in Space and Time”)

[4] Prior to the advent of industrial-technological civilization there are few examples of truly difficult engineering problems as we understand them today (i.e., in scientific terms), because technical problems were only rarely studied with a pragmatic eye to their engineering solution. Agrarian-ecclesiastical civilization nevertheless had its own multi-generational intellectual challenges, parallel to the multi-generational scientific challenges of industrial-technological civilization: the familiar problems of theological minutiae, such as the nature of grace, the proper way to salvation, and subtle distinctions within eschatology. All of these problems are essentially insoluble, though for different reasons than those that make a particularly difficult scientific problem appear intractable. Scientific problems, unlike the intractable theological conflicts intrinsic to agrarian-ecclesiastical civilization, can be resolved by empirical research. This is one qualitative difference between these two forms of civilization. Moreover, it is usually the case that the resolution of a difficult scientific problem opens up new horizons of research and sets new (soluble) problems before us. The point here is that the monumental architecture of agrarian-ecclesiastical civilization that remains as its legacy (like the Duomo of Florence, used as an illustration here) was essentially epiphenomenal to the central project of that civilization.

[5] An entertaining account of the building of the dome of the Florence Duomo is to be found in the book The Feud That Sparked the Renaissance: How Brunelleschi and Ghiberti Changed the Art World by Paul Robert Walker.

[6] On the agrarian-ecclesiastical imperative: It is at least possible that the order and stability cultivated by agrarian-ecclesiastical civilization was a necessary condition for the gradual accumulation of knowledge and technology that would ultimately tip the balance of civilization in a new direction.

[7] While the phrase “STEM cycle” is my own coinage (you can follow the links I have provided to read my expositions of it), the idea of our civilization involving an escalating feedback loop is sufficiently familiar to be the source of divergent opinion. For a very different point of view, Nassim Nicholas Taleb argues strongly (though, I would say, misguidedly) against the STEM cycle in his Antifragile: Things That Gain from Disorder (cf. especially Chap. 13, “Lecturing Birds on How to Fly,” in the section, “THE SOVIET-HARVARD DEPARTMENT OF ORNITHOLOGY”); needless to say, he does not use the phrase “STEM cycle.”

[8] This list of challenging technologies should not be taken to be exhaustive. I might also have cited high temperature superconductors, low energy nuclear reactions, regenerative medicine, quantum computing, or any number of other difficult technologies. Moreover, many of these technologies are mutually implicated. The development of high temperature superconductors would transform every aspect of the STEM cycle, as producing strong magnetic fields would become much less expensive and therefore much more widely available. Inexpensive superconducting electromagnets would immediately affect all technologies employing magnetic fields, so that particle accelerators and magnetic confinement fusion would directly benefit, among many other enterprises.

December 4, 2014

Atmospheric Turmoil on the Early Earth

Yesterday’s post about planets in red dwarf systems examined the idea that the slow formation rate of these small stars would have a huge impact on planets that are today in their habitable zone. We can come up with mechanisms that might keep a tidally locked planet habitable, but what do we do about the severe effects of water loss and runaway greenhouse events? Keeping such factors in mind plays into how we choose targets — very carefully — for future space telescope missions that will look for exoplanets and study their atmospheres.

But the question of atmospheres on early worlds extends far beyond what happens on M-dwarf planets. At MIT, Hilke Schlichting has been working on what happened to our own Earth's atmosphere, which was evidently obliterated at least twice since the planet's formation more than four billion years ago. To find out how such events could occur, Schlichting and colleagues at Caltech and Hebrew University have been modeling the effects of impactors that would have struck the Earth in the same era that the Moon was formed, concluding that it was the effect of small planetesimals rather than any giant impactor that would have destroyed the early atmosphere.

“For sure, we did have all these smaller impactors back then,” Schlichting says. “One small impact cannot get rid of most of the atmosphere, but collectively, they’re much more efficient than giant impacts, and could easily eject all the Earth’s atmosphere.”

Image: An early Earth under bombardment. Credit: NASA.

A single large impact could indeed have dispersed most of the atmosphere, but as the new paper in Icarus shows, impactors of 25 kilometers or less would have had the same effect with a great deal less mass. While a single such impactor would eject atmospheric gas on a line perpendicular to the impactor’s trajectory, its effect would be small. Completely ejecting the atmosphere would call for tens of thousands of small impacts, a description that fits the era 4.5 billion years ago. Calculating atmospheric loss over the range of impactor sizes, the team found that the most efficient impactors are small planetesimals of about 2 kilometers in radius.
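Why many small impacts can do what one cannot is largely a matter of compounding. The sketch below is a minimal illustration, not the paper's model: it assumes, hypothetically, that every impact strips the same small fraction `f` of whatever atmosphere remains, and it ignores the replenishment by impactor volatiles discussed further on. The value of `f` is an illustrative choice.

```python
import math

def impacts_needed(loss_fraction_per_impact: float, remaining_fraction: float) -> int:
    """Impacts needed to reduce the atmosphere to `remaining_fraction` of its
    original mass, assuming each impact removes the same fraction of what is left
    (simplifying assumption: constant per-impact loss, no replenishment)."""
    # After n impacts the remaining mass is (1 - f)**n; solve (1 - f)**n <= target.
    n = math.log(remaining_fraction) / math.log(1.0 - loss_fraction_per_impact)
    return math.ceil(n)

# Hypothetical per-impact loss of 0.01% of the remaining atmosphere.
f = 1e-4
print(impacts_needed(f, 0.01), "impacts to strip 99% of the atmosphere")
```

With this assumed `f`, the answer lands in the tens of thousands, the same order as the number of impacts the post describes; a larger or smaller per-impact fraction shifts the count proportionally.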

Schlichting believes that giant impacts cannot explain the loss of atmosphere, for recent work uses the existence of noble gases like helium-3 inside today’s Earth to argue against the formation of a magma ocean, the consequence of any such giant impact. From the paper:

Recent work suggests that the Earth went through at least two separate periods during which its atmosphere was lost and that later giant impacts did not generate a global magma ocean (Tucker & Mukhopadhyay 2014). Such a scenario is challenging to explain if atmospheric mass loss was a byproduct of giant impacts, because a combination of large impactor masses and large impact velocities is needed to achieve complete atmospheric loss… Furthermore, giant impacts that could accomplish complete atmospheric loss, almost certainly will generate a global magma ocean. Since atmospheric mass loss due to small planetesimal impacts will proceed without generating a global magma ocean they offer a solution to this conundrum.

The same impactors that drove atmospheric loss would, in this scenario, introduce new volatiles as planetesimals melted after impact. By the researchers’ calculations, a significant part of the atmosphere may have been replenished by these tens of thousands of small impactors. What happens to a newly formed planet that can lead to the emergence of life? Learning about the primordial atmosphere shows us a planet at a stage when life was about to take hold, which is why Schlichting’s team wants to move forward with an analysis of the geophysical processes that, in conjunction with small impactors, so shaped Earth’s early environment.

The paper is Schlichting et al., “Atmospheric Mass Loss During Planet Formation: The Importance of Planetesimal Impacts,” Icarus Vol. 247 (February 2015), pp. 81-94 (abstract / preprint).

December 3, 2014

Enter the ‘Mirage Earth’

A common trope from Hollywood’s earlier decades was the team of explorers, or perhaps soldiers, lost in the desert and running out of water. On the horizon appears an oasis surrounded by verdant green, but it turns out to be a mirage. At the University of Washington, graduate student Rodrigo Luger and co-author Rory Barnes have deployed the word ‘mirage’ to describe planets that, from afar, look promisingly like a home for our kind of life. But the reality is that while oxygen may be present in their atmospheres, they’re actually dry worlds that have undergone a runaway greenhouse state.

This is a startling addition to our thinking about planets around red dwarf stars, where the concerns have largely revolved around tidal lock — one side of the planet always facing the star — and flare activity. Now we have to worry about another issue, for Luger and Barnes argue in a paper soon to be published in Astrobiology that planets that form in the habitable zone of such stars, close in to the star, may experience extremely high surface temperatures early on, causing their oceans to boil and their atmospheres to become steam.

Image: Illustration of a low-mass, M dwarf star, seen from an orbiting rocky planet. Credit: NASA/JPL.

The problem is that M dwarfs can take up to a billion years to settle firmly onto the main sequence: because of their lower mass and lower gravity, they take hundreds of millions of years longer than larger stars to complete their collapse. Planets may form within the first 10 to 100 million years, and during this period the parent star would be hot and bright enough to heat the upper planetary atmosphere to thousands of degrees. Ultraviolet radiation can split water into hydrogen and oxygen atoms, with the hydrogen escaping into space.

Left behind is an oxygen envelope that can be as much as ten times denser than the atmosphere of Venus. The paper cites recent work by Keiko Hamano (University of Tokyo) and colleagues that makes the case for two entirely different classes of terrestrial planets. Type I worlds are those that undergo only a short-lived greenhouse effect during their formation period. Type II, on the other hand, comprises those worlds that form inside a critical distance from the star. These can stay in a runaway greenhouse state for as long as 100 million years, and unlike Type I, which retains most of its water, Type II produces a desiccated surface inimical to life.

Luger and Barnes, extending Hamano's work and drawing on Barnes' previous studies of early greenhouse effects on planets orbiting white and brown dwarfs as well as on exomoons, consider this loss of water and build-up of atmospheric oxygen a major factor in assessing possible habitability. From the paper:

During a runaway greenhouse, photolysis of water vapor in the stratosphere followed by the hydrodynamic escape of the upper atmosphere can lead to the rapid loss of a planet’s surface water. Because hydrogen escapes preferentially over oxygen, large quantities of O2 also build up. We have shown that planets currently in the HZs of M dwarfs may have lost up to several tens of terrestrial oceans (TO) of water during the early runaway phase, accumulating O2 at a constant rate that is set by diffusion: about 5 bars/Myr for Earth-mass planets and 25 bars/Myr for super-Earths. At the end of the runaway phase, this leads to the buildup of hundreds to thousands of bars of O2, which may or may not remain in the atmosphere.
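The rates in that passage translate directly into surface pressures. The sketch below multiplies the quoted diffusion-limited rates by a runaway phase of 100 Myr; that duration is an illustrative assumption, and the sketch ignores any surface sinks or escape of O2, so it gives an upper-bound style estimate.

```python
# Diffusion-limited O2 accumulation rates quoted by Luger & Barnes (bars per Myr).
O2_RATE_BARS_PER_MYR = {"Earth-mass": 5.0, "super-Earth": 25.0}

def o2_buildup(rate_bars_per_myr: float, runaway_duration_myr: float) -> float:
    """O2 accumulated (bars) at a constant rate over the runaway phase,
    ignoring surface sinks and O2 escape (simplifying assumption)."""
    return rate_bars_per_myr * runaway_duration_myr

for planet, rate in O2_RATE_BARS_PER_MYR.items():
    # 100 Myr is an illustrative duration, not a value taken from the paper.
    print(planet, o2_buildup(rate, 100.0), "bars")
```

The results, 500 and 2500 bars, fall within the "hundreds to thousands of bars" the authors describe. For scale, Earth's surface pressure is about 1 bar and Venus' about 92 bars.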

Thus the danger in using oxygen as a biosignature: In cases like these, it will prove unreliable, and the planet uninhabitable despite elevated levels of oxygen. The key point is that the slow evolution of M dwarfs means that their habitable zones begin much further out than they will eventually become as the star continues to collapse into maturity. Planets that are currently in the habitable zone of these stars would have been well inside the HZ when they formed. An extended runaway greenhouse could play havoc with the planet’s chances of developing life.
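The inward migration of the habitable zone follows from the inverse-square law: a planet receives Earth-like insolation at a distance of roughly the square root of the stellar luminosity (in solar units, giving AU). The sketch below uses a hypothetical M dwarf at 1% of the Sun's luminosity today and assumes it was ten times brighter when young. Both numbers are illustrative, and real habitable zone limits also depend on stellar temperature.

```python
import math

def hz_distance_au(luminosity_lsun: float, earth_equivalent_flux: float = 1.0) -> float:
    """Distance (AU) at which a planet receives `earth_equivalent_flux` times
    Earth's insolation. Pure inverse-square scaling (simplifying assumption)."""
    return math.sqrt(luminosity_lsun / earth_equivalent_flux)

def relative_flux(luminosity_lsun: float, distance_au: float) -> float:
    """Insolation relative to Earth's: L / d^2 in solar units."""
    return luminosity_lsun / distance_au**2

# Hypothetical M dwarf: 1% of the Sun's luminosity today, 10x brighter when young.
L_now, L_young = 0.01, 0.1
d_planet = hz_distance_au(L_now)  # ~0.1 AU: Earth-like insolation today
print(f"planet at {d_planet:.2f} AU")
print(f"young-star HZ at {hz_distance_au(L_young):.2f} AU")
print(f"early insolation: {relative_flux(L_young, d_planet):.1f}x Earth's")
```

Under these assumptions, a planet sitting in today's habitable zone would once have received about ten times Earth's insolation, well inside the early habitable zone.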

Even on planets with highly efficient surface sinks for oxygen, the bone-dry conditions mean that the water needed to propel a carbonate-silicate cycle like the one that removes carbon dioxide from the Earth’s atmosphere would not be present, allowing the eventual build-up of a dense CO2 atmosphere, one that later bombardment by water-bearing comets or asteroids would be hard pressed to overcome. In both cases habitability is compromised.

So a planet now in the habitable zone of a red dwarf may have followed a completely different course of atmospheric evolution than the Earth. Such a world could retain enough oxygen to be detectable by future space missions, causing us to view oxygen as a biosignature with caution. Says Luger: “Because of the oxygen they build up, they could look a lot like Earth from afar — but if you look more closely you’ll find that they’re really a mirage; there’s just no water there.”

The paper is Luger and Barnes, “Extreme Water Loss and Abiotic O2 Buildup On Planets Throughout the Habitable Zones of M Dwarfs,” accepted at Astrobiology (preprint). The paper by Hamano et al. is “Emergence of two types of terrestrial planet on solidification of magma ocean,” Nature 497 (30 May 2013), pp. 607-610 (abstract). A University of Washington news release is also available.

December 2, 2014

Ground Detection of a Super-Earth Transit

Forty light years from Earth, the planet 55 Cancri e was detected about a decade ago using radial velocity methods, in which the motion of the host star to and from the Earth can be precisely measured to reveal the signature of the orbiting body. Now comes news that 55 Cancri e has been bagged in a transit from the ground, using the 2.5-meter Nordic Optical Telescope on the island of La Palma, Spain. That makes the distant world’s transit the shallowest we’ve yet detected from the Earth’s surface, which bodes well for future small planet detections.

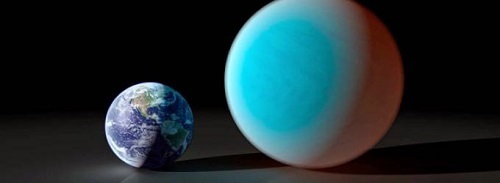

Maybe ‘small’ isn’t quite the right word — 55 Cancri e is actually almost 26000 kilometers in diameter, a bit more than twice the diameter of the Earth — which turned out to be enough to dim the light of the parent star by 1/2000th for almost two hours. The planet’s period is 18 hours, bringing it close enough to reach temperatures on the dayside of 1700° Celsius. As the innermost of the five known worlds around 55 Cancri, 55 Cancri e is not a candidate for life.

Image: A comparison between the Earth and 55 Cancri e. Credit: Harvard-Smithsonian Center for Astrophysics.
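Both numbers in the paragraph above follow from textbook relations. Transit depth is roughly (planet radius / star radius)², and Kepler's third law converts the 18-hour period into an orbital distance. The stellar radius (about 0.94 solar radii) and mass (about 0.9 solar masses) below are assumed approximate values for 55 Cancri A, not figures from the post, and the sketch ignores limb darkening.

```python
import math

R_SUN_KM = 695_700.0
G_M_SUN = 1.327_124_4e20   # m^3 s^-2, the Sun's gravitational parameter
AU_M = 1.495_978_707e11

def transit_depth(planet_radius_km: float, star_radius_km: float) -> float:
    """Fractional dimming as the planet's disk crosses the star: (Rp/Rs)^2."""
    return (planet_radius_km / star_radius_km) ** 2

def orbital_radius_au(period_hours: float, star_mass_msun: float) -> float:
    """Kepler's third law, a^3 = G M P^2 / (4 pi^2), neglecting the planet's mass."""
    p_s = period_hours * 3600.0
    a_m = (G_M_SUN * star_mass_msun * p_s**2 / (4.0 * math.pi**2)) ** (1.0 / 3.0)
    return a_m / AU_M

# Assumed stellar parameters for 55 Cancri A (approximate, not from the post).
depth = transit_depth(26_000 / 2, 0.94 * R_SUN_KM)
print(f"depth ~ 1/{1 / depth:.0f}")
print(f"a ~ {orbital_radius_au(18.0, 0.9):.4f} AU")
```

The estimated depth of a few parts in ten thousand is the same order as the reported 1/2000; the exact figure depends on the stellar radius and limb darkening. The orbit of roughly 0.016 AU shows how close to its star the planet is.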

Previous transits of this world were reported by two spacecraft, MOST (Microvariability & Oscillations of Stars) and the Spitzer Space Telescope, which works in the infrared. What’s exciting about the new transit observation is that as new observatories like TESS (Transiting Exoplanet Survey Satellite) come online, the number of small candidate worlds will zoom. Each will need follow-up observation, but the sheer numbers will overload both space-based and future ground observatories. Learning how to do this work using existing instruments gives us a way to put smaller telescopes into play, a cost-effective option for continuing the hunt.

The work on 55 Cancri e grows out of an effort to demonstrate the capacity of smaller telescopes and associated instruments for such detections. Having succeeded with one planet, the researchers look at some of the problems that arise when using moderate-sized telescopes:

In the case of bright stars, scintillation noise is the dominant limitation for small telescopes, but scintillation can be significantly reduced with some intelligent planning. With the current instrumentation, the main way to do this is to use longer exposure times and thereby reduce the overheads. However, longer exposure times will likely result in saturation of the detector, which can be mitigated by either defocusing the telescope (resulting in a lower spectral resolution, and possible slitlosses), using a higher resolution grating (which will decrease the wavelength coverage), or using a neutral density filter to reduce the stellar flux. The latter option will be offered soon at the William Herschel Telescope. Another possible solution is to increase default gain levels of standard CCDs.
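The trade-off in that passage can be made concrete with a widely used empirical estimate of scintillation noise, Young's (1967) approximation. This is a generic formula from the literature, not necessarily the noise model de Mooij et al. used. Per exposure, scintillation falls as the square root of exposure time, so without overheads the total noise over a transit would not depend on exposure length. Readout overheads are what make longer exposures pay off. The aperture, site altitude, airmass, and 20 s overhead below are illustrative assumptions.

```python
import math

def scintillation_sigma(aperture_cm: float, airmass: float, altitude_m: float,
                        exposure_s: float) -> float:
    """Young's approximation for fractional scintillation noise per exposure:
    0.09 * D^(-2/3) * X^1.75 * exp(-h / 8000 m) * (2 t)^(-1/2), D in cm, t in s."""
    return (0.09 * aperture_cm ** (-2.0 / 3.0) * airmass ** 1.75
            * math.exp(-altitude_m / 8000.0) / math.sqrt(2.0 * exposure_s))

def transit_scintillation(aperture_cm, airmass, altitude_m, exposure_s,
                          overhead_s, window_s):
    """Scintillation averaged over an observing window: per-exposure noise divided
    by sqrt(number of exposures). Overheads cut the number of exposures that fit
    in the window without lowering the noise of each one."""
    n_exposures = window_s // (exposure_s + overhead_s)
    return (scintillation_sigma(aperture_cm, airmass, altitude_m, exposure_s)
            / math.sqrt(n_exposures))

# Illustrative assumptions: 2.5 m aperture, ~2400 m site, airmass 1.2,
# a 2-hour window and 20 s of readout overhead per exposure.
for t_exp in (5.0, 30.0, 120.0):
    sigma = transit_scintillation(250.0, 1.2, 2400.0, t_exp, 20.0, 7200.0)
    print(f"{t_exp:>5.0f} s exposures: {sigma * 1e6:.0f} ppm")
```

Under these assumptions, the binned noise drops as exposures lengthen, which is the argument for longer exposures. The cost is detector saturation, the problem the quoted passage goes on to address.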

So if we do find numerous super-Earths in upcoming survey missions, we can spread the task of planet confirmation to a broader pool of participants as our expertise develops. As for 55 Cancri itself, it continues to intrigue us, the first system known to have five planets. It’s also a binary system, consisting of a G-class star and a smaller red dwarf separated by about 1000 AU. While 55 Cancri e does transit, the other planets evidently do not, though there have been hints of an extended atmosphere on 55 Cancri b that may at least partially transit the star.

The paper is de Mooij et al., “Ground-Based Transit Observations of the Super-Earth 55 Cnc e,” accepted for publication in the Astrophysical Journal Letters (preprint).

December 1, 2014

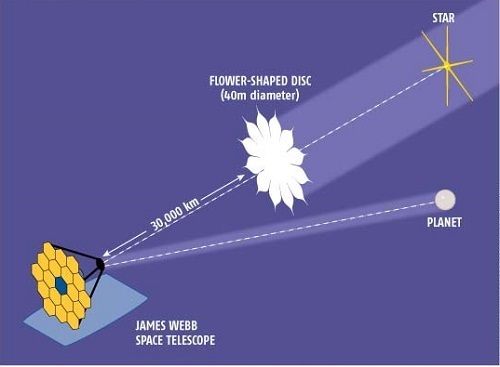

WFIRST: The Starshade Option

What’s ahead for exoplanet telescopes in space? Ashley Baldwin, who tracks today’s exciting developments in telescope technology, today brings us a look at how a dark energy mission, WFIRST, may be adapted to perform exoplanet science of the highest order. One possibility is the use of a large starshade to remove excess starlight and reveal Earth-like planets many light years away. A plan is afoot to make starshades happen, as explained below. Dr. Baldwin is a consultant psychiatrist at the 5 Boroughs Partnership NHS Trust (Warrington, UK), a former lecturer at Liverpool and Manchester Universities and, needless to say, a serious amateur astronomer.

by Ashley Baldwin

Big things have small beginnings. Hopefully.

Many people will be aware of NASA’s proposed 2024 WFIRST mission. Centauri Dreams readers will also be aware that this mission was originally identified in the 2010 Decadal Survey roadmap as a mission with a $1.7 billion budget to explore and quantify “dark energy”. As an add-on, given that the mission involved prolonged viewing of large areas of space, an exoplanet “microlensing” element as a secondary goal, with no intrusion on the dark energy component, was also proposed.

Researchers believed that the scientific requirements would be best met by a wide-field telescope, and various design proposals were put forward. All of these had to sit within a budget envelope that also included testing, 5 years of mission operations costs and a launch vehicle. This allowed a telescope with a maximum aperture of 1.5 m, a size that has been used many times before, thus reducing the risk and the need for the extensive and costly pre-mission testing that had been required for JWST. As the budget fluctuated over the years, the aperture was reduced to 1.3 m.

Then suddenly in 2012 the National Reconnaissance Office (NRO) donated two 2.4 m telescopes to NASA. These were similar in size, design and quality to Hubble, so they had lots of "heritage", but critically they were also wide-field, large, and worked largely but not exclusively in the infrared. What an opportunity: such a scope would collect more than three times as much light as the 1.3 m telescope.
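The light-grasp comparison comes from the fact that collecting area scales with the square of the aperture diameter. Here is a quick check, ignoring central obstructions and spiders, which differ between designs:

```python
def area_ratio(d1_m: float, d2_m: float) -> float:
    """Ratio of collecting areas of two circular apertures
    (ignores central obstruction and support struts)."""
    return (d1_m / d2_m) ** 2

for smaller in (1.5, 1.3, 1.1):
    print(f"2.4 m vs {smaller} m: {area_ratio(2.4, smaller):.2f}x the collecting area")
```

The 2.4 m mirror gathers about 3.4 times the light of a 1.3 m mirror and about 4.8 times that of the 1.1 m EXO-S telescope discussed below.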

Tuning Up WFIRST

At this point a proposal was put forward to add in a coronagraph in order to increase the exoplanet and science return of WFIRST, always a big driver in prioritising missions. This coronagraph is a set of mirrors and masks, an “optical train” that is placed between the telescope aperture and the “focal plane” in order to remove starlight reaching the focal plane while allowing the much dimmer reflected light of any orbiting exoplanet to do so, allowing imaging and spectrographic analysis. Given the proposed savings presented by using a “free” mirror it was felt that this addition could be made at no extra cost to the mission itself.

The drawback is that the telescope architecture of the two NRO mirrors is not designed for this purpose. There are internal supporting struts or "spiders" that could get in the way of the complex apparatus of any type of coronagraph, impinging on its performance (these struts are what produce the spikes seen in images from reflecting telescopes). The task of fitting a coronagraph was not felt to be insurmountable, though, and the potentially huge science return was deemed worth it, so NASA funded an investigation into the best use of the new telescopes for WFIRST.

The result was WFIRST-AFTA, ostensibly a new dark energy mission with all of its old requirements but with the addition of a coronagraph. Subsequent independent analyses by organisations such as the NRC (National Research Council – the auditors), however, suggested that adding a coronagraph could increase the overall mission cost to over $2 billion. This was largely driven by the use of "new" technology that might need protracted and costly testing. The spectre of JWST and its damaging costs still looms and now influences space telescope policy. Although disputed, this assessment rests on the premise that, despite over ten years of research, coronagraph technology is still somewhat immature and in need of further development. A research grant was awarded to coronagraph testbed centres to rectify this.

Image: An artist’s rendering of WFIRST-AFTA (Wide Field Infrared Survey Telescope – Astrophysics Focused Telescope Assets). From the cover of the WFIRST-AFTA Science Definition Team Final Report (2013-05-23). Credit: NASA / Goddard Space Flight Center WFIRST Project – Mark Melton, NASA/GSFC.

Another issue was whether WFIRST funding would be delivered in the 2017 budget. This is far from certain, given the ongoing requirement to pay off the JWST "debt". Those who remember the various TPF initiatives that followed a similar pathway and were ultimately scrapped at a late stage will shiver in apprehension. And that was before the huge overspend and delay of JWST, largely due to the use of numerous new technologies and their related testing. Congress was understandably wary of a repeat.

This spawned proposals for the two cheaper "backup" concepts I have talked of before, EXO-S and EXO-C. These are probe-class concepts with a $1 billion budget cap. Primary and backup proposals will be submitted in 2015. EXO-S itself involved a 1.1 m mirror with a 34 m starshade to block out starlight, rather than the coronagraphs of WFIRST-AFTA and EXO-C. Its back-up plan proposed using the starshade with a pre-existing telescope; with JWST already irrevocably ruled out, that left only WFIRST. Meanwhile, a research budget was allocated to bring coronagraph technology up to a higher "technology readiness level".

Recently, sources have suggested that the EXO-S back-up option has come under closer scrutiny, with the possibility of combining WFIRST with a starshade becoming more realistic. At present that would only involve making WFIRST "starshade ready", which means adding the two-way guidance systems needed to link the telescope and a starshade for joint operation. The cost is only a few tens of millions and easily covered by some of the coronagraph research fund plus assistance from the Japanese space agency JAXA (which may well fund the coronagraph too, taking the pressure off NASA). This doesn't cover the starshade itself, however, which would need a separate, later funding source.

Rise of the Starshade

What is a starshade? The idea was originally put forward in 2006 by astronomer Webster Cash of the University of Colorado; Cash is now part of the EXO-S team led by Professor Sara Seager of MIT. The starshade was proposed as a cheap alternative to the high-quality mirrors (up to twenty times smoother than Hubble's) and the advanced precision wavefront control of light entering the telescope that an internal coronagraph requires. A starshade is essentially a large occulting device that acts as an independent satellite and is placed between a star being investigated for exoplanets and a telescope staring at it. In doing so, it blocks out the light of the star itself but allows any exoplanet light to pass. It is exactly the same principle as a coronagraph (a device originally developed to image the solar corona), only outside the telescope rather than inside it. The brightness of a planet relative to its star is determined not by the star's brightness so much as by the planet's own reflectivity, its radius and its distance from the star, which is why anyone viewing our solar system from a distance would see both Jupiter and Venus more easily than Earth.

Image: A starshade blocks out light from the parent star, allowing the exoplanet under scrutiny to be revealed. Credit: University of Colorado/Northrop Grumman.

Any exoplanetary observatory has two critical parameters that determine its sensitivity. The first is how close to the star it can cut out starlight, allowing nearby planets to be imaged: the so-called Inner Working Angle (IWA). For the current WFIRST plus starshade mission, this is 100 milliarcseconds (mas), which for nearby stars would encompass any planet orbiting at Earth's distance from a Sun-like star. Remember that Earth sits within a "habitable zone" (HBZ), defined by temperatures that allow liquid water on the planetary surface. The HBZ is determined by the star's temperature and thus its size, so unfortunately a 100 mas IWA would not allow viewing of planets much closer to a smaller star with a smaller HBZ, such as a K-class star. A slightly less important parameter is the Outer Working Angle (OWA), which determines how far out from the star the imager can see. This limits coronagraphs, with their narrow field, but not starshades, which enable planetary imaging out to many astronomical units from the star.
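The link between an IWA and the stars it can usefully survey follows from the definition of the parsec: a planet 1 AU from its star appears 1 arcsecond from it when seen from 1 pc. Separation in mas is therefore 1000 × a(AU) / d(pc). The sketch assumes maximum elongation and the small-angle approximation. The 0.5 AU K-dwarf habitable zone is a hypothetical round number, and 65 mas is the New Worlds Observer IWA mentioned later.

```python
def separation_mas(orbit_au: float, distance_pc: float) -> float:
    """Angular separation of a planet from its star in milliarcseconds
    (maximum elongation, small-angle approximation)."""
    return 1000.0 * orbit_au / distance_pc

def max_distance_pc(orbit_au: float, iwa_mas: float) -> float:
    """Farthest star for which a planet at `orbit_au` lies outside the IWA."""
    return 1000.0 * orbit_au / iwa_mas

print(max_distance_pc(1.0, 100.0))  # Earth analogue, 100 mas IWA
print(max_distance_pc(0.5, 100.0))  # hypothetical K-dwarf HZ at ~0.5 AU
print(max_distance_pc(0.5, 65.0))   # same planet with a 65 mas IWA
```

With a 100 mas IWA, Earth analogues are reachable out to about 10 pc. A planet in a tighter 0.5 AU habitable zone is only reachable out to about 5 pc, which is why smaller stars are harder targets.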

The second key parameter is "contrast brightness", or fractional planetary brightness. Jupiter-sized planets are, on average, a billion times dimmer than their star in visible light, and this worsens by a factor of ten for smaller Earth-sized planets. The difference drops by about 100-1000 times in infrared light. The WFIRST starshade is designed to work predominantly in visible light, just creeping into the infrared at 825 nm, because the most common and cheapest light detectors available for telescopes, CCDs, operate best in this range. Thus to image a planet, the light of a parent star needs to be reduced by at least a billion times to see gas giants and ten billion times to see terrestrial planets. The WFIRST starshade reduces starlight by ten billion times (a contrast of 10⁻¹⁰) before its data are processed, improving to 3 × 10⁻¹¹ after processing. So in terms of contrast, WFIRST can image both terrestrial and gas giant planets. The 100 mas IWA allows imaging of planets in the HBZ of Sun-like stars and brighter K-class stars (whose HBZs overlap with those of larger stars).
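These contrast levels follow from a simple reflected-light relation. The planet-to-star flux ratio is roughly the geometric albedo times (planet radius / orbital distance)², times a phase factor. The star's own luminosity cancels out, as noted above. The albedos and radii below are approximate values assumed for illustration, and the phase factor is set to full phase. A Lambertian planet at quadrature would be fainter by a factor of π.

```python
AU_KM = 1.495_978_707e8

def reflected_contrast(geometric_albedo: float, radius_km: float, orbit_au: float,
                       phase_function: float = 1.0) -> float:
    """Planet-to-star flux ratio in reflected light: A_g * (R_p / a)^2 * Phi.
    Phi = 1 at full phase; about 1/pi at quadrature for a Lambert sphere."""
    return geometric_albedo * (radius_km / (orbit_au * AU_KM)) ** 2 * phase_function

# Approximate visible-light geometric albedos, radii (km) and orbits (AU); assumed values.
planets = {"Venus": (0.69, 6052, 0.723), "Earth": (0.37, 6371, 1.0),
           "Jupiter": (0.54, 69911, 5.20)}
for name, (albedo, radius, a) in planets.items():
    print(f"{name}: {reflected_contrast(albedo, radius, a):.1e}")
```

Jupiter and Venus come out at a few times 10⁻⁹ and Earth below 10⁻⁹. This matches the billion-to-ten-billion suppression levels quoted above and shows why Venus and Jupiter would outshine Earth to a distant observer.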

By way of comparison, WFIRST-AFTA with its coronagraph can only reduce the star's light by a billion times, which allows only gas giants to be viewed. Its IWA is just inside Mars' orbit, so it can see the HBZ only for some Sun-like or larger stars, as the light entering the telescope diminishes with distance. EXO-C can do better than this, and aims to view terrestrial planets around nearby stars. It is limited instead by the amount of light it can collect with its smaller 1.5 m aperture, less than half the collecting area of WFIRST, and less still once that light has passed through the extended "optical train" of the coronagraph and the deformable mirrors used for the critical precision wavefront control.

One thing that affects starshades and indeed all forms of exoplanet imaging is that other objects appearing in an imaging field can mimic a planet although they are in fact either nearer or further away. Nebulae, supernovae and quasars are a few of the many possibilities. In general, these can be separated out by spectroscopy, as they will have spectra different from exoplanets that mimic their parent star’s light in reflection. One significant exception is “exozodiacal light”. This phenomenon is caused by the reflection of starlight from free dust within its system.

The dust arises from collisions between comets and asteroids within the system. Exozodiacal light is measured in "zodis", which express the ratio of the dust's luminosity to that of the star, where one zodi is the level found in our own solar system. For our own system this ratio is only 10⁻⁷. In general the age of the system determines how high the zodiacal light ratio is, with older systems like our own having less as their dust dissipates. Thus zodiacal light in the Sol system is lower than in many systems, some of which have up to a thousand times the level. This causes problems in imaging exoplanets, as exozodiacal light is reflected starlight at the same wavelengths as a planet's, and the dust can clump together to form planet-like features. Detailed photometry is needed to separate planets from this exozodiacal confounder, prolonging integration time in the process.

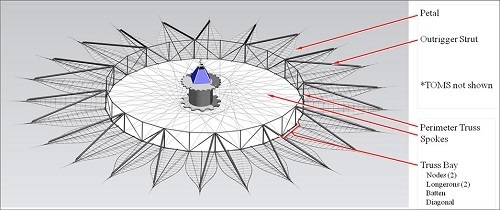

Pros and Cons of Starshades

No one has ever launched a starshade, nor has anyone built a full-scale one, although large-scale operational mock-ups have been built and deployed on the ground. Much of their testing has been in simulation. The key challenge is that, being big, starshades would need to be folded up inside their launch vehicle and "deployed" once in orbit. Similar things have been done with conventional antennae in orbit, so the principle is not totally new. The other issue is so-called "formation" flying. To work, the shade must cast an exact shadow over the viewing telescope throughout any imaging session, and sessions could last days or weeks. This requires the relative positions of telescope and starshade to be controlled to an accuracy of plus or minus one metre, even though for WFIRST the starshade-telescope separation must be 35 thousand kilometres. Such precision has been achieved before, but only over much shorter distances of tens of metres. Even so, this is not perceived as a major obstacle.

Image: Schematic of a deployed starshade. TOMS: Thermo-Optical Micrometeorite Shield. Credit: NASA/JPL/Princeton University.
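Here is a back-of-the-envelope check on the formation-flying geometry. As seen from the telescope, the starshade's radius subtends an angle of roughly R/z, which sets a geometric inner working angle. The ±1 m positioning tolerance can be expressed the same way. The sketch assumes the 34 m EXO-S starshade diameter also applies to the WFIRST case, since the post gives the 35,000 km separation but not a diameter for the WFIRST shade.

```python
import math

MAS_PER_RAD = 180.0 / math.pi * 3600.0 * 1000.0  # milliarcseconds per radian

def geometric_iwa_mas(shade_diameter_m: float, separation_km: float) -> float:
    """Angle subtended by the starshade's radius as seen from the telescope:
    a rough geometric inner working angle (small-angle approximation)."""
    return (shade_diameter_m / 2.0) / (separation_km * 1000.0) * MAS_PER_RAD

def alignment_tolerance_mas(lateral_error_m: float, separation_km: float) -> float:
    """Angular size of a lateral positioning error at the starshade's distance."""
    return lateral_error_m / (separation_km * 1000.0) * MAS_PER_RAD

print(f"IWA ~ {geometric_iwa_mas(34.0, 35_000.0):.0f} mas")
print(f"+/-1 m ~ {alignment_tolerance_mas(1.0, 35_000.0):.1f} mas")
```

Under that assumption, the geometry reproduces the roughly 100 mas IWA quoted earlier. A one-metre drift corresponds to only about 6 mas on the sky, which shows how tight the alignment requirement is.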

A further issue is "slew" time. Either the telescope or the starshade must be self-propelled in order to move into the correct position for imaging; for the WFIRST mission this will be the starshade. Moving from one viewing position to another can take days, with an average time between viewings of 11 days. Although moving the starshade is time-consuming, it isn't a problem: recall that first and foremost WFIRST is a dark energy mission, so the in-between time can be used for dark energy observations.

What are the advantages and disadvantages of starshades? To some extent we have touched on the disadvantages already. They are bulky, out of necessity requiring larger and separate launch vehicles, with unfolding or “deployment” in orbit, factors that increase costs. They require precision flying. I mentioned earlier that at longer wavelengths the contrast between planet and star diminishes, which suggests that viewing in the infrared would be less demanding.

Unfortunately, longer-wavelength light is more likely to "leak" by diffraction around the starshade, corrupting the image. This is an issue for starshades even in visible light, and it is for this reason that they are flower-shaped, with anything from 12 to 28 petals, a design that has been found to reduce diffraction significantly. The starshade will also carry a finite amount of propellant, allowing up to 100 pointings before it runs out. A coronagraph, conversely, should last as long as the telescope it is working in.
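Why longer wavelengths leak more can be seen from the Fresnel number N = R² / (λz), which governs how well an occulter of radius R at distance z suppresses diffracted light. Suppression degrades as N falls, and N falls as wavelength rises. The sketch assumes a 17 m radius (the 34 m EXO-S shade) at the 35,000 km separation. The shorter wavelengths are illustrative, while 825 nm is the long-wavelength limit mentioned above.

```python
def fresnel_number(shade_radius_m: float, separation_m: float, wavelength_m: float) -> float:
    """Fresnel number N = R^2 / (lambda * z). Starshade suppression worsens as N
    falls, so longer wavelengths (smaller N) leak more light around the edges."""
    return shade_radius_m**2 / (wavelength_m * separation_m)

for wl_nm in (450, 600, 825):
    print(wl_nm, "nm:", round(fresnel_number(17.0, 3.5e7, wl_nm * 1e-9), 1))
```

The Fresnel number roughly halves between blue light and 825 nm. Pushing a starshade of a given size further into the infrared would require it to be larger or farther away.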

Critically, because of the unpredictable thermal environment around Earth, as well as the obstructive effect of the Earth and Moon (and Sun), starshades cannot be used in Earth orbit. This means either a Kepler-like "Earth-trailing" orbit (like EXO-C) or an orbit at the Sun-Earth L2 Lagrange point, 1.5 million km outward from Earth. Such orbits are more complex, and thus more costly, than the geosynchronous orbit originally proposed. WFIRST is also designed to be service/repair friendly. Servicing is likely to be possible through robotic or manned missions, but a mission to L2 is an order of magnitude harder and more expensive.

The benefits of starshades are numerous. We have talked of formation flying. For coronagraphs, the equivalent, and if anything more difficult, issue is wavefront control. Additional deformable mirrors are required to achieve this, which add yet more elements between the aperture and focal plane. A coronagraph can have up to thirty extra mirrors. No mirror is perfect, and even with 95% reflectivity per mirror, a substantial amount of light from an already very dim target is lost over the length of the "optical train". Furthermore, coronagraphs demand the use of light-blocking masks, because part of the coronagraph blocks light in a non-circular way, so care has to be taken that unwanted starlight outside this area does not go on to pollute the light reaching the telescope focal plane.
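The compounding loss along an optical train is easy to quantify. Each reflection at 95% passes 0.95 of the light, so n mirrors pass 0.95ⁿ. The sketch counts reflections only and ignores masks, filters and detector efficiency, so it illustrates the trend rather than any specific instrument's throughput.

```python
import math

def train_throughput(reflectivity: float, n_mirrors: int) -> float:
    """Fraction of light surviving n reflections (ignores masks and filters)."""
    return reflectivity ** n_mirrors

def mirrors_for_throughput(reflectivity: float, target: float) -> float:
    """Number of reflections that bring throughput down to `target`."""
    return math.log(target) / math.log(reflectivity)

for n in (10, 20, 30):
    print(n, "mirrors:", f"{train_throughput(0.95, n):.0%}")
print(f"{mirrors_for_throughput(0.95, 0.37):.1f} reflections give 37% throughput")
```

Thirty reflections leave only about a fifth of the light, and around nineteen reflections alone would account for a 37% throughput like EXO-C's.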

Even in the bespoke EXO-C, only 37% of the light reaches the focal plane, and WFIRST-AFTA will be worse. It is possible to alleviate this by improving the quality of the primary mirror, but with WFIRST's existing mirror this is obviously not an option. In essence, a starshade takes the pressure off the telescope: the IWA and contrast ratio depend largely on the starshade rather than on the telescope. Thus starshades can work with most telescopes irrespective of type or quality, without recourse to the complex and expensive wavefront control necessary with a coronagraph.

The Best Way Forward

In summary, it is fair to say that of the various options for a WFIRST/Probe mission, the WFIRST telescope in combination with a starshade is the most potent in terms of its exoplanetary imaging, especially for potentially habitable planets. Once at the focal plane, any light will be analysed by a spectrograph that, depending on the amount of light it receives, will be able to characterise the atmospheres of the planets it views. The more light, the more sensitive the result, so with the 2.4 m WFIRST mirror as opposed to the 1.1 m telescope of EXO-S, discovery or “integration” times will be reduced by up to five times. Conversely and unsurprisingly, the further the target planet, the longer the time to integrate and characterise it. This climbs dramatically for targets further than ten parsecs or about 33 light years away, but there are still plenty of promising stars in and around this distance and certainly up to 50 light years away. Target choice will be important.
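The aperture and distance dependence described above can be sketched with a simple scaling. It assumes the planet's own photon noise dominates. Integration time to a fixed SNR then goes as 1 / (planet flux × collecting area), with planet flux falling as 1/d² and area growing as D². Backgrounds such as bright exozodiacal dust make the distance dependence steeper than this, so the sketch is optimistic for distant targets.

```python
def relative_integration_time(distance_pc: float, aperture_m: float,
                              ref_distance_pc: float = 10.0,
                              ref_aperture_m: float = 2.4) -> float:
    """Integration time to a fixed SNR relative to a reference case, assuming
    photon-noise-limited detection: t ~ 1 / (planet flux * area),
    with planet flux ~ 1/d^2 and area ~ D^2."""
    return (distance_pc / ref_distance_pc) ** 2 * (ref_aperture_m / aperture_m) ** 2

print(relative_integration_time(10.0, 1.1))  # 1.1 m EXO-S vs 2.4 m WFIRST, same target
print(relative_integration_time(20.0, 2.4))  # WFIRST, target at 20 pc vs 10 pc
```

The first line gives about 4.8, consistent with the "up to five times" saving quoted for the larger mirror. Doubling the target distance quadruples the time even in this optimistic regime.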

At present it is proposed that the mission would characterise up to 52 planets during its allotted time. The question is which 52 planets to image. Spectroscopy characterises planetary atmospheres, but there are many potential targets, some with known planets, others, including many nearer targets, with none. Which stars have planets most likely to reveal exciting "biosignatures", potential signs of life?

It is likely that astronomers will use a list of potentially favourable targets produced by respected exoplanet astronomer Margaret Turnbull in 2003. Dr. Turnbull is also part of the EXO-S team and first floated the idea of using a starshade for exoplanetary imaging with a concept paper on a 4 m telescope, New Worlds Observer, at the end of the last decade. This design proposed using a larger, 50 m starshade with an inner working angle of 65 mas, within Venus' orbit and therefore effective for planets in the habitable zones of stars smaller and cooler than the Sun.

To date, the plan is to view 13 known exoplanets and to search the HBZ of 19 more stars. The expectation is that 2-3 "Earths" or "Super-Earths" will be found from this sample. This is where the larger aperture of WFIRST comes into its own. The more light, the greater the likelihood that any finding or image is indeed a planet standing out from background light or "noise". This is the so-called signal-to-noise ratio (SNR), which is critical to all astronomical imaging. The higher the SNR the better, as it reduces the chance of false results. An SNR of ten is set for the WFIRST starshade mission's spectroscopic measurements characterising exoplanetary atmospheres. A related concept is spectral resolution, a spectrograph's ability to separate spectral lines due, for example, to a biosignature gas like O2 in an atmosphere. For WFIRST this is set at 70, which, while sounding reasonable, is tiny compared with the resolution used to search for exoplanets with the radial velocity method, which runs to a hundred thousand or more.
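Spectral resolution is usually quoted as R = λ/Δλ, the wavelength divided by the smallest wavelength interval the spectrograph can separate. The sketch evaluates Δλ at 760 nm, the oxygen A-band, which lies within the starshade's visible passband. R = 100,000 stands in for a typical radial velocity spectrograph.

```python
def resolution_element_nm(wavelength_nm: float, resolving_power: float) -> float:
    """Smallest resolvable wavelength interval: delta_lambda = lambda / R."""
    return wavelength_nm / resolving_power

# 760 nm is the oxygen A-band.
for R in (70, 100_000):
    print(f"R = {R}: {resolution_element_nm(760.0, R):.4f} nm")
```

At R = 70, each resolution element is about 11 nm wide, enough to register a broad absorption band like O2's but not individual lines. A radial velocity instrument resolves features more than a thousand times finer.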

Given the lack of in-space testing, the 34 m starshade of EXO-S is closest in size to the scaled model deployed on the ground at Princeton University, and is thus the lowest-risk size that is still effective. The overall idea was considered by NASA but rejected on grounds of cost during JWST development. Apart from this, only “concept” exoplanet telescopes like ATLAST, an 8 m-plus telescope, have been proposed, with vague and distant timelines.

So now it comes down to whether NASA is able to fund making WFIRST starshade-ready and willing to move WFIRST from geosynchronous orbit to L2. That decision could come as early as 2016, when NASA decides whether the mission can proceed and seek its budget. Then Congress will need to be persuaded that the $1.7 billion budget will stay at that level and not escalate. Assuming WFIRST is made starshade-ready, the battle to fund a starshade will hopefully have begun once 2016 clearance is given. Ironically, NASA bid processes don’t cover starshades, so there would need to be a rule change. There are, however, various funding pools whose timelines would support a WFIRST starshade. No one has ever built one, but we do know that the costs run into the hundreds of millions of dollars, especially if a launch is included, as it would be unless the next generation of large, cheap launchers like the Falcon Heavy can carry shade and telescope in one go. Otherwise the idea would be for the telescope to go up in 2024, with the starshade deployed the following year once the telescope is operational.

Meanwhile there are private initiatives. The BoldlyGo Institute proposes launching a telescope, ASTRO-1, with both a coronagraph and a starshade. This telescope will be off-axis: light from the primary is directed to a secondary mirror outside the telescope baffle before being redirected to a focal plane behind the telescope. This reduces the obstruction of the aperture found in an on-axis telescope, where the secondary mirror sits in front of the primary and directs light to a focal plane through a hole in the primary itself. That obstruction reduces effective mirror size and light gathering even before the optical train is reached, and avoiding it is a feature of a bespoke coronagraphic telescope. For further information consult the website at boldlygo.org.
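How much light an on-axis design gives up is simple geometry: a centred circular obstruction of diameter d in front of a primary of diameter D blocks a fraction (d/D)² of the collecting area. The 2.4 m primary and 0.8 m obstruction below are hypothetical values for illustration, not ASTRO-1 or WFIRST specifications. The sketch covers only lost area; an obstruction and its supports also add diffraction features, which is a separate problem for coronagraphy.

```python
def obstructed_area_fraction(obstruction_diameter: float, aperture_diameter: float) -> float:
    """Fraction of a circular aperture's area lost to a centred circular obstruction."""
    if not 0.0 <= obstruction_diameter <= aperture_diameter:
        raise ValueError("obstruction must fit inside the aperture")
    # Area scales with diameter squared, so the ratio of areas is (d / D)^2.
    return (obstruction_diameter / aperture_diameter) ** 2


# Hypothetical on-axis design: 2.4 m primary, 0.8 m central obstruction.
lost = obstructed_area_fraction(0.8, 2.4)
print(f"on-axis:  {lost:.1%} of collecting area blocked")

# An off-axis design keeps the secondary out of the incoming beam.
print(f"off-axis: {obstructed_area_fraction(0.0, 2.4):.1%} blocked")
```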

Exciting but uncertain times. Coronagraph or no coronagraph, starshade or no starshade? Or perhaps both? Properly equipped, WFIRST will become the first true space exoplanet telescope, one actually capable of seeing the exoplanet as a point source, and a potent one despite its Hubble-sized aperture. Additionally, like Gaia, WFIRST will hunt for exoplanets using astrometry. Coronagraph, starshade, microlensing and astrometry: four for the price of one! Moreover, this is possibly the only such telescope for some time, with no big U.S. telescopes even planned. Not so much ATLAST as last chance. Either way, it is important to keep this mission in the public eye, as its results will be exciting and could be revolutionary.

The next decade will see the U.S. resume manned spaceflight with SpaceX’s Dragon V2 and NASA’s own Orion spacecraft. At the same time, missions like Gaia, TESS, CHEOPS, PLATO and WFIRST will discover tens of thousands of exoplanets, which can then be characterised by the new ELTs and JWST. Our exoplanetary knowledge base will expand enormously, and we may even detect biosignatures on some of these worlds. Such ground-breaking discoveries will lead to dedicated “New Worlds Observer” style telescopes that, with the advent of new lightweight materials, autonomous software and cheap launch vehicles, will have larger apertures than ever before, allowing even more detailed atmospheric characterisation. In this way the pioneering work of CoRoT and Kepler will be fully realised.

November 26, 2014

Astrobiology and Sustainability

As the Thanksgiving holiday approaches here in the US, I’m looking at a new paper in the journal Anthropocene that calls the attention of those studying sustainability to the discipline of astrobiology. At work here is a long-term perspective on planetary life that takes into account what a robust technological society can do to affect it. Authors Woodruff Sullivan (University of Washington) and Adam Frank (University of Rochester) make the case that our era may not be the first time “…where the primary agent of causation is knowingly watching it all happen and pondering options for its own future.”

How so? The answer calls for a look at the Drake Equation, the well-known synthesis by Frank Drake of the factors that determine the number of intelligent civilizations in the galaxy. What exactly is the average lifetime of a technological civilization? 500 years? 50,000 years? Much depends upon the answer, for it helps us calculate the likelihood that other civilizations are out there, some of them perhaps making it through the challenges of adapting to technology and using it to spread into the cosmos. A high number would also imply that we too can make it through the tricky transition and hope for a future among the stars.
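The Drake Equation is a straight product, N = R* · fp · ne · fl · fi · fc · L, so the civilization lifetime L enters as a simple multiplier: every other factor held fixed, raising L from 500 to 50,000 years raises N a hundredfold. The sketch below shows that sensitivity. All input values are hypothetical placeholders chosen for illustration; neither the post nor the paper supplies them.

```python
def drake_n(r_star, f_p, n_e, f_l, f_i, f_c, lifetime_years):
    """Drake Equation: N = R* * f_p * n_e * f_l * f_i * f_c * L.

    r_star: rate of star formation in the galaxy (stars per year)
    f_p: fraction of stars with planets
    n_e: planets per planetary system with an environment suitable for life
    f_l, f_i, f_c: fractions of those that develop life, intelligence,
                   and detectable technology
    lifetime_years: L, average lifetime of such a technological civilization
    """
    return r_star * f_p * n_e * f_l * f_i * f_c * lifetime_years


# Hypothetical, illustrative inputs only.
inputs = dict(r_star=1.5, f_p=1.0, n_e=0.2, f_l=0.1, f_i=0.01, f_c=0.1)

for lifetime in (500, 50_000):
    n = drake_n(**inputs, lifetime_years=lifetime)
    print(f"L = {lifetime:>6} years -> N = {n:.3f}")
```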

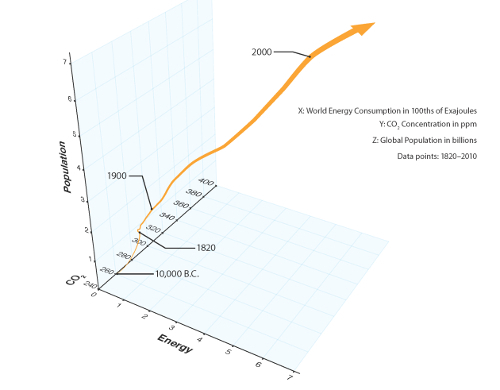

Sullivan and Frank believe that even if the chances of a technological society emerging are as low as one in 1000 trillion, there will still have been 1000 instances of such societies undergoing transitions like ours in “our local region of the cosmos.” The authors refer to extraterrestrial civilizations as Species with Energy-Intensive Technology (SWEIT). They discuss issues of sustainability that begin with planetary habitability and extend to mass extinctions and their relation to today’s Anthropocene epoch, as well as changes in atmospheric chemistry, comparing what we see today with previous eras of climate alteration such as the so-called Great Oxidation Event, the dramatic increase in oxygen levels (by a factor of at least 10⁴) that occurred some 2.4 billion years ago.
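The two figures quoted here imply a third that goes unstated: how many worlds must be in play for a one-in-10¹⁵ chance to yield 1000 instances. A back-of-envelope sketch, assuming (the post does not say this) that the probability is counted per world on which a technological society could have arisen:

```python
# Back-of-envelope reading of the figures quoted above.
# Assumption: the probability applies per candidate world.
p_emergence = 1e-15      # "one in 1000 trillion" = 1 / 10**15
past_instances = 1000    # SWEIT transitions said to have occurred

# Expected instances = p * worlds, so worlds = instances / p.
implied_worlds = past_instances / p_emergence
print(f"implied number of candidate worlds: {implied_worlds:.1e}")
```

On that assumption the argument rests on something like 10¹⁸ candidate worlds in the region being considered.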

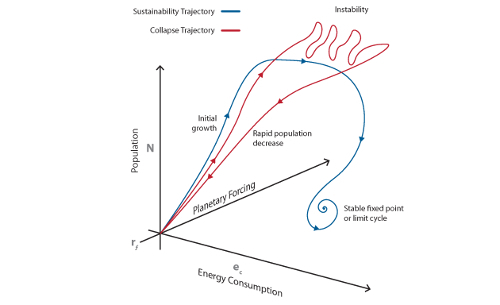

Out of this comes a suggested research program that models the evolution of a SWEIT together with that of the planet on which it arises, using dynamical systems theory as the framework. As with our own culture, these ‘trajectories’ (development paths) are tied to the interactions between the species and the planet on which it emerges. From the paper:

Each SWEIT’s history defines a trajectory in a multi-dimensional solution space with axes representing quantities such as energy consumption rates, population and planetary systems forcing from causes both “natural” and driven by the SWEIT itself. Using dynamical systems theory, these trajectories can be mathematically modeled in order to understand, on the astrobiology side, the histories and mean properties of the ensemble of SWEITs, as well as, on the sustainability science side, our own options today to achieve a viable and desirable future.

Image: Schematic of two classes of trajectories in SWEIT solution space. Red line shows a trajectory representing population collapse. Blue line shows a trajectory representing sustainability. Credit: Michael Osadciw/University of Rochester.
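To make “trajectory in solution space” concrete, here is a deliberately simple two-variable system. It is a hypothetical toy, not the model in Frank and Sullivan’s paper: a population N grows logistically but is held back by an environmental forcing E that the population itself produces at a per-capita rate c, while the planet relaxes that forcing at rate d. Integrated with forward Euler, each run traces a path through the (N, E) plane. All parameter values are illustrative assumptions.

```python
def simulate(c, r=1.0, k=1.0, m=1.0, d=0.1, n0=0.01, dt=0.01, t_end=300.0):
    """Forward-Euler trajectory of a hypothetical population/forcing system.

    dN/dt = r*N*(1 - N/k) - m*E*N   growth, damped by the forcing E
    dE/dt = c*N - d*E               forcing made per capita (c), relaxing at rate d
    Returns the list of (N, E) points: a path in a 2-D solution space.
    """
    n, e = n0, 0.0
    trajectory = [(n, e)]
    for _ in range(int(round(t_end / dt))):
        dn = r * n * (1.0 - n / k) - m * e * n
        de = c * n - d * e
        n, e = n + dt * dn, e + dt * de
        trajectory.append((n, e))
    return trajectory


def steady_state_n(c, r=1.0, k=1.0, m=1.0, d=0.1):
    """Non-zero equilibrium: dE/dt = 0 gives E = c*N/d; then dN/dt = 0 gives N*."""
    return r / (r / k + m * c / d)


for label, impact in (("low impact", 0.01), ("high impact", 0.5)):
    traj = simulate(impact)
    peak = max(n for n, _ in traj)
    final = traj[-1][0]
    print(f"{label}: peak N = {peak:.3f}, final N = {final:.3f}, "
          f"analytic N* = {steady_state_n(impact):.3f}")
```

With these settings the low-impact run rises smoothly and settles near N* ≈ 0.91, while the high-impact run overshoots to more than twice its final level before declining toward N* ≈ 0.17. The units are arbitrary; the point is only that the same equations trace qualitatively different paths depending on how strongly a species forces its planet, loosely echoing the two trajectory classes in the schematic.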

The authors point out that other methodologies could also be called into play, in particular network theory, which may help illuminate the many routes that can lead to system failures. Using these modeling techniques could allow us to explore whether the atmospheric changes our own civilization is seeing are an expected outcome for technological societies based on the likely energy sources being used in the early era of technological development. Rapid changes to Earth systems are, the authors note, not a new phenomenon, but as far as we know, this is the first time where the primary agent of causation is watching it happen.

Sustainability emerges as a subset of the larger frame of habitability. Finding the best pathways forward involves the perspectives of astrobiology and planetary evolution, both of which imply planetary survival but not necessarily the survival of any particular species. Although we know of no extraterrestrial life forms at this time, this does not stop us from proceeding, because any civilization using energy to run its technology also generates entropy, creating feedback effects on the habitability of its home planet that can be modeled.

Image: Plot of human population, total energy consumption and atmospheric CO2 concentration from 10,000 BCE to today as trajectory in SWEIT solution space. Note the coupled increase in all 3 quantities over the last century. Credit: Michael Osadciw/University of Rochester.

Modeling evolutionary paths may help us understand which of these are most likely to point to long-term survival. In this view, our current transition is a phase forced by the evolutionary route we have taken, demanding that we learn how a biosphere adapts once a species with an energy-intensive technology like ours emerges. The authors argue that this perspective “…allows the opportunities and crises occurring along the trajectory of human culture to be seen more broadly as, perhaps, critical junctures facing any species whose activity reaches significant level of feedback on its host planet (whether Earth or another planet).”

The paper is Frank and Sullivan, “Sustainability and the astrobiological perspective: Framing human futures in a planetary context,” Anthropocene Vol. 5 (March 2014), pp. 32-41 (full text). This news release from the University of Washington is helpful, as is this release from the University of Rochester.

November 25, 2014

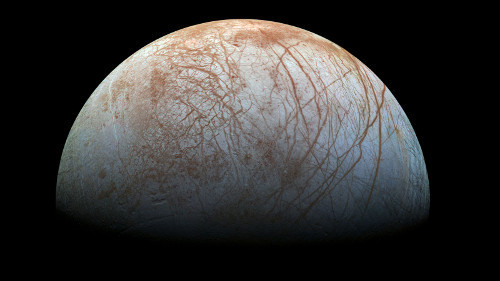

Our Best View of Europa

Apropos of yesterday’s post questioning what missions would follow up the current wave of planetary exploration, the Jet Propulsion Laboratory has released a new view of Jupiter’s intriguing moon Europa. The image, shown below, looks familiar because a version of it was published in 2001, though at lower resolution and with considerable color enhancement. The new mosaic gives us the largest portion of the moon’s surface at the highest resolution, and without the color enhancement, so that it approximates what the human eye would see.

The mosaic of images that go into this view was put together in the late 1990s using imagery from the Galileo spacecraft, which again makes me thankful for Galileo, a mission that succeeded despite all its high-gain antenna problems, and anxious for renewed data from this moon. The original data for the mosaic were acquired by the Galileo Solid-State Imaging experiment on two different orbits through the system of Jovian moons, the first in 1995, the second in 1998.

NASA is also offering a new video explaining why the interesting fracture features merit investigation, given the evidence for a salty subsurface ocean and the potential for at least simple forms of life within. It’s a vivid reminder of why Europa is a priority target.

Image (click to enlarge): The puzzling, fascinating surface of Jupiter’s icy moon Europa looms large in this newly-reprocessed color view, made from images taken by NASA’s Galileo spacecraft in the late 1990s. This is the color view of Europa from Galileo that shows the largest portion of the moon’s surface at the highest resolution. Credit: NASA/Jet Propulsion Laboratory.

Areas that appear blue or white are thought to be relatively pure water ice, with the polar regions (left and right in the image — north is to the right) bluer than the equatorial latitudes, which are more white. This JPL news release notes that the variation is thought to be due to differences in ice grain size in the two areas. The long cracks and ridges on the surface are interrupted by disrupted terrain that indicates broken crust that has re-frozen. Just what do the reddish-brown fractures and markings have to tell us about the chemistry of the Europan ocean, and the possibility of materials cycling between that ocean and the ice shell?

November 24, 2014

Rosetta: Building Momentum for Deep Space?

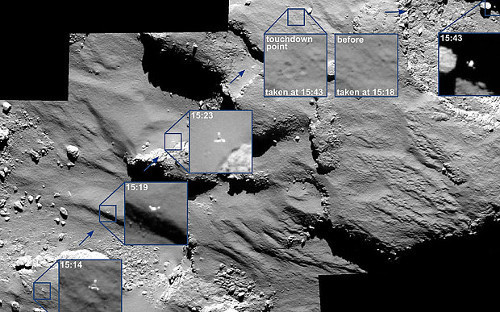

Even though its arrival on the surface of comet 67P/Churyumov-Gerasimenko did not go as planned, the accomplishment of the Rosetta probe is immense. We have a probe on the surface that was able to collect 57 hours’ worth of data before going into hibernation, and a mother ship that will stay with the comet as it moves ever closer to the Sun (the comet’s closest approach will be on August 13 of next year).

What a shame the lander’s ‘docking’ system, involving reverse thrusters and harpoons to fasten it to the surface, malfunctioned, leaving it to bounce twice before it landed with solar panels largely shaded. But we do know that the Philae lander was able to detect organic molecules on the cometary surface, with analysis of the spectra and identification of the molecules said to be continuing. The comet appears to be composed of water ice covered in a thin layer of dust. There is some possibility the lander will revive as the comet moves closer to the Sun, according to Stephan Ulamec (DLR German Aerospace Center), the mission’s Philae Lander Manager, and we can look forward to reams of data from the still functioning Rosetta.

What an audacious and inspiring mission this first soft landing on a comet has been. Congratulations to all involved at the European Space Agency as we look forward to continuing data return as late as December 2015, four months after the comet’s closest approach to the Sun.

Image: The travels of the Philae lander as it rebounds from its touchdown on Comet 67P/Churyumov-Gerasimenko. Credit: ESA/Rosetta/Philae/ROLIS/DLR.

A Wave of Discoveries Pending

Rosetta used gravitational assists around both Earth and Mars to make its way to the target, hibernating for two and a half years to conserve power during the long journey. Now we wait for the wake-up call to another distant probe, New Horizons, as it comes out of hibernation for the last time on December 6. Since its January 2006 launch, the Pluto-bound spacecraft has spent 1,873 days in hibernation, fully two-thirds of its flight time, in eighteen hibernation periods ranging from 36 days to 202 days, a way to reduce wear on the spacecraft’s electronics and to free up an overloaded Deep Space Network for other missions.

When New Horizons transmits a confirmation that it is again in active mode, the signal will take four hours and 25 minutes to reach controllers on Earth, at a time when the spacecraft will be more than 2.9 billion miles from the Earth, and less than twice the Earth-Sun distance from Pluto/Charon. According to the latest report from the New Horizons team, direct observations of the target begin on January 15, with closest approach on July 14.
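The quoted signal delay and distance can be cross-checked with t = d/c. The only inputs in this sketch are the post’s own figures plus the defined values of the speed of light and the international mile.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact, by definition of the metre
KM_PER_MILE = 1.609344             # exact, international mile


def one_way_light_time_s(distance_km: float) -> float:
    """One-way signal travel time in seconds: t = d / c."""
    return distance_km / SPEED_OF_LIGHT_KM_S


def distance_miles_for_light_time(seconds: float) -> float:
    """Inverse: distance in miles that light covers in the given time."""
    return seconds * SPEED_OF_LIGHT_KM_S / KM_PER_MILE


def hms(seconds: float):
    """Split a duration in seconds into whole (hours, minutes, seconds)."""
    s = int(round(seconds))
    h, rem = divmod(s, 3600)
    m, s = divmod(rem, 60)
    return h, m, s


t = one_way_light_time_s(2.9e9 * KM_PER_MILE)
print("2.9 billion miles -> %d h %d min %d s" % hms(t))

d = distance_miles_for_light_time(4 * 3600 + 25 * 60)
print(f"4 h 25 min -> {d / 1e9:.2f} billion miles")
```

At exactly 2.9 billion miles the one-way time is about 4 hours 19 minutes, while the quoted 4 hours 25 minutes corresponds to roughly 2.96 billion miles, consistent with the spacecraft being “more than 2.9 billion miles” away.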