Paul Gilster's Blog, page 164

December 1, 2015

Habitable Planets in the Same System

Learning that our own Solar System has a configuration that is only one of many possible in the universe leads to a certain intellectual exhilaration. We can, for example, begin to ponder the problems of space travel and even interstellar missions within a new context. Are there planetary configurations that would produce a more serious incentive for interplanetary travel than others? What would happen if there were not one but two habitable planets in the same system, or perhaps orbiting different stars of a close binary pair like Centauri A and B?

My guess is that having a clearly habitable world — one whose continents could be made out through ground-based telescopes, and whose vegetation patterns would be obvious — as a near neighbor would produce a culture anxious to master spaceflight. Imagine the funding for manned interplanetary missions if we had a second green and blue world that was as reachable as Mars, one that obviously possessed life and perhaps even a civilization.

Solar systems with multiple habitable planets are the subject of an interesting new paper from Jason Steffen (UNLV) and Gongjie Li (Harvard-Smithsonian Center for Astrophysics), who point to the fact that the Kepler mission has found planet pairs on similar orbits, with orbital distances that in some cases differ by little more than 10 percent. Mars is at best 200 times as far away as the Moon, but as Steffen notes, multi-habitable systems will produce much closer destinations:

“It’s pretty intriguing to imagine a system where you have two Earth-like planets orbiting right next to each other,” says Steffen. “If some of these systems we’ve seen with Kepler were scaled up to the size of the Earth’s orbit, then the two planets would only be one-tenth of one astronomical unit apart at their closest approach. That’s only 40 times the distance to the Moon.”
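Steffen's figure is easy to check. The sketch below converts 0.1 AU into lunar distances; the mean Earth-Moon distance used here is a standard reference value I am assuming, not a number taken from the paper.

```python
# Convert the closest-approach separation quoted by Steffen into lunar distances.
AU_KM = 149_597_870.7         # one astronomical unit in kilometers
MOON_DISTANCE_KM = 384_400.0  # mean Earth-Moon distance (assumed reference value)


def au_to_lunar_distances(separation_au: float) -> float:
    """Express a separation given in AU as a multiple of the Earth-Moon distance."""
    return separation_au * AU_KM / MOON_DISTANCE_KM


closest_approach = au_to_lunar_distances(0.1)
print(f"0.1 AU is about {closest_approach:.0f} lunar distances")
```

The result lands just under 40, in line with the quote.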

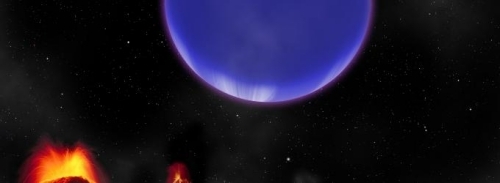

We can’t know at this point whether any of the Kepler candidates have life or not, but consider this: Kepler-36, about 1530 light years away in the constellation Cygnus, is known to be orbited by a ‘super-Earth’ and a ‘mini-Neptune’ in orbits that differ by 0.013 AU. The outer planet orbits close enough to the inner that we can pick up obvious transit timing variations (TTV) for both. Locked in a 7:6 orbital resonance, these worlds are representative of this kind of planetary configuration. Imagine the changing celestial display from the surface of the super-Earth as the larger planet sweeps out its orbit. Now consider the same view if both planets were clearly habitable!

Image: A gas giant planet spanning three times more sky than the Moon as seen from the Earth looms over the molten landscape of Kepler-36b. Credit: Harvard-Smithsonian Center for Astrophysics.
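The 7:6 resonance and the "little more than 10 percent" spacing mentioned earlier are two views of the same fact. A minimal sketch, assuming both planets are much less massive than their star so that Kepler's third law applies in its simple form (the function name is my own):

```python
# Kepler's third law for two planets around the same star:
#   a_outer / a_inner = (P_outer / P_inner) ** (2/3)

def semimajor_axis_ratio(period_ratio: float) -> float:
    """Ratio of orbital distances implied by a ratio of orbital periods."""
    return period_ratio ** (2.0 / 3.0)


ratio = semimajor_axis_ratio(7.0 / 6.0)   # 7:6 resonance, as at Kepler-36
fractional_gap = ratio - 1.0              # spacing as a fraction of the inner orbit
gap_if_inner_at_1au = fractional_gap * 1.0

print(f"distance ratio: {ratio:.3f}")
print(f"orbits differ by {fractional_gap:.1%}")
print(f"scaled to a 1 AU inner orbit: {gap_if_inner_at_1au:.3f} AU apart")
```

Scaled up to Earth's orbit, the gap comes out near one-tenth of an AU, which is the figure Steffen uses.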

Steffen and Li focus in on two key issues. The first: what sort of variations in obliquity might be caused by such close planetary orbits? Obliquity measures the angle between the planet’s spin axis and its orbital axis; Earth’s 23.5° tilt relative to its orbit explains seasonal change and has obvious effects on climate. So we’d like to know whether close orbits produce obliquity swings large enough to wreak climatic havoc. The answer in most cases is no. From the paper:

We found that obliquity variations are generally not affected by the close proximity of the planets in a multihabitable system. Also, obliquity variations of close pairs embedded in the solar system or of potentially habitable planets in a system with a close pair were not sufficient to significantly reduce the probability of having a stable climate. Only in cases where the inclination modal frequencies coincide with the planetary precession frequency did large obliquity variation arise.

With planets this close, however, an even larger question is whether life on one planet can influence the other. We have abundant evidence of rocks from Mars that have fallen to Earth, leading to the possibility that other planets could have delivered life-bearing materials here in the process called lithopanspermia. Much closer planets should be far more susceptible to this process. Clearly, the possibility exists for life branching out from the same roots, taking different evolutionary paths just as occurs on remote islands here on Earth.

The simulations used by Steffen and Li demonstrate that two Earth-like planets in orbits like those found around Kepler-36 would have a much greater opportunity for exchanging materials than planets do in our Solar System. The relative velocities of the planets — and thus the velocities of the ejected particles — would be much less than in the case of a transfer of materials from Mars to the Earth. Biological materials transferred by collision ejecta have been considered on individual planets (with material falling back onto other parts of the same world), between binary planets or habitable moons, and even between different star systems.

Steffen and Li’s simulations show that the nearer the planets are to each other, the higher the success rate of ejecta transfer. Moving biological materials between two worlds becomes almost as feasible as moving them from one place on a single planet to another region of that planet, and the energy of the impact needed to make the transfer is much less than in our Solar System, leading to higher survivability. We can add in the fact that the time needed for biological materials to survive an interplanetary journey is that much shorter.

And there is this final factor:

…we found that the smaller the velocity of the ejected material the more uniformly they can be sourced across the originating planet. With high velocity ejecta, the range of initial longitudes is constrained relative to the direction of motion.

The result: Debris from a single impact is far more likely to strike the destination planet at multiple locations in rapid succession than when planets are spaced farther apart. That too increases the chance of life catching hold. Processes like these, which could also occur around planets with large habitable moons, allow life to spread readily within the same system. The paper adds:

Not only will panspermia be more common in a multihabitable system than in the solar system, but the close proximity of the planets to each other within the habitable zone of the host star allows for a real possibility of the planets having regions of similar climate—perhaps allowing the microbiological family tree to extend across the system. There are many things to consider in multihabitable systems, especially in the cases where intelligent life emerges.

In this UNLV news release, Steffen follows up that last thought:

“You can imagine that if civilizations did arise on both planets, they could communicate with each other for hundreds of years before they ever met face-to-face. It’s certainly food for thought.”

The scenario is striking, and I’m hoping readers can come up with some science fiction titles in which multiple habitable planets in the same system are the background for the tale.

The paper is Steffen and Li, “Dynamical considerations for life in multihabitable planetary systems,” accepted at The Astrophysical Journal (preprint).

November 30, 2015

No Catastrophic Collision at KIC 8462852

Last week I mentioned that I wanted to get into Massimo Marengo’s new paper on KIC 8462852, the interesting star that, when studied by the Kepler instrument, revealed an intriguing light curve. I’ve written this object up numerous times now, so if you’re coming into the discussion for the first time, plug KIC 8462852 into the archive search engine to get up to speed. Marengo (Iowa State) is himself well represented in the archives. In fact, I began writing about him back in 2005, when he was working on planetary companions to Epsilon Eridani.

In the new paper, Marengo moves the ball forward in our quest to understand why the star I’ll abbreviate as KIC 8462 poses such problems. The F3-class star doesn’t give us the infrared signature we’d expect from a debris disk, yet the light curves we see suggest objects of various sizes (and shapes) transiting across its surface. What we lacked from Tabetha Boyajian’s earlier paper (and it was Boyajian, working with the Planet Hunters group, who brought KIC 8462 to our attention) was infrared data taken after the WISE mission finished its work.

That was a significant omission, because the WISE data on the star were taken in 2010, while the first events Kepler flagged at KIC 8462 occurred in March of 2011, with a long series of events beginning in February of 2013 and lasting sixty days. That gave us a small window in which something could have happened — the idea of a planetary catastrophe comes to mind, perhaps even a collision between two planets, or a planet and large asteroid. What Marengo brings to the table are observations from the Spitzer Space Telescope dating from early 2015.

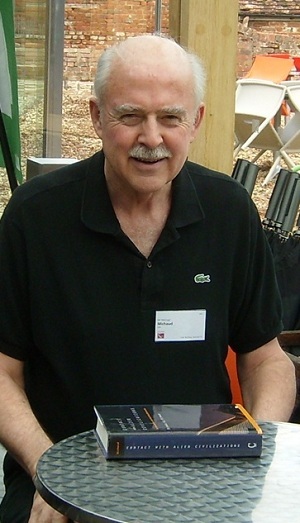

Image: Astrophysicist Massimo Marengo. Credit: Iowa State University.

We learn that the Spitzer photometry from its Infrared Array Camera (IRAC) finds no strong infrared excess — no significant amount of circumstellar dust can be detected two years after the 2013 dimming event at KIC 8462. Here is what Marengo concludes from this:

The absence of strong infrared excess at the time of the IRAC observations (after the dimming events) implied by our 4.5 µm 3σ limit suggests that the phenomenon observed by Kepler produced a very small amount of dust. Alternatively, if significant quantity of dust is present, it must be located at large distance from the star.

This seems to preclude catastrophic scenarios, while leaving a cometary solution intact. The paper continues:

As noted by B15 [this is the Boyajian paper], this makes the scenarios very unlikely in which the dimming events are caused by a catastrophic collision in KIC 8462852 asteroid belt, a giant impact disrupting a planet in the system, or a population of dust-enshrouded planetesimals. All these scenarios would produce very large amount of dust dispersed along the orbits of the debris, resulting in more mid-IR emission than what can be inferred from the optical depth of the dust seen passing along our line of sight to the star. Our limit (two times lower than the limit based on WISE data) further reduces the odds for these scenarios.

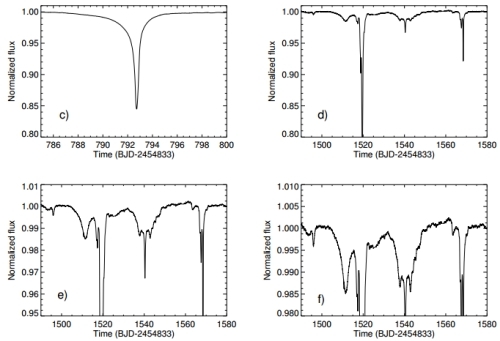

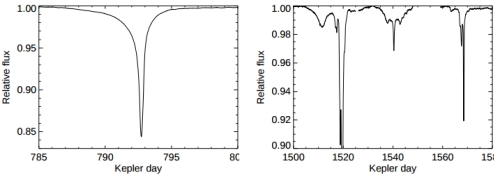

Image: Montage of flux time series for KIC 8462852 showing different portions of the 4-year Kepler observations with different vertical scalings. Panel ‘(c)’ is a blowup of the dip near day 793, (D800). The remaining three panels, ‘(d)’, ‘(e)’, and ‘(f)’, explore the dips which occur during the 90-day interval from day 1490 to day 1580 (D1500). Credit: Boyajian et al., 2015.

Tabetha Boyajian’s paper analyzed the natural phenomena that could account for KIC 8462’s light curve and concluded that a family of exocomets was the most promising explanation. Here the idea is that we have a family of comets in a highly elliptical orbit that has moved between us and the star, an idea that would be consistent with the lack of a strong infrared signature. Marengo has reached the same conclusion now that we are able to discount the idea of a large collision within the system. Both Boyajian and Marengo favor the comet hypothesis because it does not require a circular orbit and allows associated dust to quickly move away from the star.

In Marengo’s analysis, this fits the data, as the two-year gap between the Kepler light curves and the observations from Spitzer provides enough time for cometary debris to move several AU from the zone of tidal destruction near the star. The paper adds:

At such a distance, the thermal emission from the dust would be peaked at longer wavelengths and undetectable by IRAC. A robust detection at longer wavelengths (where the fractional brightness of the debris with respect to the star would be more favorable) will allow the determination of the distance of the cometary fragments and constrain the geometry of this scenario.
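To see why cooler, more distant dust would slip past IRAC, Wien's displacement law gives a rough guide. The sketch below treats the dust as an ideal blackbody, which real grains are not, and the sample temperatures are illustrative assumptions rather than values from the paper.

```python
# Wien's displacement law: a blackbody at temperature T peaks at wavelength b / T.
WIEN_B_UM_K = 2897.77  # Wien displacement constant in micron-kelvin


def peak_wavelength_um(temperature_k: float) -> float:
    """Wavelength (microns) at which blackbody emission peaks."""
    return WIEN_B_UM_K / temperature_k


def temperature_for_peak_k(wavelength_um: float) -> float:
    """Blackbody temperature (kelvin) whose emission peaks at the given wavelength."""
    return WIEN_B_UM_K / wavelength_um


# Temperature of dust whose emission would peak in the 4.5 micron IRAC band.
irac_matched_temp = temperature_for_peak_k(4.5)
print(f"peak at 4.5 um corresponds to about {irac_matched_temp:.0f} K")

# Hypothetical dust temperatures, chosen only to show the trend.
for temp_k in (600.0, 300.0, 100.0):
    print(f"{temp_k:5.0f} K dust peaks near {peak_wavelength_um(temp_k):5.1f} um")
```

The colder the dust, the further its emission shifts toward the longer wavelengths where the paper suggests a robust detection should be sought.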

So we have a way to proceed here. Marengo notes that the measurements his paper presents cannot reveal the temperature or the luminosity of the dust that would be associated with such a family of comets, but long-term infrared monitoring would allow us to constrain both. The other day I also mentioned the small red dwarf (about 850 AU out) that could be the cause of instabilities in any Oort Cloud-like collection of comets around KIC 8462. Boyajian’s paper makes the case for measuring the motion or possible orbit (if bound) of this star as a way to tighten predictions on the timescale and repeatability of any associated comet showers.

Marengo dismisses SETI study of KIC 8462, with specific reference to Jason Wright’s recent paper on the matter, as “wild speculations,” an unfortunate phrase because Wright’s shrewd and analytical discussion of these matters has been anything but ‘wild.’

The Marengo paper is Marengo, Hulsebus and Willis, “KIC 8462852 – The Infrared Flux,” Astrophysical Journal Letters, Vol. 814, No. 1 (abstract / preprint). The Boyajian paper is Boyajian et al., “Planet Hunters X. KIC 8462852 – Where’s the flux?” submitted to Monthly Notices of the Royal Astronomical Society (preprint). The Jason Wright paper that examines KIC 8462 in the context of SETI signatures is Wright et al., “The Ĝ Search for Extraterrestrial Civilizations with Large Energy Supplies. IV. The Signatures and Information Content of Transiting Megastructures,” submitted to The Astrophysical Journal (preprint).

November 27, 2015

Will We Stop at Mars?

In the heady days of Apollo, Mars by 2000 looked entirely feasible. Now we’re talking about the 2030s for manned exploration, and even that target seems to keep receding. In the review that follows, Michael Michaud looks at Louis Friedman’s new book on human spaceflight, which advocates Mars landings but cedes more distant targets to robotics. So how do we reconcile ambitions for human expansion beyond Mars with political and economic constraints? A career diplomat whose service included postings as Counselor for Science, Technology and Environment at U.S. embassies in Paris and Tokyo, and Director of the State Department’s Office of Advanced Technology, Michael is also the author of Contact with Alien Civilizations (Copernicus, 2007). Here he places the debate over manned missions vs. robotics in context, and suggests a remedy for pessimism about an expansive future for Humankind.

by Michael A.G. Michaud

Many people in the space and astronomy communities will know of Louis Friedman, a tireless campaigner for planetary exploration and solar sailing. He was one of the co-founders of the Planetary Society in 1980, with Carl Sagan and Bruce Murray.

In his new book, entitled Human Spaceflight: From Mars to the Stars, Friedman states his argument up front: Humans will become a multi-planet species by going to Mars, but will never travel beyond that planet. Future humans will explore the rest of the universe vicariously through machines and virtual reality.

Friedman acknowledges that public interest in space exploration is still dominated by “human interest.” No one, he writes, is going to discontinue human spaceflight. Yet there is a conundrum. While giving up on manned missions to Mars is politically unacceptable, getting such a program approved and funded is not an achievable political step at this time. If another decade goes by without humans going farther in space, Friedman writes, public interest will likely decline and robotic and virtual exploration technologies will pass us by.

Friedman claims that going beyond Mars with humans is impossible not just physically for the foreseeable future but culturally forever. The long-range future of humankind, he declares, is to extend its presence in the universe virtually with robotic emissaries and artificial intelligence. This argument puts a permanent cap on human expansion, as if travel beyond Mars never will be possible.

Friedman sees having another world as a prudent step to prevent humankind being wiped out by a catastrophe. He argues that the danger of not sending humans to Mars is that we will become complacent. If that complacency keeps humankind from becoming a multi-planet species, we are doomed.

Friedman dismisses big ideas about exploiting planetary resources throughout the solar system and living everywhere to build civilizations and colonies on other worlds. He can’t see why or how we would do this, nor can he see waiting to do so. This illustrates an old split in the space interest community between those advocating space exploration and those supporting space utilization and eventual human expansion.

In his chapter entitled Stepping Stones to Mars, Friedman lists potential human spaceflight achievements with dates. An appendix presents a plan for a manned Mars mission in the 2040s. That first landing is to be followed later by missions establishing an infrastructure for human habitation, an effort that will take many decades.

Interstellar flight

This book’s subtitle is From Mars to the Stars. Yet Friedman dismisses interstellar travel by human beings as a subject of science fiction. People are too impatient, he writes, to wait for the necessary life-support developments. This contrasts with Carl Sagan’s 1966 comment that efficient interstellar spaceflight to the farthest reaches of our galaxy is a feasible objective for humanity.

Friedman argues that we have only one technology that might someday take our machines to the stars – light sailing. It may be another century before we have large enough laser power sources to drive small unmanned spacecraft over interstellar distances. The barrier of bigness will be overcome by the enablement of smallness.

Friedman suggests three interstellar precursor missions: the first launched in 2018 to the Kuiper Belt and onward to the heliopause; the second launched in 2025 to the solar gravity lens focus and on to 1,000 astronomical units; the third launched in 2040 to the Oort Cloud.

Virtual Reality

Friedman oversells virtual reality just as some others have oversold manned spaceflight. He acknowledges that we have yet to reach full cultural acceptance and satisfaction with the virtual world. Yet he seems to assume that such acceptance by the general population is inevitable.

Calling virtual reality human exploration may confuse many readers. Will we be content to watch all future exploration through robotic eyes?

There may be an unstated reason for preferring virtual reality over human presence. If future space exploration were entirely robotic, scientists would be in charge.

Cautions about Mars

Mars is far from ideal as a future home for humankind. The thin atmosphere is mostly carbon dioxide. Temperatures are low. The surface is more exposed to radiation and meteorites than Earth. Yet Mars remains the best candidate for a second planetary home within our own solar system.

Like other schedules proposed by some space advocates, Friedman’s plan for missions to Mars may be too optimistic. Yet such optimism keeps goals alive and encourages others to get involved.

What seems wildly optimistic now may be possible over the longer term. In the 1950s, some scientists thought that sending humans to the Moon was impossible.

The failure of grand visions

Friedman is correct in stating the biggest problem of space policy: the merging of grand visions with political constraints. In 1988, President Reagan’s statement on space policy included the idea of expanding human activity beyond Earth and into the solar system, an endorsement long sought by some elements of the space interest community. President George H.W. Bush fleshed out this idea in 1989 with his Space Exploration Initiative, urging that the U.S. develop a permanent presence on the Moon and the landing of a human crew on Mars by 2019. These visions failed to win the financing that would make them feasible.

Frustration and Patience

It is understandable that long-time campaigners for further exploration and use of space get frustrated, in some cases foreseeing the end of such endeavors. We all want to see major hopeful events occur in our own lifetimes. Yet we share some responsibility to look beyond.

Writing off human expansion beyond Mars for all the humans who follow us is, despite Friedman’s claim, pessimistic. The remedy is a younger generation of advocates.

A Little History

Friedman states that the settlement of Mars is the rationale for human spaceflight. The leaders of the Planetary Society did not initially support that goal. In the organization’s early years, its chief spokespersons criticized NASA’s emphasis on human missions (particularly the Space Station), which they saw as robbing funds that should have gone into further robotic exploration.

Sagan and others later realized that the planetary exploration budget rose and fell with the rise and fall of manned spaceflight programs. When NASA funding was rising, space science prospered; when NASA funding declined, space science funding declined with it. After the cancellation of further Apollo missions, planetary science was hit hardest by budget cuts. This revived a debate as old as the space program, between advocates of manned spaceflight and those who believe that priority should be given to exploration by unmanned spacecraft.

Friedman wrote in a 1984 article in Aerospace America about extending human civilization to space, suggesting a lunar base, a manned expedition to Mars, or a prospecting journey to some asteroids undertaken by an international team.

By the mid-1980s, the Planetary Society was advocating a joint U.S.-Soviet manned mission to Mars. Senator Spark Matsunaga of Hawaii introduced legislation to support this idea and published a book in 1986 entitled The Mars Project: Journeys beyond the Cold War. Soviet leader Mikhail Gorbachev made overtures to the U.S. in 1987 and 1988 for a cooperative program eventually leading to a Mars landing.

Bruce Murray, reacting favorably to the 1989 Space Exploration Initiative, published an article in 1990 entitled Destination Mars—A Manifesto. Observing that the space frontier for the U.S. and the USSR had stagnated a few hundred miles up, Murray commented that neither the United States nor the Soviet Union is likely, by itself, to sustain the decades of effort necessary to reach Mars. Murray urged a joint U.S.-Soviet manned spaceflight program leading eventually to Mars.

This reviewer argued at the 1987 Case for Mars conference that relying on the Soviet Union during the Cold War made such a mission subject to political volatility. This turned out to be true. As Friedman reports, a brief flurry of interest by President Reagan and Gorbachev in a cooperative human mission to Mars disappeared quickly in the face of large global events such as the dissolution of the Soviet Union.

More recently, when the U.S. sought to punish Russia for invading Ukraine, Russian officials made public statements threatening the continuation of Russian transport of Americans to the International Space Station, even though the U.S. was paying for those flights.

References

Louis Friedman, Human Spaceflight: From Mars to the Stars, University of Arizona Press, 2015.

Louis D. Friedman, “New Era of Global Security: Reach for the Stars,” Aerospace America, August 1984, 4.

Michael A.G. Michaud, “Choosing partners for a manned mission to Mars,” Space Policy, February 1988, 12-18.

Chapter entitled “Scientists, Citizens, and Space” in Michael A.G. Michaud, Reaching for the High Frontier: The American Pro-Space Movement, 1972-1984, Praeger, 1986, 187-213.

Bruce Murray, “Destination Mars: A Manifesto,” Nature 345 (17 May 1990), 199-200.

Iosif Shklovskii and Carl Sagan, Intelligent Life in the Universe, Dell, 1966, 449.

November 25, 2015

A Cometary Solution for KIC 8462852?

KIC 8462852 is back in the news. And despite a new paper dealing with the unusual star, I suspect it will be in the news for some time to come, for we’re a long way from finding out what is causing the unusual light curves the Planet Hunters group found in Kepler data. KIC 8462, you’ll recall, clearly showed something moving between us and the star, with options explored by Tabetha Boyajian, a Yale University postdoc, in a paper we examined here in October (see KIC 8462852: Cometary Origin of an Unusual Light Curve? and a series of follow-up articles).

To recap, we’re seeing a light curve around this F3-class star that doesn’t look anything like a planetary transit, but is much more suggestive of debris. Finding a debris disk around a star is not in itself unusual, since we’ve found many such around young stars, but KIC 8462 doesn’t appear to be a young star when looked at kinematically. In other words, it’s not moving the way we would expect from a star that has recently formed. Moreover, the star shows us none of the emissions at mid-infrared wavelengths we would expect from a young, dusty disk.

Jason Wright and the team at the Glimpsing Heat from Alien Technologies project at Penn State have taken a hard look at KIC 8462 and discussed it briefly in a recent paper (citations for both the Boyajian and Wright papers are at the end of this entry). It seems entirely reasonable to do what Wright did in referencing the fact that the light curve we see around the star is what we would expect to see if an advanced civilization were building something. That ‘something’ might be a project along the lines of a ‘Dyson swarm,’ in which huge collectors gather solar energy, or it could be a kind of structure beyond our current thinking.

We all know that the media reaction was swift, and we saw some outlets acting as if Wright had declared KIC 8462 an alien outpost. He had done no such thing, nor has he or the Penn State team ever suggested anything more than continuing investigation of this strange star. What seems to bother others, who have scoffed at the idea of extraterrestrial engineering, is that Wright and company have not explicitly ruled it out as a matter of course. The assumption there is that no other civilizations exist, and therefore we could not possibly be seeing one.

I come down on the side of keeping our options open and studying the data in front of us. We have a lot of work ahead to figure out what is causing a light curve so unusual that at least one of the objects briefly occulting this star caused a 22 percent dip in its flux. That implies a huge object, evidently transiting in company with many smaller ones. There seems to be no evidence that the objects are spherically symmetric. What’s going on around KIC 8462?
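A rough sense of scale for that 22 percent figure: if the occulter were a single opaque disk crossing a uniformly bright star, the fractional dip would equal the square of the ratio of the two radii. That is a deliberately crude assumption (the objects here do not look spherically symmetric, and limb darkening is ignored), but it shows why the dip implies something huge.

```python
import math


def occulter_radius_fraction(depth: float) -> float:
    """Radius of an opaque disk, in units of the stellar radius, that blocks
    the given fraction of starlight. Assumes a uniformly bright stellar disk."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be a fraction between 0 and 1")
    return math.sqrt(depth)


kic_fraction = occulter_radius_fraction(0.22)     # deepest dip at KIC 8462
reference_fraction = occulter_radius_fraction(0.01)  # a 1 percent dip, for comparison

print(f"22% dip -> disk radius of {kic_fraction:.2f} stellar radii")
print(f" 1% dip -> disk radius of {reference_fraction:.2f} stellar radii")
```

A 1 percent dip is roughly what a Jupiter-sized planet produces against a Sun-like star, so under this simplification the deepest event calls for an obscuring area more than twenty times larger.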

A new paper from Massimo Marengo (Iowa State) and colleagues looks at what Tabetha Boyajian identified as the most likely natural cause of the KIC 8462 light curves. All I have at this point is the JPL news release and a release from Iowa State (the paper has not yet appeared online) describing evidence for a swarm of comets as the culprit. The study, which has been accepted at Astrophysical Journal Letters, relies on Spitzer data dating from 2015, five years later than the WISE data that found no signs of an infrared excess.

Image: This illustration shows a star behind a shattered comet. Is this the explanation for the unusual light curves found at KIC 8462852? Image credit: NASA/JPL-Caltech.

If there had been a collision between planets or asteroids in this system, it was possible that the WISE (Wide-field Infrared Survey Explorer) data, taken in 2010, reflected conditions just before the collision occurred. Now, however, we can rule that out, because Spitzer, like WISE, finds no excess of infrared light from warm dust around KIC 8462. So the idea of planet or asteroid collisions seems even less likely. Marengo, according to the JPL document, falls back on the idea of a family of comets on an eccentric orbit. He’s also aware of just how odd KIC 8462 is:

“This is a very strange star,” [Marengo] said. “It reminds me of when we first discovered pulsars. They were emitting odd signals nobody had ever seen before, and the first one discovered was named LGM-1 after ‘Little Green Men’… We may not know yet what’s going on around this star, but that’s what makes it so interesting.”

It would take a very large comet indeed to account for the drop in flux we’ve already seen, but a swarm of comets and fragments can’t be ruled out because we just don’t have enough data to make the call. I assume Marengo also gets into the fact that a nearby M-dwarf (less than 900 AU from KIC 8462) is a possible influence in disrupting the system. The comet explanation would be striking if confirmed because we have no other instances of transiting events like these, and we would have found these comets by just happening to see them at the right time in their presumably long and eccentric orbit around the star.

Image: Left: a deep, isolated, asymmetric event in the Kepler data for KIC 8462. The deepest portion of the event is a couple of days long, but the long “tails” extend for over 10 days. Right: a complex series of events. The deepest event extends below 0.8, off the bottom of the figure. After Figure 1 of Boyajian et al. (2015). Credit: Wright et al.

So, despite PR headlines like Strange Star Likely Swarmed by Comets, I think we have to take a more cautious view. We’re dealing with a curious star whose changes in flux we don’t yet understand, and we have candidate theories to explain them. We’re no more ready to declare comets the cause of KIC 8462’s anomalies than we are to confirm alien megastructures. At this point we should leave both natural and artificial causes in the mix and recognize how long it’s going to take to work out a viable solution through careful, unbiased analysis.

The Marengo paper is Marengo, Hulsebus and Willis, “KIC 8462852: The Infrared Flux,” Astrophysical Journal Letters, Vol. 814, No. 1 (abstract). I write about it this morning only because it is getting so much media attention; more later when I can go through the actual paper. The Boyajian paper is Boyajian et al., “Planet Hunters X. KIC 8462852 – Where’s the flux?” submitted to Monthly Notices of the Royal Astronomical Society (preprint). The Wright paper is Wright et al., “The Ĝ Search for Extraterrestrial Civilizations with Large Energy Supplies. IV. The Signatures and Information Content of Transiting Megastructures,” submitted to The Astrophysical Journal (preprint).

November 24, 2015

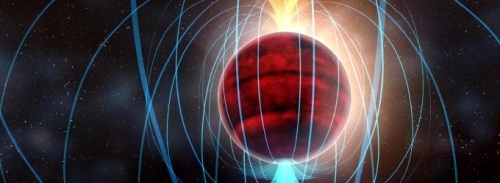

Huge Flares from a Tiny Star

Just a few days ago we looked at evidence that Kepler-438b, thought in some circles to be a possibly habitable world, is likely kept out of that category by flare activity and coronal mass ejections from the parent star. These may well have stripped the planet’s atmosphere entirely (see A Kepler-438b Caveat – and a Digression). Now we have another important study, this one out of the Harvard-Smithsonian Center for Astrophysics, taking a deep look at the red dwarf TVLM 513–46546 and finding flare activity far stronger than anything our Sun produces.

Led by the CfA’s Peter Williams, the team behind this work used data from the Atacama Large Millimeter/submillimeter Array (ALMA), examining the star at a frequency of 95 GHz. Flares have never before been detected from a red dwarf at frequencies as high as this. Moreover, although TVLM 513 is just one-tenth as massive as Sol, the detected emissions are fully 10,000 times brighter than what our star produces. The observation window was only four hours long, so catching this activity within it may be an indication that we’re looking at a star that is frequently active.

Now considered an M9 dwarf, TVLM 513 is about 35 light years away in the constellation Boötes. It is believed to be on the borderline between red and brown dwarfs, with a radius 0.11 that of the Sun, a temperature of 2500 K, and a rotation rate of a scant two hours (the Sun takes almost a month for a complete rotation). For a habitable planet to exist here — one with temperatures allowing liquid water on the surface — it would need to orbit at about 0.02 AU. That’s obviously a problem, as Williams explains in this CfA news release:

“It’s like living in Tornado Alley in the U.S. Your location puts you at greater risk of severe storms. A planet in the habitable zone of a star like this would be buffeted by storms much stronger than those generated by the Sun.”
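The 0.02 AU figure quoted above can be roughly reproduced from the star's radius and temperature. The sketch below is only a back-of-the-envelope check, not the method used in the paper: it assumes a blackbody luminosity (Stefan-Boltzmann scaling), a nominal solar temperature of 5772 K, and it defines the "habitable" distance simply as the orbit receiving the same flux Earth gets from the Sun. The function names are illustrative.

```python
import math

T_SUN_K = 5772.0  # assumed nominal solar effective temperature


def luminosity_solar(radius_rsun: float, teff_k: float) -> float:
    """Blackbody luminosity in solar units: L = R^2 * (T / T_sun)^4."""
    return radius_rsun ** 2 * (teff_k / T_SUN_K) ** 4


def earth_equivalent_distance_au(lum_solar: float) -> float:
    """Orbital distance (AU) receiving the same stellar flux as Earth does."""
    return math.sqrt(lum_solar)


# Values for TVLM 513 as given in the text: 0.11 solar radii, 2500 K.
lum = luminosity_solar(0.11, 2500.0)
dist = earth_equivalent_distance_au(lum)
print(f"L = {lum:.2e} L_sun, Earth-equivalent distance = {dist:.3f} AU")
```

Under these assumptions the luminosity comes out at a few ten-thousandths of the Sun's, and the Earth-equivalent orbit lands at about 0.02 AU, consistent with the value in the text.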

Image: Artist’s impression of red dwarf star TVLM 513-46546. ALMA observations suggest that it has an amazingly powerful magnetic field (shown by the blue lines), potentially associated with a flurry of solar-flare-like eruptions. Credit: NRAO/AUI/NSF; Dana Berry / SkyWorks.

Another unusual aspect of TVLM 513 is its magnetic field. Data from the Very Large Array in New Mexico had previously shown a magnetic field several hundred times stronger than the Sun’s. The paper argues that the emissions observed in the ALMA data are the result of synchrotron emission — radiation generated by the acceleration of high-velocity charged particles through magnetic fields — associated with the small star’s magnetic activity.

We have a lot to learn about small stars, their magnetic fields and their flare processes, and even in this study, the paper offers a caveat:

… confident inferences based on the broadband radio spectrum of TVLM 513 are precluded because the ALMA observations were not obtained contemporaneously with observations at longer wavelengths, and TVLM 513’s radio luminosity, and possibly its radio spectral shape, are variable. Additional support from the Joint ALMA Observatory to allow simultaneous observations with other observatories would be highly valuable.

The authors add that while it has long been known that both stars and gas giant planets have magnetic fields, the mechanisms at work are different and it is unclear what kind of magnetic activity we should expect from objects of intermediate size. Learning more about magnetic processes in small stars should help us understand more about exoplanets and their magnetic activity. This first result at millimeter wavelengths thus points to the work ahead:

Modern radio telescopes are capable of achieving ∼µJy sensitivities at high frequencies (≳20 GHz), raising the possibility of probing the means by which particles are accelerated to MeV energies by objects with effective temperatures of ≲2500 K.

So we’re going to learn a lot more about small red dwarfs as we study whether or not such stars can host habitable planets. The argument against red dwarfs and astrobiology used to focus on tidal lock and the problems of atmospheric circulation, but we’re now wondering whether, particularly in young red dwarfs, flare activity may not be the key factor. If TVLM 513 is representative of a category of flare-spitting stars, the smallest red dwarfs may be hostile to life.

The paper is Williams et al., “The First Millimeter Detection of a Non-Accreting Ultracool Dwarf,” in press at The Astrophysical Journal (preprint).

November 23, 2015

The 3 Most Futuristic Talks at IAC 2015

Justin Atchison’s name started appearing in these pages all the way back in 2007 when, in a post called Deep Space Propulsion via Magnetic Fields, I described his work at Cornell on micro-satellites the size of a single wafer of silicon. Working with Mason Peck, Justin did his graduate work on chip-scale spacecraft dynamics, solar sails and propulsion via the Lorentz force, ideas I’ve tracked ever since. He’s now an aerospace engineer at the Johns Hopkins University Applied Physics Laboratory, where he focuses on trajectory design and orbit determination for Earth and interplanetary spacecraft. As a 2015 NIAC fellow he is researching technologies that enable asteroid gravimetry during spacecraft flybys. In the entry that follows, Justin reports on his trip to Jerusalem for this fall’s International Astronautical Congress.

by Justin A. Atchison

Greetings. I’m Justin Atchison, an aerospace engineer at the Johns Hopkins University Applied Physics Laboratory. I’m proud to have previously had my graduate research included on Centauri Dreams (1, 2, and 3). Now, I’m guest-writing an article about the three most futuristic talks I saw at the International Astronautical Congress in Jerusalem this past October. I was able to attend the conference thanks to a travel fellowship through the Future Space Leaders Foundation (FSLF). I’d strongly encourage any student or young professional (under 35) to apply for this grant next year. It’s a fantastic opportunity to attend this premier conference and interact with a variety of international leaders and thinkers in the aerospace field. FSLF also hosts the Future Space Event on Capitol Hill each summer, which offers engagement with US Congress and aerospace executives on the latest and most relevant space-related subjects.

Image: Justin in the IAC-2015 exhibition hall trying on a protective harness that minimizes radiation exposure to the pelvis bone, which is particularly sensitive to radiation due to its high bone marrow production.

So with that note of thanks and recommendation, I give you “The 3 Most Futuristic Talks at IAC 2015.”

1. An Approach for Terraforming Titan

Abbishek G., D. Kothia, R.A. Kulkarni, S. Chopra, and U. Guven, “Space Settlement on Saturn’s Moon: Titan,” International Astronautical Congress, Jerusalem, Israel, IAC-15-D4.1.5, 2015.

University of Petroleum and Energy Studies, India

The authors of this paper explore options for terraforming Titan in the distant future. Specifically, this means liberating oxygen and increasing the surface temperature.

Titan is a candidate for human settlement for a few compelling reasons:

Abundant Water-Ice – Water is obviously critical for life and is a source of oxygen.

Solar Wind Shielding – Saturn’s magnetosphere “contains” Titan for 95% of its orbit period and is relatively stable.

Earth-like Geology – Observations of Titan show a relatively young, Earth-like surface with rivers, wind-generated dunes, and tectonic-induced mountains.

Native Atmosphere – Titan’s atmosphere is nitrogen rich (95%) and shows strata similar to Earth (troposphere, stratosphere, mesosphere, and thermosphere). The atmospheric pressure on the surface is only 60% higher than that on Earth.

However, Titan presents challenges for habitation, namely a lack of breathable oxygen and the presence of extremely cold surface temperatures (90K). The authors suggest that the solution to these two challenges is a nuclear fission plant that can dissociate oxygen from water and produce greenhouse gases.

Generating Oxygen – The main idea is to use beta or gamma radiation to set up a radiolysis process that converts hydrogen and oxygen atoms into usable constituents, including O2. This requires an artificial radiation source and a means of liberating the hydrogen and oxygen atoms from the native cold ice. They suggest a nuclear fission plant as the source of the radiation, and a duoplasmatron as the means of liberating and exciting the H and O atoms. The duoplasmatron would accelerate a beam of argon ions, which would be aimed at the water-ice. The collisions cause sputtering, where the argon ions literally knock O and H atoms out of the ice. These atoms are then collected and radiated to generate usable O2.

Heating Up the Atmosphere – At about 9.5 AU from the Sun, Titan receives only ~1% as much solar energy as Earth. The goal for raising the temperature on Titan is to capture and retain that limited energy. The authors consider the generation of greenhouse gases as the solution. There are two options they suggest:

If lightning is present on Titan, then the oxygen generated by the nuclear reactor can energetically react with the already-present nitrogen to produce nitrogen oxides, namely NO, NO2, N2O, and N2O2. Once these nitrogen oxides are able to raise the surface temperature by roughly 20 K, Titan’s methane lakes will begin to boil off, releasing gaseous methane as an additional greenhouse gas, and potentially raising the surface temperature to a habitable value.

If lightning isn’t present, or if its generation of nitrogen oxides is too inefficient, one could boil the methane lakes directly using the previously mentioned nuclear reactor. In this setup, the reactor is simply increasing the amount of vapor in the already-present methane cycle (vaporization and condensation of methane). To cause the lakes to naturally vaporize, one needs to generate sufficient vapor to affect the global climate and raise the surface temperature by 20 K.
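The "~1%" sunlight figure for Titan mentioned above follows from the inverse-square law. Here is a minimal sketch of that arithmetic, assuming only that flux scales as one over distance squared and that Earth sits at 1 AU; the function name is illustrative.

```python
def relative_solar_flux(distance_au: float) -> float:
    """Sunlight received at a given distance, as a fraction of Earth's (1 AU)."""
    return 1.0 / distance_au ** 2


# Saturn (and therefore Titan) orbits at about 9.5 AU, per the text.
titan_fraction = relative_solar_flux(9.5)
print(f"Titan receives about {titan_fraction:.1%} of the sunlight Earth does")
```

This is why both options lean on greenhouse gases: there is very little incoming energy to work with, so the strategy is to retain it rather than add to it.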

The authors don’t estimate the total time required for terraforming, the size of the nuclear plant required to start the process, or the maximum theoretical surface temperature achievable, but they nonetheless posit a potential path forward for planetary habitation…and that’s a meaningful contribution.

____________________

2. Eternal Memory

Guzman M., A. M. Hein, and C. Welch, “Eternal Memory: Long-Duration Storage Concepts for Space” International Astronautical Congress, Jerusalem, Israel, IAC-15-D4.1.3, 2015.

International Space University and Initiative for Interstellar Studies, France

How can present-day humanity leave a message for distant future civilizations (human or alien)? This question first became a practical one with Carl Sagan’s famous Voyager Golden Record. The authors of this review paper evaluate the requirements and near term options available to store and interpret data for millions to billions of years in space. That’s a long enough timescale that you have to start to consider the lifetime of the sun (5 billion years) and the merging of the Milky Way and Andromeda galaxies (long term dynamics may destabilize orbits in the solar system). There are a variety of current efforts, most of which are crowd-funded, including: The Helena Project, Lunar Mission Project, Time Capsule to Mars, KEO, The Rosetta Project, The Human Document Project, One Earth Message, and Moonspike.

The data storage mechanism has to survive radiation, micrometeoroids, extreme temperatures, vacuum, solar wind, and geologic processes (if landed on a planet or moon). In terms of locating the data, the authors consider just about every option: Earth orbit, the Moon, the planets, planetary moons, Lagrange points, asteroids and comets, escape trajectories, and even orbiting an M-star. The Moon appears to be a good candidate because it remains near enough to Earth for future civilizations to discover, yet distant enough to avoid too common access (it can’t be too accessible or it might be easily destroyed by malicious or careless humans). One of the proposed implementations is to bury data at the lunar north pole, where regolith can be a shield against micrometeoroid impacts.

There are a variety of near-term technologies available for this challenge, including three approaches that could likely survive the requisite millions to billions of years:

Silica Glass Etching – “Silica is an attractive material for eternal memory concepts because it is stable against temperature, stable against chemicals, has established microfabrication methods, and has a high Young’s modulus and Knoop hardness.” In this implementation, femtosecond lasers are used to etch the glass and achieve CD-ROM like data densities. A laboratory test exposed a sample wafer to 1000°C heat for two hours with no damage.

Tungsten Embedded in Silicon Nitride – A group in the Netherlands has developed and tested a process for patterning tungsten inside transparent, resilient silicon nitride. The resulting wafer can be read optically. The materials were selected for their high melting points, low coefficients of thermal expansion, and high fracture toughness. A sample QR code was generated and successfully tested at high temperatures, the result of which implied 10⁶-year survivability.

Generational Bacteria DNA – This approach uses DNA as a means of storing data (see Data Storage: The DNA Option). Although this may sound extreme, consider that bacterial DNA has already survived millions of years in Earth’s rather unstable environment. It is a demonstrated high-density, resilient means of storing data. In this implementation, we would write data into the genome of a particularly hardy strain of bacteria, and rely on its self-survival to protect our data for the future. This option presents the challenge that it requires the future civilization to have the capability to study the bacteria’s DNA and identify the human generated code.

As humans continue to send probes to unique places in the solar system, I hope that we’ll consider and incorporate these new technologies. Who knows? In a few million years, our “cave paintings” may be hanging in some intergalactic museum.

____________________

3. Carbon Nanotubes for Space Solar Power…(And Eventually Interplanetary Travel?)

Gadhadar R., P. Narayan, and I. Divyashree, “Carbon-Nanotube Based Space Solar Power (CASSP),” 4th Space Solar Power International Student and Young Professional Design Competition, Jerusalem, Israel, 2015.

NoPo Nanotechnologies Private Limited and Dhruva Space, India.

Single-Walled Carbon Nanotubes (SWCNT) have remarkable properties:

Incredibly high strength-to-weight ratio (300x steel) [~50,000 kN m / kg]

High electrical conductivity (higher than copper) [10⁶-10⁷ m/Ω]

High thermal conductivity (higher than diamond) [3500 W/(m K)]

High temperature stability (up to 2800°C in a vacuum)

Tailorable semiconductive properties (based on nanotube diameter)

Ability to sustain high voltage densities (1-2 V/µm)

Ability to sustain high current densities (~10⁹ A/cm²)

High radiation resistance

These properties, specifically the strength-to-weight ratio, make them candidates for things like space elevators and momentum exchange tethers.

The authors of this paper posit a different application for SWCNT: space based solar power. This is the concept where an enormous array of solar cells is placed in orbit around Earth. Power is collected, and then beamed down to the surface at microwave frequencies for terrestrial use. The main idea is to collect solar power outside of Earth’s atmosphere, which attenuates something like 50-60% of the energy in solar spectra. The spacecraft has access to a higher energy density of solar light, which it then beams down to Earth at microwave frequencies, at which the atmosphere is transparent. A second advantage is that high orbiting satellites have much shorter local nights (eclipses) than someone on the Earth (there’s only up to about 75 minutes of darkness for geostationary orbit).

In this paper, the authors describe an implementation of a solar power satellite that would use semiconducting SWCNT as the solar cells. Based on the authors’ analysis, it’s feasible to mature this TRL 4 technology to achieve a peak specific power of 2 W/g at 10 cents per W. This is compared to current TRL 9 options that offer roughly 0.046 W/g at 250 dollars per W. The design is entirely developed from different forms of SWCNT, which are used to make a transparent substrate, a semiconducting layer, and a conducting base. The three-layered assembly would have an areal density of 230 g/m², roughly a third of current technologies.

Additionally, the authors advocate for SWCNT based microwave transmitters, which could potentially be more efficient than traditional Klystron tubes and wouldn’t require active cooling.

As an added benefit, this type of SWCNT microwave source could potentially be used in the newly discussed (and certainly controversial) CANNAE drive. In the paper’s implementation, CANNAE propulsion would only be used for station-keeping…But it’s not hard to extrapolate and conceive of a solar powered, CANNAE-driven spacecraft for interplanetary exploration.

I have to admit, I’m a bit skeptical of the economics of space-based solar power concepts. But this paper is nonetheless exciting as it highlights the potential applications for this relatively new engineered material. I can’t wait to see how SWCNT are used in the coming decades and what new exploration technologies they’ll enable.

November 20, 2015

The Cereal Box

“No matter how these issues are ultimately resolved, Centauri Dreams opts for the notion that even the back of a cereal box may contain its share of mysteries.” I wrote that line in 2005, and if it sounds cryptic, read on to discover its origins, ably described by Christopher Phoenix. I first encountered Christopher in an online discussion group made up of physicists and science fiction writers, where his knack for taking a topic apart always impressed me. A writer whose interest in interstellar flight is lifelong, he is currently turning his love of science fiction into a novel that, he tells me, “incorporates some of the ideas we talk about on Centauri Dreams as a background setting.” Today’s essay examines the ideas of a physicist who dismissed the idea of interstellar flight entirely, while using a set of assumptions Christopher has come to challenge.

by Christopher Phoenix

“All this stuff about traveling around the universe in space suits — except for local exploration which I have not discussed — belongs back where it came from, on the cereal box.”

Over fifty years ago, physicist Edward Purcell penned the boldest dismissal of interstellar flight on record in his paper “Radioastronomy and Communication Through Space”. In that paper, Purcell uses the elementary laws of mechanics to refute the possibility of starflight in total. There are many people, of course, who share his belief that we will never reach the stars.

Keeping a firm grounding in the laws of physics is absolutely necessary when researching interstellar travel. A healthy skeptical attitude can help keep researchers honest with themselves. Certainly, not everything we imagine is possible. Nor can we hope to ever reach for the stars if we do not keep our feet firmly planted in reality.

However, sometimes such extreme skepticism deserves some healthy skepticism itself. Even though Purcell’s equations aren’t wrong, he didn’t prove that starflight belongs back on the cereal box. Instead, he defines the problem of interstellar travel in such a way that it seems to be insurmountable.

Radioastronomy and Communication Through Space

Before we begin, I want to quickly introduce Purcell and this paper. Edward M. Purcell made important contributions to physics and radioastronomy. He shared the 1952 Nobel Prize in Physics for discovering nuclear magnetic resonance (NMR) in liquids and solids. Later, Purcell was the first to detect radio emissions from neutral galactic hydrogen, the famous “21cm line”. Many important developments in radioastronomy resulted from his work.

“Radioastronomy and Communication Through Space” was the first paper in the Brookhaven Lecture Series. These lectures were meant to provide a meeting ground for all the scientists at Brookhaven National Laboratory. In this paper, Purcell argued that traditional radio SETI, not interstellar travel, is our only way of learning about other planets in the galaxy.

Image: Edward Mills Purcell (1912–1997). Credit: Wikimedia Commons.

Purcell builds to his conclusion in three sections. The first section discusses then-recent discoveries in radioastronomy. Purcell tells how astronomers mapped the galaxy by observing radio emissions from neutral galactic hydrogen (the 21cm line). He notes in particular that we gathered all this information by capturing an astonishingly tiny amount of radio energy from space. Over nine years, the total amount of radio energy captured by all 21cm observatories added up to less than one erg (10⁻⁷ J).

The paper then jumps from radioastronomy to more speculative topics. In the second section, Purcell takes on the idea of interstellar travel and runs some calculations on relativistic rockets. He concludes that interstellar flight is “preposterous”. In the final section of his paper, Purcell argues that radio messages can be sent between the stars for relatively little energy cost, while the energy required for interstellar travel is unobtainable.

I shall primarily discuss the second part of this paper, where Purcell argued against the possibility of interstellar travel.

“This is preposterous!”

From the start, Purcell considered fast interstellar travel as our only option. Purcell noted that relativity is not the obstacle to reaching another star within a single human lifetime. We cannot travel faster than light. However, if we travel at speeds close to that of light, time dilation becomes an important factor, reducing the amount of time that passes for us on our trip. You will age much less than your friends back home if you travel to the stars at relativistic speeds.

This is perfectly correct, in my view, so far as it goes. Special relativity is reliable. The trouble is not, as we say, with the kinematics but with the energetics… Personally, I believe in special relativity. If it were not reliable, some very expensive machines around here would be in deep trouble.

The problem, Purcell says, is building a rocket capable of carrying out this mission. He develops this argument by examining a particular example flight.

Let us consider a trip to a place 12 light years away, and back. Because we don’t want to take many generations to do it, let us arbitrarily say we will go and come back in 28 years earth time. We will reach a top speed of 99% speed of light in the middle, and slow down and come back. The relativistic transformations show that we will come back in 28 years, only ten years older. This I really believe… Now let us look at the problem of designing a rocket to perform this mission.

So, Purcell has defined the problem in a certain way. The starship must fly to another star and return to Earth within a human lifetime. To do so, it will reach a top speed of 99% the speed of light (C) in the middle of the voyage. The craft is a rocket, and it must carry all its propellant from the beginning of the trip. It cannot refuel anywhere. To reach 99% C within a short amount of time, the rocket must maintain an acceleration of one g for most of the trip.
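Purcell's trip figures can be checked with the standard formulas for constant proper acceleration in special relativity. The sketch below is an assumption-laden reconstruction, not Purcell's own working: it assumes the ship accelerates at exactly one g from rest to 99% C, immediately decelerates at one g to rest, and repeats the pattern for the return (four identical legs, no coasting). The function name and constants are illustrative.

```python
import math

C = 299_792_458.0          # speed of light, m/s
G = 9.80665                # one g, m/s^2
YEAR = 365.25 * 86_400.0   # Julian year, s
LIGHT_YEAR = C * YEAR      # metres in one light year


def accel_leg(beta_max: float, accel: float = G):
    """One leg at constant proper acceleration, from rest up to beta_max * C.

    Returns (Earth-frame years, ship-frame years, light years covered).
    """
    gamma = 1.0 / math.sqrt(1.0 - beta_max ** 2)
    earth_time = (C / accel) * gamma * beta_max       # t   = (c/a) * gamma * beta
    ship_time = (C / accel) * math.atanh(beta_max)    # tau = (c/a) * artanh(beta)
    distance = (C ** 2 / accel) * (gamma - 1.0)       # x   = (c^2/a) * (gamma - 1)
    return earth_time / YEAR, ship_time / YEAR, distance / LIGHT_YEAR


earth_leg, ship_leg, dist_leg = accel_leg(0.99)

one_way_ly = 2 * dist_leg       # accelerate, then decelerate
earth_total = 4 * earth_leg     # out and back
ship_total = 4 * ship_leg

print(f"One-way distance: {one_way_ly:.1f} light years")
print(f"Round trip, Earth time: {earth_total:.1f} years")
print(f"Round trip, ship time:  {ship_total:.1f} years")
```

With these assumptions the trip covers just under 12 light years each way, takes roughly 27 years by Earth clocks and about 10 years for the crew, which is in line with the round numbers Purcell quotes.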

Having laid out the starting assumptions for our trip, Purcell uses the relativistic rocket equation to calculate the amount of propellant the rocket will require to complete the trip. Remember that rockets are momentum machines. They throw a certain mass of propellant out the back, and the reaction force pushes the rocket. When that propellant is all gone, only the payload remains and the rocket has reached its final speed.

A rocket engine’s performance is determined by its exhaust velocity (Vex). This is the velocity at which propellant leaves the engine as measured by the rocket. The higher the Vex, the more efficiently the rocket engine uses propellant. Engineers refer to rocket efficiency as specific impulse (Isp). A rocket’s specific impulse is determined by its exhaust velocity.

If you have a rocket of a certain Vex, and you want to accelerate it to a certain maximum velocity (Vmax), physics imposes a certain relationship between the initial and final mass of the rocket. Engineers call this ratio a rocket’s mass ratio. This relationship is shown by the rocket equation. Unfortunately, if our Vmax is much larger than our Vex, mass ratios increase exponentially. This is because the rocket must not only accelerate the payload, but also all the as-yet unused propellant. To go faster, you need more propellant, but you need more propellant to carry that propellant, and so on.

So, our next problem is choosing an engine. We want to travel close to the speed of light, so we need an engine with the highest exhaust velocity (and thus highest Isp) possible. Chemical rockets have much too low a Vex to do this; they would require an unimaginably large amount of reaction mass to approach the speed of light. We need a far more powerful engine.

One type of engine that could perform far better than chemical rockets is the nuclear fusion rocket. So, Purcell first proposes using idealized nuclear fusion propellant. In this case, the rocket’s initial mass must be a little over a billion times its final mass to reach 99% C. A ten ton payload will require a ten billion ton rocket at the start of the journey. This is simply too much mass!

We need something far more potent. Purcell turns to idealized matter-antimatter (M/AM) propellant. Again, we assume the fuel is utilized with perfect efficiency. Matter annihilates with antimatter, and the resulting energy is exhausted as massless electromagnetic radiation (gamma rays), giving us a Vex of C. We can’t beat that.

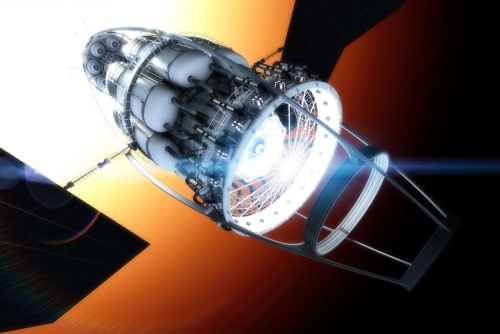

Image: VARIES (Vacuum to Antimatter Rocket Interstellar Explorer System) is a concept developed by Richard Obousy that would create its own antimatter enroute through the use of powerful lasers. Credit: Adrian Mann.

The situation is vastly improved by M/AM propellant. To reach 99% C, the rocket’s initial mass must be only 14 times its final mass. But we must also slow down at the destination, and slowing down requires just as much effort as accelerating in the first place. After that, we must turn the ship around and return to Earth.

So, during the course of our flight, the rocket shall undergo four accelerations. On the trip away from Earth, the rocket will accelerate to 99% C, and then decelerate back down to rest at the destination star. After turning around, it will accelerate back to 99% C on the trip home and then decelerate back down to rest at Earth. To do this, the rocket must start with an initial mass 40,000 times its final mass. To send a ten ton payload on this round trip will require a 400,000 ton rocket, consisting half of matter and half of antimatter.
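The mass ratios quoted above can be reproduced with the relativistic rocket equation, m0/m1 = ((1 + β)/(1 − β))^(c / 2·Vex), where β is the final speed as a fraction of C. The sketch below is a hedged illustration: the fusion exhaust velocity of C/8 is an assumption chosen to represent an idealized fusion rocket (the essay does not state the value), while the antimatter case uses Vex = C as described in the text. The function name is illustrative.

```python
def mass_ratio(beta: float, vex_over_c: float) -> float:
    """Relativistic rocket equation: initial mass / final mass for one burn
    from rest to beta * C, with exhaust velocity vex_over_c * C."""
    return ((1.0 + beta) / (1.0 - beta)) ** (1.0 / (2.0 * vex_over_c))


BETA = 0.99

# Idealized fusion: exhaust velocity of C/8 is an assumed value.
fusion_single = mass_ratio(BETA, 1.0 / 8.0)

# Idealized matter-antimatter photon rocket: exhaust velocity equals C.
antimatter_single = mass_ratio(BETA, 1.0)

# Four burns (accelerate, decelerate, accelerate, decelerate) multiply together.
antimatter_round_trip = antimatter_single ** 4

payload_tons = 10.0
print(f"Fusion, one burn:       {fusion_single:.2e}")
print(f"Antimatter, one burn:   {antimatter_single:.1f}")
print(f"Antimatter, four burns: {antimatter_round_trip:,.0f}")
print(f"Launch mass for a {payload_tons:.0f} ton payload: "
      f"{payload_tons * antimatter_round_trip:,.0f} tons")
```

Because each burn multiplies the previous ratio, the round trip costs the single-burn ratio raised to the fourth power. That is how a factor of about 14 becomes roughly 40,000, and a ten ton payload becomes a launch mass of about 400,000 tons.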

The starship must accelerate at one g for most of the trip. At the outset of its journey, this rocket must radiate 10¹⁸ watts of radiant energy to accelerate its 400,000 tons of mass at one g. This is a little over the total power that the Earth receives from the sun. Only this energy is in gamma rays, which presents a shielding problem for any planet near the ship. In addition, once the rocket achieves relativistic velocities, cosmic dust and gas present a shielding problem for the ship itself. At these speeds, even tiny specks of matter will behave like pinpoint nuclear explosions, and individual protons will be transformed into deadly cosmic rays.
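The power figure follows from the fact that a beam of light carrying power P delivers a thrust of P/C, so a photon rocket needs P = m·a·C to accelerate a mass m at acceleration a. A minimal sketch, assuming metric tons (1,000 kg each), a perfectly collimated exhaust and standard gravity; the function name is illustrative.

```python
C = 299_792_458.0   # speed of light, m/s
G = 9.80665         # one g, m/s^2


def photon_rocket_power(mass_kg: float, accel: float = G) -> float:
    """Radiated power (W) a perfectly collimated photon rocket needs.

    Thrust of a light beam is P / C, so P = thrust * C = m * a * C.
    """
    return mass_kg * accel * C


launch_mass_kg = 400_000 * 1_000.0   # 400,000 metric tons (assumed metric)
power_w = photon_rocket_power(launch_mass_kg)
print(f"Required power at launch: {power_w:.1e} W")
```

The result is on the order of 10¹⁸ W, matching the figure in the text. The requirement falls as propellant is consumed, since the mass being accelerated shrinks.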

Purcell concludes that these calculations prove that interstellar flight is “preposterous”, in this solar system or any other.

Rigging the game

There isn’t anything wrong with Purcell’s calculations. The problem is that Purcell wants to take this one set of calculations and prove that any form of interstellar travel is impossible. This isn’t very fair, since the starting conditions he picked in his example lead to his pessimistic conclusions. Let’s examine these assumptions.

Purcell’s first assumption is we must travel at 99% C. Why must we travel so fast? Even to complete a trip to a nearby star within a human lifetime, you can travel slower than that. Purcell is committed to these extreme relativistic speeds in order to take advantage of time dilation and complete the round trip in a decade.

If we are willing to travel much slower, perhaps 10% C, or even 1% C, and let multiple generations of crew make the trip, the difficulties are greatly reduced. At slower speeds, propulsion requirements are far more reasonable, and deadly collisions with cosmic dust would be easier to defend against.
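To get a rough sense of how much the propulsion problem eases at lower speeds, the same relativistic rocket equation can be evaluated for a one-way trip (one burn to accelerate, one to stop). This is only an illustrative sketch: the C/8 fusion exhaust velocity is an assumed idealization, the 12 light year distance is borrowed from Purcell's example, and the cruise time ignores the acceleration phases.

```python
def mass_ratio(beta: float, vex_over_c: float) -> float:
    """Relativistic rocket equation for a single burn from rest to beta * C."""
    return ((1.0 + beta) / (1.0 - beta)) ** (1.0 / (2.0 * vex_over_c))


VEX_OVER_C = 1.0 / 8.0   # assumed idealized fusion exhaust velocity
DISTANCE_LY = 12.0       # Purcell's example distance

results = {}
for beta in (0.99, 0.10, 0.01):
    one_way_ratio = mass_ratio(beta, VEX_OVER_C) ** 2   # speed up, then stop
    cruise_years = DISTANCE_LY / beta                    # ignores accel phases
    results[beta] = (one_way_ratio, cruise_years)
    print(f"{beta:>5.0%} C: mass ratio {one_way_ratio:.3g}, "
          f"about {cruise_years:,.0f} years in transit")
```

At 10% C the one-way mass ratio drops to about 5, and at 1% C it is barely above 1, at the cost of transit times of a century or a millennium. The trade is between propellant and patience.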

Of course, there are many very difficult challenges to solve before we can launch such a ship. The travelers must recycle all their air and water, grow their own food, and build a stable society able to last for centuries. Some form of artificial gravity must be provided to prevent muscle and bone loss in microgravity. The habitable sections of the ship must be shielded from cosmic rays. But none of these represent hard physical limits arising from the laws of mechanics and nothing else.

This is all assuming humans are making the trip. Slow travel is made even easier if humans do not make the trip, just as we have done with our current robotic exploration of the solar system.

The second assumption is the starship must return to Earth. Particularly if we must carry all the propellant we use from the outset, a round trip mission is far more difficult than a one-way trip. But why must the starship return to Earth? There are many interesting missions that do not require the spacecraft to return to Earth. A colonizing expedition does not have to return, or even want to. Neither does a robotic probe. A fly-by probe like Daedalus doesn’t even need to carry propellant to slow down at the destination.

Purcell’s third questionable assumption is an interstellar vehicle must carry all its energy and reaction mass on board from the start of the trip. Is this really true? Think about in-situ resource utilization. An interstellar expedition could mine propellant from planetoids encountered at the destination. We can use propulsion systems that use the resources present in space, like gravitational assists, solar sails, or even interstellar ramjets. Granted, gravitational assists and solar sails could not get you anywhere near relativistic speeds, but they could work for slower travel.

Image: A Bussard ramjet in flight, as imagined for ESA’s Innovative Technologies from Science Fiction project. Credit: ESA/Manchu.

If the natural resources of space are not sufficient, there are other options. Rockets carry all their energy and reaction mass from the start. Beam-rider propulsion systems are an alternative that leaves heavy engines, energy sources, and propellant back home. One such craft is a photon sail pushed by a laser. Another is a spacecraft propelled by a stream of relativistic pellets, each transferring momentum to the craft. As a cursory read of Mallove and Matloff’s excellent book The Starflight Handbook shows, we are not limited to rockets only.

Ultimately, Purcell’s conclusion that all speculation about interstellar travel belongs back “on the cereal box” simply doesn’t hold air in the space vacuum.

SETI vs interstellar travel?

Purcell’s paper underscores an unfortunate split in the ranks of scientists. Many scientists interested in SETI maintain that interstellar flight is simply not feasible for any civilization. They argue that we don’t need to physically travel to other planetary systems in order to learn about the rest of the universe. We need only turn our radio telescopes to the sky and search for broadcasts from more advanced civilizations. If we find them, these advanced civilizations will hopefully tell us everything we want to know. We might even find that mature civilizations in space have formed a galactic community of communicating societies. Perhaps they might allow us to join the conversation once we demonstrate enough maturity to engage in interstellar radio communications. This is an exciting possibility, if a bit idealistic, and SETI deserves our support.

However, it is important to realize it is not an either-or question. We can research interstellar travel and carry out SETI searches at the same time. Even if SETI searches find communicative aliens to talk to, that will not negate the usefulness of interstellar travel. We will still need interstellar flight to investigate the countless solar systems where such civilizations are not present, and starflight is absolutely necessary for interstellar migration. But it seems like some SETI supporters don’t see it that way.

Denying starflight has become a fundamental tenet of the SETI worldview. It speaks directly to the question of whether it might be dangerous to contact alien civilizations. Many SETI supporters claim that we don’t have to worry about this question. If we assume interstellar travel is impossible, no civilization in space can physically threaten another. As Purcell claims in his paper:

It [communicating with ETI] is a conversation which is, in the deepest sense, utterly benign. No one can threaten anyone else with objects. We have seen what it takes to send objects around, but one can send information for practically nothing. Here one has the ultimate in philosophical discourse – all you can do is exchange ideas, but you do that to your heart’s content.

In my opinion, this is the real reason why Purcell argues so vehemently against the possibility of interstellar flight. In order for communication with ETI to be completely safe, interstellar travel must be impossible for any civilization anywhere in the universe. Contact with ETI becomes more complicated if there is a possibility of encountering them or their technology physically. Of course, we can’t be entirely sure messages from ETI will be entirely harmless either, if they contain instructions or information that might pose a danger.

I suspect that Purcell’s pessimistic arguments against starflight were driven more by his desire to believe that discourse with aliens comes without risks than by a genuine interest in the future of space travel. Whatever the disposition of aliens, we can’t allow our personal hopes and dislikes to bias our conclusions. While interstellar travel is very difficult, we can already conceive of ways that a sufficiently motivated civilization could reach the stars.

November 19, 2015

Directly Imaging a Young ‘Jupiter’

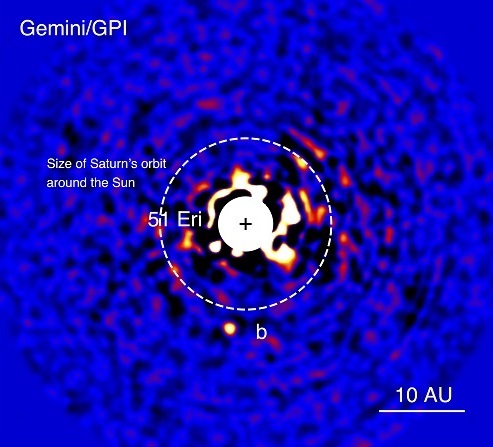

Centauri Dreams continues to follow the fortunes of the Gemini Planet Imager with great interest, and I thank Horatio Trobinson for a recent note reminding me of the latest news from researchers at the Gemini South installation in Chile. The project organized as the Gemini Planet Imager Exoplanet Survey is a three-year effort designed not for radial velocity or transit studies but for direct imaging of young Jupiters and debris disks around nearby stars. Operating at near-infrared wavelengths, the GPI itself uses adaptive optics, a coronagraph, a calibration interferometer and an integral field spectrograph in its high-contrast imaging work.

Launched in late 2014, the GPIES survey has studied 160 targets out of a projected 600 in a series of observing runs, all the while battling unexpectedly bad weather in Chile. Despite all this, project leader Bruce Macintosh (Stanford University), the man behind the construction of GPI, has been able to announce the discovery of the young ‘Jupiter’ 51 Eridani b, working with researchers from almost forty institutions in North and South America. The discovery was confirmed by follow-up work with the W.M. Keck Observatory on Mauna Kea (Hawaii).

Image: Discovery image of 51 Eri b with the Gemini Planet Imager taken in the near-infrared light on December 18, 2014. The bright central star has been mostly removed by a hardware and software mask to enable the detection of the exoplanet one million times fainter. Credits: J. Rameau (UdeM) and C. Marois (NRC Herzberg).

This is a world with about twice the mass of Jupiter, and this news release from the Gemini Observatory is characterizing it as “the most Solar System-like planet ever directly imaged around another star.” The reasons are obvious: 51 Eridani b orbits at about 13 AU, putting it a bit past Saturn in our own Solar System. And although 51 Eridani b is some 100 light years away, Macintosh and colleagues have found a strong spectroscopic signature of methane.

“Many of the exoplanets astronomers have imaged before have atmospheres that look like very cool stars,” says Macintosh. “This one looks like a planet.”

Indeed, and we have further evidence that this is a planet rather than a brown dwarf in chance alignment with the star in the form of a recent paper that analyzes the motion of 51 Eridani b and finds it consistent with a forty-year orbit. Moreover, we’re going to be learning a great deal more about this interesting object in years to come, as the paper explains:

Continued astrometric monitoring of 51 Eri b over the next few years should be sufficient to detect curvature in the orbit, further constraining the semimajor axis and inclination of the orbit, and placing the first constraints on the eccentricity. Absolute astrometric measurements of 51 Eri with GAIA (e.g., Perryman et al. 2014), in conjunction with monitoring of the relative astrometry of 51 Eri b, will enable a direct measurement of the mass of the planet. Combined with the well-constrained age of 51 Eri b, such a determination would provide insight into the evolutionary history of low-mass directly imaged extrasolar planets, and help distinguish between a hot-start or core accretion formation process for this planet.
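As a rough cross-check on the numbers above, Kepler's third law ties the orbital distance of about 13 AU to the forty-year period. The sketch below is a minimal illustration, not the method used in the paper: the stellar mass of 1.75 solar masses is my own approximate assumption for 51 Eridani (it is not given in this post), and the function name is purely illustrative.

```python
import math


def orbital_period_years(semimajor_axis_au: float, stellar_mass_msun: float) -> float:
    """Kepler's third law in solar units: P^2 = a^3 / M.

    P is in years, a in astronomical units, M in solar masses.
    The planet's own mass is neglected, which is reasonable for a
    roughly two-Jupiter-mass planet around a star.
    """
    return math.sqrt(semimajor_axis_au ** 3 / stellar_mass_msun)


SEMIMAJOR_AXIS_AU = 13.0   # "about 13 AU", as quoted in the text
STELLAR_MASS_MSUN = 1.75   # ASSUMPTION: approximate mass of 51 Eridani, not from the post

period = orbital_period_years(SEMIMAJOR_AXIS_AU, STELLAR_MASS_MSUN)
print(f"Estimated period: {period:.1f} years")

# Sanity check: Earth (1 AU around 1 solar mass) should give 1 year.
print(f"Earth check: {orbital_period_years(1.0, 1.0):.1f} year")
```

Under these assumptions the estimate comes out in the mid-thirties of years, in the same range as the forty-year orbit reported in the follow-up paper. Since "about 13 AU" is a rounded figure and the period scales as the 3/2 power of the distance, a slightly wider orbit would close the gap.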

Image: The Gemini Planet Imager utilizes an integral field spectrograph, an instrument capable of taking images at multiple wavelengths – or colors – of infrared light simultaneously, in order to search for young self-luminous planets around nearby stars. The left side of the animation shows the GPI images of the nearby star 51 Eridani in order of increasing wavelength from 1.5 to 1.8 microns. The images have been processed to suppress the light from 51 Eridani, revealing the exoplanet 51 Eridani b (indicated) which is approximately a million times fainter than the parent star. The bright regions to the left and right of the masked star are artifacts from the image processing algorithm, and can be distinguished from real astrophysical signals based on their brightness and position as a function of wavelength. The spectrum of 51 Eridani b, on the right side of the animation, shows how the brightness of the planet varies as a function of wavelength. If the atmosphere was entirely transmissive, the brightness would be approximately constant as a function of wavelength. This is not the case for 51 Eridani b, the atmosphere of which contains both water (H2O) and methane (CH4). Over the spectral range of this GPI dataset, water absorbs photons between 1.5 and 1.6 microns, and methane absorbs between 1.6 and 1.8 microns. This leads to a strong peak in the brightness of the exoplanet at 1.6 microns, the wavelength at which absorption by both water and methane is weakest. Credit: Robert De Rosa (UC Berkeley), Christian Marois (NRC Herzberg, University of Victoria).

Christian Marois (National Research Council of Canada) discusses the nature of the find:

“GPI is capable of dissecting the light of exoplanets in unprecedented detail so we can now characterize other worlds like never before. The planet is so faint and located so close to its star, that it is also the first directly imaged exoplanet to be fully consistent with Solar System-like planet formation models.”

As you would expect, 51 Eridani b is a young planet, young enough that the heat of its formation gives us a solid infrared signature, allowing its direct detection. In addition to being in an orbit that reminds us of the Solar System, the young world is probably the lowest-mass planet yet imaged, just as its atmospheric methane signature is the strongest yet detected. Given that the Gemini Planet Imager Exoplanet Survey is only a fraction of the way through its observing list, we can expect to find more planets in the target area within 300 light years of the Solar System.

The paper is Macintosh et al., “Discovery and spectroscopy of the young jovian planet 51 Eri b with the Gemini Planet Imager,” Science Vol. 350, No. 6256 (2 October 2015), pp. 64-67 (abstract). The follow-up paper is De Rosa et al., “Astrometric Confirmation and Preliminary Orbital Parameters of the Young Exoplanet 51 Eridani b with the Gemini Planet Imager,” accepted at The Astrophysical Journal Letters (preprint).

November 18, 2015

A Kepler-438b Caveat (and a Digression)

Before we go interstellar, a digression with reference to yesterday’s post, which looked at how we manipulate image data to draw out the maximum amount of information. I had mentioned the image widely regarded as the first photograph, Joseph Nicéphore Niépce’s ‘View from the Window at Le Gras.’ Centauri Dreams regular William Alschuler pointed out that this image is in fact a classic example of what I’m talking about. For without serious manipulation, it’s impossible to make out what you’re seeing. Have a look at the original and compare it to the image in yesterday’s post, which has been processed to reveal the underlying scene.

Image: New official image of the first photograph in 2003, minus any manual retouching. Joseph Nicéphore Niépce’s View from the Window at Le Gras. c. 1826. Gernsheim Collection Harry Ransom Center / University of Texas at Austin. Photo by J. Paul Getty Museum.

And here again is the processed image, a much richer experience.

The University of Texas offers this explanation of how the image was made:

“Niépce thought to capture this image using a light-sensitive material so that the light itself would “etch” the picture for him. In 1826, through a process of trial and error, he finally came upon the combination of bitumen of Judea (a form of asphalt) spread over a pewter plate. When he let this petroleum-based substance sit in a camera obscura for eight hours without interruption, the light gradually hardened the bitumen where it hit, thus creating a rudimentary photo. He “developed” this picture by washing away the unhardened bitumen with lavender water, revealing an image of the rooftops and trees visible from his studio window. Niépce had successfully made the world’s first photograph.”

As with many astronomical photographs, what the unassisted human eye would see is often the least interesting aspect of the story. While we always want to know what a person looking out a window would see, we learn a great deal more by subjecting images to a variety of filters.

Meanwhile, in the Rest of the Galaxy…

Habitable zone planets are a primary attraction of the exoplanet hunt, but so often a tight analysis shows that what we know of a world isn’t enough to confirm its habitable status. Kepler-438b is a case in point, a world that is likely rocky orbiting a red dwarf some 470 light years away in the constellation Lyra. The planet orbits the primary every 35.2 days, but writing in these pages last January, Andrew LePage estimated there was only a one in four chance that Kepler-438b is in the habitable zone, declaring it more likely to be a cooler version of Venus.

Now we have more evidence that a planet some in the media have called ‘Earth-like’ is in fact a wasteland, its chances of life devastated by hard radiation from the host star. Kepler-438 produces huge flares every few hundred days, each of them approximately ten times more powerful than anything we’ve ever recorded on the Sun. These ‘superflares’ are laden with energies of 10³³ erg, although energies of 10³⁶ erg have been observed.
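To put the superflare energies quoted above (ten to the 33rd and ten to the 36th power erg) on a more familiar scale, here is a small sketch that converts them to joules and compares them with the Sun's total power output. The comparison with solar luminosity is my own illustration rather than anything from the Armstrong paper, and the constant used is the nominal solar luminosity of 3.828 × 10³³ erg per second.

```python
ERG_PER_JOULE = 1e7                      # 1 joule = 10^7 erg
SOLAR_LUMINOSITY_ERG_PER_S = 3.828e33    # nominal solar luminosity, in erg per second


def erg_to_joule(energy_erg: float) -> float:
    """Convert an energy from erg to joules."""
    return energy_erg / ERG_PER_JOULE


def seconds_of_solar_output(energy_erg: float) -> float:
    """How long the Sun takes to radiate this much energy at all wavelengths."""
    return energy_erg / SOLAR_LUMINOSITY_ERG_PER_S


for flare_energy_erg in (1e33, 1e36):
    joules = erg_to_joule(flare_energy_erg)
    seconds = seconds_of_solar_output(flare_energy_erg)
    print(f"{flare_energy_erg:.0e} erg = {joules:.0e} J "
          f"= {seconds:.2f} s of total solar output")
```

On this scale the smaller figure amounts to roughly a quarter of a second of the Sun's entire output released in a single event, and the larger figure is a thousand times that.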

But the flares are part of a larger problem for Kepler-438b. They are associated with coronal mass ejections (CMEs), a phenomenon likely to have stripped away the planet’s atmosphere entirely. In work to be published in Monthly Notices of the Royal Astronomical Society, David Armstrong (University of Warwick, UK) and colleagues analyze conditions around the red dwarf. Armstrong explains in a University of Warwick news release:

“If the planet, Kepler-438b, has a magnetic field like the Earth, it may be shielded from some of the effects. However, if it does not, or the flares are strong enough, it could have lost its atmosphere, be irradiated by extra dangerous radiation and be a much harsher place for life to exist.”

Image: The planet Kepler-438b is shown here in front of its violent parent star. It is regularly irradiated by huge flares of radiation, which could render the planet uninhabitable. Here the planet’s atmosphere is shown being stripped away. Credit: Mark A Garlick / University of Warwick.

The relationship of flares and CMEs is complicated, as are the effects of a magnetic field. From the paper:

It is possible that CMEs occur on other stars that produce very energetic flares, which could have serious consequences for any close-in exoplanets without a magnetic field to deflect the influx of energetic charged particles. Since the habitable zone for M dwarfs is relatively close in to the star, any exoplanets could be expected to be partially or completely tidally locked. This would limit the intrinsic magnetic moments of the planet, meaning that any magnetosphere would likely be small. Khodachenko et al. (2007) found that for an M dwarf, the stellar wind combined with CMEs could push the magnetosphere of an Earth-like exoplanet in the habitable zone within its atmosphere, resulting in erosion of the atmosphere. Following on from this, Lammer et al. (2007) concluded that habitable exoplanets orbiting active M dwarfs would need to be larger and more massive than Earth, so that the planet could generate a stronger magnetic field and the increased gravitational pull would help prevent atmospheric loss.

A coronal mass ejection occurs when huge amounts of plasma are blown outward from the star, and the extensive flare activity on Kepler-438 makes CMEs that much more likely. With the atmosphere greatly compromised or stripped away entirely, the flares can do their work, bathing the surface in ultraviolet and X-ray radiation and a sleet of hard particles. For a time, Kepler-438b looked intriguing from an astrobiological standpoint, especially with its small radius of 1.1 times Earth’s, but it takes an optimistic assessment of the habitable zone indeed to include it in the first place, and it now appears that the chances for life here are remote.

The paper is Armstrong et al., “The Host Stars of Kepler’s Habitable Exoplanets: Superflares, Rotation and Activity,” accepted at MNRAS and available as a preprint.

November 17, 2015

Pluto and How We See It

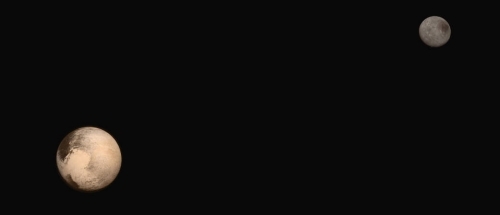

As I did after yesterday’s post, I occasionally get requests for pictures of objects in natural color, as opposed to significantly enhanced images (at various wavelengths) designed to tease out structure or detail. Here are Pluto and Charon as seen by New Horizons’ LORRI (Long Range Reconnaissance Imager), with color data supplied by the Ralph instrument. The images in this composite are from July 13 and 14 and according to JHU/APL, “…portray Pluto and Charon as an observer riding on the spacecraft would see them.”

For those interested, Jenna Garrett wrote a fine piece for WiReD last summer called What We’re Really Looking at When We Look at Pluto that goes into the instrumentation aboard New Horizons and discusses the philosophical issues separating what we see from what is really there. Let me quote briefly from this: