Paul Gilster's Blog

May 17, 2024

Seven Dyson Sphere Candidates

I’m enjoying the conversation about Project Hephaistos engendered by the article on Dyson spheres. In particular, Al Jackson and Alex Tolley have been kicking around the notion of Dyson sphere alternatives, ways of preserving a civilization that are, in Alex’s words, less ‘grabby’ and more accepting of their resource limitations. Or as Al puts it:

One would think that a civilization that can build a ‘Dyson Swarm’ for energy and natural resources would have a very advanced technology. Why then does that civilization not deploy an instrumentality more sly? Solving its energy needs in very subtle ways…

As pointed out in the article, a number of Dyson sphere searches have been mounted, but we are only now coming around to serious candidates, and at that only seven out of a vast search field. Two of these are shown in the figure below. We’re a long way from knowing what these infrared signatures actually represent, but let’s dig into the Project Hephaistos work from its latest paper in 2024 and also ponder what astronomers can do as they try to learn more.

Image: This is Figure 7 from the paper. Caption: SEDs [spectral energy distributions] of two Dyson spheres candidates and their photometric images. The SED panels include the model and data, with the dashed blue lines indicating the model without considering the emission in the infrared from the Dyson sphere and the solid black line indicating the model that includes the infrared flux from the Dyson sphere. Photometric images encompass one arcmin. All images are centered in the position of the candidates, according to Gaia DR3. All sources are clear mid-infrared emitters with no clear contaminators or signatures that indicate an obvious mid-infrared origin. The red circle marks the location of the star according to Gaia DR3. Credit: Suazo et al.

We need to consider just how much we can deduce from photometry. Measuring light from astronomical sources across different wavelengths is what photometry is about, allowing us to derive values of distance, temperature and composition. We’re also measuring the object’s luminosity, and this gets complicated in Dyson sphere terms. Just how does the photometry of a particular star change when a Dyson sphere either partially or completely encloses it? We saw previously that the latest paper from this ongoing search for evidence of astroengineering has developed its own models for this.

The model draws on earlier work from some of the co-authors of the paper we’re studying now. It treats the effect of a Dyson sphere on a star’s photometry in two parts. First, we need to model the obscuration of the star by the sphere itself. Beyond this, it’s essential to account for the re-emission of absorbed radiation at much longer wavelengths, as the megastructure – if we can call it that – gives off heat.

“[W]e model the stellar component as an obscured version of its original spectrum and the DS component as a blackbody whose brightness depends on the amount of radiation it collects,” write the authors of the 2022 paper I discussed in the last post. The modeling process is worth a post of its own, but instead I’ll send those interested to an even earlier work, a key 2014 paper from Jason Wright and colleagues, “The Ĝ Infrared Search for Extraterrestrial Civilizations with Large Energy Supplies. II. Framework, Strategy, and First Result.” The citation is at the end of the text.
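For readers who want something more concrete than that one-sentence description, here is a minimal Python sketch of the two-component idea. It is my own simplification, not the Hephaistos code: the star is treated as a pure blackbody (the papers use stellar atmosphere models), and I assume that everything the sphere intercepts, a fraction set by the hypothetical `covering` parameter, is re-radiated as waste heat at a single temperature. All function names are illustrative.

```python
import numpy as np

H = 6.62607015e-34       # Planck constant, J s
C = 2.99792458e8         # speed of light, m/s
K_B = 1.380649e-23       # Boltzmann constant, J/K
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4

def planck_lambda(wavelength_m, temp_k):
    """Blackbody spectral radiance B_lambda(T), W m^-3 sr^-1.
    Written with exp(-x) and expm1 so very large x underflows quietly to 0."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return 2 * H * C**2 / wavelength_m**5 * np.exp(-x) / -np.expm1(-x)

def unit_spectrum(wavelength_m, temp_k):
    """Blackbody shape normalised to integrate to 1 over wavelength."""
    return np.pi * planck_lambda(wavelength_m, temp_k) / (SIGMA * temp_k**4)

def star_plus_sphere(wavelength_m, t_star, t_ds, covering, l_star=1.0):
    """Return (obscured star, sphere) spectra in units of l_star per metre.
    Assumption: the star is a blackbody, and the intercepted fraction
    `covering` of its luminosity is re-emitted as a blackbody at t_ds."""
    star = (1.0 - covering) * l_star * unit_spectrum(wavelength_m, t_star)
    sphere = covering * l_star * unit_spectrum(wavelength_m, t_ds)
    return star, sphere

def integrate(y, x):
    """Trapezoidal rule, written out to avoid NumPy-version differences."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))

wl = np.logspace(-7, -3, 20000)   # 0.1 um to 1 mm
star, sphere = star_plus_sphere(wl, t_star=3000.0, t_ds=300.0, covering=0.5)
total = integrate(star + sphere, wl)
print(f"total luminosity / L_star = {total:.4f}")

i22 = np.argmin(np.abs(wl - 22e-6))   # grid point nearest 22 um
print(f"sphere/star flux ratio near 22 um: {sphere[i22] / star[i22]:.0f}")
```

Because the sphere only reprocesses starlight, the integrated luminosity stays at the stellar value; what changes is where it emerges. For a 3000 K star half-covered by a 300 K sphere, this toy model puts the sphere's flux near 22 µm a few hundred times above that of the dimmed star.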

The recently released 2024 paper from Hephaistos examined later data from Gaia (Data Release 3) while also incorporating the 2MASS and WISE photometry of some 5 million sources to create a list of stars that could potentially host a Dyson sphere. In the new paper, the authors home in on partial Dyson spheres, which would obscure only part of the star’s light and show varying effects depending on how complete they are. The waste heat generated in the mid-infrared would depend upon the degree to which the structure (or more likely, ‘swarm’) was completed as well as its effective temperature.

So we have a primary Dyson sphere signature in the form of excess heat, thermal emission that shows up at mid-infrared wavelengths, and that means we’re in an area of research that also involves other sources of such radiation. The dust in a circumstellar disk is one, heated by the light of the star and re-emitting at longer wavelengths. As we saw in the last post, all kinds of contamination are thus possible, but the data pipeline used by Project Hephaistos aims at screening out the great bulk of these.
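Wien's displacement law gives a quick feel for why the search lives in the mid-infrared. Taking a few representative sphere temperatures (illustrative values, not fits from the paper), the peak of the blackbody curve falls between roughly 4 and 30 µm, squarely in the range spanned by WISE's 3.4 to 22 µm bands:

```latex
\lambda_{\max} = \frac{b}{T}, \qquad b \approx 2898\ \mu\mathrm{m\,K}
T = 700\ \mathrm{K}: \quad \lambda_{\max} \approx 4.1\ \mu\mathrm{m}
T = 300\ \mathrm{K}: \quad \lambda_{\max} \approx 9.7\ \mu\mathrm{m}
T = 100\ \mathrm{K}: \quad \lambda_{\max} \approx 29\ \mu\mathrm{m}
```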

Seven candidates for Dyson spheres survive the filter. All seven appear to be genuine mid-infrared sources with no obvious contamination from dust or other sources. The researchers fit the data with over 6 million models spanning 391 Dyson sphere effective temperatures. They modeled Dyson spheres in temperature ranges from 100 to 700 K, with covering factors (i.e., the extent of completion of the sphere) from 0.1 to 0.9. Among many factors considered here, they’re also wary of Hα (hydrogen alpha) emission, which can flag young, still-forming stars whose surrounding material could also account for an infrared excess.
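The grid search itself is easy to picture. The sketch below is a toy version under several assumptions of my own: monochromatic band fluxes at approximate Gaia, 2MASS and WISE effective wavelengths (no filter curves), a stellar temperature that is fixed and known, 5 percent uncertainties, and a grid far coarser than the paper's. It builds synthetic "observations" from a known sphere and checks that brute-force chi-square minimization over temperature and covering factor recovers it.

```python
import numpy as np

H, C, K_B = 6.62607015e-34, 2.99792458e8, 1.380649e-23

def planck_lambda(wavelength_m, temp_k):
    """Blackbody B_lambda(T); exp(-x)/expm1 form avoids overflow warnings."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return 2 * H * C**2 / wavelength_m**5 * np.exp(-x) / -np.expm1(-x)

# Approximate effective wavelengths (um) for Gaia G, 2MASS J/H/Ks, WISE W1-W4.
# A real fit integrates over filter transmission curves; this sketch does not.
BANDS_UM = np.array([0.62, 1.25, 1.65, 2.16, 3.4, 4.6, 12.0, 22.0])

def model_fluxes(t_star, t_ds, covering):
    """Relative band fluxes: obscured blackbody star plus a sphere blackbody
    carrying the intercepted fraction `covering` of the luminosity."""
    wl = BANDS_UM * 1e-6
    star = (1 - covering) * planck_lambda(wl, t_star) / t_star**4
    sphere = covering * planck_lambda(wl, t_ds) / t_ds**4
    return star + sphere

def grid_search(observed, sigma, t_star, temps, coverings):
    """Brute-force chi-square over the grid; returns (best_T, best_cov, chi2)."""
    chi2 = np.empty((len(temps), len(coverings)))
    for i, t in enumerate(temps):
        for j, g in enumerate(coverings):
            resid = (observed - model_fluxes(t_star, t, g)) / sigma
            chi2[i, j] = np.sum(resid**2)
    i, j = np.unravel_index(np.argmin(chi2), chi2.shape)
    return temps[i], coverings[j], chi2

temps = np.arange(100.0, 701.0, 10.0)   # hypothetical 10 K grid, 100-700 K
coverings = np.arange(1, 10) / 10.0     # 0.1 to 0.9
t_star = 3200.0                         # assumed M-dwarf temperature, held fixed
observed = model_fluxes(t_star, temps[20], coverings[3])   # truth: 300 K, 0.4
sigma = 0.05 * observed                 # assumed 5 percent uncertainties

best_t, best_g, chi2 = grid_search(observed, sigma, t_star, temps, coverings)
print(best_t, best_g)
```

With noise-free synthetic data the minimum lands exactly on the input (300 K, covering factor 0.4). With real, noisy WISE photometry the chi-square surface can be far less sharply defined, which fits the authors' caution that photometry alone can only show a source to be consistent with a Dyson sphere.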

Image: IC 2118, a giant cloud of gas and dust also known as the Witch Head Nebula. H-alpha emissions, which are observed over most of the Orion constellation, are shown in red. This H-alpha image was taken by the MDW Survey, a high-resolution astronomical survey of the entire night sky not affiliated with Project Hephaistos. I’m showing it to illustrate how pervasive and misleading Hα can be in a Dyson sphere search. Credit: Columbia University.

I want to be precise about what the authors are saying in this paper: “…we identified seven sources displaying mid-infrared flux excess of uncertain origin.” They are not, contra some sensational reports, saying they found Dyson spheres. These are candidates. But let’s dig in a bit, because the case is intriguing. From the paper:

Various processes involving circumstellar material surrounding a star, such as binary interactions, pre-main sequence stars, and warm debris disks, can contribute to the observed mid-infrared excess (e.g. Cotten & Song 2016). Kennedy & Wyatt (2013) estimates the occurrence rate of warm, bright dust. The occurrence rate is 1 over 100 for very young sources, whereas it becomes 1 over 10,000 for old systems (> 1 Gyr). However, the results of our variability check suggest that our sources are not young stars.

Are the candidate objects surrounded by warm debris disks? What’s interesting here is that all seven of these are M-class stars, and as the authors note, M-dwarf debris disks are quite rare, with only a few confirmed. Why this should be so is the object of continuing study, but both the temperature and luminosity of the candidate objects differ from those of typical debris disks. The questions deepen and multiply:

Extreme Debris Disks (EDD) (Balog et al. 2009), are examples of mid-infrared sources with high fractional luminosities (f > 0.01) that have higher temperatures compared to that of standard debris disks (Moór et al. 2021). Nevertheless, these sources have never been observed in connection with M dwarfs. Are our candidates’ strange young stars whose flux does not vary with time? Are these stars M-dwarf debris disks with an extreme fractional luminosity? Or something completely different?
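For a sense of scale, here is a back-of-envelope comparison under the simple energy balance in which a sphere with covering factor γ re-radiates the fraction γ of the stellar luminosity and lets the rest through. Treating that waste heat as if it were a dust excess and referring it to the observed (dimmed) star gives a fractional luminosity

```latex
f \equiv \frac{L_{\mathrm{IR}}}{L_{\star,\mathrm{obs}}} = \frac{\gamma L_\star}{(1-\gamma)\,L_\star} = \frac{\gamma}{1-\gamma}
\gamma = 0.1 \;\Rightarrow\; f \approx 0.11, \qquad \gamma = 0.5 \;\Rightarrow\; f = 1, \qquad \gamma = 0.9 \;\Rightarrow\; f = 9
```

If f is instead referred to the unobscured stellar luminosity, f = γ. Either way, covering factors of 0.1 to 0.9 imply f of at least 0.1, an order of magnitude beyond the f > 0.01 that already marks a disk as extreme.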

The authors probe the possibilities. They consider chance alignments with distant infrared sources, and offsets in the astrometry when incorporating the WISE data. There is plenty to investigate here, and the paper suggests optical spectroscopy as a way of refuting false debris disks around M-dwarfs, which could help sort among the seven objects identified here. Stellar rotation, age and magnetic activity may also be factors that will need to be probed. But when all is said and done, we wind up with this:

…analyzing the spectral region around Hα can help us ultimately discard or verify the presence of young disks by analyzing the potential Hα emission. Spectroscopy in the MIR [mid-infrared] region would be very valuable when determining whether the emission corresponds to a single blackbody, as we assumed in our models. Additionally, spectroscopy can help us determine the real spectral type of our candidates and ultimately reject the presence of confounders.

So the hunt for Dyson spheres proceeds. Various pieces need to fall into place to make the case still more compelling, and we should remember that “The MIR data quality for these objects is typically quite low, and additional data is required to determine their nature.” This layman’s guess – and I am not qualified to do anything more than guess – is that rather than Dyson spheres we are glimpsing interesting astrophysics regarding M-dwarfs that this investigation will advance. In any case, do keep in mind that among some five million sources, only seven show compatibility with the Dyson sphere model.

If Dyson spheres are out there, they’re vanishingly rare. But finding just one would change everything.

The paper on Dyson sphere modeling is Wright et al., “The Ĝ Infrared Search for Extraterrestrial Civilizations with Large Energy Supplies. II. Framework, Strategy, and First Result,” The Astrophysical Journal Vol. 792, Issue 1 (September, 2014), id 27 (abstract). The 2022 paper from Project Hephaistos is Suazo et al., “Project Hephaistos – I. Upper limits on partial Dyson spheres in the Milky Way,” MNRAS Vol. 512, Issue 2 (May 2022), 2988-3000 (abstract / preprint). The 2024 paper is Suazo et al., “Project Hephaistos – II. Dyson sphere candidates from Gaia DR3, 2MASS, and WISE,” MNRAS (6 May 2024), stae1186 (abstract / preprint).

May 15, 2024

Project Hephaistos and the Hunt for Astroengineering

For a project looking for the signature of an advanced extraterrestrial civilization, the name Hephaistos is an unusually apt choice. And indeed the leaders of Project Hephaistos, based at Uppsala University in Sweden, are quick to point out that the Greek god (known as Vulcan in Roman times) was a sort of preternatural blacksmith, thrown off Mt. Olympus for variously recounted transgressions and lame from the fall, a weapons maker and craftsman known for his artifice. Consider him the gods’ technologist.

Who better to choose for a project that pushes SETI not just throughout the Milky Way but to myriads of galaxies beyond? Going deep and far is a sensible move considering that we have absolutely no information about how common life is beyond our own Earth, if it exists at all. If extraterrestrial civilizations are scarce in any given galaxy, then a survey looking for evidence of Hephaistos-style engineering writ large should comb through existing observational data from our own galaxy but also consider what lies beyond. That is why Project Hephaistos’s first paper (2015) searched for what the authors called ‘Dysonian astroengineering’ in over 1000 spiral galaxies.

More recent papers have stayed within the Milky Way, incorporating data from Gaia, the 2 Micron All Sky Survey (2MASS) and the accumulated offerings of the Wide-field Infrared Survey Explorer, which now operates as NEOWISE. They analyze the observational signatures of Dyson spheres in the process of construction and set upper limits on the prevalence of such spheres-in-the-making in the Milky Way. Such objects could present anomalously low optical brightness yet high mid-infrared flux. This is the basic method for searching for Dyson spheres: identify the signature of waste heat while screening out young stellar objects and other sources that can mimic it.

This article is occasioned by the release of a new paper, one that homes in on Dyson sphere candidates now identified. And it prompts reflection on the nature of the enterprise. Key to the concept is the idea that any flourishing (and highly advanced) extraterrestrial civilization will need to find sources of energy to meet its growing needs. An obvious source is a star, whose output could be captured by a sphere of power-harvesting satellites. Dyson presented the notion in a 1960 paper in Science whose title explains how a search could be conducted: “Search for Artificial Stellar Sources of Infrared Radiation.” In other words, comb the skies for infrared anomalies.

I strongly favor this ‘Dysonian’ approach to SETI, which makes no assumptions at all about any decision to communicate. As we have no possible idea of the values that would drive an alien culture to attempt to talk to us – or for that matter to any other civilizations – why not add to the search space the things that we can detect in other ways? However it is constructed, a Dyson sphere should produce waste heat as it obscures the light from the central star. Infrared searches could detect a star that is strangely dim yet radiant at infrared wavelengths, and as such a ‘megastructure’ evolves, we might also see its brightness change over relatively short timeframes.

Funding plays into our science in inescapable ways, so the fact that Dysonian SETI can be conducted using existing data is welcome. It’s also helpful that in-depth studies of particular Dyson sphere candidates may prove useful for nailing down astrophysical properties that interest the entire community, especially since there is the possibility of ‘feedback’ mechanisms on the star from any surrounding sphere of technology. We go looking for extraterrestrial megastructures but even if we don’t find them, we produce good science on unusual stellar properties and refine our observational technique. Not a bad way forward even as the traditional SETI effort in radio and optics continues.

The number of searches for individual Dyson spheres is surprisingly large, and to my knowledge extends back at least as far as 1985, when Russian radio astronomer Vyacheslav Ivanovich Slysh searched using data from the Infrared Astronomical Satellite (IRAS) mission, as did (at a later date) M. Y. Timofeev, collaborating with Nikolai Kardashev. Richard Carrigan, a scientist emeritus at the Fermi National Accelerator Laboratory, looked for Dyson signatures out to 300 parsecs.

But we can go earlier still. Carl Sagan was pondering “The Infrared Detectability of Dyson Civilizations” (a paper in The Astrophysical Journal) back in the 1960s. In more recent times, the Glimpsing Heat from Alien Technologies effort at Pennsylvania State University (G-HAT) has been particularly prominent. What becomes staggering is the realization that the target list has grown so vast as our technologies have improved. Note this, from a Project Hephaistos paper in 2022 (citation below):

Most search efforts have aimed for individual complete Dyson spheres, employing far-infrared photometry (e.g., Slysh 1985; Jugaku & Nishimura 1991; Timofeev et al. 2000; Carrigan 2009) from the Infrared Astronomical Satellite (IRAS: Neugebauer et al. 1984), while a few considered partial Dyson spheres (e.g., Jugaku & Nishimura 2004). IRAS scanned the sky in the far infrared, providing data of ≈ 2.5 × 10⁵ point sources. However, nowadays, we rely on photometric surveys covering optical, near-infrared, and mid-infrared wavelengths that reach object counts of up to ∼10⁹ targets and allow for larger search programs.

The Project Hephaistos work in the 2022 paper homed in on producing upper limits for partial Dyson spheres in the Milky Way by searching Gaia DR2 data and WISE results that showed infrared excess, looking at more than 10⁸ stars. We still have no Dyson sphere confirmations, but the new Hephaistos paper adds 2MASS data and moves to Gaia Data Release 3, which aids in the rejection of false positives. Gaia also adds to the mix its unique capabilities at parallax, which the authors describe thus:

…Gaia also provides parallax-based distances, which allow the spectral energy distributions of the targets to be converted to an absolute luminosity scale. The parallax data also make it possible to reject other pointlike sources of strong mid-infrared radiation such as quasars, but do not rule out stars with a quasar in the background.
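The parallax step is simple enough to sketch. This minimal Python illustration of the conversion the passage describes uses naive inverse-parallax distances; it ignores extinction, the Gaia parallax zero-point and the statistical subtleties of inverting noisy parallaxes, all of which a real analysis has to handle. The function names are hypothetical.

```python
import math

PARSEC_M = 3.0856775814913673e16   # metres per parsec

def distance_pc(parallax_mas):
    """Naive inverse-parallax distance in parsecs (parallax in milliarcseconds)."""
    if parallax_mas <= 0:
        raise ValueError("non-positive parallax: inverse-parallax distance undefined")
    return 1000.0 / parallax_mas

def absolute_magnitude(apparent_mag, parallax_mas):
    """Distance modulus: M = m - 5 log10(d / 10 pc)."""
    return apparent_mag - 5.0 * math.log10(distance_pc(parallax_mas) / 10.0)

def luminosity_w(bolometric_flux_w_m2, parallax_mas):
    """L = 4 pi d^2 F, assuming the source radiates isotropically."""
    d_m = distance_pc(parallax_mas) * PARSEC_M
    return 4.0 * math.pi * d_m**2 * bolometric_flux_w_m2

print(distance_pc(10.0))                # a 10 mas parallax means 100 pc
print(absolute_magnitude(12.0, 10.0))   # m = 12 at 100 pc gives M = 7
```

A background quasar, by contrast, should show a parallax consistent with zero, which is why the sketch refuses non-positive values rather than returning a meaningless distance.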

Notable in the new 2024 paper is its description of the data pipeline focusing on separating Dyson sphere candidates from natural sources including circumstellar dust. The authors make the case that it is all but impossible to prove the existence of a Dyson sphere based solely on photometric data, so what is essentially happening is a search for sources showing excess infrared that are consistent with the Dyson sphere hypothesis. The data pipeline runs from data collection through a grid search methodology, image classification for filtering out young stars obscured by dust or associated with dusty nebulae, inspection of the signal to noise ratio, further analysis of the infrared excess and visual inspection of all the sources to reject possible contamination.
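Structurally, a pipeline like this is a chain of filters, each discarding sources that fail one test. The sketch below shows only that shape. The `Source` fields, the representation of stages as predicates, and the thresholds (3 for both signal to noise and excess significance) are hypothetical placeholders, not the values or methods used by Suazo et al.; in the real pipeline each stage is a substantial analysis in its own right.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class Source:
    """Hypothetical per-source summary; field names are illustrative only."""
    name: str
    fit_ok: bool          # SED consistent with a star + Dyson sphere model on the grid
    image_class: str      # e.g. "clean", "yso", "nebula" from image classification
    snr_w3: float         # signal to noise in WISE W3
    snr_w4: float         # signal to noise in WISE W4
    excess_sigma: float   # significance of the mid-infrared excess
    visual_ok: bool       # passes visual inspection (no blends or irregulars)

# Stages in the order described in the text; thresholds are placeholders.
STAGES: List[Tuple[str, Callable[[Source], bool]]] = [
    ("grid search", lambda s: s.fit_ok),
    ("image classification", lambda s: s.image_class == "clean"),
    ("signal to noise", lambda s: min(s.snr_w3, s.snr_w4) >= 3.0),
    ("infrared excess", lambda s: s.excess_sigma >= 3.0),
    ("visual inspection", lambda s: s.visual_ok),
]

def run_pipeline(sources):
    """Apply each stage in turn, recording how many sources survive it."""
    survivors = list(sources)
    counts = []
    for label, keep in STAGES:
        survivors = [s for s in survivors if keep(s)]
        counts.append((label, len(survivors)))
    return survivors, counts

catalog = [
    Source("A", True, "clean", 8.0, 5.0, 6.0, True),
    Source("B", True, "yso", 9.0, 7.0, 8.0, True),     # dusty young star
    Source("C", True, "clean", 4.0, 1.5, 5.0, True),   # too faint in W4
    Source("D", True, "clean", 6.0, 4.0, 4.0, False),  # blend found by eye
    Source("E", False, "clean", 7.0, 6.0, 9.0, True),  # no acceptable model fit
]
survivors, counts = run_pipeline(catalog)
print([s.name for s in survivors])
for label, n in counts:
    print(f"{label}: {n}")
```

Keeping a count of survivors at each stage is a cheap way to see which test does the heavy lifting; in the paper, roughly five million starting sources are whittled down to seven.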

This gets tricky indeed. Have a look at some of the ‘confounders,’ as the authors call them. The figure shows three categories of confounders: blends, irregular structures and nebular features. In blends, the target is contaminated by external sources within the WISE coverage. The nebular category is a hazy and disordered false positive without a discernible source of infrared at the target’s location. Irregulars are sources without indication of nebulosity whose exact nature cannot be determined. All of these sources would be considered unreliable at the conclusion of the pipeline:

Image: This is Figure 5 from the paper. Caption: Examples of typical confounders in our search. The top row features a source from the blends category, the middle row a source embedded in a nebular region, and the bottom row a case from the irregular category. On these scales, the irregular and nebular cases cannot be distinguished, but the nebular nature can be established by inspecting the images at larger scales. Credit: Suazo et al.

In the next post, I want to take a look at the results, which involve seven interesting candidates, all of them around a type of star I wouldn’t normally think of in Dyson sphere terms. The papers are Suazo et al., “Project Hephaistos – I. Upper limits on partial Dyson spheres in the Milky Way,” MNRAS Vol. 512, Issue 2 (May 2022), 2988-3000 (abstract / preprint) and Suazo et al., “Project Hephaistos – II. Dyson sphere candidates from Gaia DR3, 2MASS, and WISE,” MNRAS (6 May 2024), stae1186 (abstract / preprint).

May 3, 2024

GDEM: Mission of Gravity

If space is infused with ‘dark energy,’ as seems to be the case, we have an explanation for the continuing acceleration of the universe’s expansion. Or to speak more accurately, we have a value we can plug into our equations to make this acceleration happen. Exactly what causes that value remains up for grabs, and indeed frustrates current cosmology, for something close to 70 percent of the total mass-energy of the universe needs to consist of dark energy to make all this work. Add on the mystery of ‘dark matter’ and we actually see only some 4 percent of the cosmos.

So there’s a lot out there we know very little about, and I’m interested in mission concepts that seek to probe these areas. The conundrum is fundamental, for as a 2017 study from NASA’s Innovative Advanced Concepts office tells me, “…a straightforward argument from quantum field theory suggests that the dark energy density should be tens of orders of magnitude larger than what is observed.” Thus we have what is known as the cosmological constant problem: the observed value departs enormously from theoretical expectation, a mismatch that may well be pointing to new physics.

The report in question comes from Nan Yu (Jet Propulsion Laboratory) and collaborators, a Phase I effort titled “Direct probe of dark energy interactions with a Solar System laboratory.” It lays out a concept called the Gravity Probe and Dark Energy Detection Mission (GDEM) that would involve a tetrahedral constellation of four spacecraft, a configuration that allows gravitational force measurements to be taken in four simultaneous directions. These craft would operate about 1 AU from the Sun while looking for traces of a field that could explain the dark energy conundrum.

Now JPL’s Slava Turyshev has published a new paper which is part of a NIAC Phase II study advancing the GDEM concept. I’m drawing on the Turyshev paper as well as the initial NIAC study; the collaboration is now finalizing its Phase II report. Let’s begin with a quote from the Turyshev paper on where we stand today, one that points to critical technologies for GDEM:

Recent interest in dark matter and dark energy detection has shifted towards the use of experimental search (as opposed to observational), particularly those enabled by atom interferometers (AI), as they offer a complementary approach to conventional methods. Situated in space, these interferometers utilize ultra-cold, falling atoms to measure differential forces along two separate paths, serving both as highly sensitive accelerometers and as potential dark matter detectors.

Even armed with such technology, we face an immense challenge. The key assumption is that the cosmological constant problem will be resolved through the detection of light scalar fields that couple to normal matter. Like temperature, a scalar field has no direction but only a magnitude at each point in space. This field, assuming it exists, would have to be very light (that is, have a very low mass) and couple to the particles of the Standard Model of physics with about the same strength as gravity. Were we to identify such a field, we would move into the realm of a so-called ‘fifth force,’ to be added to gravity, the strong nuclear force, the weak nuclear force and electromagnetism.

Can we explain dark energy by attempting to modify General Relativity? Consider that its effects are not detectable with current experiments, meaning that if dark energy is out there, its traces are suppressed on the scale of the Solar System. If they were not, the remarkable success scientists have had at validating GR would not have happened. A workable theory, then, demands a way to make the interaction between a dark energy field and normal matter dependent on where it occurs. The term being used for this is screening.

We’re getting deep into the woods here, and could go further still with an examination of the various screening mechanisms discussed in the Turyshev paper, but the overall implication is that the coupling between matter and dark energy could change in regions where the density of matter is high, accounting for our lack of detection. GDEM is a mission designed to detect that coupling using the Solar System as a laboratory. In Newtonian physics, the gravitational gradient tensor (CGT), which governs how the gravitational force changes in space, has a trace of zero in empty space, that is, in regions devoid of mass. That’s a finding consistent with General Relativity.

But in the presence of a dark energy field, the CGT trace would be nonzero. The discovery of such a departure from our conventional view of gravity would be revolutionary, and would support theories deviating from General Relativity.
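The zero-trace statement is Laplace's equation in disguise. Writing the gradient tensor as second derivatives of the Newtonian potential Φ (notation mine, not necessarily the paper's), its trace is the Laplacian, which Poisson's equation ties to the local mass density ρ. For the Sun's field at any point outside the Sun:

```latex
\Gamma_{ij} \equiv \frac{\partial^2 \Phi}{\partial x_i \,\partial x_j}, \qquad
\operatorname{tr}\Gamma = \nabla^2 \Phi = 4\pi G \rho
\Phi = -\frac{GM}{r} \;\Rightarrow\;
\Gamma_{ij} = \frac{GM}{r^5}\left(r^2 \delta_{ij} - 3 x_i x_j\right), \qquad
\operatorname{tr}\Gamma = \frac{GM}{r^5}\left(3r^2 - 3r^2\right) = 0 \quad (r \neq 0)
```

Any additional contribution whose own Laplacian does not vanish in empty space would leave a residual trace, and that residual is the kind of signal GDEM is designed to look for; the form such a term would take depends on the screening model.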

Image: Illustration of the proposed mission concept – a tetrahedral constellation of spacecraft carrying atomic drag-free reference sensors flying in the Solar system through special regions of interest. Differential force measurements are performed among all pairs of spacecraft to detect a non-zero trace value of the local field force gradient tensor. A detection of a non-zero trace, and its modulations through space, signifies the existence of a new force field of dark energy as a scalar field and shines light on the nature of dark energy. Credit: Nan Yu.

The constellation of satellites envisioned for the GDEM experiment would fly in close formation, separated by several thousand kilometers in heliocentric orbits. They would use high-precision laser ranging systems and atom-wave interferometry, measuring tiny changes in motion and gravitational forces, to search for spatial changes in the gravitational gradient, theoretically isolating any new force field signature. The projected use of atom interferometers here is vital, as noted in the Turyshev paper:

GDEM relies on AI to enable drag-free operations for spacecraft within a tetrahedron formation. We assume that AI can effectively measure and compensate non-gravitational forces, such as solar radiation pressure, outgassing, gas leaks, and dynamic disturbances caused by gimbal operations, as these spacecraft navigate their heliocentric orbits. We assume that AI can compensate for local non-gravitational disturbances at an extremely precise level…

From the NIAC Phase I report, I find this:

The trace measurement is insensitive to the much stronger gravity field which satisfies the inverse square law and thus is traceless. Atomic test masses and atom interferometer measurement techniques are used as precise drag-free inertial references while laser ranging interferometers are employed to connect among atom interferometer pairs in spacecraft for the differential gradient force measurements.

In other words, the technology should be able to detect the dark energy signature while nulling out local gravitational influences. The Turyshev paper develops the mathematics of such a constellation of satellites, noting that elliptical orbits offer a sampling of signals at various heliocentric distances, which improves the likelihood of detection. Turyshev’s team developed simulation software that models the tetrahedral spacecraft configuration while calculating the trace value of the CGT. This modeling along with the accompanying analysis of spacecraft dynamics demonstrates that the needed gravitational observations are within range of near-term technology.
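Here is a toy version of the measurement idea in Python. It is not Turyshev's simulation software. It assumes a single instantaneous snapshot of a regular tetrahedron centred 1 AU from the Sun, Sun-only Newtonian gravity, perfect along-link differential acceleration measurements, and a hypothetical extra acceleration field with uniform divergence standing in for a dark energy signal. Each of the six links measures one projection of the symmetric gradient tensor, and six links are just enough to solve for its six independent components.

```python
import itertools
import numpy as np

GM_SUN = 1.32712440018e20   # m^3 s^-2
AU = 1.495978707e11         # m

def newtonian_accel(x):
    """Sun-only Newtonian acceleration at position x (metres, Sun at origin)."""
    return -GM_SUN * x / np.linalg.norm(x) ** 3

def hypothetical_extra_accel(x, beta):
    """HYPOTHETICAL extra field whose divergence is beta everywhere (s^-2).
    Purely illustrative; not a screened dark-energy model from the paper."""
    return (beta / 3.0) * x

def trace_from_links(positions, accel):
    """Estimate the trace of the acceleration-gradient tensor G from the six
    links. Each link gives n.(a_j - a_i)/L ~ n^T G n, one linear equation in
    the six independent components of the symmetric tensor."""
    rows, values = [], []
    for i, j in itertools.combinations(range(4), 2):
        d = positions[j] - positions[i]
        length = np.linalg.norm(d)
        n = d / length
        rows.append([n[0]**2, n[1]**2, n[2]**2,
                     2 * n[0] * n[1], 2 * n[0] * n[2], 2 * n[1] * n[2]])
        values.append(np.dot(accel(positions[j]) - accel(positions[i]), n) / length)
    gxx, gyy, gzz, _, _, _ = np.linalg.solve(np.array(rows), np.array(values))
    return gxx + gyy + gzz

center = np.array([AU, 0.0, 0.0])   # formation centred 1 AU from the Sun
s = 1.0e6                           # edge length 2*sqrt(2)*s, roughly 2,800 km
corners = np.array([[1, 1, 1], [1, -1, -1], [-1, 1, -1], [-1, -1, 1]], dtype=float)
positions = center + s * corners

scale = GM_SUN / AU**3              # typical size of individual tensor components
beta = 1e-3 * scale                 # hypothetical anomaly, 1000x weaker than that

newton_only = trace_from_links(positions, newtonian_accel)
with_extra = trace_from_links(
    positions, lambda x: newtonian_accel(x) + hypothetical_extra_accel(x, beta))
print(f"Newtonian trace / scale: {newton_only / scale:.1e}")
print(f"recovered beta / true beta: {with_extra / beta:.6f}")
```

In the pure Newtonian case the recovered trace is tiny compared with the individual tensor components (of order GM/r³, about 4 × 10⁻¹⁴ s⁻² at 1 AU), while the hypothetical term comes back essentially at its input value even though it is a thousand times weaker than those components. That cancellation of the inverse-square field is the point the NIAC report makes about the trace being insensitive to ordinary gravity.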

Turyshev sums up the current state of the GDEM concept this way:

…the Gravity Probe and Dark Energy Detection Mission (GDEM) mission is undeniably ambitious, yet our analysis underscores its feasibility within the scope of present and emerging technologies. In fact, the key technologies required for GDEM, including precision laser ranging systems, atom-wave interferometers, and Sagnac interferometers, either already exist or are in active development, promising a high degree of technical readiness and reliability. A significant scientific driver for the GDEM lies in the potential to unveil non-Einsteinian gravitational physics within our solar system—a discovery that would compel a reassessment of prevailing gravitational paradigms. If realized, this mission would not only shed light on the nature of dark energy but also provide critical data for testing modern relativistic gravity theories.

So we have a mission concept that could detect dark energy within our Solar System by measuring deviations from the classic Newtonian gravitational field. And GDEM is hardly alone as scientists work to establish the nature of dark energy. This is an area that has fostered astronomical surveys as well as mission concepts, including the Nancy Grace Roman Space Telescope, the European Space Agency’s Euclid, the Vera Rubin Observatory and the DESI collaboration (Dark Energy Spectroscopic Instrument). GDEM extends and complements these efforts as a direct probe of dark energy which could further our understanding after the completion of these surveys.

There is plenty of work here to bring a GDEM mission to fruition. As Turyshev notes in the paper: “Currently, there is no single model, including the cosmological constant, that is consistent with all astrophysical observations and known fundamental physics laws.” So we need continuing work on these dark energy scalar field models. From the standpoint of hardware, the paper cites challenges in laser linking for spacecraft attitude control in formation, maturation of high-sensitivity atom interferometers and laser ranging with one part in 10¹⁴ absolute accuracy. Identifying such technology gaps in light of GDEM requirements is the purpose of the Phase II study.
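To put the ranging requirement in perspective, suppose it refers to fractional accuracy on a link about 3,000 km long, in line with the several-thousand-kilometer separations described for the constellation (an illustrative choice, not a figure from the paper):

```latex
\delta L \approx 10^{-14} \times L = 10^{-14} \times 3 \times 10^{6}\ \mathrm{m} = 3 \times 10^{-8}\ \mathrm{m} = 30\ \mathrm{nm}
```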

As I read this, the surveys currently planned should help us home in on dark energy’s effects on the largest scales, but its fundamental nature will need investigation through missions like GDEM, which would open up the next phase of dark energy research. The beauty of the GDEM concept is that it does not depend upon a single model, and the NIAC Phase I report notes that it can be used to test any modified gravity models that could be detected in the Solar System. As to size and cost, this looks like a Large Strategic Science Mission, what NASA used to refer to as a Flagship mission, about what we might expect from an effort to answer a question this fundamental to physics.

The paper is Turyshev et al., “Searching for new physics in the solar system with tetrahedral spacecraft formations,” Phys. Rev. D 109 (25 April 2024), 084059 (abstract / preprint).

April 26, 2024

ACS3: Refining Sail Deployment

Rocket Lab, a launch service provider based in Long Beach CA, launched a rideshare payload on April 23 from its launch complex in New Zealand. I’ve been tracking that launch because aboard the Electron rocket was an experimental solar sail that NASA is developing to study boom deployment. This is important stuff, because the lightweight materials we need to maximize payload and performance are evolving, and so are boom deployment methods. Hence the Advanced Composite Solar Sail System (ACS3), created to test composites and demonstrate new deployment methods.

The thing about sails is that they are extremely scalable. In fact, it’s remarkable how many different sizes and shapes of sails we’ve discussed in these pages, ranging from Jordin Kare’s ‘nanosails’ to the small sails envisioned by Breakthrough Starshot that are just a couple of meters on a side, and on up to the behemoth imaginings of Robert Forward, designed to take a massive starship with human crew to Barnard’s Star and other targets. Sail strategies thus range from using sails as propulsive projectiles (Kare) to full-blown interstellar photon-catchers for high-speed star travel.

With ACS3, we’re at the lower end of the size spectrum and digging into such fundamental matters as composite materials and boom deployment engineering. Entertainingly, the Electron launch vehicle was named ‘Beginning of the Swarm,’ doubtless a nod to the primary payload, which is a South Korean imaging satellite that will be complemented by 10 similar craft in coming years. But I also like to think that ‘swarms’ of small solar sails like the twelve-unit (12U) CubeSat used for ACS3 will eventually offer options not only for near-Earth but also outer system observation and exploration. But first, we have to nail down those tricky deployment issues. Keats Wilkie is ACS3 principal investigator at NASA Langley in Hampton, Virginia:

“Booms have tended to be either heavy and metallic or made of lightweight composite with a bulky design – neither of which work well for today’s small spacecraft. Solar sails need very large, stable, and lightweight booms that can fold down compactly. This sail’s booms are tube-shaped and can be squashed flat and rolled like a tape measure into a small package while offering all the advantages of composite materials, like less bending and flexing during temperature changes.”

Image: On 24 April 2024, Rocket Lab launched the ACS3 & NeonSat-1 missions from Onenui Station (Mahia Peninsula), New Zealand. In this image, engineers at NASA’s Langley Research Center test deployment of the Advanced Composite Solar Sail System’s solar sail. The unfurled solar sail is approximately 30 feet (about 9 meters) on a side. Credit: NASA Ames.

ACS3 reached its final orbit a little less than two hours after liftoff, following the earlier deployment of the South Korean NEONSAT-1; a kick stage then changed orbit for this second deployment. The craft is now roughly 1000 kilometers up, and if everything goes well, full deployment of the composite booms spanning the diagonals of the sail will give us an 80 square meter sail as bright as Sirius in the night sky. Digital cameras onboard should provide imagery of the sail before and during deployment. There are no signs of sail deployment yet, but the satellite is being observed at numerous sites.
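For a rough sense of what an 80 square meter sail can do, here is the textbook radiation-pressure estimate in Python. It assumes a perfectly reflecting flat sail and a solar constant of about 1361 W/m² at 1 AU; real sail films reflect imperfectly and billow, so actual thrust is lower, and in a 1000-kilometer orbit atmospheric drag and Earth's shadow matter too. None of this is ACS3 mission data.

```python
import math

SOLAR_CONSTANT = 1361.0   # W/m^2 at 1 AU (approximate)
C = 2.99792458e8          # speed of light, m/s

def ideal_sail_force(area_m2, distance_au=1.0, incidence_deg=0.0):
    """Radiation-pressure force (N) on a perfectly reflecting flat sail.

    F = 2 * S * A * cos^2(theta) / c, with the flux S falling off as 1/r^2.
    Assumes perfect specular reflection; real sails fall short of this."""
    s = SOLAR_CONSTANT / distance_au**2
    theta = math.radians(incidence_deg)
    return 2.0 * s * area_m2 * math.cos(theta) ** 2 / C

print(f"{ideal_sail_force(80.0) * 1e3:.2f} mN")
```

At normal incidence this comes to roughly 0.7 millinewtons, and tilting the sail by 45 degrees halves it, which is the trade-off a sail has to manage when it steers.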

Still nothing from ACS3 on 2268 MHz, but an absolute rainbow of 2k4 packets on 401.5 via 2.5-turn helical omni! Decoded as 'Light-1' frames in GNU Radio / gr-satellites + upload to SatNogs. Fun pass from 1000 Km ALT whether S-Band makes an appearance or not pic.twitter.com/LDlfjm2Gkq

— Scott Chapman (@scott23192) April 25, 2024

The polymer from which the composite booms are made is reinforced with carbon fiber and flexible enough to allow it to be rolled for compact storage. According to Alan Rhodes, lead systems engineer for the mission at NASA Ames, seven meters of deployable booms can roll up into a shape that fits into the hand. Note too that these booms are 75 percent lighter than previous metallic deployable booms and should experience far less in-space thermal distortion during flight. A new tape-spool boom extraction system is being tested which will, engineers hope, minimize the possibility of the coiled booms jamming during the deployment. We shall see.

Animation: Deployment of the ACS3 sail. Credit: NASA Ames.

We’re getting pretty good at miniaturization, as shown by the fact that the 12-unit CubeSat carrying ACS3 into orbit measures roughly 23 centimeters by 34 centimeters, about the size of the microwave oven sitting on my kitchen counter. Refining the material and structure of the booms is another step toward lower-cost missions which we can eventually hope to deploy in networked swarms. Imagine a constellation of exploratory craft bound for targets like the ice giants. Larger sails using these technologies may eventually fly the kind of ‘sundiver’ missions we’ve often discussed here, deploying at perihelion for maximum thrust to deep space.

April 25, 2024

PLEASE UPDATE THE RSS FEED

The RSS feed URL you're currently using https://follow.it/centauri-dreams-imagining-and-planning-interstellar-exploration will stop working shortly. Please add /rss at the end of the URL, so that the URL will be https://follow.it/centauri-dreams-imagining-and-planning-interstellar-exploration/rss

April 24, 2024

Voyager 1: A Splendid Fix

Although it’s been quite some time since I’ve written about Voyager, our two interstellar craft (and this is indeed what they are at present, the first to return data from beyond the heliosphere) are never far from my mind. That has been the case since 1989, when I stayed up all night for the Neptune encounter and was haunted by the idea that we were saying goodbye to these doughty travelers. Talk about naivete! Had I known then as many people in this business as I do now, I would have realized just how resilient they were, and how focused on keeping good science going from deep space.

Not to mention how resilient and well-built the craft they control are. Thirty-five years have passed since the night of that encounter (I still have VCR tape from it on my shelf), and the Voyagers are still ticking. This despite the recent issues with data return from Voyager 1 that for a time seemed to threaten an earlier than expected end to the mission. We all know that it won’t be all that long before both craft succumb to power loss anyway. Decay of the plutonium-238 that fuels their radioisotope thermoelectric generators (RTGs) means they will be unable to summon up the heat needed for continued operation. We may see this regrettable point reached as soon as next year.
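The fuel side of that decline follows simple exponential decay. Here is a minimal sketch, assuming a Pu-238 half-life of about 87.7 years and counting only the fuel; the real electrical output falls faster than this curve because the thermocouples that convert heat into electricity also degrade with age.

```python
# Fraction of the original plutonium-238 decay heat remaining after t years.
# Assumption: half-life of about 87.7 years. Thermocouple degradation, which
# makes the real electrical output fall faster, is deliberately ignored here.
PU238_HALF_LIFE_YEARS = 87.7


def fuel_heat_fraction(years: float) -> float:
    """Exponential decay: N(t)/N0 = 2 ** (-t / half_life)."""
    return 0.5 ** (years / PU238_HALF_LIFE_YEARS)


if __name__ == "__main__":
    # Voyager 1 launched in 1977; by 2024 roughly 46 to 47 years have elapsed.
    for years in (0, 35, 46, 47):
        print(f"{years:>3} years: {fuel_heat_fraction(years):.3f} of initial heat")
```

After about 46 years the fuel alone still supplies roughly 70 percent of its launch-day heat, which shows that fuel decay is only part of the power story.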

But it’s been fascinating to watch over the years how the Voyager interstellar team manages the issue, shutting down specific instruments to conserve power. The recent glitch got everyone’s attention in November of 2023, when Voyager 1 stopped sending its normal science and engineering data back to Earth. The spacecraft was still receiving commands, but it took considerable investigation to figure out that the flight data subsystem (FDS) aboard Voyager 1, which packages and relays scientific and engineering data from the craft for transmission, was causing the problem.

What a complex and fascinating realm long-distance repair is. I naturally think back to Galileo, the Jupiter-bound mission whose high-gain antenna could not be properly deployed, and whose data return was saved by the canny use of the low-gain antenna and a revised set of parameters for sending and acquiring the information. Thus we got Galileo’s Europa imagery, among much else, which remains current and will be complemented by Europa Clipper data by the start of the next decade. The farther into space we go, the more complicated repair becomes, an issue that will force a high level of autonomy on our probes as we push well past the Kuiper Belt and one day to the Oort Cloud.

Image: I suppose we all have heroes, and these are some of mine. After receiving data about the health and status of Voyager 1 for the first time in five months, members of the Voyager flight team celebrate in a conference room at NASA’s Jet Propulsion Laboratory on April 20. Credit: NASA/JPL-Caltech.

In the case of Voyager 1, the problem was traced to the aforesaid flight data subsystem, which essentially hands the data off to the telemetry modulation unit (TMU) and radio transmitter. Bear in mind that all of this is 1970s-era technology still operational and fixable, which reminds us not only of the quality of the original workmanship, but also of the capability we are developing to ensure missions lasting decades or even centuries can continue to operate. The Voyager engineers gave a command to prompt Voyager 1 to return a readout of FDS memory, and that allowed them to confirm that about 3 percent of that memory had been corrupted.

Culprit found. An errant chip involved in storing part of the memory within Voyager 1’s FDS may be to blame, its failure possibly the result of an energetic particle hit or, more likely, simple attrition after a whopping 46 years of Voyager operation. All this was figured out in March, and the fix was to avoid the now defunct memory segment by storing different portions of the needed code at different addresses in the FDS, adjusting them so that they still functioned, and updating the rest of the system’s memory to reflect the changes. And all of it with radio travel times of 22 ½ hours one way.
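The logic of a patch like this can be illustrated with a toy model. To be clear, this is not JPL’s actual procedure or the real FDS memory layout: the segment names, addresses, and the single contiguous free region are all hypothetical. Each code segment that touches the bad address range is moved into free memory, and any stored reference into a moved segment is remapped to the same offset at its new home, which is the ‘updating the rest of the system’s memory’ step in miniature.

```python
from typing import Dict, Tuple

# Hypothetical model: each named code segment is (start_address, length_in_words).
Segment = Tuple[int, int]


def overlaps(seg: Segment, bad: Tuple[int, int]) -> bool:
    """True if the segment shares any address with the half-open range [bad_start, bad_end)."""
    start, length = seg
    bad_start, bad_end = bad
    return start < bad_end and bad_start < start + length


def relocate(segments: Dict[str, Segment], bad: Tuple[int, int],
             free_start: int, free_end: int) -> Dict[str, Segment]:
    """Move every segment that touches the bad range into the free region [free_start, free_end)."""
    if overlaps((free_start, free_end - free_start), bad):
        raise ValueError("free region must not include the corrupted range")
    new_table = dict(segments)
    cursor = free_start
    # Place moved segments one after another, in order of their original addresses.
    for name, seg in sorted(segments.items(), key=lambda kv: kv[1][0]):
        if overlaps(seg, bad):
            length = seg[1]
            if cursor + length > free_end:
                raise MemoryError(f"not enough free memory to move {name}")
            new_table[name] = (cursor, length)
            cursor += length
    return new_table


def remap(address: int, old: Dict[str, Segment], new: Dict[str, Segment]) -> int:
    """Translate a reference into a segment to the same offset at its (possibly new) location."""
    for name, (start, length) in old.items():
        if start <= address < start + length:
            return new[name][0] + (address - start)
    return address  # references outside any segment are left unchanged


if __name__ == "__main__":
    segments = {"format_a": (0x100, 0x40), "format_b": (0x140, 0x20), "format_c": (0x160, 0x30)}
    bad_range = (0x150, 0x158)  # hypothetical corrupted words
    new = relocate(segments, bad_range, free_start=0x400, free_end=0x500)
    print({k: (hex(s), hex(n)) for k, (s, n) in new.items()})
    print(hex(remap(0x148, segments, new)))  # reference into the moved segment
```

Only `format_b` overlaps the bad words here, so it alone moves; references into the untouched segments pass through `remap` unchanged.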

The changes were implemented on April 18, ending the five month hiatus in normal communications. I hadn’t written about any of the Voyager 1 travails, more or less holding my breath in hopes that the problem would somehow be resolved. Because the day the Voyagers go silent is something I don’t want to see. Hence my obsession with the remaining possibilities for the craft, laid out in Voyager to a Star.

Engineering data is now being returned in usable form, and the next target, apparently achievable, is the return of science data. So a fix to a flight computer some 163 AU from the Sun has us back in the interstellar business. The incident reflects credit on everyone involved, but also forces the question of how far human intervention will be capable of dealing with problems as the distance from home steadily increases. JHU/APL’s Interstellar Probe, for example, has a ‘blue sky’ target of 1000 AU. Are we still functional with one-way travel times of almost six days? Where do we reach the point where onboard autonomy completely supersedes any human intervention?
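The one-way delays quoted here follow directly from a light travel time of about 499 seconds per astronomical unit. A quick check, using the distances given in the text and ignoring Earth’s own offset of up to 1 AU from the Sun:

```python
# One-way light travel time for a given distance from the Sun.
AU_M = 149_597_870_700.0       # astronomical unit in meters (IAU 2012 definition)
C_M_PER_S = 299_792_458.0      # speed of light in m/s


def one_way_delay_hours(distance_au: float) -> float:
    """Hours for a radio signal to cover distance_au (ignores Earth's offset from the Sun)."""
    return distance_au * AU_M / C_M_PER_S / 3600.0


if __name__ == "__main__":
    voyager_1 = one_way_delay_hours(163)            # roughly Voyager 1's distance in April 2024
    interstellar_probe = one_way_delay_hours(1000)  # the 'blue sky' Interstellar Probe target
    print(f"Voyager 1: {voyager_1:.1f} hours one way")
    print(f"1000 AU: {interstellar_probe / 24:.2f} days one way")
```

The 163 AU figure gives about 22.6 hours, consistent with the 22 ½ hours cited for Voyager 1, while 1000 AU gives a little under 5.8 days each way, so a single command-and-confirm cycle would take more than 11 days.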

April 20, 2024

SETI and Gravitational Lensing

Radio and optical SETI look for evidence of extraterrestrial civilizations even though we have no evidence that such exist. The search is eminently worthwhile and opens up the ancillary question: How would a transmitting civilization produce a signal strong enough for us to detect it at interstellar distances? Beacons of various kinds have been considered and search strategies honed to find them. But we’ve also begun to consider new approaches to SETI, such as detecting technosignatures in our astronomical data (Dyson spheres, etc.). To this mix we can now add a consideration of gravitational lensing, and the magnifications possible when electromagnetic radiation is focused by a star’s mass. For a star like our Sun, this focal effect becomes useful at distances beginning around 550 AU.
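The 550 AU figure marks the closest point at which rays grazing the star’s limb converge. General relativity bends light passing a mass M at impact parameter b by an angle of about 4GM/(c²b), so rays that just skim the surface (b = R) cross the axis at a distance of roughly R²c²/(4GM). A minimal sketch using standard solar values; the radii and masses for the two other stars are approximate, illustrative inputs rather than figures from the papers discussed here.

```python
# Minimum focal distance of a star's gravitational lens: F = R^2 c^2 / (4 G M),
# i.e. where rays grazing the limb (impact parameter b = R) cross the optical axis.
GM_SUN = 1.32712440018e20   # m^3/s^2, solar gravitational parameter
R_SUN = 6.957e8             # m, nominal solar radius
C = 299_792_458.0           # m/s
AU = 149_597_870_700.0      # m


def min_focal_distance_au(radius_solar: float = 1.0, mass_solar: float = 1.0) -> float:
    """Focal distance in AU for a star of given radius and mass (in solar units)."""
    r = radius_solar * R_SUN
    gm = mass_solar * GM_SUN
    return r**2 * C**2 / (4.0 * gm) / AU


if __name__ == "__main__":
    print(f"Sun: {min_focal_distance_au():.0f} AU")
    # Approximate, illustrative stellar parameters (radius, mass in solar units):
    print(f"Alpha Centauri A: {min_focal_distance_au(1.22, 1.1):.0f} AU")
    print(f"Proxima Centauri: {min_focal_distance_au(0.154, 0.122):.0f} AU")
```

The Sun comes out near 548 AU, in line with the roughly 550 AU quoted above. Because the focal distance scales as R²/M, a small, dense star such as Proxima Centauri focuses far closer in than the Sun does.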

Theoretical work and actual mission design for using this phenomenon began in the 1990s and continues, although most work has centered on observing exoplanets. Here the possibilities are remarkable, including seeing oceans, continents, weather patterns, even surface vegetation on a world circling another star. But it’s interesting to consider how another civilization might see gravitational lensing as a way of signaling to us. Indeed, doing so could conceivably open up a communications channel if the alien civilization is close enough, for if we detect lensing being used in this way, we would be wise to consider using our own lens to reply.

Or maybe not, considering what happens in The Three Body Problem. But let’s leave METI for another day. A new paper from Slava Turyshev (Jet Propulsion Laboratory) makes the case that we should be considering not just optical SETI, but a gravitationally lensed SETI signal. The chances of finding one might seem remote, but then, we don’t know what the chances of any SETI detection are, and we proceed in hopes of learning more. Turyshev argues that with the level of technology available to us today, a lensed signal could be detected with the right strategy.

Image: Slava Turyshev (Jet Propulsion Laboratory). Credit: Asteroid Foundation.

“Search for Gravitationally Lensed Interstellar Transmissions,” now available on the arXiv site, posits a configuration involving a transmitter, receiver and gravitational lens in alignment, something we cannot currently manage. But recall that the effort to design a solar gravity lens (SGL) mission has been in progress for some years now at JPL. As we push into the physics involved, we learn not only about possible future space missions but also better strategies for using gravitational lensing in SETI itself. We are now in the realm of advanced photonics and optical engineering, where we define and put to work the theoretical tools to describe how light propagates in a gravity field.

And while we lack the technologies to transmit using these methods ourselves (at least for now), we do have the ability to detect extraterrestrial signals using gravitational lensing. In an email yesterday, Dr. Turyshev offered an overview of what his analysis showed:

Many factors influence the effectiveness of interstellar power transmission. Our analysis, based on realistic assumptions about the transmitter, shows that substantial laser power can be effectively transmitted over vast distances. Gravitational lensing plays a crucial role in this process, amplifying and broadening these signals, thereby increasing their brightness and making them more distinguishable from background noise. We have also demonstrated that modern space- and ground-based telescopes are well-equipped to detect lensed laser signals from nearby stars. Although individual telescopes cannot yet resolve the Einstein rings formed around many of these stars, a coordinated network can effectively monitor the evolving morphology of these rings as it traces the beam’s path through the solar system. This network, equipped with advanced photometric and spectroscopic capabilities, would enable not only the detection but also continuous monitoring and detailed analysis of these signals.

We’re imagining, then, an extraterrestrial civilization placing a transmitter in the region of its own star’s gravitational lens, on the side of its star opposite to the direction of our Solar System. The physics involved – and the mathematics here is quite complex, as you can imagine – determine what happens when light from an optical transmitter is sent to the star: when it encounters the warped spacetime induced by the star’s mass, the diffracted rays converge and create what scientists call a ‘caustic,’ the region where the bent rays pile up into a bright, focused pattern.

In the case of a targeted signal, the lensing effect emerges in a so-called ‘Einstein ring’ around the distant star as seen from Earth. The signal is brightened by its passage through warped spacetime, and if targeted with exquisite precision, could be detected and untangled by Earth’s technologies. Turyshev asks in this paper how the generated signal appears over interstellar distances.

The answer should help us understand how to search for transmissions that use gravitational lensing, developing the best strategies for detection. We’ve pondered possible interstellar networks of communication in these pages, using the lensing properties of participating stellar systems. Such signals would be far more powerful than the faint and transient signals detectable through conventional optical SETI.
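How much more powerful? For an idealized point-mass lens, the on-axis light amplification at wavelength λ is commonly written in the solar gravitational lens literature as μ = (4π²r_g/λ) / (1 − exp(−4π²r_g/λ)), where r_g = 2GM/c² is the lens’s Schwarzschild radius (about 2.95 km for the Sun). A minimal sketch, assuming that expression, perfect alignment, and no background light from the lensing star itself:

```python
import math

# Idealized on-axis amplification of a gravitational lens (point-mass approximation):
#   mu = (4 pi^2 r_g / lambda) / (1 - exp(-4 pi^2 r_g / lambda)),  r_g = 2 G M / c^2
GM_SUN = 1.32712440018e20   # m^3/s^2
C = 299_792_458.0           # m/s


def schwarzschild_radius_m(mass_solar: float = 1.0) -> float:
    """r_g = 2GM/c^2 in meters, for a mass given in solar units."""
    return 2.0 * mass_solar * GM_SUN / C**2


def on_axis_gain(wavelength_m: float, mass_solar: float = 1.0) -> float:
    """Peak amplification on the optical axis at the given wavelength."""
    x = 4.0 * math.pi**2 * schwarzschild_radius_m(mass_solar) / wavelength_m
    # -math.expm1(-x) equals 1 - exp(-x), computed without precision loss for small x
    return x / -math.expm1(-x)


if __name__ == "__main__":
    print(f"r_g(Sun) = {schwarzschild_radius_m():.0f} m")
    print(f"gain at 1 micron: {on_axis_gain(1e-6):.2e}")
```

For a Sun-like lens at a 1 µm laser wavelength this gives a gain on the order of 10¹¹, and because the gain scales inversely with wavelength, shorter optical wavelengths are amplified even more.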

Unlike broadcast radio, laser transmissions are inherently directional, their beams narrow and tightly focused. An interstellar laser signal would have to be aimed precisely towards us, an alignment that in and of itself does not resolve all the issues involved. We can take into account the brightness of the transmitting location, working out the parameters for each nearby star and factoring in optical background noise, but we would have no advance knowledge of the power, aperture and pointing characteristics of a transmitted signal. But if we’re searching for a signal boosted by gravitational lensing, we have a much brighter beam that will have been enhanced for best reception.
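A rough feel for ‘narrow and tightly focused’ comes from diffraction: a beam leaving an aperture of diameter D at wavelength λ spreads with a half-angle of about 1.22λ/D. The sketch below describes an ordinary, unlensed beam for comparison, using hypothetical transmitter values (a 1 µm laser, a 1 meter aperture) and the roughly 4.37 light-year distance to Alpha Centauri.

```python
# Diffraction-limited beam footprint for an unlensed laser (Airy first-null half-angle).
LIGHT_YEAR_M = 9.4607304725808e15  # m
AU_M = 149_597_870_700.0           # m


def footprint_radius_au(wavelength_m: float, aperture_m: float, distance_ly: float) -> float:
    """Radius of the central spot at the given distance, in AU (small-angle approximation)."""
    half_angle_rad = 1.22 * wavelength_m / aperture_m
    return half_angle_rad * distance_ly * LIGHT_YEAR_M / AU_M


if __name__ == "__main__":
    # Hypothetical transmitter: 1 micron wavelength, 1 m aperture, Alpha Centauri distance.
    r = footprint_radius_au(1e-6, 1.0, 4.37)
    print(f"spot radius ~ {r:.2f} AU")
```

Even this modest transmitter paints a spot only about a third of an AU in radius across interstellar distances, smaller than Earth’s orbit, so the sender must know where to point; doubling the aperture halves the spot.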

Image: Communications across interstellar distances could take advantage of a star’s ability to focus and magnify communication signals through gravitational lensing. A signal from—or passing through—a relay probe would bend due to gravity as it passes by the star. The warped space around the object acts somewhat like a lens of a telescope, focusing and magnifying the light. Pictured here is a message from our Sun to another stellar system. Possible signals from other stars using these methods could become SETI targets. Image credit: Dani Zemba / Penn State. CC BY-NC-ND 4.0 DEED.

Mathematics at this level is something I admire and find beautiful much in the same way I appreciate Bach, or a stunning Charlie Parker solo. I have nowhere near the skill to untangle it, but take it in almost as a form of art. So I send those more mathematically literate than I am to the paper, while relying on Turyshev’s explanation of the import of these calculations, which seek to determine the shape and dimensions of the lensed caustic, using the results to demonstrate the beam propagation affected by the lens geometry, and the changes to the density of the EM field received.

It’s interesting to speculate on the requirements of any effort to reach another star with a lensed signal. Not only does the civilization in question have to be able to operate within the focal region of its stellar lens, but it has to provide propulsion for its transmitter, given the relative motion between the lens and the target star (our own). In other words, it would need advanced propulsion just to point toward a target, and obviously navigational strategies of the highest order within the transmitter itself. As you can imagine, the same issues emerge within the context of exoplanet imaging. From the paper:

…we find that in optical communications utilizing gravitational lenses, precise aiming of the signal transmissions is also crucial. There could be multiple strategies for initiating transmission. For instance, in one scenario, the transmission could be so precisely directed that Earth passes through the targeted spot. Consequently, it’s reasonable to assume that the transmitter would have the capability to track Earth’s movement. Given this precision, one might question whether a deliberately wider beam, capable of encompassing the entire Earth, would be employed instead. This is just [a] few of many scenarios that merit thorough exploration.

Detecting a lensed signal would demand a telescope network optimized to search for transients involving nearby stars. Such a network would be capable of a broad spectrum of measurements which could be analyzed to monitor the event and study its properties as it develops. Current and near-future instruments from the James Webb Space Telescope and Nancy Grace Roman Space Telescope to the Vera C. Rubin Observatory’s LSST, the Thirty Meter Telescope and the Extremely Large Telescope could be complemented by a constellation of small instruments.

Because the lens parameters are known for each target star, a search can be constructed using a combination of possible transmitter parameters. The result is a search space, defined for each specific laser wavelength, that can be explored with current technology. According to Turyshev’s calculations, a signal targeting a specific spot 1 AU from the Sun would be detectable by such a network with the current generation of optical instruments. Again from the paper:

Once the signal is detected, the spatial distribution of receivers is invaluable, as each will capture a distinct dataset by traveling through the signal along a different path… Correlating the photometric and spectral data from each path enables the reconstruction of the beam’s full profile as it [is] projected onto the solar system. Integrating this information with spectral data from multiple channels reveals the transmitter’s specific features encoded in the beam, such as its power, shape, design, and propulsion capabilities. Additionally, if the optical signal contains encoded information, transmitted via a set of particular patterns, this information will become accessible as well.

While microlensing events created by a signal transmitted through another star’s gravitational lens would be inherently transient, they would also be strikingly bright and should, according to these calculations, be detectable with the current generation of instruments making photometric and spectroscopic observations. Using what Turyshev calls “a spatially dispersed network of collaborative astronomical facilities,” it may be possible not only to detect such a signal but to learn if message data are within. The structure of the point spread function (PSF) of the transmitting lens could be determined through coordinated ground- and space-based telescope observations.

We are within decades of being able to travel to the focal region of the Sun’s gravitational lens to conduct high-resolution imaging of exoplanets around nearby stars, assuming we commit the needed resources to the effort. Turyshev advocates a SETI survey along the lines described to find out whether gravitationally lensed signals exist around these stars, pointing out that such a discovery would open up the possibility of studying an exoplanet’s surface as well as initiating a dialogue. “[W]e have demonstrated the feasibility of establishing interstellar power transmission links via gravitational lensing, while also confirming our technological readiness to receive such signals. It’s time to develop and launch a search campaign.”

The paper is Turyshev, “Search for gravitationally lensed interstellar transmissions,” now available as a preprint. You might also be interested in another recent take on detecting technosignatures using gravitational lensing. It’s Tusay et al., “A Search for Radio Technosignatures at the Solar Gravitational Lens Targeting Alpha Centauri,” Astronomical Journal Vol. 164, No. 3 (31 August 2022), 116, which led to a Penn State press release from which the image I used above was taken.

April 17, 2024

Medusa: Deep Space via Nuclear Pulse

The propulsion technology the human characters conceive in the Netflix version of Liu Cixin’s novel The Three Body Problem clearly has roots in the ideas we’ve been kicking around lately. I should clarify that I’m talking about the American adaptation of the novel, which Netflix titles ‘3 Body Problem,’ and not the Chinese 30-part series, which is also becoming available. In the last two posts, I’ve gone through various runway concepts, in which a spacecraft is driven forward by nuclear explosions along its route of flight. We’ve also looked at pellet options, where macroscopic pellets are fired at a departing starship to impart momentum and/or to serve as fusion fuel.

All this gets us around the problem of carrying propellant, and thus offers real benefits in terms of payload capabilities. Even so, it was startling to hear the name Stanislaw Ulam come up on a streaming TV series. Somebody was doing their homework, as Freeman Dyson liked to say. Ulam’s name will always be associated with nuclear pulse propulsion (along with the Monte Carlo method of computation and many other key developments in nuclear physics). It was in 1955 that he and Cornelius Everett performed the first full mathematical treatment of what would become Orion, but the concept goes back as far as Ulam’s initial Los Alamos calculations in 1947.

Image: Physicist and mathematician Stanislaw Ulam. Credit: Los Alamos National Laboratory.

Set off a nuclear charge behind a pusher plate and the craft attached to that plate moves forward. Set off enough devices and you begin to move at speeds unmatched by any other propulsion method, so the deep space concepts that Freeman Dyson, Ted Taylor and team discussed began to seem practicable, including human missions to distant targets like Enceladus. Dyson pushed the concept into the interstellar realm and envisioned an Orion variant reaching Alpha Centauri in just over a century. So detonating devices is a natural if you’re a writer looking for ways to take current technology to deep space in a hurry, as the characters in ‘3 Body Problem’ are.
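The ‘just over a century’ figure is simple cruise arithmetic. This sketch assumes a cruise speed of about 10,000 km/s (roughly 3.3 percent of lightspeed), the figure usually quoted for Dyson’s faster interstellar Orion variant, and ignores the time spent accelerating and decelerating.

```python
# Cruise time to Alpha Centauri at constant speed (acceleration phases ignored).
LIGHT_YEAR_KM = 9.4607304725808e12
SECONDS_PER_YEAR = 365.25 * 86400
C_KM_S = 299_792.458


def cruise_years(distance_ly: float, speed_km_s: float) -> float:
    """Years needed to cover distance_ly at a constant speed in km/s."""
    return distance_ly * LIGHT_YEAR_KM / speed_km_s / SECONDS_PER_YEAR


if __name__ == "__main__":
    v = 10_000.0  # km/s, assumed cruise speed
    print(f"{v / C_KM_S:.1%} of c, {cruise_years(4.37, v):.0f} years to Alpha Centauri")
```

At the assumed speed the 4.37 light-year crossing takes about 131 years, consistent with ‘just over a century.’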

Johndale Solem’s name didn’t pop up on ‘3 Body Problem,’ but his work is part of the lineage of the interstellar solution proposed there. Solem was familiar with Ted Cotter’s work at Los Alamos, which in the 1970s had explored ways of doing nuclear pulse propulsion without the pusher plate and huge shock absorbers that would be needed for the Orion design. Freeman Dyson explored the concept as well – both he and Cotter were thinking in terms of steel cables unreeled from a spacecraft as it spun on its axis. Dyson would liken the operation to “the arms of a giant squid,” as cables with flattened plates at each end would serve to absorb the momentum of the explosions set off behind the vehicle. Familiar with this work, Johndale Solem took the next step.

Image: Physicist Johndale Solem in 2014. Credit: Wikimedia Commons.

Solem worked at Los Alamos from 1969 to 2000, along the way authoring numerous scientific and technical papers. In the early 1990s, he discussed the design he called Medusa, noting in an internal report that his spacecraft would look something like a jellyfish as it moved through space. He had no interest in Orion’s pusher plate because, on examining the idea, he saw only problems. For one thing, you couldn’t build a pusher plate big enough to absorb anything more than a fraction of the momentum from the bombs being detonated behind the spacecraft. To protect the crew, the plate and shock absorbers had to be so massive as to degrade performance even more.

The solution: Replace the pusher plate with a sail deployed ahead of the vehicle. The nuclear detonations are now performed between the sail and the spacecraft, driving the vehicle forward. The sail would intercept a much greater share of each explosion’s momentum, and it would be equipped with tethers made so long and elastic that the acceleration would be smoothed out. I quoted Solem some years back on using a servo winch in the vehicle which would operate in combination with the tethers. Let’s look at that again, from the Los Alamos report:

When the explosive is detonated, a motorgenerator powered winch will pay out line to the spinnaker at a rate programmed to provide a constant acceleration of the space capsule. The motorgenerator will provide electrical power during this phase of the cycle, which will be conveniently stored. After the space capsule has reached the same speed as the spinnaker, the motorgenerator will draw in the line, again at a rate programmed to provide a constant acceleration of the space capsule. The acceleration during the draw-in phase will be less than during the pay-out phase, which will give a net electrical energy gain. The gain will provide power for ancillary equipment in the space capsule…
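The energy bookkeeping in Solem’s passage can be made explicit. In an idealized, lossless cycle the tether tension equals the capsule mass times its acceleration, and the winch exchanges energy equal to that tension times the length of line paid out or drawn in. If the same length L goes out under acceleration a₁ and comes back in under a smaller acceleration a₂, the net energy banked per cycle is m(a₁ − a₂)L. The capsule mass, accelerations, and line length below are hypothetical values, not figures from Solem’s report.

```python
# Idealized, lossless energy balance for one Medusa winch cycle.
# Tension on the capsule: T = m * a. Winch energy exchanged: T * (line length moved).

def winch_net_energy_j(capsule_mass_kg: float, payout_accel: float,
                       drawin_accel: float, line_length_m: float) -> float:
    """Energy generated while paying out minus energy spent drawing back in, in joules."""
    generated = capsule_mass_kg * payout_accel * line_length_m  # winch acts as a brake/generator
    spent = capsule_mass_kg * drawin_accel * line_length_m      # winch acts as a motor
    return generated - spent


if __name__ == "__main__":
    # Hypothetical values: 10 t capsule, 10 m/s^2 out, 5 m/s^2 in, 1 km of line.
    e = winch_net_energy_j(10_000.0, 10.0, 5.0, 1_000.0)
    print(f"net gain per cycle: {e / 1e6:.0f} MJ")
```

The balance is positive only because the draw-in acceleration is the smaller of the two, exactly the condition Solem specifies; real winch and tether losses would eat into the gain.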

This is hard to visualize, so let’s look at it in two different ways. First, here is a diagram of the basic concept:

Image: Medusa in operation. Here we see the design 1) At the moment of bomb explosion; 2) As the explosion pulse reaches the parachute canopy; 3) Effect on the canopy, accelerating it away from the explosion, with the spacecraft paying out the main tether with its winch, braking as it extends, and accelerating the vehicle; 4) The tether being winched back in. Imagine all this in action and the jellyfish reference becomes clear. Credit: George William Herbert/Wikimedia.

Second, a video that Al Jackson pointed out to me, made by artist and CGI expert Nick Stevens, shows what Medusa would look like in flight. I recall Solem’s words when I watch this:

One can visualize the motion of this spacecraft by comparing it to a jellyfish. The repeated explosions will cause the canopy to pulsate, ripple, and throb. The tethers will be stretching and relaxing. The concept needed a name: its dynamics suggested MEDUSA.

Bear in mind as you watch, though, that Solem’s Los Alamos report speaks of a 500-meter canopy that would be spin-deployed along with 10,000 tethers. The biggest stress that suggested itself to readers when we’ve discussed Medusa in the past is in the tethers themselves, which is why Solem made them as long as he did. Even so, I became rather enthralled with Medusa when I first encountered the idea, an interest reinforced by Greg Matloff’s statement (in Deep Space Probes): “Although much analysis remains to be carried out, the Medusa concept might allow great reduction in the mass of a nuclear-pulse starprobe.” With Dyson having given up on Orion, Medusa seemed a way to reinvigorate nuclear pulse propulsion, although to be sure, Dyson’s chief objection to Orion when I talked with him about it was the sheer impracticality of the concept, an issue which surely would apply to Medusa as well.

Like so much in the Netflix ‘3 Body Problem,’ the visuals of the bomb runway sequence are well crafted. In fact, I find Liu Cixin’s trilogy so stuffed with interesting ideas that my recent re-reading of The Three Body Problem and subsequent immersion in the following two novels have me wanting to explore his other work. I haven’t yet attempted the Chinese series, which is longer and presents the daunting prospect of dealing with a now familiar set of plot elements with wholly different actors. I’ll need to dip into it as Netflix ponders a second season for the American series.

Anyway, notice the interesting fact that what you have as a propulsion method on ‘3 Body Problem’ is essentially Medusa adapted to a nuclear bomb runway, with the sail-driven craft intercepting a series of nuclear weapons. As each explodes, the spacecraft is pushed to higher and higher velocities. I’m curious to know how the Chinese series handles this aspect of the story, and also curious about who introduced this propulsion concept, which I still haven’t located in the novels. I’m not aware of a fusion runway combined with a sail anywhere in the interstellar literature. Nice touch!

The Los Alamos report I refer to above is Solem’s “Some New Ideas for Nuclear Explosive Spacecraft Propulsion,” LA-12189-MS, October 1991. Solem also wrote up the Medusa concept in “Medusa: Nuclear Explosive Propulsion for Interplanetary Travel,” JBIS Vol. 46, No. 1 (1993), pp. 21-26. Two other JBIS papers also come into play for specific mission applications: “The Moon and the Medusa: Use of Lunar Assets in Nuclear-Pulse Propelled Space Travel,” JBIS Vol. 53 (2000), pp. 362-370 and “Deflection and Disruption of Asteroids on Collision Course with Earth,” JBIS Vol. 53 (2000), pp. 180-196. To my knowledge, Freeman Dyson’s ‘The Bolo and the Squid,’ a 1958 memo at Los Alamos treating these concepts, remains classified.

April 12, 2024

Fusion Pellets and the ‘Bussard Buzz Bomb’

Fusion runways remind me of the propulsion methods using pellets that have been suggested over the years in the literature. Before the runway concept emerged, the idea of firing pellets at a departing spacecraft was developed by Clifford Singer. Aware of the limitations of chemical propulsion, Singer first studied charged particle beams but quickly realized that the spread of the beam as it travels bedevils the concept. A stream of macro-pellets, each several grams in mass, would offer a better collimated ‘beam,’ the pellets vaporizing to create a hot plasma thrust when they reach the spacecraft.

Even a macro-pellet stream does ‘bloom’ over time – i.e., it loses its tight coherency because of collisions with interstellar dust grains – but Singer was able to show through papers in The Journal of the British Interplanetary Society that pellets of more than one gram would be massive enough to minimize this. In any case, collimation could also be ensured by electromagnetic fields sustained by facilities along the route that would measure the particles’ trajectory and adjust it.

Image: Clifford Singer, whose work on pellet propulsion in the late 1970s has led to interesting hybrid concepts involving on-board intelligence and autonomy. Credit: University of Illinois.

Well, it was a big concept. Not only did Singer figure out that it would take a series of these ‘facilities’ spaced 340 AU apart to keep the beam tight (he saw them as being deployed by the spacecraft itself as it accelerated), but it would also take an accelerator 10⁵ kilometers long somewhere in the outer Solar System. That sounds crazy, but pushing concepts forward often means working out what the physics will allow and thus putting the problem into sharper definition. I’ve mentioned before in these pages that we have such a particle accelerator in the form of Jupiter’s magnetic field, which is produced by a magnetic moment fully 20,000 times that of Earth.

We don’t have to build Jupiter, and Mason Peck (Cornell University) has explored how we could use its magnetic field to accelerate thousands of ‘sprites’ – chip-sized spacecraft – to thousands of kilometers per second. Greg Matloff has always said how easy it is to overlook interstellar concepts that are ‘obvious’ once suggested, but it takes that first person to suggest them. Going from Singer’s pellets to Peck’s sprites is a natural progression. Sometimes nature steps in where engineering flinches.

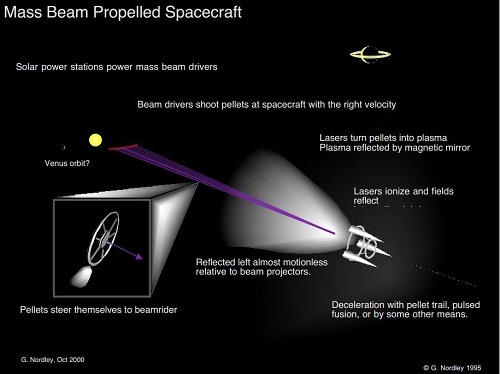

The Singer concept is germane here because the question of fusion runways depends in part upon whether we can lead our departing starship along so precise a trajectory that it will intercept the fuel pellets placed along its route. Gerald Nordley would expand upon Singer’s ideas to produce a particle stream enlivened with artificial intelligence, allowing course correction and ‘awareness’ at the pellet level. Now we have a pellet that is in a sense both propellant and payload, highlighting the options that miniaturization and the growth of AI have provided the interstellar theorist.

Image: Pushing pellets to a starship, where the resulting plasma is mirrored as thrust. Nordley talks about nanotech-enabled pellets in the shape of snowflakes capable of carrying their own sensors and thrusters, tiny craft that can home in on the starship’s beacon. Problems with beam collimation thus vanish and there is no need for spacecraft maneuvering to stay under power. Credit: Gerald Nordley.

Jordin Kare’s contributions in this realm were striking. A physicist and aerospace engineer, Kare spent years at Lawrence Livermore National Laboratory working on early laser propulsion concepts and, in the 1980s, laser-launch to orbit, which caught the attention of scientists working in the Strategic Defense Initiative. He would go on to become a spacecraft design consultant whose work for the NASA Institute for Advanced Concepts (as it was then called) analyzed laser sail concepts and the best methods for launching such sails using various laser array designs.

Kare saw ‘smart pellets’ in a different light than previous researchers, thinking that the way to accelerate a sail was to miniaturize it and bring it up to a significant fraction of c while it was still close to the beamer. This notion reminds me of the Breakthrough Starshot sail concept, where meter-class sails would be blasted up to 20 percent of lightspeed within minutes by a vast laser array. But Kare would have nothing to do with meter-class sails. His notion was to make the sails tiny, craft them out of artificial diamond (he drew this idea from Robert Forward) and use them not as payload but as propulsion. His ‘SailBeam’, then, is a stream of sails that, like Singer’s pellets, would be vaporized for propulsion as they arrived at a departing interstellar probe.
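A little kinematics shows why reaching high speed close to the beamer matters. Starting from rest at constant acceleration a, a sail needs a distance d = v²/(2a) to reach speed v, so the harder a sail can be pushed, the shorter the stretch over which the laser must keep it illuminated. The sketch below is a non-relativistic estimate for v = 0.1 c. The accelerations are hypothetical round numbers chosen for illustration, not figures from Kare’s report.

```python
# Non-relativistic estimate of the distance a sail must travel under constant
# acceleration to reach 0.1 c: d = v**2 / (2 * a). At a tenth of lightspeed this
# understates the relativistic distance by less than 1%.

C = 299_792_458.0      # speed of light, m/s
G0 = 9.80665           # standard gravity, m/s^2
AU = 1.495978707e11    # astronomical unit, m


def distance_to_reach(v: float, accel: float) -> float:
    """Distance in meters to reach speed v (m/s) from rest at constant accel (m/s^2)."""
    return v * v / (2.0 * accel)


target = 0.1 * C
# Hypothetical accelerations, in multiples of g (illustrative, not Kare's values).
for g_units in (10, 1_000, 1_000_000):
    d = distance_to_reach(target, g_units * G0)
    print(f"{g_units:>9,} g: {d / 1e3:,.0f} km ({d / AU:.4f} AU)")
```

At 10 g the sail would still be accelerating some 30 AU out; at a million g the job is done within roughly 46,000 kilometers. Tiny, robust sails that tolerate extreme accelerations fit Kare’s goal of finishing the acceleration close to the beamer.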

Kare was a character, to put it mildly. Brilliant at what he studied, he was also a poet well known for his ‘filksongs,’ the science fiction fandom name for SF-inspired songs, which he would perform at conventions. His sense of humor was as infectious as his optimism. Thus his DIHYAN, a space launch concept involving reusable rockets (if he could only see SpaceX’s boosters returning after launch!). DIHYAN, in typical Kare fashion, stood for “Do I Have Your Attention Now?” Kare’s place in the interstellar literature on streams of macro-scale matter sent for propulsion is secure.

And by the way, when I write about Kare, I’m always the recipient of email from well-meaning people who tell me that I’ve misspelled his name. But no, ‘Jordin’ is correct.

We need to talk about SailBeam at greater length one day soon. Kare saw it as “the most engineering-practical way to get up to a tenth of the speed of light.” It makes sense that a mind so charged with ideas should also come up with a fusion runway that drew on his SailBeam thinking. Building on the work of Al Jackson, Daniel Whitmire and Greg Matloff, Kare saw that if you could place pellets of deuterium and tritium carefully enough, a vehicle initially moving at several hundred kilometers per second would begin encountering them with enough velocity to fire up its engines. He presented the idea at a Jet Propulsion Laboratory workshop in the late 1980s.

We’re talking about an unusual craft, and it’s one that will resonate not only with Johndale Solem’s Medusa, which we’ll examine in the next post, but also with the design shown in the Netflix version of Liu Cixin’s The Three Body Problem. Kare’s craft was not the sleek design familiar from cinema starships but a vehicle shaped more or less like a doughnut, although a cylindrical design was also possible. Each craft would have its own fusion pellet supply, dropping a pellet into the central ‘hole’ as one of the fusion runway pellets was about to be encountered. Kare worked out a runway that would produce fusion explosions at the rate of thirty per second.

Like Gerald Nordley, Kare worried about accuracy, because each of the runway pellets has to make a precise encounter with the pellet offered up by the starship. When I interviewed him many years back, he told me that he envisioned laser pulses guiding ‘smart’ pellets. Figure that you can extract 500 kilometers per second from a close solar pass to get the spacecraft moving outward at sufficient velocity (a very optimistic assumption, relying on materials technologies that are beyond our grasp at the moment, among other things), and you have the fusion runway ahead of you.

Initial velocity is problematic. Kare believed the vehicle would need to be moving at several hundred kilometers per second before it could begin firing up its main engines as it encountered the runway of fusion pellets. Geoff Landis would tell me he thought the figure was far too low to achieve deuterium/tritium ignition. But if it can be attained, Kare’s calculations produced velocities of 30,000 kilometers per second, fully one-tenth the speed of light. The fusion runway would be about half a light day long, and the track would run from near Earth to beyond Pluto’s orbit.
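Kare’s numbers can be checked against one another with simple kinematics. The sketch below takes the entry speed of 500 kilometers per second, the exit speed of 30,000 kilometers per second, the half-light-day runway and the thirty detonations per second. It assumes constant acceleration along the whole runway, with one runway pellet consumed per detonation. Those two assumptions are ours, made for illustration; they are not details of Kare’s design.

```python
# Back-of-envelope kinematics for a fusion runway, using the figures quoted in
# the text. ASSUMPTIONS (ours, not Kare's): constant acceleration along the whole
# runway, one runway pellet per detonation, and non-relativistic motion
# (adequate to within about 1% at 0.1 c).

C_KM_S = 299_792.458        # speed of light, km/s
AU_KM = 149_597_870.7       # astronomical unit, km
DAY_S = 86_400.0            # seconds per day
G0_KM_S2 = 9.80665e-3       # standard gravity, km/s^2

v0 = 500.0                  # speed entering the runway, km/s
v1 = 30_000.0               # speed leaving the runway, km/s
pulse_rate = 30.0           # detonations per second
length_km = 0.5 * C_KM_S * DAY_S   # half a light day, km

# From v1**2 = v0**2 + 2*a*L, solve for the constant acceleration a.
accel = (v1**2 - v0**2) / (2.0 * length_km)   # km/s^2
time_on_runway = (v1 - v0) / accel            # s
detonations = pulse_rate * time_on_runway     # also the pellet count, by assumption

print(f"runway length : {length_km / AU_KM:.1f} AU")
print(f"acceleration  : {accel / G0_KM_S2:.2f} g")
print(f"time on runway: {time_on_runway / DAY_S:.1f} days")
print(f"detonations   : {detonations:.2e}")
# With a fixed pulse rate, pellet spacing must grow with speed: spacing = v / rate.
print(f"spacing       : {v0 / pulse_rate:.1f} km at entry, {v1 / pulse_rate:,.0f} km at exit")
```

Under these assumptions the runway comes out near 87 AU, a little over twice Pluto’s average distance from the Sun. The vehicle spends about ten days on it at roughly 3.5 g, with some 25 million detonations. The fixed pulse rate also implies pellets spaced about 17 kilometers apart at the start of the runway and about 1,000 kilometers apart at its end.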

And there you have the Bussard Buzz Bomb, as Kare styled it. The reference is of course to the German V-1, whose buzzing, staccato sound in English skies came to be dreaded by those who heard it, because when the sound stopped, you never knew where the weapon would fall. You can’t hear anything in space, but if you could, Kare told me, his starship design would sound much like the V-1, hence the name.

In my next post, I’ll be talking about Johndale Solem’s Medusa design, which uses nuclear pulse propulsion in combination with a sail in startling ways. Medusa didn’t rely on a fusion runway, but the coupling of this technology with a runway is what started our discussion. The Netflix ‘3 Body Problem’ raised more than a few eyebrows. I’m not the only one surprised to see the wedding of nuclear pulse propulsion, sails and runways in a single design.

Clifford Singer’s key paper is “Interstellar Propulsion Using a Pellet Stream for Momentum Transfer,” JBIS 33 (1980), pp. 107-115. He followed this up with “Questions Concerning Pellet-Stream Propulsion,” JBIS 34 (1981), pp. 117-119. Gerald Nordley’s “Interstellar Probes Propelled by Self-steering Momentum Transfer Particles” (IAA-01-IAA.4.1.05, 52nd International Astronautical Congress, Toulouse, France, 1-5 Oct 2001) offers his take on self-guided pellets. Jordin Kare’s report on SailBeam concepts is “High-Acceleration Micro-Scale Laser Sails for Interstellar Propulsion,” Final Report, NIAC Research Grant #07600-070, revised February 15, 2002. You might also enjoy my SailBeam: A Conversation with Jordin Kare.

April 9, 2024

The Interstellar Fusion Runway Evolves

Let’s talk about how to get a spacecraft moving without onboard propellant. As noted last week, this is apropos of the design shown in the Netflix streaming video take on Liu Cixin’s novels, which the network titles ‘3 Body Problem.’ There, a kind of ‘runway’ is conceived, one made up of nuclear weapons that go off in sequence to propel a sail and its payload. The plan is to attain 0.012 c and reach an oncoming fleet that is headed to Earth but will not arrive for another four centuries.

This is an intriguing notion, and one with echoes in the interstellar literature. Johndale Solem mixed sails and nuclear weapons in a design called ‘Medusa’ that he described in a Los Alamos report back in 1991, although its roots go back decades earlier, as I’ll discuss in an upcoming article. Mixing sails, nuclear weapons and a fusion runway is an unusual take, a hybrid concept that caught my eye immediately, as it did that of Al Jackson, who alluded to runways in a paper in the 1970s. I’ve just become aware of a Greg Matloff paper from 1979 on runways as well.

So let’s start with the runway concept and in subsequent posts, I will be looking at how Medusa evolved and consider whether the hybrid concept of ‘3 Body Problem’ is worth pursuing. Jackson’s paper, written with Daniel Whitmire, is one we’ve considered before in these pages. The concept is to power up a starship by a laser but use reaction mass gathered from the interstellar medium, collecting the latter with a ramscoop. Here the model draws on Robert Bussard’s ramjet notions, originally published in 1960 and more or less immortalized in Poul Anderson’s novel Tau Zero. Jackson and Whitmire’s version was one of several variants on Bussard’s original concept and offered a number of performance benefits.

Image: The interstellar ramjet, as envisioned by British artist Adrian Mann. Variants have appeared in the literature to get around the drag issue induced by the ramscoop design. A fusion runway seeds fuel along a track that the craft follows as it accelerates.

You’ll notice that this is also a hybrid concept, combining ramjet capabilities with laser beaming. Laser propulsion had already been considered in the form of a terrestrial or Solar System-based laser beamed at the departing craft, which could deploy a lightsail to draw momentum from the incoming photons. Jackson and Whitmire found the latter method inefficient. Their solution was to beam the laser at a ramjet that would use reaction mass obtained from a Bussard-style magnetic ramscoop. The ramjet uses the laser beam as a source of energy but, unlike the sail, not as a source of momentum.
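Why would a sail be the inefficient choice? An idealized, non-relativistic comparison makes the point, though the figures are only indicative at these speeds. A perfectly reflecting sail gets thrust F = 2P/c from beam power P. A laser-powered ramjet that takes in gas arriving at the ship’s speed v and expels it at speed u, putting all of the beam power into the gas’s kinetic energy, gets F = ṁ(u - v) for P = ½ṁ(u² - v²), or F/P = 2/(u + v). The perfect efficiency, the exhaust speed and the ship speeds below are our assumptions for illustration; this is not the model Jackson and Whitmire actually used.

```python
# Idealized, non-relativistic thrust per watt of beamed power.
# ASSUMPTIONS (ours): perfectly reflecting sail at normal incidence; a ramjet
# that turns 100% of the beam power into kinetic energy of the scooped gas.

C = 299_792_458.0  # speed of light, m/s


def sail_thrust_per_watt() -> float:
    """Reflected photons: F = 2P/c, so F/P = 2/c (N/W)."""
    return 2.0 / C


def ramjet_thrust_per_watt(exhaust_speed: float, ship_speed: float) -> float:
    """Gas arrives at ship_speed and leaves at exhaust_speed (both in the ship frame).

    F = mdot*(u - v) and P = mdot*(u**2 - v**2)/2, so F/P = 2/(u + v).
    """
    if exhaust_speed <= ship_speed:
        raise ValueError("exhaust must leave faster than the gas arrives")
    return 2.0 / (exhaust_speed + ship_speed)


u = 0.2 * C  # hypothetical exhaust speed relative to the ship
for beta in (0.01, 0.05, 0.14):
    ratio = ramjet_thrust_per_watt(u, beta * C) / sail_thrust_per_watt()
    print(f"ship at {beta:.2f} c: ramjet gets {ratio:.1f}x the sail's thrust per watt")
```

With these numbers the ramjet gets several times the sail’s thrust per watt, and the advantage narrows as the ship speeds up, because changing the momentum of gas that is already streaming past at high relative speed costs more power.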

Jackson and Whitmire were a potent team, and this is one of their best papers. These methods could be used to reach 0.14 c, allowing the vehicle to switch into full ramjet mode at that point. And because the laser is a source of energy rather than momentum, it can also be used as a means to decelerate on the return trip. For our purposes today, I turn to the last part of the paper, which outlines other starship concepts that grow out of the laser beaming analysis. Here is the relevant passage:

Another possibility would be to artificially make a fusion ramjet runway. Micron-size frozen deuterium pellets could be accelerated electrostatically or electromagnetically beginning several years prior to take-off at which time a fusion ramjet with a relatively modest scoop cross section (perhaps a physical structure) would begin acceleration.

So we have a spacecraft that collects its fuel along the way. As opposed to the ‘pure’ ramjet, which scoops up interstellar material and is dependent on the medium through which it moves, this fusion runway ramjet would know exactly the trajectory to take to collect the needed fuel pellets as it accelerates. Bear in mind the original Bussard ramjet problem of having to reach a certain percentage of lightspeed before being able to ignite its fusion engine. Problem solved.

In recent correspondence, Jackson pointed out that the idea harkens back to the German Vergeltungswaffe 3 (“Vengeance Weapon 3”), which was a gun originally designed to bombard London but only saw use against Allied targets in Luxembourg in 1945 (the bunkers at the Pas-de-Calais were destroyed by bombing raids). Multiple solid-fuel propellant charges, set in side chambers along the barrel, were ignited by the gases pushing the projectile as it moved in staged fashion through the barrel. The French Army had considered plans for such a staged cannon as far back as 1918, and the idea dates to the 19th Century.

Image: The prototype V-3 cannon at Laatzig, Germany (now Zalesie, Poland) in 1942. Credit: Bundesarchiv, Bild 146-1981-147-30A / CC-BY-SA 3.0.

Greg Matloff picked up on the Jackson and Whitmire paper in a 1979 article in The Journal of the British Interplanetary Society, which he was kind enough to pass along to me. The Jackson/Whitmire fusion runway would, he believed, improve ramjet performance and alleviate aerodynamic drag, a problem that sharply reduces a Bussard vehicle’s acceleration. In the paper, he considered fusion fuel released as pellets moving in the direction of the destination star, with the ramjet moving up from behind to capture and fuse the pellets. In one scenario, tanker craft would be launched over a 50-year period to produce a runway 0.1 light years long.

Matloff as well as Jackson and Whitmire considered other variations on the interstellar ramjet idea, and I want to just mention these before moving on. From the Matloff paper:

As Whitmire and Jackson have mentioned, the performance of a ramjet might be of interest just above the photosphere of the Sun, in a high-energy, high-particle environment. More prosaically, a ramscoop could be utilized near the Earth to collect fusion fuel from the solar wind over a few decades. Then, if the fuel is utilized to power a ram-augmented interstellar rocket (RAIR), such an approach might be competitive in any discussion of the difficulties and merits of the various ramjet derivatives.

A Sun-skimming ramjet is one I had never seen discussed until I read Jackson and Whitmire. It would make for a lively hard SF tale, that’s for sure. Given the problems with ramjet drag that have been wrestled with in the subsequent literature, it’s worth considering Matloff’s idea of solar wind fuel collection at much lower speeds in the inner system. In any case, the fusion runway notion offers one way to collect a known supply of fuel over the length of a runway that would launch an interstellar craft.

When I wrote my Centauri Dreams book early in the century, I was unaware of both the papers we’ve looked at today, and focused on the only runway concept that was then known to me, the so-called ‘Bussard Buzz Bomb’ of the free-thinking Jordin Kare. Kare is, alas, no longer with us, but I enjoyed a long conversation with him on his runway concept, and I want to cover that in the next post before moving on to Johndale Solem’s Medusa.

The Jackson/Whitmire paper on laser-powered ramjets is “Laser Powered Interstellar Ramjet,” Journal of the British Interplanetary Society Vol. 30 (1977), pp. 223-226. Al Jackson: A Laser Ramjet Reminiscence presents Al’s thoughts on this paper as written for this site. Greg Matloff’s paper on fusion runways is “The Interstellar Ramjet Acceleration Runway,” JBIS Vol. 32 (1979), pp. 219-220. The ToughSF site offers a detailed explanation of runway concepts in Fusion Highways in Space.
