Aaron Gustafson's Blog, page 3

February 3, 2023

Rebuilding a PHP App using Isomorphic JavaScript with Eleventy & Netlify

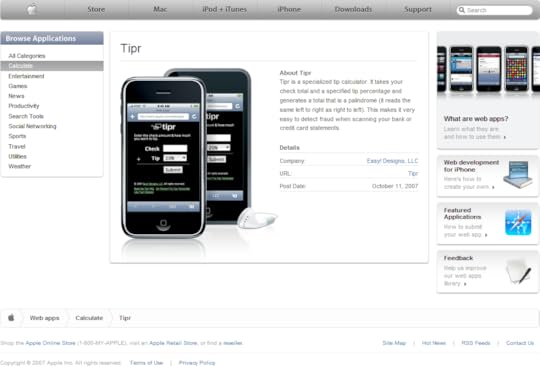

Back in the early days of the iPhone, I created Tipr, a tip calculator that always produces a palindrome total.¹ This is an overview of the minimal work I did to make it a modern web app that can run without a traditional back-end.

# What I had to work with

The previous iteration of Tipr was built in my hotel room while I was on site doing some consulting for a certain Silicon Valley company. I was rocking a Palm Treo 650 at the time, and that day a few of my colleagues had lined up to wait for the release of the very first iPhone. Back then, web apps were the only way to get an “app” on the iPhone, as there was no SDK or even an App Store.

I did a lot of PHP development in those days, so, armed with all of the mobile web development best practices of the time, I set about building the site and making it speedy. Some of the notable features of Tipr included:

- Inlining CSS & JS file contents into the HTML.
- Using PHP output buffers to compress the HTML on the server before sending it over the wire.
- Server-side processing in PHP.
- Client-side processing via XHR to a PHP-driven API.

At the time, most of these approaches were very new. As an industry, we weren’t doing a whole lot to ensure peak performance on mobile because most people’s mobile browsers were pretty crappy. This was the heyday of Usablenet’s “mobile friendly” bolt-on and WAP. Then came Mobile Safari.

The Tipr site remained largely untouched after I built it in the summer of 2007. That October, I added a theme switcher that turned the site pink for the month (Breast Cancer Awareness Month). I also added a free text message-based interface using the then-free TextMarks service, as well as a Twitter bot. But the web interface itself stayed more or less as it was.

Here are a handful of things that have come to the web in the intervening years:

- HTML5 Form Validation API
- SVG support
- CSS3
- Media Queries
- Web App Manifest
- Service Worker (and its precursor, the AppCache)
- Flexbox
- CSS Grid

Phew, that’s a lot! While I haven’t made upgrades in all these areas, I did sprinkle in a few, mainly to make it a true PWA and boost performance.

# Moving from PHP to a “static” site

Much of my work over the last few years has been in the world of static site generators (e.g., Jekyll, Eleventy). I’m quite enamored of Eleventy, having used it for a number of projects at this point. Since I know it really well, it made sense to use it for this project too. The installation steps are minimal and I already had a library of configuration options, plugins, and filters to roll with.

While migrating to Eleventy, I also took the opportunity to

- swap raster graphics for SVGs,
- set up a Web App Manifest,
- add a Service Worker, and
- update the site’s meta info to reflect current best practices.

I also swapped out the PHP logic that governed the pink color theme for a simple script in the `head` of every page. Since the color change is an enhancement rather than a necessity, I didn’t feel I needed to manage it any other way.
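The post doesn’t show that script, so here is a minimal sketch of how a date-based theme switch like this might work. It assumes the theme hangs off a class on the `<html>` element; the `pink` class name and both function names are hypothetical, not Tipr’s actual code.

```js
// Hypothetical sketch of a date-based theme switch; not Tipr's actual script.
// Assumes the pink theme is triggered by a "pink" class on the root element.

function isPinkMonth(date) {
  // Date#getMonth() is zero-based, so October is 9
  return date.getMonth() === 9;
}

function applySeasonalTheme(root, date) {
  if (isPinkMonth(date)) {
    // "pink" is an assumed class name the stylesheet would key off of
    root.classList.add("pink");
  }
}

// In the page <head>, it would be called as:
// applySeasonalTheme(document.documentElement, new Date());
```

Running it in the `head` means the class is already on the root element before the body is parsed, which avoids a flash of the default colors.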

The greatest challenge in moving Tipr over to a static site was setting up the tip calculation engine, which had been in PHP to ensure it would work even if JavaScript wasn’t available.

# Migrating the core logic to isomorphic JavaScript

When I originally built Tipr, JavaScript on the back-end wasn’t a thing. That’s why the core tip calculation engine was built in PHP. At the time, even XHR was in its infancy, so the fact that I could use PHP to do the calculations on both the server side (for when JavaScript wasn’t available) and the client side (when it was) was pretty amazing.

Today, JavaScript is ubiquitous across the whole stack, which made it the logical choice for building out the revised tip calculator. As with the original, I needed the calculation to work on the client side if it could (saving a round trip to the server), but also to be able to fall back to a traditional form submission if the client-side approach wasn’t feasible. That was possible with client-side JavaScript for the form itself and the server-side piece handled by Netlify’s Edge Functions (integrated through Eleventy’s Edge plugin).

From an architectural standpoint, I really didn’t want my logic duplicated in each place, so I began playing around with ensconcing the calculation logic in a JavaScript include that I could pull into both the form page itself and a JavaScript module the Edge Function could use.

You can view Tipr’s source on GitHub, but here’s a basic rundown of the relevant directories and files:

```
netlify/
  edge-functions/
    tipr.js
src/
  _includes/
    js/
      tipr.js
  j/
    process.njk
  index.html
netlify.toml
```

# src/_includes/js/tipr.js

This file contains the central logic of the tip calculator. It’s written in vanilla JavaScript so that it will run in the widest possible assortment of browsers out there.
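The post doesn’t reproduce the contents of `tipr.js`, so the following is only a sketch of what such a function could look like. It keeps the `process(check, percent)` signature and the `check`, `tip`, and `total` result properties the Edge Function relies on, but the rounding strategy (nudging the total up one cent at a time until its digits form a palindrome) and the input validation are assumptions, not necessarily how Tipr does it.

```js
// Hypothetical sketch, not Tipr's actual tipr.js. It mirrors the
// process(check, percent) signature the Edge Function calls and assumes
// the tip is rounded *up* until the total's digits form a palindrome.

function isPalindrome(str) {
  return str === str.split("").reverse().join("");
}

function process(check, percent) {
  // Form values arrive as strings; work in integer cents to avoid
  // floating-point drift in the arithmetic below
  var checkCents = Math.round(parseFloat(check) * 100);
  var rate = parseFloat(percent) / 100;

  // Reject empty, non-numeric, or negative input (NaN fails these checks),
  // which would otherwise send the loop below on a very long walk
  if (!(checkCents >= 0) || !(rate >= 0)) {
    throw new RangeError("check and percent must be non-negative numbers");
  }

  var totalCents = Math.round(checkCents * (1 + rate));

  // Step up one cent at a time until the digits (ignoring the decimal
  // point) read the same forwards and backwards
  while (!isPalindrome(String(totalCents))) {
    totalCents += 1;
  }

  return {
    check: (checkCents / 100).toFixed(2),
    tip: ((totalCents - checkCents) / 100).toFixed(2),
    total: (totalCents / 100).toFixed(2)
  };
}
```

Using `var` and plain string methods keeps the sketch in the same “runs almost anywhere” spirit described above. For example, `process("40.00", "15")` starts from a $46.00 total and climbs to the nearest palindrome, returning `{ check: "40.00", tip: "6.64", total: "46.64" }`.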

# src/index.html

The homepage of the site is also home to the tip calculation form. Below the form is an embedded `script` element containing the logic for interacting with the DOM in the client-side version of the tip calculator. I include the shared calculation logic at the top of that script:

```html
<script>
  {% include "js/tipr.js" %}

  // The rest of the JavaScript logic
</script>
```

# src/j/process.njk

This file exists solely to export the JavaScript logic from the include in a way the Edge Function can consume. It renders a new JavaScript file called `process.js` that turns the central processing logic into a JavaScript module that Deno can use (Deno powers Netlify’s Edge Functions):

```
---
layout: false
permalink: /j/process.js
---

{% include "js/tipr.js" %}

export { process };
```

# netlify/edge-functions/tipr.js

We define Edge Functions for use with Netlify in the netlify/edge-functions folder. To make use of the core JavaScript logic in the Edge Function, I can import it from the module created above before using it in the function itself:

```js
import { process } from "../../_site/j/process.js";

function setCookie(context, name, value) {
  context.cookies.set({
    name,
    value,
    path: "/",
    httpOnly: true,
    secure: true,
    sameSite: "Lax",
  });
}

export default async (request, context) => {
  let url = new URL(request.url);

  // Save the results to cookies, then redirect to the results page
  if (url.pathname === "/process/" && request.method === "POST") {
    if (request.headers.get("content-type") === "application/x-www-form-urlencoded") {
      let body = await request.clone().formData();
      let postData = Object.fromEntries(body);

      let result = process(postData.check, postData.percent);

      setCookie(context, "check", result.check);
      setCookie(context, "tip", result.tip);
      setCookie(context, "total", result.total);

      return new Response(null, {
        status: 302,
        headers: {
          location: "/results/",
        },
      });
    }
  }

  // Anything else passes through untouched
  return context.next();
};
```

What’s happening here is that when a request comes in to this Edge Function, the default export is executed. Most of this code is lifted directly from Netlify’s Edge Functions demo site. I grab the form data, pass it into the `process` function, and then set browser cookies for each of the returned values before redirecting the request to the results page.

On that page, I use Eleventy’s Edge plugin to render the check, tip, and total amounts:

```html
{% edge %}
  {% set check = eleventy.edge.cookies.check %}
  {% set tip = eleventy.edge.cookies.tip %}
  {% set total = eleventy.edge.cookies.total %}
  <tr id="check">
    <th scope="row">Check </th>
    <td>${{ check }}</td>
  </tr>
  <tr id="tip">
    <th scope="row">Tip </th>
    <td>${{ tip }}</td>
  </tr>
  <tr id="total">
    <th scope="row">Total </th>
    <td>${{ total }}</td>
  </tr>
{% endedge %}
```

Side note: The cookies get reset using a separate Edge Function.
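That reset function isn’t shown in the post, so here is a minimal sketch of what one might look like. It assumes Netlify’s `context.cookies.delete()` accepts an options object with `name` and `path` (mirroring the `path: "/"` the cookies were set with); the `resetResults` name and the route it would run on are hypothetical.

```js
// Hypothetical cookie-reset Edge Function; not the one in Tipr's repo.
// Assumes context.cookies.delete() accepts { name, path } and that this
// runs on a route (e.g., the form page) where old results should be cleared.

const RESULT_COOKIES = ["check", "tip", "total"];

async function resetResults(request, context) {
  for (const name of RESULT_COOKIES) {
    // Match the path used in setCookie() so the browser actually drops them
    context.cookies.delete({ name, path: "/" });
  }
  // Let the request continue on to the static page unchanged
  return context.next();
}

// In a file under netlify/edge-functions/, this would be the default export:
// export default resetResults;
```

Keeping the reset in its own function means the calculation function above stays focused on the `/process/` POST, and each one can be mapped to its own path in `netlify.toml`.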

# netlify.toml

To wire up the Edge Functions, we put a `netlify.toml` file in the root of the project. Configuration is pretty straightforward: you tell it which Edge Function you want to use and the path to associate it with. You can choose to associate it with a unique path or run the Edge Function on every path.

Here’s an excerpt from Tipr’s `netlify.toml` as it pertains to the Edge Function above:

```toml
[[edge_functions]]
function = "tipr"
path = "/process/"
```

This tells Netlify to route requests to /process/ through netlify/edge-functions/tipr.js. Then all that was left to do was wire up the form to use that endpoint as its action:

```html
<form id="calc" method="post" action="/process/">
```

# Isomorphic Edges

It took a fair bit of time to figure this all out, but I’m pretty excited by the possibilities of this approach for building more static isomorphic apps. Oh, and the new site… is fast.

¹ Why a palindrome? Well, it makes it pretty easy to detect tip fraud, because every total Tipr produces reads the same forwards and backwards. It’s a little easier than a checksum.

December 13, 2022

303 Creative LLC v. Elenis is Incredibly Problematic

Before I get into this, a preface: I am not a legal expert by any means. I never even watched Law & Order. That said, I am keenly interested in the law and how it relates to bias and discrimination, particularly where that intersects with technology and, especially, the web.

Which brings me to the subject at hand: 303 Creative LLC v. Elenis. The other day, I tweeted about this case, which is currently before the Supreme Court of the United States, but I felt I owed it a lengthier (and perhaps more enduring) discussion. So here goes…

# The case, in a nutshell

Lorie Smith, a web designer operating as 303 Creative LLC, is interested in getting into the wedding announcement website game. She does not believe same-sex couples should be able to marry, so she wants to put a notice on her website to that effect, stating that she will not create wedding announcement websites for same-sex weddings. Doing so would violate Colorado’s anti-discrimination law (some of you may recall it from Masterpiece Cakeshop v. Colorado Civil Rights Commission), which prevents businesses open to the public from discriminating against gay people, who are a “protected class” in legal speak.

Smith contends that her web design work is her “expression” as an “artist” and that the First Amendment protects her right to that expression. What a lot of the coverage fails to mention, however, is that

- she does not currently offer wedding announcement website services, and
- no same-sex couples have requested her services in creating a wedding announcement website.

In other words, this case is not based on actual events, but rather on hypotheticals. Additionally, there has been no injury on either side, just the potential for one. Anyway, if you’re interested in learning more about the case, you can check out the following:

- 303 Creative LLC v. Elenis on Wikipedia
- Court Docket at the Supreme Court
- Oral Arguments before the Supreme Court
- Strict Scrutiny podcast episode following oral arguments
- Boom! Lawyered podcast episode following oral arguments

In particular, I highly recommend listening to Justices Ketanji Brown Jackson’s and Sonia Sotomayor’s contributions during oral arguments, as they really cut through the bullshit and get to the heart of the case and its implications.

# Fact: Design ≠ Art

This is something I talked about way back in my 2013 talk “Designing With Empathy”: Design is not art. Art is self-expression and serves the artist; design serves someone else (typically the client or their audience). If you don’t work in the industry, however, this distinction isn’t always clear. To quote Jeff Veen:

> I’ve been amazed at how often those outside the discipline of design assume that what designers do is decoration. Good design is problem solving.

Design is not the creation of pretty pictures and decoration. Design serves a purpose. In fact, the term “design” originated in Medieval Latin as designare, which meant “to mark out” (hence the related term designate). To design is “to devise for a specific function or end.” To practice “web design” is to use the tools of graphic design to achieve the purpose of the project.

In the context in which 303 Creative LLC seeks to operate, the purpose of each project would be to announce and provide the details about a wedding. 303 Creative LLC seeks to provide these services in exchange for money, at the behest of a client. It is not artistic expression any way you slice it.

# Fact: This Case is About Advertising Bigotry

If you’ve run any sort of service business, you’ve likely come across clients and projects you had to turn away. Sometimes you don’t have the bandwidth to take on the project. Other times you may not be interested in the kind of work it entails. Still other times, you might not have the right expertise to do the project justice. And sometimes you just get a sense that the potential client is not someone you’d work well with (perhaps based on the tone of their inquiry). Regardless of the reason, you can gently explain that you cannot do the project for them and either leave it at that or recommend someone who might be able to help.

In the case of 303 Creative LLC, Smith could easily have used this approach to turn away same-sex couples without making it a thing. She could even have a form email prepared for this very purpose! And unless several couples approached her at roughly the same time and got wildly different responses about her ability to create them a wedding website (which, to reiterate, is not a service she currently offers), no one would be any the wiser about her belief that same-sex marriage doesn’t (or shouldn’t) exist.

But no, that’s not the route Ms. Smith and 303 Creative LLC want to take. Instead, she would like to be able to express her “firmly held religious belief” that same-sex marriages should not happen and to put a notice on her website explicitly saying she refuses to create a website for a same-sex wedding. She wants to put her bigotry on full display and she doesn’t think she should suffer any legal consequences for doing so.

# Clarification: Who qualifies as “Protected”?

Over on Mastodon, I was asked to clarify whether the law allows you to refuse to work for a particular individual or corporation. For example, could a web designer refuse to do work for Chick-fil-A on account of its anti-LGBTQIA+ positions (a stance I think they’ve reversed, but I don’t eat there, so I’m not sure)? A similar question came up in oral arguments, framed as a speechwriter’s ability to refuse to write a speech for a political candidate they disagree with. Public accommodations law, which is what is being considered in this case, would not require you to work on any project for anyone, as long as the reason you refuse service is not their membership in a protected class.

Corporations are not a protected class. Neither are politicians. Same-sex couples are protected from discrimination under both the Equal Pay Act of 1963 and the Civil Rights Act of 1964.

# Potential Fallout

If the conservative majority on the Court decides to ignore all of the facts in this case and rule in favor of 303 Creative LLC, that decision will open the floodgates for discrimination against people based on their protected status by anyone who claims to have a religious objection to treating them respectfully.

For example, people with disabilities are a protected class under the Americans with Disabilities Act. If this ruling goes in 303 Creative LLC’s favor, a business owner could claim eugenics as a “firmly held religious view” and refuse to provide accommodations for them. On the web, that could mean intentionally making a site inaccessible to people who use screen readers. In the physical world, it could mean making entry to a business impossible for anyone using a wheelchair.

It might take a little time, but we’d likely end up in another “Jim Crow”-like era where restaurants are once again free to adorn their windows with “Whites Only” signs. Where the grocery store hangs a “Christians Only” sign on its door. Where the local bank proudly announces that only “Heterosexual Evangelical Christian Women” can apply for an open teller position. Where only women under 25 can date Leonardo DiCaprio… wait.

Instead of embracing our differences as a complement to one another and for the betterment of our society, condoning this would further drive us apart and foster a world of exclusion. People could use their religion to mask their bigotry and claim exemption from having to provide equal access to people based on their disabilities, gender (or gender expression), sexual orientation, racial characteristics, religion, age, or any other protected category. That’s not a world I want to live in, nor is it a future I want for my kid.

If it comes to pass, I suppose the one silver lining is that we’ll learn which companies deserve our business, but that hardly outweighs the potential harms for people who need access to food, clothing, shelter, information, and other necessities for existence, both online and off, that are supposed to be guaranteed by anti-discrimination laws.

It’s all in the Supreme Court’s hands at this point, but I am more than a little concerned with what this could mean for the future here in the United States.

October 12, 2022

Representation in `alt` text

Inclusion can take many forms.

Throughout my career, when writing alt text for photos with people in them, I’ve often defaulted to mentioning the people but rarely things like their gender, skin tone, and the like. Often this was because I thought about people as just, well, people. Their individual characteristics were almost always immaterial to the primary purpose of describing the photo. If their difference served a purpose in the context, I included it, but that was my line.

In reading this piece from Léonie, however, I realized that I was approaching my image labeling from a very privileged position. I didn’t include information about these individuals because I am privileged. In my life experience, my gender, race, orientation, etc. seemed (to me) unimportant because these attributes are highly valued by the society I exist in. Because I didn’t see the value of my own attributes, I could sweep away other people’s differences and focus on their human-ness. And that can seem like a good thing, but it’s insidious. Like exclaiming “I don’t see color,” it erases people and their many intersecting identities.

By including information about people’s physical attributes in the alt text we author, we can both normalize and embrace our differences. We can celebrate diversity and promote representation.

To describe a photo as “Doctor Smith handing a file to a colleague” is not nearly as meaningful as “Doctor Smith, a Black woman, handing a file to her office manager, Jenifer Jones, a Filipino woman who uses a wheelchair.” If I were blind, I could learn a lot of useful information about the medical practice from this extra bit of detail. Taking it further, if I were Black, I might feel more comfortable seeing Doctor Smith, knowing she was Black as well (especially given the unequal treatment Black folks receive in the U.S. medical system). If I use a support cane to get around, knowing the office manager uses a wheelchair tells me a good portion of the office is likely to offer generous pathways for me to walk around.

So I’m going to take Léonie’s advice to heart and work on making my alt text more inclusive. The one area where I will exercise restraint in my descriptions, however, is assigning folks a gender identity. Unless I have explicit knowledge of how they identify, that’s something best left to them to share.


October 7, 2022

Thoughts on the proposed Websites and Software Applications Accessibility Act

Last month, Senator Tammy Duckworth (D-Ill.) and Representative John P. Sarbanes (D-Md.) introduced the Websites and Software Applications Accessibility Act (I’m gonna call it the WS3A for short) simultaneously in the U.S. Senate (S. 4998) and House of Representatives (H.R. 9021). The bill would explicitly bring websites, along with other forms of digital media that didn’t exist when the Americans with Disabilities Act (ADA) was signed into law, into the purview of the ADA. I am definitely in favor of this effort, as it removes the ambiguity that currently exists in U.S. law as to whether websites are governed by the ADA. The WS3A is a reasonable framework, but there will still be a lot of work to do once it (hopefully) passes.

On reading the text of the WS3A, I really appreciated the thought they put into the way it’s structured. In a nutshell, it

- Affirms that the ADA prohibits discrimination against people with disabilities in their use of websites.
- Tasks the Department of Justice (DOJ) and the Equal Employment Opportunity Commission (EEOC) with establishing, updating, and enforcing accessibility standards for websites and applications.
- Sets up a committee to work with these government offices to establish the rules that govern digital accessibility. It also establishes a central repository (and governing committee) to provide guidance on how to achieve compliance. Both committees must include representatives from numerous named disability communities as well as digital accessibility experts.
- Identifies who needs to abide by these standards (e.g., the government, employers, commercial entities).
- Establishes the legal grounding for litigation (from both the government and citizens) of violations of the established standards.

There’s more to it than that, but it’s a reasonable summary. I suggest reading Senator Duckworth’s summary doc for more detail as well.

# We don’t all need “the same” experience

In Section 3 of the WS3A, part of the definition of “accessibility” is the requirement for people with disabilities to be able “to engage in the same interactions as” people without disabilities. This sounds good at first blush, but I think “the same” could be misleading.

A number of years back, I was consulting with a financial services firm. Their web team was quite interested in putting accessibility into practice on their website after spending a few days talking about it with me. When I circled back a few months later, things weren’t going so well. The team that was focused on accessibility was two sprints behind “because of accessibility” and management was ready to give up on it. And so I asked them to walk me through what was going on.

The problem was an interactive graph they had built for a page in their company’s marketing site. It allowed users to see how much they’d save in fees over a certain number of years based on an initial investment amount that could be adjusted via a slider control. The slider allowed the initial investment amount to range from a low of $5,000 to something like $5,000,000 in increments of $5,000. They had run into all sorts of delays in getting the slider to work identically for people using either a mouse (the default they’d considered) or a keyboard.

After getting the details, I had them take a step back and look at the big picture. I asked them to consider the goal of the interface: to help people understand that the more they invest with this company, the more they will save in fees. Then I asked them if the keyboard experience of that slider was a good one. It wasn’t; no one is going to move the slider potentially several hundred times to get to the exact amount they’re considering investing, nor should they have to. So I asked them to reconsider their approach and come up with other ways to achieve the goal. After all, this was for a marketing site; they weren’t displaying someone’s actual account information, where they would need to be more exacting in their approach.

They realized the same goal could be achieved in two ways. First, they could ensure the copy that came before the visualization offered the same information in textual form. Second, they could simplify the slider interface to have a set number of stops for keyboard users, giving them a sense of how things would change without making the interface tedious to use. These were both excellent alternatives and provided a better (some might say more accessible) experience for folks who relied on a keyboard to both navigate and interact with the web.
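To make the second idea concrete, here is a minimal sketch of keyboard handling that jumps between a handful of preset stops instead of $5,000 increments. The stop values and the `nextStop` name are illustrative assumptions, not the firm’s actual implementation.

```js
// Hypothetical sketch: preset stops for keyboard users. The values are
// illustrative; the real slider ranged from $5,000 to roughly $5,000,000.
const STOPS = [5000, 25000, 100000, 500000, 1000000, 5000000];

function nextStop(current, key) {
  switch (key) {
    case "ArrowRight":
    case "ArrowUp": {
      // First stop above the current value, or stay at the top stop
      const higher = STOPS.find((stop) => stop > current);
      return higher === undefined ? STOPS[STOPS.length - 1] : higher;
    }
    case "ArrowLeft":
    case "ArrowDown": {
      // Last stop below the current value, or stay at the bottom stop
      const lower = STOPS.filter((stop) => stop < current);
      return lower.length > 0 ? lower[lower.length - 1] : STOPS[0];
    }
    case "Home":
      return STOPS[0];
    case "End":
      return STOPS[STOPS.length - 1];
    default:
      // Any other key leaves the value alone
      return current;
  }
}
```

In the browser, a `keydown` listener on the slider could call `nextStop(slider.valueAsNumber, event.key)`, assign the result back to the slider, and call `event.preventDefault()` for the keys it handles so the native single-step behavior doesn’t also fire. Mouse users keep the full-precision slider, while keyboard users get a handful of meaningful jumps.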

When it comes to accessibility, it’s easy to get hung up on trying to provide an identical experience when that isn’t always the best thing. Everyone should be granted the opportunity to accomplish the core goal of an interface, but they don’t necessarily need to do it in the same way. If we get too caught up in providing the same experience, it’s easy to miss out on providing the right experience.

So, in reflecting on the WS3A’s definition of accessibility, I would prefer to see a little more nuance. I did notice that later, in the Rulemaking section, they use the phrase “equally effective experiences for users with disabilities and users without disabilities.” That’s a much better goal here. The same opportunities need to exist; parity is important, but not everyone requires the same accommodations.

This applies in other contexts too. For example, some images are informational, others are decorative; a strict reading of a requirement for “the same” experience might lead folks to believe all images require descriptions, which they don’t. Similarly, users need to have control over how they receive information. They need to be able to adjust font sizes, stop things from moving around on the screen, change the colors of the interface to improve readability, eliminate distractions, and so on. We need to be building interfaces that can adapt to serve our users’ individualized needs across a wide range of intersections encompassing their own capabilities and disabilities as well as those of their device, their network, and the influence of their environment. (Insert shameless plug for my book here.)

# We need to better define an “undue burden”

The WS3A provides two pathways for an entity to sidestep its obligations under the ADA. One seems pretty reasonable: if compliance would “fundamentally alter the nature” of the entity’s offerings, it can be excused from having to comply. The other, however, creates a huge loophole: if compliance would “impose an undue burden” on the entity in violation.

What exactly is an “undue burden”? According to the ADA, it means “significant difficulty or expense,” which is determined in consideration of:

- The nature and cost of the action needed under this part;
- The overall financial resources of the site or sites involved in the action; the number of persons employed at the site; the effect on expenses and resources; legitimate safety requirements that are necessary for safe operation, including crime prevention measures; or the impact otherwise of the action upon the operation of the site;
- The geographic separateness, and the administrative or fiscal relationship of the site or sites in question to any parent corporation or entity;
- If applicable, the overall financial resources of any parent corporation or entity; the overall size of the parent corporation or entity with respect to the number of its employees; the number, type, and location of its facilities; and
- If applicable, the type of operation or operations of any parent corporation or entity, including the composition, structure, and functions of the workforce of the parent corporation or entity.

The math here is not clear-cut, especially when we’re talking about digital products as opposed to physical structures, and I could see a number of corporations declaring something an “undue burden” when, in fact, it is relatively easy to address. It would be nice to see some guidance around what is considered a regular “cost of doing accessible business,” in terms of percentage of operating revenue or percentage of staff dedicated to identifying and remediating accessibility issues.

# Other things I hope to see addressed

Below are some additional questions and thoughts I think the commissions operating under the WS3A should seek to address:

- If we agree accessibility is a journey, not a destination (i.e., we’re never “done”), how can we declare something is “accessible” (or not)? It feels like the Web Content Accessibility Guidelines (WCAG) offer some solid guidance, and they will likely set the bar for this effort, especially given that Section 508 of the Rehabilitation Act relies on that set of guidelines as well. I’m encouraged by their recognition that ongoing evaluation of the guidance is needed, but it still presents challenges around how we define “accessible enough.” Additionally, digital products are different because they are easily changed, updated, and improved (as opposed to, say, a giant set of concrete stairs).
- We don’t really have clarity around the mechanisms for informing non-compliant entities of their violations. It’s also unclear whether there is a reasonable grace period for them to remediate the issue and report back for validation. Having a grace period to address issues will also be key for heading litigation trolls off at the pass.
- We need some guidance around how much of a disruption is grounds for a formal complaint. Many organizations ship code pretty much constantly and may introduce an accessibility bug and then address it within a few minutes, hours, or days. Again, a grace period starting when the entity is made aware of the violation would be key for addressing this.
- Third-party code is not addressed at all in the WS3A. Whose responsibility is it? A huge percentage of the web (and of other software projects) is built using open source and commercial codebases that the builders don’t own or control. If an entity tests a piece of third-party code and determines that it meets their obligations under the ADA, and then an update to that code introduces an accessibility bug they are unaware of, who is at fault and who is responsible for the remediation? The process seems a little more straightforward with open source code, provided the library maintainer is open to contributions that improve accessibility, but commercial code is a whole other thing.

On the whole, I really appreciate what Senator Duckworth and Representative Sarbanes (and their staff and partners) are trying to do here. I hope it passes and look forward to keeping tabs on the work of the various commissions tasked with providing the necessary guidance and resources that will lead to a more inclusive web.


October 3, 2022

Apply Now for My 2023 Mentorship Cohort

Are you a web professional (or aspiring web professional) who is looking for career guidance and opportunities? Consider applying for my 2023 mentorship cohort.

Before you apply, I highly recommend reading up on my mentorship program to see if it will be a good fit for you.

The application will remain open through November 30th.

September 19, 2022

Spellcheckers exfiltrating PII… not so fast

A recent post from the Otto JS research team highlighted how spellcheck services can inadvertently exfiltrate sensitive user data, including passwords, from your site. To be honest, I found the post a tad alarmist and lacking when it came to recommending solid protections. Consider this your no-nonsense guide to protecting your users' sensitive information.

# Background

In their research, the Otto JS team found that Chrome and Edge each use their own web services to drive their spellchecking features. In Chrome's case, this seems to happen only with its "advanced spellcheck" feature turned on. I was able to validate this behavior using Charles on macOS Monterey with the latest Chrome Stable and the latest Edge Canary. I did not see the same exfiltration happen with Safari or Firefox. I created a CodePen form with several fields to test different permutations of form field attributes and behaviors. What I'm sharing below is the result of that testing.

# What gets sent?

In both Chrome and Edge, the information sent to their services is the text value itself, disconnected from any specific field name. Chrome also sends the user's language and country. Edge, which uses the Microsoft Editor service under the hood, also sends a bunch of licensing details and the user's language.

# Password fields are safe

```html
<input type="password" id="password"
       name="password">
```

Browsers do a lot to protect the contents of password fields already, so I wasn't surprised to see that the contents of password fields are not passed to a spellcheck service.

If you are not using a true password field, however, the contents of that field are not protected in the same way. If you build a custom password control, you're on the hook to replicate the entirety of the browser's feature set when it comes to protecting user data. Unless you're a glutton for punishment, you should probably just use the built-in password field instead.

# Fields marked readonly and disabled are not exposed

As you'd hope, neither read-only fields nor disabled fields are exposed to the service. This even holds true when you change the values of these fields programmatically or via DevTools.

I should note, however, that readonly fields are sent to the server when the form is submitted and their contents are editable via JavaScript and DevTools, so you should always assume readonly fields are informational for the user only and never trust their contents on the server side. Fields that are marked disabled, in contrast, are never sent to the server.
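If the server needs a value that was shown in a readonly field, one approach is to ignore what was submitted and substitute the value it already trusts. Here's a minimal Node sketch, assuming the request body has already been parsed into a plain object; `mergeTrustedFields()`, `account_id`, and the shape of the trusted data are all hypothetical:

```javascript
// Hypothetical server-side helper: start from the submitted form body but
// overwrite every field the page rendered as readonly with the server's own
// copy, since users can edit readonly values via DevTools or JavaScript.
function mergeTrustedFields(submitted, trusted, readonlyNames) {
  var result = Object.assign({}, submitted);

  readonlyNames.forEach(function (name) {
    if (Object.prototype.hasOwnProperty.call(trusted, name)) {
      result[name] = trusted[name];
    } else {
      // No trusted value on record: drop the client's version entirely
      delete result[name];
    }
  });

  return result;
}

console.log(mergeTrustedFields(
  { email: "me@example.com", account_id: "999" }, // account_id was tampered with
  { account_id: "42" },
  ["account_id"]
));
// → { email: 'me@example.com', account_id: '42' }
```

The allow-list of readonly names lives on the server, so a tampered request can't opt a field out of the override.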

# You can protect interactive fields with the spellcheck attribute

```html
<input id="no-spellcheck" name="no-spellcheck"
       spellcheck="false">
```

The spellcheck attribute can be applied to any element, and setting it to "false" instructs browsers to turn off spellchecking services for its contents. The post from Otto JS showed this being used globally on the body element, but that is overkill. It would be better to use the attribute on specific fields you want to protect, as shown above.

# Don't forget about password controls that support show/hide

Edge has a neat feature in its password field implementation where it enables a user to toggle the visibility of the password within the control itself. When users show their password using that built-in functionality, no content is shared with the spellchecker. Not all browsers have this feature, however, which has led to JavaScript-based implementations that simply swap the "password" type value for "text" to show the contents and then swap it back again to hide the contents. Here's a quick & dirty example of what the toggle button's event handler might look like:

```javascript
function togglePassword(e) {
  var $btn = e.target,
      // assumes the <input> shares a parent element with the toggle button
      $field = $btn && $btn.parentNode.querySelector("input");

  // Only read $field.type once we know the field exists
  if ($btn && $field) {
    if ($field.type === "password") {
      $field.type = "text";
      $btn.innerText = "Hide";
    } else {
      $field.type = "password";
      $btn.innerText = "Show";
    }

    e.preventDefault();
    e.stopPropagation();
  }
}
```

The problem with this approach, when it comes to the spellchecker, is that the text field is considered fair game for checking. Its contents aren't sent right away, but if the field receives focus or its value is changed in any way while in the "text" state, its contents are sent to the spellcheck service.

Protecting the field's contents is fairly easy, however: turn off the spellchecker for that field, even in its "password" state.

```html
<input type="password" id="password-show-nospellcheck"
       name="password-show-nospellcheck"
       spellcheck="false">
```

With spellcheck="false" in place, you can turn the field into a text field safely, without the contents being exposed to the spellcheck service.
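As a safety net for templates that forget the attribute, the toggle itself can switch spellcheck off before revealing the value. This is a sketch with a hypothetical `toggleVisibility()` helper; it only touches the standard `type` and `spellcheck` properties of the input and the button's `textContent`, so it can also be exercised with plain objects:

```javascript
// Hypothetical helper: flip a password field between "password" and "text",
// switching spellcheck off first so the revealed value is never eligible
// for a remote spellcheck service.
function toggleVisibility(field, button) {
  field.spellcheck = false; // on a real <input>, this sets spellcheck="false"

  var showing = field.type === "text";
  field.type = showing ? "password" : "text";
  button.textContent = showing ? "Show" : "Hide";

  return field.type;
}

// In a page, you might wire it up like this (assuming the button and the
// input share a parent element, as in the handler above):
// button.addEventListener("click", function (e) {
//   e.preventDefault();
//   toggleVisibility(button.parentNode.querySelector("input"), button);
// });
```

Setting the attribute in the markup is still the better first line of defense, since it covers the field before any script runs.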

HTML is a pretty powerful language and, when wielded properly, can be an excellent tool for protecting user data. And now that you know how to protect your users' sensitive data, minimize this tab and start filing those pull requests…


August 31, 2022

Salvaging linkrot with the Wayback Machine

While making some updates to the site, I did a 404 scan of my link blog and the results were… less than awesome. So I decided to work some Eleventy magic to recover from them.

# Step 1: Log the 404s to a file

I make ample use of Eleventy's global data files, but 404s didn't feel like something I needed to have as part of the data cascade. Instead, I'm logging them to a YAML file in my ./_cache folder. For simplicity, they get logged like this:

```yaml
https://path.to/original/page/that-is...
```

I chose YAML as it's about as bare-bones as you can get when it comes to file formats and is pretty easy to work with in the context of Eleventy.
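The logging code itself isn't shown here, so what follows is only one possible sketch. It assumes the 404 scan hands you URLs one at a time and that the file is a YAML map of `"URL": true` entries; both the format and the `log404()` name are hypothetical. It leans on the fact that, for typical URLs, a JSON-quoted string is also a valid YAML double-quoted key:

```javascript
const fs = require("fs");

// Hypothetical logger: append a URL to a YAML map of `"URL": true` entries
// unless it's already there. JSON.stringify() produces a double-quoted
// string, which YAML also accepts as a mapping key.
function log404(file, url) {
  const entry = JSON.stringify(url) + ": true";
  const existing = fs.existsSync(file) ? fs.readFileSync(file, "utf8") : "";

  // Compare whole lines so one URL can't match inside another
  if (existing.split("\n").includes(entry)) {
    return false; // already logged
  }

  fs.appendFileSync(file, entry + "\n");
  return true;
}
```

Skipping duplicates keeps the file from growing every time the scan runs.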

# Step 2: Add an Eleventy data file to my links folder

If you're not familiar, Eleventy allows you to create directory-level data files that can be used to augment file-level data. I was originally using it to define the layout and permalink front matter variables for all the links using the JSON option, but as a JavaScript file, directory-level data becomes even more powerful.

Setting up your data file is relatively straightforward using module.exports:

```javascript
module.exports = {
  layout: "layouts/link.html",
  permalink: "/notebook/{{ page.filePathStem }}/",
  eleventyComputed: {
    custom_property: (data) => {
      return some_value_based_on_data;
    }
  }
};
```

Here I'm defining two static values (layout and permalink) and a computed value (the hypothetical custom_property).

# Step 3: Consult the 404 log

As I mentioned, the 404 logging happens separately and results in updates to _cache/404s.yml. To make use of all this in the Eleventy data file, I need to set up a few things at the top of the file:

```javascript
const fs = require('fs');
const yaml = require('js-yaml');

// Read the log as UTF-8 text and parse it into a plain object
const cached404s = yaml.load(fs.readFileSync('_cache/404s.yml', 'utf8'));
```

Here I'm bringing in Node's File System and JS-YAML. Then I am loading the YAML file into memory as cached404s, leveraging those utilities.

Next up is defining a helper function to search cached404s for a match:

```javascript
function is404ing(url) {
  return (url in cached404s);
}
```

This function takes the URL as an argument and returns true or false. Making use of this in the eleventyComputed section is straightforward:

```javascript
module.exports = {
  layout: "layouts/link.html",
  permalink: "/notebook/{{ page.filePathStem }}/",
  eleventyComputed: {
    is_404: (data) => {
      return is404ing(data.ref_url);
    }
  }
};
```

In my case, ref_url is the front matter field storing the URL I'm linking to from my link blog, so I return the value of passing that to is404ing() as is_404.
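One caveat: `url in cached404s` assumes the YAML parses to an object keyed by URL. If the file is empty, `yaml.load()` can return `undefined`, and `in` throws on a non-object; if the file is a YAML list, `in` checks array indices rather than URLs. A more defensive variant, sketched with a hypothetical `make404Checker()` factory, handles those shapes:

```javascript
// Hypothetical factory: build an is404ing()-style lookup from whatever
// yaml.load() returned for the 404 log.
function make404Checker(cache) {
  if (Array.isArray(cache)) {
    // YAML list of URLs: check membership by value, not by index
    const urls = new Set(cache);
    return (url) => urls.has(url);
  }
  if (cache && typeof cache === "object") {
    // YAML map keyed by URL: check own keys only, ignoring the prototype chain
    return (url) => Object.prototype.hasOwnProperty.call(cache, url);
  }
  // Empty or missing log: nothing is known to be 404-ing
  return () => false;
}

// e.g. const is404ing = make404Checker(cached404s);
```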

# Step 4: Lean on the Wayback Machine

The next thing I want to do is generate a link that has a good chance of working for my readers. Thankfully, the Wayback Machine has a predictable URL structure for entries, and it's pretty good about handling redirects to the most temporally proximate snapshot when you give it a date to work from. Knowing that, I set up another helper function:

```javascript
function archived(data) {
  let archive_url = 'https://web.archive.org/web/{{ DATE }}/{{ URL }}';

  // getUTCMonth() is zero-based, so add 1
  let month = data.date.getUTCMonth() + 1;
  month = month < 10 ? "0" + month : month;

  // getUTCDate() is the day of the month (getDay() is the day of the week)
  let day = data.date.getUTCDate();
  day = day < 10 ? "0" + day : day;

  archive_url = archive_url
    .replace('{{ DATE }}', `${data.date.getUTCFullYear()}${month}${day}`)
    .replace('{{ URL }}', data.ref_url);

  return archive_url;
}
```

Note: I know this isn't the most elegant/efficient code; I wanted to show step-by-step what's happening here.

This function takes the data object as an argument and composes a URL that points to a snapshot of the given page (data.ref_url) at the time I saved the link (data.date). The data.date value is already a JavaScript date, so it's pretty easy to turn it into the format the Wayback Machine expects (YYYYMMDD). In the end, this method returns a URL that looks something like this:

https://web.archive.org/web/20150102/http://andregarzia.com/posts/en/whatsappdoesntunderstandtheweb/
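Since data.date is already a Date, a more compact way to get the same YYYYMMDD stamp is to slice it out of `toISOString()`, which is always expressed in UTC. Here's a sketch of that alternative; `waybackUrl()` is a hypothetical name, and you'd call it as `waybackUrl(data.date, data.ref_url)`:

```javascript
// Hypothetical alternative to archived(): takes the link's date and URL
// directly and builds the Wayback Machine address.
function waybackUrl(date, url) {
  // toISOString() is always UTC ("2015-01-02T00:00:00.000Z"), so the first
  // ten characters minus the hyphens give the YYYYMMDD timestamp.
  const stamp = date.toISOString().slice(0, 10).replace(/-/g, "");
  return "https://web.archive.org/web/" + stamp + "/" + url;
}

console.log(waybackUrl(
  new Date(Date.UTC(2015, 0, 2)),
  "http://andregarzia.com/posts/en/whatsappdoesntunderstandtheweb/"
));
// → https://web.archive.org/web/20150102/http://andregarzia.com/posts/en/whatsappdoesntunderstandtheweb/
```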

With that helper in place, I can make use of it within eleventyComputed:

```javascript
module.exports = {
  layout: "layouts/link.html",
  permalink: "/notebook/{{ page.filePathStem }}/",
  eleventyComputed: {
    is_404: (data) => {
      return is404ing(data.ref_url);
    },
    archived: (data) => {
      return is404ing(data.ref_url) ? archived(data) : false;
    }
  }
};
```

Now every link in my link blog will have an is_404 value that is true or false and an archived value that is either a valid Wayback Machine URL (if the page is 404-ing) or false.

# Step 5: Using these in my template

I use Nunjucks for most of my site's templating, but you can make use of these computed properties in any supported templating language. Knowing if a linked URL is 404-ing allows me to

- display the title without a link,
- display the source without a link, and
- provide additional copy about the link's 404 status and provide the Wayback Machine link instead.

I am only going to share code with you for that final bit, as it should give you enough of a sense of how you can use these properties in the other contexts too.

```html
{% if is_404 %}
<p>This link is 404-ing{% if archived %}, but
  <a rel="bookmark" href="{{ archived }}">you can view an
  archived version on the Wayback Machine</a>{% endif %}.
</p>
{% endif %}
```

Here you can see I am injecting a notice into the markup when the entry is 404-ing. Within it, I note the link's status. Then I inject some additional text to point to the Wayback Machine's archive of the page. It's worth noting that I am being overly cautious here and only injecting the link if archived is truthy. This will ensure that the link won't be shown if something fails in my code or I change how I am implementing the archived property.
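If you template in JavaScript instead (Eleventy also supports JavaScript templates), the same conditional notice could be written as a plain function. This is a hypothetical `renderLinkStatus()` helper, not part of my setup; it takes the same is_404 and archived values and escapes the URL before dropping it into the `href` attribute:

```javascript
// Hypothetical helper: escape a value for use inside a double-quoted attribute
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Hypothetical JS equivalent of the Nunjucks snippet above
function renderLinkStatus(data) {
  if (!data.is_404) {
    return "";
  }
  const archive = data.archived
    ? ', but <a rel="bookmark" href="' + escapeAttr(data.archived) +
      '">you can view an archived version on the Wayback Machine</a>'
    : "";
  return "<p>This link is 404-ing" + archive + ".</p>";
}
```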

# Crossing my fingers

Relying on an unverified URL, even one at the Wayback Machine, is risky, but so far this approach seems to be working. If you've got a link blog suffering from link rot, you might consider setting up something similar. Hopefully this will help jumpstart that project for you.

August 22, 2022

Bring Focus to the First Form Field with an Error

While filling out a long form the other day, I couldn't figure out why it wasn't submitting. Turns out I'd forgotten to fill in a field, but I didn't know that because it had scrolled out of the viewport. This is a common problem on the web, but easily remedied with a little bit of JavaScript.

# Step 1: Make the Most of Your Markup

Whenever you're building a form, you should use every tool in your toolbox for building that form properly. That means:

- Associate labels with their fields
- Use specialized input types (e.g., email, URL) as appropriate
- Identify required fields with required and aria-required
- If you're expecting a field to match a particular format, note that with pattern and provide an example with placeholder
- Additional information about a field should be bound to it using aria-describedby

By following these steps, you're likely already in a good place when it comes to your form's UX and accessibility. Browsers will pay attention to the instructions in the markup, validate the user's input, and even provide guidance on how to correct issues without you having to lift a finger. They'll even focus the first invalid field on your behalf!

But what if you want to customize the experience?

# Step 2: Enhanced Browser Validation

When you use proper markup for your forms, enhancing them with JavaScript becomes straightforward, thanks to the Constraint Validation API. Consider the following form field:

```html
<input id="name" name="name"
       required
       aria-required="true"
>
```

Using JavaScript, we can check its validity at any point:

```javascript
document.getElementById("name").validity.valid; // Is either true or false
```

There are also a host of other properties on the field's validity property that provide even more detail as to the state of the field's validity (hence the name: ValidityState). In the case of the field above, if valueMissing is true (relating back to that required attribute), valid is false.

Armed with that knowledge, you could use another feature of the Constraint Validation API to show a custom error message in the browser UI:

```javascript
var input = document.getElementById("name");

if (!input.validity.valid) {
  input.setCustomValidity("Please enter your name");
  input.reportValidity();
}
```

The thing is, you don't really want to litter your JavaScript with strings like that. It's not maintainable. Thankfully, we can leverage markup to achieve the same goal:

```html
<input id="name" name="name"
       required
       aria-required="true"
       data-error-required="Please enter your name"
>
```

The input in this example has a data attribute containing the error string (data-error-required). We can create whatever data attributes we want and access them using that element's dataset property. It's worth noting that hyphenated property names become camelCase when accessed as a named property of dataset.
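To make that name mapping concrete, here's a small sketch of the rule the DOM applies when exposing `data-*` attributes on `dataset`: drop the `data-` prefix, then remove each hyphen that's followed by a lowercase ASCII letter and uppercase that letter. `datasetKey()` is a hypothetical helper for illustration only:

```javascript
// Hypothetical helper mirroring how a data-* attribute name becomes a
// dataset key (assumes the name starts with "data-").
function datasetKey(attributeName) {
  return attributeName
    .slice("data-".length)
    .replace(/-([a-z])/g, function (match, letter) {
      return letter.toUpperCase();
    });
}

console.log(datasetKey("data-error-required")); // "errorRequired"
console.log(datasetKey("data-error-invalid"));  // "errorInvalid"
```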

```javascript
var input = document.getElementById("name");

if (!input.validity.valid) {
  input.setCustomValidity(input.dataset.errorRequired);
  input.reportValidity();
}
```

With that, we get the same result with far looser coupling between individual fields and the JavaScript handling validation.

You can even extend the approach to handle different kinds of errors:

```html
<input type="email" id="email"
       name="email"
       required
       aria-required="true"
       data-error-required="Please enter your email"
       data-error-invalid="Your email doesn't look right"
>
```

Here I'm using two different data attributes to apply in different error scenarios, and the one I show will depend on the type of error a user has encountered. Pretty cool!
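The choice between those messages can be kept out of the DOM code entirely. Here's a sketch that takes a field's `validity` (a ValidityState) and `dataset` and picks a string; `errorMessageFor()` is hypothetical, as is the decision to treat `typeMismatch` and `patternMismatch` as the "invalid" case:

```javascript
// Hypothetical helper: choose a custom error string from a field's
// ValidityState and its data-error-* attributes (exposed via dataset).
function errorMessageFor(validity, dataset) {
  if (validity.valid) {
    return "";
  }
  if (validity.valueMissing && dataset.errorRequired) {
    return dataset.errorRequired;
  }
  // Assumption: format problems share the data-error-invalid message
  if ((validity.typeMismatch || validity.patternMismatch) && dataset.errorInvalid) {
    return dataset.errorInvalid;
  }
  // An empty string clears any custom message, so the browser's own shows
  return "";
}

// In the browser:
// input.setCustomValidity(errorMessageFor(input.validity, input.dataset));
// input.reportValidity();
```

Returning an empty string when only a previous custom message is pending (the `customError` flag) means re-running the check after the user fixes the field clears the old message.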

And since we're still operating in the context of the browser's built-in validation experience, users will still get directed to the first field with an error when they submit the form.

But what if you want to bypass the built-in experience and go full custom?

# Step 3: Going Rogue

The built-in browser validation UI is pretty great, but maybe it's not your cup of tea. Thankfully, it's pretty easy to take the training wheels off: you just add a novalidate attribute to the form element. But before you go dropping that attribute in your markup, consider that your custom validation code will all be JavaScript, and if that fails to run (yes, it happens), the browser won't step in and fill the void. So instead of putting it in your markup, inject it into the form when you know absolutely all of the JavaScript code necessary to run your custom validation experience is loaded and ready to rock. In fact, it should be the last line of code to execute:

```javascript
// Validation Logic Definition
// including validateMe() function

document.querySelectorAll("form")
  .forEach(function ($form) {
    $form.addEventListener('submit', validateMe, false);
    $form.setAttribute('novalidate', '');
  });
```

With that in place, we can turn our attention to handling the validation setup.

For simplicity, and based on personal preference, I am going to start with setting up my form to validate when the form is submitted rather than whenever an individual field is changed (hence the "submit" event listener). When the event is fired, the event handler will loop through the fields in the form and validate each one. For the sake of the widest possible browser compatibility, I'm forgoing fat arrow functions and other ES2015 goodies and rockin' this old school.

```javascript
function validateMe(e) {
  var $form = e.target,
      i = 0,
      field_count = $form.elements.length,
      $first_error = false;

  for (i; i < field_count; i++) {
    var $field = $form.elements[i],
        valid = isValid($field);

    if (!$first_error && !valid) {
      $first_error = $field;
    }
  }

  if ($first_error) {
    e.preventDefault();
    $first_error.focus();
  }
}
```

First, I get a reference to the form that needs to be validated, then I set up the loop for each of the fields. I also set up a reference to the first field with an error (that is the point of the article, after all) as $first_error and set it to false.

Within the loop, I grab a reference to the field and pipe it into another function that will do the actual validation (isValid()). That function will return true if the field is valid and false if it isn't. The last bit of the loop checks to see if $first_error has been set already and, if it hasn't, sets it to the current field if it isn't valid.

Finally, I check to see if $first_error is set (i.e., truthy) and if it is, the form has an issue, so I prevent the default behavior of the submit event (using preventDefault()) and focus the field captured in $first_error.
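The "validate everything, remember the first failure" part of that loop can be pulled out into a DOM-free helper. In this sketch, `findFirstInvalid()` is hypothetical and takes any array-like list of fields (such as `$form.elements`) plus a validator like isValid():

```javascript
// Hypothetical helper: run isValid() on every field (so each one gets its
// inline error state) and return the first invalid field, or null.
function findFirstInvalid(fields, isValid) {
  var first = null;

  for (var i = 0; i < fields.length; i++) {
    var valid = isValid(fields[i]);
    if (!valid && first === null) {
      first = fields[i];
    }
  }

  return first;
}
```

A shortcut like `Array.prototype.find()` would be tempting, but it stops at the first failure, so later fields would never be validated or marked up with their errors.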

# Addendum: Associating Custom Error Messages

I don't want to get too into the weeds with my isValid() method, as you can see the end result at the bottom of this post, but I do want to take a moment to describe how you should be handling inline field errors.

First off, you will need to add aria-invalid="true" to any fields that have an error. Secondly, you will want to inject the error message into the markup, give its container an id, and associate it with the field using aria-errormessage. So when your JavaScript is done with it, your field will look something like this:

```html
<label for="name">What's Your Name?</label>
<input id="name"
       name="name"
       required
       aria-required="true"
       data-error-required="Please enter your name"
       aria-errormessage="name-validation-error"
       aria-invalid="true"
>
<strong class="form-validation-error"
        id="name-validation-error"
>Please enter your name</strong>
```

It's also worth noting that you'll want to reset and re-validate the form field once the user has changed the contents of the field (and hopefully remediated the error). Resetting the field will involve removing the aria-invalid attribute and doing one of two things with the error message: either remove it from the markup entirely or simply hide it (e.g., display: none). Once the aria-invalid state is reset, it doesn't matter that there is an existing aria-errormessage attribute. That said, I generally prefer to reset the field entirely, removing both attributes and the validation error element as well.
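The attribute bookkeeping for that mark-and-reset cycle is small enough to sketch. These helpers are hypothetical; they borrow the `-validation-error` id suffix from the example markup and only use `setAttribute()`, `removeAttribute()`, and `id`, so creating and removing the error element itself is left out:

```javascript
// Hypothetical helpers for the aria-invalid / aria-errormessage pairing.
function errorIdFor(field) {
  // Id convention taken from the example markup ("name-validation-error")
  return field.id + "-validation-error";
}

function markInvalid(field) {
  field.setAttribute("aria-invalid", "true");
  field.setAttribute("aria-errormessage", errorIdFor(field));
}

function resetField(field) {
  // Full reset: drop both attributes. In a page you'd also remove the
  // error element, e.g. document.getElementById(errorIdFor(field)).
  field.removeAttribute("aria-invalid");
  field.removeAttribute("aria-errormessage");
}
```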

Here you can see a complete working prototype of this approach:

Hopefully this helps you with the development of your own forms. Good luck!