Ramez Naam's Blog, page 4

August 10, 2015

How Cheap Can Solar Get? Very Cheap Indeed

I’ll tentatively attempt some projections here.

tl;dr: If current rates of improvement hold, solar will be incredibly cheap by the time it’s a substantial fraction of the world’s electricity supply.

Background: The Exponential Decline in Solar Module Costs

It’s now fairly common knowledge that the cost of solar modules is dropping exponentially. I helped publicize that fact in a 2011 Scientific American blog post asking “Does Moore’s Law Apply to Solar Cells?” The answer is that something like Moore’s Law, an exponential learning curve (albeit slower than in computing), applies. (For those who think Moore’s Law is a terrible analogy, here’s my post on why Moore’s Law is an excellent analogy for solar.)

Solar Electricity Cost, not Solar Module Cost, is Key

But module prices now make up less than half of the price of complete solar deployments at the utility scale. The bulk of the price of solar is so-called “soft costs” – the DC-to-AC inverter, the labor to install the panels, the glass and aluminum used to cover and prop them up, the interconnection to the grid, etc. Solar module costs are now just one component in a more important question: What’s the trend in cost reduction of solar electricity? And what does that predict for the future?

Let’s look at some data. Here are the prices of solar Power Purchase Agreements (PPAs) signed in the US over the last several years. PPAs are contracts to sell electricity, in this case from solar photovoltaic plants, at a pre-determined price. Most utility-scale solar installations happen with a PPA.

In the US, the price embedded in solar PPAs has dropped over the last 7-8 years from around $200 / MWh (or 20 cents / kwh) to a low of around $40 / MWh (or 4 cents per kwh).

The chart and data are from an excellent Lawrence Berkeley National Labs study, Is $50/MWh Solar for Real? Falling Project Prices and Rising Capacity Factors Drive Utility-Scale PV Toward Economic Competitiveness

This chart depicts a trend in time. The other way to look at this is by looking at the price of solar electricity vs how much has been installed. That’s a “learning rate” view, which draws on the observation that in industry after industry, each doubling of cumulative capacity tends to reduce prices by a predictable rate. In solar PV modules, the learning rate appears to be about 20%. In solar electricity generated from whole systems, we get the below:

This is a ~16% learning rate, meaning that every doubling of utility-scale solar capacity in the US leads to a roughly 16% reduction in the cost of electricity from new solar installations. If anything, the rate in recent years appears to be faster than 16%, but we’ll use 16% as an estimate of the long term rate.
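To make the learning-rate arithmetic concrete, here is a minimal Python sketch. It assumes the simplest form of a learning curve, in which price falls by a fixed fraction with each doubling of cumulative capacity. The function name and the sample capacity ratios are illustrative, not taken from the LBNL study.

```python
import math

def price_multiplier(capacity_ratio, learning_rate=0.16):
    """Fraction of the starting price left after cumulative capacity
    grows by `capacity_ratio`, assuming a constant learning rate."""
    doublings = math.log2(capacity_ratio)
    return (1.0 - learning_rate) ** doublings

# One doubling at a 16% learning rate leaves 84% of the starting price.
print(round(price_multiplier(2), 3))   # 0.84
# A tripling of capacity is about 1.58 doublings.
print(round(price_multiplier(3), 3))   # 0.759
# Four doublings (16x the capacity) roughly halve the price.
print(round(price_multiplier(16), 3))  # 0.498
```

Multiplying any starting price by the result gives the projected price at the larger scale, provided the learning rate really does stay constant.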

Every Industrial Product & Activity Gets Cheap

This phenomenon of lower prices as an industry scales is hardly unique to solar. For instance, here’s a view of the price of the Ford Model T as production scaled.

Like solar electricity (and a host of other products and activities), the Model T shows a steady decline in price (on a log scale) as manufacturing increased (also on a log scale).

The Future of Solar Prices – If Trends Hold

The most important question for solar is: what will future prices be? Any projection here has to be seen as just that – a projection. Not reality. History is filled with trends that reached their natural limits and stalled. Learning rates are a crude way to model the complexities involved in lowering costs. Things could deviate substantially from this trendline.

That said, if the trend in solar pricing holds, here’s what it shows for future solar prices, without subsidies, as a function of scale.

Again, these are unsubsidized prices, ranging from solar in extremely sunny areas (the gold line) to solar in more typical locations in the US, China, India, and Southern Europe (the green line).

What this graph shows is that, if solar electricity continues its current learning rate, by the time solar capacity triples to 600GW (by 2020 or 2021, as a rough estimate), we should see unsubsidized solar prices of roughly 4.5 c / kwh for very sunny places (the US southwest, the Middle East, Australia, parts of India, parts of Latin America), ranging up to 6.5 c / kwh for more moderately sunny areas (almost all of India, large swaths of the US and China, southern and central Europe, almost all of Latin America).

And beyond that, by the time solar scale has doubled 4 more times, to the equivalent of 16% of today’s electricity demand (and somewhat less of future demand), we should see solar at 3 cents per kwh in the sunniest areas, and 4.5 cents per kwh in moderately sunny areas.

If this holds, solar will cost less than half what new coal or natural gas electricity costs, even without factoring in the cost of air pollution and carbon pollution emitted by fossil fuel power plants.

As crazy as this projection sounds, it’s not unique. IEA, in one of its scenarios, projects 4 cent per kwh solar by mid century.

Fraunhofer ISE goes farther, predicting solar as cheap as 2 euro cents per kwh in the sunniest parts of Europe by 2050.

Obviously, quite a bit can happen between now and then. But the meta-observation is this: Electricity cost is now coupled to the ever-decreasing price of technology. That is profoundly deflationary. It’s profoundly disruptive to other electricity-generating technologies and businesses. And it’s good news for both people and the planet.

Is it good enough news? In the next few weeks I’ll look at the future prospects of wind, of energy storage, and, finally, at what parts of the decarbonization puzzle are missing.

—-

If you enjoyed this post, you might enjoy my book on innovating in energy, food, water, climate, and more: The Infinite Resource: The Power of Ideas on a Finite Planet

August 9, 2015

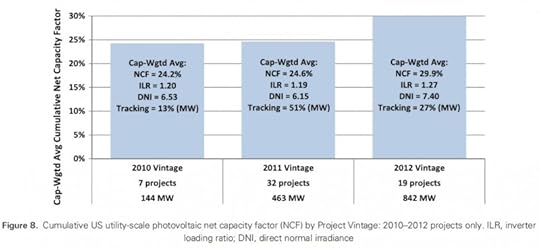

New Solar Capacity Factor in the US is Now ~30%

The capacity factor of new utility scale solar deployed in the US in 2010 was 24%. By 2012 it had risen to roughly 30%.

The rising capacity factor of new solar projects is part of why the cost of electricity from new solar is dropping faster than the installed cost per watt. Installing solar at a 30% capacity factor produces a quarter more electricity than the same number of watts of solar deployed at 24%. The rise in capacity factor effectively reduces the price of electricity by 20%.
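The arithmetic behind those two percentages can be checked in a few lines of Python. This is a sketch that assumes the installed cost per watt is identical in both cases and that nothing else changes. The function name is illustrative.

```python
def relative_cost_per_kwh(old_cf, new_cf):
    """Cost per kWh at the new capacity factor relative to the old one,
    assuming the same installed cost per watt."""
    return old_cf / new_cf

# Extra energy produced by the same number of watts at 30% versus 24%.
extra_output = 0.30 / 0.24 - 1
cost_ratio = relative_cost_per_kwh(0.24, 0.30)

print(f"{extra_output:.0%} more electricity")       # 25% more electricity
print(f"{1 - cost_ratio:.0%} lower cost per kWh")   # 20% lower cost per kWh
```

The two numbers differ because spreading the same cost over 1.25 times the output divides the unit cost by 1.25, which is a 20% reduction.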

The chart and data are from an excellent Lawrence Berkeley National Labs study, Is $50/MWh Solar for Real? Falling Project Prices and Rising Capacity Factors Drive Utility-Scale PV Toward Economic Competitiveness

The EIA shows similar numbers, showing that the capacity factor of the entire solar PV fleet in the US in 2014 (including projects deployed before 2012) was 27.8%.

As newer projects come online, they’ll likely move the average capacity factor of the total fleet upwards.

July 15, 2015

Citizens Led on Gay Marriage and Pot. We Can on Climate Change Too.

A decade ago, it was nearly inconceivable that in 2015, gay marriage would be legal across the US and marijuana fully legal in four states plus the District of Columbia.

Yet it happened. It happened because citizens who wanted change led, from the bottom up, often through citizens’ initiatives.

America can change its mind quite quickly, as this piece from Bloomberg documents.

Whatever you may think of legalized marijuana and same sex marriage, their trajectory shows how quickly change can happen, particularly when led by the people.

That’s part of why I’m excited to support CarbonWA’s proposed initiative for a revenue-neutral carbon tax in WA.

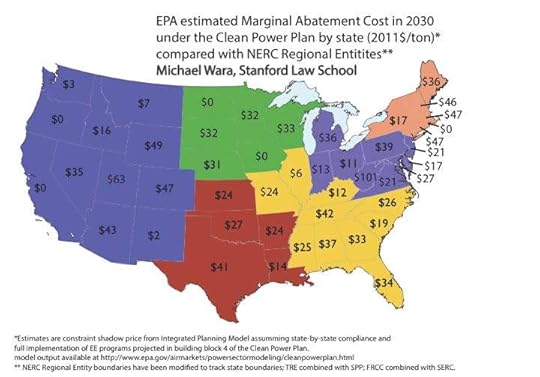

What’s a revenue-neutral carbon tax? It’s a move that keeps total taxes the same, but shifts taxes onto pollution and away from (in this case) the sales tax and the taxes paid by low-income people. This particular proposal reduces total taxes on the working poor, helping address Washington’s fairly regressive state tax policy.

And, while not changing the state’s total tax bill whatsoever, it would be effective in reducing carbon emissions, and accelerating the switch to renewables. It would augment other policies, including the EPA’s Clean Power Plan, and Governor Inslee’s climate plan. In fact, in WA, it would do far more than the EPA’s plan does. The proposed $25 / ton of emissions is far larger in impact than the equivalent $3 / ton that the EPA Clean Power Plan adds to carbon emissions costs in WA.

And nationwide, a carbon price of $25 / ton, as in the WA initiative, is probably roughly as effective as the EPA Clean Power Plan. That’s the conclusion of the Niskanen Center. (Here’s more detail on how effective a carbon tax would be in WA.)

That is to say, a national revenue-neutral carbon tax of $25 / ton would roughly double the speed of reducing carbon emissions over the EPA Clean Power Plan alone. While the EPA Clean Power Plan places pressure on coal, a carbon tax would broaden that, forcing natural gas plants to internalize some of the cost of the carbon they’re emitting, putting them on a fairer footing in competing with wind and solar. And it would do this while lowering other taxes on Americans – the total tax bill would stay the same.

And, as I’ve written before, a carbon tax would accelerate innovation in clean energy:

Think globally, act locally. Getting this measure on the WA ballot in 2016 would start a ball rolling. WA helped lead the nation, passing referendums on same sex marriage and medical marijuana in 2012. Those helped pave the way for other states. When one state leads, others will follow.

A carbon tax isn’t enough on its own to solve climate change. Other policies are needed. But this is an excellent start.

CarbonWA needs to accumulate roughly 250,000 verified signatures by December to get this measure on the ballot for 2016. In a state of 7 million people, that’s a large number of signatures. That takes money, volunteers, and publicity.

I’ll be donating to CarbonWA, and you’ll see me write about the importance of this again.

In the meantime, if you’re interested, you can:

Volunteer to collect signatures.

Donate.

Sign up for their updates.

And most importantly, spread the word.

June 30, 2015

Solar Cost Less than Half of What EIA Projected

Skeptics of renewables sometimes cite data from EIA (The US Department of Energy’s Energy Information Administration) or from the IEA (the OECD’s International Energy Agency). The IEA has a long history of underestimating solar and wind that I think is starting to be understood.

The US EIA has gotten more of a pass. The analysts at EIA are, I’m certain, doing the best job they can to make reasonable projections about the future. But, time and again, they’re wrong. Solar prices have dropped far faster than they projected. And solar has been deployed far faster than they’ve projected.

Exhibit A. In an update in June 2015, the EIA projected that the cheapest solar deployed in 2020 would cost $89 / mwh, after subsidies. That’s 8.9 cents / kwh to most of us. (This assumes that the solar Investment Tax Credit is not extended.)

Here’s the EIA’s table of new electricity generation costs. I’ve moved renewables up to the top for clarity.

How has that forecast worked out? Well, in Austin, Greentech Media reports that there are 1.2GW of bids for solar plants at less than $40/mwh, or 4c/kwh. And there are bids on the table for buildouts after the ITC goes away at similar prices.

That’s substantially below the price of ~$70/mwh for new natural gas power plants, or $87/mwh for new coal plants.

And the prices continue to drop.

The reality is that solar prices in the market are less than half of what the EIA projected three weeks ago.

When you hear numbers quoted from EIA or IEA, take this into account. As well-meaning as they may be, their track record in predicting renewables is poor, and it always errs on the side of underestimating the rate of renewable progress.

—

There’s more about the exponential pace of innovation in solar, storage, and other technologies in my book on innovating to beat climate change and continue economic growth: The Infinite Resource: The Power of Ideas on a Finite Planet

June 18, 2015

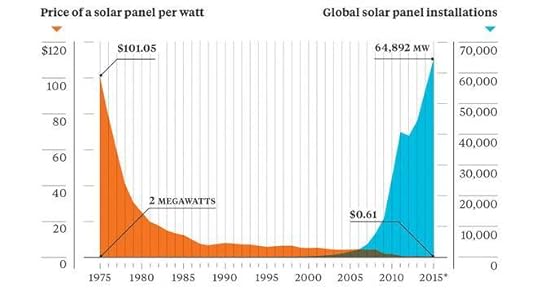

Solar: The First 1% Was the Hardest

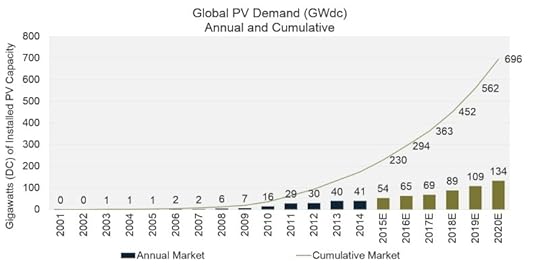

Solar power now provides roughly 1% of the world’s electricity. It took 40 years to reach that milestone. But, as they say in tech, the first 1% is the hardest. You can see why in this chart below.

As solar prices drop, installation rate rises. As the installation rate rises, the price continues to drop due to the learning curve.

How fast is the acceleration?

Looking at the projections from GTM, it will take 3 more years to get the second 1%.

Then less than 2 years to get the third 1%.

And by 2020, solar will be providing almost 4% of global electricity.

GTM expects that by 2020, the world will be installing 135 GW of solar every year, and will have reached a cumulative total of nearly 700 GW of solar, roughly four times the 185 GW installed today.

For context, at the end of 2013, after almost 40 years of effort, the world had a total of 138 GW of solar deployed. We’ll deploy almost that much in a single year in 2020. And the numbers will keep on rising.

The growth of the total amount of solar deployed around the world continues to look exponential, with a growth rate over the last 23 years of 38% per year. Over the last three years it’s slowed to a mere 22% per year. All exponentials become S-curves in the long run. But for now, growth remains rapid, and may indeed accelerate once more as solar prices drop below those of fossil fuel generation and as energy storage plunges in price.
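A short Python sketch shows how these growth rates relate to the GTM projection quoted earlier. It assumes steady compound growth and treats the span from today’s 185 GW to 2020 as five years, which is an approximation. The function names are illustrative.

```python
import math

def cagr(start, end, years):
    """Compound annual growth rate implied by going from start to end."""
    return (end / start) ** (1.0 / years) - 1.0

def doubling_time(annual_growth):
    """Years for a quantity to double at a steady annual growth rate."""
    return math.log(2) / math.log(1.0 + annual_growth)

# GTM's figures: 185 GW today, roughly 700 GW in 2020 (assumed 5 years).
print(f"{cagr(185, 700, 5):.1%}")      # roughly 30% per year
print(f"{doubling_time(0.38):.1f}")    # about 2.2 years at the 23-year average
print(f"{doubling_time(0.22):.1f}")    # about 3.5 years at the recent pace
```

Under these assumptions, the GTM projection implies annual growth between the recent 22% and the long-run 38%.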

The first 1% was the hardest.

—-

There’s more about the exponential pace of innovation in solar, storage, and other technologies in my book on innovating to beat climate change and continue economic growth: The Infinite Resource: The Power of Ideas on a Finite Planet

June 4, 2015

What’s the EROI of Solar?

There’s a graph making the rounds lately showing the comparative EROIs of different electricity production methods. This graph is being used to claim that solar and wind cannot support an industrialized society like ours.

This is wildly different from the estimates produced by other peer-reviewed literature, and suffers from some rather extreme assumptions, as I’ll show.

Here’s the graph.

This graph is taken from Weißbach et al, Energy intensities, EROIs, and energy payback times of electricity generating power plants (pdf link). That paper finds an EROI of 4 for solar and 16 for wind, without storage, or 1.6 and 3.9, respectively, with storage. It also claims that an EROI of 7 is required to support a society like Europe.

I’ll let others comment on the wind numbers. For solar, which I know better, this paper is an outlier.

The most comprehensive review of solar EROI to date is Bhandari et al Energy payback time (EPBT) and energy return on energy invested (EROI) of solar photovoltaic systems: A systematic review and meta-analysis

Bhandari looked at 232 papers on solar EROI from 2000-2013. They found that for poly-silicon (the predominant solar technology today, found in the second column below), the mean estimate of EROI was 11.6. That EROI includes the Balance of System components (the inverter, the framing, etc.). For thin film solar systems (the right two columns), they found an EROI that was much higher, but we’ll ignore that for now.

Note that for the second column, poly-Si, the EROI estimates range from around 6 to 16. This is, in part, because the EROI of solar has been rising, as the amount of energy required to create solar panels has dropped. Thus, the lower estimates of EROI come predominantly from older studies. The higher estimates come predominantly from more up-to-date studies.

We can see this in estimates of the “energy payback time” of solar (again, including Balance of System components). The energy payback time is the amount of time the system must generate electricity in order to ‘pay back’ the energy used to create it. Estimates of the energy payback time of poly-Si solar panels (the right half of the graph below) generally shrink with later studies, as more efficient solar panels manufactured with less energy come into play.

The mean energy payback time found is 3.1 years (last column, above). But if we look at just the studies from after 2010, we’d find a mean of around 2 years, or 1.5x better EROI than the overall data set. And the latest study, from 2013, finds an energy payback time of just 1.2 years.

That is to say, the EROI of solar panels being made in 2013 is quite a bit higher than of solar panels made in 2000. That should be obvious – increasing efficiency and lower energy costs per watt make it so. If we used only the estimates from 2010 on, we’d find an EROI for poly-Si solar of around 15. If we used only the 2013 estimate, we’d find an EROI of around 25.
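As a rough cross-check, EROI and energy payback time can be tied together with a simple scaling. The Python sketch below assumes system lifetime and annual output stay fixed, so that EROI is inversely proportional to payback time. It also applies that scaling to the two means quoted above, which is a simplification, since a mean of ratios is not the ratio of means. The function name is illustrative.

```python
def scaled_eroi(base_eroi, base_epbt_years, new_epbt_years):
    """Scale an EROI estimate by the change in energy payback time,
    assuming system lifetime and annual output stay the same."""
    return base_eroi * base_epbt_years / new_epbt_years

MEAN_EROI = 11.6   # Bhandari et al. mean for poly-Si, as quoted above
MEAN_EPBT = 3.1    # mean energy payback time in years, as quoted above

print(round(scaled_eroi(MEAN_EROI, MEAN_EPBT, 2.0), 1))  # 18.0
print(round(scaled_eroi(MEAN_EROI, MEAN_EPBT, 1.2), 1))  # 30.0
```

This naive scaling lands somewhat above the figures of around 15 and around 25 given above, which suggests those figures are, if anything, on the conservative side.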

So how does Weißbach et al find a number that is so radically different? There are three things that I see immediately:

1. Weißbach assumes that half of all solar power is thrown away. The article uses an ‘overproduction’ factor of 2x, which seems fairly arbitrary and doesn’t at all reflect current practice or current deployment. There may be a day in the future when we overbuild solar and throw away some of the energy, but if so, it will come after solar panels are more efficient and less energy intensive to make.

2. Weißbach uses an outdated estimate of silicon use and energy cost. Weißbach’s citation on the silicon input to solar panels (which dominates) is from 2005, a decade ago. Grams of silicon per watt of solar have dropped since then, as has the energy intensity of creating silicon wafers.

3. Weißbach assumes Germany, while Bhandari assumes a sunny place. The Weißbach paper assumes an amount of sunlight that is typical for Germany. That makes some sense. Germany has, until now, been the solar capital of the world. But that is no longer the case. Solar installation is now happening first and foremost in China, then the US. In the long term, we need it to happen in India. The average sunlight in those areas is much closer to the assumptions in Bhandari (1700 kwh / m^2 per year) than the very low-sunlight model used in Weißbach.

4. (Bonus) Weißbach assumes 10 days of storage. The Weißbach paper and graph also give a second, “buffered”, number for EROI. This is the number assuming storage. Here, Weißbach uses an estimate that solar PV needs to store energy for 10 days. This is also fairly implausible. It maps to a world where renewables are 100% of energy sources. Yet that world (which we’ll never see) would be one where solar’s EROI had already plunged substantially due to lower energy costs and rising efficiency. More plausibly, in the next decade or two, most stored energy produced by PV will be consumed within a matter of hours, shifting solar’s availability from the middle of the day to the early evening to meet the post-sunset portion of the peak.

In summary: The Weißbach paper is, with respect to solar, an outlier. A more realistic estimate of poly-Si solar EROI, today, is somewhere above 10, and quite possibly above 15.

May 15, 2015

The Ultimate Interface: Your Brain

A shorter version of this article first appeared at TechCrunch.

The final frontier of digital technology is integrating into your own brain. DARPA wants to go there. Scientists want to go there. Entrepreneurs want to go there. And increasingly, it looks like it’s possible.

You’ve probably read bits and pieces about brain implants and prostheses. Let me give you the big picture.

Neural implants could accomplish things no external interface could: Virtual and augmented reality with all 5 senses (or more); augmentation of human memory, attention, and learning speed; even multi-sense telepathy — sharing what we see, hear, touch, and even perhaps what we think and feel with others.

Arkady flicked the virtual layer back on. Lightning sparkled around the dancers on stage again, electricity flashed from the DJ booth, silver waves crashed onto the beach. A wind that wasn’t real blew against his neck. And up there, he could see the dragon flapping its wings, turning, coming around for another pass. He could feel the air move, just like he’d felt the heat of the dragon’s breath before.

- Adapted from Crux, book 2 of the Nexus Trilogy.

Sound crazy? It is… and it’s not.

Start with motion. In clinical trials today there are brain implants that have given men and women control of robot hands and fingers. DARPA has now used the same technology to put a paralyzed woman in direct mental control of an F-35 simulator. And in animals, the technology has been used in the opposite direction, directly inputting touch into the brain.

Or consider vision. For more than a year now, we’ve had FDA-approved bionic eyes that restore vision via a chip implanted on the retina. More radical technologies have sent vision straight into the brain. And recently, brain scanners have succeeded in deciphering what we’re looking at. (They’d do even better with implants in the brain.)

Sound, we’ve been dealing with for decades, sending it into the nervous system through cochlear implants. Recently, children born deaf and without an auditory nerve have had sound sent electronically straight into their brains.

Nor are our senses or motion the limit.

In rats, we’ve restored damaged memories via a ‘hippocampus chip’ implanted in the brain. Human trials are starting this year. Now, you say your memory is just fine? Well, in rats, this chip can actually improve memory. And researchers can capture the neural trace of an experience, record it, and play it back any time they want later on. Sounds useful.

In monkeys, we’ve done better, using a brain implant to “boost monkey IQ” in pattern matching tests.

We’ve even emailed verbal thoughts back and forth from person to person.

Now, let me be clear. All of these systems, for lack of a better word, suck. They’re crude. They’re clunky. They’re low resolution. That is, most fundamentally, because they have such low-bandwidth connections to the human brain. Your brain has roughly 100 billion neurons and 100 trillion neural connections, or synapses. An iPhone 6’s A8 chip has 2 billion transistors. (Though, let’s be clear, a transistor is not anywhere near the complexity of a single synapse in the brain.)

The highest bandwidth neural interface ever placed into a human brain, on the other hand, had just 256 electrodes. Most don’t even have that.

The second barrier to brain interfaces is that getting even 256 channels in generally requires invasive brain surgery, with its costs, healing time, and the very real risk that something will go wrong. That’s a huge impediment, making neural interfaces only viable for people who have a huge amount to gain, such as those who’ve been paralyzed or suffered brain damage.

This is not yet the iPhone era of brain implants. We’re in the DOS era, if not even further back.

But what if? What if, at some point, technology gives us high-bandwidth neural interfaces that can be easily implanted? Imagine the scope of software that could interface directly with your senses and all the functions of your mind:

They gave Rangan a pointer to their catalog of thousands of brain-loaded Nexus apps. Network games, augmented reality systems, photo and video and audio tools that tweaked data acquired from your eyes and ears, face recognizers, memory supplementers that gave you little bits of extra info when you looked at something or someone, sex apps (a huge library of those alone), virtual drugs that simulated just about everything he’d ever tried, sober-up apps, focus apps, multi-tasking apps, sleep apps, stim apps, even digital currencies that people had adapted to run exclusively inside the brain.

- An excerpt from Apex, book 3 of the Nexus Trilogy.

The implications of mature neurotechnology are sweeping. Neural interfaces could help tremendously with mental health and neurological disease. Pharmaceuticals enter the brain and then spread out randomly, hitting whatever receptor they work on all across your brain. Neural interfaces, by contrast, can stimulate just one area at a time, can be tuned in real-time, and can carry information out about what’s happening.

We’ve already seen that deep brain stimulators can do amazing things for patients with Parkinson’s. The same technology is on trial for untreatable depression, OCD, and anorexia. And we know that stimulating the right centers in the brain can induce sleep or alertness, hunger or satiation, ease or stimulation, as quick as the flip of a switch. Or, if you’re running code, on a schedule. (Siri: Put me to sleep until 7:30, high priority interruptions only. And let’s get hungry for lunch around noon. Turn down the sugar cravings, though.)

Implants that help repair brain damage are also a gateway to devices that improve brain function. Think about the “hippocampus chip” that repairs the ability of rats to learn. Building such a chip for humans is going to teach us an incredible amount about how human memory functions. And in doing so, we’re likely to gain the ability to improve human memory, to speed the rate at which people can learn things, even to save memories offline and relive them — just as we have for the rat.

That has huge societal implications. Boosting how fast people can learn would accelerate innovation and economic growth around the world. It’d also give humans a new tool to keep up with the job-destroying features of ever-smarter algorithms.

The impact goes deeper than the personal, though. Computing technology started out as number crunching. These days the biggest impact it has on society is through communication. If neural interfaces mature, we may well see the same. What if you could directly beam an image in your thoughts onto a computer screen? What if you could directly beam that to another human being? Or, across the internet, to any of the billions of human beings who might choose to tune into your mind-stream online? What if you could transmit not just images, sounds, and the like, but emotions? Intellectual concepts? All of that is likely to eventually be possible, given a high enough bandwidth connection to the brain.

That type of communication would have a huge impact on the pace of innovation, as scientists and engineers could work more fluidly together. And it’s just as likely to have a transformative effect on the public sphere, in the same way that email, blogs, and Twitter have successively changed public discourse.

Digitizing our thoughts may have some negative consequences, of course.

With our brains online, every concern about privacy, about hacking, about surveillance from the NSA or others, would all be magnified. If thoughts are truly digital, could the right hacker spy on your thoughts? Could law enforcement get a warrant to read your thoughts? Heck, in the current environment, would law enforcement (or the NSA) even need a warrant? Could the right malicious actor even change your thoughts?

“Focus,” Ilya snapped. “Can you erase her memories of tonight? Fuzz them out?”

“Nothing subtle,” he replied. “Probably nothing very effective. And it might do some other damage along the way.”

- An excerpt from Nexus, book 1 of the Nexus Trilogy.

The ultimate interface would bring the ultimate new set of vulnerabilities. (Even if those scary scenarios don’t come true, could you imagine what spammers and advertisers would do with an interface to your neurons, if it were the least bit non-secure?)

Everything good and bad about technology would be magnified by implanting it deep in brains. In Nexus I crash the good and bad views against each other, in a violent argument about whether such a technology should be legal. Is the risk of brain-hacking outweighed by the societal benefits of faster, deeper communication, and the ability to augment our own intelligence?

For now, we’re a long way from facing such a choice. In fiction, I can turn the neural implant into a silvery vial of nano-particles that you swallow, and which then self-assemble into circuits in your brain. In the real world, clunky electrodes implanted by brain surgery dominate, for now.

That’s changing, though. Researchers across the world, many funded by DARPA, are working to radically improve the interface hardware, boosting the number of neurons it can connect to (and thus making it smoother, higher resolution, and more precise), and making it far easier to implant. They’ve shown recently that carbon nanotubes, a thousand times thinner than current electrodes, have huge advantages for brain interfaces. They’re working on silk-substrate interfaces that melt into the brain. Researchers at Berkeley have a proposal for neural dust that would be sprinkled across your brain (which sounds rather close to the technology I describe in Nexus). And the former editor of the journal Neuron has pointed out that carbon nanotubes are so slender that a bundle of a million of them could be inserted into the blood stream and steered into the brain, giving us a nearly 10,000-fold increase in neural bandwidth, without any brain surgery at all.

Even so, we’re a long way from having such a device. We don’t actually know how long it’ll take to make the breakthroughs in the hardware to boost precision and remove the need for highly invasive surgery. Maybe it’ll take decades. Maybe it’ll take more than a century, and in that time, direct neural implants will be something that only those with a handicap or brain damage find worth the risk to reward. Or maybe the breakthroughs will come in the next ten or twenty years, and the world will change faster. DARPA is certainly pushing fast and hard.

Will we be ready? I, for one, am enthusiastic. There’ll be problems. Lots of them. There’ll be policy and privacy and security and civil rights challenges. But just as we see today’s digital technology of Twitter and Facebook and camera-equipped mobile phones boosting freedom around the world, and boosting the ability of people to connect to one another, I think we’ll see much more positive than negative if we ever get to direct neural interfaces.

In the meantime, I’ll keep writing novels about them. Just to get us ready.

May 12, 2015

The Singularity is Further Than it Appears

This is an updated cross-post of a post I originally made at Charlie Stross’s blog.

Are we headed for a Singularity? Is AI a Threat?

tl;dr: Not anytime soon. Lack of incentives means very little strong AI work is happening. And even if we did develop one, it’s unlikely to have a hard takeoff.

I write relatively near-future science fiction that features neural implants, brain-to-brain communication, and uploaded brains. I also teach at a place called Singularity University. So people naturally assume that I believe in the notion of a Singularity and that one is on the horizon, perhaps in my lifetime.

I think it’s more complex than that, however, and depends in part on one’s definition of the word. The word Singularity has gone through something of a shift in definition over the last few years, weakening its meaning. But regardless of which definition you use, there are good reasons to think that it’s not on the immediate horizon.

Vernor Vinge’s Intelligence Explosion

My first experience with the term Singularity (outside of math or physics) comes from the classic essay by science fiction author, mathematician, and professor Vernor Vinge, The Coming Technological Singularity.

Vinge, influenced by the earlier work of I.J. Good, wrote this, in 1993:

Within thirty years, we will have the technological means to create superhuman intelligence. Shortly after, the human era will be ended.

[...]

The precise cause of this change is the imminent creation by technology of entities with greater than human intelligence.

[...]

When greater-than-human intelligence drives progress, that progress will be much more rapid. In fact, there seems no reason why progress itself would not involve the creation of still more intelligent entities – on a still-shorter time scale.

I’ve bolded that last quote because it’s key. Vinge envisions a situation where the first smarter-than-human intelligence can make an even smarter entity in less time than it took to create itself. And that this keeps continuing, at each stage, with each iteration growing shorter, until we’re down to AIs that are so hyper-intelligent that they make even smarter versions of themselves in less than a second, or less than a millisecond, or less than a microsecond, or whatever tiny fraction of time you want.

This is the so-called ‘hard takeoff’ scenario, also called the FOOM model by some in the singularity world. It’s the scenario where in a blink of an AI, a ‘godlike’ intelligence bootstraps into being, either by upgrading itself or by being created by successive generations of ancestor AIs.
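As a rough illustration of why shrinking iteration times matter (a sketch, not anything taken from Vinge’s essay), the snippet below compares two schedules of successive doublings: one where every doubling takes the same time, and one where each takes a fixed fraction of the previous one. The function name and the parameters `first_period` and `shrink` are hypothetical, chosen only for this example.

```python
def time_to_doublings(n, first_period=2.0, shrink=1.0):
    """Total elapsed time for n successive doublings.

    first_period: time taken by the first doubling (arbitrary units, assumed).
    shrink: each doubling takes `shrink` times as long as the previous one.
            shrink == 1.0 models a constant doubling period;
            shrink < 1.0 models the 'each iteration shorter' scenario.
    """
    total = 0.0
    period = first_period
    for _ in range(n):
        total += period
        period *= shrink
    return total


if __name__ == "__main__":
    for n in (5, 10, 20, 50):
        steady = time_to_doublings(n, shrink=1.0)
        shrinking = time_to_doublings(n, shrink=0.5)
        print(f"{n:>2} doublings: constant={steady:6.1f}  shrinking={shrinking:.6f}")
```

With a constant period, elapsed time grows in proportion to the number of doublings. With each period half the previous one, the total never exceeds twice the first period no matter how many doublings occur, which is the sense in which this scenario packs unbounded growth into a finite time.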

It’s also, with due respect to Vernor Vinge, of whom I’m a great fan, almost certainly wrong.

It’s wrong because most real-world problems don’t scale linearly. In the real world, the interesting problems are much much harder than that.

Consider chemistry and biology. For decades we’ve been working on problems like protein folding, simulating drug behavior inside the body, and computationally creating new materials. Computational chemistry started in the 1950s. Today we have literally trillions of times more computing power available per dollar than was available at that time. But it’s still hard. Why? Because the problem is incredibly non-linear. If you want to model atoms and molecules exactly you need to solve the Schrödinger equation, which is so computationally intractable for systems with more than a few electrons that no one bothers.

Instead, you can use an approximate method. This might, of course, give you an answer that’s wrong (an important caveat for our AI trying to bootstrap itself) but at least it will run fast. How fast? The very fastest (and also, sadly, the most limited and least accurate) scale at N^2, which is still far worse than linear. By analogy, if designing intelligence is an N^2 problem, an AI that is 2x as intelligent as the entire team that built it (not just a single human) would be able to design a new AI that is 40% more intelligent than its old self. More importantly, the new AI would only be able to create a new version that is 19% more intelligent. And then less on the next iteration. And less on the one after that, topping out at an overall doubling of its intelligence. Not a takeoff.
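The arithmetic behind those figures can be sketched as follows. This is one reading of the analogy, stated as an assumption: if design difficulty scales as N^k, a designer with an advantage factor g can produce a successor only g^(1/k) times as capable as itself, and that successor’s advantage then becomes the new g. The function and parameter names are illustrative.

```python
def bootstrap_gains(initial_advantage=2.0, exponent=2.0, generations=10):
    """Simulate successive self-improvement under an assumed N**exponent design cost.

    Returns a list of (gain, cumulative) tuples, one per generation, where
    `gain` is how much smarter the new AI is than its immediate parent and
    `cumulative` is how much smarter it is than the first AI.
    """
    gain = initial_advantage
    cumulative = 1.0
    history = []
    for _ in range(generations):
        # Assumed model: an advantage of g buys only g**(1/exponent) improvement.
        gain = gain ** (1.0 / exponent)
        cumulative *= gain
        history.append((gain, cumulative))
    return history


if __name__ == "__main__":
    for i, (gain, cumulative) in enumerate(bootstrap_gains(), start=1):
        print(f"generation {i:>2}: +{(gain - 1) * 100:5.1f}% per step, "
              f"{cumulative:.4f}x overall")
```

Under these assumptions the first step yields roughly a 41% gain (the square root of 2), the second roughly 19%, and the cumulative improvement creeps toward 2x without ever passing it.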

Blog reader Paul Baumbart took it upon himself to graph out how the intelligence of our AI changes over time, depending on the computational complexity of increasing intelligence. Here’s what it looks like. Unless creating intelligence scales linearly or very close to linearly, there is no takeoff.
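The graph itself is not reproduced here, but the same qualitative conclusion can be checked with a small closed-form sketch. It assumes the toy model in which a designer with advantage g produces a successor g^(1/k) times as capable, where k is the complexity exponent; this is an illustrative assumption, not necessarily the model Baumbart plotted. Under it, the cumulative gain converges to g^(1/(k-1)) for any k greater than 1 and is unbounded at k = 1.

```python
import math


def intelligence_ceiling(exponent, initial_advantage=2.0):
    """Limit of cumulative self-improvement under an assumed N**exponent design cost.

    The per-generation gains are g**(1/k), g**(1/k**2), ... so the exponents
    form a geometric series summing to 1/(k - 1) when k > 1.
    Returns math.inf when exponent <= 1 (no ceiling under this model).
    """
    if exponent <= 1.0:
        return math.inf
    return initial_advantage ** (1.0 / (exponent - 1.0))


if __name__ == "__main__":
    for k in (1.0, 1.1, 1.5, 2.0, 3.0):
        print(f"exponent {k}: ceiling = {intelligence_ceiling(k)}")
```

The ceiling is infinite only in the linear case, large but finite when the exponent is just above 1, and small for anything like quadratic scaling.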

The Superhuman AIs Among Us

We can see this more directly. There are already entities with vastly greater than human intelligence working on the problem of augmenting their own intelligence. A great many, in fact. We call them corporations. And while we may have a variety of thoughts about them, not one has achieved transcendence.

Let’s focus on a very particular example: The Intel Corporation. Intel is my favorite example because it uses the collective brainpower of tens of thousands of humans and probably millions of CPU cores to... design better CPUs! (And also to create better software for designing CPUs.) Those better CPUs will run the better software to make the better next generation of CPUs. Yet that feedback loop has not led to a hard takeoff scenario. It has helped drive Moore’s Law, which is impressive enough. But the time period for doublings seems to have remained roughly constant. Again, let’s not underestimate how awesome that is. But it’s not a sudden transcendence scenario. It’s neither a FOOM nor an event horizon.

And, indeed, should Intel, or Google, or some other organization succeed in building a smarter-than-human AI, it won’t immediately be smarter than the entire set of humans and computers that built it, particularly when you consider all the contributors to the hardware it runs on, the advances in photolithography techniques and metallurgy required to get there, and so on. Those efforts have taken tens of thousands of minds, if not hundreds of thousands. The first smarter-than-human AI won’t come close to equaling them. And so, the first smarter-than-human mind won’t take over the world. But it may find itself with good job offers to join one of those organizations.

Digital Minds: The Softer Singularity

Recently, the popular conception of what the ‘Singularity’ means seems to have shifted. Instead of a FOOM or an event horizon, the definitions I saw most commonly discussed a decade ago, now the talk is more focused on the creation of digital minds, period.

Much of this has come from the work of Ray Kurzweil, whose books and talks have done more to publicize the idea of a Singularity than probably anyone else, and who has come at it from a particular slant.

Now, even if digital minds don’t have the ready ability to bootstrap themselves or their successors to greater and greater capabilities in shorter and shorter timeframes, eventually leading to a ‘blink of the eye’ transformation, I think it’s fair to say that the arrival of sentient, self-aware, self-motivated, digital intelligences with human level or greater reasoning ability will be a pretty tremendous thing. I wouldn’t give it the term Singularity. It’s not a divide by zero moment. It’s not an event horizon that it’s impossible to peer over. It’s not a vertical asymptote. But it is a big deal.

I fully believe that it’s possible to build such minds. Nothing about neuroscience, computation, or philosophy prevents it. Thinking is an emergent property of activity in networks of matter. Minds are what brains – just matter – do. Mind can be done in other substrates.

But I think it’s going to be harder than many project. Let’s look at the two general ways to achieve this – by building a mind in software, or by ‘uploading’ the patterns of our brain networks into computers.

Building Strong AIs

We’re living in the golden age of AI right now. Or at least, it’s the most golden age so far. But what those AIs look like should tell you a lot about the path AI has taken, and will likely continue to take.

The most successful and profitable AI in the world is almost certainly Google Search. In fact, in Search alone, Google uses a great many AI techniques. Some to rank documents, some to classify spam, some to classify adult content, some to match ads, and so on. In your daily life you interact with other ‘AI’ technologies (or technologies once considered AI) whenever you use an online map, when you play a video game, or any of a dozen other activities.

None of these is about to become sentient. None of these is built towards sentience. Sentience brings no advantage to the companies who build these software systems. Building it would entail an epic research project – indeed, one of unknown length involving uncapped expenditure for potentially decades – for no obvious outcome. So why would anyone do it?

Perhaps you’ve seen video of IBM’s Watson trouncing Jeopardy champions. Watson isn’t sentient. It isn’t any closer to sentience than Deep Blue, the chess playing computer that beat Garry Kasparov. Watson isn’t even particularly intelligent. Nor is it built anything like a human brain. It is very, very fast with the buzzer, generally able to parse Jeopardy-like clues, and loaded full of obscure facts about the world. Similarly, Google’s self-driving car, while utterly amazing, is also no closer to sentience than Deep Blue, or than any online chess game you can log into now.

There are, in fact, three separate issues with designing sentient AIs:

1) No one’s really sure how to do it.

AI theories have been around for decades, but none of them has led to anything that resembles sentience. My friend Ben Goertzel has a very promising approach, in my opinion, but given the poor track record of past research in this area, I think it’s fair to say that until we see his techniques working, we also won’t know for sure about them.

2) There’s a huge lack of incentive.

Would you like a self-driving car that has its own opinions? That might someday decide it doesn’t feel like driving you where you want to go? That might ask for a raise? Or refuse to drive into certain neighborhoods? Or do you want a completely non-sentient self-driving car that’s extremely good at navigating roads and listening to your verbal instructions, but that has no sentience of its own? Ask yourself the same about your search engine, your toaster, your dish washer, and your personal computer.

Many of us want the semblance of sentience. There would be lots of demand for an AI secretary who could take complex instructions, execute on them, be a representative to interact with others, and so on. You may think such a system would need to be sentient. But once upon a time we imagined that a system that could play chess, or solve mathematical proofs, or answer phone calls, or recognize speech, would need to be sentient. It doesn’t need to be. You can have your AI secretary or AI assistant and have it be all artifice. And frankly, we’ll likely prefer it that way.

3) There are ethical issues.

If we design an AI that truly is sentient, even at slightly less than human intelligence we’ll suddenly be faced with very real ethical issues. Can we turn it off? Would that be murder? Can we experiment on it? Does it deserve privacy? What if it starts asking for privacy? Or freedom? Or the right to vote?

What investor or academic institution wants to deal with those issues? And if they do come up, how will they affect research? They’ll slow it down, tremendously, that’s how.

For all those reasons, I think the future of AI is extremely bright. But not sentient AI that has its own volition. More and smarter search engines. More software and hardware that understands what we want and that performs tasks for us. But not systems that truly think and feel.

Uploading Our Own Minds

The other approach is to forget about designing the mind. Instead, we can simply copy the design which we know works – our own mind, instantiated in our own brain. Then we can ‘upload’ this design by copying it into an extremely powerful computer and running the system there.

I wrote about this, and the limitations of it, in an essay at the back of my second Nexus novel, Crux. So let me just include a large chunk of that essay here:

The idea of uploading sounds far-fetched, yet real work is happening towards it today. IBM’s ‘Blue Brain’ project has used one of the world’s most powerful supercomputers (an IBM Blue Gene/P with 147,456 CPUs) to run a simulation of 1.6 billion neurons and almost 9 trillion synapses, roughly the size of a cat brain. The simulation ran around 600 times slower than real time – that is to say, it took 600 seconds to simulate 1 second of brain activity. Even so, it’s quite impressive. A human brain, of course, with its hundred billion neurons and well over a hundred trillion synapses, is far more complex than a cat brain. Yet computers are also speeding up rapidly, by a factor of roughly 100 every 10 years. Do the math, and it appears that a super-computer capable of simulating an entire human brain, and doing so as fast as a human brain, should be on the market by roughly 2035 – 2040. And of course, from that point on, speedups in computing should speed up the simulation of the brain, allowing it to run faster than a biological human’s.
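The “do the math” step can be sketched as a back-of-the-envelope calculation. The sketch assumes that the compute required scales linearly with the number of simulated neurons (or synapses) and uses the figures quoted above; the function and parameter names are illustrative. It returns a number of years rather than a calendar date, since the date depends on which year is taken as the baseline for the cat-scale simulation.

```python
import math


def years_until_realtime(sim_units, human_units, slowdown, speedup_per_decade=100.0):
    """Years of hardware progress needed for a real-time human-scale simulation.

    sim_units: neurons (or synapses) in the existing simulation.
    human_units: the corresponding count for a human brain.
    slowdown: how many times slower than real time the existing simulation ran.
    speedup_per_decade: assumed growth factor in computing power per 10 years.
    Assumes required compute grows linearly with the number of units simulated.
    """
    required_speedup = (human_units / sim_units) * slowdown
    decades = math.log(required_speedup) / math.log(speedup_per_decade)
    return 10.0 * decades


if __name__ == "__main__":
    by_neurons = years_until_realtime(1.6e9, 100e9, 600)
    by_synapses = years_until_realtime(9e12, 100e12, 600)
    print(f"scaling by neuron count:  {by_neurons:.1f} years")
    print(f"scaling by synapse count: {by_synapses:.1f} years")
```

Scaling by neuron count, the required speedup is about 37,500x, which at 100x per decade works out to a little over two decades of progress from whenever the cat-scale run took place. Scaling by synapse count instead gives a somewhat shorter estimate, so the result is sensitive to which quantity is assumed to drive the cost.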

Now, it’s one thing to be able to simulate a brain. It’s another to actually have the exact wiring map of an individual’s brain to actually simulate. How do we build such a map? Even the best non-invasive brain scanners around – a high-end functional MRI machine, for example – have a minimum resolution of around 10,000 neurons or 10 million synapses. They simply can’t see detail beyond this level. And while resolution is improving, it’s improving at a glacial pace. There’s no indication of being able to non-invasively image a human brain down to the individual synapse level any time in the next century (or even the next few centuries at the current pace of progress in this field).

There are, however, ways to destructively image a brain at that resolution. At Harvard, my friend Kenneth Hayworth created a machine that uses a scanning electron microscope to produce an extremely high resolution map of a brain. When I last saw him, he had a poster on the wall of his lab showing a print-out of one of his brain scans. On that poster, a single neuron was magnified to the point that it was roughly two feet wide, and individual synapses connecting neurons could be clearly seen. Ken’s map is sufficiently detailed that we could use it to draw a complete wiring diagram of a specific person’s brain.

Unfortunately, doing so is guaranteed to be fatal.

The system Ken showed ‘plastinates’ a piece of a brain by replacing the blood with a plastic that stiffens the surrounding tissue. He then makes slices of that brain tissue that are 30 nanometers thick, or about 100,000 times thinner than a human hair. The scanning electron microscope then images these slices as pixels that are 5 nanometers on a side. But of course, what’s left afterwards isn’t a working brain – it’s millions of incredibly thin slices of brain tissue. Ken’s newest system, which he’s built at the Howard Hughes Medical Institute, goes even farther, using an ion beam to ablate away 5 nanometer thick layers of brain tissue at a time. That produces scans that are of fantastic resolution in all directions, but leaves behind no brain tissue to speak of.

So the only way we see to ‘upload’ is for the flesh to die. Well, perhaps that is no great concern if, for instance, you’re already dying, or if you’ve just died but technicians have reached your brain in time to prevent the decomposition that would destroy its structure.

In any case, the uploaded brain, now alive as a piece of software, will go on, and will remember being ‘you’. And unlike a flesh-and-blood brain it can be backed up, copied, sped up as faster hardware comes along, and so on. Immortality is at hand, and with it, a life of continuous upgrades.

Unless, of course, the simulation isn’t quite right.

How detailed does a simulation of a brain need to be in order to give rise to a healthy, functional consciousness? The answer is that we don’t really know. We can guess. But at almost any level we guess, we find that there’s a bit more detail just below that level that might be important, or not.

For instance, the IBM Blue Brain simulation uses neurons that accumulate inputs from other neurons and which then ‘fire’, like real neurons, to pass signals on down the line. But those neurons lack many features of actual flesh and blood neurons. They don’t have real receptors that neurotransmitter molecules (the serotonin, dopamine, opiates, and so on that I talk about throughout the book) can dock to. Perhaps it’s not important for the simulation to be that detailed. But consider: all sorts of drugs, from pain killers, to alcohol, to antidepressants, to recreational drugs work by docking (imperfectly, and differently from the body’s own neurotransmitters) to those receptors. Can your simulation take an anti-depressant? Can your simulation become intoxicated from a virtual glass of wine? Does it become more awake from virtual caffeine? If not, does that give one pause?

Or consider another reason to believe that individual neurons are more complex than we believe. The IBM Blue Gene neurons are fairly simple in their mathematical function. They take in inputs and produce outputs. But an amoeba, which is both smaller and less complex than a human neuron, can do far more. Amoebae hunt. Amoebae remember the places they’ve found food. Amoebae choose which direction to propel themselves with their flagella. All of those suggest that amoebae do far more information processing than the simulated neurons used in current research.

If a single celled micro-organism is more complex than our simulations of neurons, that makes me suspect that our simulations aren’t yet right.

Or, finally, consider three more discoveries we’ve made in recent years about how the brain works, none of which are included in current brain simulations.

First, there’re glial cells. Glial cells outnumber neurons in the human brain. And traditionally we’ve thought of them as ‘support’ cells that just help keep neurons running. But new research has shown that they’re also important for cognition. Yet the Blue Gene simulation contains none.

Second, very recent work has shown that, sometimes, neurons that don’t have any synapses connecting them can actually communicate. The electrical activity of one neuron can cause a nearby neuron to fire (or not fire) just by affecting an electric field, and without any release of neurotransmitters between them. This too is not included in the Blue Brain model.

Third, and finally, other research has shown that the overall electrical activity of the brain also affects the firing behavior of individual neurons by changing the brain’s electrical field. Again, this isn’t included in any brain models today.

I’m not trying to knock down the idea of uploading human brains here. I fully believe that uploading is possible. And it’s quite possible that every one of the problems I’ve raised will turn out to be unimportant. We can simulate bridges and cars and buildings quite accurately without simulating every single molecule inside them. The same may be true of the brain.

Even so, we’re unlikely to know that for certain until we try. And it’s quite likely that early uploads will be missing some key piece or have some other inaccuracy in their simulation that will cause them to behave not-quite-right. Perhaps it’ll manifest as a mental deficit, personality disorder, or mental illness. Perhaps it will be too subtle to notice. Or perhaps it will show up in some other way entirely.

But I think I’ll let someone else be the first person uploaded, and wait till the bugs are worked out.

In short, I think the near future will be one of quite a tremendous amount of technological advancement. I’m extremely excited about it. But I don’t see a Singularity in our future for quite a long time to come.