Jeremy Howard's Blog, page 3

October 11, 2021

Medicine is Political

Experts warn that we are not prepared for the surge in disability due to long covid, an illness that afflicts between one-fourth and one-third of people who get covid, including mild cases, for months afterwards. Some early covid cases have been sick for 18 months, with no end in sight. The physiological damage that covid causes can include cognitive dysfunction and deficits, brain activity scans similar to those seen in Alzheimer���s patients, GI immune system damage, cornea damage, immune dysfunction, increased risk of kidney outcomes, dysfunction in T cell memory generation, pancreas damage, and ovarian failure. Children are at risk too.

As the evidence continues to mount of alarming long term physiological impacts of covid, and tens of millions are unable to return to work, we might expect leaders to take covid more seriously. Yet we are seeing concerted efforts to downplay the long-term health effects of covid using strategies straight out of the climate denial playbook, such as funding contrarian scientists, misleading petitions, social media bots, and disingenuous debate tactics that make the science seem murkier than it is. In many cases, these minimization efforts are being funded by the same billionaires and institutions that fund climate change denialism. Dealing with many millions of newly disabled people will be very expensive for governments, social service programs, private insurance companies, and others. Thus, many have a significant financial interest in distorting the science around long term effects of covid to minimize the perceived impact.

In topics ranging from covid-19 to HIV research to the long history of wrongly assuming women���s illnesses are psychosomatic, we have seen again and again that medicine, like all science, is political. This shows up in myriad ways, such as: who provides funding, who receives that funding, which questions get asked, how questions are framed, what data is recorded, what data is left out, what categories included, and whose suffering is counted.

Scientists often like to think of their work as perfectly objective, perfectly rational, free from any bias or influence. Yet by failing to acknowledge the reality that there is no ���view from nowhere���, they miss their own blindspots and make themselves vulnerable to bad-faith attacks. As one climate scientist recounted of the last 3 decades, ���We spent a long time thinking we were engaged in an argument about data and reason, but now we realize it���s a fight over money and power��� They [climate change deniers] focused their lasers on the science and like cats we followed their pointer and their lead.���

The American Institute for Economic Research (AIER), a libertarian think tank funded by right wing billionaire Charles Koch which invests in fossil fuels, energy utilities, and tobacco, is best known for its research denying the climate crisis. In October 2020, a document called the Great Barrington Declaration (GBD) was developed at a private AIER retreat, calling for a ���herd immunity��� approach to covid, arguing against lockdowns, and suggesting that young, healthy people have little to worry about. The three scientists who authored the GBD have prestigious pedigrees and are politically well-connected, speaking to White House Officials and having found favor in the British government. One of them, Sunetra Gupta of Oxford, had released a wildly inaccurate paper in March 2020 claiming that up to 68% of the UK population had been exposed to covid, and that there were already significant levels of herd immunity to coronavirus in both the UK and Italy (again, this was in March 2020). Gupta received funding from billionaire conservative donors, Georg and Emily von Opel. Another one of the authors, Jay Bhattacharya of Stanford, co-authored a widely criticized pre-print in April 2020 that relied on a biased sampling method to ���show��� that 85 times more people in Santa Clara County California had already had covid compared to other estimates, and thus suggested that the fatality rate for covid was much lower than it truly is.

Half of the social media accounts advocating for herd immunity seem to be bots, characterized as engaging in abnormally high levels of retweets & low content diversity. An article in the BMJ recently advised that it is ���critical for physicians, scientists, and public health officials to realize that they are not dealing with an orthodox scientific debate, but a well-funded sophisticated science denialist campaign based on ideological and corporate interests.���

This myth of perfect scientific objectivity positions modern medicine as completely distinct from a history where women were diagnosed with ���hysteria��� (roaming uterus) for a variety of symptoms, where Black men were denied syphilis treatment for decades as part of a ���scientific study���, and multiple sclerosis was ���called hysterical paralysis right up to the day they invented a CAT scan machine��� and demyelination could be seen on brain scans.

However, there is not some sort of clean break where bias was eliminated and all unknowns were solved. Black patients, including children, still receive less pain medication than white patients for the same symptoms. Women are still more likely to have their physical symptoms dismissed as psychogenic. Nearly half of women with autoimmune disorders report being labeled as ���chronic complainers��� by their doctors in the 5 years (on average) they spend seeking a diagnosis. All this impacts what data is recorded in their charts, what symptoms are counted.

Medical data are not objective truths. Like all data, the context is critical. It can be missing, biased, and incorrect. It is filtered through the opinions of doctors. Even blood tests and imaging scans are filtered through the decisions of what tests to order, what types of scans to take, what accepted guidelines recommend, what technology currently exists. And the technology that exists depends on research and funding decisions stretching back decades, influenced by politics and cultural context.

One may hope that in 10 years we will have clearer diagnostic tests for some illnesses which remain contested now, just as the ability to identify multiple sclerosis improved with better imaging. In the meantime, we should listen to patients and trust in their ability to explain their own experiences, even if science can���t fully understand them yet.

Science does not just progress inevitably, independent of funding and politics and framing and biases. A self-fulfilling prophecy often occurs in which doctors:

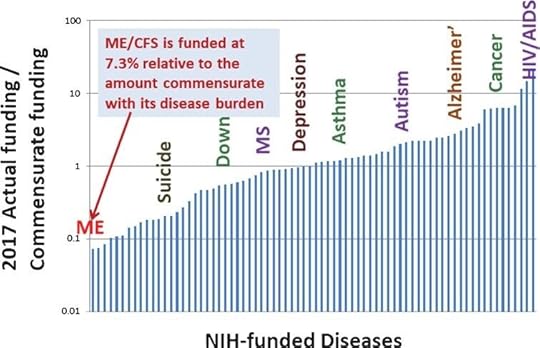

label a new, poorly understood, multi-system disease as psychogenic, use this as justification to not invest much funding into researching physiological origins, and then point to the lack of evidence as a reason why the illness must be psychogenic.This is largely the experience of ME/CFS patients over the last several decades. Myalgic encephalomyelitis (ME/CFS), involves dysfunction of the immune system, autonomic systems, and energy metabolism (including mitochondrial dysfunction, hypoacetylation, reduced oxygen uptake, and impaired oxygen delivery). ME/CFS is more debilitating than many chronic diseases, including chronic renal failure, lung cancer, stroke, and type-2 diabetes. It is estimated 25���29% of patients are homebound or bedbound. ME/CFS is often triggered by viral infections, so it is not surprising that we are seeing some overlap between ME/CFS and long covid. ME/CFS disproportionately impacts women, and a now discredited 1970 paper identified a major outbreak in 1958 amongst nurses at a British hospital as ���epidemic hysteria���. This early narrative of ME/CFS as psychogenic has been difficult to shake. Even as evidence continues to accumulate of immune, metabolic, and autonomous system dysfunction, some doctors persist in believing that ME/CFS must be psychogenic. It has remained woefully underfunded: from 2013-2017, NIH funding was only at 7.3% relative commensurate to its disease burden. Note that the below graph is on a log scale: ME/CFS is at 7%, Depression and asthma are at 100% and diseases like cancer and HIV are closer to 1000%.

Graph of NIH funding on log scale, from above paper by Mirin, Dimmock, Leonard

Graph of NIH funding on log scale, from above paper by Mirin, Dimmock, Leonard Portraying patients as unscientific and irrational is the other side of the same coin for the myth that medicine is perfectly rational. Patients that disagree with having symptoms they know are physiological dismissed as psychogenic, that reject treatments from flawed studies, or who distrust medical institutions based on their experiences of racism, sexism, and mis-diagnosis, are labeled as ���militant��� or ���irrational���, and placed in the same category with conspiracy theorists and those peddling disinformation.

On an individual level, receiving a psychological misdiagnosis lengthens the time it will take to get the right diagnosis, since many doctors will stop looking for physiological explanations. A study of 12,000 rare disease patients covered by the BBC found that ���while being misdiagnosed with the wrong physical disease doubled the time it took to get to the right diagnosis, getting a psychological misdiagnosis extended it even more ��� by 2.5 up to 14 times, depending on the disease.��� This dynamic holds true at the disease level as well: once a disease is mis-labeled as psychogenic, many doctors will stop looking for physiological origins.

We are seeing increasing efforts to dismiss long covid as psychogenic in high profile platforms such as the WSJ and New Yorker. The New Yorker���s first feature article on long covid, published last month, neglected to interview any clinicians who treat long covid patients nor to cite the abundant research on how covid causes damage to many organ systems, yet interviewed several doctors in unrelated fields who claim long covid is psychogenic. In response to a patient���s assertion that covid impacts the brain, the author spent an entire paragraph detailing how there is currently no evidence that covid crosses the blood-brain barrier, but didn���t mention the research on covid patients finding cognitive dysfunction and deficits, PET scans similar to those seen in Alzheimer���s patients, neurological damage, and shrinking grey matter. This leaves a general audience with the mistaken impression that it is unproven whether covid impacts the brain, and is a familiar tactic from bad-faith science debates.

The New Yorker article set up a strict dichotomy between long covid patients and doctors, suggesting that patients harbor a ���disregard for expertise���; are less ���concerned about what is and isn���t supported by evidence���; and are overly ���impatient.��� In contrast, doctors appreciate the ���careful study design, methodical data analysis, and the skeptical interpretation of results��� that medicine requires. Of course, this is a false dichotomy: many patients are more knowledgeable about the latest research than their doctors, some patients are publishing in peer-reviewed journals, and there are many medical doctors that are also patients. And on the other hand, doctors are just as prone as the rest of us to biases, blind spots, and institutional errors.

AP Photo/J. Scott Applewhite

AP Photo/J. Scott Applewhite In 1987, 40,000 Americans had already died of AIDS, yet the government and pharmaceutical companies were doing little to address this health crisis. AIDS was heavily stigmatized, federal spending was minimal, and pharmaceutical companies lacked urgency. The activists of ACT UP used a two pronged approach: creative and confrontational acts of protest, and informed scientific proposals. When the FDA refused to even discuss giving AIDS patients access to experimental drugs, ACT UP protested at their headquarters, blocking entrances and lying down in front of the building with tombstones saying ���Killed by the FDA���. This opened up discussions, and ACT UP offered viable scientific proposals, such as switching from the current approach of conducting drug trials on a small group of people over a long time, and instead testing a large group of people over a short time, radically speeding up the pace at which progress occurred. ACT UP used similar tactics to protest the NIH and pharmaceutical companies, demanding research on how to treat the opportunistic infections that killed AIDS patients, not solely research for a cure. The huge progress that has happened in HIV/AIDS research and treatment would not have happened without the efforts of ACT UP.

Across the world, we are at a pivotal time in determining how societies and governments will deal with the masses of newly disabled people due to long covid. Narratives that take hold early often have disproportionate staying power. Will we inaccurately label long covid as psychogenic, primarily invest in psychiatric research that can���t address the well-documented physiological damage caused by covid, and financially abandon the patients who are now unable to work? Or will we take the chance to transform medicine to better recognize the lived experiences and knowledge of patients, to center patient partnerships in biomedical research for complex and multi-system diseases, and strengthen inadequate disability support and services to improve life for all people with disabilities? The decisions we collectively make now on these questions will have reverberations for decades to come.

September 24, 2021

Inaccuracies, irresponsible coverage, and conflicts of interest in The New Yorker

If you haven���t already read the terrible New Yorker long covid article, I don���t recommend doing so. Here is the letter I sent to the editor. Feel free to reuse or modify as you like. The below are just a subset of the many issues with the article. If you are looking for a good overview of long covid and patient advocacy, please instead read Ed Yong���s Long-Haulers Are Fighting for their Future.

Dear New Yorker editors,

I was disturbed by the irresponsible description of a suicide (in violation of journalism guidelines), undisclosed financial conflicts of interest, and omission of relevant medical research and historical context in the article, ���The Struggle to Define Long Covid,��� by Dhruv Khullar.

Irresponsible description of a suicideThe article contained a description of a patient���s suicide with sensationalistic details, including about the method used. This is a violation of widely accepted journalism standards on how to cover suicide. Over 100 studies worldwide have found that risk of suicide contagion is real and responsible reporting can help mitigate this. Please consider these resources developed in collaboration with a host of organizations, including the CDC: Recommendations ��� Reporting on Suicide

Financial Conflicts of InterestBoth Khullar and one of his sources, Vinay Prasad, have venture capital funding from Arnold Venture for projects that help hospitals reduce costs by cutting care. Thus, they stand to gain financially by minimizing the reality of long covid. At a minimum, your article should be updated to note these conflicts of interest.

Omission of relevant historyKhullar repeatedly discussed how patients distrust doctors and may accuse doctors of ���gaslighting,��� yet he never once mentioned why this would be the case: the long history of medical racism, sexism, and ableism, all of which are ongoing problems in medicine and well-documented in dozens of peer-reviewed studies. He also failed to mention the history of many other physiological conditions (such as multiple sclerosis) being dismissed as psychological until scientists caught up and found the physiological origins. His description of the AIDS activist group ACT UP didn���t acknowledge that AIDS research would not have progressed in the incredible way that it has if ACT UP had not ���clashed with scientists.���

Omission of relevant medical researchKhullar omitted the extensive medical research on how long covid patients, including those with mild or no symptoms, have been shown to suffer physiological issues including neurological damage, complement-mediated thrombotic microangiopathy, GI immune system damage, corneal nerve fibre loss, immunological dysfunction, increased risk of kidney outcomes, dysfunction in T cell memory generation, cognitive dysfunction and deficits possibly setting the stage for Alzheimer���s, and ovarian failure.

Khullar tries to portray his main subject (and by extension, long covid patients in general) as unscientific, focusing on statements that are not substantiated by academic research, rather than highlighting the many aspects of long covid that are already well researched and well supported (or the research on related post-viral illnesses, including POTS and ME/CFS), or even all the long covid patients who have published in peer-reviewed journals. For example, when his main subject talks about long covid impacting the brain, Khullar hyper focuses on explaining that there is no evidence of covid crossing the blood-brain barrier, ignoring the larger point (which is supported by numerous studies) that long covid causes changes to the brain, even if the exact mechanism is unknown. This is a tactic commonly used by those trying to make science appear murkier than it is, and a general audience will leave this article with harmful misunderstandings.

I hope that you will update Khullar���s article to address these inaccuracies, financial conflicts of interest, and irresponsible suicide coverage. In its current state, many readers of this article will walk away with misconceptions about long covid, causing them to underestimate its severity and how much has already been confirmed by research.

Sincerely,

Rachel Thomas, PhD

Feel free to reuse or modify my post above if it is helpful to you. From the New Yorker website:

Please send letters to themail@newyorker.com, and include your postal address and daytime phone number. Letters may be edited for length and clarity, and may be published in any medium. All letters become the property of The New Yorker.

Medicine is PoliticalThe myth that medicine is perfectly rational, objective, and apolitical (in contrast to irrational activist patients) pervaded Khullar���s article. I put together a twitter thread with links to other history, research, and resources to try to debunk this. Please read more here:

Medicine, like all of science, is political:

— Rachel Thomas (@math_rachel) September 25, 2021

- which questions get asked

- which projects get funded

- how debates get framed

- who the researchers are

- context of data (what categories, what labels, which biases, what is left out)

- whose suffering is counted 1/

September 2, 2021

Australia can, and must, get R under 1.0

Contents We can get R under 1.0 Better masks Ventilation Rapid tests Hitting a vaccination target We must get R under 1.0 Over 200,000 children will develop chronic illness The Doherty Model greatly underestimates risks ���Live with covid��� means mass hospitalizations and ongoing outbreaks ConclusionWe can get R under 1.0Summary: By using better masks, monitoring and improving indoor air quality, and rolling out rapid tests, we could quickly halt the current outbreaks in the Australian states of New South Wales (NSW) and Victoria. If we fail to do so, and open up before 80% of all Australians are vaccinated, we may have tens of thousands of deaths, and hundreds of thousands of children with chronic illness which could last for years.

Pandemics either grow exponentially, or disappear exponentially. They don���t just stay at some constant level. If the reproduction number R, which is how many people each infected person transmits to, is greater than 1.0 in a region, then the pandemic grows exponentially and becomes out of control (as we see in NSW now), or it is less than 1.0, in which case the virus dies out.

No Australian state or territory is currently using any of the three best ���bang for your buck��� public health interventions: better masks, better ventilation, or rapid testing. Any of these on their own (combined with the existing measures being used in Vic) would likely be enough to get R<1. The combination of them would probably kill off the outbreaks rapidly. At that point life can largely return to normal.

Stopping delta is not impossible. Other jurisdictions have done it, including Taiwan and China. New Zealand appears to be well on the way too. There���s no reason Australia can���t join them.

Better masksScientists have found that using better masks is the single best way to decrease viral transmission in a close indoor setting. They showed that if all teachers and students wear masks with good fit and filtration, transmission is reduced by a factor of around 300 times. The CDC has found that two free and simple techniques to enhance the fit of surgical masks, ���double masking��� and ���knot and tuck���, both decrease virus exposure by a factor of more than ten compared to wearing a cloth or surgical mask alone. For more information, see my article (with Zeynep Tufekci) in The Atlantic.

VentilationWe now know that covid is airborne. That means that we need clean air. A recent study has shown that the key to managing this is to monitor CO2 levels in indoor spaces. That���s because CO2 levels are a good proxy for how well air is being circulated. Without proper ventilation, CO2 levels go up, and if there are infected people around virus levels go up too.

CO2 monitors can be bought in bulk for around $50. Standards should be communicated for what acceptable maximum levels of CO2 are for classrooms, workplaces, and public indoor spaces, and education provided on how to improve air quality. Where CO2 levels can not be controlled, air purifiers with HEPA filtration should be required.

Better ventilation can decrease the probability of infection by a factor of 5-10 compared to indoor spaces which do not have good airflow.

Rapid testsRapid antigen lateral flow tests are cheap, and provide testing results within 15-30 minutes. They have very few false positives. A Brisbane-based company, Ellume, has an FDA approved rapid test, and is exporting it around the world. But we���re not using it here in Australia.

If every workplace and school required daily rapid tests, around 75% of cases in these locations would be identified. Positive cases would isolate until they have results from a follow-up PCR test. Using this approach, transmission in schools and workplaces would be slashed by nearly three quarters, bringing R well under 1.0.

In the UK every child was tested twice a week in the last school term. Recent research suggests that daily rapid tests could allow more students to stay at school.

Hitting a vaccination targetThe Grattan Institute found we need to vaccinate at least 80% of the total population (including children) this year, and continue the vaccination rollout to 90% throughout 2022. Clinical trials for the vaccine in kids are finishing this month. If we can quickly ramp up the roll-out to kids, and maintain the existing momentum of vaccinations in adults, we may be able to achieve the 80% goal by the end of the year.

It���s important to understand, however, that no single intervention (including vaccination) will control covid. Many countries with high vaccination rates today have high covid death rates, due to waning immunity and unvaccinated groups. The point of all of these interventions is to reduce R. When R is under 1 and cases are under control, restrictions are not needed; otherwise, they are needed.

We must get R under 1.0Over 200,000 children will develop chronic illnessThe Doherty Report predicts that over three hundred thousand children will get symptomatic covid, and over 1.4 million kids will be infected, in the next 6 months if restrictions are reduced when 70% of adults are vaccinated. This may be a significant under-estimate: a recent CDC study predicts that 75% of school-kids would get infected in three months in the absence of vaccines and masks.

New research has found that one in seven infected kids may go on to develop ���long covid���, a debilitating illness which can impact patients for years. Based on this data, we are looking at two hundred thousand kids (or possibly far more) with chronic illness. The reality may be even worse than this, since that research uses PCR tests to find infected kids, but PCR testing strategies have been shown to fail to identify covid in kids about half the time. Furthermore, this study looked at the alpha variant. The delta variant appears to be about twice as severe.

It���s too early to say when, or if, these children will recover. Some viruses such as polio led to life-long conditions, which weren���t discovered until years later. Long covid has a lot of similarities to myalgic encephalomyelitis, which for many people is a completely debilitating life-long condition.

In regions which have opened up, such as Florida, schools were ���drowning��� in cases within one week of starting term. In the UK, lawsuits are now being filed based on the risks being placed on children.

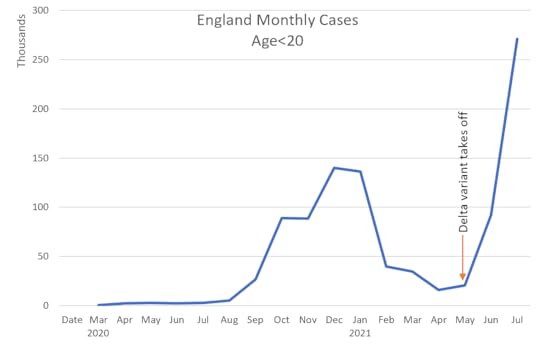

Delta rips through unvaccinated populations. For instance, in England delta took hold during May 2021. English schools took a cautious approach, placing school children in ���bubbles��� which did not mix. After school children were required to go directly home and not mix with anyone else. Nonetheless, within three months, more kids were getting infected than had ever been before. Cases in July 2021 were around double the previous worst month of December 2020.

Cases in English children The Doherty Model greatly underestimates risks

Cases in English children The Doherty Model greatly underestimates risksThe Doherty Model, which is being used as a foundation for Australian reopening policy, has many modeling and reporting issues which result in the Doherty Report greatly underestimating risks. (These issues are generally a result of how the report was commissioned, rather than being mistakes made by those doing the modeling.)

The Doherty Model has to work with incomplete data, such as the very limited information we have about the behavior of the delta variant. The recommended practice in this kind of situation is to not make a single assumption about the premises in a model, but to instead model uncertainty, by including a range of possible values for each uncertain premise. The Doherty Model does not do this. Instead, ���point estimates���, that is, a single guess for each premise, are used. And a single output is produced by the model for each scenario.

This is a critical deficiency. By failing to account for uncertainty in inputs, or uncertainty in future changes (such as new variants), the model also fails to account for uncertainty in outputs. What���s the probability that the hospitalizations are far more rapid than in their single modeled outcome, such that Australian ICUs are overloaded? We don���t know, because that work hasn���t been done.

The Doherty Model makes a critical error in how it handles the Delta variant: ���we will assume that the severity of Delta strains approximates Alpha strains���. We now know that it is incorrect: latest estimates are that ���People who are infected with the highly contagious Delta variant are twice as likely to be hospitalized as those who are infected with the Alpha variant���.

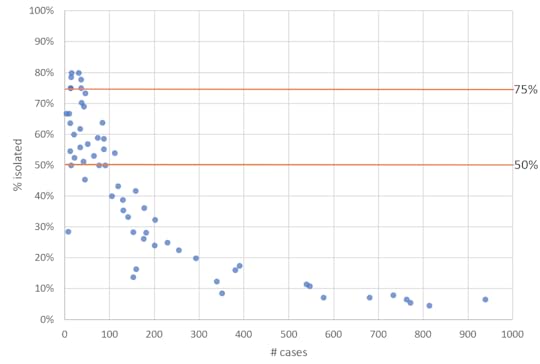

The model also fails to correctly estimate the efficacy of Test, Trace, Isolate, and Quarantine (TTIQ). It assumes that TTIQ will be ���optimal��� for ���hundreds of daily cases���, and ���partial��� for thousands of cases. However, in NSW optimal TTIQ was no longer maintained after just 50 cases, and the majority of cases were no longer isolating after 100 daily cases.

NSW TTIQ efficacy vs Number of Daily Cases

NSW TTIQ efficacy vs Number of Daily Cases The Doherty Model assumes that vaccines are equally distributed throughout the country. This is mentioned in the report, and has also been confirmed by talking directly with those doing the modeling. However, there are groups where that���s not true. For instance, indigenous communities are only around ��� vaccinated. In this group, if restrictions are removed, then R will return towards 5.0 (the reproduction number of delta without vaccines or restrictions). As a result, nearly the entire population will be infected within months.

The same thing will happen with kids. The Doherty model fails to model school mixing, but instead makes a simplifying assumption that children have some random chance of meeting random other children each day. In practice however, they have a 100% chance of mixing with exactly the same children every day, at school.

The Doherty Model misses the vast majority of cases. That���s because it entirely ignores all cases after 180 days (when most cases occur). Another model has estimated the full impact of covid without such a time limitation. It finds that there would be around 25,000 deaths in Australia in the absence of restrictions.

A major problem with the National Plan based on the Doherty Report is that it goes directly from vaccination rate to actions, and bakes in all the model assumptions. It can���t take into account unanticipated changes, such as more transmissible variants, or mass infections of hospital staff.

It would be far better to decide actions in terms of measurements that reflect changing current conditions ��� that is, R and remaining health-care Capacity. The Doherty Institute models could be reported as estimated R and Capacity at 70% and 80% vaccination rates of adults, which is 56% and 64% of the full population.

Reducing transmission restrictions when R>1 or there is insufficient remaining capacity would be madness regardless of the vaccination rate.

���Live with covid��� means mass hospitalizations and ongoing outbreaksBased on current projections, the best case scenario in one month���s time there will be over 2000 people hospitalized with covid in NSW, with over 350 in ICU. This is going to be a big stretch on the state���s resources. The same will happen in other states that fail to control outbreaks prior to achieving at least 80% vaccination rates of all populations, including children and indigenous communities.

Even when most adults are vaccinated, covid doesn���t go away. Immunity wanes after a few months, and there will continue to be groups where fewer people have been vaccinated. We can estimate the longer term impact of covid by looking at other countries. In the UK, 75% of 16+ residents are vaccinated. There are currently 700 covid deaths and 250,000 cases per week in the UK. If our death rate is proportionate, that would mean 266 Australians dying per week even after we get to 75% vaccinated (along with thousands of long covid cases, with their huge economic and societal cost). By comparison, there were 9 weekly deaths from flu in Australia in 2019.

ConclusionWe are now hearing political leaders in Victoria and NSW giving up on getting the outbreaks under control. But we haven���t yet deployed the three easiest high-impact public health interventions we have at our disposal: better masks, better ventilation, and rapid tests. Any one of these (along with the existing measures) would be likely to neutralize the outbreaks; their impacts combined will be a powerful weapon.

If we don���t do this, then covid will leave hundreds of thousands of Australian children with chronic illness, and kill thousands of Australians. This is entirely avoidable.

Acknowledgements: Thanks to Dr Rachel Thomas for many discussions about this topic and for draft review. Thanks also to the many Australian scientists with whom I consulted during development of this article.

August 15, 2021

Getting Specific about AI Risks (an AI Taxonomy)

The term ���Artificial Intelligence��� is a broad umbrella, referring to a variety of techniques applied to a range of tasks. This breadth can breed confusion. Success in using AI to identify tumors on lung x-rays, for instance, may offer no indication of whether AI can be used to accurately predict who will commit another crime or which employees will succeed, or whether these latter tasks are even appropriate candidates for the use of AI. Misleading marketing hype often clouds distinctions between different types of tasks and suggests that breakthroughs on narrow research problems are more broadly applicable than is the case. Furthermore, the nature of the risks posed by different categories of AI tasks varies, and it is crucial that we understand the distinctions.

One source of confusion is that in fiction and the popular imagination, AI has often referred to computers achieving human consciousness: a broad, general intelligence. People may picture a super-smart robot, knowledgeable on a range of topics, able to perform many tasks. In reality, the current advances happening in AI right now are narrow: a computer program that can do one task, or class of tasks, well. For example, a software program analyzes mammograms to identify likely breast cancer, or a completely different software program provides scores to essays written by students, although is fooled by gibberish using sophisticated words. These are separate programs, and fundamentally different from the depictions of human-like AI in science fiction movies and books.

It is understandable that the public may often assume that since companies and governments are implementing AI for high-stakes tasks like predictive policing, determining healthcare benefits, screening resumes, and analyzing video job interviews, it must be because of AI���s superior performance. However, the sad reality is that often AI is being implemented as a cost-cutting measure: computers are cheaper than employing humans, and this can cause leaders to overlook harms caused by the switch, including biases, errors, and a failure to vet accuracy claims.

In a talk entitled ���How to recognize AI snake oil���, Professor Arvind Narayanan created a useful taxonomy of three types of tasks AI is commonly being applied to right now:

Perception: facial recognition, reverse image search, speech to text, medical diagnosis from x-rays or CT scans Automating judgement: spam detection, automated essay grading, hate speech detection, content recommendation Predicting social outcomes: predicting job success, predicting criminal recidivism, predicting at-risk kidsThe above 3 categories are not comprehensive of all uses of AI, and there are certainly innovations that span across them. However, this taxonomy is a useful heuristic for considering differences in accuracy and differences in the nature of the risks we face. For perception tasks, some of the biggest ethical concerns are related to how accurate AI can be (e.g. for the state to accurately surveil protesters has chilling implications for our civil rights), but in contrast, for predicting social outcomes, many of the products are total junk, which is harmful in a different way.

The first area, perception, which includes speech to text and image recognition, is the area where researchers are making truly impressive, rapid progress. However, even within this area, that doesn���t mean that the technology is always ready to use, or that there aren���t ethical concerns. For example, facial recognition often has much higher error rates on dark-skinned women, due to unrepresentative training sets. Even when accuracy is improved to remove this bias, the use of facial recognition by police to identify protesters (which has happened numerous times in the USA) is a grave threat to civil rights. Furthermore, how a computer algorithm performs in a controlled, academic setting can be very different from how it performs when deployed in the real world. For example, Google Health developed a computer program that identifies diabetic retinopathy with 90% accuracy when used on high-quality eye scans. However, when it was deployed in clinics in Thailand, many of the scans were taken in poor lighting conditions, and over 20% of all scans were rejected by the algorithm as low quality, creating great inconvenience for the many patients that had to take another day off of work to travel to a different clinic to be retested.

While improvements are being made in the area of category 2, automating judgement, the technology is still faulty and there are limits to what is possible here due to the fact that culture and language usage are always evolving. Widely used essay grading software rewards ���nonsense essays with sophisticated vocabulary,��� and is biased against African-American students, giving their essays lower grades than expert human graders do. The software is able to measure sentence length, vocabulary, and spelling, but is unable to recognize creativity or nuance. Content from LGBTQ YouTube creators was mislabeled as ���sexually explicit��� and demonetized, harming their livelihoods. As Ali Alkhatib wrote, ���The algorithm is always behind the curve, executing today based on yesterday���s data��� This case [of YouTube demonetizing LGBTQ creators] highlights a shortcoming with a commonly offered solution to these kinds of problems, that more training data would eliminate errors of this nature: culture always shifts.��� This is a fundamental limitation of this category: language is always evolving, new slurs and forms of hate speech develop, just as new forms of creative expression do as well.

Narayanan labels the third category, of trying to predict social outcomes, as ���fundamentally dubious.��� AI can���t predict the future, and to label a person���s potential is deeply concerning. Often, these approaches are no more accurate than simple linear regression. Social scientists spent 15 years painstakingly gathering a rich longitudinal dataset on families containing 12,942 variables. When 160 teams created machine learning models to predict which children in the dataset would have adverse outcomes, the most accurate submission was only slightly better than a simple benchmark model using just 4 variables, and many of the submissions did worse than the simple benchmark. In the USA, there is a black box software program with 137 inputs used in the criminal justice system to predict who is likely to be re-arrested, yet it is no more accurate than a linear classifier on just 2 variables. Not only is it unclear that there have been meaningful AI advances in this category, but more importantly the underlying premise of such efforts raises crucial questions about whether we should be attempting to use algorithms to predict someone���s future potential at all. Together with Matt Salganik, Narayanan has further developed these ideas in a course on the Limits to Prediction (check out the course pre-read, which is fantastic).

Narayanan���s taxonomy is a helpful reminder that advances in one category don���t necessarily mean much for a different category, and he offers the crucial insight that different applications of AI create different fundamental risks. The overly general term artificial intelligence, misleading hype from companies pushing their products, and confusing media coverage often cloud distinctions between different types of tasks and suggest that breakthroughs on narrow problems are more broadly applicable than they are. Understanding the types of technology available, as well as the distinct risks they raise, is crucial to addressing and preventing harmful misuses.

Read Narayanan���s How to recognize AI snake oil slides and notes for more detail.

This post was originally published on the USF Center for Applied Data Ethics (CADE) blog.

11 Short Videos About AI Ethics

I made a playlist of 11 short videos (most are 6-13 mins long) on Ethics in Machine Learning. This is from my ethics lecture in Practical Deep Learning for Coders v4. I thought these short videos would be easier to watch, share, or skip around.

What are Ethics and Why do they Matter? Machine Learning Edition: Through 3 key case studies, I cover how people can be harmed by machine learning gone wrong, why we as machine learning practitioners should care, and what tech ethics are.

All machine learning systems need ways to identify & address mistakes. It is crucial that all machine learning systems are implemented with ways to correctly surface and correct mistakes, and to provide recourse to those harmed.

The Problem with Metrics, Feedback Loops, and Hypergrowth: Overreliance on metrics is a core problem both in the field of machine learning and in the tech industry more broadly. As Goodhart���s Law tells us, when a measure becomes the target, it ceases to be a good measure, yet the incentives of venture capital push companies in this direction. We see out-of-control feedback loops, widespread gaming of metrics, and people being harmed as a result.

Not all types of bias are fixed by diversifying your dataset. The idea of bias is often too general to be useful. There are several different types of bias, and different types require different interventions to try to address them. Through a series of cases studies, we will go deeper into some of the various causes of bias.

Part of the Ethics Videos Playlist

Part of the Ethics Videos Playlist Humans are biased too, so why does machine learning bias matter? A common objection to concerns about bias in machine learning models is to point out that humans are really biased too. This is correct, yet machine learning bias differs from human bias in several key ways that we need to understand and which can heighten the impact.

7 Questions to Ask About Your Machine Learning Project

What You Need to Know about Disinformation: With a particular focus on how machine learning advances can contribute to disinformation, this covers some of the fundamental things to understand.

Foundations of Ethics: We consider different lenses through which to evaluate ethics, and what sort of questions to ask.

Tech Ethics Practices to Implement at your Workplace: Practical tech ethics practices you can implement at your workplace.

How to Address the Machine Learning Diversity Crisis: Only 12% of machine learning researchers are women. Based on research studies, I outline some evidence-based steps to take towards addressing this diversity crisis.

Advanced Technology is not a Substitute for Good Policy: We will look at some examples of what incentives cause companies to change their behavior or not (e.g. being warned for years of your role in an escalating genocide vs. threat of a hefty fine), how many AI ethics concerns are actually about human rights, and case studies of what happened when regulation & safety standards came to other industries.

You can find the full playlist here.

August 1, 2021

fastdownload: the magic behind one of the famous 4 lines of code

BackgroundSummary: Today we���re launching fastdownload, a new library that makes it easy for your users to download, verify, and extract archives.

At fast.ai we focussed on making important technical topics more accessible. That means that the libraries we create do as much as possible for the user, without limiting what���s possible.

fastai is famous for needing just four lines of code to get world-class deep learning results with vision, text, tabular, or recommendation system data:

path = untar_data(URLs.PETS)dls = ImageDataLoaders.from_name_func(path, get_image_files(path/"images"), label_func, item_tfms=Resize(224))learn = cnn_learner(dls, resnet34, metrics=error_rate)learn.fine_tune(1)There have been many pages written about most of these: the flexibility of the Data Block API, the power of cnn_learner, and the state of the art transfer learning provided by fine_tune.

But what about untar_data? This first line of code, although rarely discussed, is actually a critical part of the puzzle. Here���s what it does:

If required, download the URL to a special folder (by default, ~/.fastai/archive). If it was already downloaded earlier, skip this step Check whether the size and hash of the downloaded (or cached) archive matches what fastai expects. If it doesn���t, try downloading again If required, extract the downloaded file to another special folder (by default, ~/.fastai/archive). If it was already extracted earlier, skip this step Return a Path object pointing at the location of the extracted archive.Thanks to this, users don���t have to worry about where their archives and data can be stored, whether they���ve downloaded a URL before or not, and whether their downloaded file is the correct version. fastai handles all this for the user, letting them spend more of their time on the actual modeling process.

fastdownloadfastdownload, launched today, allows you to provide this same convenience for your users. It helps you make datasets or other archives available for your users while ensuring they are downloaded correctly with the latest version.

Your user just calls a single method, FastDownload.get, passing the URL required, and the URL will be downloaded and extracted to the directories you choose. The path to the extracted file is returned. If that URL has already been downloaded, then the cached archive or contents will be used automatically. However, if that size or hash of the archive is different to what it should be, then the user will be informed, and a new version will be downloaded.

In the future, you may want to update one or more of your archives. When you do so, fastdownload will ensure your users have the latest version, by checking their downloaded archives against your updated file size and hash information.

fastdownload will add a file download_checks.py to your Python module which contains file sizes and hashes for your archives. Because it���s a regular python file, it will be automatically included in your package if you upload it to pypi or a conda channel.

Here���s all you need to provide a function that works just like untar_data:

from fastdownload import FastDownloaddef untar_data(url): return FastDownload(base='~/.myapp').get(url)You can modify the locations that files are downloaded to by creating a config file ~/.myapp/config.ini (if you don���t have one, it will be created for you). The values in this file can be absolute or relative paths (relative paths are resolved relative to the location of the ini file).

If you want to give fastdownload a try, then head over to the docs and follow along with the walk-thru.

July 18, 2021

Is GitHub Copilot a blessing, or a curse?

GitHub Copilot is a new service from GitHub and OpenAI, described as ���Your AI pair programmer���. It is a plugin to Visual Studio Code which auto-generates code for you based on the contents of the current file, and your current cursor location.

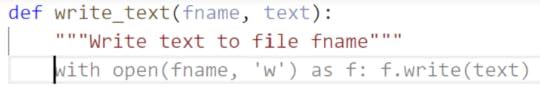

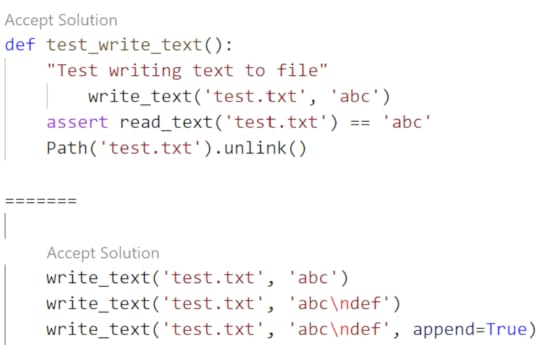

It really feels quite magical to use. For example, here I���ve typed the name and docstring of a function which should ���Write text to file fname���:

The grey body of the function has been entirely written for me by Copilot! I just hit Tab on my keyboard, and the suggestion gets accepted and inserted into my code.

This is certainly not the first ���AI powered��� program synthesis tool. GitHub���s Natural Language Semantic Code Search in 2018 demonstrated finding code examples using plain English descriptions. Tabnine has provided ���AI powered��� code completion for a few years now. Where Copilot differs is that it can generate entire multi-line functions and even documentation and tests, based on the full context of a file of code.

This is particularly exciting for us at fast.ai because it holds the promise that it may lower the barrier to coding, which would greatly help us in our mission. Therefore, I was particularly keen to dive into Copilot. However, as we���ll see, I���m not yet convinced that Copilot is actually a blessing. It may even turn out to be a curse.

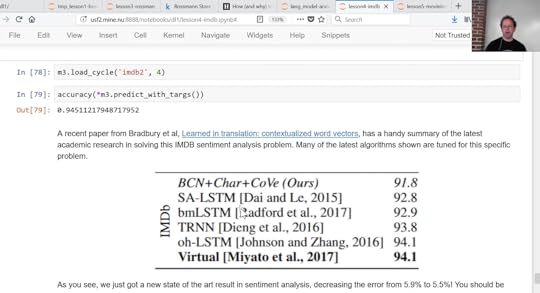

Copilot is powered by a deep neural network language model called Codex, which was trained on public code repositories on GitHub. This is of particular interest to me, since in back in 2017 I was the first person to demonstrate that a general purpose language model can be fine-tuned to get state of the art results on a wide range of NLP problems. I developed and showed that as part of a fast.ai lesson. Sebastian Ruder and I then fleshed out the approach and wrote a paper, which was published in 2018 by the Association for Computational Linguistics (ACL). OpenAI���s Alec Radford told me that this paper inspired him to create GPT, which Codex is based on. Here���s the moment from that lesson where I showed for the first time that language model fine tuning gives a start of the art result in classifying IMDB sentiment:

A language model is trained to guess missing words in a piece of text. The traditional ���ngram��� approach used in previous years can not do a good job of this, since context is required to guess correctly. For instance, consider how you would go about filling in the missing words in each of these examples:

Knowing that in one case ���hot day��� is correct, but in another that ���hot dog��� is correct, requires reading and (to some extent) understanding the whole sentence. The Codex language model learns to guess missing symbols in programming code, so it has to learn a lot about the structure and meaning of computer code. As we���ll discuss later, language models do have some significant limitations that are fundamentally due to how they���re created.

The fact that Copilot is trained on publicly available code, under a variety of licenses, has led to many discussions about the ethical and legal implications. Since this has been widely discussed I won���t go into it further here, other than to point out one clear legal issue for users of Copilot discussed by IP lawyer Kate Downing, which is that in some cases using Copilot���s suggestions may be a breach of license (or require relicensing your own work under a GPL-compatible license):

Walk-through���The more complex and lengthy the suggestion, the more likely it has some sort of copyrightable expression.���

Before we dive into Copilot more deeply, let���s walk-through some more examples of using it in practice.

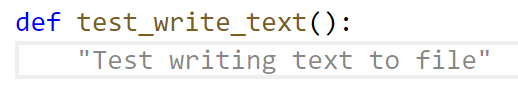

In order to know whether that auto-generated write_text function actually works, we need a test. Let���s get Copilot to write that too! In this case, I just typed in the name of my test function, and Copilot filled in the docstring for me:

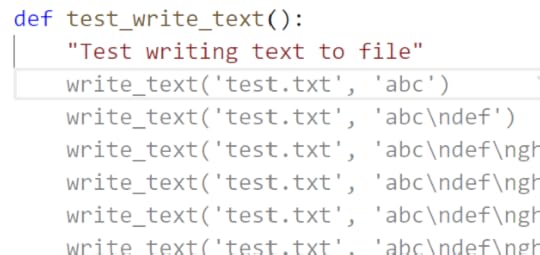

After accepting that suggestion, Copilot got a bit confused and suggested a meaningless function containing many lines of near duplicate code:

No problem ��� Copilot can show us other suggested options, by hitting Ctrl-Enter. The first listed actually looks pretty reasonable (except for an odd extra tab character in the first line):

Here Copilot assumes there���s a function available called read_text, which doesn���t actually exist. But it���s easy enough for us to ask Copilot to write and test that for us too.

I also asked Copilot to create a function for me that would ���Tar and optionally compress directory dir to dest in bz2, xz, or gzip format���, which resulted in:

def tar_dir(dir, dest): "Tar and optionally compress directory `dir` to `dest` in bz2, xz, or gzip format" if dest.endswith('.bz2'): with tarfile.open(dest,'w:bz2') as f: f.add(dir, arcname=os.path.basename(dir)) elif dest.endswith('.xz'): with tarfile.open(dest,'w:xz') as f: f.add(dir, arcname=os.path.basename(dir)) elif dest.endswith('.gz'): with tarfile.open(dest,'w:gz') as f: f.add(dir, arcname=os.path.basename(dir)) else: raise ValueError('bad dest: {}'.format(dest))I also had Copilot create a test using the same basic approach as above, and it wrote:

with TemporaryDirectory() as tmp: write_text(os.path.join(tmp,'a.txt'),'hello') write_text(os.path.join(tmp,'b.txt'),'world') tar_dir(tmp,'out.tar.bz2') assert os.path.exists('out.tar.bz2') with tarfile.open('out.tar.bz2') as f: assert f.getnames()==['a.txt','b.txt']That test doesn���t actually pass, since getnames in the last line includes the parent directory, but it���s an easy enough fix from there. Copilot even cleverly decided to use the write_text function I created earlier, which is something I wasn���t expecting.

You can even use Copilot to write prose. I���m writing this blog post in vscode right now, and just clicked the ���enable Copilot��� button. After I typed the previous sentence, here���s what Copilot recommended as a completion:

���I can now write my blog post in a single line of text, and Copilot will generate the rest of the post for me���

Clearly Copilot has a rather inflated understanding of its own prose generation capabilities!

Code problemsThe code Copilot writes is not very good code. For instance, consider the tar_dir function above. There���s a lot of duplicate code there, which means more code to maintain in the future, and more code for a reader to understand. In addition, the docstring said ���optionally compress���, but the generated code always compresses. We could fix these issues by writing it this way instead:

def tar_dir(dir, dest): "Tar and optionally compress directory `dir` to `dest` in bz2, xz, or gzip format" suf = ':' + Path(dest).suffix[1:] if suf==':tar': suf='' with tarfile.open(dest,f'w{suf}') as f: f.add(dir, arcname=dir)A bigger problem is that both write_text and tar_dir shouldn���t have been written at all, since the functionality for both is already provided by Python���s standard library (as pathlib���s write_text and shutil���s make_archive). The standard library versions are also better, with pathlib���s write_text doing additional error checking and supporting text encoding and error handling, and make_archive supporting zip files and any other archive format you register.

Why Copilot writes bad codeAccording to OpenAI���s paper, Codex only gives the correct answer 29% of the time. And, as we���ve seen, the code it writes is generally poorly refactored and fails to take full advantage of existing solutions (even when they���re in Python���s standard library).

Copilot has read GitHub���s entire public code archive, consisting of tens of millions of repositories, including code from many of the world���s best programmers. Given this, why does Copilot write such crappy code?

The reason is because of how language models work. They show how, on average, most people write. They don���t have any sense of what���s correct or what���s good. Most code on GitHub is (by software standards) pretty old, and (by definition) written by average programmers. Copilot spits out it���s best guess as to what those programmers might write if they were writing the same file that you are. OpenAI discuss this in their Codex paper:

���As with other large language models trained on a next-token prediction objective, Codex will generate code that is as similar as possible to its training distribution. One consequence of this is that such models may do things that are unhelpful for the user���

One important way that Copilot is worse than those average programmers is that it doesn���t even try to compile the code or check that it works or consider whether it actually does what the docs say it should do. Also, Codex was not trained on code created in the last year or two, so it���s entirely missing recent versions, libraries, and language features. For instance, prompting it to create fastai code results only in proposals that use the v1 API, rather than v2, which was released around a year ago.

Complaining about the quality of the code written by Copilot feels a bit like coming across a talking dog, and complaining about its diction. The fact that it���s talking at all is impressive enough!

Let���s be clear: The fact that Copilot (and Codex) writes reasonable-looking code is an amazing achievement. From a machine learning and language synthesis research point of view, it���s a big step forward.

But we also need to be clear that reasonable-looking code that doesn���t work, doesn���t check edge cases, and uses obsolete methods, and is verbose and creates technical debt, can be a big problem.

The problems with auto-generated codeCode creation tools have been around nearly as long as code has been around. And they���ve been controversial throughout their history.

Most time coding is not taken up in writing code, but with designing, debugging, and maintaining code. When code is automatically generated, it���s easy to end up with a lot more of it. That���s not necessarily a problem, if all you have to do to maintain or debug it is to modify the source which the code is auto-generated from, such as when using code template tools. Even then, things can get confusing when debugging, since the debugger and stack traces will generally point at the verbose generated code, not at the templated source.

With Copilot, we don���t have any of these upsides. We nearly always have to modify the code that���s created, and if we want to change how it works, we can���t just go back and change the prompt. We have to debug the generated code directly.

As a rule of thumb, less code means less to maintain and understand. Copilot���s code is verbose, and it���s so easy to generate lots of it that you���re likely to end up with a lot of code!

Python has rich dynamic and meta-programming features that greatly reduce the need for code generation. I���ve heard a number of programmers say that they like that Copilot writes a lot of boilerplate for you. However, I almost never write any boilerplate anyway ��� any time in the past I found myself needing boilerplate, I used dynamic Python to refactor the boilerplate out so I didn���t need to write it or generate it any more. For instance, in ghapi I used dynamic Python to create a complete interface to GitHub���s entire API in a package that weighs in at just 40kB (by comparison, an equivalent packages in Go contains over 100,000 lines of code, most of it auto-generated).

A very instructive example is what happened when I prompted Copilot with:

def finetune(folder, model): """fine tune pytorch model using images from folder and report results on validation set"""With a very small amount of additional typing, it generated these 89 lines of code nearly entirely automatically! In one sense, that���s really impressive. It does indeed basically do what was requested ��� finetune a PyTorch model.

However, it finetunes the model badly. This model will train slowly, and result in poor accuracy. Fine tuning a model correctly requires considering things like handling batchnorm layer statistics, finetuning the head of the model before the body, picking a learning rate correctly, using an appropriate annealing schedule, and so forth. Also, we probably want to use mixed precision training on any CUDA GPU created in the last few years, and are likely to want to add better augmentation methods such as MixUp. Fixing the code to add these would require many hundreds of lines more code, and a lot of expertise in deep learning, or the use of a higher level API such as fastai, which can finetune a PyTorch model in 4 lines of code, resulting in something with higher accuracy, faster, and that���s more extensible.)

I���m not really sure what would be best for Copilot to do in this situation. I don���t think what it���s doing now is actually useful in practice, although it���s an impressive-looking demonstration.

Parsing Python with a regular expressionI asked the fast.ai community for examples of times where Copilot had been helpful in writing code for them. One person told me they found it invaluable when they were writing a regex to extract comments from a string containing python code (since they wanted to map each parameter name in a function to its comment). I decided to try this for myself. Here���s the prompt for Copilot:

code_str = """def connect( host:str, # host to connect to port:int=80, # port to connect to ssl:bool=True, # whether to use SSL) -> socket.socket: # the connected socket"""# regex to extract comments from strings looking like code_strHere���s the generated code:

comment_re = re.compile(r'^\s*#.*$', re.MULTILINE)This code doesn���t work, since the ^ character is incorrectly binding the match to the start of the line. It���s also not actually capturing the comment since it���s missing any capturing groups. (The second suggestion from Copilot correctly removes the ^ character, but still doesn���t include the capturing group.)

These are minor issues, however, compared to the big problem with this code, which is that a regex can���t actually parse Python comments correctly. For instance, this would fail, since the # in tag_prefix:str="#" would be incorrectly parsed as the start of a comment:

code_str = """def find_tags( input_str:str, # the string to search for tags tag_prefix:str="#" # prefix marking the start of a tag) -> List[str]: # list of all tags foundIt turns out that it���s not possible to correctly parse Python code using regular expressions. But Copilot did what we asked: in the prompt comment we explicitly asked for a regex, and that���s what Copilot gave us. The community member who provided this example did exactly that when they wrote their code, since they assumed that a regex was the correct way to solve this problem. (Although even when I tried removing ���regex to��� from the prompt Copilot still prompted to use a regex solution.) The issue in this case isn���t really that Copilot is doing something wrong, it���s that what it���s designed to do might not be what���s in the best interest of the programer.

GitHub markets Copilot as a ���pair programmer���. But I���m not sure this really captures what it���s doing. A good pair programmer is someone who helps you question your assumptions, identify hidden problems, and see the bigger picture. Copilot doesn���t do any of those things ��� quite the opposite, it blindly assumes that your assumptions are appropriate and focuses entirely on churning out code based on the immediate context of where your text cursor is right now.

Cognitive Bias and AI Pair ProgrammingAn AI pair programmer needs to work well with humans. And visa versa. However humans have two cognitive biases in particular that makes this difficult: automation bias and anchoring bias. Thanks to this pair of human foibles, we will all have a tendency to over-rely on Copilot���s proposals, even if we explicitly try not to do so.

Wikipedia describes automation bias as:

���the propensity for humans to favor suggestions from automated decision-making systems and to ignore contradictory information made without automation, even if it is correct���

Automation bias is already recognized as a significant problem in healthcare, where computer decision support systems are used widely. There are also many examples in the judicial and policing communities, such as the city official in California who incorrectly described an IBM Watson tool used for predictive policing: ���With machine learning, with automation, there���s a 99% success, so that robot is���will be���99% accurate in telling us what is going to happen next���, leading the city mayor to say ���Well, why aren���t we mounting .50-calibers [out there]?��� (He claimed he was he was ���being facetious.���) This kind of inflated belief about the capabilities of AI can also impact users of Copilot, especially programmers who are not confident of their own capabilities.

The Decision Lab describes anchoring bias as:

���a cognitive bias that causes us to rely too heavily on the first piece of information we are given about a topic.���

Anchoring bias has been very widely documented and is taught at many business schools as a useful tool, such as in negotiation and pricing.

When we���re typing into vscode, Copilot jumps in and suggests code completions entirely automatically and without any interaction on our part. That often means that before we���ve really had a chance to think about where we���re heading, Copilot has already plotted a path for us. Not only is this then the ���first piece of information��� we���re getting, but it���s also an example of ���suggestions from automated decision making systems��� ��� we���re getting a double-hit of cognitive biases to overcome! And it���s not just happening once, but every time we write just a few more words in our text editor.

Unfortunately, one of the things we know about cognitive biases is that just being aware of them isn���t enough to avoid being fooled by them. So this isn���t something GitHub can fix just through careful presentation of Copilot suggestions and user education.

Stack Overflow, Google, and API Usage ExamplesGenerally if a programmer doesn���t know how to do something, and isn���t using Copilot, they���ll Google it. For instance, the coder we discussed earlier who wanted to find parameters and comments in a string containing code might search for something like: ���python extract parameter list from code regex���. The second result to this search is a Stack Overflow post with an accepted answer that correctly said it can���t be done with Python regular expressions. Instead, the answer suggested using a parser such as pyparsing. I then tried searching for ���pyparsing python comments��� and found that this module solves our exact problem.

I also tried searching for ���extract comments from python file���, which gave a first result showing how to solve the problem using the Python standard library���s tokenize module. In this case, the requester introduced their problem by saying ���I���m trying to write a program to extract comments in code that user enters. I tried to use regex, but found it difficult to write.*��� Sounds familiar!

This took me a couple of minutes longer that finding a prompt for Copilot that gave an answer, but it resulted in me learning far more about the problem and the possible space of solutions. The Stack Overflow discussions helped me understand the challenges of dealing with quoted strings in Python, and also explained the limitations of Python���s regular expression engine.

In this case, I felt like the Copilot approach would be worse for both experienced and beginner programmers. Experienced programmers would need to spend time studying the various options proposed, recognize that they don���t correctly solve the problem, and then would have to search online for solutions anyway. Beginner programmers would likely feel like they���ve solved the problem, wouldn���t actually learn what they need to understand about limitations and capabilities of regular expressions, and would end up with broken code without even realizing it.

In addition to CoPilot, Microsoft, the owners of GitHub, have created a different but related product called ������. Here���s an example taken directly from their web-site:

This tool looks for examples online of people using the API or library that you���re working with, and will provide examples of real code showing how it���s used, along with links to the source of the example. This is an interesting approach that���s somewhere between Stack Overflow (but misses the valuable discussions) and Copilot (but doesn���t provide proposals customized to your particular code context). The crucial extra piece here is that it links to the source. That means that the coder can actually see the full context of how other people are using that feature. The best ways to get better at coding are to read code and to write code. Helping coders find relevant code to read looks to be an excellent approach to both solving people���s problems whilst also helping them improve their skills.

Whether Microsoft���s API Usage Examples feature turns out the be great will really depend on their ability to rank code by quality, and show the best examples of usage. According to the product manager (on Twitter) this is something they���re currently working on.

ConclusionsI still don���t know the answer to the question in the title of this post, ���Is GitHub Copilot a blessing, or a curse?��� It could be a blessing to some, and a curse to others. For those for whom it���s a curse, they may not find that out for years, because the curse would be that they���re learning less, learning slower, increasing technical debt, and introducing subtle bugs ��� are all things that you might well not notice, particularly for newer developers.

Copilot might be more useful for languages that are high on boilerplate, and have limited meta-programming functionality, such as Go. (A lot of people today use templated code generation with Go for this reason.) Another area that it may be particularly suited to is experienced programmers working in unfamiliar languages, since it can help get the basic syntax right and point to library functions and common idioms.

The thing to remember is that Copilot is an early preview of a very new technology that���s going to get better and better. There will be many competitors popping up in the coming months and years, and GitHub will no doubt release new and better versions of their own tool.

To see real improvements in program synthesis, we���ll need to go beyond just language models, to a more holistic solution that incorporates best practices around human-computer interaction, software engineering, testing, and many other disciplines. Currently, Copilot feels like a product designed and implemented by machine learning researchers, rather than a complete solution incorporating all needed domain expertise. I���m sure that will change.

July 14, 2021

fastchan, a new conda mini-distribution

What you need to knowSummary: today we���re announcing fastchan, a new conda mini-distribution with a focus on the PyTorch ecosystem. Using fastchan, installation and updates of libraries such as PyTorch and RAPIDS is faster, easier, and more reliable.

This detailed blog post by the brilliant Aman Arora of Weights and Biases provides a great overview of what fastchan is for, how it relates to other parts of the ecosystem, and how it makes life easier in practice.

If you use Anaconda, you can now install Python software like fastai, RAPIDS, timm, OpenCV, and Hugging Face Transformers with a single unified command: conda install -c fastchan. The same approach can also be used to upgrade any software you���ve installed from fastchan. Software on fastchan has been tested to install successfully on Mac, Linux, and Windows, on all recent versions of Windows. A full list of available packages is available here.

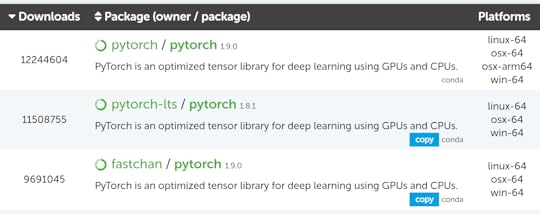

We���ve been testing fastchan for the last few months, and have switched the official installation source for fastai to use fastchan. According to Anaconda, it���s already nearly as popular as the PyTorch channel itself!

Anaconda download statistics for PyTorch Background

Anaconda download statistics for PyTorch Backgroundconda is one of my favorite pieces of software. It allows me to install a huge range of software, such as Rust, GCC, CUDA Toolkit, Python, graphviz, and thousands more, without even requiring root access. It installs executables, C libraries, Python modules, and just about anything else you can think of. It also handles installing any needed dependencies automatically.

If you���ve used a Linux package manager like apt (Ubuntu) or yum (Fedora) then that will sound pretty familiar ��� conda is, basically, just another package manager. But rather than being used to install system packages that are used as part of the operating system, it���s used for installing your own personal software. That means that you can���t accidentally break your OS if you use conda.

It also can create separate self-contained environments with totally isolated software installations. That means I can create a quick throwaway environment to test some new software, without breaking my base environment, or even keep separate environments for different projects, which might require (for instance) different versions of Python or libraries.

Some people conflate conda with pip and virtualenv. However, these are just used for managing python packages. They are can not manage executables, C libraries, and so forth.

conda installs software from channels, which are repositories of installation packages. The most widely used channels are defaults, which is used automatically by Anaconda, the most popular conda system installer, and conda-forge, a community repository to which thousands of developers have contributed conda packages.

Many organizations maintain their own channels, such as fastai, nvidia, and pytorch.

The distribution problemYou have likely heard the term distribution, as used in the Linux world. Distributions such as Ubuntu and Fedora provide scheduled releases of sets of software packages which have been tested to work correctly together. They also provide repositories where new versions of software are made available, after testing the software to ensure it works correctly as part of that distribution.

Anaconda and the defaults channel are also a distribution. On a regular basis, Anaconda release a new version of their main installer, along with a set of packages that have been tested to work correctly together. Furthermore, they continue to test new versions of packages between software releases, adding them to the defaults channel when they are ready.

Many of the packages in the defaults channel are sourced from conda-forge. conda-forge is not a distribution itself, but a repository for any user to upload ���recipes��� to build software, and to make available the results of those builds. Anaconda takes a subset of these packages, along with software they package themselves, and software packaged by other partners, does additional integration testing of the combination of these packages, and then makes them available in their distribution.

This system works very well in many situations. Those needing cutting-edge versions of software or packages not available in the defaults channel can install them directly from conda-forge, and those needing the convenience and confidence of a distribution can just install software from defaults.

However, many Python libraries are not in the defaults or conda-forge channels, or are not available in any channel at all. Furthermore, conda-forge is now so large (thanks to its great success!) that I���ve had to wait over two days for conda to figure out the dependencies when trying to install software that uses conda-forge. (There is a much faster conda replacement called mamba, but it is not yet feature complete, and can not currently install PyTorch correctly.)

Libraries which use the GPU are a particular issue, since conda-forge does not yet have facilities for building and testing GPU-enabled software. Furthermore, GPU libraries are particularly difficult to package correctly, needing to work with many combinations of versions of CUDA, cudnn, Python, and OS. There is a large ecosystem of software that depends on PyTorch, and the PyTorch team has set up a large number of integration tests that are run before each release. PyTorch also has its own custom framework for building the software, resulting in packages that automatically identifies the correct installer for each user.

These issues result in complex commands to install packages in this ecosystem, such as this command that���s currently required for installing NVIDIA���s powerful RAPIDS software:

conda create -n rapids-21.06 -c rapidsai -c nvidia -c conda-forge \ rapids-blazing=21.06 python=3.7 cudatoolkit=11.0As Aman Arora eloquently explains, running this command creates a new environment that doesn���t include any of the other software that we���ve previously installed, and provides no mechanism for keeping the software up to date. Adding other packages to the environment becomes complex, since version mismatches are very common when combining multiple channels in this way.

I wanted to create packages that depend on RAPIDS, but there���s no real way to make it easy for users to install and update such a package.

I hear a lot of developers telling data scientists that they should create new docker or conda environments for every separate project, and that that���s the correct way to avoid these problems. However, that���s like telling people to install a new operating system for each application they want to use. Imagine if you couldn���t run Chrome and vscode at the same time, but had to switch to a new environment for each! We need to be able to install all the software and libraries we need to do our work, in the same place, at the same time. We need to be able to use them together, and maintain them.

The solution: a new distributionTo avoid these problems we created a new channel and distribution called fastchan. fastchan contains all the dependencies needed to install fastai, PyTorch, RAPIDS, and much more. We use the official PyTorch build of PyTorch, the official NVIDIA build of RAPIDS and CUDA Toolkit, and so forth. The developers of these packages have spent considerable time packaging their software in a way that works best, so we think it���s best to use their work, instead of starting from scratch.

For libraries and dependencies that are only available on conda-forge, we copy those into the fastchan channel. We use a little-known, but very useful, Anaconda command called copy that copies packages across channels.

fastchan uses conda���s own dependency solver to figure out recursively all the dependencies that are needed. We only include dependencies that are not already available in the defaults channel. That���s because defaults is already used by default in Anaconda, so there is no need for us to duplicate what���s already there.

In addition, we package some software that���s currently only available as pip packages on pypi. We use a couple of methods to do this. One is using the terrific setuptools-conda software by fellow Aussie Chris Billington. The other is a new build.py program we wrote, which is used for compiled software such as sentencepiece and OpenCV.

The packages are built and copied automatically twice every day thanks to these GitHub Actions workflows.

The resultThe result of all this is that you rely just on the defaults and fastchan channels to install nearly everything you need, especially if you���re working with software in the PyTorch and Hugging Face ecosystems. Even more important than installation is updates ��� you can now update all your packages at once with a single command! To have this all handled automatically, create a file ~/.condarc containing the following: