Bruce Clay's Blog

June 12, 2014

SMX Liveblog: Executing A Flawless Content Marketing Strategy

SMX Liveblog: Executing A Flawless Content Marketing Strategy was originally published on BruceClay.com, home of expert search engine optimization tips.

Lunch is over and hopefully everyone is recharged. This session will focus on how to get the most out of your content strategy. I’ve recently been working on this topic with my own clients, so I can’t wait to hear what tips these speakers will offer. The speakers for this session include:

Chris Bennett, CEO & Founder, 97th Floor (@chrisbennett)

David Roth, VP Marketing, Realtor.com (@daverothsays)

Purna Virji, Director of Communications, Petplan Pet Insurance (@purnavirji)

Chris Bennett: ‘Get your MacGyver on!’

Chris wants to talk about getting mileage out of your content so you can stop working so hard. Stop spreading yourself too thin and stop wearing too many hats. Stop with the checklist marketing mentality.

You can stop this madness by repurposing your content in different areas for different uses. Take a piece of content (video, infographic, image etc.) and “get your MacGyver on!”

Examples of How to Repurpose Content

Take an infographic and put it on SlideShare. 97th Floor has seen better user engagement when infographics are split into several slides.

Take a SlideShare deck and turn it into a mini image-rich article. The graphic can then link back to your site, where the user can browse the full blog post or article.

Spice up your rich articles with GIFs to add a little extra zing.

The basic idea is to not reinvent the wheel. Leverage what you have by spreading the love. This means you can have videos living in several places, including your own site. Consider changing the videos slightly so that each platform gets something different from the others: long form vs. short form, GIFs, etc.

Short videos are working well on social and on mobile – people don’t mind being interrupted for a few seconds because they aren’t investing too much of their time.

Make sure your content has Open Graph, Twitter Cards and rich snippets. These posts and search results look just different enough that they easily capture users’ attention. Very few brands are taking advantage of this markup, yet it gives great results. Go ahead, don’t be afraid to try something new and take that content to the next level on social platforms. Pinterest also has Rich Pins, which mark up products, recipes, locations and more.
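The markup Chris mentions is mostly a handful of `<meta>` tags in the page head. As a minimal sketch, assuming a hypothetical page object with a title, description, URL and image, the Open Graph (`og:`) and Twitter Card (`twitter:`) tags could be generated like this. The property names are the standard ones; the example values are made up.

```javascript
// Escape characters that would break out of an HTML attribute value.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Build Open Graph and Twitter Card tags for one page.
// `page` is a hypothetical object: { title, description, url, image }.
function socialMetaTags(page) {
  const tags = [
    // Open Graph tags use the "property" attribute.
    ["property", "og:type", "article"],
    ["property", "og:title", page.title],
    ["property", "og:description", page.description],
    ["property", "og:url", page.url],
    ["property", "og:image", page.image],
    // Twitter Card tags use the "name" attribute.
    ["name", "twitter:card", "summary"],
    ["name", "twitter:title", page.title],
    ["name", "twitter:description", page.description],
    ["name", "twitter:image", page.image],
  ];
  return tags
    .map(([attr, key, value]) => `<meta ${attr}="${key}" content="${escapeAttr(value)}">`)
    .join("\n");
}

const headTags = socialMetaTags({
  title: "Common Poisons for Pets",
  description: "Foods & plants to keep away from dogs and cats",
  url: "https://www.example.com/pet-poisons",
  image: "https://www.example.com/img/pet-poisons.png",
});
console.log(headTags);
```

The same title, description and image feed both sets of tags, so the content only has to be written once per page.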

Interactive marketing apps are part of the future of content marketing; Chris means mobile-friendly apps built with HTML5 and JavaScript. Really cool apps can become wildly successful on the web, even for boring topics like taxes, as CPA Select did with tax codes. 97th Floor has seen that once users engage with these apps, you can present really strong CTAs to convert that traffic.

Purna Virji: Trends Matter

Purna’s talk is about giving your existing content wings. Since she works in the pet industry, her whole deck is animal-themed … aww.

Part 1: How do you find media-worthy content that already exists?

You want to build brand authority, awareness, high-quality links, goodwill and more coverage in the SERPs, right? Here are 3 ideas that will help.

Pitch in time for “national ____ day”. Do you have existing content that is relevant? Can you make it new and improved?

Lesser-known facts or answers to FAQs. Come up with an idea that groups existing content together in one place, like common poisons for pets. Then link to the old content you previously posted.

Seasonal, timely content. Think of seasons like summer or bridal season. What content do you have that is relevant to the current or upcoming season? Respin it.

BONUS: These sites show you what’s trending so you can piggyback on what’s popular:

WhatsTrending.com

Google Trends

Part 2: Pitching your content

When it comes to getting content published, it’s smart to use the social media tools available to you. Find relevant audience members and target ads to them a week or two before you plan to pitch content to them. The idea is to be top of mind when you go to companies to pitch your idea.

Part 3: Getting conversions from your pitch

Personalize your pitch to companies and emphasize the value you’re adding, but keep it short and sweet. Build and nurture the relationship with your contact person, and allow plenty of lead time to close the deal and iron out the kinks. Once you have closed the deal, don’t stop nurturing. Send a thank-you note to your contact. Be available to return the favor. In the future, give these contacts perks like a “first look” at future content.

I loved her deck! So many cute animal pictures, including dog and cat yoga poses.

David Roth: Content is Currency

David’s presentation is a case study of a strategy they used at Realtor.com.

“Content is the currency with which we tell our story to our audience. Content for the sake of content is inherently worthless.” Good marketing is about storytelling, so what’s your story? Who are you telling it to? Why do they care, and what kind of content will best tell that story?

Create, pitch, test and measure: this is the content marketing cycle Realtor.com uses for its celebrity real estate content. Realtor.com takes its new listings and cross-references public records with a celebrity database to find candidate listings for this strategy. Once they find a listing or two, they create content around it, whether that’s the features of a property a known celebrity is selling or the price it’s being sold for. Different properties appeal to different audiences, including fans, enthusiasts of various passions, sports fanatics and more. They’ve come up with a content strategy that constantly gives them a new audience to build brand awareness with. Genius!
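The cross-referencing step is essentially a join. The sketch below is hypothetical: the field names (`listingId`, `parcelId`, `owner`) and the idea of matching on a parcel ID and a normalized owner name are assumptions for illustration, not Realtor.com’s actual pipeline.

```javascript
// Normalize names so "Jane  Star" and "jane star" compare equal.
function normalizeName(name) {
  return name.trim().toLowerCase().replace(/\s+/g, " ");
}

// Hypothetical join: listing -> public record (by parcel) -> celebrity list (by owner name).
function findCelebrityListings(listings, publicRecords, celebrities) {
  // Index public records by parcel so each listing lookup is a Map hit.
  const ownerByParcel = new Map(publicRecords.map((r) => [r.parcelId, r.owner]));
  const celebritySet = new Set(celebrities.map(normalizeName));

  const candidates = [];
  for (const listing of listings) {
    const owner = ownerByParcel.get(listing.parcelId);
    if (owner && celebritySet.has(normalizeName(owner))) {
      candidates.push({ listingId: listing.listingId, owner, price: listing.price });
    }
  }
  return candidates;
}

const candidates = findCelebrityListings(
  [
    { listingId: "L1", parcelId: "P100", price: 2500000 },
    { listingId: "L2", parcelId: "P200", price: 640000 },
  ],
  [
    { parcelId: "P100", owner: "Jane  Star" },
    { parcelId: "P200", owner: "John Doe" },
  ],
  ["jane star"]
);
console.log(candidates);
```

Exact name matching is deliberately naive; real-world matching would need a human check before any content is written.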

Once you have your content, the next step is to take it to social. Realtor.com writes copy for the social networks, makes its images social-ready, generates pictures and videos for Instagram or collages, and creates pin maps for Pinterest. They also use relevant hashtags for the celebrities to reach a bigger audience.

Aside from social, they also use this content in other marketing channels, including:

Best of blog lists in an email

Content syndication

SEO-friendly blogs

Social outreach

Buying relevant keywords

YouTube

How do you know when your strategy has won? When other authority sites reach out to you, or when you get great referral traffic and SEO benefits from the content. With proper tagging, filtering and tracking, your analytics will help you measure that success. Baseline your traffic at the beginning and track it throughout the process. Watch for spikes and quantify lift while measuring ROI.
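“Quantify lift while measuring ROI” boils down to two ratios. A minimal sketch, assuming you have daily session counts for a baseline period and a campaign period plus an attributed revenue figure; all numbers here are made up:

```javascript
function average(values) {
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

// Lift = (campaign average - baseline average) / baseline average
function lift(baselineDaily, campaignDaily) {
  const base = average(baselineDaily);
  return (average(campaignDaily) - base) / base;
}

// ROI = (attributed revenue - cost) / cost
function roi(attributedRevenue, cost) {
  return (attributedRevenue - cost) / cost;
}

const baseline = [1000, 1100, 900, 1000]; // daily sessions before launch (average 1000)
const campaign = [1200, 1300, 1100, 1200]; // daily sessions after launch (average 1200)
console.log(`Lift: ${(lift(baseline, campaign) * 100).toFixed(1)}%`); // Lift: 20.0%
console.log(`ROI: ${(roi(15000, 10000) * 100).toFixed(1)}%`); // ROI: 50.0%
```

Comparing averages rather than single days keeps one spike from dominating the result; seasonality still has to be judged separately.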

Above all else, remember that authority and authenticity matter! Don’t compromise them as you create and roll out your content strategy.

David’s slides:

Building A Content Marketing Practice: An Experiment In New Media By Dave Roth from Search Marketing Expo – SMX

SMX Liveblog: Advanced Technical SEO Issues

SMX Liveblog: Advanced Technical SEO Issues was originally published on BruceClay.com, home of expert search engine optimization tips.

Diving into technical SEO, we have the following esteemed speakers:

Bill Hunt, President, Back Azimuth (@billhunt)

Maile Ohye, Sr. Developer Programs Engineer, Google (@maileohye)

Eric Wu, VP, Growth & Product, SpinMedia (@eywu)

It’s still a little early for some, and the peeps here seem to be moving a little slowly this morning, but I’ve downed my cup of coffee and I’m ready to dive into technical issues with these speakers. I love tackling technical problems on client sites, and usually the bigger the site, the bigger the problems. Hopefully these experts will have a few good nuggets of information for us.

Maile Ohye: JavaScript Execution and Benefits of HTTPS

“We recommend making sure Googlebot can access any embedded resource that meaningfully contributes to your site’s visible content or its layout,” Ohye said.

On May 23, Google announced that it is executing more JavaScript. They’d been fine-tuning it and were finally able to release it. Shortly after, they launched an updated Fetch as Google that shows up to 200 KB of a page, lets you view the text content and helps you find and fix blocked resources, with a limit of 500 fetches per week. You can select different crawlers, including mobile. Use it to make sure Google is able to fetch all the important pieces of your site.

Modals and interstitials are everywhere. Determine whether it’s worth interrupting the user’s workflow; often it isn’t necessary. Check analytics to see if the modal is even needed. If you don’t want your modal or interstitial to be indexed, check the Fetch as Google rendering to see whether it is, and you can always disallow it in robots.txt. Then check once more to make sure the disallow worked.
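To double-check a disallow before relying on Fetch as Google, a simplified matcher can show which rule wins for a given path. This is a sketch under stated assumptions: one `User-agent: *` group, plain path prefixes only (no `*` or `$` wildcards), longest match wins and Allow wins ties. The `/modal/` path is hypothetical.

```javascript
// Pull Allow/Disallow rules out of a robots.txt body (single group assumed).
function parseRules(robotsTxt) {
  const rules = [];
  for (const rawLine of robotsTxt.split("\n")) {
    const line = rawLine.split("#")[0].trim(); // drop comments and stray \r
    const match = /^(allow|disallow)\s*:\s*(.*)$/i.exec(line);
    if (match && match[2]) {
      rules.push({ allow: match[1].toLowerCase() === "allow", path: match[2] });
    }
  }
  return rules;
}

// Longest matching prefix wins; on a tie, Allow wins (the less restrictive rule).
function isAllowed(robotsTxt, urlPath) {
  let best = null;
  for (const rule of parseRules(robotsTxt)) {
    if (!urlPath.startsWith(rule.path)) continue;
    if (
      best === null ||
      rule.path.length > best.path.length ||
      (rule.path.length === best.path.length && rule.allow)
    ) {
      best = rule;
    }
  }
  return best === null ? true : best.allow;
}

const robots = ["User-agent: *", "Disallow: /modal/"].join("\n");
console.log(isAllowed(robots, "/modal/newsletter-signup.html")); // false
console.log(isAllowed(robots, "/articles/pet-poisons.html")); // true
```

This only approximates real crawler behavior; the rendering in Fetch as Google remains the final check.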

Optimize the content that is indexed. Make sure your CSS/JS resources are crawlable. Use Fetch as Google to make sure they render, and remember to prioritize solid server performance. Consider keeping (rather than deleting) old JS files on the server, because Google may still need them when crawling. Lastly, degrade gracefully, because not all browsers and search engines execute JS. Make sure you test.

HTTPS Benefits

Who’s prioritizing security? Security is becoming a bigger deal, and several big sites now offer secure browsing. Why the switch?

Using TLS gives users authentication (they know they are where they expect to be on a site), data integrity and encryption. It adds a layer of security for users. Google can spider HTTPS; yes, HTTPS is search friendly. Boy, that wasn’t the case a few years ago. Webmaster Tools is equipped for HTTPS, and you can verify an HTTPS site within GWT. Make sure only one version is available for crawling to avoid issues.

Crawling and indexing HTTPS sites: 301 redirect from HTTP to HTTPS to avoid duplicate content. Serve all resources over HTTPS and make sure your rel=canonical tags are correct. When deciding to move to HTTPS, test your site in browsers to ensure all your resources display correctly.
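The HTTP-to-HTTPS 301 is usually configured in the web server or CDN. As a minimal Node.js sketch, assuming the app itself answers on port 80 and the hostname is hypothetical, every request is permanently redirected to the same host, path and query string over HTTPS:

```javascript
const http = require("http");

// Build the HTTPS URL for the same host, path and query string.
function httpsLocation(hostHeader, requestUrl) {
  const host = (hostHeader || "").replace(/:80$/, ""); // drop an explicit :80 port
  return `https://${host}${requestUrl}`;
}

// Request handler: every HTTP request gets a permanent (301) redirect.
function redirectToHttps(req, res) {
  res.writeHead(301, { Location: httpsLocation(req.headers.host, req.url) });
  res.end();
}

// Only start listening when explicitly asked (START_REDIRECT_SERVER is a hypothetical env var).
if (process.env.START_REDIRECT_SERVER) {
  http.createServer(redirectToHttps).listen(80);
}

console.log(httpsLocation("www.example.com:80", "/pets?page=2"));
// https://www.example.com/pets?page=2
```

Preserving the path and query string matters: redirecting everything to the HTTPS homepage would throw away the page-level signals the 301 is meant to carry over.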

The web is growing towards authentication, integrity and encryption so be ready. HTTPS site migration can still be search friendly if you do it right and are consistent in serving up resources.

Whew, she went through a lot of data very quickly.

Eric Wu: Ajax Is Like Violence – If It Doesn’t Solve Your Problems, You Aren’t Using It Enough

Most sites use libraries like jQuery, AngularJS or even Backbone.js. Depending on how you use them, these can help speed up the site. The idea is to improve site speed to improve the user experience and crawlability of a site. In one test, after improving site speed, Eric’s team saw an 80% increase in organic search sessions.

Google has been trying to crawl JS since 2004. Over the years they’ve gotten better and better, and today they are finally comfortable saying they can crawl it. GWT’s Fetch & Render is a way to see that they’re capable of doing this now.

Eric suggests implementing infinite scroll, not only because it works well on mobile but because it’s a better user experience. Use rel=next / prev when implementing infinite scroll.
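One common way to keep infinite scroll crawlable is to back each chunk the script loads with an ordinary paginated URL and link those pages with rel="prev"/"next". A sketch, assuming a hypothetical `?page=N` URL pattern where page 1 is the bare URL:

```javascript
// Page 1 lives at the bare URL so it does not duplicate "?page=1".
function pageUrl(baseUrl, page) {
  return page === 1 ? baseUrl : `${baseUrl}?page=${page}`;
}

// <link> tags for the <head> of page `page` out of `totalPages`.
function paginationLinks(baseUrl, page, totalPages) {
  const links = [];
  if (page > 1) links.push(`<link rel="prev" href="${pageUrl(baseUrl, page - 1)}">`);
  if (page < totalPages) links.push(`<link rel="next" href="${pageUrl(baseUrl, page + 1)}">`);
  return links;
}

console.log(paginationLinks("https://www.example.com/news", 2, 5));
```

The infinite-scroll script can then fetch the same `?page=N` URLs that crawlers follow, so both paths lead to identical content.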

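Continuous content uses pushState, which requires only a simple bit of code:

```javascript
// titleOfPage and newURL are placeholders for the next article's title and URL.
// Add a history entry and change the address bar without reloading the page.
history.pushState({}, titleOfPage, newURL);

// Or rewrite the current entry instead of adding a new one.
history.replaceState({}, titleOfPage, newURL);
```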
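A slightly fuller sketch of continuous content: as each article scrolls into view, update the address bar so every article keeps its own shareable URL. The history object is passed in so the logic runs outside a browser (in a page you would pass `window.history`). The article objects are hypothetical, and using `replaceState` for the first article and `pushState` afterwards is one design choice among several.

```javascript
// Returns a function to call (e.g. from a scroll handler) with the article now in view.
// `historyApi` is window.history in a browser; a stand-in object is used below.
function createUrlUpdater(historyApi, firstArticle) {
  let currentUrl = firstArticle.url;
  // Attach state to the entry for the first article without adding a new entry.
  historyApi.replaceState({ url: firstArticle.url }, firstArticle.title, firstArticle.url);

  return function onArticleInView(article) {
    if (article.url === currentUrl) return; // still on the same article
    // Browsers largely ignore the title argument; a real page would also set document.title.
    historyApi.pushState({ url: article.url }, article.title, article.url);
    currentUrl = article.url;
  };
}

// Stand-in for window.history that records each call.
const calls = [];
const fakeHistory = {
  pushState: (state, title, url) => calls.push(["push", url]),
  replaceState: (state, title, url) => calls.push(["replace", url]),
};

const onArticleInView = createUrlUpdater(fakeHistory, { title: "Story 1", url: "/story-1" });
onArticleInView({ title: "Story 2", url: "/story-2" });
onArticleInView({ title: "Story 2", url: "/story-2" }); // same URL, so no new entry
console.log(calls);
```

Sites that don’t want every article to add a Back-button step can call `replaceState` throughout instead.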

Ajax galleries, such as the slideshows on publisher sites, give you big user engagement, more social shares and numerous other benefits. Eric mentions Vox as a site that uses pushState and rel=next / prev effectively for this.

Deferred image loading is something Eric says there isn’t a good solution for … yet. Workarounds include 1×1 blank images, skeleton screens or low-resolution images as the “lazy” loading solution.
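The 1×1 blank workaround can be sketched as follows: each image starts with a tiny transparent GIF as its `src` and keeps its real URL aside, and once it comes within a threshold of the viewport the real URL is swapped in. Plain objects stand in for `<img>` elements here (in a page you would read `img.dataset.src` and element positions); the 200px threshold and image paths are assumptions.

```javascript
// A 1x1 transparent GIF used as the placeholder src.
const BLANK_GIF =
  "data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7";

// Swap in the real URL for images near the viewport; returns how many were loaded.
function loadVisibleImages(images, viewportTop, viewportHeight, threshold = 200) {
  const loadUntil = viewportTop + viewportHeight + threshold;
  let loaded = 0;
  for (const img of images) {
    if (img.src === BLANK_GIF && img.top <= loadUntil) {
      img.src = img.dataSrc;
      loaded += 1;
    }
  }
  return loaded;
}

const images = [
  { top: 100, src: BLANK_GIF, dataSrc: "/img/hero.jpg" },
  { top: 3000, src: BLANK_GIF, dataSrc: "/img/footer.jpg" },
];
console.log(loadVisibleImages(images, 0, 800)); // 1
```

Because crawlers may not scroll, images that matter for search usually also get a `noscript` fallback with the real `src`.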

When using responsive images you can use:

• srcset

• Polyfill

• UA detection

In order to load images for different devices, Eric suggests using noscript.
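Of the three options, `srcset` lets the browser choose the file itself. A minimal sketch that builds the attribute from a list of available widths, assuming a hypothetical `-{width}w.jpg` naming scheme; the smallest file doubles as the plain `src` fallback for browsers without `srcset` support (or a polyfill can fill that gap):

```javascript
// "/img/x-320w.jpg 320w, /img/x-640w.jpg 640w, ..." sorted by width.
function buildSrcset(basePath, widths) {
  return widths
    .slice()
    .sort((a, b) => a - b)
    .map((w) => `${basePath}-${w}w.jpg ${w}w`)
    .join(", ");
}

function responsiveImg(basePath, widths, alt) {
  const smallest = Math.min(...widths);
  return (
    `<img src="${basePath}-${smallest}w.jpg" ` +
    `srcset="${buildSrcset(basePath, widths)}" sizes="100vw" alt="${alt}">`
  );
}

console.log(buildSrcset("/img/dog-yoga", [640, 320, 1280]));
// /img/dog-yoga-320w.jpg 320w, /img/dog-yoga-640w.jpg 640w, /img/dog-yoga-1280w.jpg 1280w
```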

Bill Hunt: Improving Indexability & Relevance

As the final speaker for this session, Hunt promises to not be as “geeky” as the previous speakers.

Keeping it basic, he breaks SEO down into four areas: indexability, relevance, authority and clickability. Bill will speak about 2 of these.

To improve indexability, remember that if spiders can’t get to the content, they can’t store it! Improve crawl efficiency on large sites so the spiders can get to the content. Reduce errors by checking for them and fixing them. The more complicated development gets, the more you’ll have to tell search engines where to go and how to fetch the data you want indexed.

When submitting an XML sitemap to the search engines, check for errors and fix any that are reported. Bing has stated that if more than 1% of the pages submitted have errors, they will stop crawling the URLs in the sitemap. Clean up your errors, folks; make things easier on the search engines. It’s not their problem to figure out, it’s yours. You don’t want a disconnect between the number of pages on a site and the number of pages in its sitemaps. If Google and Bing bother to tell you where you have problems on your site, take notice and actually fix them.
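A quick way to stay under that threshold is to crawl your own sitemap URLs and compute the error rate before submitting. A sketch, assuming you already have a map of sitemap URL to HTTP status (collecting the statuses is not shown), and treating anything other than a plain 200, including redirects, as an error:

```javascript
// Share of sitemap URLs that did not return 200, flagged against a threshold (1% by default).
function sitemapErrorReport(statuses, threshold = 0.01) {
  const urls = Object.keys(statuses);
  const errors = urls.filter((url) => statuses[url] !== 200);
  const rate = urls.length === 0 ? 0 : errors.length / urls.length;
  return { total: urls.length, errors, rate, overThreshold: rate > threshold };
}

const statuses = {};
for (let i = 1; i <= 200; i++) statuses[`https://www.example.com/p/${i}`] = 200;
statuses["https://www.example.com/p/7"] = 404;
statuses["https://www.example.com/p/8"] = 301;
statuses["https://www.example.com/p/9"] = 500;

const report = sitemapErrorReport(statuses);
console.log(report.rate, report.overThreshold); // 0.015 true
```

Running a check like this weekly fits Bill’s suggestion of making sitemap error review part of the regular workflow.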

Some common challenges Bill has seen on sites include:

URL case inconsistency: the site has both uppercase and lowercase URLs

Pages with no offers: nearly 2M soft 404 errors were due to pages with no offers/content

Canonical tags resulting in 2-200 duplicate pages.

Bill suggests:

Mandating lowercase URLs

If there are five or fewer results on a page, add noindex & nofollow

Implement a custom 404 page that returns a 404 status code

Dynamically built XML sitemaps based on taxonomy logic

Add sitemap error review to weekly workflow
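Three of these suggestions can be expressed as one request-handling decision. The sketch below is hypothetical: `offerCount` stands in for your own catalog lookup, it lowercases only the path, and it sends noindex, nofollow through the `X-Robots-Tag` HTTP header, which is equivalent to a robots meta tag in the page.

```javascript
// Decide status and headers for a listing page.
function decideResponse(path, offerCount) {
  // 1. Mandate lowercase URLs: 301 any uppercase path to its lowercase form.
  const lower = path.toLowerCase();
  if (path !== lower) {
    return { status: 301, headers: { Location: lower } };
  }
  // 2. No offers: serve the custom 404 template with a real 404 status (not a soft 404).
  if (offerCount === 0) {
    return { status: 404, headers: {} };
  }
  // 3. Five or fewer results: keep the page out of the index.
  if (offerCount <= 5) {
    return { status: 200, headers: { "X-Robots-Tag": "noindex, nofollow" } };
  }
  return { status: 200, headers: {} };
}

console.log(decideResponse("/Dog-Beds", 12)); // 301 to /dog-beds
console.log(decideResponse("/dog-beds", 3)); // 200 with noindex, nofollow
```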

Leverage hreflang on global sites. Hreflang annotations prevent duplicate content across country pages and help the search engines understand which version is for which country and language. When implementing hreflang, you MUST reference the original URL itself somewhere in the code. Many sites don’t do this, and a lot of the tools don’t either. Another mistake is referencing the incorrect country and language. Bill actually built an hreflang builder (hrefbuilder.com) that will help you build these URLs.
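A sketch of both points, with hypothetical URLs and locale codes: every country version carries the same full list of `rel="alternate"` tags, which means each page also lists itself, and a simplified check catches malformed codes plus the common "uk" mistake (the ISO 3166-1 region code for the United Kingdom is "gb"). The format check only covers plain `language` or `language-region` codes.

```javascript
// The same tag set goes on every version, so each page references itself too.
function hreflangTags(alternates) {
  return alternates.map(
    ({ hreflang, href }) => `<link rel="alternate" hreflang="${hreflang}" href="${href}">`
  );
}

// Simplified validation: two-letter language, optional two-letter region.
function hreflangProblems(code) {
  const problems = [];
  if (!/^[a-z]{2}(-[a-z]{2})?$/i.test(code)) problems.push("malformed");
  if (/-uk$/i.test(code)) problems.push('region "uk" should be "gb"');
  return problems;
}

const alternates = [
  { hreflang: "en-us", href: "https://www.example.com/us/pet-insurance" },
  { hreflang: "en-gb", href: "https://www.example.com/uk/pet-insurance" },
  { hreflang: "en-ca", href: "https://www.example.com/ca/pet-insurance" },
];
console.log(hreflangTags(alternates).join("\n"));
console.log(hreflangProblems("en-uk")); // [ 'region "uk" should be "gb"' ]
```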

And with that Bill’s done. This session delivered what it promised – lots of technical goodies for us geeks.

SMX Liveblog: You & A with Matt Cutts

SMX Liveblog: You & A with Matt Cutts was originally published on BruceClay.com, home of expert search engine optimization tips.

It’s the session we’ve all been waiting for: the You & A with Google’s Head of Webspam Matt Cutts. Next to plush hummingbirds, Cutts laughs with Search Engine Land Editor Danny Sullivan. I’ll keep up with the convo as fast as my fingers can type!

Announcements and Algorithm Updates

Danny starts by asking Matt if there are any announcements, and with that, the session is off and running.

Matt: Yes, there are a lot. One is that the second part of the latest Payday Loan update will be coming soon. Probably later this week, maybe as soon as tomorrow.

Danny: How is that different from the update last week?

Matt: It targets different sites. This one looks at spammy queries instead of spammy sites. This update could affect sites dealing in counterfeits, for example.

Danny: What happened to the MetaFilter site? Was it Panda?

Matt: It wasn’t Panda. The site was affected by an algorithm update that wasn’t Panda or Penguin. Even though the site is slightly out of date, it’s a good-quality site. Google is working to figure out how to improve the algorithm based on this incident. Google does not think that MetaFilter is spammy or has spammy links, and Google never sent a notification saying that MetaFilter was spammy.

*Matt announces that Google is trying to improve reconsideration requests. They are looking into ways to make it clearer to site owners where the problems are. Now, when Google rejects a request for re-inclusion, the Google team member has an opportunity to fill out more details on an individual basis.*

Danny: With two different rollouts in close proximity (Panda and Payday), people may become confused about which update hit them. Danny thinks it would be great if, for example, Google showed in GWT which algorithm the site was hit by.

Matt: Google has over 500 different algorithms, so it would be difficult to do for every update. Matt agrees to think about giving that type of info in GWT. They do try to update people on large updates via tweet, but he agrees it’s a good question and will consider it.

*Announcement by Matt: GWT – it’s a good time to check it out. Google rolled out Fetch & Render, which lets you see what Googlebot sees on your site. They really are trying to make ‘fetch’ happen. Over the next few months there will be improvements to robots.txt testing, better and easier site moves, better reports and more. Keep your eyes on GWT – lots to come.*

Danny: Has there been a Penguin update since last year?

Matt: No, they’ve been focusing on Panda.

Link Removal and Walks of Shame

Danny: Danny recently got an email from a person requesting a link removal. It turned out to be a link the requester had spammed into a comment themselves. The “link walk of shame” is a good punishment for the folks who spammed, but it’s becoming an issue for the sites they spammed. Why can’t they just disavow?

Matt: Fair question. The ‘walk of shame’ does create more work for site owners, but it’s tricky, because Google wants to make sure the folks trying to do the right thing aren’t put at a disadvantage by those who play dirty and then ask for forgiveness.

*Danny suggests a two-strikes rule: the first time you’re forgiven … the second time, not so much, and it’s time to do the walk of shame. Matt doesn’t seem keen on it, but the banter draws giggles from the audience. Soon thereafter, Matt announces that Google is working on getting IE8 referrers back. Danny asks if Google can give us more data in GWT, such as a year’s worth vs. the 90 days currently available. Why hold our data hostage? Matt basically sidesteps this question and gives no immediate answer as to when more data will be available.*

Danny: Is link building dead?

Matt: No, it’s not dead. There is a perception that links are hard to come by and that many are nofollowed. That’s not true; only a small percentage of links are actually nofollowed. There is still mileage to be had in links. If you do enough interesting and compelling stuff, the links will come to you.

It’s easier to be real than to fake being real

Danny: Is it possible to assess a page without using links?

Matt: It would be tricky but it is possible.

Author Rank, Google+ and Matt’s Favorite Tool

Danny: Is Google using author rank for anything aside from in-depth articles?

Matt: Nice try. (Matt then goes on to say that the long-term trend of author rank is that the data will be used more).

Danny: Are you looking at CTR, bounce rate and other engagement metrics for ranking?

Matt: In general, they are open to looking at signals but he will not spill the beans as to whether or not they look at the “engagement” of the site for rankings.

Danny: When do sites get a boost for using secure SSL?

Matt: Sites don’t get a boost for using HTTPS. Google used to prefer HTTP over HTTPS, but Matt believes they’ve backed that out of the algorithm. Matt does prefer a secure web, though.

Danny: What’s the timeline on manual actions and their expiration?

Matt: If Google takes a manual action, you get a notification. If it’s a demotion, it has an expiration date. Minor infractions might have a shorter timeline; major items have a longer one. If you wait long enough, they will expire, even if the problem isn’t fixed.

Danny: Is the Knowledge Graph carousel going insane?

Matt: I’ve never gotten trapped in the carousel but will look into it

Danny: is Google+ dead? (Lots of laughs)

Matt: No, I posted something yesterday. (Here, Matt notes, however, that Google is not using G+ data in general rankings.)

Danny: Can you reavow a disavowed link?

Matt: Yes, upload a new disavow file that does not include the site.
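Matt’s answer amounts to editing the file and re-uploading it. A hedged sketch, assuming the disavow file format of one URL or `domain:` entry per line with `#` for comments: remove every entry for the site, then upload the result in place of the old file. Treating subdomains as part of the site is a choice made in this sketch, and the domains are examples.

```javascript
// Return a new disavow file body without any entries for `domain`.
function removeFromDisavow(fileText, domain) {
  const target = domain.toLowerCase();
  // True if `host` is the target domain or one of its subdomains.
  const onTarget = (host) => host === target || host.endsWith(`.${target}`);

  return fileText
    .split("\n")
    .filter((line) => {
      const entry = line.trim().toLowerCase();
      if (entry === "" || entry.startsWith("#")) return true; // keep blanks and comments
      if (entry.startsWith("domain:")) return !onTarget(entry.slice("domain:".length));
      try {
        return !onTarget(new URL(entry).hostname); // individual URL entries
      } catch {
        return true; // keep lines that are not parseable URLs
      }
    })
    .join("\n");
}

const oldFile = [
  "# spam cleanup",
  "domain:spammy.example",
  "http://blog.example.org/comment-42",
  "domain:keepme.example",
].join("\n");

const cleaned = removeFromDisavow(oldFile, "example.org");
console.log(cleaned);
```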

Danny: What is better for mobile?

Matt: He tends to like responsive design, but you can do all sorts of things. Google allows you to use any of the main formats and will accept them. Google’s mobile traffic will exceed desktop in the very near future. He asks for a show of hands from those who know whether their mobile site is marked up for autocomplete. Minimal show of hands. Make your site faster and easier to use (including autocomplete, Google Wallet, etc.) so that people can complete transactions quickly.
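Marking a form up for autocomplete means adding the standard `autocomplete` tokens to each field so the browser knows what to fill in. A sketch with a hypothetical checkout form; the tokens (`name`, `email`, `tel`, `address-line1`, `postal-code`, `cc-number`) come from the HTML autofill specification.

```javascript
// Hypothetical checkout fields, each with a standard autofill token.
const CHECKOUT_FIELDS = [
  { name: "fullName", label: "Name", type: "text", autocomplete: "name" },
  { name: "email", label: "Email", type: "email", autocomplete: "email" },
  { name: "phone", label: "Phone", type: "tel", autocomplete: "tel" },
  { name: "address", label: "Address", type: "text", autocomplete: "address-line1" },
  { name: "zip", label: "ZIP", type: "text", autocomplete: "postal-code" },
  { name: "card", label: "Card number", type: "text", autocomplete: "cc-number" },
];

// Render one labelled input with its autocomplete attribute.
function renderField({ name, label, type, autocomplete }) {
  return (
    `<label for="${name}">${label}</label>` +
    `<input id="${name}" name="${name}" type="${type}" autocomplete="${autocomplete}">`
  );
}

console.log(CHECKOUT_FIELDS.map(renderField).join("\n"));
```

Using `type="email"` and `type="tel"` as well also brings up the matching keyboard on phones, which supports the same goal of faster transactions.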

Danny: Is speed a ranking factor?

Matt: If your site is very very slow, it will hurt your rankings. Normal sites don’t have much to worry about. It’s in your best interest to make your site fast.

Danny: What’s going on with negative SEO?

Matt: We’re aware of it, because people are worried about it. The upcoming Payday update will help close negative SEO loopholes.

Danny: What are your favorite Google tools?

Matt: Fetch as Google.

*At this point Matt demonstrates wearable-style voice search on his phone, asking where the Space Needle is, how tall it is, who built it, restaurants near there and Italian restaurants near there, and finally navigating to the first restaurant. This shows that you can do multiple queries related to one topic and Google will understand that the searches are connected. Hummingbird helps connect all these searches into relevant information.*

White Hat Link Building and ‘Sweat + Creativity’

Danny: Are links in JavaScript handled like regular links?

Matt: Google can find them and follow them. You can nofollow those links, too.

Danny: Would you trust white hat link building companies?

Matt: It’s possible to do white hat link building. Usually requires you to be excellent. Sweat + creativity will do better than any tool.

Danny: Are there manual benefits? The opposite of manual penalties.

Matt: No. There are no exception lists for Panda.

Danny: Any last parting words?

Matt: Get ready for mobile. He stresses autocomplete in your forms again. (Hmm, is that a little clue? That’s not the first time Matt mentioned autocomplete in this session.)

That’s it for the You & A with Matt!

June 11, 2014

SMX Liveblog: What Advanced SEOs Should Be Doing about Mobile SEO

SMX Liveblog: What Advanced SEOs Should Be Doing about Mobile SEO was originally published on BruceClay.com, home of expert search engine optimization tips.

In this session, we’re going to hear from a panel of experts that include:

Cindy Krum, CEO, MobileMoxie (@suzzicks)

Michael Martin, Senior SEO Manager, Covario (@mobile__martin)

Maile Ohye, Senior Developer Programs Engineer, Google Inc. (@maileohye)

Jim Yu, CEO, Brightedge (@jimyu)

Maile Ohye: Search Results on Smart Phones, Mobile Acquisitions Channels, Mobile Opt-ins and More

Websites appear in SERPs in the mobile environment, but so do apps. Installed apps will show up as autocomplete suggestions in the search box. Google can also take a mobile search result and open it within an app, such as an IMDB result on a phone that has that app installed. This is done to enhance the user experience. However, for this to work, the app needs deep links, and those links need to be mapped in the sitemap.
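The sitemap mapping can be illustrated with Android app indexing. This sketch assumes the `android-app://{package_id}/{scheme}/{host_path}` URI format and the `xhtml:link rel="alternate"` sitemap annotation Google documented for Android app indexing; the package name and URLs are hypothetical, and the session did not say exactly how the IMDB example is wired up.

```javascript
// android-app://{package_id}/{scheme}/{host_path}
function androidAppUri(packageId, webUrl) {
  const u = new URL(webUrl);
  const scheme = u.protocol.replace(/:$/, ""); // "http:" -> "http"
  return `android-app://${packageId}/${scheme}/${u.host}${u.pathname}${u.search}`;
}

// Escape characters that are special in XML.
function escapeXml(s) {
  return s.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// One <url> entry; the enclosing <urlset> must declare
// xmlns:xhtml="http://www.w3.org/1999/xhtml".
function sitemapUrlEntry(webUrl, packageId) {
  return [
    "<url>",
    `  <loc>${escapeXml(webUrl)}</loc>`,
    `  <xhtml:link rel="alternate" href="${escapeXml(androidAppUri(packageId, webUrl))}" />`,
    "</url>",
  ].join("\n");
}

console.log(sitemapUrlEntry("http://example.com/gizmos", "com.example.android"));
```

The web URL stays the canonical `<loc>`; the app URI is only an alternate, which matches the “list canonical URLs in sitemap” advice later in the talk.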

Google recently launched faulty redirect badging for results where it has found sites with faulty redirects. This is a badge you DO NOT want! It tells users that the site they are about to click on may have issues.

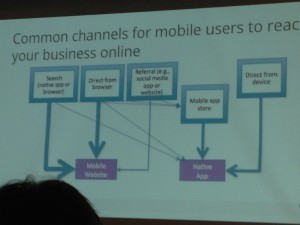

Mobile Acquisition Channels

Asking for app downloads isn’t the best thing to do right off the bat – this is better for relationship-ready customers!

Improving the app user experience is much like web SEO:

Good content or service

Enticing search appearance

Optimizing the Mobile Search Pipeline

There are two main bots – both are Googlebot, but one is iPhone specific.

When you develop a mobile website, you want to signal to search engines the relationship you have between the desktop URLs and mobile URLs.

RWD

Dynamic Serving

Google crawls mobile just like they do desktop. They will crawl a desktop site looking for mobile URLs and then re-crawl using the iPhone crawler.

Mobile URL content is clustered with desktop URL content

Indexing signals consolidated

Desktop version is primary source of content

Desktop title is used
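For sites that keep mobile pages on separate URLs (for example an m. subdomain), the desktop/mobile relationship is commonly signalled with a pair of bi-directional annotations: `rel="alternate"` with a media query on the desktop page, and `rel="canonical"` back to the desktop page on the mobile page. A sketch; the 640px breakpoint and URLs are illustrative assumptions.

```javascript
// Tags for one desktop/mobile URL pair.
function mobileAnnotations(desktopUrl, mobileUrl) {
  return {
    // Goes in the <head> of the desktop page.
    desktopHead: `<link rel="alternate" media="only screen and (max-width: 640px)" href="${mobileUrl}">`,
    // Goes in the <head> of the mobile page.
    mobileHead: `<link rel="canonical" href="${desktopUrl}">`,
  };
}

const pair = mobileAnnotations("https://www.example.com/pets", "https://m.example.com/pets");
console.log(pair.desktopHead);
console.log(pair.mobileHead);
```

Pointing the canonical at the desktop URL is what lets indexing signals consolidate on the desktop page, which is the clustering behavior Maile describes.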

Some signals from desktop page are incorporated into mobile rankings. The user experience is so important. Make sure your mobile version is optimized for task completion. As you do mobile SEO, remember that desktop SEO is still important!

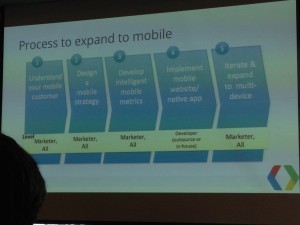

Process For Building a Mobile Strategy

Understand your mobile customer

Design a mobile strategy

Develop intelligent mobile metrics

Implement mobile website/native app

Iterate and expand to multi-device

Google does have a checklist for mobile website improvement. It also has a UX testing tool in PageSpeed Insights. It just launched 25 principles of mobile site design, and Google Developers has information to help with changing your mobile site configuration. There is an area for app indexing as well.

When Creating an App

Use deep links

List canonical URLs in sitemap

First-click free experience from search results

Takeaways

Implement technical SEO best practices

Signal desktop/mobile relations

Fix redirect issues

Mobile first doesn’t mean mobile only

Create deep links within native app

Focus on user experience

Fulfill all 5 stages of the process

Cindy Krum: Mobile Page Speed

Krum breaks down mobile ranking signals. Google explicitly says to use bi-directional annotations, to implement redirection to an m. subdomain, to avoid broken content and to have fast page speed. Moreover, Google indicates:

No side-to-side scrolling

Optimized above-the-fold rendering

Necessary JS inline

Necessary CSS inline

No Flash

Efficient CSS selectors

Deferred loading of JS

She then elaborates on these important factors …

Understanding Vary: User-Agent

This is an explicit signal that tells Google to send the mobile crawler. If you don’t use this, they may still figure it out, but it will take them longer to find, crawl and index. Make it easy on Google.
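With dynamic serving, the same URL returns different HTML depending on the device, and the `Vary: User-Agent` response header is the signal Krum refers to. A minimal Node.js sketch; the user-agent regex is a deliberately crude assumption (real sites typically use a maintained device-detection library), and `START_DEMO_SERVER` is a hypothetical environment variable.

```javascript
const http = require("http");

// Crude mobile detection, for illustration only.
const MOBILE_UA = /Mobile|Android|iPhone/i;

// Same URL, different HTML, always with Vary: User-Agent.
function renderFor(userAgent) {
  const isMobile = MOBILE_UA.test(userAgent || "");
  return {
    headers: { "Content-Type": "text/html; charset=utf-8", Vary: "User-Agent" },
    body: isMobile ? "<p>Mobile page</p>" : "<p>Desktop page</p>",
  };
}

function handler(req, res) {
  const { headers, body } = renderFor(req.headers["user-agent"]);
  res.writeHead(200, headers);
  res.end(body);
}

if (process.env.START_DEMO_SERVER) {
  http.createServer(handler).listen(8080);
}
```

The header goes on both the desktop and the mobile response, since caches and crawlers need to know the URL varies for every client.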

Broken Content

Google doesn’t want broken content. This means you shouldn’t have things like Flash, pop-ups, hover effects, sideways scrolling, tiny font sizes, tiny buttons, device-specific content or slow pages. These things will increase your “mobile bounce rate”.

Page Speed

Why is page speed important? On mobile, a slow page can hurt the crawler, meaning it won’t get through all the content and will crawl less on each visit. It also hurts the user experience. If it’s bad on mobile over Wi-Fi, it’s worse on 3G. People don’t appreciate slow content on mobile. They have higher expectations of mobile content and don’t cut slow sites any slack.

Responsive

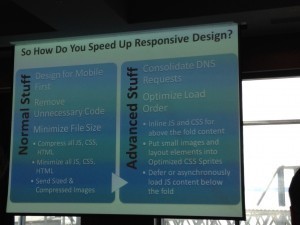

In theory it sounds great for one design to work across all platforms, but the reality isn’t always ideal. Responsive design requires additional code, which can make a page bulky and slow it down on one or all devices. The catch-22 is that Google prefers responsive design, but it also prefers fast pages, and you can’t always have both.

How do you speed up responsive design? Design for mobile first and remove unnecessary code and minimize your file sizes of all elements on the page. Then, consolidate DNS requests and optimize load order.

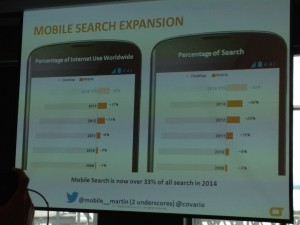

Michael Martin: The Mobile Search Result Difference

Mobile search expands every year.

In 2014, mobile accounts for over 33% of all searches. With 1 out of every 3 searches done on a mobile device, mobile search is more important than ever.

The mobile search result difference – what is the difference in the search results for:

Generic terms – 58% variance in results from desktop to mobile

Localized terms – 73% difference

The evolution of mobile has gone from .mobi to m. to responsive design to dynamic serving.

To address speed and usability, start with Google’s PageSpeed Insights. It gives you a good starting point.

Next, identify keywords for mobile; you can use Google’s Keyword Planner to research mobile users.

From the keywords, work out the intent using available data such as the stock of products available nearby, top products and services, reviews, videos and interactions with products, click-to-call and locations.

Year-over-year results with good responsive design:

Mobile SEO traffic: up 81%

Mobile rankings: up 1 position

Conversions: up 23%

Average year-over-year mobile results without SEO:

Mobile traffic 75%

Mobile rankings increased 1

Conversions 17%

Dynamic/adaptive serving results:

Mobile traffic 167%

Mobile rankings +3

Conversions 82%

Dynamic serving beats both responsive design and the average for doing nothing at all. Dynamic serving is the way to go for the best results.

Advanced Actionable Insights:

RWD for scale and dynamic serving for hub pages

Dynamic/adaptive serving should be used if mobile intent is significantly different from desktop

Mobile SEO can be applied to dynamic serving

Jim Yu: Common Mobile Search Errors

Mobile is outpacing desktop by 10x, and smartphone share of search traffic is roughly 23%. The ranking factors are quite different for mobile vs. desktop search. In fact, 62% of keywords rank differently on the two platforms.

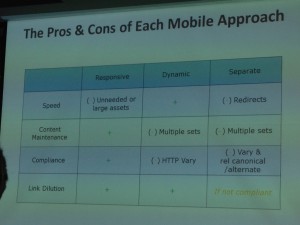

There are different approaches to implementing a mobile site, which were already discussed: responsive, dynamic and a separate site. Brightedge found minimal performance differences across these environments, so they dug deeper and found that most of the errors appeared on separate mobile websites. Seventy-two percent of separate-site implementations had errors, while only 30 percent of dynamic sites did.

What happens when you have an incorrect implementation? Brightedge found a two-position difference in rank, meaning a 68% loss in smartphone traffic opportunity.

What really matters in your mobile SEO approach?

1. Mis-implementation – smartphone is only becoming more important

2. Ongoing maintenance – you’ve configured everything correctly, but with each website release, the configuration needs to be checked again.

There are pros and cons to each type of implementation you need to consider when developing your mobile environment.

Summary

• Smartphone share of voice is 23% and growing 50% year over year

• Mobile is outpacing desktop 10X

• 62% of keywords rank differently on mobile and desktop

• Ranking variables are becoming more complex

• There is a slight difference in rank via mobile

• Incorrect implementations can dramatically impact results

SMX Liveblog: Enhancing Search Results with Structured Data & Markup

SMX Liveblog: Enhancing Search Results with Structured Data & Markup was originally published on BruceClay.com, home of expert search engine optimization tips.

Get practical advice on using structured data and real world examples of schema markup in this informative session featuring:

Jay Myers, Emerging Digital Platforms Product Manager, BestBuy.com (@jaymyers)

Jeff Preston, Senior Manager of SEO, Disney Interactive (@jeffreypreston)

Marshall Simmonds, CEO, Define Media Group, Inc. (@mdsimmonds)

We’re coming back from lunch, and plenty of folks seem eager to learn about structured data. This session promises to teach us how companies are implementing markup and benefiting from it. This is one of my favorite things to recommend to clients, so let’s get started …

Marshall Simmonds: Authorship and Rich Snippets

The evolution of indexation first began with crawling, when Google would come and grab your content. Then, it evolved to HTML sitemaps and eventually XML sitemaps to show them what kind of data you had on your website (image, video, news, pages). Now, indexation has evolved to include structured data.

Why do we do this?

Schema.org says you can expect more data to be used in more ways. There are digital assets you, as a site owner or caretaker, can benefit from as they are served up in the SERPs. Did you know that roughly 30% of SERPs serve structured data results? Interestingly enough, structured data results differ across browsers: Chrome will show different results than Firefox for the exact same search, run only moments apart on the same computer. Factor in Google’s regular algorithm updates, and you have to realize that results are constantly changing based on many different factors. Any time you can capture some of that traffic on a consistent basis is a good thing, right? Structured data can help you do this.

Authorship is one of the better-known types of structured data in use today. With Authorship, an author’s thumbnail and name appear in the SERPs. Marshall says this was basically Google’s way of rewarding people for setting up their G+ accounts. He asks for a show of hands of how many people in the audience have G+ accounts, and plenty of hands go up. Then he asks how many actually use G+, and barely any hands remain in the air. Oops! Google keeps telling us it’s important, and yet in a room full of “advanced search marketers,” only a small percentage of people actually use it.

In late 2013, Google started showing Authorship results less often in the SERPs; however, Marshall has noticed an uptick in their appearance more recently. Several site-level factors help a site appear in the SERPs with an Authorship listing:

The authority of the site

Having high quality content on the site

The domain longevity

Additional factors vary based on queries

There are also factors at the author level to consider when trying to appear for Authorship listings. These include:

Reputation (who you are, where you publish etc.)

Quality of content

Authority of site you’re contributing to

Additional factors vary based on queries

Remember, there will be times when you roll out enhancements and WON’T see an immediate reaction. Some rollouts need to be compared year over year to get good data on the reaction. Reviews seem to be picked up rather quickly, and you’ll see immediate reactions depending on the industry. For recipes, structured data can help results for certain searches, depending on how specific the search is: somewhat generic recipe searches will show structured data results, but very specific searches will not show rich snippets. Article markup can encourage in-depth categorization when combined with other factors. Video is a great area to be in, and rich snippets help greatly.

Structured data is only one check point in your overall strategy – SEO is still important.

Takeaways

Authorship has a slow-to-medium indexation cycle and a sporadic appearance in the SERPs, with minimal traffic impact.

TV reviews have a fast indexation cycle with a slower appearance in the SERPs (based on seasonality), resulting in a medium traffic impact.

Product reviews have a fast indexation cycle and a fast appearance in the SERPs, with a good traffic impact.

Recipes have a fast indexation cycle and a fast appearance in the SERPs, with minimal impact on traffic.

Articles are a little different: their indexation cycle is hard to track, and they don’t always require schema.

Videos currently have a fast indexation cycle and appear in the SERPs very quickly, with a significant impact on traffic.

Tools & Resources

Structured Data Markup Validating & Testing Tools

Semantic Search Marketing Google+ Community

NerdyData.com – The Search Engine for Source Code

It’s still early days for rich snippets. If you get in before your competitors, you’re usually in a better position than they are by the time they get around to it.

Use structured data as another checkpoint in your overall strategy. It helps you compete when you can’t break through using regular SEO techniques. It helps future-proof your site against updates that specifically deal with this area, and BOTH Google and Bing want this type of data!

Lastly, remember that this is a marathon, and you have to look at the results year over year.

Jay Myers: How Best Buy Implemented and Benefited from Structured Data

Jay is going to talk about Best Buy’s journey with structured data, then and now. Best Buy actually started implementing structured data as early as 2008, way ahead of the curve and before it was really recommended to the search community. In the beginning, one way they implemented structured data was by adding markup to each individual store page. The store pages had valuable information, so they added the code using RDFa, and this resulted in a double-digit increase in traffic year over year. And remember, this was years before it was even recommended.

After seeing those types of results with the store pages, Best Buy went on to using markup on an experimental site. After marking up the site and allowing it to be crawled and indexed, they were surprised to see it outranking the main Best Buy site in Google (in 2009).

Shortly after, Best Buy added structured data to their “shop URLs,” which would serve as rich data experiences for both humans and machines. Soon they found that these pages were showing in SERPs where they hadn’t before.

These initial efforts were all implemented prior to the real Schema.org push, pre-2010. When Best Buy began implementing, they focused on:

Publishing data that has valuable meaning beyond keywords

“clean” and “cool” URLs

Syntax: RDFa – resource description framework in attributes

Ontologies (a loose set of rules to help machines understand data)

GoodRelations – the web vocabulary for ecommerce

FOAF – friend of a friend

GEO – basic methods for representing spatially-located things

Now, Best Buy properties all use microdata and Schema.org to better publish their data. They switched from RDFa to Schema.org and saw a nice uptick in their traffic. They have found that additional data elements showing in the SERPs, such as addresses and phone numbers for store pages, are bringing in a better CTR. They are also seeing reviews for the store pages alongside the store info in the SERPs, which further drives customer engagement. Product pages show prices, reviews and availability in the SERPs, which enhances the user experience: Best Buy engages the user right from the SERP rather than making the user come to the site and hunt for the data.

Looking ahead, Best Buy is looking to use Gmail Actions in the Inbox, which uses Schema.org markup to trigger actions. This enables actions within an email using only some basic-level coding in the email itself. Best Buy is also piloting an effort to improve the visibility of product information on the web, and it will focus on enhancing its results in the Knowledge Graph by marking up data feeds for things like upcoming events, recent publications, etc.
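To make the product-page example above concrete, here is a minimal sketch of schema.org Product data carrying a price, availability and review rating. It assumes JSON-LD, which is another way to serialize the same schema.org vocabulary (Best Buy’s own pages use microdata, per the session), and every product value is hypothetical. It is an illustration, not Best Buy’s implementation.

```python
import json


def product_jsonld(name, price, currency, in_stock, rating_value, review_count):
    """Build a schema.org Product with a nested Offer and AggregateRating."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            # schema.org expresses availability as an ItemAvailability URL.
            "availability": (
                "https://schema.org/InStock"
                if in_stock
                else "https://schema.org/OutOfStock"
            ),
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }


def as_script_tag(data):
    """Wrap a JSON-LD object in the <script> element embedded in the page."""
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


if __name__ == "__main__":
    # Hypothetical product values, for illustration only.
    print(as_script_tag(product_jsonld("Example 55-inch TV", 499.99, "USD", True, 4.5, 128)))
```

Whichever syntax you pick, run the output through a structured data testing tool before shipping it.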

Jeff Preston: Real World Examples of Structured Data & SEO

Jeff works for Disney and has been implementing rich snippets for a while, with varied but good results. Reviewing what others have said, rich snippets:

Help search engines better understand content & markup

Provide an opportunity to improve search engine listings, tweets, Facebook and other social posts

Will NOT fix other SEO problems; fix other SEO problems first before trying Schema

Open Graph markup helps with Facebook (also G+ and Twitter) listings when people share the content. The code appears in the <head> of the page. It lets you define certain data from the page, which also standardizes the way information appears in a Facebook share.

Schema.org is a vocabulary for describing your data, and the search engines support and encourage this type of markup. Disney uses it to mark up things like movie pages, calling out info like the title, actors and more. Jeff gives a good bit of advice that people should always remember: validate your code to make sure the proper things are tagged.

You can use Schema.org on the navigation. In a Disney test, this resulted in sitelinks appearing in the Google SERP result. Disney also used Schema.org on an event microsite to see if it would help. They added event markup to the site with details like the name, start date, name of location and address. About two days after they pushed it live, they noticed a rich snippet in the SERP showing the date, event name and location of the particular event.
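As a rough illustration of the Open Graph tags described above, this sketch renders the basic og:title, og:type, og:url and og:image properties (plus an optional og:description) as <meta> tags for the page <head>. The function name and sample values are hypothetical; this is not Disney’s code.

```python
from html import escape


def open_graph_tags(title, og_type, url, image, description):
    """Return Open Graph <meta> tags, one per line, for the page <head>."""
    props = {
        "og:title": title,
        "og:type": og_type,        # e.g. "website", "article", "video.movie"
        "og:url": url,             # canonical URL of the shared page
        "og:image": image,         # image shown in the share preview
        "og:description": description,
    }
    # Escape values so quotes or ampersands cannot break the attribute.
    return "\n".join(
        f'<meta property="{prop}" content="{escape(value, quote=True)}">'
        for prop, value in props.items()
    )


if __name__ == "__main__":
    # Hypothetical movie page, for illustration only.
    print(open_graph_tags("Example Movie", "video.movie",
                          "https://example.com/movies/example",
                          "https://example.com/img/example.jpg",
                          "Trailer, cast and showtimes."))
```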
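The event markup described above (name, start date, location name and address) maps onto schema.org’s Event, Place and PostalAddress types. The sketch below assumes JSON-LD output; the session doesn’t say which syntax Disney used, and all event details are hypothetical.

```python
import json
from datetime import datetime


def event_jsonld(name, start, venue, street, city, region, postal_code):
    """Build a schema.org Event whose location is a Place with a PostalAddress."""
    return {
        "@context": "https://schema.org",
        "@type": "Event",
        "name": name,
        "startDate": start.isoformat(),  # ISO 8601 date/time string
        "location": {
            "@type": "Place",
            "name": venue,
            "address": {
                "@type": "PostalAddress",
                "streetAddress": street,
                "addressLocality": city,
                "addressRegion": region,
                "postalCode": postal_code,
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical event details, for illustration only.
    event = event_jsonld(
        "Example Fan Event", datetime(2014, 7, 12, 10, 0),
        "Example Convention Center", "123 Main St", "Anaheim", "CA", "92802",
    )
    print(json.dumps(event, indent=2))
```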

Additional applications that Disney has used schema on:

Videos

Executive and staff bios

Official logos

Local search: name, address, phone number

Products

Ratings

They are seeing good results whenever they implement schema, especially on content assets that previously had difficulty getting indexed.

Another thing Disney has experimented with is Twitter Cards. Twitter cards:

Give you control of how your content is displayed in tweets

Link your official website to your Twitter account

Require you to apply to Twitter to get your cards approved

Are fairly easy to implement

Twitter also has a good code validator to check the code.
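Here is a minimal sketch of the kind of Twitter Card tags discussed above, assuming a basic "summary" card. The @handle and content values are hypothetical. Twitter’s card requirements, including the 2014 approval step mentioned in the session, have changed over time, so check the current Twitter documentation and validator before relying on this.

```python
from html import escape


def twitter_card_tags(site_handle, title, description, image_url, card="summary"):
    """Return Twitter Card <meta> tags, one per line, for the page <head>."""
    fields = [
        ("twitter:card", card),          # card type, e.g. "summary"
        ("twitter:site", site_handle),   # the website's @username
        ("twitter:title", title),
        ("twitter:description", description),
        ("twitter:image", image_url),
    ]
    return "\n".join(
        f'<meta name="{name}" content="{escape(value, quote=True)}">'
        for name, value in fields
    )


if __name__ == "__main__":
    # Hypothetical account and page, for illustration only.
    print(twitter_card_tags("@example", "Example Movie",
                            "Trailer, cast and showtimes.",
                            "https://example.com/img/example.jpg"))
```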

Disney has also worked on, and seen results in, the Knowledge Graph. Jeff has noticed that Google is pulling some data from Freebase.com, Schema.org markup and Wikipedia.org, as well as other databases Google may be able to pull entity data from, like IMDb or Rotten Tomatoes. Do what you can to optimize and influence this information when it relates to your site, so that a great Knowledge Graph result appears for relevant searches.

Structured Data Resources

Open Graph: developers.facebook.com/docs/opengraph

Schema.org: schema.org/docs/schemas.html

Twitter Cards: dev.twitter.com/cards

Overall Takeaway

Structured data is still fairly new even though it’s been around for a couple of years, and implementing it on your site definitely helps improve your SERP results, CTR and user experience.

SMX Liveblog: 25 Social Media Ideas for the Advanced Search Marketer

SMX Liveblog: 25 Social Media Ideas for the Advanced Search Marketer was originally published on BruceClay.com, home of expert search engine optimization tips.

Get the skinny on social media tools, tactics and more in this session geared towards savvy search marketers, featuring:

Michael King, Executive Director of Owned Media, Acronym (@ipullrank)

Matt Siltala, President, Avalaunch Media (@Matt_Siltala)

Mark Traphagen, Senior Director of Online Marketing, Stone Temple Consulting (@marktraphagen)

Lisa Williams, Director, Digital Marketing Strategy, Search Discovery (@seopollyanna)

Lisa Williams on Adding Social Media to Overall Strategy

Williams tells us to start with the actual product and make a connection to the product with great storytelling. Look at search technology as a way to tell a story about your product. Communication strategy should be your first step to making that connection and storytelling. Come up with a communication strategy that’s specific, measurable, actionable, relevant and timebound.

When collaborating between the search and social teams, unify silos with a methodology. Embrace a process that drives business objectives and encourage role clarity and ownership amongst the team. It’s important to have a goal to approach together as a team. Some actionable tasks for your team:

Create timelines that are specific to the audience. For example, stay high level for execs. Search and social marketers need to have a timeline laid out to work together that is defined for the audience.

Define your assets, and know what you’re optimizing. You don’t know what users will choose to interact with, so you want to optimize all types of content including landing pages, images, videos, etc.

Define channel priorities: Prioritize your paid, owned and earned opportunities. Make a grid of the three categories with all your platforms (Facebook, Twitter, Pinterest) and list out the opportunities for those platforms. Again, this should be shared with both the search and social teams.

Create collaborative calendars: get strategic for social and search to thrive together. Make an editorial calendar that lists out dates, topics, content assets, keywords, target audience, calls-to-action, channels and additional notes in a spreadsheet.
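One way to keep the shared calendar above in a spreadsheet-friendly form is a CSV file with one column per field Williams listed. This is a minimal sketch: the column names and the sample entry are our own, not a template from the session.

```python
import csv
import io

# Column order follows the fields listed in the session; the names are hypothetical.
CALENDAR_COLUMNS = [
    "date", "topic", "content_asset", "keywords", "target_audience",
    "call_to_action", "channels", "notes",
]


def calendar_csv(entries):
    """Serialize calendar entries (dicts keyed by CALENDAR_COLUMNS) to CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=CALENDAR_COLUMNS)
    writer.writeheader()
    writer.writerows(entries)  # missing fields are written as empty cells
    return buffer.getvalue()


if __name__ == "__main__":
    # Hypothetical entry shared by the search and social teams.
    print(calendar_csv([{
        "date": "2014-07-01", "topic": "Summer grilling guide",
        "content_asset": "Infographic", "keywords": "grilling tips; bbq recipes",
        "target_audience": "Home cooks", "call_to_action": "Download the guide",
        "channels": "Pinterest; Facebook; blog", "notes": "Search team owns keywords",
    }]))
```

Plain CSV opens in any spreadsheet tool, so both teams can edit the same file.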

Williams said it’s also important to visualize with content pillars. Think about a story or piece of content and look at other channels that you want to use to promote it. Think about how the platforms are connected and the opportunities on each platform. Furthermore:

Define influencer outreach: build relationships and authority.

Nurture relationships: you can’t automate relationships (automation isn’t strategy). Work hard to collaborate together once you’ve defined influencers you’d like to collaborate with.

Engage media and movements: define amplification opportunities. Who do you want to partner with for specific campaigns? Define these opportunities.

Define partnerships: research partners for curation and collaboration. Curation doesn’t mean repurposing! Take a group of content, put it together in a meaningful, but NEW way.

Alignment on KPIs: what does success look like? Define the KPIs so you can measure your success.

Michael King: Finding Content That Works for Your Marketing

King advises us to integrate SEO and social – 95 percent of marketers that consider themselves superior strategists have integrated search and social according to a 2013 study. Moreover, King notes that every good SEO/social campaign starts with research! Research informs you so you can make great content. Social media acts as your focus group; when building personas, use data from social media.

King stresses the importance of defining business goals, conducting keyword research, listening to social activity and taking social inventory. He recommends some tools:

Keyword research tools

Keyword planner

Ubersuggest

Google Suggest (autocomplete) terms

Social Listening Tools

Organic: Topsy

Organic: Social Mention

Organic: Icerocket

Paid: Radian6

Paid: Sprinklr

King recommends identifying influencers via:

Followerwonk

Twtrland

Quora

When it comes to content, he recommends using Quora or Reddit to determine what users want.

Bottom line? Do award-winning work. Be king of kings.

Matt Siltala: How to Bring to Life Visual Content to Compel Social Strategies

Siltala is going to talk about bringing two worlds together – offline and online — through social media and visual content.

Types of visual content:

Infographics

Pitch decks/slides

Ebooks/white papers

Interactive graphics

Motion graphics

Then take visual content marketing a step further and create content that businesses can use both online and offline! For example, use infographics as brochures.

Your job as a company is to help other companies avoid falling behind the curve, and creative content helps. Find opportunities where sales or knowledge need to increase, then create content for both online and offline use. Ask the sales team questions, listen to social buzz, define the problem and then strategize.

Instagram is HOT. One of the most powerful tools you can use right now.

Great platform to integrate a social strategy. Waffle Crush is an example of a company doing it RIGHT. They only post locations, specials, etc. on their Instagram account.

Use it to show off your products in context. Online & Offline worlds come together here.

Infographics can make great offline handouts so use this to your advantage.

Cross over from social to the store – Nordstrom does a good job of this.

Encourage cross social sharing.

Social media can bring you back to the top.

Mark Traphagen: Google+ and Its Impact on Search

So what makes author authority? An authoritative author is both the originator and promoter. In social, you have got to be the original creator of the content. If you don’t like what’s being said, change the conversation. If you’re a creator, you want to be the one who’s changing the conversation.

Rel=author and beyond

In December 2013, Google began reducing the amount of rel=author authorship shown in the SERPs.

Site authority and history were the No. 1 factor in author rank.

If you’re writing more on high authority sites, you’re more likely to get picked up.

Someone who produces good content with depth is more likely to show up.

Google has confirmed that author trust is a factor — they look at the overall trust that people seem to have in an author.

What does rel=author do for you?

Reinforces your brand

This becomes powerful as more and more searches show your results

Shows you in personalized search (+1s)

Google wants to know who you are.

How to Build Author Authority

This is going to become more important in the future. It’s important now for your brand to connect through social to your audience.

Be the “anyone” that people want to listen to

Be productive

Not necessarily publishing every day, but often enough

Be authoritative

Know your stuff … and know your stuff better than anyone else

You’re good, get better

Be thorough

Be different/don’t be afraid to stand out

Say things no one else is saying

Find your own voice

Find a way of presenting content that is unique

Be ubiquitous

Build social following

Share your best content, even if it’s from the past

If you want some respect, go out and get it for yourself

SMX Liveblog: The Periodic Table of SEO Ranking Factors

SMX Liveblog: The Periodic Table of SEO Ranking Factors was originally published on BruceClay.com, home of expert search engine optimization tips.

This room is packed with a good share of returnees and newbies. We’re told this session is going to cover everything you need to do to rank well in the SERP … mm hmm.

Speaking in this morning’s “The Periodic Table Of SEO Ranking Factors: 2014 Edition” are:

Matthew Brown, SVP of Special Projects, Moz (@MatthewJBrown)

Marianne Sweeny, SR Search Specialist, Portent (@msweeny)

Marcus Tober, CTO, Searchmetrics Inc. (@marcustober)

This session is fast and furious, and so is this liveblog. Here we go!

The 2014 Ranking Factors According to Marcus Tober

Tober promises to explain the Google algo in detail in the next 90 minutes (his ranking metrics presentation is set to the Iron Man theme). In their recent study, they compared 2013 and 2014 ranking factors to see what is more common across top-ranking sites now versus then.

A few of the 2014 Ranking Factors:

Google+ and +1s don’t always deliver great ranking results; however, they help significantly with personalized results

On-page

Site Speed showed a strong increase in importance

The number of internal links to pages: pages with targeted, well-thought-out internal links will sometimes outrank pages with an inflated number of internal links. You should still optimize the flow of link juice through the site. Sometimes less is more.

Brand factor is also important

What’s even more important?

Content.

In the past the feeling was a little “yeah, content is important, I should have some”. Whereas now, it’s more like “Yes, you need to have content and your content needs to be optimized.” Marcus says they have noticed that the number of characters and the overall length of content has increased across top ranking sites compared to a year ago. Not only is it important to have more content, but you want to deliver a better user experience because the user’s expectation is higher than it was a year ago.

When writing your content, make sure to use Relevant Keywords and Proof Keywords. Relevant Keywords are the words and phrases that are relevant to your topic and commonly used by competitors. Proof Keywords are additional common words that aren’t necessarily ‘relevant’ but were also found across the top-ranking sites. Use synonymous keywords throughout the content; don’t just reuse the same keyword phrase over and over again.

The readability of the content (Flesch Reading Ease) is a factor, so make sure to check it when creating content. Remember that the Panda algorithm focuses on the quality of the content on your site, so you want high-quality content. Try to cover your topic holistically and become the best resource on it.
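The session didn’t show how Searchmetrics derives Relevant and Proof Keywords, so treat this as a hypothetical sketch of one simple approach. It counts how many top-ranking pages use each term (document frequency), keeps terms used by at least half of them, then splits those into "relevant" terms (ones in a topic list you supply) and "proof" terms (everything else). The stopword list, threshold and sample pages are all assumptions.

```python
import re
from collections import Counter

# A tiny illustrative stopword list; a real analysis would use a fuller one.
STOPWORDS = {"a", "an", "and", "for", "in", "is", "of", "on", "or", "the", "to", "with", "your"}


def common_terms(pages, min_share=0.5):
    """Terms that appear in at least `min_share` of the pages (document frequency)."""
    doc_freq = Counter()
    for text in pages:
        terms = set(re.findall(r"[a-z0-9']+", text.lower())) - STOPWORDS
        doc_freq.update(terms)  # each term counts once per page
    threshold = min_share * len(pages)
    return {term for term, n in doc_freq.items() if n >= threshold}


def split_keywords(pages, topic_terms, min_share=0.5):
    """Split common competitor terms into 'relevant' (on-topic) and 'proof' (everything else)."""
    common = common_terms(pages, min_share)
    return common & topic_terms, common - topic_terms


if __name__ == "__main__":
    # Hypothetical snippets from three top-ranking pages.
    pages = [
        "Best running shoes for marathon training",
        "Running shoes reviewed: cushioning and fit",
        "How to choose running shoes: fit, cushioning, price",
    ]
    relevant, proof = split_keywords(pages, {"running", "shoes", "marathon"})
    print(sorted(relevant), sorted(proof))
```

Here "cushioning" and "fit" come out as proof terms: they aren’t in the topic list, but most competing pages use them.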
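For reference, the Flesch Reading Ease score is 206.835 − 1.015 × (words ÷ sentences) − 84.6 × (syllables ÷ words), and higher scores mean easier reading. The sketch below computes it from raw counts. It also includes a rough text scorer, but the syllable counter there is a simple vowel-group heuristic we assumed for illustration, so dedicated readability tools will give somewhat different numbers.

```python
import re


def flesch_reading_ease(words, sentences, syllables):
    """Flesch Reading Ease from raw counts; higher scores mean easier text."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def estimate_syllables(word):
    """Rough heuristic: count vowel groups, dropping a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and count > 1:
        count -= 1
    return max(count, 1)


def reading_ease_of(text):
    """Score a passage using regex sentence/word splitting and the heuristic above."""
    sentences = max(1, len(re.findall(r"[.!?]+", text)))
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        raise ValueError("text contains no words")
    syllables = sum(estimate_syllables(w) for w in words)
    return flesch_reading_ease(len(words), sentences, syllables)


if __name__ == "__main__":
    # 100 words, 5 sentences, 150 syllables -> 206.835 - 20.3 - 126.9 = 59.635
    print(round(flesch_reading_ease(100, 5, 150), 3))
    print(round(reading_ease_of("The cat sat on the mat."), 3))
```

Shorter sentences and shorter words both push the score up, which is why very short, simple sentences can score above 100.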

Social.

There was a high correlation between rankings and social signals (+1s and Facebook shares) among the top-ranking sites Searchmetrics looked at. The takeaway is that good content is shared more often, so create good content that people will want to share.

Link Profile.

Backlinks and their quality still matter — get good links from good sites.

Additional factors:

Number of links to the home page

New/fresh backlinks (backlinks obtained within the last 30 days)

URL anchors

Domain brand in anchor

Brands might not always have more “fresh” links, but they have brand awareness, so they typically rank high. Sites with a high number of “fresh” links were found ranking immediately after the brands.
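To track the "fresh backlinks" factor, you only need a first-seen date for each link. This minimal sketch assumes a hypothetical Backlink record (in practice the dates would come from whatever backlink tool you use) and keeps links first seen within the last 30 days, counting the boundary day as fresh.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Backlink:
    source_url: str
    first_seen: date  # date the link was first discovered (hypothetical field)


def fresh_backlinks(backlinks, today, window_days=30):
    """Return links first seen within the last `window_days` days, inclusive."""
    cutoff = today - timedelta(days=window_days)
    return [link for link in backlinks if cutoff <= link.first_seen <= today]


if __name__ == "__main__":
    # Hypothetical link data, for illustration only.
    links = [
        Backlink("https://a.example/post", date(2014, 6, 1)),
        Backlink("https://b.example/page", date(2014, 4, 1)),
        Backlink("https://c.example/x", date(2014, 5, 13)),
    ]
    for link in fresh_backlinks(links, today=date(2014, 6, 12)):
        print(link.source_url)
```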

When it comes to the mobile environment, the following factors were found to be important:

Site speed

Text length in characters; you want less content than what you might display on your desktop version of the site

Number of backlinks

Facebook likes

When analyzing desktop vs. mobile, only 64% of the sites ranking on the two platforms were the same in the SERPs. This suggests that mobile is ranked somewhat separately from desktop, though with a fair amount of overlap.

Additional factors that Searchmetrics found to still be important in the ranking algo, based on what was common among top-ranking sites, were:

CTR

Time on site

Bounce Rate

According to their study, time on site and click-through rate seem to be more important than bounce rate. This makes sense, since bounce rate can vary depending on the site, the industry and the information the user is seeking.

Marcus says that SEO shouldn’t be defined as Search Engine Optimization anymore, but it should be Search Experience Optimization instead.

With that, Marcus closes, and Danny makes a basic observation: the search algorithm is supposed to mirror what humans actually like. Humans typically don’t like junk, spam or bad sites, so why should those sites rank higher than the quality sites people actually want to see? Good food for thought.

Marianne Sweeny on the User Experience

Marianne starts with a funny statement about how UX folks are annoying. I think to myself, “Really? More than SEOs to an IT team?” (ha ha ha).

She states that searchers are growing increasingly lazy as search has become easier. Search has improved, but searchers’ behavior hasn’t improved at the same rate.

Historically, UX and SEO have developed on separate tracks when they should have been joined all along. When Panda launched in 2011, it was the first real clue to the industry that UX mattered in the SERPs, even though it had been murmured about for ages.

UX factors that are important to the ranking algorithm include:

CTR

Engagement

Content

Links

CTR is affected by the SERP and how users decide which result to click. Users scan results, and because of this “lazy” behavior, Google decided to shorten the titles it displays and increase the font size to make scanning easier.

Engagement: Users are not inclined to scroll on a home page unless induced to do so. They like to see the usable content above the fold. Maximize the space of your webpage above the fold.

Prototypicality, put simply, means: don’t put things in odd places or call them odd things. Don’t get fancy and put your main global navigation at the bottom of a long page, or hide the search button in an inconspicuous spot if your visitors rely heavily on site search. Don’t reinvent the wheel here, folks. If it ain’t broke, don’t fix it.

Visual Complexity is something else to think about. This is the ratio and relation of images to content. If a site has too much visual complexity it is perceived as not useful. Keep the ratio within reason to avoid any issues.

Navigation should be put where it is supposed to be and where users are used to finding it. Don’t have too many different types of navigation and make the overall navigation simple and easy to use so that users can find the pages they are looking for.

A popular design nowadays is having the large Hero graphic across the middle of the page, but according to Marianne, these don’t always work for UX. You’re taking away an opportunity to make the site useful.

Keep your “click distance” to task completion to only a few clicks. Don’t make people go to extra pages just to complete something that can be done in three. Also, think about the overall depth at which you bury your content. This used to be a big deal a few years ago, and Marianne still thinks it’s important enough to mention: don’t bury your great content 7 or 8 directories deep in the URL structure. This could lead search engines to believe the content is less important.
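You can measure click distance with a breadth-first search over your internal link graph. BFS reaches each page first along a shortest path, so the depth it records is the fewest clicks from the home page. This is a minimal sketch that assumes you already have a crawl as a dict mapping each page to the pages it links to. The site map, the three-click threshold and the helper names are hypothetical. The directory_depth helper covers Marianne’s separate point about URL depth.

```python
from collections import deque
from urllib.parse import urlparse


def click_depths(links, start="/"):
    """Breadth-first search over internal links: fewest clicks from `start` to each page."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS is via a shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def pages_too_deep(links, max_clicks=3, start="/"):
    """Pages reachable from `start` that need more than `max_clicks` clicks."""
    return sorted(page for page, d in click_depths(links, start).items() if d > max_clicks)


def directory_depth(url):
    """Number of path segments in a URL, e.g. /a/b/page.html -> 3."""
    return len([segment for segment in urlparse(url).path.split("/") if segment])


if __name__ == "__main__":
    # Hypothetical internal link graph, for illustration only.
    site = {
        "/": ["/products", "/about"],
        "/products": ["/products/tv"],
        "/products/tv": ["/products/tv/55in"],
        "/products/tv/55in": ["/products/tv/55in/specs"],
    }
    print(pages_too_deep(site))
```

Pages that are never linked from anywhere won’t appear in the results at all, which is worth flagging separately.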

Content Inventory

Take a look at what content you have and what is presented to users. Do a content audit and assign each piece of content to a person who decides whether to keep it, kill it or revise it. Remember that dense, subject-specific content is preferred over “thin” content by both users and the engines (Panda anyone?). Hummingbird also needs to be considered when creating or revising content, to make sure synonymous phrases, semantic search and the like are supported.

Some Measurable UX to think about for your site:

Conversions

Page value

Unique visitors

Bounce Rate

Consider setting “scrolling” as an action to factor into the Bounce Rate of a page, because after all, scrolling is a form of engagement.

Social Actions (shares, likes, pins etc.)

Number of pages visited

Avg time spent on a page

And the exit rate
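The suggestion to count scrolling as engagement changes the bounce rate calculation. Normally a bounce is a single-pageview session, but a single-pageview session that includes a qualifying engagement event stops counting as one. This is a minimal sketch over a hypothetical session model. The event names such as "scroll_50" are assumptions, and your analytics tool’s exact rules may differ.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    pageviews: int
    events: list = field(default_factory=list)  # hypothetical event names, e.g. "scroll_50"


def bounce_rate(sessions, engagement_events=frozenset()):
    """Share of sessions with a single pageview and no event counted as engagement."""
    if not sessions:
        return 0.0
    bounces = sum(
        1
        for s in sessions
        if s.pageviews == 1 and not any(e in engagement_events for e in s.events)
    )
    return bounces / len(sessions)


if __name__ == "__main__":
    # Hypothetical sessions, for illustration only.
    sessions = [
        Session(1),
        Session(1, ["scroll_50"]),
        Session(3),
        Session(1, ["video_play"]),
    ]
    print(bounce_rate(sessions))                 # scrolling ignored
    print(bounce_rate(sessions, {"scroll_50"}))  # scrolling counts as engagement
```

Counting scrolls lowers the reported bounce rate without changing visitor behavior, so compare pages only under the same definition.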

Matthew Brown on SEO Success Factors

Brown opens by quoting Edward Tufte: “correlation is not causation but it sure is a hint.” He then advises us to look at correlation studies as HINTS, and not as actual ranking factors.

Things you can bank on:

Links: they’ll still be around for a few years but Google is trying to figure out how an expert user would say this particular page matched their information needs.

Anchor text: if you have better anchor text, you will rank better. This applies to both internal and external links.

“Iffy” factors

Keyword strings in Title tags – Google is doing a lot of rewriting of Title tags and descriptions so this doesn’t work as well nowadays

Structured data – marking up for the wrong thing can negatively impact CTR. Match the markup to what the intent should be in order to benefit fully

Ranking factors – not as useful because it’s so mixed; knowing what is showing up in the actual SERPs is more important

New things with potential:

Entity-based optimization and semantic search – know what other entities appear in the SERPs, along with semantic usage

Alchemy API – a tool that helps you learn about entities and gives you clues about other things to target in your optimization efforts

Knowledge graph optimization – the Knowledge graph takes up so much space and includes a ton of useful data so use that to your benefit

Some additional information can be found here: www.Blindfiveyearold.com/knowledge-gr...

Mobile – it continues to rise, with 25% of total global web usage now coming from mobile vs. 14% last year

Unknown unknowns: “There are things we know; there are things we don’t know and there are things we don’t know we don’t know.”

Think about this for a second. There are things we know about and can reasonably guess may or may not become more important in the ranking algorithm. Then there are things we don’t know that may one day become important. The important thing is to pay attention and be smart about the competition: know what is working for a set of competitors and then move toward that target.

Hummingbird requires us to retest all previous SEO assumptions. You now need to optimize content around entities and relationships. Furthermore, every site has its own set of success factors. Know your competitors and what those factors are.

Final takeaway: SEO is going to be harder, and SEOs will be in demand for a long time.

June 5, 2014

The State of SEO in Europe (Right to Be Forgotten & More) by BCI’s EU Director

The State of SEO in Europe (Right to Be Forgotten & More) by BCI’s EU Director was originally published on BruceClay.com, home of expert search engine optimization tips.

What’s the state of Internet marketing in Europe, and what are the biggest needs among EU marketers? In this interview, Ale Agostini, head of Bruce Clay, Inc. Europe, weighs in from a first-hand perspective.

Ale Agostini

According to Agostini, the biggest need among European marketers is solid SEO training, because the companies that understand Internet marketing best succeed the most, especially in Europe’s complex multilingual market. Here in the U.S., we may take for granted the wealth of training opportunities, conferences, and expert information sources available. But marketing in another country and language can be quite different: search engines roll out updates on a delayed schedule, translated information may be slow in coming, and there are still comparatively few search industry conferences.

To help fill the need, Bruce Clay, Inc. Europe will put on a special two-day SEOToolSet® Training in Milan, Italy, on July 2–3. This event is specially geared for search marketers from Italy, Germany, the UK, Holland, France and across Europe, and features Bruce Clay himself as the instructor.

In preparation for this event, we’re turning our focus to Europe and asking Ale Agostini to weigh in on:

Top needs of European marketers

State of SEO in Europe (including Right To Be Forgotten and Panda 4.0)

What to expect from Bruce Clay’s upcoming training in Italy

Interview with Ale Agostini

You head up the European office of Bruce Clay, Inc. Do you serve clients throughout Europe, and what types of services do you offer?

We serve clients in eight different countries in Europe, mainly Italy, UK, Holland, Germany and Switzerland; our clients are usually medium to large companies that take full advantage of online marketing to boost their business (on and offline).

Our office is in Switzerland because it’s the only country in Europe with three official languages (German, French, Italian) matching the three biggest EU markets. Our client base is very wide and goes from innovative ecommerce and online services to “brick and mortar” business websites.

What are the greatest needs you see among new clients?

Companies need online branding, traffic and conversions, but often they do not have the people who can do the job; that’s why we provide different types of Internet marketing services, from SEO, PPC, Google+, ORM and YouTube marketing to usability and conversion optimization. Our services are offered in all the main EU languages.

Normally we have a very strong loyalty index among our clients. Most of our SEO clients have increased their organic traffic by at least 100% within six months of work.

The biggest need I see overall is for EU marketers to understand what they are buying. Usually, clients who understand our work have a higher return on investment on their Internet marketing expenses. That’s why I often attend conferences and provide trainings.

The state of SEO in Europe

What can you say about the state of SEO in Europe?

The switch from traditional advertising to online advertising is moving forward, leading to an increased interest in search engine marketing. Small business owners want to reach more targeted consumers with alternative selling channels; business owners realize that PPC and SEO investments are the most effective ways to drive business on and offline.

What’s your perspective on the European Court’s recent “Right To Be Forgotten” ruling?

RTF (Right To Be Forgotten) might create huge trouble for Google and the online players (social media). Focusing on search, EU governments are considering what information people can force Google and other search engines to remove from results. The so-called “take-down requests” will need to be somehow integrated into search engine algorithms.

The German ministry has already said that talks with Google and other engines will begin once the government has finalized its position. Nevertheless, we should consider that Europe still has many countries that could each produce different legislation — so how many “RTF algorithm patches” will we need? Will they be “global” or at the TLD country/language level? How will the arbitration system work for Right-To-Be-Forgotten complaints? And who will lead & rule this “information bureau” or “censorship court” that will officially decide what information can and cannot reside in the corpus of search results?

On the other hand, Right To Be Forgotten will be a great opportunity for any IT lawyers who get involved.

Since the Google algorithm updates rolled out in May, have you seen any effect in European search results?

Panda 4.0 is brand new and we are still analyzing its impact and constantly monitoring Google SERPs. None of our clients have registered any associated SEO loss so far, though we have received some new-client inquiries related to Panda from prospects that use a lot of press releases. I think we’ll have some extra findings by the end of June, in time for our SEO training in Milan.

About the SEO Training in Italy

Bruce Clay is coming to Europe to personally give his highly acclaimed SEOToolSet® Training in Milan July 2–3. How will this training work?

This is a very enlightening and advanced program condensed into a two-day SEO course. We will cover the basics plus the latest SEO trends, from Panda 4.0 to the semantic web.

The course will be taught in English, with simultaneous translation in Italian and probably German. (A German company is sending 8 people to the training.)

Note: Milan is a very interesting city the first week in July. There are some big shopping events going on that attract people (normally women, but do not ask me why… :) ) from all over Europe.

Bruce Clay provides SEO training around the world.

Who should attend this SEO training?

The primary audience for our SEOToolSet Training class is marketing managers, web designers, webmasters and business owners, because they need to understand how natural rankings and paid search on Google really work. In recent years, we’ve also noticed an increased interest from copywriters who, after typically working in offline environments, find that the process of writing for the web (with SEO in mind) requires a totally different skill set.

What benefits will students receive during the two-day course and afterwards?

Great training, good networking (we have some great companies attending like Priceline, Panasonic, Generali and Lavazza), awesome food, excellent freshly brewed espresso (I hope from Lavazza), course materials on SEO, and powerful SEO tools. Our course includes a subscription to the latest version of our SEOToolSet®, so attendees will be able to keep using advanced, fast SEO power tools.

Attendees will also receive the latest Italian book on SEO authored by Bruce and me (which has been ranking number 1 on the Amazon.it bestselling books list).

Ale Agostini (third from left) holding the Italian book on SEO that he co-authored with Bruce Clay

The last time Bruce Clay gave an SEO training in Italy was in 2012. What stands out in your memory of that experience?

People loved Bruce’s training for the quantity and quality of information he shared. The Italian national TV network RAI (similar to PBS in the U.S.) came to interview him and review the first edition of our book. I’m sure that the 2014 Bruce Clay course will receive similar attention and the same positive results.

The last time Bruce Clay brought SEOToolSet® Training to Italy was in 2012

How can this Bruce Clay SEO course help European Internet marketers?

The first time I attended Bruce’s training (2007), I had to fly over to the U.S., which is not cheap if you fly from Europe. Our July 2–3 training will bring people from all over Europe for this opportunity that’s closer to home and focused on EU markets.

Bruce has extensive and solid experience in Internet marketing; he is one of the few guys around the world who can clearly explain how search engines and Internet marketing work and how to take full advantage for your business.

To learn more about Bruce Clay’s SEOToolSet® Training in Milan, visit Bruce Clay, Inc. Europe.

June 4, 2014

SMX Advanced 2014 Series: Christine Churchill on Keyword Research Post-Hummingbird

SMX Advanced 2014 Series: Christine Churchill on Keyword Research Post-Hummingbird was originally published on BruceClay.com, home of expert search engine optimization tips.

We’re a week out from SMX Advanced 2014 and Bruce’s one-day SEO Training Workshop (fewer than five seats remain!), and we’ve got two more installments of the SMX Advanced 2014 Interview Series. Over the past month, I’ve interviewed VIP speakers to get a preview of the tips they’re going to share at SMX Advanced 2014. I’ve delved into:

Search + social strategy with Lisa Williams

Content that ignites passion with David Roth

Paid search secrets with Seth Meisel

Today, we continue with Christine Churchill, president and CEO of KeyRelevance. Churchill has been a leader in Internet marketing for more than a decade and is the co-founder of SEMPO (Search Engine Marketing Professional Organization). She will grace the SMX stage once again next week to share her keyword expertise in “Keyword Research On ‘Roids! Advanced Workarounds For Vanishing Keyword Data” at 11 a.m. on June 11.

Read on to discover Churchill’s advice for SEO newbies, her tried-and-true tools, her thoughts on “SEO is dead” and her keyword research strategies post-Hummingbird, and much more.

You’ve been in the ever-changing SEO industry since 1997. What advice do you have for those just starting out so they can break into the field?

Most people will tell you to be a specialist, but the advice I would give to my own daughter if she were going into this field is to learn many skills so you make yourself indispensable. If you’re a one-trick pony and that trick stops working, you’re out of luck. Being able to wear many hats and being skilled in several areas makes you more adaptable and gives you an advantage if you want to stay in this field for the long haul. I saw many early-day SEOs who were purely technical drop out as the search engines got smarter. They got stuck in the code and couldn’t lift their heads to see what the user was doing. The successful marketer today has to have a good grounding in many fields, including marketing fundamentals, psychology and usability to understand motivation and visitor behavior, plus some technical skills. I would encourage anyone wanting to go into SEO to learn HTML. I’m always surprised when some self-proclaimed SEO “guru” can’t understand basic HTML. You will always be limited in what you can do in SEO if you don’t have a basic understanding of the code. HTML is a markup language; it’s not hard to master, and it comes in handy when you have to implement basic things like schema code.

As an experienced SEO and SEM speaker, you have to stay on top of the latest strategies. How do you make sure your presentations are up to date?

You have to stay engaged — I am still actively working in search (#firsthand experience), plus:

I read a lot

I’m active in my local search marketing organization

I attend industry conferences

This field changes constantly so you have to constantly learn. The conferences are a fast way to immerse yourself in the latest changes — they’re the search industry’s best place for professional development. You find out what is new, what’s changed, and what your peers have learned.

As for the presentations, I have to update them constantly. Tools change and the algorithm changes, so even though I frequently speak on keyword research, how we actually do the research changes, and my presentations need to reflect that. I’ve also seen the audience change over the years; it used to be all SEOs, but now it’s heavy with copywriters, content developers, and social media marketers. They need to learn the vocabulary of their target audience so they can speak the same language. The content needs to speak to the customer, and the easiest way to do that is to use their words. That is the essence of good keyword research. It’s not stuffing keywords to trick an engine; it’s communicating better with your customers, and if you do that, you’re helping the engines better understand the material as well.

Who would benefit the most from your SMX Advanced session on keyword research? Just the SEO-savvy or should some of the big dogs and CEOs be in attendance?