Bruce Clay's Blog, page 20

November 16, 2015

Search Expert Duane Forrester Joins Bruce Clay, Inc. as VP of Organic Search Operations to Take the Road Less Traveled

Search Expert Duane Forrester Joins Bruce Clay, Inc. as VP of Organic Search Operations to Take the Road Less Traveled was originally published on BruceClay.com, home of expert search engine optimization tips.

Where does the former lead SEO at Microsoft/MSN and leader in the development of Bing Webmaster Tools go after an eight-year tenure at Microsoft? If the corporate world is a freeway, Duane Forrester heads for the exit, takes the road less traveled, and joins Bruce Clay, Inc. in a newly created position as Vice President, Organic Search Operations.

It’s time to enjoy the open road.

Bruce Clay, Inc. adds Duane Forrester to the team in order to provide businesses with an exclusive advantage in search engine optimization methodology and digital marketing strategy. Forrester, who was awarded Search Personality of the Year at the 2014 U.S. Search Awards, is a visible and popular figure in the search industry.

“Everyone knows that Duane could have gone to work for any company he wanted,” says our president, Bruce Clay. “It is an honor that he chose to work here.”

Duane will have oversight of the organic search direction at Bruce Clay, Inc., as well as the company’s content, social media and design departments. He will also serve as a spokesman for Bruce Clay, Inc. by attending and speaking at industry conferences.

“Duane has great experience with how things work, and that will help us to enhance our existing capabilities for all aspects of digital marketing, including SEO, social media and web design. This is the man who created the Bing Webmaster Tools — that will give us a wealth of insight,” Bruce points out.

An SEO with Many Talents

Duane’s professional career path reveals a man who wears many hats, and who wears each one confidently. Whichever hat he has on, Duane gives his all to the project or company at hand. He comes from a small business background and helped run a family-owned motel for 16 years. It was during this time that he learned what it takes to run a small business and discovered that he has a passion for helping companies grow.

Duane has made significant contributions to every place he’s worked, from developing the first player’s program for Caesar’s Palace in Canada to managing SEO at a sports startup that has since become a leader in the sports content publishing world.

Then, Microsoft called. During his eight-year career at Microsoft, Duane served as senior product manager at Bing — a role that led to the creation of Bing Webmaster Tools — and spent nearly five years as the primary touchpoint between Bing and the webmaster community. During this time he became known as the approachable face of Bing, bridging the gap between the search engine’s inner workings and the information-hungry SEO community.

“If you’ve ever heard Duane speak at a conference, you know that he is able to answer any question about how a search engine handles particular SEO concerns, from 404 pages to HTTPS,” said Mindy Weinstein, author and director of training at Bruce Clay, Inc. “He always has an answer and a thorough explanation — we are so excited to be able to take that experience and infuse it into our analysts’ ongoing education and our open enrollment SEOToolSet Training.”

So, the Big Question: Why BCI?

“Over the past decade I’ve been approached by countless agencies,” says Duane. “However, Bruce Clay, Inc. is different because of three main pillars that we stand on: services, proprietary tools, and talent. Most agencies don’t have as deep a tool set. They use rich tools, but not proprietary tools. If they need something fine-tuned for a client, they can’t walk down the hall and ask the programmer to add a parameter — which is something we can do.”

“When it comes to talent, I see agencies struggle. BCI is very stable and has an expert knowledge base. I know the team that’s here — and they’re smart SEOs.”

The Road Ahead

For Duane, the role of VP of organic search operations at BCI will allow him to take all of his cumulative experience and use it in new ways. He will be free to talk openly about Google, turn ideas into products faster, and enjoy the open roads of sunny Southern California — on the weekends, of course.

For BCI, having Duane on the team is a natural part of the company’s growth and commitment to excellence. For the last eight years in a row, Bruce Clay, Inc. has made the Inc. Magazine 500|5000 list as one of the fastest-growing private companies in the U.S. Most recently, in September the company added author, speaker, and paid search expert David Szetela as VP of paid search marketing operations.

“A growing number of our clients come to us for both SEO and PPC services, and Duane’s deep understanding of both disciplines will help accelerate that growth,” David explains.

Are You Confident in Your SEO Agency?

SEO is technical. You pay an SEO agency for its technical insight, experience, and ability to drive traffic to your site that grows your business. What if an agency had all of the above, plus skills and experience that no other agency could claim?

What if an SEO agency had Duane Forrester on the team?

With the addition of Duane Forrester, Bruce Clay, Inc. adds his knowledge of SEO to the company’s world-class offerings.

“If companies want to win at SEO, they should talk to us at some point,” says Bruce.

So let’s talk.

November 12, 2015

Is Google about to Unplug Its Penguin?

TL;DR – A theory: The next Google Penguin update, expected to roll out before year’s end, will kill link spam outright by eliminating the signals associated with inorganic backlinks. Google will selectively pass link equity based on the topical relevance of linked sites, made possible by semantic analysis. Google will reward organic links and perhaps even mentions from authoritative sites in any niche. As a side effect, link-based negative SEO and Penguin “penalization” will be eliminated.

Is the End of Link Spam Upon Us?

Google’s Gary Illyes has recently gone on record regarding Google’s next Penguin update. What he’s saying has many in the SEO industry taking note:

The Penguin update will launch before the end of 2015. (Since it’s been more than a year since the last update, this would be a welcome release.)

The next Penguin will be a “real-time” version of the algorithm.

Many anticipate that once Penguin is rolled into the standard ranking algorithm, ranking decreases and increases will be doled out in near real-time as Google considers negative and positive backlink signals. Presumably, this would include a more immediate impact from disavow file submissions — a tool that has been the topic of much debate in the SEO industry.

But what if Google’s plan is to actually change the way Penguin works altogether? What if we lived in a world where inorganic backlinks didn’t penalize a site, but were instead simply ignored by Google’s algorithm and offered no value? What if the next iteration of Penguin, the one that is set to run as part of the algorithm, is actually Google’s opportunity to kill the Penguin algorithm altogether and change the way they consider links by leveraging their knowledge of authority and semantic relationships on the web?

We at Bruce Clay, Inc. have arrived at this theory after much discussion, supposition and, like any good SEO company, reverse engineering. Let’s start with the main problems that the Penguin penalty was designed to address, leading to our hypothesis on how a newly designed algorithm would deal with them more effectively.

Working Backwards: The Problems with Penguin

Of all of the algorithmic changes geared at addressing webspam, the Penguin penalty has been the most problematic for webmasters and Google alike.

It’s been problematic for webmasters because of how difficult it is to get out from under. If some webmasters had known just how difficult it would be to recover from the Penguin penalties that began in April of 2012, they might have decided to scrap their sites and start from scratch. Unlike manual webspam penalties, where (we’re told) a Google employee reviews link pruning and disavow file work, recovery from an algorithmic action depends on Google refreshing the algorithm. Refreshes have only happened four times since the original Penguin penalty was released, making opportunities for contrition few and far between.

Penguin has been problematic for Google because, at the end of the day, Penguin penalizations and the effects they have on businesses both large and small have been a PR nightmare for the search engine. Many would argue that Google couldn’t care less about negative sentiment among the digital marketing (specifically SEO) community, but the ire toward Google doesn’t stop there; many major mainstream publications like The Wall Street Journal, Forbes and CNBC have featured articles that highlight Penguin penalization and its negative effect on small businesses.

Dealing with Link Spam & Negative SEO Problems

Because link building was so effective before 2012 (and, to a degree, since), Google has been dealing with a huge link spam problem. Let’s be clear about this: Google created this monster when it rewarded inorganic links in the first place. For quite some time, link building worked like a charm. If I can borrow a quote from my boss, Bruce Clay: “The old way of thinking was he who dies with the most links wins.”

This tactic was so effective that it literally changed the face of the Internet. Blog spam, comment spam, scraper sites – none of them would exist if Google’s algorithm didn’t, for quite some time, reward the acquisition of links (regardless of source) with higher rankings.

And then there’s negative SEO — the problem that Google has gone on record as saying is not a problem, while there have been many documented examples that indicate otherwise. Google even released the disavow tool, designed in part to address the negative SEO problem they deny exists.

The Penguin algorithm, intended to address Google’s original link spam issues, has fallen well short of solving the problem of link spam; when you add in the PR headache that Penguin has become, you could argue that Penguin has been an abject failure, ultimately causing more problems than it has solved. All things considered, Google is highly motivated to rethink how they handle link signals. Put simply, they need to build a better mousetrap – and the launch of a “new Penguin” is an opportunity to do just that.

A Solution: Penguin Reimagined

Given these problems, what is the collection of PhDs in Mountain View, CA, to do? What if, rather than policing spammers, they could change the rules and disqualify spammers from the game altogether?

By changing their algorithm to neither penalize nor reward inorganic linking, Google can, in one fell swoop, solve their link problem once and for all. The motivation for spammy link building would be removed because it simply would not work any longer. Negative SEO based on building spammy backlinks to competitors would no longer work if inorganic links cease to pass negative trust signals.

Search Engine Technologies Defined

Knowledge Graph, Hummingbird and RankBrain — Oh My!

What is the Knowledge Graph?

The Knowledge Graph is Google’s database of semantic facts about people, places and things (called entities). Knowledge Graph can also refer to a boxed area on a Google search results page where summary information about an entity is displayed.

What is Google Hummingbird?

Google Hummingbird is the name of the Google search algorithm. It was launched in 2013 as an overhaul of the engine powering search results, allowing Google to understand the meaning behind words and relationships between synonyms (rather than matching results to keywords) and to process conversational (spoken style) queries.

What is RankBrain?

RankBrain is the name of Google’s artificial intelligence technology used to process search results with machine learning capabilities. Machine learning is the process where a computer teaches itself by collecting and interpreting data; in the case of a ranking algorithm, a machine learning algorithm may refine search results based on feedback from user interaction with those results.

What prevents Google from simply ignoring inorganic links is that doing so requires the ability to accurately judge which links are relevant for any site or, as the case may be, subject. Developing this ability to judge link relevance is easier said than done, you say – and I agree. But, looking at the most recent changes that Google has made to their algorithm, we see that the groundwork for this type of algorithmic framework may already be in place. In fact, one could infer that Google has been working towards this solution for quite some time now.

The Semantic Web, Hummingbird & Machine Learning

In case you haven’t noticed, Google has made substantial investments to increase their understanding of the semantic relationships between entities on the web.

With the introduction of the Knowledge Graph in May of 2012, the launch of Hummingbird in September of 2013 and the recent confirmation of the RankBrain machine learning algorithm, Google has taken quantum leaps forward in their ability to recognize the relationships between objects and their attributes.

Google understands semantic relationships by examining and extracting data from existing web pages and by leveraging insights from the queries that searchers use on their search engine.

Google’s search algorithm has been getting “smarter” for quite some time now, but as far as we know, these advances are not being applied to one of Google’s core ranking signals – external links. We’ve had no reason to suspect that the main tenets of PageRank have changed since they were first introduced by Sergey Brin and Larry Page back in 1998.

Why not now?

What if Google could leverage their semantic understanding of the web to identify not only the relationships between keywords, topics and themes, but also the relationships between the websites that discuss them? Now take things a step further: is it possible that Google could identify whether a link should pass equity (link juice) to its target based on topic relevance and authority?
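To make the idea concrete, here is a toy sketch of a PageRank-style calculation in which each link's vote is scaled by a topical relevance score. It is purely illustrative of our theory and is not Google's algorithm. The function name, the `relevance` scores and the sample pages are all assumptions invented for this example; in particular, we simply hand the function a relevance score between 0 and 1 for each link, whereas judging that relevance is the hard part.

```python
def relevance_weighted_pagerank(links, relevance, damping=0.85, iterations=50):
    """Toy PageRank where each link passes equity in proportion to a
    hypothetical relevance score between 0 (ignored) and 1 (fully counted).

    links:     dict mapping a page to the list of pages it links to
    relevance: dict mapping (source, target) to a score; missing pairs count as 1.0
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        passed = {page: 0.0 for page in pages}
        withheld = 0.0  # equity that no link carried (dead ends, discounted links)
        for source in pages:
            targets = links.get(source, [])
            if not targets:
                withheld += rank[source]
                continue
            share = rank[source] / len(targets)
            for target in targets:
                weight = relevance.get((source, target), 1.0)
                passed[target] += share * weight
                withheld += share * (1.0 - weight)
        # Withheld equity is spread evenly, so an ignored link neither
        # helps nor hurts the page it points to.
        for page in pages:
            rank[page] = (1.0 - damping) / n + damping * (passed[page] + withheld / n)
    return rank


# Hypothetical link graph: two spam pages link to "target",
# one topical authority links to "rival".
example_links = {
    "spam1": ["target"],
    "spam2": ["target"],
    "authority": ["rival"],
}
example_relevance = {("spam1", "target"): 0.0, ("spam2", "target"): 0.0}

classic = relevance_weighted_pagerank(example_links, {})
reimagined = relevance_weighted_pagerank(example_links, example_relevance)
print("classic:   ", classic["target"] > classic["rival"])
print("reimagined:", reimagined["rival"] > reimagined["target"])
```

In the classic run, the page with two spam links outranks the page with one authoritative link. Once the spam links are scored as irrelevant, they pass nothing: the target is not pushed down, it just stops benefiting.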

Bill Slawski, the SEO industry’s foremost Google patent analyzer, has written countless articles about the semantic web, detailing Google’s process for extracting and associating facts and entities from web pages. It is fascinating (and complicated) analysis with major implications for SEO.

For our purposes, we will simplify things a bit. We know that Google has developed a method for understanding entities and the relationship that they have to specific web pages. An entity, in this case, is “a specifically named person, place, or thing (including ideas and objects) that could be connected to other entities based upon relationships between them.” This sounds an awful lot like the type of algorithmic heavy lifting that would need to be done if Google intended to leverage its knowledge of the authoritativeness of websites in analyzing the value of backlinks based on their relevance and authority to a subject.

Moving Beyond Links

SEOs are hyper-focused on backlinks, and with good reason; correlation studies that analyze ranking factors continue to score quality backlinks as one of Google’s major ranking influences. It was this correlation that started the influx of inorganic linking that landed us in our current state of affairs.

But what if Google could move beyond links to a model that also rewarded mentions from authoritative sites in any niche? De-emphasizing links while still rewarding references from pertinent sources would expand the signals that Google relies on to gauge relevance and authority and help move the search engine away from its dependence on links as a ranking factor. It would also, presumably, be harder to “game,” as true authorities on any subject would be unlikely to reference brands or sites that weren’t worthy of the mention.

This is an important point. In the current environment, websites have very little motivation to link to outside sources. This has been a problem that Google has never been able to solve. Authorities have never been motivated to link out to potential competitors, and the lack of organic links in niches has led to a climate where the buying and selling of links can seem to be the only viable link acquisition option for some websites. Why limit the passage of link equity to a hyperlink? Isn’t a mention from a true authority just as strong a signal?

There is definitely precedent for this concept. “Co-occurrence” and “co-citation” are terms that have been used by SEOs for years now, but Google has never confirmed that they are ranking factors. Recently, however, Google began to list unlinked mentions in the “latest links” report in Search Console. John Mueller indicated in a series of tweets that Google does in fact pick up URL mentions from text, but that those mentions do not pass PageRank.

What’s notable here is not only that Google is monitoring text-only domain mentions, but also that they are associating those mentions with the domain that they reference. If Google can connect the dots in this fashion, can they expand beyond URLs that appear as text on a page to entity references, as well? The same references that trigger Google’s Knowledge Graph, perhaps?
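For readers curious what "picking up URL mentions from text" might look like mechanically, here is a minimal sketch using only Python's standard library. It separates domains that appear in actual hyperlinks from domains that only appear as plain text. The function and class names are hypothetical, the regular expression is a rough heuristic (it would also match something like a file name), and nothing here reflects how Google actually does it.

```python
import re
from html.parser import HTMLParser
from urllib.parse import urlparse

# Rough heuristic for a domain written out in running text.
DOMAIN_PATTERN = re.compile(
    r"\b(?:https?://)?(?:www\.)?([a-z0-9-]+(?:\.[a-z0-9-]+)*\.[a-z]{2,})\b",
    re.IGNORECASE,
)


def strip_www(host):
    """Normalize a host name so www.example.com and example.com compare equal."""
    host = host.lower()
    return host[4:] if host.startswith("www.") else host


class MentionParser(HTMLParser):
    """Collects linked domains and the text that sits outside of anchors."""

    def __init__(self):
        super().__init__()
        self.linked_domains = set()
        self.text_outside_links = []
        self._anchor_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._anchor_depth += 1
            href = dict(attrs).get("href") or ""
            host = urlparse(href).netloc
            if host:
                self.linked_domains.add(strip_www(host))

    def handle_endtag(self, tag):
        if tag == "a" and self._anchor_depth:
            self._anchor_depth -= 1

    def handle_data(self, data):
        if self._anchor_depth == 0:
            self.text_outside_links.append(data)


def find_mentions(html):
    """Return domains that are hyperlinked and domains only mentioned in text."""
    parser = MentionParser()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.text_outside_links)
    mentioned = {strip_www(m.group(1)) for m in DOMAIN_PATTERN.finditer(text)}
    return {
        "linked": parser.linked_domains,
        "unlinked": mentioned - parser.linked_domains,
    }


sample = (
    '<p>We like <a href="https://www.example.com/page">this guide</a> '
    "and also bruceclay.com, as noted on www.example.org.</p>"
)
print(find_mentions(sample))
```

Matching a written-out domain is the easy case. Tying a bare brand or entity name back to a website is a much harder problem, which is where the entity work discussed above would come in.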

In Summary

We’ve built a case based on much supposition and conjecture, but we certainly hope that this is the direction in which Google is taking their algorithm. Whether Google acknowledges it or not, the link spam problem has not yet been resolved. Penguin penalties are punitive in nature and exceedingly difficult to escape from, and the fact of the matter is that penalizing wrongdoers doesn’t address the problem at its source. The motivation to build inorganic backlinks will exist as long as the tactic is perceived to work. Under the current algorithm, we can expect to continue seeing shady SEOs selling snake oil, and unsuspecting businesses finding themselves penalized.

Google’s best option is to remove the negative signals attached to inorganic links and only reward links that they identify as relevant. By doing so, they immediately eviscerate spam link builders, whose only quick, scalable option for building links is placing them on websites that have little to no real value.

By tweaking their algorithm to only reward links from sources that have expertise, authority and trust in the relevant niche, Google can move closer than ever before to solving their link spam problem.

November 4, 2015

The Power of a Page Analyzer: I Ran ‘The Great Gatsby’ through an SEO Tool & This Is What Happened

The Power of a Page Analyzer: I Ran ‘The Great Gatsby’ through an SEO Tool & This Is What Happened was originally published on BruceClay.com, home of expert search engine optimization tips.

Ever wondered what would happen if you ran classic literature through an SEO tool? Me, too!

I’ve got a sweet spot for tools that give me an idea of how I’m doing as a search marketer and content publisher. One test of an SEO tool’s power is if the software can do the job of a careful human expert in a fraction of the time.

The following experiment details what happened when an SEO tool meets F. Scott Fitzgerald’s “The Great Gatsby.” While it was devised in fun and out of true curiosity, it ended up being a real-life study of SEO tools at work, worth sharing.

The Setup

I’m a writer at digital marketing agency Bruce Clay, Inc., and I’m also an avid reader. Literature lovers like me can spend hours picking apart themes and character dynamics. SEO analysis, meanwhile, should be as efficient as possible with time and resources. Can an on-page analyzer tell me:

How hard or easy text is to understand

The relationship between characters (or in the case of websites, the relationship between keywords)

And the theme

If a tool can accomplish that with a piece of literature, then it can certainly analyze a web page for appropriate language and SEO relevance. I used a free tool, the SEOToolSet’s Single Page Analyzer, and much to my delight, the SEO tool was able to peg character relationships, point to the theme of this American classic, and crunch 46,000 words in a fraction of the time a person requires. Super sweet.

Fair warning: Yes, there are some spoilers for “The Great Gatsby” but nothing that will keep you from enjoying the book – or Baz Luhrmann’s recent cinematic stunner. (Highly recommended, by the way.)

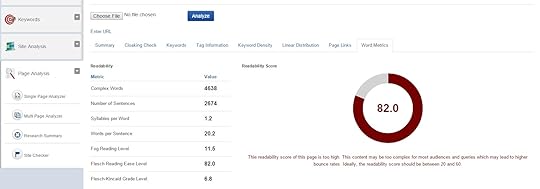

Reading Level

It’s relatively straightforward for a computer program to grade the difficulty of text content. Readability is one of the word metrics reported by the Single Page Analyzer.

The SPA puts the text at a Fog reading level of 11.5 – which is right on target, as “The Great Gatsby” is most often taught during the junior year.

While the 11.5 grade level is fine for Fitzgerald, for your average web page, it’s a little higher than the recommended target. If this were your own website, you could use the reading level score to think about how accessible your text is to your target audience.
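For the curious, here is a rough sketch of how a Fog-style score can be computed. It uses the standard Gunning Fog formula, 0.4 × (average sentence length + percentage of words with three or more syllables), but the syllable counter is a crude vowel-group heuristic and the function names are my own. This is not how the Single Page Analyzer does it internally, and it skips the formula’s usual exceptions for proper nouns and common suffixes, so expect its numbers to differ from the tool’s.

```python
import re


def count_syllables(word: str) -> int:
    """Rough syllable estimate: count groups of consecutive vowels."""
    lowered = word.lower()
    count = len(re.findall(r"[aeiouy]+", lowered))
    # Heuristic only: treat a trailing "e" as silent when other vowels exist.
    if lowered.endswith("e") and count > 1:
        count -= 1
    return max(count, 1)


def gunning_fog(text: str) -> float:
    """Simplified Gunning Fog index for a block of plain text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    # "Complex" words are approximated as words with 3+ syllables.
    complex_words = [w for w in words if count_syllables(w) >= 3]
    avg_sentence_length = len(words) / len(sentences)
    percent_complex = 100 * len(complex_words) / len(words)
    return 0.4 * (avg_sentence_length + percent_complex)
```

Feed it the text of a page and compare the result with the grade level of the audience you are writing for.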

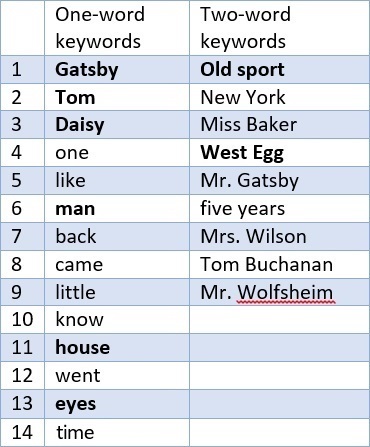

Characters and Their Relationships, or Keywords and Distribution

One of the basic tasks an on-page analyzer performs is identifying the most commonly used words and phrases on a page – in SEO world, a page’s keyword phrases.

The Single Page Analyzer reports the most-used words on a page, organized as one-word, two-word, three-word and four-word keyword phrases. Here are the one-word and two-word keywords of “The Great Gatsby” according to the SPA:

The first three one-word keywords are the main characters of the novel, a good indication that the analysis is on point. The SPA has correctly identified that “The Great Gatsby” follows the story of protagonist Gatsby, Tom, Daisy, and the narrator, Nick Carraway. Nick appears in this analysis under the name he’s most often called, “old sport,” which is both the most frequently used two-word keyword phrase and Gatsby’s favorite term of endearment. Also among the two-word phrases from the analyzer are more central characters and the main location of the action, West Egg.

So far, so good.
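If you want to try this kind of keyword counting yourself, a minimal sketch might look like the following. The stop-word list, the `top_phrases` name and the tokenizing rule are all my own assumptions for illustration; the SPA’s actual method isn’t documented here.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list; a real tool would use a far larger one.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "was", "he", "she", "i"}


def top_phrases(text: str, n: int = 1, limit: int = 5) -> list[tuple[str, int]]:
    """Return the most frequent n-word phrases, skipping any with a stop word."""
    tokens = re.findall(r"[a-z']+", text.lower())
    # Slide an n-word window over the tokens (it crosses sentence boundaries).
    grams = zip(*(tokens[i:] for i in range(n)))
    counts = Counter(
        " ".join(gram)
        for gram in grams
        if not any(word in STOP_WORDS for word in gram)
    )
    return counts.most_common(limit)
```

Calling `top_phrases(text, n=2)` on the full novel is the sort of query that would surface a phrase like “old sport.”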

A Clue about Relationships, Care of the Keyword Heat Map

Here’s a unique feature of the SPA. Keywords get a visual treatment in the keyword heat map. The report lays out the identified keywords like a topographical map, where the words used the most are the highest peaks, and words that are physically located close together in the text are also placed near each other on the map.

Here’s the SPA’s keyword heat map for “The Great Gatsby”:

Notice that Gatsby and Tom are more closely connected than either of the men is with Daisy! A superficial reading of “The Great Gatsby” may suggest the book is a story of a romance between Gatsby and Daisy. But this data visualization reveals the layer of meaning beyond that. There is a great distance between Gatsby and Daisy. Gatsby is much closer, in fact, to his foil, Tom, reflecting their constant competition and the story’s central tension. How’s that for in-depth character analysis?
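The heat map’s rendering is the SPA’s own, but the underlying idea of “words that sit close together in the text” can be approximated with a simple proximity count. In this sketch, the function name and the 10-token window are assumptions I picked for illustration, not anything the tool exposes.

```python
import re
from collections import Counter


def proximity_counts(text: str, keywords: list[str], window: int = 10) -> Counter:
    """Count how often each pair of distinct keywords falls within `window` tokens."""
    tokens = re.findall(r"[a-z']+", text.lower())
    wanted = {k.lower() for k in keywords}
    # Keep only the positions where a tracked keyword occurs.
    positions = [(i, t) for i, t in enumerate(tokens) if t in wanted]
    pairs = Counter()
    for idx, (i, first) in enumerate(positions):
        k = idx + 1
        # Look ahead only as far as the window allows.
        while k < len(positions) and positions[k][0] - i <= window:
            second = positions[k][1]
            if first != second:
                pairs[tuple(sorted((first, second)))] += 1
            k += 1
    return pairs
```

A higher count for one pair than another suggests those two keywords travel together in the text, which is roughly what the map shows for Gatsby and Tom.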

Through these three metrics/reports we’ve assessed reading difficulty and important keywords/characters. Next, the real magic of the SPA’s analysis: understanding the theme.

Identifying the Theme

A theme is more than keywords; it is the underlying meaning or idea expressed. On a web page, a theme is pretty much determined by the topic (or subject) and the purpose (do/know/go, i.e., transaction, information, or navigation).

In a novel, a theme is more complex. We can’t understand a literary theme by looking at the keywords alone, but, of the nearly 46K words the SPA counted in “The Great Gatsby,” the most frequently used words can clue us in to where to look closer for the theme.

If you filter out character names from the list of one-word keywords, a few words stand out, namely: house, eyes, and time, all of which are critical thematic elements in “The Great Gatsby.” Together, the keywords of time, house and eyes do, in fact, point to major themes when explored further: an obsession with the past (time), a preoccupation with wealth and materialism (house), and the modernist shift away from God (eyes).

Time

“The Great Gatsby” is filled with references to time. An obsession with time, particularly with the past, is laced throughout the pages from start to end. Recall narrator Nick Carraway’s opening lines: “In my younger and more vulnerable years my father gave me some advice that I’ve been turning over in my mind ever since,” as well as the closing lines: “So we beat on, boats against the current, borne back ceaselessly into the past.” The entire premise of the book is a man obsessed with a time gone by. (And note that other top keywords support this idea, too: came, went, back. The tense itself tends to look backward, and the actual adverb “back” is mentioned enough to appear in the top keywords.)

House

Chapter 1 holds Nick’s first description of Gatsby’s house, standing next to his own.

“My house was at the very tip of the egg, only fifty yards from the Sound, and squeezed between two huge places that rented for twelve or fifteen thousand a season. The one on my right was a colossal affair by any standard — it was a factual imitation of some Hotel de Ville in Normandy, with a tower on one side, spanking new under a thin beard of raw ivy, and a marble swimming pool, and more than forty acres of lawn and garden. It was Gatsby’s mansion.”

Countless descriptions of Gatsby’s house tell of a lavish palace in nouveau riche style, the setting of constant celebrity-studded galas that last until morning and set the night sky ablaze with lights. Both Gatsby’s house and Daisy’s house (over which Gatsby keeps near-constant vigil) play integral roles in the text. Gatsby’s house, after all, was bought for two reasons: its proximity to Daisy and its grand splendor, with which he hopes to impress her. And both homes speak to the “unprecedented prosperity and material excess” of America in the 1920s (SparkNotes).

Eyes

There are multiple descriptions of characters’ eyes throughout the book, but they’re all overshadowed by the recurring focus on Eckleburg’s eyes.

Remember T.J. Eckleburg? Eckleburg is a faded sign advertising an eye doctor that Gatsby & co. pass by every time they drive into New York from West Egg: “Above the grey land and the spasms of bleak dust which drift endlessly over it, you perceive, after a moment, the eyes of Doctor T. J. Eckleburg. The eyes of Doctor T.J. Eckleburg are blue and gigantic — their retinas are one yard high. They look out of no face but, instead, from a pair of enormous yellow spectacles which pass over a nonexistent nose … his eyes, dimmed a little by many paintless days under sun and rain, brood on over the solemn dumping ground.”

More than just a pair of eyes, Eckleburg is often seen as a god-like figure watching over the sordid affairs of the main players.

Photo by Eve Rinaldi (CC BY-SA 2.0)

Putting It All Together

Here’s my final analysis: “The Great Gatsby” would likely require a few days of engrossed reading to cover the above ground. However, the Single Page Analyzer’s computer processing power effectively discovered key elements and themes of the text, successfully performing a complex analysis of hundreds of pages of signal-dense text in minutes. Wasn’t that fun?!

November 3, 2015

Latest on Mobile: Essential Takeaways for Marketers from SMX East & Pubcon

Latest on Mobile: Essential Takeaways for Marketers from SMX East & Pubcon was originally published on BruceClay.com, home of expert search engine optimization tips.

It’s becoming clear that mobile friendliness is more than a responsive website that gets a passing grade on the Mobile-Friendly Test.

The concept of mobile friendliness covers increasingly advanced digital media territory:

Mobile apps: Do you have a mobile app? Is it indexable by Google? Are you taking advantage of Google’s stated ways of getting ranking boosts for your app?

Mobile conversions and personas: Is mobile traffic failing to convert? That’s to be expected if mobile personas, mobile-specific conversions and calls to action haven’t been identified. Mobile visitors are not the same as desktop visitors, after all.

Mobile advertising: Are you utilizing mobile search PPC features like call extensions and call-only campaigns?

At the two biggest search-industry conferences this fall, SMX East in New York and Pubcon Las Vegas, search engine reps and renowned speakers covered the full range of Internet marketing topics, especially mobile issues: everything from Google’s mobile-focused features to app indexing to mobile ads. We published 46 sessions and keynotes from these conferences on the BCI blog. Here I’ve distilled the most important news and advice that was shared related to mobile.

App Indexing Is Essential

Indexing an app helps new users find it in mobile searches (image credit: Google)

App usage is growing, and Google is serving app content in search results. In the SMX session “Beyond the Web: Why App Deep Linking Is the Next Big Thing,” Webmaster Trends Analyst Mariya Moeva explained that Google currently supports deep app links for signed in and signed out users on Android. In other words, when people do a search on an Android smartphone or iPhone, they can see an install button for an app in the results. Read the developer documentation for Android app deep link indexing and the just-released developer details for indexing iOS app deep links.

Developers should implement app deep linking and get their apps indexed so they’ll show up in Google-powered searches. Session co-speaker Emily Grossman called app deep linking “the next big thing” and gave a list of in-depth recommendations and resource links to help people go from an app store model to a search engine model. See the liveblog for details.

Some tips for implementing app deep linking:

Use http instead of a custom scheme.

Associate your website with your app (in Google Search or Developer Console).

Publish your deep links with the app-indexing API and get a ranking boost.

Fetch as Google for Apps lets you test changes to your app before you push it live to Google Play.

Note for SEOs: In a recent Google Office-Hours Hangout focused on app indexing, Google reps Mariya Moeva and John Mueller clarified that there’s no concern over duplicate content if you have an app or a mobile site delivering similar content as your website; the search engine can tell the difference.

Mobile Personas

Advertisers need a mobile strategy. In “Social and Mobile PR Secrets” at Pubcon, Lisa Buyer said that social advertising is predicted to grow to $35.98 billion by 2017. While the audience is there, you’re not alone if you’re finding conversions are much lower from mobile traffic. If that’s the case, the problem is likely you, not the users.

Mobile visitors have their own unique needs and motivations. To capture their attention, it’s crucial to understand the mobile audience and do mobile persona research.

Mobile customers fall into four basic categories, according to Aaron Levy in the SMX session “Winning at Mobile PPC”:

Bored: Mostly professionals, either commuting or not wanting to talk to people. Tend to be impulsive.

Research: Often parents, these are busy people filling a few minutes of time. Tend to be thoughtful before buying and usually convert on other devices, not on their phone.

Need: People with only a phone to access the Internet, often low income. Tend to respond to promises of convenience.

Desperate: These people forgot something and need it right away. Tend to be rich enough to buy the first thing they find.

For each type of audience, Levy recommended ways to tailor mobile ads. Also in this session, co-presenter Amy Bishop explained how to effectively target local searchers by their location, and John Busby showed how to use Google’s PPC ad formats that support phone calls, enhanced campaigns and call-only campaigns.

Mobile Advertising

Along with Google’s mobile-friendly advertising options described above, some paid search-focused sessions looked at the unique challenges of mobile advertising, including the trouble with social media referrer data.

In the Pubcon session “Social Media in a Mobile World,” social media managers Cynthia Johnson and Kendall Bird presented many tips for social media marketers. Though ROI from social media is difficult to assess, social media efforts can be tracked using a combination of Google Analytics (such as the Social report under Acquisition) and the data that each network natively provides. Build a dashboard that monitors whatever KPIs are important to your business, which differ between social media platforms, and you’ll have a way to showcase economic value to stakeholders.

Be aware, however, of dark social media, which refers to the sizable chunk of direct traffic that you can’t track in analytics (for example, users clicking a link from Instagram). Speakers Johnson and Bird recommended URL building as the best way to recapture those hidden (dark) metrics.
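As a quick illustration of URL building, here is a minimal sketch that appends Google Analytics campaign parameters (`utm_source`, `utm_medium`, `utm_campaign`) to a link before you share it. The `tag_url` helper and the example values are my own assumptions, not something the speakers presented.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def tag_url(url: str, source: str, medium: str, campaign: str) -> str:
    """Append campaign parameters to a URL, keeping any existing query string."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))
    query.update(
        {"utm_source": source, "utm_medium": medium, "utm_campaign": campaign}
    )
    return urlunsplit(parts._replace(query=urlencode(query)))


# Hypothetical example: a link placed in an Instagram profile.
tagged = tag_url(
    "https://www.example.com/post?id=7", "instagram", "social", "fall_launch"
)
```

Visits that arrive through the tagged link should then show up under that source and campaign in your analytics rather than as untraceable direct traffic.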

Google AdSense came into the spotlight when two Googlers (Richard Zippel and John Brown) hosted a Q&A style Pubcon keynote. Takeaways for advertisers were:

Ad blockers are a big concern for Google. They’re looking into what they can do to help advertisers.

Faster is better with mobile, but ads can slow down a page’s loading. Google’s brand-new Accelerated Mobile Pages tool can help publishers speed up a website. Use this and avoid bogging down pages with too many ads.

Mobile is necessary, so figure out your best mix of products (Google has products for videos, AdX, etc.) and platforms (iOS, Android, wearables, etc.).

Think Mobile First — Google Does

Google’s Gary Illyes speaking at SMX East (and Barry Schwartz, moderator)

If you do nothing else, forget about mobile being a subset of the Internet. With mobile searches exceeding desktop searches, Google now focuses exclusively on mobile — that’s what Google Webmaster Trends Analyst Gary Illyes reported in the “Getting Mobile Friendly to Survive the Next Mobilegeddon” session at SMX East.

Here are three ways Illyes advised webmasters to stay mobile-friendly:

Make content legible and usable on mobile devices. Illyes said Googlebot looks at “5 or so” properties of a page to see whether they appear correctly.

Don’t disallow resources (such as CSS, JavaScript or image files). You don’t want to block Google from seeing the site as mobile-friendly — a misunderstanding that would hurt your search rankings.

Focus on giving the user a great experience on your website and “everything else will follow.”
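To spot-check the second point, a small script can flag CSS, JavaScript or image URLs that a robots.txt file disallows. This is a sketch under assumptions: the `blocked_resources` helper and the sample rules are hypothetical, and Python’s built-in parser uses simple prefix matching, so it won’t interpret wildcard rules the way Googlebot does. Treat it as a first pass, not a replacement for Google’s own testing tools.

```python
from urllib.robotparser import RobotFileParser


def blocked_resources(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt content disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt that blocks stylesheet and script folders.
sample_rules = "User-agent: *\nDisallow: /css/\nDisallow: /js/\n"
sample_urls = [
    "https://www.example.com/css/site.css",
    "https://www.example.com/images/logo.png",
]
blocked = blocked_resources(sample_rules, sample_urls)
```

Anything that lands in `blocked` is a resource worth unblocking so the page can be rendered as a mobile visitor would see it.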

SEOs and webmasters should follow suit by making mobile their primary framework for thinking of online visitors.

October 30, 2015

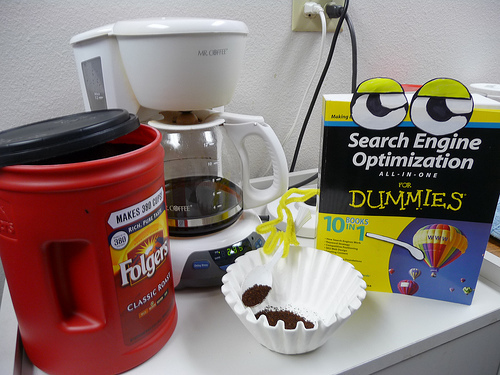

3rd Edition of SEO for Dummies is the Perfect SEO Companion

3rd Edition of SEO for Dummies is the Perfect SEO Companion was originally published on BruceClay.com, home of expert search engine optimization tips.

Remember me? Who can forget the fresh face we met in 2009, so eager to make us coffee and protect us from evil villains?

The first edition instantly became a reliable reference guide for us and that lasted until 2012 when it grew in ways that strengthened our reliance on it. The second edition of “Search Engine Optimization All-in-One For Dummies” proved that it was more than just a helper, but an expert in SEO that deserved to be served coffee instead of making it for us.

Three years have passed since the second edition and the SEO world has changed drastically. More than ever, digital marketers need a reference guide that’s accessible, reliable, and reflects the latest updates in the industry. That’s what they’re getting in the freshly released third edition. Basically, the book is the perfect companion for any business owner, digital marketer, or anyone responsible for traffic to a site. This Halloween season, it also proved the perfect companion for Darth Vader, Tinkerbell, Minnie Mouse and more as the book got into various characters:

The book wasn’t the only one in character … we all take Halloween pretty seriously, too.

Your SEO Companion is Here

It’s hard to find the perfect companion, someone who knows what you’re going through and supports you along the way. Harder yet is to find someone who will grow and change with you over time.

But when the third edition of the “Search Engine Optimization All-in-One For Dummies” book arrived at our office, our hearts jumped! Our dear companion is everything we remember it to be, plus a whole lot more.

Sure, it can still teach us the fundamentals of SEO, but it’s grown so much since then! It can also walk us through today’s hottest subjects, including mobile, advancements in search engine algorithms, and the latest internet marketing technologies that pertain to SEO.

The Spooky Side of SEO

One of the scariest things about the SEO industry is that it’s changing at such a rapid pace. It can feel quite lonely without a companion you can trust. The good news is that you’re not alone. “Search Engine Optimization All-in-One For Dummies, 3rd Edition” can be your trusted friend; it’s there to guide you on all things SEO in a way that’s easy to understand.

Get Your SEO Companion

The 3rd edition of “Search Engine Optimization All-in-One For Dummies” is available on Amazon and Barnes & Noble. Buy a copy today, or better yet, buy it for your office or the business owner or marketer in your life.

Everyone needs an SEO companion; be sure to get the right one. You can also learn more about Bruce Clay’s nearly 800-page SEO reference guide and take a sneak peek inside the book.

October 29, 2015

2 Big Things Changing SEO Forever — What’s New in SEO from SMX & Pubcon

2 Big Things Changing SEO Forever — What’s New in SEO from SMX & Pubcon was originally published on BruceClay.com, home of expert search engine optimization tips.

Digital marketers and SEO industry insiders demand outstanding content for their limited blog-reading bandwidth. So, for SEOs who want to get straight to the heart of the latest game-changers, we offer our humble opinion that during the SMX East and Pubcon Las Vegas conferences of the last month, there were two big comets that hurtled from the sky, signaling changes to the SEO landscape forever:

Machine learning algorithms ranking content based on searcher behavior feedback

Predictive search serving searchers content before they ask

Here’s your front row seat to what’s new in SEO, straight from SMX East and Pubcon Las Vegas.

How SEOs Can Optimize for an Algorithm That Is Learning

This week, Google confirmed artificial intelligence system RankBrain as the third most important signal in the ranking algorithm — an assurance that machine learning is at play in live Google results.

What is machine learning? Machine learning is where a computer teaches itself. Rather than following defined programming or learning through human intervention, the computer is programmed to learn by collecting and interpreting data.

Important to Note

At Bruce Clay, Inc., we do not think Google is ready to surrender the fate of their entire algorithm to a black box. Machine learning is not easily undone or fixed from looking at the results. As such, we believe that the algorithm will never be wholly unleashed to machine learning capabilities. In order to maintain a clear view of the algorithm, Google engineers will control the parts of the algorithm where machine learning is in play. Search Engine Land has used the analogy of what’s happening under the hood of your car, with RankBrain being one component of a complex engine. Clarifications by Googler Gary Illyes and the Bloomberg author who broke the story, Jack Clark, add important context.

Rand Fishkin, founder of digital marketing software house Moz, painted a picture of the future of search engine optimization while on stage at Pubcon this month. With machine learning in play, the computer program that ranks results will use searcher feedback as a ranking signal. He advised SEOs to optimize for searchers’ interactions with web pages and results.

Up until now, most of us have been optimizing for traditional elements of SEO – keyword targeting, quality, uniqueness, and snippet optimization. The algorithm now powered by machine learning theoretically allows Google to boost sites in SERPs using signals that aren’t on the page and the web but rather are coming from searchers as they interact with results. High relative click-through rate, signs of task completion, and long click vs. short click are among the signals Fishkin refers to as “searcher outputs.” An example of the latter (long click vs. short click): if a searcher clicks back to a results page quickly, that signals a poor result, and if this happens enough times, the algorithm will stop including this result as a query match.

What Optimizers Can Do: Searcher Output Signals

In Fishkin’s keynote presentation at Pubcon, he said that SEOs need to start optimizing for a new set of factors. So what are these new factors? “Searcher outputs,” the new elements of SEO, include:

Long to short click ratio

Relative CTR vs. other results

Rate of searchers conducting additional related searches

Sharing/amplification rate vs. other results

Metrics of user engagement across the domain

Metrics of user engagement on the page (How? By using Chrome and Android)

For searcher outputs defined, and tips on how to optimize for them, read our coverage of “SEO in a Two Algorithm World” from Pubcon.
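To make the first two searcher outputs concrete, here is a small sketch of how you might estimate them from your own analytics data. Everything in it is an assumption for illustration: the 30-second cutoff for a “long” click, the function names, and the idea of comparing against an expected CTR for a ranking position. Google has not published how, or whether, it calculates these signals this way.

```python
def long_short_ratio(dwell_seconds: list[float], long_threshold: float = 30.0) -> float:
    """Ratio of long clicks to short clicks, given time on page per visit."""
    long_clicks = sum(1 for d in dwell_seconds if d >= long_threshold)
    short_clicks = len(dwell_seconds) - long_clicks
    if short_clicks == 0:
        # No short clicks at all: infinite ratio, or 0.0 when there is no data.
        return float("inf") if long_clicks else 0.0
    return long_clicks / short_clicks


def relative_ctr(clicks: int, impressions: int, expected_ctr: float) -> float:
    """Observed CTR divided by the CTR you would expect at that position."""
    if impressions == 0 or expected_ctr == 0:
        return 0.0
    return (clicks / impressions) / expected_ctr
```

A relative CTR above 1.0 would mean a result is earning more clicks than its position alone would predict, which is the kind of outperformance Fishkin suggests optimizing for.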

Predicting the Impact of Predictive Search

Predictive search (PS) technology is evolving rapidly. Are you getting notifications on your smartphone that the drive home will take 20 minutes in the currently heavy traffic? That’s predictive search in action — relevant notifications before you search. Google Now and Cortana are the virtual assistant applications at the forefront of predictive search.

What is predictive search? Predictive search is where an Internet connected device gives a user information relevant to his or her current situation and interests, often before the user asks for it. A user’s pattern of personal behaviors, the place, time, rate of movement, and of course, a user asking for information, are the factors that feed predictive search.

Cindy Krum’s presentation at SMX East, “Google Now, Microsoft Cortana, and The Predictive Search World,” is the best place to start for background on the signals being used to predict searches, where we’re seeing predictive search in our daily lives, and how predictive search is going to change SEO.

Note that predictive search is closely tied to Internet connected devices beyond the phone and computer, like wearables. As more Internet connected devices without keyboards come to market, voice powered search and served-before-you-asked information become necessary.

Predictive Search with Google Now

Of course, you’re likely familiar with predictive search if you’re a Google Now or Cortana user. Google Now, Google’s predictive search app, uses a “card” format to show users information they might find useful before they search for it.

Google Now collects a signed-in user’s online history across devices (phone, tablet, computers, TV, smart watch). It accounts for a user’s current conditions, including location, date, time and even velocity (are you on the way to work?). If it senses from your GPS location that you’re staying out of town, Google Now will provide you with the local weather, nearby attractions, and traffic information.

Your data from every Google app (including Gmail, Google Calendar, Google Maps, Google Play, Google Plus, Google Hangouts, YouTube, Google News) is used to aggregate a complete view of your interests so Google Now can serve up: meeting notifications, reminders, news stories you want to read, upcoming travel notifications, weather, traffic and alternate routes, TV shows, and nearby events. The list is growing every day.

What Optimizers Can Do: Voice Search SEO, Speed & App Indexing

During her presentation, Krum offered a possible avenue of exploration for getting your business into a user’s Google Now results: email markup for Google Now.

In his keynote message at Pubcon Las Vegas, Google’s Gary Illyes explained that Google Now was created in part to satisfy the “needy” and “impatient” expectations of millennials. He provided tips on how to provide content for this generation that will make them “feel special” and “make life easier for them.” Read the liveblog of Illyes’ keynote for dos and don’ts of creating content that builds loyalty with millennials.

Along with Google Now, Illyes talked about other Google products that reveal where search is today and where it’s headed.

Illyes talked about voice search and how it interprets a string of questions in relation to the previous questions (Where is the Eiffel Tower? How tall is it?). For SEOs, voice search optimization is a technical and emerging practice that includes direct answer optimization and developing a depth of content expertise.

Illyes explained that search is now location-aware. He gives an example of this: you can stand in front of a restaurant and find out the hours by asking your phone “What are the opening times?” Keep in mind, you don’t have to tell your phone which restaurant you’re talking about because it will know by your location. Ensure that Google has clear and positive signals about your brick-and-mortar business location with proactive local SEO.

Illyes also pointed the audience to Accelerated Mobile Pages (AMP). The Accelerated Mobile Pages Project is a brand new, open source framework for building high performance, lightweight websites that work seamlessly across mobile devices. AMP is intended to deliver a faster, user-friendly experience for the mobile web. Investigate whether AMP standards should be in your next development cycle.

Illyes and Krum both talked about app indexing, and how Google is working to seamlessly integrate and open app results. For a starting place on mobile app indexing and deep linking see our SMX East report on “Why App Deep Linking Is the Next Big Thing.”

The New SEO

The SEO industry has always had a front row seat to the technological evolution. We’re at the forefront of machine learning algorithm factors and Internet connected devices serving you content before you ask for it. Ready to adapt?

October 22, 2015

Millennials in the Spotlight: The Market Segment Everyone’s Clamoring to Crack

Millennials in the Spotlight: The Market Segment Everyone’s Clamoring to Crack was originally published on BruceClay.com, home of expert search engine optimization tips.

From campaign headquarters to digital marketing agencies, strategists everywhere are thinking about how to entice America’s most talked-about and statistically impactful group: millennials. Bing’s former search industry spokesperson Duane Forrester and Google Webmaster Trends Analyst Gary Illyes are no exception – millennials are on their minds, too, and both of the search leaders’ recent keynote sessions at Pubcon centered on how search engines are moving to serve the rising millennial class.

These are the stats and facts you may have heard, the ones industry watchers trade back and forth like the older millennials once traded Pogs:

Millennials (now aged 20-34) are nothing if not tech-savvy. They are described as everything from needy and egocentric to highly educated and highly connected.

They value experiences over products and services.

They’re passionate about causes and 40 percent of them believe they are capable of global impact.

Their attention spans are microscopic, and they’re shrinking by the second — literally. In 2000, the average attention span was ten seconds. In 2014 it was eight seconds. It has now whittled down to 2.8 seconds.

In “Millennial Expectations and Search Behavior Trends,” Illyes talks about how digital marketers can tap into that 2.8-second attention span. He also shares insights into how Google is adapting its own features to better serve millennial users.

As for millennials’ gravitation toward experiences, Forrester has a piece of advice: provide them. Turn your products into experiences. Just this week, Macy’s CEO Terry Lundgren shared the same advice. It’s not about changing your product, but changing the way you market it. Shopping in a store can be turned into an experience. That’s why Macy’s has invested $400 million into a complete renovation of the first floor of its Herald Square store in New York, which now features things like virtual mannequins.

In the same way that physical shopping can become an experience, so can shopping and interacting with businesses online. In his keynote, “The Future of Search,” Forrester dives into how retailers can use technology and the Internet to more strongly appeal to millennials. He speaks about the Starbucks app specifically, noting how its interactive functionality is the kind of thing that attracts young adults. Eleven percent of in-store transactions at U.S. Starbucks occur with a mobile device. According to Forrester, millennials love having the ability to:

Pay and tip via mobile (or wearable)

Shake to pay

Earn rewards and status

Locate stores

Purchase eGift cards

For insight you can apply immediately, consider this: how do you approach graphics and web design when thinking about millennials? In “Designing Ad Images for Non-Designers,” Dustin Stout had straightforward advice: implement bright colors and use pictures of millennials (people gravitate towards people that look like themselves).

Another thought: don’t ever call millennials “millennials.” There’s no quicker way to alienate your audience than by making it obvious you’re marketing to them. Take a cue from the style guide of BuzzFeed, a social media mecca for the target market in question:

Generally avoid using this term when possible; use “twenty-somethings,” “twenty- and thirty-somethings,” or “young adults,” depending on what’s most appropriate/accurate.

How are you thinking about and marketing to millennials? Share your strategies in the comments!

October 14, 2015

SEO & SEM in the Competitive Automotive Space – Pubcon Liveblog

SEO & SEM in the Competitive Automotive Space – Pubcon Liveblog was originally published on BruceClay.com, home of expert search engine optimization tips.

Greg Gifford and Ira Kates at Pubcon

The automotive industry is incredibly competitive when it comes to search engine marketing. To rise to the competition requires in-depth local SEO knowledge and PPC know-how. This session offers strategies for search marketing, both paid and organic, that all marketers can use. Our speakers are Ira Kates, who will speak to paid search, and Greg Gifford, who addresses organic local SEO.

Speakers:

Ira Kates, Senior Digital Business Strategist, 360i Canada (@IraKates)

Greg Gifford, Director of Search and Social, DealerOn (@GregGifford)

Ira Kates: Automotive Search: Lessons from the Kitchen

Ira cooked for 10 years and he’s noticed that lessons from chefs in the kitchen have application to search. Today he’ll use lessons from chefs he admires to illustrate ways to compete in PPC. It’s a crazy competitive time to be in the automotive PPC industry, Kates explains.

“Perfection is lots of little things done well.” –Fernand Point

Is the structure of your account contributing to the theme you want to tell your prospective car buyer?

The lifeblood of paid search is match type. You don’t want to compete against yourself. Funnel everything toward the best-performing keyword.

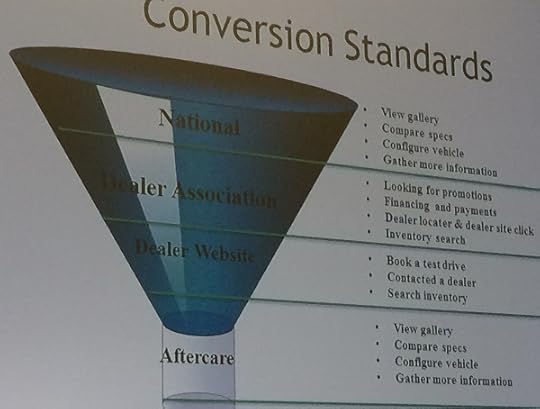

If you break this funnel down, there are funnels within funnels.

At the national level, you’re looking at people viewing galleries, comparing specs, and taking actions that show they’re very interested in what the car offers.

For dealer associations, ask what the organization’s purpose is in supporting the funnel.

At the dealer level, he explains to customers that “these are the actions” we’re looking to get (book a test drive, contact dealer, search inventory).

“Aftercare” is a new area in the funnel that Ira’s added to this visual aid since last year’s presentations. Why not focus on this, especially with remarketing available?

If you’re acquiring a new PPC account, take the campaign down for a couple weeks and scrub the account to apply the fundamentals. Implementing a simple, revised negative keyword list, for example, can result in lower CPA and CPC and you can take that new funding to reinvest in winners.
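As a rough illustration of what a negative keyword scrub does, the Python sketch below filters a list of search terms against a negative list. The search terms, the negative keywords, and the helper name `is_blocked` are all hypothetical. The matching rule (every word of the negative must appear in the query) is a simplified stand-in, not how AdWords itself evaluates negatives.

```python
def is_blocked(search_term: str, negatives: list[str]) -> bool:
    """Return True if every word of any negative keyword appears in the search term."""
    term_words = set(search_term.lower().split())
    return any(set(neg.lower().split()) <= term_words for neg in negatives)


# Hypothetical negative list for a dealership account.
negatives = ["recall", "toy", "owners manual"]

# Hypothetical search terms, as might come from a search terms report.
search_terms = [
    "honda civic lease deals",
    "honda civic recall notice",
    "honda civic toy car",
    "civic owners manual pdf",
    "used honda civic near me",
]

# Keep only the queries that no negative keyword blocks.
kept = [term for term in search_terms if not is_blocked(term, negatives)]
print(kept)
```

Queries that survive the filter are the ones the budget would still be spent on; the blocked ones are the wasted clicks a revised negative list is meant to remove.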

Ad Copy

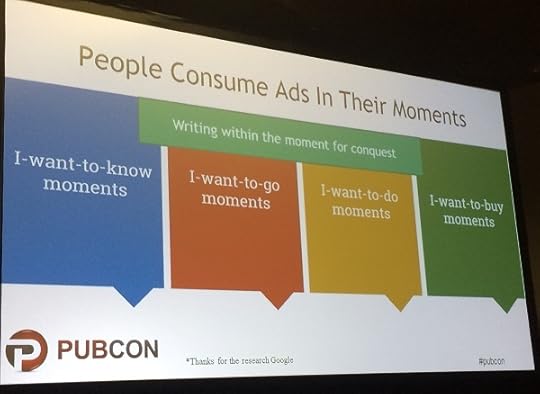

A meal tells a story of how it came to your plate. You eat with your eyes as well as your other senses. Your ad needs to tell the story of why someone needs to do something.

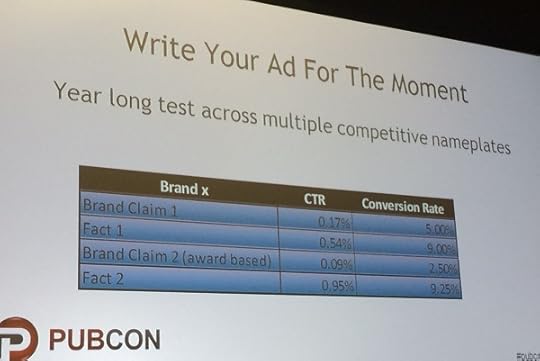

Write your ad for the moment. A year-long test across multiple competitive nameplates shows the following (and the author’s take is that facts perform better than brand claims):

You can see a huge gap of almost doubled conversion rates in the results. Use the geo-gating built into AdWords and focus on people within a mile or two of your lot. That’s how you can test claims like this.

Creative ads on competitor brand queries:

“Don’t Go Rogue – Escape the Mundane” – a search ad on the query “nissan rogue”

“Did You Mean Ford Focus? – Phew, Dodged That Bullet” – a search ad on the query “hyundai elantra”

These are fun headlines. For a national brand to do this adds character to the SERP. Don’t be afraid to test.

Testing and Learning

Start small and prove something with a little test on one ad group.

“Good food doesn’t come from following a recipe to the letter. It’s about having the confidence to experiment.” –Marco Pierre White

This is especially true at the dealership level because 90% of competitors aren’t testing and experimenting; they aren’t rotating their ad creative out enough.

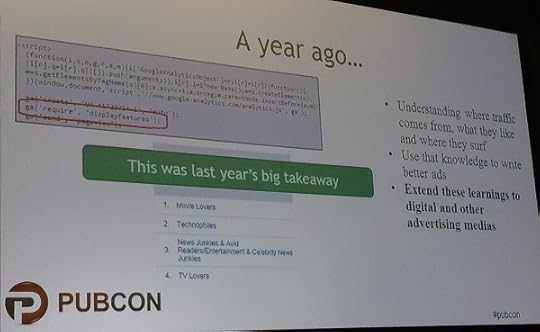

A year ago, his big takeaway was:

Pay attention to things like “ga('require', 'displayfeatures')”. Build up audiences and bucket them. Now you can test your ad copy and speak to these people again.

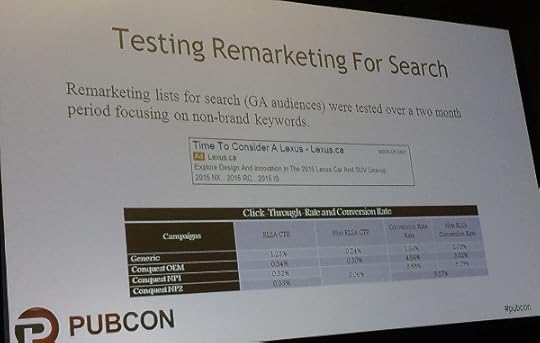

Test remarketing audiences for search. He tested Remarketing Audiences in Google Analytics over a two-month period focusing on non-brand keywords.

The conversions they’re driving are dealership leads.

Favorite research method: Use SEMrush to find competitors’ top SEO keywords that aren’t super competitive and focus limited conquest dollars on those keywords.

Jump on Google Customer Match – announced 2 weeks ago – to be ahead of the curve. You can use this to stop your customers from going to a third-tier oil change business. The most powerful use case for Google Customer Match is when you know exactly which customer is ending their lease in 3 months. Why not talk to them when they’re starting their research on search?

Finally, here are flags to watch out for if someone comes to your dealership and says they want your digital business. They should be able to speak to all these things.

Flags to watch out for:

No examples of work

Price slashing with no offer of how

No clear vision of what your success is

Not asking questions about the business

Only PPC concern is bulking up accounts

Can’t define CRO, new extensions or how they measure success

Require you to purchase a website or any other service just for them to run PPC

Greg Gifford: SEO Lessons Learned from the Competitive Automotive Vertical

Or: You Suck at Local SEO

Get his full presentation here: http://bit.ly/you-suck-at-local


These local SEO tips will help your dealerships. Check out the weekly Wednesday workshop video on the DealerOn blog. Local SEO is a tough puzzle to crack. You can’t skate by; if you do, you’ll blend into the background and be invisible to customers. He’s going to show us the code behind the Google algo.

The automotive niche is crazy competitive, second only to maybe payday loans, bankruptcy attorneys and divorce attorneys. Every major market has 300-600 dealerships (used and new) competing for top SERP spots. No one understands that local SEO is different from web search SEO.

A dealer called up complaining he wasn’t showing up in Google, but he really meant Google Maps. He didn’t understand how Google works. Every dealer thinks they should be #1 and that SEO is “instant on.” Everyone thinks they’re so unique:

“We treat our customers like family.”

“We’re family owned.”

“We have a state-of-the-art showroom.”

“No haggle pricing/up front pricing.”

Every dealership says this. Automotive SEO vendors are just as bad. Things shady SEO vendors do:

Junk duplicated content (same content for every trim with year/make/model replaced)

Outdated SEO strategies (keyword stuffing meta description, multiple city names stuffed into a title tag, hidden text in a “read more” link)

Go 6 months without touching a site

X pages of content per month without a strategy

Local SEO is an easy win because no one is doing it. Do the extra stuff and blow past everyone not doing it. It changes all the time so it’s important to keep up to date and update your strategy.

Recent big updates important for local SEO:

Pigeon update (July 2014)

GMB Quality Guidelines update (December 2014)

Most importantly don’t include a descriptor in your GMB business name

Choose only the most specific categories (he suspects weight/value given to category choices)

Different departments with different pages must choose unique categories

New three-result local pack (August 2015) (read the BCI blog report for the latest on this change)

Local Search Ranking Factors Study 2015 (released by Moz two weeks ago)

The rest of the presentation covers the findings of the newly released Local Search Ranking Factors Study (LSRF). What’s changed since Pubcon 2014? See the image to the right.

Big drop in GMB pages

Drop in link signals

Drop in on-page

Increase in behavior and mobile

On-Site Signals – 23% of the LSRF

The most important question to ask is why do you deserve to rank? Content matters but don’t take that the wrong way. It’s quality of content and not quantity of content. Don’t push out so much junk.

This stuff sucks:

No home page content (like all jpeg images)

Only a few sentences on a page

Default page text – this is a pervasive problem in auto right now because an OEM like Audi requires all dealers to use a certain platform and the dealer never customizes the content so their site is made up of default content

Blatant keyword spamming

Awful title tags – this is the most important SEO element

Stop trying to fool the nerds at Google. Write your content for people, not search engines. For local search, when you put anything on your site, do it to make your website better for your customers. Be unique and useful.

PRO TIP: read your content out loud to someone else. This is how you can hear if the content is helpful.

Optimize content for local:

Include City, ST in:

title tag – don’t put company name first (you don’t need to optimize for it)

alt text

body content

URLs

Meta description

Embedded GMB map (from GMB page, not from Maps)

Consistent NAP

You must have a blog (not a luxury)

You need to post things people will actually want to read. Write posts about local topics. http://bit.ly/local-content-ideas

Want to rank in nearby cities? Use local content silos. http://bit.ly/local-content-silos
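To make the checklist above concrete, here is a minimal Python sketch that checks whether a page’s title tag, meta description and image alt text mention the target “City, ST”. The sample page, the dealership name and the helper names (`LocalSignalParser`, `check_local_signals`) are hypothetical. It uses only Python’s standard `html.parser` module and does not check body content, URLs or the embedded map.

```python
from html.parser import HTMLParser


class LocalSignalParser(HTMLParser):
    """Collect the title, meta description, and image alt text from a page."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = ""
        self.meta_description = ""
        self.alt_texts = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self.in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag == "img":
            self.alt_texts.append(attrs.get("alt") or "")

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def check_local_signals(html: str, city_st: str) -> dict:
    """Report which on-page elements contain the city/state string (case-insensitive)."""
    parser = LocalSignalParser()
    parser.feed(html)
    needle = city_st.lower()
    return {
        "title": needle in parser.title.lower(),
        "meta_description": needle in parser.meta_description.lower(),
        "alt_text": any(needle in alt.lower() for alt in parser.alt_texts),
    }


# Hypothetical dealership page used only for illustration.
page = """
<html><head>
<title>Used Cars in Plano, TX | Example Motors</title>
<meta name="description" content="Shop used cars at Example Motors.">
</head><body>
<img src="lot.jpg" alt="Example Motors lot in Plano, TX">
</body></html>
"""

report = check_local_signals(page, "Plano, TX")
print(report)
```

In this sample the meta description is the element missing the city and state, so it is the one flagged `False`.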

Link Signals – 20% of the LSRF

Thanks to Penguin, links are no longer simply a numbers game

Get local links (example: small church websites) to local content pages (not all pointed to home page)

Take advantage of sponsorships, events, things you’re already doing in the community

Pay attention to internal linking!

GMB – 14.7% of the LSRF

The most important thing you can do is claim your GMB. If you’re having trouble getting the postcard or it’s claimed by an ex-employee’s personal account, use GMB phone support. http://bit.ly/google-phone-support

Choose the right categories

Upload custom user and cover image

Citation Signals

Citations are mentions of your NAP (name, address, phone number) on other websites

Most dealers have a ton of citation problems. Your citations have to be 100% consistent.

Do a quick check of major citations with Moz Local

Use Whitespark to check all your citations
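A citation consistency check can be sketched in a few lines of Python. Everything below is an assumption for illustration: the business details, the directory names and the `normalize_nap` helper are made up, and this is not how Moz Local or Whitespark work internally. The sketch ignores only case and punctuation, so a variation such as “St” versus “Street” is still flagged as a mismatch.

```python
import re


def normalize_nap(name: str, address: str, phone: str) -> tuple:
    """Reduce a NAP record to a comparable form: lowercase, no punctuation, 10-digit phone."""
    def clean(text: str) -> str:
        return " ".join(re.sub(r"[^a-z0-9 ]", " ", text.lower()).split())

    digits = re.sub(r"\D", "", phone)
    return clean(name), clean(address), digits[-10:]


# Hypothetical canonical listing for the dealership.
canonical = normalize_nap("Example Motors", "123 Main St., Plano, TX 75024", "(972) 555-0100")

# Hypothetical citations found on other websites.
citations = {
    "directory-a": ("Example Motors", "123 Main St, Plano, TX 75024", "972-555-0100"),
    "directory-b": ("Example Motors Inc", "123 Main Street, Plano, TX 75024", "972.555.0100"),
    "directory-c": ("Example Motors", "123 Main St., Plano, TX 75024", "+1 972 555 0199"),
}

# Flag every citation whose normalized NAP differs from the canonical one.
mismatched = [site for site, nap in citations.items() if normalize_nap(*nap) != canonical]
print(mismatched)
```

Here the second directory differs in name and street spelling and the third in phone number, so both would need to be corrected to match the canonical listing.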

Review Signals – 8.4%

88% of consumers trust online reviews as much as reviews from friends or family.

4 out of 5 people will decide not to do business with you if you have bad reviews. You can’t fake good reviews. You can’t fake caring about your customers. He turns down business from shady car dealerships. You have to be legit before you do SEO. Don’t ignore Yelp; it powers the stars on Apple Maps.

Read up on review strategy: http://bit.ly/pp-review-strategy

Make sure you have more reviews than your competitors. You need five before you get the aggregate star ratings. Get more reviews but not too many more; customers will think you’re faking results.

Bonus tip: Don’t tether Facebook and Twitter. Use any of the many tools that let you post to both at the same time without the post looking out of place in each network’s native environment.

October 12, 2015

Social and Mobile PR Secrets – Pubcon Liveblog

Social and Mobile PR Secrets – Pubcon Liveblog was originally published on BruceClay.com, home of expert search engine optimization tips.

Do you want press for your business? Yes please! Here’s how to use social media in traditional and new ways to reach and influence the media. New opportunities to be covered by today’s speakers include making sure your content is mobile friendly and targeting the media through paid Facebook promotions.

Moderator: Melanie Mitchell (@melaniemitchell)

Speakers:

Lisa Buyer, President/CEO, The Buyer Group (@lisabuyer)

Marty Weintraub, Founder, aimClear (@aimclear)

Murray Newlands, Founder, Due (@murraynewlands)

Murray Newlands: How to Meet the Press

How to get press? That’s the big question for all of us. As a writer for Forbes, he gets emails and messages asking him to write about companies. He asks: why should he? You have to be amazing or tell a great story. Ask: why am I creating the content? Ask: who am I writing for? Ask: why do I stand out? Ask: what does my audience care about?

Generally, people care because they have a problem that needs to be solved. Go back to the basics. What does the company do? What problem does it solve? Who are you solving it for? How can you present the context to the audience and illustrate the solution?

When you answer those questions, look at the publications you’re targeting and research each one to see what its most popular content is. Fit your story into that format. When they write about you, promote the heck out of it. Writers are trying to get their content to have tons of views and shares. If someone takes the time to write about your company, take that third-party endorsement and share it with your current audience, and use it to grow a new one. Use Outbrain to promote it. Make it a promoted tweet.

How do you meet press and get started with outreach?

Go to events, especially targeted events, and meet press in person. Find a local media group meetup and go to it. Those press people also probably have social profiles you can find.