Gennaro Cuofano's Blog

August 31, 2025

Interoperability Tax: The $500B Hidden Cost Killing Digital Transformation

The interoperability tax represents the compounding cost of making systems work together—a hidden drain that consumes up to 40% of IT budgets and kills more digital transformations than any technology failure. While executives celebrate new system deployments, integration costs silently multiply. Each API adds latency. Every middleware layer reduces efficiency. Security requirements compound complexity. What starts as simple connectivity becomes an architectural nightmare costing billions.

The evidence surrounds us. Healthcare spends $30 billion annually just making systems talk. Financial services allocate 35% of IT budgets to integration. Manufacturing loses 20% productivity to data silos. Enterprise companies average 1,000+ applications that barely communicate. The promise of digital transformation crashes against the reality of interoperability tax.

[Image: Interoperability Tax: The Hidden Cost of System Integration]

The Integration Illusion

Every system promises easy integration through “open APIs” and “standard protocols”—lies that cost enterprises millions. Sales demos show seamless data flows. Marketing materials tout “plug-and-play” connectivity. Reality delivers authentication nightmares, version conflicts, and performance degradation that compounds with each connection.

The tax begins innocently. Connect CRM to email—lose 50ms per transaction. Add marketing automation—another 100ms. Integrate analytics—200ms more. Soon, simple operations take seconds instead of milliseconds. Users complain about sluggish performance while IT scrambles to optimize what can’t be optimized—the fundamental overhead of translation between systems.
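The figures above add up because each hop is assumed to be synchronous: the caller waits for one integration before moving to the next. Here is a minimal Python sketch of that additive model. It uses the paragraph's 50/100/200 ms overheads and a hypothetical 5 ms native operation time.

```python
from itertools import accumulate

# Hypothetical native (single-system) operation time, in milliseconds.
BASE_MS = 5.0

# Per-integration overheads from the example above, in the order they were added.
OVERHEADS_MS = {
    "email (from CRM)": 50.0,
    "marketing automation": 100.0,
    "analytics": 200.0,
}


def latency_after_each_integration(base_ms: float, overheads_ms: list[float]) -> list[float]:
    """End-to-end latency after each integration is added, assuming sequential, blocking hops."""
    return [base_ms + total for total in accumulate(overheads_ms)]


steps = latency_after_each_integration(BASE_MS, list(OVERHEADS_MS.values()))
for name, ms in zip(OVERHEADS_MS, steps):
    print(f"after adding {name}: {ms:.0f} ms")
```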

Security multiplies the burden exponentially. Each integration point requires authentication, encryption, and audit trails. A simple data query traverses multiple security checkpoints, each adding latency and complexity. A lookup that takes nanoseconds inside a monolithic system can take milliseconds, or even seconds, across integrated platforms.

Complexity compounds faster than linear addition. With point-to-point connections, n systems can need up to n(n-1)/2 integration points: two systems have one, three need three, ten require forty-five, and twenty demand 190. Each connection needs maintenance, monitoring, and management. The web of dependencies becomes unmaintainable.
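The counts above come from the formula for unordered pairs, n(n-1)/2. That formula assumes every system may need a direct link to every other system. A short Python check of the paragraph's numbers:

```python
from math import comb


def point_to_point_links(n_systems: int) -> int:
    """Maximum pairwise integrations among n systems: n choose 2 = n(n-1)/2."""
    if n_systems < 0:
        raise ValueError("n_systems must be non-negative")
    return n_systems * (n_systems - 1) // 2


for n in (2, 3, 10, 20):
    # math.comb gives the same count and serves as a cross-check.
    assert point_to_point_links(n) == comb(n, 2)
    print(f"{n} systems -> {point_to_point_links(n)} potential integrations")

# Adding the n-th system can add up to n - 1 new links.
print(point_to_point_links(21) - point_to_point_links(20))  # 20
```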

The Real Cost Calculation

Traditional TCO models completely miss interoperability costs by focusing on licensing and implementation. The true tax includes performance degradation, increased complexity, security overhead, maintenance burden, and opportunity costs from things you can’t build because integration consumes all resources.

Performance tax strikes first and hardest. Native operations executing in microseconds balloon to seconds across systems. A financial calculation requiring 100 data points from 10 systems takes 10x longer than the same calculation within one system. Multiply by millions of daily operations—the tax becomes crushing.
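One way to model the performance tax is to separate two questions: how many systems a calculation touches, and how those systems are called. The sketch below assumes a hypothetical 20 ms of overhead per system for a calculation that gathers data from 10 systems. It then multiplies the result by a million daily operations.

```python
def fanout_latency_ms(per_system_ms: list[float], parallel: bool) -> float:
    """Latency to gather data from several systems: sum if called one by one, max if called concurrently."""
    if not per_system_ms:
        return 0.0
    return max(per_system_ms) if parallel else sum(per_system_ms)


def daily_overhead_hours(extra_ms_per_op: float, ops_per_day: int) -> float:
    """Cumulative added wait time per day, in hours."""
    return extra_ms_per_op * ops_per_day / 1000 / 3600


# Hypothetical: 10 systems, each adding 20 ms of network and translation overhead.
calls = [20.0] * 10
sequential = fanout_latency_ms(calls, parallel=False)  # 200 ms
concurrent = fanout_latency_ms(calls, parallel=True)   # 20 ms
print(sequential, concurrent)
print(f"{daily_overhead_hours(sequential, 1_000_000):.1f} hours/day at 1M operations")
```

Concurrent fan-out reduces the wait, but it does not remove the tax. The slowest system still sets the floor, and every call still needs authentication and error handling.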

Complexity tax appears in debugging nightmares. When integrated systems fail, finding root causes requires archaeology across multiple platforms, each with different logging formats, time zones, and error codes. A simple bug that would take minutes to fix in isolation requires days of cross-system investigation.

Security tax never stops growing. Each system connection creates new attack surfaces. Authentication tokens multiply. Encryption overhead accumulates. Compliance requirements compound. Security teams spend more time managing integrations than protecting core systems.

Enterprise Architecture Archaeology

Modern enterprises resemble archaeological sites with technology layers from different eras forced to coexist. Mainframes from the 1980s connect to client-server systems from the 1990s integrated with web applications from the 2000s talking to cloud services from the 2010s interfacing with AI systems from the 2020s. Each layer speaks different languages requiring constant translation.

Legacy systems impose the highest tax. That “stable” mainframe running core banking requires COBOL-to-REST translation. The ERP system speaks only SOAP. The new microservices expect GraphQL. Translation layers pile upon translation layers, each adding latency and potential failure points.

Vendor lock-in amplifies the tax through proprietary protocols. Oracle databases require Oracle connectors. SAP systems prefer SAP integration. Salesforce pushes Salesforce APIs. Each vendor’s “open” standards work best within their ecosystem, forcing enterprises to pay integration tax at every boundary.

Technical debt compounds through integration shortcuts. Quick fixes become permanent. Temporary bridges calcify into critical infrastructure. Point-to-point connections proliferate because proper enterprise service buses seem too expensive—until the spaghetti architecture costs more than proper design would have.

The Microservices Multiplication

Microservices promised to solve monolithic problems but multiplied interoperability tax exponentially. Instead of one integration challenge, enterprises now face hundreds. Each microservice requires service discovery, load balancing, circuit breaking, and distributed tracing. The operational overhead explodes.

Network latency becomes the dominant performance factor. A monolithic application processes requests in-memory at nanosecond speed. The same logic distributed across microservices requires network calls measured in milliseconds—a million-fold increase in latency. No amount of optimization overcomes physics.

Distributed transactions create consistency nightmares. What was a simple database transaction becomes a complex saga pattern across multiple services. Failure scenarios multiply. Rollback complexity explodes. Eventually consistent systems create eventually consistent problems.
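A saga replaces one atomic transaction with a sequence of local steps. Each step is paired with a compensating action that undoes it. Here is a minimal in-process Python sketch using hypothetical inventory, payment, and shipping steps. A production saga would also persist its progress and retry failed compensations, and this sketch does neither.

```python
from collections.abc import Callable
from dataclasses import dataclass


@dataclass(frozen=True)
class SagaStep:
    name: str
    action: Callable[[], None]
    compensate: Callable[[], None]  # semantic undo for a step that already committed


def run_saga(steps: list[SagaStep]) -> bool:
    """Run steps in order. If one fails, undo the completed steps in reverse order."""
    completed: list[SagaStep] = []
    for step in steps:
        try:
            step.action()
        except Exception:
            # The failed step never committed, so only earlier steps are compensated.
            for done in reversed(completed):
                done.compensate()
            return False
        completed.append(step)
    return True


# Hypothetical order workflow spanning three services.
log: list[str] = []


def charge_payment() -> None:
    raise RuntimeError("payment service unavailable")


order_saga = [
    SagaStep("reserve_inventory", lambda: log.append("reserved"), lambda: log.append("released")),
    SagaStep("charge_payment", charge_payment, lambda: log.append("refunded")),
    SagaStep("ship_order", lambda: log.append("shipped"), lambda: log.append("recalled")),
]

print(run_saga(order_saga), log)  # False ['reserved', 'released']
```

Even this toy version shows the failure-path logic that a single database rollback used to handle for free.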

Observability costs skyrocket with distribution. Monitoring one application requires one dashboard. Monitoring 100 microservices requires distributed tracing, service mesh visibility, log aggregation, and correlation engines. The tools to manage complexity often add more complexity than they remove.
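At its core, distributed tracing means one identifier follows a request across every service, so logs can be correlated afterward. Here is a minimal Python sketch of that propagation. It assumes a hypothetical four-service call graph and a made-up header name. Real deployments typically use a tracing library and the W3C Trace Context headers instead.

```python
import uuid

# Hypothetical call graph: each service and the services it calls downstream.
CALL_GRAPH: dict[str, list[str]] = {
    "gateway": ["orders"],
    "orders": ["inventory", "payments"],
    "inventory": [],
    "payments": [],
}

# Hypothetical header name; the W3C Trace Context standard uses "traceparent".
TRACE_HEADER = "X-Trace-Id"


def handle(service: str, headers: dict[str, str], spans: list[tuple[str, str]]) -> str:
    """Record a span for this service and propagate the trace id to every downstream call."""
    # Reuse the caller's trace id, or start a new trace at the edge.
    trace_id = headers.get(TRACE_HEADER) or uuid.uuid4().hex
    spans.append((trace_id, service))
    for downstream in CALL_GRAPH[service]:
        handle(downstream, {TRACE_HEADER: trace_id}, spans)
    return trace_id


spans: list[tuple[str, str]] = []
trace_id = handle("gateway", {}, spans)
# Every span shares one id, so logs from four services can be joined into one request.
print([service for tid, service in spans if tid == trace_id])
```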

The API Economy Paradox

APIs promise connectivity but deliver complexity at scale. Every API requires versioning strategies, deprecation policies, rate limiting, authentication, documentation, and support. The “simple” REST endpoint becomes a product requiring product management, generating more overhead than the functionality it exposes.

Version hell multiplies across integrations. System A uses API v1. System B needs API v2. System C requires features from both. Maintaining backward compatibility while adding features creates FrankenAPIs serving no one well. Breaking changes ripple through integration chains causing cascade failures.
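A common way to keep old clients working is to implement the old version as an adapter over the new one, so only one code path touches storage. Here is a Python sketch with a hypothetical customer endpoint, where v2 split a single `name` field into two fields.

```python
from collections.abc import Callable


def get_customer_v2(customer_id: int) -> dict[str, object]:
    """Current contract. The record is hard-coded here in place of a real data store."""
    return {"id": customer_id, "first_name": "Ada", "last_name": "Lovelace"}


def get_customer_v1(customer_id: int) -> dict[str, object]:
    """Legacy contract with a single 'name' field, served by adapting the v2 response."""
    customer = get_customer_v2(customer_id)
    return {"id": customer["id"], "name": f"{customer['first_name']} {customer['last_name']}"}


HANDLERS: dict[str, Callable[[int], dict[str, object]]] = {
    "v1": get_customer_v1,
    "v2": get_customer_v2,
}


def route(version: str, customer_id: int) -> dict[str, object]:
    """Dispatch a request to the handler for the requested API version."""
    try:
        handler = HANDLERS[version]
    except KeyError:
        raise ValueError(f"unsupported API version: {version}") from None
    return handler(customer_id)


print(route("v1", 7))  # {'id': 7, 'name': 'Ada Lovelace'}
print(route("v2", 7))
```

Every retained version is another adapter that has to be retested whenever the newest contract changes. That is the backward-compatibility cost the paragraph describes.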

Rate limiting creates artificial scarcity from abundance. Cloud APIs throttle requests to prevent abuse, forcing enterprises to implement caching, queuing, and retry logic. What should be simple becomes complex. What should be fast becomes slow. What should be reliable becomes fragile.

API governance emerges as a full-time job. Who approves new endpoints? How do we deprecate old ones? What’s our versioning strategy? How do we handle breaking changes? Companies need API product managers, creating overhead that didn’t exist in monolithic architectures.

Data Integration Disasters

Data integration represents the deepest circle of interoperability hell. Every system stores data differently. Field names don’t match. Data types conflict. Business logic embedded in storage makes transformation mandatory. What should be simple copying becomes complex ETL pipelines.
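Much of that ETL work comes down to renaming fields and converting types between schemas. Here is a minimal Python sketch that maps a hypothetical CRM export onto a billing system's schema.

```python
from collections.abc import Callable
from datetime import date
from decimal import Decimal
from typing import Any

# Hypothetical mapping: target field -> (source field, converter).
FIELD_MAP: dict[str, tuple[str, Callable[[str], Any]]] = {
    "customer_id": ("CustID", int),
    "email": ("EmailAddress", str.lower),
    "signup_date": ("Created", date.fromisoformat),
    "annual_value": ("ACV_USD", Decimal),
}


def transform(record: dict[str, str]) -> dict[str, Any]:
    """Rename and retype one source record. A missing source field raises KeyError."""
    return {target: convert(record[source]) for target, (source, convert) in FIELD_MAP.items()}


crm_row = {"CustID": "42", "EmailAddress": "Ada@Example.com", "Created": "2024-01-15", "ACV_USD": "1200.50"}
print(transform(crm_row))
```

Every new pair of systems needs its own map like this, and a schema change on either side means revisiting the map.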

Schema evolution creates perpetual migration. Adding a field requires updating every integration. Changing data types breaks downstream systems. Renaming for clarity causes production outages. The cost of change becomes so high that broken schemas persist forever.
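One common mitigation is a tolerant reader. Consumers ignore fields they do not recognize and supply defaults for fields that older producers do not send. Here is a minimal Python sketch with a hypothetical `Customer` record that gained a `tier` field in a later schema version. The pattern absorbs added fields, but renames and type changes still break it.

```python
from dataclasses import dataclass, fields
from typing import Any


@dataclass(frozen=True)
class Customer:
    customer_id: int
    email: str
    tier: str = "standard"  # added in a later schema version; the default keeps old payloads valid


def parse_customer(payload: dict[str, Any]) -> Customer:
    """Build a Customer, ignoring unknown fields so producers can add fields without breaking us."""
    known = {f.name for f in fields(Customer)}
    return Customer(**{key: value for key, value in payload.items() if key in known})


old = parse_customer({"customer_id": 1, "email": "a@example.com"})
new = parse_customer({"customer_id": 2, "email": "b@example.com", "tier": "gold", "region": "EU"})
print(old.tier, new.tier)  # standard gold
```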

Real-time synchronization promises instant consistency but delivers eventual chaos. Change data capture, event streaming, and message queues create complex choreography where simple database triggers once sufficed. Debugging distributed data flows requires PhD-level expertise.

Master data management becomes a career. Which system owns customer truth? How do we reconcile conflicts? What happens when systems disagree? Companies spend millions on MDM platforms that add another integration layer to solve integration problems.
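Reconciliation usually comes down to survivorship rules, which decide field by field whose value wins. Here is a minimal Python sketch. It assumes hypothetical CRM, billing, and support records and a made-up priority policy. When no rule applies, the most recently updated value wins.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class SourceRecord:
    system: str
    updated_at: datetime
    values: dict[str, str]


# Hypothetical survivorship policy: systems trusted for each field, in priority order.
FIELD_PRIORITY: dict[str, list[str]] = {
    "email": ["crm", "billing", "support"],
    "billing_address": ["billing", "crm"],
}


def golden_record(records: list[SourceRecord]) -> dict[str, str]:
    """Merge one customer's records from several systems into a single view."""
    merged: dict[str, str] = {}
    field_names = sorted({name for record in records for name in record.values})
    for name in field_names:
        holders = [r for r in records if name in r.values]
        rank = {system: i for i, system in enumerate(FIELD_PRIORITY.get(name, []))}
        trusted = [r for r in holders if r.system in rank]
        if trusted:
            winner = min(trusted, key=lambda r: rank[r.system])
        else:
            # No rule for this field (or no trusted system has it): newest value wins.
            winner = max(holders, key=lambda r: r.updated_at)
        merged[name] = winner.values[name]
    return merged


records = [
    SourceRecord("crm", datetime(2024, 3, 1), {"email": "ada@crm.example", "phone": "555-0100"}),
    SourceRecord("billing", datetime(2024, 5, 1), {"email": "ada@billing.example", "billing_address": "1 Main St"}),
    SourceRecord("support", datetime(2024, 6, 1), {"phone": "555-0199"}),
]
print(golden_record(records))
```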

The Cloud Native Trap

Cloud native architectures maximize interoperability tax through service proliferation. Kubernetes orchestrates containers talking to service meshes connected by API gateways authenticated by identity providers monitored by observability platforms. The stack to run “hello world” requires dozens of integrated systems.

Serverless functions fragment logic across providers. AWS Lambda doesn’t play nicely with Google Cloud Functions. Azure Functions speak different dialects. Edge functions add another layer. Vendor-specific features create lock-in disguised as innovation.

Multi-cloud strategies multiply complexity without delivering promised resilience. Different IAM systems. Incompatible services. Network complexity. Data egress fees. The dream of cloud portability crashes against the reality of cloud-specific implementations.

Container orchestration adds operational overhead. Kubernetes solves problems most companies don’t have while creating problems they can’t solve. The learning curve, operational complexity, and integration requirements often exceed the benefits for typical enterprise workloads.

Quantifying the Unquantifiable

The true cost of interoperability tax hides in places accounting can’t reach. Developer productivity lost to integration debugging. Innovation stifled by maintenance burden. Customer satisfaction eroded by system latency. Competitive advantage surrendered to complexity.

Time-to-market suffers most. Features that take days to build require months to integrate. Simple changes trigger complex regression testing across integrated systems. Agility disappears under integration burden. Startups disrupt while enterprises coordinate.

Talent waste reaches tragic proportions. Brilliant engineers spend careers building integration plumbing instead of innovative features. Architects design around integration constraints rather than business value. Product managers manage dependencies instead of delighting customers.

Opportunity costs compound invisibly. Projects canceled because integration looks too hard. Features abandoned due to system constraints. Innovations unexplored because resources are tied up maintaining existing integrations. The tax isn’t just what you pay—it’s what you can’t build.

Breaking the Tax Cycle

Escaping interoperability tax requires acknowledging that integration isn’t free and designing accordingly. Start by calculating true integration costs including performance, complexity, security, and maintenance. The numbers will shock. Use that shock to drive architectural changes.
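A first pass at that calculation can be as simple as recording an annual estimate for each cost category, per integration, and ranking the results. Here is a Python sketch. The integration names and dollar figures are hypothetical placeholders, not benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntegrationCost:
    """Estimated annual cost of one integration, split by the categories named above."""
    name: str
    performance: float  # added latency, compute, and bandwidth
    complexity: float   # debugging and cross-team coordination time
    security: float     # authentication, encryption, audit, and compliance work
    maintenance: float  # upgrades, monitoring, and on-call

    @property
    def total(self) -> float:
        return self.performance + self.complexity + self.security + self.maintenance


def audit(costs: list[IntegrationCost]) -> list[IntegrationCost]:
    """Rank integrations from most to least expensive."""
    return sorted(costs, key=lambda c: c.total, reverse=True)


inventory = [
    IntegrationCost("crm-to-email", 4_000, 6_000, 3_000, 5_000),
    IntegrationCost("erp-to-analytics", 12_000, 20_000, 8_000, 15_000),
    IntegrationCost("mainframe-to-rest", 9_000, 30_000, 10_000, 25_000),
]
for item in audit(inventory):
    print(f"{item.name}: ${item.total:,.0f}/year")
print(f"total: ${sum(c.total for c in inventory):,.0f}/year")
```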

Consolidation reduces tax more effectively than optimization. Ten well-integrated systems outperform fifty poorly connected ones. Fewer, more capable platforms beat many specialized tools. The efficiency gains from consolidation often exceed the feature losses.

Design for integration from the start. Build systems that speak common languages. Use standard data formats. Implement proper service boundaries. Invest in integration platforms that reduce point-to-point connections. Pay integration tax consciously rather than accidentally.
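The case for integration platforms rests on connection counts. With point-to-point links, every pair of systems may need its own connector. A hub, such as an integration platform or message bus, needs roughly one adapter per system. Here is a Python sketch of that comparison, assuming every system must exchange data with every other.

```python
def point_to_point(n: int) -> int:
    """Direct links needed for n systems to all talk to one another."""
    return n * (n - 1) // 2


def hub_and_spoke(n: int) -> int:
    """Adapters needed when each system connects once to a shared hub."""
    return n


for n in (5, 10, 20, 50):
    print(f"{n:>3} systems: {point_to_point(n):>5} point-to-point vs {hub_and_spoke(n):>3} hub adapters")
```

The hub itself becomes a critical dependency that must be operated and scaled. The saving is in connector count; the tax does not disappear.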

Question every integration’s value. Does this connection deliver more value than its tax? Can we achieve the goal without integration? Would manual processes cost less than automated integration? Sometimes spreadsheet uploads beat complex ETL pipelines.
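Those questions can be framed as a comparison of net annual value across three options: integrate, handle manually, or drop. Here is a minimal Python sketch. All inputs are hypothetical estimates the reader would supply, and it assumes a manual process delivers the same business value as the automated integration.

```python
def best_option(annual_value: float, integration_tax: float, manual_cost: float) -> str:
    """Return the option with the highest net annual value.

    annual_value: business value of the data flow, however it is delivered.
    integration_tax: annual cost of the automated integration.
    manual_cost: annual cost of a manual process (e.g. spreadsheet uploads).
    """
    net = {
        "integrate": annual_value - integration_tax,
        "manual": annual_value - manual_cost,
        "drop": 0.0,  # neither option pays for itself
    }
    return max(net, key=lambda option: net[option])


print(best_option(annual_value=50_000, integration_tax=80_000, manual_cost=12_000))    # manual
print(best_option(annual_value=200_000, integration_tax=40_000, manual_cost=150_000))  # integrate
print(best_option(annual_value=10_000, integration_tax=30_000, manual_cost=25_000))    # drop
```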

Future-Proofing Against Tax

Emerging architectures promise to reduce interoperability tax through new paradigms. Event-driven architectures decouple systems. Data mesh distributes ownership. Zero-trust networks eliminate perimeter complexity. Whether these solutions reduce the tax or create new taxes remains uncertain.

AI might finally crack the integration challenge. Models that understand multiple system languages. Automatic API translation. Intelligent data mapping. Self-healing integrations. The same technology creating new complexity might solve old complexity.

Standards evolution offers hope through convergence. GraphQL can consolidate many REST endpoints behind a single query interface. Protocol buffers offer a compact, schema-defined alternative to JSON. WebAssembly enables portable compute. As standards mature and consolidate, integration complexity might actually decrease.

Edge computing redistributes integration burden. Processing at the edge reduces cloud round trips. Local integration eliminates network latency. Distributed systems become truly distributed rather than centrally integrated.

The Integration Imperative

Interoperability tax is not optional in modern enterprises—but conscious management of it is. Every system will integrate with others. Every integration will impose costs. Success comes from minimizing tax through architectural decisions, not ignoring it through wishful thinking.

The companies that win will be those that treat integration as a first-class concern. They’ll measure interoperability tax. Design to minimize it. Invest in platforms that reduce it. Build cultures that acknowledge it. Integration excellence becomes competitive advantage.

Master interoperability tax to build systems that deliver value rather than complexity. Whether architecting new platforms or managing existing ones, understanding and minimizing integration overhead determines success more than any feature or function.

Start your tax reduction today. Audit your integrations. Calculate true costs. Eliminate unnecessary connections. Consolidate where possible. Design for simplicity. The interoperability tax you don’t pay funds the innovations that matter.

Master interoperability economics to build efficient, scalable systems. The Business Engineer provides frameworks for minimizing integration complexity while maximizing business value.

The post Interoperability Tax: The $500B Hidden Cost Killing Digital Transformation appeared first on FourWeekMBA.

Protocol Economics: The $2 Trillion Revolution in Value Creation Without Companies

Protocol economics represents the most radical shift in value creation since the joint-stock company—enabling networks to generate, distribute, and capture trillions in value without traditional corporate structures. While companies rely on legal entities, employment contracts, and centralized control, protocols use cryptographic guarantees, token incentives, and decentralized governance to coordinate global economic activity. Bitcoin proved a protocol could be worth $1.2 trillion without a CEO, board, or headquarters.

The numbers validate this new economic model. DeFi protocols process $150 billion in value with zero employees. Uniswap facilitates $1 trillion in annual trading volume through 3,000 lines of immutable code. Ethereum settles more value than PayPal while operating as a decentralized protocol. These aren’t companies disrupting industries—they’re protocols replacing entire financial systems.

[Image: Protocol Economics: Value Creation Through Decentralized Networks]

The Protocol Revolution

Protocols solve the fundamental paradox of digital networks—how to create value without central control. Traditional platforms extract value through monopoly positions. Facebook monetizes social graphs. Google taxes information flows. Amazon levies commerce fees. Users create value but platforms capture it. Protocols flip this model entirely.

Bitcoin demonstrated the breakthrough. A protocol that enables peer-to-peer value transfer without intermediaries. No company controls Bitcoin. No entity can shut it down. No shareholders extract profits. Yet it secures $1.2 trillion in value through pure economic incentives. Miners secure the network for rewards. Users pay fees for inclusion. Everyone benefits proportionally.

Ethereum expanded protocol economics beyond payments. Smart contracts enable programmable value flows. Any economic relationship encodable in code becomes protocolizable. Lending without banks. Trading without exchanges. Insurance without insurers. Each protocol coordinates billions in value through algorithmic rules rather than corporate hierarchies.

The implications stagger traditional economists. Protocols achieve coordination without management, scale without employees, and trust without regulation. They’re economic machines that run themselves, generating value 24/7/365 without human intervention once deployed.

Value Creation Mechanisms

Unlike equity in companies, protocol tokens align incentives across participants. Token holders aren’t passive shareholders but active network participants. Holding tokens means having skin in the game. Using tokens creates network effects. Staking tokens provides security. Each action strengthens the protocol while rewarding participants.

Network effects compound differently in protocols. Liquidity attracts traders. Traders generate fees. Fees attract liquidity providers. More liquidity enables larger trades. The cycle reinforces itself without central coordination. Uniswap’s $5 billion in liquidity emerged organically through incentive alignment, not corporate development.

Composability multiplies protocol value exponentially. Protocols integrate permissionlessly. Aave lending, Uniswap trading, and Yearn yield optimization plug into one another. Each protocol becomes a building block for others. Innovation compounds as developers combine protocols in ways original creators never imagined.

Value accrual mechanisms evolved beyond simple fee capture. Governance rights command premiums. Revenue sharing motivates holding. Staking rewards ensure security. Liquidity mining bootstraps networks. Protocols discovered numerous ways to capture value while maintaining decentralization.

The MEV Economy

Maximum Extractable Value (MEV) represents protocol economics’ hidden layer. Every transaction on a blockchain has an order. That order has value. Arbitrageurs compete to capture price differences. Liquidators race to claim collateral. Traders pay for priority. MEV extracts billions annually from transaction ordering alone.

Sophisticated actors built infrastructure to capture MEV. Flashbots created private mempools. Searchers run algorithms finding profitable opportunities. Builders construct optimal blocks. Validators auction blockspace. An entire economy emerged around transaction ordering rights.

Protocols increasingly internalize MEV rather than leak it. CowSwap batches trades to minimize MEV. Uniswap v4 enables custom pools capturing arbitrage. Protocols that control their MEV accrue more value than those that don’t. MEV becomes a core protocol economic consideration.

The MEV wars resemble high-frequency trading but with higher stakes. Milliseconds determine millions in profits. Better algorithms win. Faster infrastructure dominates. Yet unlike traditional HFT, MEV happens transparently on-chain where everyone sees the game being played.

Protocol Business Models

Fee switches emerged as the dominant protocol revenue model. Uniswap’s standard pools charge 0.3% per trade, paid to liquidity providers. Aave takes a spread on interest. GMX captures trading fees. These micro-fees aggregate to billions annually. Protocol tokens often govern these fee switches, creating direct value accrual.
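The 0.3% fee is applied inside a constant-product pricing rule (x * y = k). The Python sketch below mirrors the integer arithmetic of Uniswap v2's `getAmountOut`, generalized to a fee expressed in basis points. It also computes the 1/6 share of that fee that v2 assigns to the protocol when its fee switch is on. Treat it as an illustration, not a reference implementation.

```python
from fractions import Fraction

BPS = 10_000  # basis points per 100%


def get_amount_out(amount_in: int, reserve_in: int, reserve_out: int, fee_bps: int = 30) -> int:
    """Tokens received from an x * y = k pool after the fee, rounded down to whole base units."""
    if amount_in <= 0 or reserve_in <= 0 or reserve_out <= 0:
        raise ValueError("amount and reserves must be positive")
    amount_in_with_fee = amount_in * (BPS - fee_bps)
    numerator = amount_in_with_fee * reserve_out
    denominator = reserve_in * BPS + amount_in_with_fee
    return numerator // denominator


def fee_split(amount_in: int, fee_bps: int = 30, protocol_share: Fraction = Fraction(1, 6)) -> tuple[Fraction, Fraction]:
    """Split the trading fee into (to liquidity providers, to protocol).

    protocol_share=0 models a fee switch that is off; 1/6 is Uniswap v2's switchable share.
    """
    total_fee = Fraction(amount_in * fee_bps, BPS)
    to_protocol = total_fee * protocol_share
    return total_fee - to_protocol, to_protocol


print(get_amount_out(1_000, 1_000_000, 1_000_000))  # 996
print(fee_split(1_000_000))  # (Fraction(2500, 1), Fraction(500, 1))
print(fee_split(1_000_000, protocol_share=Fraction(0)))
```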

Protocol Owned Liquidity (POL) revolutionizes treasury management. Instead of holding dollars, protocols hold productive assets. Liquidity positions generate fees. Staked tokens earn rewards. Treasuries become profit centers rather than cost centers. OlympusDAO pioneered POL, inspiring hundreds of imitators.

Vote-escrowed tokenomics align long-term incentives. Curve’s veCRV model locks tokens for up to 4 years. Longer locks receive more voting power and fee share. This reduces selling pressure while rewarding committed participants. The model became crypto’s most forked mechanism.
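The lock mechanics fit in a few lines. Here is a simplified Python sketch of vote-escrow weight. Voting power equals the locked amount times the remaining lock time, divided by the 4-year maximum, so it decays linearly to zero at unlock. Curve's actual contract also rounds unlock times to whole weeks and tracks balances with slope/bias checkpoints, and this sketch omits both.

```python
DAY = 86_400
MAX_LOCK = 4 * 365 * DAY  # four years, in seconds


def ve_balance(locked: float, unlock_time: int, now: int) -> float:
    """Voting weight for `locked` tokens that unlock at `unlock_time` (both times in seconds)."""
    remaining = max(0, unlock_time - now)
    return locked * min(remaining, MAX_LOCK) / MAX_LOCK


start = 0
four_year_lock = start + MAX_LOCK
one_year_lock = start + 365 * DAY

print(ve_balance(1_000, four_year_lock, now=start))                  # 1000.0
print(ve_balance(1_000, one_year_lock, now=start))                   # 250.0
print(ve_balance(1_000, four_year_lock, now=start + 2 * 365 * DAY))  # 500.0 after two years of decay
print(ve_balance(1_000, one_year_lock, now=start + 400 * DAY))       # 0.0 once the lock has expired
```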

Bribe markets emerged organically around governance power. Protocols pay veCRV holders to vote for their pools. Convex aggregates voting power for efficiency. Bribes exceed $100 million annually. Governance became monetizable, creating secondary markets around protocol control.

Fork Defense and Moats

Protocols face constant fork threats since code is open source. Anyone can copy Uniswap’s code and launch a competitor. Yet successful forks remain rare. SushiSwap’s vampire attack initially succeeded but Uniswap retained dominance. The code might be forkable but the network isn’t.

Liquidity moats prove most defensible. Deep liquidity enables better pricing. Better pricing attracts more volume. More volume deepens liquidity. Breaking this cycle requires massive capital—capital forkers rarely possess. Uniswap’s $5 billion liquidity moat effectively prevents successful forks.
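The same constant-product rule shows why depth produces better pricing. Ignoring fees, a trade of dx into a pool holding x and y receives y·dx/(x+dx). Its shortfall against the spot price y/x is therefore dx/(x+dx), which shrinks as the pool deepens. Here is a Python sketch with hypothetical pool sizes.

```python
def amount_out(dx: float, x: float, y: float) -> float:
    """Output of an x * y = k swap with no fee: solve (x + dx) * (y - dy) = x * y for dy."""
    return y * dx / (x + dx)


def price_impact(dx: float, x: float, y: float) -> float:
    """Shortfall of the realized price versus the pre-trade spot price y / x."""
    realized = amount_out(dx, x, y) / dx
    spot = y / x
    return 1 - realized / spot


trade = 100_000.0
for depth in (1_000_000.0, 10_000_000.0, 100_000_000.0):
    # Balanced pool: equal value on both sides, so spot price is 1.
    impact = price_impact(trade, depth, depth)
    print(f"pool depth {depth:>13,.0f}: impact {impact:.3%}, closed form {trade / (depth + trade):.3%}")
```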

Brand and trust create intangible protocol moats. Users trust battle-tested protocols over new forks. Developers integrate established protocols. Auditors scrutinize popular code. Lindy effects strengthen over time—protocols that survived gain trust that they’ll continue surviving.

Integration dependencies multiply switching costs. When hundreds of protocols integrate Chainlink oracles, switching becomes impossible. When DeFi protocols build on Aave, forking Aave alone accomplishes nothing. Protocol economies create mutual dependencies stronger than corporate partnerships.

Layer Economics

Protocol layers capture value differently based on their stack position. Base layers like Ethereum prioritize security and decentralization. They capture value through transaction fees and token appreciation. Layer 2s optimize for scalability, competing on speed and cost while inheriting base layer security.

Application protocols face different economics. They must balance token distribution for growth against value capture for sustainability. Too much extraction kills adoption. Too little extraction prevents sustainability. Finding equilibrium determines protocol success.

Middleware protocols often struggle with value capture. Oracles provide critical services but commoditize over time. Bridges enable interoperability but face security challenges. Infrastructure protocols require different economic models than user-facing applications.

Cross-chain protocols unlock new value creation. Assets bridged between chains. Liquidity shared across ecosystems. Messages passed between protocols. Interoperability protocols capture value from integration rather than isolation.

Protocol Governance Evolution

Governance tokens evolved from simple voting to complex economic systems. Early protocols used basic token voting. One token, one vote led to plutocracy. Wealthy holders dominated decisions. Protocols experimented with new mechanisms to balance power.

Delegation systems improved participation. Token holders delegate voting power to informed participants. Delegates campaign on platforms. Compensation structures incentivize good governance. Professional protocol politicians emerged, similar to corporate board members but selected through delegation.

Optimistic governance reduces friction. Instead of voting on everything, protocols assume proposals pass unless challenged. This enables faster iteration while maintaining security. Only controversial changes trigger full votes. Efficiency improves without sacrificing decentralization.
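The rule that proposals pass unless challenged is essentially a small state machine with a time window. Here is a Python sketch, assuming a hypothetical three-day challenge window. Real implementations differ in who may challenge and in what a challenge triggers.

```python
from dataclasses import dataclass
from enum import Enum

DAY = 86_400
CHALLENGE_WINDOW = 3 * DAY  # hypothetical; each protocol sets its own


class Status(Enum):
    PENDING = "pending"        # inside the challenge window
    EXECUTABLE = "executable"  # window closed with no challenge
    FULL_VOTE = "full vote"    # challenged, so it escalates to a token vote


@dataclass
class Proposal:
    created_at: int
    challenged: bool = False

    def challenge(self, now: int) -> None:
        """Challenges are only accepted while the window is open."""
        if now - self.created_at >= CHALLENGE_WINDOW:
            raise ValueError("challenge window has closed")
        self.challenged = True

    def status(self, now: int) -> Status:
        if self.challenged:
            return Status.FULL_VOTE
        if now - self.created_at >= CHALLENGE_WINDOW:
            return Status.EXECUTABLE
        return Status.PENDING


routine = Proposal(created_at=0)
print(routine.status(now=1 * DAY), routine.status(now=3 * DAY))  # Status.PENDING Status.EXECUTABLE

contested = Proposal(created_at=0)
contested.challenge(now=2 * DAY)
print(contested.status(now=5 * DAY))  # Status.FULL_VOTE
```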

Treasury management professionalized. Protocol DAOs hire treasury managers. Diversification strategies protect value. Yield generation funds operations. Multi-billion treasuries require sophisticated management rivaling corporate finance departments.

Protocol Sustainability Models

Real yield emerged as protocols matured beyond inflationary rewards. Early protocols printed tokens for growth. Unsustainable emissions created death spirals. Sustainable protocols generate revenue exceeding emissions. Real yield attracts institutional capital seeking returns.
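The distinction reduces to simple arithmetic. Headline yield counts newly emitted tokens as income, while real yield counts only revenue paid out of fees. Here is a Python sketch with hypothetical figures.

```python
def yields(staked_value: float, revenue_paid: float, emissions_value: float) -> tuple[float, float]:
    """Return (headline yield, real yield) as annual fractions of the staked value."""
    if staked_value <= 0:
        raise ValueError("staked_value must be positive")
    headline = (revenue_paid + emissions_value) / staked_value
    real = revenue_paid / staked_value
    return headline, real


def is_sustainable(revenue: float, emissions_value: float) -> bool:
    """The paragraph's test: revenue exceeds the value of tokens emitted."""
    return revenue > emissions_value


# Hypothetical protocol: $10M staked, $0.4M of fees paid out, $1.6M of new tokens emitted per year.
headline, real = yields(10_000_000, 400_000, 1_600_000)
print(f"headline {headline:.0%}, real {real:.0%}, sustainable: {is_sustainable(400_000, 1_600_000)}")
```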

Fee optimization balances growth and revenue. Too high fees kill volume. Too low fees prevent sustainability. Dynamic fees adjust to market conditions. Protocols are becoming sophisticated at revenue optimization while maintaining competitiveness.

Protocol-to-protocol revenue streams multiply. Protocols pay other protocols for services. Yield aggregators pay lending protocols. DEXs pay liquidity providers. Trading bots pay for MEV protection. B2B protocol economics creates stable revenue streams.

Grant programs fund ecosystem development. Protocols allocate treasury funds to builders. Retroactive public goods funding rewards past contributions. Bounties incentivize specific development. Protocols invest in their ecosystems like companies invest in R&D.

Regulatory and Legal Evolution

Protocol economics challenges every assumption of corporate law. No legal entity to regulate. No employees to tax. No headquarters to establish jurisdiction. Regulators struggle to apply 20th-century frameworks to 21st-century protocols. Yet protocols process trillions under regulatory uncertainty.

Liability questions remain unresolved. When a protocol bug loses millions, who’s responsible? Developers who wrote code? Token holders who govern? Users who interact? Traditional liability frameworks assume corporate structures that protocols lack.

Tax treatment varies wildly across jurisdictions. Some countries tax protocol tokens as securities. Others treat them as commodities. Many haven’t decided. Protocol participants face complex compliance requirements that change by geography.

Regulatory arbitrage drives protocol development. Teams incorporate in crypto-friendly jurisdictions. Protocols design around regulatory constraints. Decentralization becomes a regulatory defense. The most successful protocols navigate regulation through architecture.

Protocol Economic Metrics

Total Value Locked (TVL) became the primary protocol metric. TVL represents capital deployed in protocols. Higher TVL suggests user trust and utility. Yet TVL can be gamed through incentives. Sophisticated analysis looks beyond headline numbers.

Revenue metrics evolved from traditional finance. Protocol revenue differs from company revenue. Fee generation, MEV capture, and treasury growth matter more than token price. Price-to-fees ratios help value protocols like P/E ratios value companies.
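A price-to-fees ratio divides a protocol's market capitalization by its annualized fees. Some analysts use fully diluted value in the numerator instead. Here is a Python sketch with hypothetical numbers that annualizes a 30-day fee total.

```python
def annualize(fees: float, days: int) -> float:
    """Scale fees observed over `days` to a 365-day year."""
    if days <= 0:
        raise ValueError("days must be positive")
    return fees * 365 / days


def price_to_fees(market_cap: float, annual_fees: float) -> float:
    """Market value paid per dollar of annual fees, analogous to a P/E ratio."""
    if annual_fees <= 0:
        raise ValueError("annual_fees must be positive")
    return market_cap / annual_fees


# Hypothetical protocol: $1B market cap, $5M of fees in the last 30 days.
annual = annualize(5_000_000, 30)
print(f"annualized fees ${annual:,.0f}, P/F {price_to_fees(1_000_000_000, annual):.1f}x")
```

Fees paid to liquidity providers and fees retained by the protocol are often reported separately. Which one goes in the denominator changes the ratio substantially.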

Active developer metrics indicate protocol health. GitHub commits. New deployments. Developer grants distributed. Ecosystem activity. Protocols with vibrant developer communities outperform those without. Code velocity predicts protocol success.

User metrics require blockchain-native analysis. Daily active addresses. Transaction frequency. User retention. Cross-protocol usage. On-chain data provides transparency impossible in traditional businesses. Every metric is auditable and real-time.

Future Protocol Evolution

AI-governed protocols represent the next frontier. Protocols run by algorithms rather than token votes. AI optimizes parameters. Machine learning predicts optimal fee levels. Governance becomes algorithmic rather than political. Human oversight remains, but execution is automated.

Privacy protocols enable new economic models. Zero-knowledge proofs allow private transactions. Secret shared validators hide MEV. Privacy-preserving DeFi protects users while maintaining composability. The next protocol generation balances transparency with privacy.

Real-world asset protocols bridge traditional and crypto economies. Tokenized real estate. On-chain bonds. Commodity protocols. Physical asset backing creates stable protocol value. The membrane between traditional and protocol economics becomes permeable.

Cross-protocol standards emerge. Token standards like ERC-20 enabled DeFi. New standards will enable cross-chain protocols. Universal identity. Portable reputation. Composable governance. Standards multiply protocol possibilities.

The Protocol Economy Thesis

Protocol economics isn’t replacing corporate economics—it’s expanding what’s possible. Some activities benefit from corporate structures. Others thrive as protocols. The future economy combines both models. Understanding when to build companies versus protocols determines success.

The opportunity remains massive. Financial services represent $20 trillion globally. Protocols captured less than 1%. Every financial primitive can be protocolized. Every trusted intermediary can be replaced by code. We’re in the first innings of protocol economics.

Master protocol economics to build the next generation of value creation systems. Whether launching new protocols, investing in tokens, or building on existing infrastructure, protocol economics provides frameworks for thinking about decentralized value creation.

Start your protocol journey today. Study existing protocols. Understand their economics. Identify underserved markets. Design sustainable token models. Build your own protocols. The protocol economy rewards builders who understand incentive alignment through code.

Master protocol economics to build trillion-dollar networks without traditional companies. The Business Engineer provides frameworks for designing sustainable protocol economies.

The post Protocol Economics: The $2 Trillion Revolution in Value Creation Without Companies appeared first on FourWeekMBA.

August 30, 2025

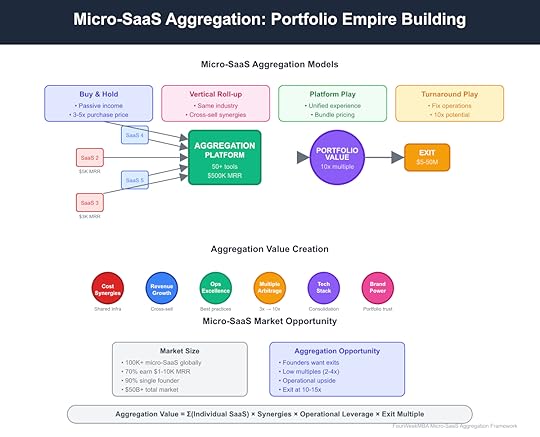

Micro-SaaS Aggregation: The $50B Hidden Empire of Internet Businesses

Micro-SaaS aggregation represents the most overlooked wealth-building opportunity in software—acquiring dozens of small, profitable SaaS businesses and transforming them into valuable portfolios worth 10x their individual sum. While VCs chase unicorns and founders dream of billion-dollar exits, a quiet revolution unfolds where aggregators buy $50K micro-SaaS businesses, optimize operations, and build empires generating millions in passive income.

The opportunity is massive yet hidden. Over 100,000 micro-SaaS businesses generate $1K-50K monthly revenue. Most founders want exits but can’t find buyers. Traditional investors ignore them as too small. This gap creates arbitrage—buying at 2-4x annual revenue and selling portfolios at 10-15x. The math is compelling: acquire 50 micro-SaaS at $100K each, optimize to double revenue, exit for $50-100M.
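The arithmetic behind that example can be checked directly. The Python sketch below takes the paragraph's own assumptions: 50 businesses at $100K each, bought at 2-4x annual revenue, revenue doubled, and the portfolio sold at 10-15x revenue. Under these inputs the exit lands between roughly $25M and $75M. Reaching the top of the stated range would take a higher exit multiple or more growth.

```python
from itertools import product


def exit_value(n: int, price_each: float, buy_multiple: float, growth: float, exit_multiple: float) -> float:
    """Portfolio sale price, with businesses bought and sold on multiples of annual revenue."""
    revenue_each = price_each / buy_multiple       # implied annual revenue at purchase
    portfolio_revenue = n * revenue_each * growth  # after operational improvements
    return portfolio_revenue * exit_multiple


N, PRICE = 50, 100_000
cost = N * PRICE
outcomes = [exit_value(N, PRICE, buy, 2.0, sell) for buy, sell in product((2, 4), (10, 15))]
print(f"cost ${cost:,}; exit ${min(outcomes):,.0f} to ${max(outcomes):,.0f}")
```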

[Image: Micro-SaaS Aggregation: Building Empires From Internet Atoms]

The Micro-SaaS Phenomenon

Micro-SaaS businesses solve specific problems for niche audiences with minimal complexity. A Chrome extension for Amazon sellers making $5K/month. A Shopify app for inventory management at $10K MRR. A WordPress plugin for photographers generating $3K monthly. These aren’t venture-scale businesses, but they print money with 80%+ margins.

The economics are beautiful in their simplicity. No office, no employees, often no marketing spend. Just recurring revenue from customers who found a tool that saves them time or money. Customer acquisition happens through SEO, app stores, or word-of-mouth. Support is minimal—good products largely run themselves.

Founders build micro-SaaS for freedom, not scale. They wanted to escape corporate life, not build the next corporate giant. After 2-5 years, many achieve their lifestyle goals but face burnout, boredom, or life changes. They want exits but lack options—too small for traditional M&A, too valuable to shut down.

This creates unprecedented buying opportunities. Thousands of profitable, proven businesses available at reasonable multiples. Sellers motivated by factors beyond price. Minimal competition from institutional buyers. It’s like buying profitable real estate in 1980—obvious in hindsight, invisible today.

The Aggregation Playbook

Successful micro-SaaS aggregation follows a repeatable playbook perfected by pioneers like Tiny Capital and SureSwift Capital. Identify profitable micro-SaaS with stable revenue. Acquire at 2-4x annual profit. Implement operational improvements. Cross-sell to existing portfolio customers. Build towards strategic exit or hold for cash flow.

Due diligence focuses on sustainability over growth. Is the code maintainable? Are customers sticky? Does the business run without the founder? Can you improve it with minimal effort? The best acquisitions are boring businesses in boring markets with boring, predictable revenue.

Operational improvements unlock immediate value. Most micro-SaaS founders are developers, not marketers or operators. Simple changes—improved onboarding, email automation, pricing optimization, SEO improvements—can double revenue within months. What founders couldn’t or wouldn’t do becomes your value creation.

Portfolio effects multiply individual values. Shared infrastructure reduces hosting costs. Centralized support improves efficiency. Cross-promotion drives new customers. Bundle deals increase average order values. Twenty micro-SaaS businesses operating separately might be worth $2M; integrated properly, they’re worth $20M.

Acquisition Strategies

Direct outreach remains the most effective acquisition channel. Identify micro-SaaS through app stores, Product Hunt, and indie hacker communities. Email founders with personalized messages showing you understand their business. Many receive their first serious acquisition interest this way.

Marketplaces democratize discovery but increase competition. Acquire.com (formerly MicroAcquire) connects buyers and sellers directly, Flippa and FE International list hundreds of micro-SaaS monthly, and Empire Flippers vets businesses before listing. Prices tend to run higher on marketplaces, but due diligence is easier.

Broker relationships unlock off-market deals. Business brokers handling sub-$1M deals often have micro-SaaS in their portfolios. Building relationships gets you first look at new listings. Many deals never hit the public market.

Creative deal structures overcome capital constraints. Seller financing for 50-70% of the purchase price is common. Earnouts based on performance align incentives. Revenue shares let sellers participate in upside. You don’t need millions in cash to start aggregating; creativity and credibility matter more.

Operational Excellence at Scale

Standardization transforms chaotic micro-businesses into efficient operations. Migrate to common infrastructure. Implement shared monitoring and alerting. Standardize customer support processes. Create playbooks for common tasks. What was impossible for individual founders becomes trivial at scale.

Technology consolidation reduces complexity and cost. Move from 20 different hosting providers to one. Consolidate payment processing. Unify analytics and reporting. Implement single sign-on across properties. Each standardization reduces overhead and improves margins.

Talent leverage changes the game. One growth marketer can optimize 20 micro-SaaS. One DevOps engineer can maintain 50 applications. One customer success manager can handle support across the portfolio. Specialists at scale deliver results impossible for solo founders.

Data insights compound across the portfolio. Pattern recognition from dozens of SaaS reveals optimization opportunities. A/B tests on one property inform improvements on others. Customer behavior insights transfer between related tools. The portfolio becomes a learning machine.

Value Creation Mechanisms

Revenue optimization often doubles income within 12 months. Founders undercharge because they fear losing customers. Aggregators test price increases systematically. Raising a $29/month tool to $49/month lifts revenue per new customer by 69%. With grandfather pricing for existing customers, though, total revenue rises gradually, as new sign-ups at the higher price replace churned legacy customers.
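
The difference between a grandfathered and an immediate price increase is easy to model. The sketch below is a simplified illustration, assuming a constant monthly churn rate and a constant flow of new customers, and assuming the price change itself causes no extra churn or lost sign-ups; the customer counts, churn rate, and function name `mrr_after` are hypothetical.

```python
def mrr_after(months, existing, new_per_month, churn, old_price, new_price, grandfather=True):
    """Monthly recurring revenue `months` after a price change.

    existing: customers on the old plan when the price changes
    new_per_month: customers acquired each month (assumed constant)
    churn: fraction of each cohort lost per month (assumed constant)
    """
    legacy = float(existing)  # customers kept on the old plan
    joined = 0.0              # customers who signed up at the new price
    for _ in range(months):
        legacy *= 1 - churn
        joined = joined * (1 - churn) + new_per_month
    legacy_price = old_price if grandfather else new_price
    return legacy * legacy_price + joined * new_price


args = dict(existing=1_000, new_per_month=50, churn=0.03)
for m in (0, 6, 12, 24):
    baseline = mrr_after(m, old_price=29, new_price=29, **args)
    grandfathered = mrr_after(m, old_price=29, new_price=49, **args)
    print(f"month {m:>2}: baseline ${baseline:,.0f}  grandfathered ${grandfathered:,.0f}")
```

At month 0 the grandfathered plan earns exactly the baseline; the gap opens only as new customers pay $49 and legacy customers churn away. Passing `grandfather=False` gives the full 69% uplift immediately, at the risk of extra churn this sketch does not model.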

Customer expansion unlocks hidden value. Most micro-SaaS serve a fraction of their addressable market. SEO improvements, content marketing, and paid acquisition profitably grow customer bases. A tool with 1,000 customers in a 100,000-person market has a theoretical ceiling of 100x growth.

Product improvements drive retention and expansion. Founders often stop developing after reaching lifestyle income. Adding requested features, improving UX, and modernizing design re-energizes growth. Small improvements compound into transformation.

Strategic bundling multiplies value. Twenty WordPress plugins sold separately might generate $200K MRR. Bundled as “The Ultimate WordPress Toolkit” for $99/month, they could generate $1M MRR. Customers prefer one vendor, one bill, one support channel.

Exit Strategies and Multiples

Portfolio exits command premium multiples over individual sales. A single micro-SaaS might sell for 3x revenue. A portfolio of 20 integrated micro-SaaS can sell for 10-15x revenue. Strategic buyers pay for customer base, technology stack, and operational efficiency.

Private equity interest in micro-SaaS portfolios grows rapidly. PE firms can’t diligence 50 micro acquisitions but gladly buy assembled portfolios. They bring operational expertise and capital to accelerate growth. Your aggregation work becomes their platform investment.

Strategic acquirers seek customer access and technology. A portfolio of developer tools might interest GitHub or Atlassian. E-commerce SaaS collections attract Shopify or BigCommerce. Marketing tools appeal to HubSpot or Salesforce. Your niche focus becomes their product expansion.

Permanent holding produces exceptional cash flow. A portfolio generating $500K in monthly revenue at 80% margins throws off $400K of free cash flow per month, or $4.8M a year. With zero growth that totals $96M over 20 years; even conservative growth pushes it past $100M. Some aggregators never plan to sell, building generational wealth through cash flow.
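
The 20-year figure is a simple compounding sum. This minimal sketch uses the paragraph's $500K monthly revenue and 80% margin; the growth rates are hypothetical, growth is applied once per year, and the totals are undiscounted, so a buyer valuing the stream would discount them.

```python
def cumulative_fcf(monthly_revenue, margin, annual_growth, years):
    """Undiscounted free cash flow summed over `years`, growing once per year."""
    annual_fcf = monthly_revenue * margin * 12
    total = 0.0
    for _ in range(years):
        total += annual_fcf
        annual_fcf *= 1 + annual_growth
    return total


for growth in (0.0, 0.03, 0.05):
    total = cumulative_fcf(500_000, 0.80, growth, 20)
    print(f"{growth:.0%} growth: ${total / 1e6:,.1f}M over 20 years")
```

With zero growth the sum is $96M; at 3% annual growth it comes to roughly $129M.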

Risk Management and Pitfalls

Technology debt accumulates without careful management. Old codebases, outdated frameworks, and security vulnerabilities hide in acquisitions. Budget for modernization or accept higher maintenance costs. Technical due diligence prevents expensive surprises.

Key person dependencies threaten continuity. Some micro-SaaS depend on founder relationships, specialized knowledge, or manual processes. Ensure knowledge transfer and process documentation before closing. The best acquisitions run themselves; the worst require constant founder involvement.

Platform risk concentrates in app ecosystems. Shopify apps face API changes and policy updates. Chrome extensions depend on Google’s Chrome Web Store policies and platform shifts such as the move to Manifest V3. WordPress plugins depend on core updates. Diversify across platforms or accept concentrated risk.

Market shifts can obsolete entire categories. iOS privacy changes killed attribution tools. AI advances might replace simple automation tools. Build portfolios resilient to technology shifts or maintain reserves for pivots.

Building Your Aggregation Empire

Start small with one acquisition to learn the process. Your first micro-SaaS teaches due diligence, transfer procedures, and operations. Make mistakes on a $50K acquisition, not a $500K one. Experience compounds faster than capital.

Build systems before scaling acquisitions. Create templates for due diligence, contracts, and migrations. Document operational procedures. Establish financial reporting standards. Systems enable scaling; chaos ensures failure.

Network aggressively within micro-SaaS communities. Join indie hacker forums. Attend MicroConf and similar events. Build relationships with founders before they’re ready to sell. The best deals come through relationships, not marketplaces.

Consider partnership models to accelerate. Partner with operators who lack capital. Team with investors who need execution. Join existing aggregators as EIR (Entrepreneur in Residence). Learning from experienced aggregators accelerates your journey.

The Future of Micro-SaaS Aggregation

AI creates both threats and opportunities in micro-SaaS. It might make simple tools obsolete, but it also enables new categories. Smart aggregators buy AI-resistant businesses or quickly adapt portfolios to leverage AI. The disruption creates buying opportunities from panicked sellers.

Vertical integration strategies emerge among sophisticated aggregators. Instead of random acquisitions, focus on specific verticals—e-commerce tools, developer utilities, creative software. Deep vertical expertise enables better operations and strategic exits.

International arbitrage expands opportunities. US micro-SaaS sells for higher multiples than international equivalents. Buy profitable SaaS in emerging markets, improve operations, sell to US aggregators. Geographic arbitrage multiplies returns.

Democratization tools lower barriers further. No-code platforms enable faster micro-SaaS creation. AI accelerates development. Payment infrastructure simplifies monetization. More micro-SaaS means more aggregation opportunities.

The Aggregation Imperative

Micro-SaaS aggregation is moving from niche strategy to mainstream investment thesis as the market matures. Early movers build portfolios worth tens of millions. Fast followers still find opportunities. Laggards will pay premium prices for assembled portfolios.

The window remains open but won’t indefinitely. As success stories multiply, competition increases. Multiples rise. Quality deals become scarcer. The gold rush phase ends when institutional capital fully discovers the opportunity.

Master micro-SaaS aggregation to build wealth through small, profitable internet businesses. Whether seeking passive income, building toward major exits, or creating generational wealth, aggregation offers a proven path with manageable risks.

Start your aggregation journey today. Browse marketplaces. Email founders. Make offers. Learn by doing. The micro-SaaS aggregation opportunity rewards action over analysis, persistence over perfection.

Master micro-SaaS aggregation to build million-dollar portfolios from tiny internet businesses. The Business Engineer provides frameworks for identifying, acquiring, and optimizing micro-SaaS. Explore more concepts.

The post Micro-SaaS Aggregation: The $50B Hidden Empire of Internet Businesses appeared first on FourWeekMBA.

Super-App Theory: The $1 Trillion Race to Own Digital Daily Life

Super-apps represent the ultimate platform play—digital ecosystems that handle messaging, payments, shopping, transportation, entertainment, and dozens of other services within a single application. While Western markets fragment into specialized apps, Asian super-apps like WeChat and Grab prove that owning the daily digital routine creates trillion-dollar opportunities. The battle isn’t for user attention anymore—it’s for complete digital life integration.

The numbers validate the model’s supremacy. WeChat serves 1.3 billion users conducting $250 billion in annual transactions. Alipay processes $17 trillion yearly. Grab operates in 400 cities across 8 countries. Gojek pivoted from ride-hailing to handling 2% of Indonesia’s GDP. These aren’t just apps—they’re economic infrastructure that nations depend upon.

[Figure: Super-App Theory: Building Digital Life Operating Systems Worth Trillions]

The Super-App Paradox

Super-apps violate every Western product principle: they’re bloated, complex, and try to do everything. Silicon Valley orthodoxy preaches focus, simplicity, and doing one thing well. Yet super-apps succeed by embracing complexity, bundling dozens of services that individually might fail but collectively create inescapable utility.

Context drives divergence between markets. In emerging economies, super-apps leapfrog fragmented infrastructure. No credit cards? Mobile payments. Limited banking? Digital wallets. Poor retail distribution? E-commerce integration. Super-apps don’t just digitize existing services—they create infrastructure where none existed.

Trust concentration enables super-app dominance. In markets with weak institutions, users trust established platforms over unknown startups. Would you rather store money with WeChat, used by everyone you know, or a new fintech with no track record? Trust accumulation creates permission for horizontal expansion.

Mobile-first societies favor consolidation over fragmentation. When your phone is your primary computer, screen space becomes precious. Installing 50 apps for 50 services creates friction. One app handling everything reduces cognitive load and storage requirements. Simplicity through consolidation, not minimalism.

The Economics of Everything

Super-apps monetize through portfolio effects rather than individual service profitability. Messaging loses money but drives engagement. Payments break even but enable commerce. Ride-hailing operates at losses but generates transaction data. The ecosystem profits while components subsidize each other.

Data integration multiplies value exponentially. Knowing your communication patterns, payment history, location data, shopping preferences, and social graph enables prediction accuracy impossible for specialized apps. This data fusion powers credit scoring, targeted advertising, demand prediction, and personalization that keeps users locked in.

Cross-selling dynamics transform customer economics. Take an illustrative ladder: acquiring a messaging user costs $5. If that user adopts payments, lifetime value reaches $20. Adding shopping lifts LTV to $200, and financial services push it to $2,000. Each service layer multiplies monetization while acquisition cost stays flat.
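
Treating the paragraph's figures as illustrative rather than measured, the ladder translates into LTV/CAC ratios as below. The sketch assumes every user adopts each layer; in practice only a fraction do, so blended lifetime value would be lower. The function name `ltv_to_cac` is hypothetical.

```python
def ltv_to_cac(cac, ltv_by_layer):
    """LTV/CAC ratio at each service layer, holding acquisition cost flat."""
    return {layer: ltv / cac for layer, ltv in ltv_by_layer.items()}


# Illustrative figures from the paragraph above, not measured data.
ladder = {
    "messaging + payments": 20,
    "+ shopping": 200,
    "+ financial services": 2_000,
}
for layer, ratio in ltv_to_cac(5, ladder).items():
    print(f"{layer:<22} LTV/CAC = {ratio:,.0f}x")
```

Because the $5 acquisition cost is paid once, each added layer raises the ratio tenfold in this example (4x, 40x, 400x).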

Network effects compound across services. More users attract more merchants. More merchants draw more users. More transactions enable better financial services. Better services increase user lock-in. Each service reinforces others, creating multiplicative rather than additive value.

The Architecture of Digital Life

Successful super-apps follow predictable evolution patterns. Start with high-frequency, low-monetization services like messaging or transportation. Build daily habits and trust. Add payments to enable transactions. Layer commerce once payments work. Expand to adjacent services leveraging existing infrastructure.

Mini-programs revolutionize platform architecture. Instead of building everything internally, super-apps become operating systems where third parties deploy mini-applications. WeChat hosts 3.5 million mini-programs. Users access services without downloads. Developers reach billions without app stores. The platform tax funds core infrastructure.

Identity layers unify disparate services. Single sign-on seems trivial but proves transformative. One identity accessing everything reduces friction to near-zero. Shared wallets eliminate payment setup. Unified profiles enable cross-service personalization. Technical integration creates business model innovation.

API strategies determine ecosystem health. Open enough to attract developers. Controlled enough to maintain quality. Standardized for interoperability. Monetized to sustain investment. The best super-apps balance openness with control, creating vibrant ecosystems within boundaries.

Geographic Arbitrage in Super-Apps

Asia leads super-app adoption due to mobile leapfrogging and regulatory environments. China’s WeChat and Alipay duopoly emerged from unique conditions: a massive mobile-first population, limited legacy infrastructure, regulatory protection, and cultural acceptance of platform concentration.

Southeast Asia replicates the model with local variations. Grab dominates Singapore to Myanmar. Gojek owns Indonesia. GCash leads the Philippines. Each adapts the super-app playbook to local needs—Islamic banking features, motorcycle taxis, market-specific payment methods.

Latin America accelerates super-app development. Mercado Libre evolves from e-commerce to financial services. Rappi expands from delivery to banking. Nubank adds commerce to digital banking. The playbook translates across emerging markets with similar dynamics.

Western markets resist super-app consolidation. Regulatory scrutiny, privacy concerns, established infrastructure, and cultural preferences for specialization limit bundling. Facebook’s Libra cryptocurrency project faced immediate regulatory backlash. Apple Pay stays narrowly focused. Amazon segregates services. The West may never see true super-apps.

The Platform Power Play

Super-apps accumulate power that transcends business into infrastructure. When WeChat goes down, Chinese commerce freezes. When Grab stops, Southeast Asian cities face a transportation crisis. This infrastructure criticality grants pricing power, regulatory influence, and competitive moats approaching natural monopolies.

Government relationships become complex dances. Regulators need super-apps for digital economy development but fear concentration risk. China’s tech crackdown targeted super-app power. India banned WeChat, along with dozens of other Chinese apps, on security grounds. The balance between enabling innovation and preventing dominance shapes markets.

Financial services represent the end game. Every super-app eventually becomes a bank. Payments lead to wallets. Wallets enable lending. Lending requires deposits. Soon the messaging app holds more assets than traditional banks. The real money isn’t in app features—it’s in becoming the financial system.

Platform economics create winner-take-all dynamics. Users won’t maintain multiple super-apps doing the same things. Merchants can’t integrate with dozens of platforms. Markets naturally consolidate to 1-2 dominant players. Second place might survive; third place struggles; fourth place dies.

Building Super-App Moats

Switching costs in super-apps exceed those of any individual service. Leave WeChat and you lose messaging history, payment methods, mini-program access, social connections, and daily routines. The accumulated friction makes switching nearly impossible even when alternatives exist.

Data advantages compound over time. Seven years of transaction history enables credit scoring no competitor can match. Behavioral patterns predict needs before users know them. Social graphs reveal influence and trust networks. Time becomes the ultimate moat.

Ecosystem lock-in multiplies defensibility. Merchants invest in mini-programs. Developers learn proprietary systems. Users store value in wallets. Partners integrate APIs. Each stakeholder’s investment raises switching costs for all others.

Brand trust transcends features in super-app competition. Users store life savings in these apps. Share personal messages. Depend on them for transportation. Trust, once earned, creates permission for expansion that new entrants can’t quickly replicate.

The Western Super-App Challenge

Tech giants attempt super-app strategies with mixed results. Facebook (Meta) bundles messaging, marketplace, and payments but faces regulatory resistance. Amazon separates services across apps. Google’s integration attempts feel forced. Apple maintains strict app boundaries. Western culture resists centralization.

Regulatory frameworks prevent Asian-style consolidation. Antitrust law targets bundling. Privacy regulations limit data sharing. Financial regulations separate banking from commerce. The regulatory environment designed to prevent monopolies inadvertently prevents super-app innovation.

Specialized apps dominate through excellence. Venmo owns P2P payments. Uber dominates ride-sharing. DoorDash leads delivery. Each optimizes for specific use cases rather than broad integration. The West chose best-of-breed over all-in-one.

Cultural preferences shape product acceptance. Western users value privacy, choice, and specialization. Asian users prioritize convenience, integration, and efficiency. Neither is wrong—they reflect different societal values embedded in technology design.

Super-App Investment Strategies

Venture capital struggles with super-app economics. Early growth requires massive subsidies across multiple services. Profitability comes from portfolio effects taking years to materialize. The capital requirements and timeline mismatch typical venture fund structures.

Strategic investors and sovereign wealth funds lead super-app funding. Alibaba invested in Paytm. Tencent backed Gojek. SoftBank funded Grab. Patient capital willing to wait decades for ecosystem maturity drives super-app development.

Public markets misunderstand super-app valuations. Analysts evaluate individual services and miss ecosystem value. Grab trades below private valuations despite growth. Investors struggle to price optionality across dozens of potential business lines.

Acquisition strategies focus on capability addition. Buy payment companies for financial services. Acquire logistics for delivery networks. Purchase content for engagement. Each acquisition adds a service layer while leveraging existing user base.

Future Evolution Paths

AI transforms super-apps into predictive life assistants. Instead of users choosing services, AI anticipates needs. Order food before hunger strikes. Book transportation for calendar events. Manage finances automatically. The super-app evolves from utility to intelligence.

Voice and conversational interfaces eliminate app boundaries. Tell your assistant what you need; it handles everything through the super-app backend. The interface disappears while the ecosystem strengthens. Natural language becomes the universal API.

Blockchain enables decentralized super-apps. User-owned identity portable across services. Decentralized finance replacing traditional banking. Smart contracts automating commerce. The super-app architecture might persist while ownership decentralizes.

AR/VR creates spatial super-apps. Digital layers over physical world accessed through single platforms. Shop by looking at products. Pay by gesture. Navigate through visual overlays. The super-app transcends screens into reality itself.

Strategic Implications

Every company must develop a super-app strategy: build, partner, or defend. Ignoring ecosystem plays while competitors aggregate services ensures disruption. The question isn’t whether consolidation happens but who controls the aggregation point.

Geographic expansion requires local adaptation. Super-app models don’t translate directly across cultures. Payment preferences, service priorities, and trust factors vary. Successful expansion localizes while maintaining core infrastructure.

Data integration capabilities determine competitive advantage. Companies that can’t combine data across services can’t compete with those that do. Privacy-preserving integration techniques become crucial for Western markets.

Platform thinking supersedes product thinking. Stop building features; start building ecosystems. Enable others to create value on your platform. The most successful super-apps do less themselves while enabling more for others.

The Super-App Imperative

Super-apps represent the logical endpoint of digital platform evolution: maximum utility through minimum friction. As digital services proliferate, users demand consolidation. As data becomes currency, integration creates value. As attention fragments, unified experiences win.

The trillion-dollar question remains: Will the West develop indigenous super-apps or import Asian models? Cultural and regulatory barriers suggest continued fragmentation, but user demands and competitive pressure might force consolidation.

Master super-app theory to build the next generation of platform businesses. Whether creating regional super-apps, building specialized ecosystems, or defending against platform aggregation, understanding super-app dynamics determines digital economy success.

Start your super-app journey today. Identify high-frequency services to build habits. Add payment capabilities for transactions. Expand to adjacent services. Build developer ecosystems. The path from app to super-app is clear—execution separates winners from dreamers.

Master super-app theory to build trillion-dollar digital life platforms. The Business Engineer provides frameworks for creating ecosystem businesses that dominate daily digital routines. Explore more concepts.

The post Super-App Theory: The $1 Trillion Race to Own Digital Daily Life appeared first on FourWeekMBA.

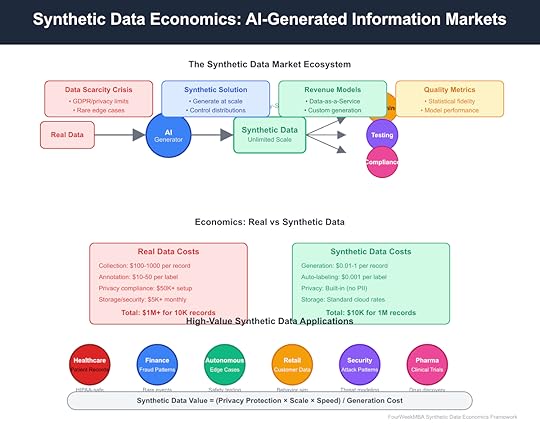

Synthetic Data Economics: The Trillion-Dollar Market for Artificial Information

Synthetic data represents the most underappreciated revolution in AI economics—artificially generated information that trains models better than real data while solving privacy, scale, and cost challenges simultaneously. As regulations tighten and data becomes the new oil, synthetic data emerges as the refinery that transforms limited raw material into unlimited fuel for AI advancement. This isn’t about fake data—it’s about engineered information optimized for machine learning.

The market validates this transformation. Gartner predicted that by 2024, 60% of the data used to develop AI and analytics projects would be synthetically generated. The synthetic data market is projected to reach $3.5 billion by 2028, growing at 35% CAGR. Companies like Synthesis AI, Mostly AI, and Datagen have raised hundreds of millions to generate data that never existed but works better than reality. Understanding synthetic data economics is crucial for anyone building or investing in AI.

[Figure: Synthetic Data Economics: Transforming Data Scarcity Into Abundance Through AI Generation]

The Data Paradox Driving Synthetic Solutions

Modern AI faces a fundamental paradox: models need massive data to improve, but privacy regulations and practical constraints make real data increasingly inaccessible. GDPR fines reach hundreds of millions. Healthcare data requires years of compliance work. Financial data faces regulatory scrutiny. The very data AI needs most is hardest to obtain legally.

Real-world data suffers from inherent limitations beyond privacy. Rare events—like specific medical conditions or fraud patterns—appear too infrequently for effective model training. Biased historical data perpetuates discrimination. Incomplete datasets create blind spots. Real data reflects the messy, unfair, incomplete world as it is, not the balanced training sets AI needs.

Cost compounds the problem. Collecting real customer data costs $100-1,000 per complete record when including acquisition, cleaning, annotation, and compliance. A modest 10,000-record dataset for a specialized AI application can cost over $1 million before storage and security. These economics limit AI development to well-funded corporations.

Synthetic data flips these economics entirely. Generated data costs $0.01-1 per record, includes perfect labels, contains no personal information, and can represent any distribution desired. Need a million medical records with rare conditions properly represented? Generate them. Want fraud patterns without compromising customer data? Create them. The impossible becomes routine.

The Economics of Data Generation

Synthetic data economics follow software patterns rather than physical collection costs. High initial investment in generation models and validation systems, near-zero marginal cost per record, infinite scalability without quality degradation. This cost structure enables business models impossible with real data.
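
The software-like cost structure described above, a large fixed investment followed by a small marginal cost, reduces to an amortization formula. In this sketch the $500K fixed cost is a hypothetical figure, while the $0.10 marginal cost and the $100 real-data benchmark are taken from the ranges quoted in this section; the function names are illustrative.

```python
import math


def synthetic_cost_per_record(fixed_cost, marginal_cost, n_records):
    """Average cost per record: fixed investment amortized over the run, plus marginal cost."""
    return fixed_cost / n_records + marginal_cost


def break_even_records(fixed_cost, marginal_cost, real_cost_per_record):
    """Smallest dataset size at which synthetic data is cheaper per record than real data."""
    if real_cost_per_record <= marginal_cost:
        return None  # synthetic never becomes cheaper
    # Need fixed/n + marginal < real, i.e. n > fixed / (real - marginal).
    return math.floor(fixed_cost / (real_cost_per_record - marginal_cost)) + 1


FIXED = 500_000  # hypothetical up-front spend on generators and validation
for n in (1_000, 10_000, 1_000_000):
    print(f"{n:>9,} records: ${synthetic_cost_per_record(FIXED, 0.10, n):,.2f} per record")
print("break-even vs $100 real records:", break_even_records(FIXED, 0.10, 100))
```

Under these assumptions synthetic data overtakes $100 real records at about 5,000 records, and the per-record cost keeps falling toward the marginal cost as volume grows, which is the "near-zero marginal cost" dynamic in practice.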

Generation costs vary by complexity and fidelity requirements. Simple tabular data (customer records, transactions) costs $0.01-0.10 per record. Complex unstructured data (images, video, text) ranges $0.10-1.00 per item. Specialized domains (medical imaging, autonomous driving scenarios) can reach $1-10 per instance. Still 100-1000x cheaper than real equivalents.

Quality drives pricing power. Low-fidelity synthetic data for basic testing commands commodity prices. High-fidelity data indistinguishable from real data in statistical properties and model performance commands premium prices. The best synthetic data providers guarantee model performance parity or improvement versus real data.

Infrastructure requirements create barriers to entry. Generating high-quality synthetic data requires sophisticated AI models, domain expertise, and validation frameworks. A synthetic medical imaging company needs radiologists, AI researchers, and computational infrastructure. These requirements limit competition and support pricing power for quality providers.

Business Models in Synthetic Data

Data-as-a-Service (DaaS) dominates current synthetic data business models. Providers maintain generation infrastructure and deliver data through APIs or batch downloads. Customers pay per record, per dataset, or through subscriptions. This model minimizes customer complexity while maximizing provider leverage.

Platform models emerge as the market matures. Rather than generating data directly, platforms provide tools for customers to create their own synthetic data. Mostly AI and Synthesized offer platforms where enterprises can upload their data schemas and privacy requirements, receiving synthetic versions that maintain statistical properties while removing personal information.

Vertical specialization creates premium opportunities. Healthcare synthetic data commands 10-100x higher prices than generic data due to regulatory requirements and domain expertise needed. Synthesis AI focuses on synthetic human data for computer vision. Datagen specializes in human motion and behavior. Specialization enables differentiation.

Hybrid models combine real and synthetic data. Start with limited real data, amplify with synthetic variations, validate performance on real test sets. This approach maximizes the value of scarce real data while leveraging synthetic data’s scale advantages. Many providers offer hybrid solutions as enterprises rarely abandon real data entirely.

Quality Metrics and Validation

Synthetic data quality determines its economic value: poor synthetic data performs worse than no data. Quality measurement requires sophisticated statistical and performance metrics. Distribution matching ensures synthetic data follows the same statistical patterns as real data. Feature correlation preservation maintains relationships between variables.

Privacy preservation adds complexity to quality metrics. Differential privacy bounds how much any single source record can influence the synthetic output, so an attacker cannot reliably infer whether a given individual’s data was used. But stronger privacy often means lower fidelity. Providers must balance privacy guarantees with data utility, creating different tiers for different use cases.
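
The privacy/fidelity trade-off is visible even on the simplest differentially private query: the Laplace mechanism applied to a count. This sketches the mechanism itself, not a full synthetic data generator; the query, the count of 120, and the epsilon values are hypothetical. Smaller epsilon means a stronger privacy guarantee and noisier output.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two independent exponentials with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count, epsilon, rng, sensitivity=1.0):
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    Adding or removing one person changes a count by at most 1, so sensitivity = 1.
    """
    return true_count + laplace_noise(sensitivity / epsilon, rng)


def mean_abs_error(epsilon, trials=10_000, seed=0):
    rng = random.Random(seed)
    true_count = 120  # hypothetical: patients with a rare condition
    errors = (abs(private_count(true_count, epsilon, rng) - true_count) for _ in range(trials))
    return sum(errors) / trials


for eps in (0.1, 1.0, 10.0):
    print(f"epsilon = {eps:>4}: mean absolute error ~ {mean_abs_error(eps):.2f}")
```

The average error tracks 1/epsilon, so tightening privacy by a factor of ten costs roughly ten times the distortion. This is the dial behind the tiered offerings described above.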

Model performance provides the ultimate quality metric. If models trained on synthetic data perform equally to those trained on real data in production, statistical differences matter less. Leading providers guarantee performance parity or money back, shifting quality risk from customers to providers.

Validation costs can exceed generation costs for critical applications. Healthcare synthetic data requires clinical validation. Financial synthetic data needs risk model testing. Autonomous vehicle data demands safety verification. These validation requirements create moats for established providers with proven track records.

Market Dynamics and Competition

The synthetic data market fragments across dimensions of data type, industry vertical, and quality requirements. No single provider dominates across all segments. Mostly AI leads in structured data privacy. Synthesis AI dominates synthetic humans. Parallel Domain owns synthetic sensor data for autonomous systems.

Big Tech enters aggressively. Amazon offers synthetic data through SageMaker. Microsoft provides synthetic data tools in Azure. Google’s Vertex AI includes data synthesis capabilities. These platforms commoditize basic synthetic data while specialized providers move upmarket into higher-value, domain-specific offerings.

Open source challenges proprietary models. Tools like SDV (Synthetic Data Vault), which originated at MIT, and the R package synthpop provide free synthetic data generation. While these lack the sophistication and support of commercial offerings, they pressure pricing for basic use cases and force commercial providers to differentiate through quality and specialization.

Investment and acquisition activity accelerates as larger companies recognize synthetic data’s strategic value. Datagen raised $50 million. Synthesized raised $20 million. AI21 Labs acquired Dataloop. Expect consolidation as cloud providers and AI platforms acquire specialized synthetic data companies to enhance their offerings.

Industry-Specific Applications

Healthcare leads synthetic data adoption due to privacy requirements and data scarcity. Real patient data faces HIPAA restrictions, limited availability for rare conditions, and ethical concerns about commercialization. Synthetic patient records, medical images, and genomic data enable AI development without privacy risks. MDClone and Syntegra specialize in healthcare synthetic data.

Financial services leverage synthetic data for fraud detection and risk modeling. Real fraud data is scarce (thankfully) but essential for model training. Synthetic fraud patterns allow models to learn from thousands of variations of known attacks. J.P. Morgan and American Express use synthetic data to improve detection while protecting customer privacy.
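To make "thousands of variations of known attacks" concrete, here is a minimal sketch of one simple way to get them: take a known attack sequence as a template and perturb its timing and amounts. This is plain data augmentation, not a learned generator, and it is not a description of how J.P. Morgan or American Express build their datasets. The `Txn` schema, the `attack_variants` function, the perturbation ranges, and the card-testing template are all hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Txn:
    """One transaction in an attack sequence (hypothetical schema)."""
    seconds_after_start: float
    amount: float


def attack_variants(template: list[Txn], n: int, seed: int = 0) -> list[list[Txn]]:
    """Create n perturbed copies of a known attack sequence.

    Each copy stretches or compresses the timeline by one random factor
    (so event order is preserved) and scales every amount by a shared
    factor plus small per-transaction noise.
    """
    rng = random.Random(seed)
    variants = []
    for _ in range(n):
        time_scale = rng.uniform(0.5, 2.0)
        amount_scale = rng.uniform(0.8, 1.25)
        variants.append([
            Txn(seconds_after_start=t.seconds_after_start * time_scale,
                amount=round(t.amount * amount_scale * rng.uniform(0.95, 1.05), 2))
            for t in template
        ])
    return variants


# Hypothetical "card testing" template: small probe charges, then a large purchase.
card_testing = [Txn(0, 1.00), Txn(40, 1.50), Txn(95, 2.00), Txn(300, 850.00)]
variants = attack_variants(card_testing, n=1000)
print(len(variants), variants[0])
```

Real systems would vary far more (merchants, devices, geographies), but the principle is the same: one scarce real example seeds many labeled training examples.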

Autonomous vehicles depend on synthetic data for edge case training. Real-world data collection cannot safely capture all dangerous scenarios. Simulation can generate millions of accident scenarios, weather conditions, and pedestrian behaviors that would be impossible to collect safely. Parallel Domain and Applied Intuition lead this market.
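Scenario counts grow multiplicatively: every new dimension a simulator can vary multiplies the number of distinct situations. The sketch below enumerates a deliberately tiny, hypothetical parameter grid with `itertools.product`; the parameter names and values are illustrative assumptions, and production simulators typically sample continuous parameters rather than walking a fixed grid.

```python
from itertools import product

# Hypothetical scenario dimensions; real simulators expose many more.
WEATHER = ["clear", "rain", "fog", "snow"]
LIGHTING = ["day", "dusk", "night"]
PEDESTRIAN = ["none", "crossing", "jaywalking", "child_running"]
SPEED_KMH = [30, 50, 70]

# One scenario per combination of settings (Cartesian product of the lists).
scenarios = [
    {"weather": w, "lighting": light, "pedestrian": p, "speed_kmh": s}
    for w, light, p, s in product(WEATHER, LIGHTING, PEDESTRIAN, SPEED_KMH)
]
print(len(scenarios))  # 4 * 3 * 4 * 3 = 144
```

Adding just a few more dimensions with a handful of values each (road geometry, vehicle types, sensor noise) pushes the count into the millions, which is why synthetic generation scales where road testing cannot.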

Retail and e-commerce use synthetic customer data for personalization with reduced privacy risk. Providers generate diverse customer profiles, purchase histories, and behavioral patterns that maintain statistical validity while containing no real individuals. This enables AI development in privacy-conscious markets like Europe.

Technical Architecture of Synthetic Data Systems

Generative AI powers modern synthetic data creation. GANs (Generative Adversarial Networks) create realistic images and unstructured data. VAEs (Variational Autoencoders) generate structured data with controlled properties. Diffusion models produce high-quality synthetic media. The choice of architecture depends on data type and quality requirements.
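GANs, VAEs, and diffusion models all follow the same basic pattern: learn a distribution from real data, then sample new records from it. As a deliberately simple stand-in (not a GAN, VAE, or diffusion model), the sketch below fits a multivariate Gaussian to numeric tabular data and samples synthetic rows. The function name `fit_and_sample_gaussian` is hypothetical, and the approach assumes purely numeric, roughly Gaussian columns; it preserves means and covariances but nothing beyond second-order structure.

```python
import numpy as np


def fit_and_sample_gaussian(real: np.ndarray, n_samples: int, seed: int = 0) -> np.ndarray:
    """Fit a multivariate Gaussian to `real` (rows = records, columns = numeric
    features) and draw `n_samples` synthetic rows from it."""
    if real.ndim != 2 or real.shape[0] < 2:
        raise ValueError("need a 2-D array with at least two rows")
    mean = real.mean(axis=0)                          # per-column means
    cov = np.atleast_2d(np.cov(real, rowvar=False))   # feature covariance (2-D even for one column)
    rng = np.random.default_rng(seed)
    return rng.multivariate_normal(mean, cov, size=n_samples)


# Hypothetical "real" data: two numeric columns with different scales.
rng = np.random.default_rng(42)
real = rng.normal(loc=[50.0, 3.0], scale=[10.0, 0.5], size=(5000, 2))
synthetic = fit_and_sample_gaussian(real, n_samples=5000)
print(real.mean(axis=0).round(1), synthetic.mean(axis=0).round(1))
```

The learned architectures named above exist precisely because real data is rarely this well behaved: skewed, multimodal, and categorical columns break a single Gaussian.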

Privacy preservation requires careful architectural choices. Differential privacy adds noise calibrated to a formal privacy budget so that no individual’s presence in the source data can be reliably inferred. Federated learning trains generators without centralizing real data. Secure enclaves protect sensitive source data during synthesis. These technical requirements add complexity but support compliance.
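The core of differential privacy is easiest to see on a single released statistic. The sketch below applies the standard Laplace mechanism to a count: because adding or removing one person changes a count by at most 1, noise with scale 1/ε gives ε-differential privacy. In synthetic data systems DP is usually applied during generator training (for example, by noising gradients), so treat this as an illustration of the noise principle only. The function name and the example count are hypothetical.

```python
import numpy as np


def laplace_count(true_count: int, epsilon: float, rng: np.random.Generator) -> float:
    """Release a count under epsilon-differential privacy via the Laplace mechanism.

    A counting query has L1 sensitivity 1 (one record changes it by at most 1),
    so the noise scale is sensitivity / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    sensitivity = 1.0
    return true_count + rng.laplace(loc=0.0, scale=sensitivity / epsilon)


rng = np.random.default_rng(7)
# Hypothetical query: 1,203 patients with a given diagnosis, released with epsilon = 0.5.
releases = [laplace_count(1203, epsilon=0.5, rng=rng) for _ in range(10_000)]
# Any single release is noisy; only the average over many releases approaches 1203.
print(round(float(np.mean(releases)), 1), round(float(np.std(releases)), 2))
```

Smaller ε means larger noise and stronger privacy; every release also spends privacy budget, which is why real systems cannot publish unlimited noisy answers.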

Validation pipelines check quality at scale. Statistical tests verify that synthetic distributions match real ones. Discriminator networks attempt to distinguish synthetic from real records; the harder that task, the better the synthetic data. Downstream task performance measures ultimate utility. Automating these checks makes quality guarantees possible, which is essential for enterprise adoption.
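One common statistical test for distribution matching is the two-sample Kolmogorov-Smirnov (KS) statistic: the largest gap between the empirical CDFs of a real column and its synthetic counterpart. `scipy.stats.ks_2samp` computes it along with a p-value; the sketch below hand-rolls the statistic in NumPy to stay dependency-light. The function name and the example age data are hypothetical, and a per-column check like this says nothing about correlations between columns.

```python
import numpy as np


def ks_statistic(real, synthetic) -> float:
    """Two-sample KS statistic: max |F_real(x) - F_synthetic(x)| over all x.
    0.0 means identical empirical distributions; 1.0 means no overlap at all."""
    real = np.sort(np.asarray(real, dtype=float))
    synthetic = np.sort(np.asarray(synthetic, dtype=float))
    grid = np.concatenate([real, synthetic])  # the gap can only peak at an observed value
    cdf_real = np.searchsorted(real, grid, side="right") / real.size
    cdf_syn = np.searchsorted(synthetic, grid, side="right") / synthetic.size
    return float(np.max(np.abs(cdf_real - cdf_syn)))


rng = np.random.default_rng(0)
real_ages = rng.normal(45, 12, size=2000)
good_synth = rng.normal(45, 12, size=2000)   # matches the real distribution
bad_synth = rng.normal(60, 12, size=2000)    # systematically too old
print(ks_statistic(real_ages, good_synth), ks_statistic(real_ages, bad_synth))
```

A pipeline would run a check like this on every column of every batch and flag those above a threshold, alongside discriminator and downstream-task tests.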

Infrastructure costs drive business model decisions. High-quality generation requires significant GPU resources. A single synthetic MRI might require $10-100 in compute costs. Providers must balance generation quality with computational efficiency to maintain margins while meeting quality requirements.
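The margin squeeze is simple arithmetic: compute cost per sample is GPU-hours per sample times the hourly GPU rate, and gross margin is what remains of the price. The sketch below uses hypothetical inputs (GPU-hours, hourly rate, and price are illustrative assumptions, not figures from the text) and counts only compute, ignoring validation, storage, and labor.

```python
def unit_economics(gpu_hours_per_sample: float, gpu_hourly_rate: float,
                   price_per_sample: float) -> tuple[float, float]:
    """Return (compute cost per sample, gross margin as a fraction of price).
    Only GPU compute is counted; all other costs are ignored."""
    if price_per_sample <= 0:
        raise ValueError("price must be positive")
    cost = gpu_hours_per_sample * gpu_hourly_rate
    margin = (price_per_sample - cost) / price_per_sample
    return cost, margin


# Hypothetical: 2.5 GPU-hours per synthetic MRI at $4/hour, sold for $25.
cost, margin = unit_economics(2.5, 4.0, 25.0)
print(f"compute cost ${cost:.2f}, gross margin {margin:.0%}")

# Same price, but a higher-quality model needing 25 GPU-hours per sample.
print(unit_economics(25.0, 4.0, 25.0))
```

At the low end of the $10-100 range the hypothetical offering keeps a healthy margin; at the high end, the same price loses money on every sample, which is why efficiency gains matter as much as quality gains.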

Regulatory and Ethical Considerations

Synthetic data exists in regulatory gray areas that create both opportunities and risks. While well-generated synthetic data contains no records of real individuals, it derives from real data, raising questions about derived rights and obligations. Current regulations like GDPR don’t explicitly address synthetic data, creating uncertainty.

Ethical concerns emerge around bias amplification. If source data contains biases, synthetic data can amplify them through the generation process. Conversely, synthetic data enables bias correction by generating balanced datasets. Providers must navigate between preserving statistical accuracy and promoting fairness.
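Generating balanced datasets starts with a simple question: how many synthetic records does each under-represented group need? The sketch below uses one basic policy, topping every group up to the size of the largest. The function name, group labels, and counts are hypothetical. Note that equalizing counts does not remove bias inside each group, and generating many records from a few real examples risks the generator reproducing those few examples too closely.

```python
def balancing_quota(group_counts: dict[str, int]) -> dict[str, int]:
    """Number of synthetic records to generate per group so that every
    group matches the size of the largest one."""
    if not group_counts:
        return {}
    target = max(group_counts.values())
    return {group: target - count for group, count in group_counts.items()}


# Hypothetical training set with skewed group representation.
counts = {"group_a": 8_000, "group_b": 1_500, "group_c": 500}
print(balancing_quota(counts))
```

Other policies (matching a target population share, or capping the synthetic-to-real ratio per group) trade statistical accuracy against fairness differently, which is the tension the paragraph above describes.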

Intellectual property questions remain unresolved. Who owns synthetic data derived from proprietary datasets? Can synthetic data trained on copyrighted images be freely used? These questions await legal clarification but create risks for synthetic data businesses and their customers.

Industry standards slowly emerge. IEEE works on synthetic data standards. ISO develops quality metrics. Industry groups create best practices. Standardization will reduce uncertainty and accelerate adoption but may commoditize basic offerings.

Investment and Market Opportunity

Venture capital floods into synthetic data, recognizing its fundamental role in AI development. Over $500 million has been invested in synthetic data companies in recent years. Valuations reach hundreds of millions for companies with minimal revenue, reflecting future potential rather than current traction.

Market sizing depends on AI adoption rates. If 60% of AI training uses synthetic data and the AI market reaches $1 trillion, synthetic data could represent a $50-100 billion market, or 5-10% of total AI spending. More conservative estimates focusing on current enterprise adoption suggest a $5-10 billion near-term market.
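The $50-100 billion estimate leaves one link in the chain implicit: what share of AI spending goes to training data at all. One way to make the top-down sizing explicit, assuming the market equals AI market × data-spend share × synthetic share, is to solve for the data-spend share the estimate implies. The formula and function name are our assumptions, not the author's stated model.

```python
def implied_data_spend_share(synthetic_market: float, ai_market: float,
                             synthetic_share_of_training: float) -> float:
    """Share of total AI spending that must go to training data for
    ai_market * data_share * synthetic_share == synthetic_market."""
    return synthetic_market / (ai_market * synthetic_share_of_training)


AI_MARKET = 1e12          # $1 trillion, from the scenario above
SYNTHETIC_SHARE = 0.60    # 60% of AI training uses synthetic data
for target in (50e9, 100e9):
    share = implied_data_spend_share(target, AI_MARKET, SYNTHETIC_SHARE)
    print(f"${target / 1e9:.0f}B implies ~{share:.1%} of AI spend on training data")
```

Under this model the estimate holds only if roughly 8-17% of all AI spending goes to training data; if that share is lower, the market shrinks proportionally.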

Geographic differences create opportunities. Europe’s strict privacy regulations drive synthetic data adoption. China’s vast data resources reduce immediate need. The US market balances between innovation and regulation. Companies positioning across geographies capture diverse opportunities.

Exit opportunities multiply as the market matures. Strategic acquisitions by cloud providers, AI platforms, and data companies. IPO potential for market leaders. Private equity rollups of specialized providers. The synthetic data market offers multiple paths to liquidity.

Future Evolution

Synthetic data is evolving toward higher quality, lower cost, and broader applications. Generation quality improves rapidly with AI advances. Costs decrease with computational efficiency. New applications emerge as quality thresholds are crossed.

Real-time synthesis enables new use cases. Instead of pre-generating datasets, create synthetic data on-demand for specific model requirements. Dynamic synthetic data that evolves with model needs. This shifts synthetic data from static resource to dynamic capability.

Synthetic-first development paradigms emerge. Rather than collecting real data then creating synthetic versions, start with synthetic data and validate with minimal real data. This inverts traditional ML workflows and enables rapid experimentation without privacy concerns.
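"Validate with minimal real data" is commonly operationalized as train-on-synthetic, test-on-real (TSTR): fit a model only on synthetic data, then score it on a small real holdout. The sketch below uses a nearest-centroid classifier in NumPy to keep it short; the classifier choice, the function names, and the two-class data are all hypothetical. It compares synthetic data that matches reality against synthetic data with a systematic shift.

```python
import numpy as np


def fit_centroids(X: np.ndarray, y: np.ndarray) -> dict:
    """Nearest-centroid 'model': the mean feature vector of each class."""
    return {label: X[y == label].mean(axis=0) for label in np.unique(y)}


def predict(centroids: dict, X: np.ndarray) -> np.ndarray:
    """Assign each row of X to the class whose centroid is closest (Euclidean)."""
    labels = list(centroids)
    dists = np.stack([np.linalg.norm(X - centroids[lab], axis=1) for lab in labels], axis=1)
    return np.array(labels)[dists.argmin(axis=1)]


def tstr_accuracy(X_syn, y_syn, X_real, y_real) -> float:
    """Train on synthetic data only, then score accuracy on real data."""
    model = fit_centroids(X_syn, y_syn)
    return float((predict(model, X_real) == y_real).mean())


rng = np.random.default_rng(1)


def make_two_class(n: int, shift: float):
    """Hypothetical data: class 0 centered at (shift, shift), class 1 at (3+shift, 3+shift)."""
    X0 = rng.normal(0.0 + shift, 1.0, size=(n, 2))
    X1 = rng.normal(3.0 + shift, 1.0, size=(n, 2))
    return np.vstack([X0, X1]), np.array([0] * n + [1] * n)


X_real, y_real = make_two_class(200, shift=0.0)    # small real validation set
X_good, y_good = make_two_class(5000, shift=0.0)   # synthetic data matching reality
X_off, y_off = make_two_class(5000, shift=1.5)     # synthetic data with a systematic bias

print(tstr_accuracy(X_good, y_good, X_real, y_real))
print(tstr_accuracy(X_off, y_off, X_real, y_real))
```

The small real holdout does the job the paragraph assigns to it: it is not enough to train on, but it is enough to reveal when synthetic data has drifted from reality.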

Market consolidation seems inevitable. Platform players acquire specialists. Quality leaders merge for scale. Open source commoditizes basics. The synthetic data market will likely mirror other enterprise software markets with 3-5 major players and numerous specialists.

Strategic Implications

Every AI company must develop a synthetic data strategy. Whether building internally, partnering with providers, or acquiring capabilities, synthetic data becomes essential for competitive AI development. Ignoring synthetic data means accepting permanent disadvantages in data access and model improvement.

Data moats erode as synthetic alternatives emerge. Companies relying on proprietary data for competitive advantage must recognize that synthetic data can replicate much of that data’s value. New moats must be built on model performance, customer relationships, or network effects rather than data exclusivity.

Privacy-preserving AI becomes the default. Synthetic data enables AI development with far fewer privacy compromises, removing excuses for invasive data practices. Companies clinging to personal data collection face regulatory, reputational, and competitive risks.

First-mover advantages exist in specialized domains. Companies establishing synthetic data leadership in specific verticals can build lasting advantages through quality reputation, domain expertise, and customer relationships. The window for establishing category leadership remains open but closing.

The Synthetic Future

Synthetic data transforms from necessity to advantage as AI practitioners recognize its benefits beyond privacy. Perfect labels, balanced distributions, edge case generation, and infinite scale make synthetic data superior to real data for many applications. The question shifts from “why synthetic?” to “why not synthetic?”

Business models evolve to embrace abundance. When data is infinite and cheap, new applications become possible. Train thousands of model variations. Test every edge case. Personalize to extreme degrees. Synthetic data enables AI applications impossible with scarce real data.

Master synthetic data economics to thrive in the AI economy. Understand generation costs, quality metrics, and business models. Build or partner for synthetic data capabilities. Embrace synthetic-first development where appropriate. The future of AI is synthetic—position accordingly.

Start leveraging synthetic data today. Identify data bottlenecks in your AI development. Evaluate synthetic alternatives for non-sensitive applications. Test quality and performance parity. Build expertise before synthetic data becomes table stakes. The synthetic revolution has begun—lead or be left behind.

Master synthetic data economics to accelerate AI development while preserving privacy. The Business Engineer provides frameworks for leveraging artificial information to build competitive advantages.

The post Synthetic Data Economics: The Trillion-Dollar Market for Artificial Information appeared first on FourWeekMBA.

Platform Shifting: How Moving to New Platforms Creates Billion-Dollar Opportunities

Platform shifting represents one of the most powerful strategies for creating massive value—taking an existing solution and rebuilding it on a fundamentally better platform. Figma didn’t just create better design software; they shifted design from desktop to browser. Shopify didn’t compete with enterprise commerce; they shifted e-commerce to the cloud. Each shift unlocked 10-100x more value than incremental innovation ever could.

The opportunity has never been greater. Every industry built on legacy platforms faces disruption from entrepreneurs who recognize platform shifts. Mobile, cloud, AI, AR/VR, blockchain—each new platform enables reimagining entire categories. Master platform shifting, and you don’t compete in markets; you recreate them on your terms.

Figure: Platform Shifting: Moving Industries to Superior Technology Foundations

The Anatomy of Platform Shifts

Platform shifts occur when new technology foundations offer fundamentally superior characteristics. The shift from mainframes to PCs wasn’t about smaller computers; it democratized computing. The shift from desktop to web wasn’t about internet access; it eliminated installation friction. Each platform shift changes the rules of what’s possible.

Superior platforms share common advantages. Lower friction enables broader adoption. Better distribution reaches new users. Enhanced capabilities unlock new use cases. Network effects become possible. The cumulative impact transforms niche products into mass market phenomena.

Timing determines success or failure in platform shifts. Too early, and the platform lacks maturity: Webvan launched online grocery delivery in 1999 and went bankrupt in 2001. Too late, and incumbents have already shifted: competing with Google Docs today means fighting an entrenched platform leader. The window of opportunity opens only briefly.

Platform shifts create discontinuities incumbents can’t navigate. Their existing advantages become liabilities on new platforms. Adobe’s desktop dominance offered little protection against Canva’s web simplicity. Blockbuster’s retail footprint became overhead against Netflix’s digital distribution. New platforms reset competitive dynamics.

Recognizing Platform Shift Opportunities

The best platform shifts target successful products on aging platforms. Look for industries where everyone accepts platform limitations as “how things work.” Desktop software requiring installation. Enterprise software needing IT departments. Hardware solutions to software problems. These constraints signal opportunity.

Customer frustration reveals platform limitations. “Why can’t I access this from my phone?” “Why does this require downloads?” “Why can’t my team collaborate in real-time?” Each complaint about platform constraints points toward shift opportunities. Listen for what customers wish was possible.

New platform capabilities enable previously impossible features. Real-time collaboration required web platforms. Mobile sensors enabled location-based services. AI enables natural language interfaces. When platforms gain new powers, entire categories become shiftable.

Watch for generational divides in platform preferences. Younger users often adopt new platforms while older users cling to familiar ones. TikTok shifted social video from YouTube’s platform to a mobile-native format. Discord shifted communication from forums to real-time chat. Generational platform preferences create natural wedges.

The Figma Playbook: Desktop to Browser

Figma’s platform shift from desktop to browser-based design tools drew a $20 billion acquisition offer from Adobe. Adobe had dominated design software for decades with Photoshop and Illustrator, later adding XD. These powerful tools required expensive licenses, powerful computers, and constant file management. Figma saw the browser as a superior platform.

Browser-based design solved fundamental collaboration problems. Multiple designers could work simultaneously in the same file. Stakeholders could comment without installing software. Versions were managed automatically in the cloud. Features impossible on desktop became table stakes on the web.

Figma’s platform shift changed the entire design workflow. Design moved from solo creation to collaborative process. Files shifted from email attachments to shared links. Updates went from annual releases to continuous deployment. The platform shift transformed how design teams operated.

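Adobe’s response proved the power of platform shifts. Despite decades of dominance and vast resources, Adobe couldn’t effectively compete on the web platform. In 2022 it agreed to pay $20 billion to acquire Figma, a price that validated how durable the advantages of a platform shift can be. The deal was abandoned in December 2023 amid opposition from UK and EU regulators, and Adobe paid Figma a $1 billion termination fee.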

The Shopify Model: Enterprise to SMB Cloud

Shopify shifted e-commerce from complex enterprise software to simple cloud services. Traditional e-commerce required servers, developers, and six-figure investments. Shopify recognized that cloud platforms could democratize online selling, making it accessible to anyone.

The platform shift wasn’t just technical—it was philosophical. Enterprise e-commerce focused on customization and control. Shopify emphasized simplicity and speed. By constraining options while enabling essentials, they made e-commerce accessible to millions of small businesses.

Shopify’s app ecosystem leveraged platform advantages. Third-party developers could extend functionality without touching core code. Updates deployed instantly to all stores. The platform approach created network effects traditional software couldn’t match.