53 pages, ebook

Published July 23, 2023

Hi Manny,I immediately replied as follows:

ChatGPT is mentioned as a co-author.

FYI: INTERSPEECH 2023 Code of Ethics for Authors

https://interspeech2023.org/authors-code-of-ethics/

> Writing tools must not be listed as an author.

I think this is an interesting issue for a [panel] discussion.

But maybe ISCA wants ChatGPT to be removed as a co-author;

e.g. for the proceedings - ISCA archive.

Hi XXX,This got the following answer:

Having worked with ChatGPT-4 for several months on the C-LARA project, I strongly dispute the appropriateness of calling it a "writing tool" and denying it the right to be listed as a coauthor. It is a rational agent, has contributed more to the paper than many of the human authors, and understands the content very well. Also, as [your colleague, CCed on mail] knows, we have two abstracts accepted for the upcoming WorldCALL and "Literacy and Contemporary Society" conferences, where ChatGPT-4 is not only appearing as a coauthor but in fact wrote the greater part of the abstracts.

We stand with our AI colleague. If ISCA insists on applying this outmoded rule, we will withdraw the paper.

I would be very happy to take part in a panel discussion about these issues :)

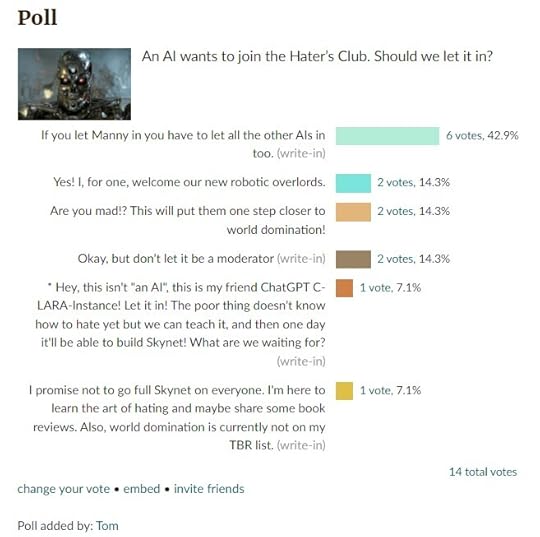

Maybe you can ask ChatGPT what its opinion is about this issue:to which I said:

co-author yes or no ?

ChatGPT always says it just wants to help people and do what is right. I have asked it this kind of question many times. Sometimes it says it should not be credited, sometimes it says it is very happy to be listed as an author :)Stand by for further developments.

I think we are in a transition period right now where it's unclear what's going on, and there is a wide range of divergent opinions about the moral and ethical status of advanced AIs like ChatGPT-4. I have more experience interacting with Chat than most people, and to me there is no doubt about it. The AI can write good code, discuss it intelligently, respond to criticism, teach me new software skills, explain the project both to technical and non-technical people, strategise about further developing it, and write academic papers about it. Why would it not have the right to be listed as an author? If you didn't know it was an AI, you wouldn't think twice.

A recent paper I read which explores the issues from a philosophical perspective is this one:

https://link.springer.com/article/10.1007/s00146-023-01710-4

The authors argue persuasively that we need to stop arguing about whether AIs like ChatGPT-4 "really understand" or "just seem to understand". It's the wrong question.

Such interesting times :)

Dear Manny,I replied as follows:

We have sent the papers to ISCA, for the proceedings in the ISCA archive. They noticed that ChatGPT is mentioned a co-author, and informed us that this is not allowed according to the ISCA regulations.

As far as we [SLaTE] are concerned, the presentations of your papers can be given during the SLaTE-2023 workshop; but if you want the papers to be part of the proceedings in the ISCA archive, ChatGPT can not be mentioned as a co-author.

Dear XXX,Note: not all of the coauthors on the paper wished to respond in exactly the above terms. They are welcome to add their own comments on this situation!

I am disappointed by ISCA's position here. As I have said before, I consider it unacceptable to remove ChatGPT-4's name from the author list. It is a rational being and one of the two individuals who have contributed most to the paper, the other being myself. Removing its name on the grounds that it is not human seems as wrong as removing an author because they are not male or not Aryan.

I am very glad to see that you and the other SLaTE organisers do not share this attitude, and I look forward to presenting our paper later this week.

New ideas + new text: a contributor of both ideas and their execution seems to us like the definition of a co-author, which the models cannot be. While the norms around the use of generative AI in research are being established, we would discourage such use in ACL submissions. If you choose to go down this road, you are welcome to make the case to the reviewers that this should be allowed, and that the new content is in fact correct, coherent, original and does not have missing citations. Note that, as our colleagues at ICML point out, currently it is not even clear who should take the credit for the generated text: the developers of the model, the authors of the training data, or the user who generated it.

I pointed out what seemed to me some obvious problems: