The Benefits of Raising Data Center IT Equipment Air Inlet Temperature Case Study

UC Berkeley Extension Building Systems and Technology ROI Project Assignment

by Darryl L. Wortham darrylwortham@gmail.com – 28 November 2015 (v1.0)

Data Center Temperature Inefficiency Situation and the Problem to be Solved

As data centers consume a tremendous amount of energy to meet growing Internet demand, efficiency is becoming more critical. In the United States, data centers account for about 3 percent of total power consumption, a share that is quickly growing toward 4 percent. Governments and municipalities are pressuring data center operators to be greener and to lower their Power Usage Effectiveness (PUE) by using less energy and water. Many have passed legislation requiring USGBC's Leadership in Energy and Environmental Design (LEED) and/or EPA's Energy Star certifications. There is also financial pressure to be more efficient, as facility and data center managers are becoming responsible for paying the utility bills.

The cooling plant offers one of the biggest opportunities to save energy: chillers account for 23% of data center energy use, Computer Room Air Conditioners (CRAC) / Computer Room Air Handlers (CRAH) for 15%, and humidifiers for 3%. The cooling plant is also a big consumer of municipal potable water, and chilled water systems are among the largest facility power consumers in a data center. In most data centers today, IT inlet temperatures range from 65 to 70°F. Older data centers that have not been modernized, or that house a lot of legacy equipment, often use a set point of 60°F or lower.

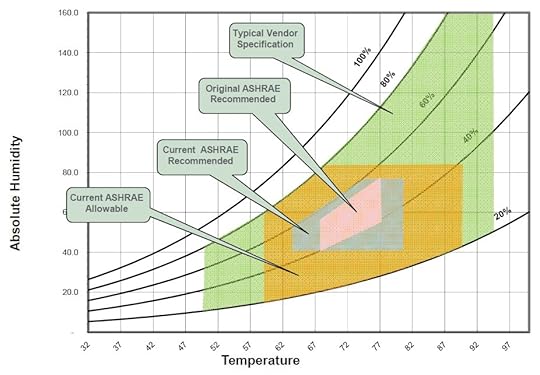

To address the growing concerns about energy efficiency, particularly the cooling component, ASHRAE's (American Society of Heating, Refrigerating and Air-Conditioning Engineers) Technical Committee (TC) 9.9 has been evolving its Thermal Guidelines toward wider temperature and humidity ranges. In the original 2004 edition of ASHRAE's Thermal Guidelines for Data Processing Environments, the recommended dry-bulb temperature range was 68 to 77°F.

Increase IT Inlet Temperature Set-Point Proposal

In 2008, ASHRAE expanded the recommended ranges for temperature and humidity set-points, enabling reduced mechanical cooling and increased economizer hours. One of the most obvious methods of reducing energy and water use is to increase the IT inlet temperature. It is also quick and straightforward to implement, reaping huge returns with no capital expenditure (CAPEX) and negligible operating expenditure (OPEX).

With this broader temperature range, data centers can operate in economizer mode more often, or operate with fewer CRACs, or with fewer CRAHs plus chillers (chillers are usually required when CRAHs are the cooling system). Running fewer CRAC units also reduces the amount of toxic refrigerant chemicals on site and the cost of disposing of them.

Figure 1 - ASHRAE 2011 TC 9.9 inlet temperature recommendations, from "High Efficiency Indirect Air Economizer-Based Cooling for Data Centers" by Schneider Electric

The 2008 ASHRAE TC 9.9 guidelines, as updated in 2011, recommend 64.4 to 80.6°F and allow 59 to 90°F for class A1 (the most tightly controlled data center class) and 41 to 113°F for class A4 (the widest class). IT equipment manufacturers have supported these allowable ranges, and even higher temperatures, for many years with the same mean time to failure (MTTF). Servers are tougher than they appear. The largest data center operators, such as Google, Amazon, and Facebook, have been running many of their data centers at the upper recommended levels for years.
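As a minimal sketch, the envelopes quoted above can be turned into a simple check for a single inlet reading. The function name, the return labels, and the choice of classes included are illustrative assumptions, not part of any ASHRAE publication or DCIM product.

```python
# Hypothetical helper: classify an IT inlet reading (degrees F) against
# the envelopes quoted in the text.
RECOMMENDED_F = (64.4, 80.6)
ALLOWABLE_F = {"A1": (59.0, 90.0), "A4": (41.0, 113.0)}


def classify_inlet(temp_f, ashrae_class="A1"):
    """Return 'recommended', 'allowable', or 'out of range'."""
    low, high = RECOMMENDED_F
    if low <= temp_f <= high:
        return "recommended"
    low, high = ALLOWABLE_F[ashrae_class]
    if low <= temp_f <= high:
        return "allowable"
    return "out of range"


print(classify_inlet(78.0))        # recommended
print(classify_inlet(85.0))        # allowable
print(classify_inlet(95.0, "A4"))  # allowable
```

The same 80°F target used throughout this study sits inside the recommended range, which is why no class-specific allowance is needed to adopt it.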

ASHRAE also widened the recommended humidity range (non-condensing) beyond its 2004 recommendation, to a lower limit of 41.9°F dew point (DP) and an upper limit of 60% relative humidity (RH) or 59°F DP. In 2008, the allowable range increased to 20 to 80% RH, with a maximum dew point of 63°F.

This whitepaper recommends raising the baseline temperature (at the IT equipment air intake) inside modern data centers, which saves money by reducing the energy used for air conditioning and allows expanded use of outside air for cooling. Additional benefits are reduced water use and fewer mechanical systems to maintain. This can be accomplished at minimal cost, producing a very large and relatively immediate return on investment.

Return On Investment

Data hall environment scenario: 1) 10 rows, 2) 30 racks / row, 3) 7’ tall racks, 4) 48 servers / rack, 5) 40Gbps top of rack network switching, 6) 10 kW / rack, and 7) 10 CRAC units

Raising the chiller set-point temperature requires no CAPEX. It can be accomplished in a matter of hours or even minutes. In addition, the operating and maintenance (O&M) expenses to implement this change are very low.

Here are the calculation assumptions:

4 FTEs at 10% for 4 weeks to create and approve the change order:

4 x $120K/year x 10% x 4 weeks / 52 weeks/year = $3,693 (rounded up)

2 FTEs at 10% for 6 weeks to implement change order:

2 x $120K/year x 10% x 6 weeks / 52 weeks/year = $2,770 (rounded up)

2 FTEs at 25% for 3 weeks to remove (take offline) CRAC units and place them into a redundant configuration, maintenance backup mode, or storage for future expansion:

2 x $100K/year x 25% x 3 weeks / 52 weeks/year = $2,885 (rounded up)
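The three labor figures can be reproduced with a small helper, assuming a 52-week year and rounding up to the whole dollar. The study does not state its rounding rule; rounding up is an assumption that happens to match all three figures. `labor_cost` is a hypothetical name.

```python
WEEKS_PER_YEAR = 52  # assumption: salaries prorated over a 52-week year


def labor_cost(ftes, salary_per_year, percent_time, weeks):
    """Labor cost in whole dollars, rounded up (integer math, no float error)."""
    numerator = ftes * salary_per_year * percent_time * weeks
    denominator = 100 * WEEKS_PER_YEAR
    return -(-numerator // denominator)  # integer ceiling division


change_order = labor_cost(4, 120_000, 10, 4)    # create and approve
implementation = labor_cost(2, 120_000, 10, 6)  # implement the change
crac_removal = labor_cost(2, 100_000, 25, 3)    # take CRACs offline
print(change_order + implementation)  # 6463
print(change_order + crac_removal)    # 6578
```

Integer arithmetic is used so that the ceiling is not thrown off by floating-point error in values such as 10%.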

A conventional ROI figure is not very meaningful for this business case, since there is no CAPEX and no ongoing OPEX is needed after such a quick implementation. To best explain the monetary return, below are the annual savings from raising the temperature set-point and the time needed to pay off the one-time implementation expense:

Increase the IT inlet temperature from 70 to 80°F with a traditional plate-and-frame heat exchanger, keeping the existing cooling systems:

19% annual saving!

The implementation cost of $6,463 ($3,693 + $2,770) is recovered within 2 to 3 months, depending on the computer room load and utility rate.

After that, you will realize the full 19% annual saving, since there is no ongoing OPEX.

Increase the IT inlet temperature from 70 to 80°F on a self-contained system, keeping the existing cooling systems:

22% annual saving!

The implementation cost of $6,463 ($3,693 + $2,770) is recovered within 2 to 3 months, depending on the computer room load and utility rate.

After that, you will realize the full 22% annual saving, since there is no ongoing OPEX.

Increase the IT inlet temperature from 70 to 80°F while removing 20% of the existing cooling systems:

In the small computer room (data hall) scenario, this means going from 10 to 8 operating CRAC units (taking 2 units offline).

20% annual saving!

The implementation cost of $6,578 ($3,693 + $2,885) is recovered within 1 to 2 months, depending on the computer room load and utility rate. The payback period is shorter than in the two scenarios above because the implementation is much quicker (2 versus 5 weeks).

After that, you will realize the full 20% annual saving, since there is no ongoing OPEX.

Moving from a tightly controlled 68°F with a variance of 1°F to a more loosely controlled 80°F with a variance of 2 to 3°F:

This yields an additional 3% annual savings in each of the three scenarios above.
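A minimal payback sketch for the scenarios above, assuming each savings percentage applies to an annual cooling energy bill. The $150,000 bill is a hypothetical placeholder, not a figure from this study, and the sketch ignores the difference in rollout duration that shortens the third scenario's payback.

```python
# Hypothetical payback sketch for the three scenarios above.
# ASSUMPTION: each savings percentage applies to an annual cooling
# energy bill; the $150,000 below is a placeholder, not study data.

SCENARIOS = {
    # name: (one-time implementation cost in $, annual savings fraction)
    "plate-and-frame heat exchanger, 70 -> 80 F": (6_463, 0.19),
    "self-contained system, 70 -> 80 F": (6_463, 0.22),
    "70 -> 80 F with 2 of 10 CRACs offline": (6_578, 0.20),
}
LOOSE_CONTROL_BONUS = 0.03  # extra annual savings from looser control


def payback_months(cost, annual_cooling_cost, savings_fraction):
    """Months of savings needed to recover a one-time cost."""
    monthly_savings = annual_cooling_cost * savings_fraction / 12
    return cost / monthly_savings


if __name__ == "__main__":
    annual_cooling_cost = 150_000  # hypothetical placeholder
    for name, (cost, savings) in SCENARIOS.items():
        tight = payback_months(cost, annual_cooling_cost, savings)
        loose = payback_months(cost, annual_cooling_cost,
                               savings + LOOSE_CONTROL_BONUS)
        print(f"{name}: {tight:.1f} months, {loose:.1f} with looser control")
```

Substituting the actual utility bill for the placeholder is what turns the "depending on load and utility rate" ranges into a single number for a given site.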

Temperature and humidity in a data center should not fluctuate rapidly; the temperature must stay consistent. It is important to measure intake (supply-side) temperatures with sensors at multiple places on each IT rack. Using Data Center Infrastructure Management (DCIM) software, with programmed controls tied to each sensor, is strongly recommended when expanding the environmental ranges.

The change order for the warmer inlet temperature needs to be executed slowly and predictably over time:

Increase the set-point by 2°F per week (1°F is a conservative option), on the same day and at the same time each week.

Review the reported temperature metrics at the same locations each time.

Execute the back-out plan if you do not see the expected results.

If it is too hot, the IT equipment fans will run in high mode, using more power and offsetting the savings in cooling energy; you will need to back out to the previous set-point.

Continue the process and stop once you reach 80°F.

If removing CRAC units, take one offline in the first week and monitor the temperatures, backing out if the expected results are not met; if the results are positive, take the second unit offline the following week.
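The weekly procedure above can be sketched as a small loop. This assumes a hypothetical measurement hook, `net_power_kw(setpoint)`, returning total facility power (IT plus cooling) observed after a week at a set-point. The loop advances one step per week and backs out to the previous set-point as soon as a step fails to lower net power, which is how rising server fan power would show up.

```python
def setpoint_schedule(start_f=70.0, target_f=80.0, step_f=2.0):
    """Weekly set-points from start_f up to target_f, inclusive."""
    points = [start_f]
    while points[-1] < target_f:
        points.append(min(points[-1] + step_f, target_f))
    return points


def run_rollout(schedule, net_power_kw):
    """Apply one set-point per week; return the set-point to keep.

    net_power_kw is a hypothetical hook returning total facility power
    (IT + cooling) measured after a week at the given set-point.
    """
    kept = schedule[0]
    baseline = net_power_kw(kept)
    for candidate in schedule[1:]:
        observed = net_power_kw(candidate)
        if observed >= baseline:
            # No net gain (e.g. server fans ramped up): back out and stop.
            return kept
        kept, baseline = candidate, observed
    return kept


if __name__ == "__main__":
    # Hypothetical weekly readings where fan power wins above 76 F.
    measured = {70: 1000, 72: 980, 74: 965, 76: 955, 78: 960, 80: 970}
    print(setpoint_schedule())                        # 2 F steps
    print(run_rollout(setpoint_schedule(), measured.get))
```

With the default 2°F step the schedule has five increments, and `step_f=1.0` gives the conservative ten-week variant. The same loop could walk a schedule of CRAC unit counts if the hook measured inlet temperatures instead of power.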

Additional benefits:

Variable Speed / Frequency Drives (VSD / VFD) on fans, pumps, chillers, and cooling towers, and in IT equipment such as servers, storage, and networking gear, further reduce power consumption. It is wasteful for fans to run at 100% when the workload is only 50%.

Mechanical, electrical, and plumbing (MEP) equipment occupies 10 to 20% of computer room space, so decreasing the number of CRACs or CRAHs frees more white space for income-producing computing systems (servers, storage, and networking gear).

Removing CRAH, CRAC, and chiller units also reduces the redundant MEP, maintenance, and warranties needed to support them.

In a fault-tolerant configuration such as N+2, a CRAC can be taken offline without losing N+1 redundancy. When the extra CRAC is no longer needed because of the higher temperature set-point, it can be used to cover maintenance activities that might otherwise risk downtime due to human error, with no additional CAPEX.

Relaxing humidity control can deliver substantial energy savings (this will be a part of my next study).

Evaporative cooling (direct or indirect) is much more efficient than mechanical cooling.

It is a more comfortable environment for technicians to work in at 80°F (shorts and a Hawaiian shirt) than at a cooler 65°F (jeans and a hoodie).

A facility with lower dependence on grid power and utility water is less likely to experience a failure due to loss of service from the provider.
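Two of the benefits above reduce to quick arithmetic. This sketch assumes the idealized fan affinity law (fan power proportional to the cube of fan speed). That law is not stated in this study and is an idealization, so real fans save somewhat less. The sketch also assumes a hypothetical data hall that needs 8 of its 10 installed CRAC units, which is an N+2 configuration.

```python
# Hypothetical sketch of two benefits above.
# ASSUMPTIONS: ideal fan affinity law (power ~ speed**3); a data hall
# that needs 8 of its 10 installed CRAC units (N = 8, i.e. N+2).


def fan_power_fraction(speed_fraction):
    """Ideal fan affinity law: power scales with the cube of speed."""
    return speed_fraction ** 3


def can_take_offline(installed, required, keep_spares=1):
    """True if one unit can go offline while keeping N+keep_spares."""
    return (installed - 1) - required >= keep_spares


if __name__ == "__main__":
    print(f"fan at 50% speed: {fan_power_fraction(0.5):.1%} of full power")
    print("take one of 10 CRACs offline (N=8):", can_take_offline(10, 8))
    print("take one of 9 CRACs offline (N=8):", can_take_offline(9, 8))
```

The cube law is why a VFD matters so much at partial load: halving fan speed ideally cuts fan power to one-eighth, not one-half.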

Best Practices to Mitigate Risks

Hotspots may harm equipment. Implement these best practices to eliminate or reduce hotspots:

Contained hot (return) / cold (supply) aisle layout, exhaust air plenum, and airflow management.

Hot and cold aisle containment with doors and/or curtains to limit mixing of cool air and exhaust.

Arrangement of cooling plenum, perforated floor tiles (if subfloor), and positioning of CRAC / CRAH units.

Plugging unused cable knockouts and holes, installing blanking panels in empty rack and server slots, and cleaning up messy, unstructured cabling.

Temperature sensors placed at the bottom, middle, and top of the front side of each rack (facing the cold aisle). You may need to average the readings, since the top inlet sensors will read warmer than the middle ones and much warmer than the bottom ones.

With increased inlet temperature, the hot aisle temperature needs to be monitored to ensure that the data center’s overall temperature does not exceed the design parameters for all its peripheral equipment, cables, fibers, power distribution units (PDUs), and network switches.

Use computational fluid dynamics (CFD) modeling to predict potential hotspots.
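As a minimal sketch of the three-sensor layout above, the helper below averages each rack's readings for trending. Its design choice, an assumption rather than the study's method, is to flag a hotspot from the warmest sensor, so averaging cannot hide a hot top inlet. The rack IDs and readings are hypothetical, and the 80.6°F limit is the upper recommended value quoted earlier.

```python
from statistics import mean

# Hypothetical sketch: per-rack inlet summary from three front-side
# sensors (bottom, middle, top), all in degrees F.
RECOMMENDED_MAX_F = 80.6  # upper recommended inlet limit quoted earlier


def summarize_racks(readings, limit_f=RECOMMENDED_MAX_F):
    """Map {rack_id: (bottom, middle, top)} to {rack_id: (average, hotspot)}.

    The average is for trending; the hotspot flag uses the warmest
    sensor, so a cool bottom reading cannot mask a hot top inlet.
    """
    return {
        rack: (mean(temps), max(temps) > limit_f)
        for rack, temps in readings.items()
    }


if __name__ == "__main__":
    sample = {  # hypothetical readings
        "row1-rack01": (72.0, 75.0, 78.0),
        "row1-rack02": (74.0, 78.0, 83.0),
    }
    for rack, (avg, hot) in summarize_racks(sample).items():
        print(f"{rack}: avg {avg:.1f} F{'  HOTSPOT' if hot else ''}")
```

In the sample, the second rack averages about 78.3°F, inside the recommended range, yet its top inlet is at 83°F; it is flagged only because the check looks at the maximum.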

A higher inlet temperature leaves a smaller window of time before IT inlet temperatures reach critical levels during a power outage. When cooling is lost, the temperature in a computer room can rise dramatically, by 10 to 15°F within minutes, giving operators less time to react to a power failure:

Use redundancy in all cooling systems and remove any single point of failure.

Maintain cooling plant operation via the uninterruptible power supply (UPS) circuit and a secondary backup power source (generators).

With an 80°F intake, the hot aisle exhaust will be 95 to 105°F (but it's a dry heat). This temperature needs to be controlled, since it is the hottest that technicians can safely tolerate for an extended period.

Set the appropriate alarm thresholds and notification delivery methods (e.g., email, text, page).
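The shrinking reaction window can be illustrated with a constant-rate model. This is a sketch under two assumptions. The 3°F-per-minute rise is a hypothetical placeholder in line with "10 to 15°F within minutes", not a measurement. The critical limit is taken as the 90°F class A1 allowable maximum quoted earlier.

```python
# Hypothetical sketch: minutes of ride-through after cooling is lost,
# assuming inlet air rises at a constant rate. The 3 F/min default is a
# placeholder, not a measurement.
A1_ALLOWABLE_MAX_F = 90.0  # class A1 allowable upper limit quoted earlier


def minutes_to_limit(start_f, limit_f=A1_ALLOWABLE_MAX_F, rise_f_per_min=3.0):
    """Minutes until inlet air reaches limit_f at a constant rate of rise."""
    if start_f >= limit_f:
        return 0.0
    return (limit_f - start_f) / rise_f_per_min


if __name__ == "__main__":
    for setpoint in (70.0, 80.0):
        print(f"{setpoint:.0f} F start: {minutes_to_limit(setpoint):.1f} min")
```

Whatever the actual rate, moving the set-point from 70 to 80°F halves the headroom to a 90°F limit and therefore halves the reaction time. This is why the alarm thresholds and backup power for the cooling plant matter more after the change.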

The water vapor in the air protects IT equipment from dangerous static electricity. Humidity that is too low may cause electrostatic discharge that harms internal electronic components; humidity that is too high may cause shorts within electronic systems or corrosion:

Humidity sensors placed at the top of each rack, inside the enclosure one-third of the way down from the top of each rack, or hanging at intervals between the rows in the cold aisle. An out-of-band management (OOBM) network may be required to connect all of the sensors and alarms to a central monitoring system (DCIM).

The CRAC system will need both a humidifier that adds water vapor and a dehumidifier that removes moisture. It is vital not to operate a data center at higher humidity levels than recommended.

Dew points need to be closely monitored.
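Many sensors report temperature and relative humidity rather than dew point, so a monitoring system often has to derive it. The sketch below uses a common Magnus-type approximation (coefficients 17.62 and 243.12°C), which is not part of this study. It then checks the result against the recommended envelope quoted earlier: 41.9°F dew point at the low end, 60% RH or 59°F dew point at the high end. The function names are hypothetical.

```python
import math

# Magnus-type approximation; a widely used choice of coefficients
# (B is in degrees C), adequate for monitoring purposes.
MAGNUS_A = 17.62
MAGNUS_B_C = 243.12


def dew_point_f(temp_f, rh_percent):
    """Approximate dew point (F) from dry-bulb temperature (F) and RH (%)."""
    if not 0 < rh_percent <= 100:
        raise ValueError("relative humidity must be in (0, 100]")
    t_c = (temp_f - 32) / 1.8
    gamma = math.log(rh_percent / 100) + MAGNUS_A * t_c / (MAGNUS_B_C + t_c)
    td_c = MAGNUS_B_C * gamma / (MAGNUS_A - gamma)
    return td_c * 1.8 + 32


def in_recommended_humidity(temp_f, rh_percent):
    """Envelope as quoted in the text: dew point from 41.9 F to 59 F,
    and relative humidity no higher than 60%."""
    dp_f = dew_point_f(temp_f, rh_percent)
    return 41.9 <= dp_f <= 59.0 and rh_percent <= 60.0


if __name__ == "__main__":
    for temp_f, rh in ((77.0, 50.0), (80.0, 20.0)):
        dp = dew_point_f(temp_f, rh)
        ok = in_recommended_humidity(temp_f, rh)
        print(f"{temp_f} F / {rh}% RH -> dew point {dp:.1f} F, in range: {ok}")
```

The second sample shows why dew point, not RH alone, needs watching at warmer set-points: 20% RH at 80°F puts the dew point below the 41.9°F lower limit.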

In economizer mode, direct outside air ("free" cooling) may contain pollutants:

Use filters to remove dust, dirt, and other pollutants before the air enters the data hall.

To utilize outside air in this case study scenario, you would replace the CRACs with CRAHs and chillers in economizer mode.

In winter months, the data center temperature thresholds may be lower, for example back down to 70°F, but the dew point will also need to be managed. If the outside air becomes too cold, warm air will need to be mixed in before it enters the data hall.

In winter months, you can also recirculate warm exhaust air from the data hall into office space.
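How much warm air to mix in can be estimated with a simple energy balance. This sketch assumes temperature-only (sensible) mixing of warm return air with cold outside air, where mix = f × return + (1 - f) × outside. It does not model humidity, so the dew point still has to be managed separately as noted above. The temperatures in the example are hypothetical.

```python
# Hypothetical sketch: fraction of warm return air to blend with cold
# outside air so the mixed supply reaches a target temperature.
# Simple sensible (temperature-only) mixing model:
#     mix = f * return_air + (1 - f) * outside_air
# Humidity and dew point are NOT modeled.


def return_air_fraction(outside_f, return_f, target_f):
    """Fraction f of return air needed for the mix to reach target_f."""
    if outside_f >= target_f:
        return 0.0  # outside air is already warm enough; no blending
    if return_f <= target_f:
        raise ValueError("return air must be warmer than the target")
    return (target_f - outside_f) / (return_f - outside_f)


if __name__ == "__main__":
    # Hypothetical winter day: 30 F outside, 95 F return, 70 F target.
    f = return_air_fraction(30.0, 95.0, 70.0)
    print(f"blend {f:.0%} return air with {1 - f:.0%} outside air")
```

Solving the mixing equation for f keeps the control target expressed as a supply temperature, which matches how the set-points in this study are stated.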

In conclusion, each 1% increase in inlet temperature yields about 1.4% in energy savings. Finally, as the window of operating temperature and humidity continues to widen, direct outside air cooling systems have the opportunity to further increase economizer-mode hours.
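The rule of thumb can be checked against the 70 to 80°F change used throughout this study. This sketch assumes that "percent increase" is measured on the Fahrenheit reading, which the text appears to imply. Note that the result depends on that temperature scale.

```python
def estimated_savings(old_f, new_f, savings_per_percent=1.4):
    """Fractional energy savings from the ~1.4%-per-1% rule of thumb.

    ASSUMPTION: the percent increase is taken on the Fahrenheit reading;
    the result would differ on another temperature scale.
    """
    percent_increase = (new_f - old_f) / old_f * 100
    return percent_increase * savings_per_percent / 100


print(f"{estimated_savings(70, 80):.0%}")  # 20%
```

A 10°F rise from 70°F is about a 14.3% increase, which the rule turns into roughly 20% savings, inside the 19 to 22% range of the three scenarios.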

The Trend Line

Since 2008, innovators in data center infrastructure have created many cooling technologies. The industry now recognizes that outside air can be used with economizers to vastly decrease mechanical cooling in data center implementations. In 2011, ASHRAE TC 9.9 issued a whitepaper that defines two new environmental classes and provides guidance to data center operators on how to move their normal operating environmental range outside the recommended envelope and closer to the allowable extremes. With greater use of economizers, we should expect air intake temperatures of 90 to 95°F, with no loss of performance or risk to IT equipment, in the near future.

References

Alger, Douglas, "The Art of the Data Center – A Look Inside the World's Most Innovative and Compelling Computing Environments," Pearson Education, 2013.

ASHRAE, “Technical Committee 9.9,” 2008.

Evans, Tony, "Humidification Strategies for Data Centers and Network Rooms," Schneider Electric, 2011.

Van Geet, Otto, "Trends in Data Center Design – ASHRAE Leads the Way to Large Energy Savings," NREL, June 24, 2014.

Rasmussen, Neil, “Implementing Energy Efficient Data Centers,” Schneider Electric, 2011.

The Green Grid, “Case Study: The ROI of Cooling System Energy Efficiency Upgrades,” 2011.

Torell, Wendy, "High Efficiency Indirect Air Economizer-based Cooling for Data Centers," Schneider Electric, 2013.

©2015 Darryl Lloyd Wortham. All rights reserved.