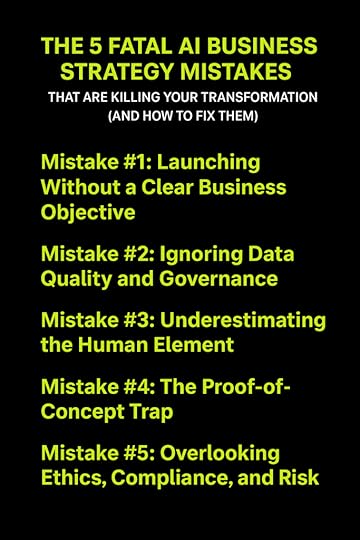

The 5 Fatal AI Business Strategy Mistakes That Are Killing Your Transformation (And How to Fix Them)

Every boardroom I know is buzzing about AI right now.

CEOs are signing off on massive AI budgets. CTOs are building innovation labs. Marketing teams are racing to integrate ChatGPT into everything.

But here’s the uncomfortable truth: most of these AI initiatives are failing to deliver real ROI or getting stuck in endless pilot phases.

And it’s rarely the technology’s fault.

The real culprit? Strategic blindness. Organizational dysfunction. A fundamental misunderstanding of what AI transformation actually requires.

I’ve watched countless companies make the same AI business strategy mistakes over and over. These aren’t small missteps—they’re transformation killers that waste millions and leave teams burned out and cynical.

Let’s break down the five most dangerous AI business strategy mistakes companies make, and more importantly, how to avoid them.

Mistake #1: Launching Without a Clear Business Objective (The Strategy Gap)

This is the number one killer of AI projects.

Companies fall in love with the technology before they’ve identified the business problem it should solve.

The “Shiny Object” Syndrome

I call it AI-for-AI’s-sake syndrome.

It looks like this: A company hears about GPT-4’s capabilities, gets excited, and immediately starts building something with it. They invest months and millions into development. Then someone finally asks: “What business problem are we actually solving?”

Crickets.

The fix is brutally simple: Start with the business problem, not the technology.

Don’t say “We need to build a large language model.” Say “We need to reduce customer churn in the next quarter.”

The technology becomes the solution, not the starting point.

The KPI Desert

Even worse than having no objective? Having an objective but no way to measure if you’ve achieved it.

I’ve seen teams launch AI projects without defining a single KPI upfront. They build impressive models that do… something. But no one can say if it’s actually moving the needle on revenue, cost savings, or customer satisfaction.

Before you write a single line of code, answer these questions:

- What specific business metric will this improve?
- By how much?
- In what timeframe?
- How will we measure it?

If you can’t answer all four, you’re not ready to start.
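One lightweight way to enforce this checklist is to record the four answers as a structured spec that every initiative must fill in before work is approved. The sketch below is a hypothetical Python illustration. The `KpiTarget` class, its field names, and the helper functions are assumptions made for this example, not a standard tool.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class KpiTarget:
    """Hypothetical spec capturing the four checklist answers for one AI initiative."""
    metric: Optional[str]           # What specific business metric will this improve?
    target_change: Optional[float]  # By how much? (signed change, in the metric's own units)
    timeframe_days: Optional[int]   # In what timeframe?
    measurement: Optional[str]      # How will we measure it? (data source / method)


def missing_answers(kpi: KpiTarget) -> List[str]:
    """Return the checklist questions that are still unanswered."""
    gaps = []
    if not kpi.metric:
        gaps.append("What specific business metric will this improve?")
    if not kpi.target_change:  # None or zero means no concrete target yet
        gaps.append("By how much?")
    if not kpi.timeframe_days or kpi.timeframe_days <= 0:
        gaps.append("In what timeframe?")
    if not kpi.measurement:
        gaps.append("How will we measure it?")
    return gaps


def ready_to_start(kpi: KpiTarget) -> bool:
    """True only when all four questions have answers."""
    return not missing_answers(kpi)


# Usage: a churn initiative that has not yet set a target size.
churn = KpiTarget("monthly churn rate", None, 90, "billing-system cohort report")
print(missing_answers(churn))  # ['By how much?']
```

The value here lies less in the code than in making "not ready to start" an explicit, checkable state instead of a judgment call made after months of development.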

Mistake #2: Ignoring Data Quality and Governance (Garbage In, Garbage Out)

Here’s a truth that’ll save you millions: AI models are only as good as the data you feed them.

Yet somehow, this is still one of the most common AI business strategy mistakes.

Treating Data as an Afterthought

Companies rush to model development like it’s a race.

They skip the unglamorous work of cleaning, normalizing, and structuring their foundational data. They pull from fragmented databases. They ignore inconsistencies. They overlook missing fields.

Then they wonder why their AI produces garbage results.

Real talk: If your data is messy, your AI will be messier.

The fix? Invest in data readiness before you invest in the algorithm. It’s not sexy, but it’s essential. Clean your data. Standardize your formats. Break down your data silos. This groundwork determines whether your AI succeeds or fails.
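Here is a minimal Python sketch of a data readiness report that makes "clean your data" more concrete. It assumes records arrive as a list of dictionaries merged from several source systems. The report counts missing required fields, tallies which date formats appear (mixed formats are a common symptom of data silos), and lists duplicate IDs. The function name, field names, and date patterns are all hypothetical.

```python
import re
from collections import Counter
from typing import Dict, List

# Hypothetical date formats we expect to see across source systems.
DATE_PATTERNS = {
    "ISO (YYYY-MM-DD)": re.compile(r"^\d{4}-\d{2}-\d{2}$"),
    "US (MM/DD/YYYY)": re.compile(r"^\d{2}/\d{2}/\d{4}$"),
}


def is_missing(value) -> bool:
    """Treat None and blank strings as missing."""
    return value is None or (isinstance(value, str) and not value.strip())


def data_quality_report(records: List[Dict], required_fields: List[str],
                        date_field: str, id_field: str) -> Dict:
    total = len(records)

    # 1. Share of rows missing each required field.
    missing_rate = {
        field: (sum(is_missing(r.get(field)) for r in records) / total) if total else 0.0
        for field in required_fields
    }

    # 2. Which date formats appear; more than one usually means unstandardized sources.
    date_formats = Counter()
    for r in records:
        value = r.get(date_field)
        if is_missing(value):
            continue
        label = next((name for name, pattern in DATE_PATTERNS.items()
                      if pattern.match(str(value))), "unrecognized")
        date_formats[label] += 1

    # 3. IDs that occur more than once (e.g. the same customer from two systems).
    id_counts = Counter(r.get(id_field) for r in records if not is_missing(r.get(id_field)))
    duplicate_ids = sorted(i for i, n in id_counts.items() if n > 1)

    return {
        "rows": total,
        "missing_rate": missing_rate,
        "date_formats": dict(date_formats),
        "duplicate_ids": duplicate_ids,
    }
```

A report like this is most useful when it runs on every data refresh, so that data quality is tracked over time and not checked only once before the first model is trained.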

The Bias Blind Spot

There’s another landmine here: data bias.

If your training data contains systemic biases (and it probably does), your AI can reproduce and even amplify them. You can end up with models that discriminate, make inaccurate predictions for certain groups, or produce outcomes that damage your reputation and expose you to legal risk.

Audit your training data. Question your assumptions. Test for bias across different demographic groups and scenarios.
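A simple starting point for a bias test is to compare outcome rates across groups. The Python sketch below computes each group's approval rate and its ratio to the best-treated group. It flags any group whose ratio falls below 0.8, a threshold borrowed from the "four-fifths" rule of thumb used in US employment selection guidance. Under those assumptions this is a screening heuristic, not a complete fairness audit. The function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, ok in outcomes:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact(outcomes: Iterable[Tuple[str, bool]],
                     threshold: float = 0.8) -> Tuple[Dict[str, float], List[str]]:
    """Ratio of each group's rate to the highest rate; flag groups below threshold."""
    rates = selection_rates(outcomes)
    if not rates:
        return {}, []
    best = max(rates.values())
    ratios = {g: (r / best if best > 0 else 1.0) for g, r in rates.items()}
    flagged = sorted(g for g, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged
```

A flagged group should prompt an investigation of both the data and the model. On its own, it is not proof of discrimination.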

Your AI will reflect whatever patterns exist in your data—good and bad.

Mistake #3: Underestimating the Human Element (Change Management Failure)

Implementing AI isn’t just a technical deployment.

It’s a cultural earthquake.

And most companies completely underestimate the human challenges that come with it.

The Fear Factor

Employees hear “AI implementation” and think “job elimination.”

When companies fail to communicate how AI will augment (not replace) existing roles, resistance builds. Sometimes it’s passive: people simply don’t adopt the new tools. Sometimes it’s active: they work against the initiative.

I’ve seen AI projects sabotaged by teams who felt threatened and left in the dark.

The fix? Overcommunicate. Be transparent about what AI will and won’t do. Show people how it makes their jobs easier, not obsolete. Train them. Involve them in the process.

The AI Literacy Gap

Here’s another bottleneck: Most of your workforce doesn’t understand AI.

Your sales team doesn’t know how to interpret the model’s recommendations. Your operations managers don’t trust predictions they can’t explain. Your customer service reps don’t know when to override the AI’s suggestions.

This literacy gap creates friction at every level.

Create mandatory AI literacy programs. Not technical deep dives—practical education on how to work alongside AI tools effectively.

Siloed Expertise

Having data science teams build AI models in isolation is a recipe for failure.

Your data scientists might be brilliant, but they don’t understand the nuances of your sales process, the quirks of your manufacturing line, or the real pain points your customer service team faces daily.

When you develop AI purely in a lab without integrating domain expertise from the actual business units using it, you build solutions that look impressive but don’t work in reality.

Break down these silos. Create cross-functional teams. Put your data scientists in regular contact with the people who will actually use what they’re building.

Mistake #4: The Proof-of-Concept Trap (The Scaling Bottleneck)

Many AI initiatives die right here.

The pilot works beautifully. Leadership celebrates. Everyone’s excited about scaling it across the organization.

Then… nothing happens.

The PoC never makes it to production because companies neglect the industrialization stage—one of the most overlooked AI business strategy mistakes.

Lack of MLOps and Infrastructure Planning

That impressive model you built in the lab? It was designed to handle a small, clean dataset under controlled conditions.

Now try running it on real-world data volume. With production latency requirements. Integrating with your legacy systems that were built in 2005.

Suddenly, it falls apart.

Companies fail to plan for the cost and complexity of actually deploying AI at scale. They don’t think about continuous integration, automated testing, version control, or the infrastructure needed to keep models running smoothly.

MLOps (Machine Learning Operations) isn’t optional. It’s how you move from “this works in the lab” to “this works for our business.”
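In practice, much of MLOps comes down to automated checks that run before a model is promoted to production. The Python sketch below is a hypothetical illustration: it compares a candidate model against the current production baseline and reports any checks that fail. The metric names, the latency budget, and the idea of wiring this into a CI job are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EvalResult:
    """Offline evaluation of one model version (hypothetical metric set)."""
    accuracy: float        # on a held-out, production-like dataset
    p95_latency_ms: float  # 95th-percentile latency under production-like load


def promotion_checks(candidate: EvalResult, baseline: EvalResult,
                     max_latency_ms: float, max_accuracy_drop: float = 0.0) -> List[str]:
    """Return a list of failed checks; an empty list means the candidate may be promoted."""
    failures = []
    if candidate.accuracy < baseline.accuracy - max_accuracy_drop:
        failures.append(
            f"accuracy {candidate.accuracy:.3f} is below baseline {baseline.accuracy:.3f}")
    if candidate.p95_latency_ms > max_latency_ms:
        failures.append(
            f"p95 latency {candidate.p95_latency_ms:.0f} ms exceeds budget {max_latency_ms:.0f} ms")
    return failures


# Usage: a more accurate but too-slow candidate is blocked.
baseline = EvalResult(accuracy=0.90, p95_latency_ms=120)
candidate = EvalResult(accuracy=0.92, p95_latency_ms=300)
for problem in promotion_checks(candidate, baseline, max_latency_ms=150):
    print("BLOCKED:", problem)
```

A CI pipeline could call `promotion_checks` after evaluation and block the deployment whenever the returned list is non-empty. That enforces "works in the lab" and "works for our business" as two separate gates.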

Treating AI as a Project, Not a Product

Here’s the thing about AI models: They decay.

The world changes. Customer behavior shifts. Market conditions evolve. That model you trained on 2023 data? By mid-2024, it may already be losing accuracy.

This is called model drift, and it kills AI initiatives that were never designed for ongoing maintenance.

Too many companies treat AI like a one-time project with a beginning and an end. Build it, deploy it, move on.

But AI requires continuous monitoring, maintenance, and retraining. It’s a product that needs ongoing investment and attention.
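Continuous monitoring can start with a simple drift score. The Python sketch below computes the Population Stability Index (PSI), which compares how a feature's values are distributed across bins at training time versus in production. The caller supplies the bin edges. Empty bins are floored at a small epsilon to avoid taking the log of zero. The 0.1 and 0.25 cut-offs are a commonly cited rule of thumb, not a standard. Treat all of these choices as assumptions to tune for your own data.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float],
                    eps: float = 1e-6) -> List[float]:
    """Share of values in each bin defined by sorted edges; empty bins get eps."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    return [max(c / total, eps) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float], edges: Sequence[float]) -> float:
    """Population Stability Index: sum of (actual - expected) * ln(actual / expected) per bin."""
    e = bin_proportions(expected, edges)
    a = bin_proportions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def drift_status(score: float) -> str:
    """Commonly cited rule-of-thumb bands (an assumption; tune for your data)."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "monitor"
    return "investigate / retrain"
```

Running a check like this on a schedule for each important input feature turns "the model is decaying" from a surprise into an alert that can trigger retraining.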

If you’re not planning for the long-term care and feeding of your AI systems, you’re setting yourself up for failure.

Mistake #5: Overlooking Ethics, Compliance, and Risk (The Governance Failure)

This is the mistake that can halt your AI strategy overnight or, worse, expose you to massive legal and reputational damage.

Ignoring Regulatory Headwinds

Privacy laws like GDPR and CCPA aren’t suggestions. Industry-specific regulations like HIPAA in healthcare or compliance requirements in finance aren’t optional.

Yet companies rush into AI implementation without considering how they’ll comply with these requirements when handling sensitive data.

I’ve watched AI projects get killed after months of development because someone finally asked, “Wait, are we even allowed to use customer data this way?”

Build compliance into your AI strategy from day one, not as an afterthought.

The Black Box Problem

Using AI models you can’t explain in critical decision-making processes is playing with fire.

When your AI denies someone a loan, flags a transaction as fraudulent, or makes a hiring recommendation, can you explain why it made that decision?

If the answer is no, you have a problem.

“Black box” models without explainability create legal liability. They erode trust. They make it impossible to identify and fix errors or biases.

Establish a Responsible AI framework and governance board from the start. Define clear principles for how AI will be used ethically in your organization. Build in explainability and audit trails.
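An audit trail is easier to defend when each record captures the inputs, the model version, the decision, and the main factors behind it. The Python sketch below assumes a hypothetical linear scoring model. In such a model, each feature's contribution is simply its weight times its value, so the explanation is exact. More complex models need dedicated explanation methods instead. The weights, feature names, and model version string are invented for illustration.

```python
import json
from datetime import datetime, timezone
from typing import Dict, List, Tuple

# Hypothetical linear model: score = BIAS + sum(weight * feature value).
WEIGHTS = {"income_to_debt": 1.2, "missed_payments": -0.8, "years_employed": 0.3}
BIAS = -0.5
MODEL_VERSION = "credit-score-v3"  # hypothetical version tag


def score_with_explanation(features: Dict[str, float]) -> Tuple[float, List[Tuple[str, float]]]:
    """Score plus per-feature contributions, largest absolute effect first."""
    contributions = {name: w * features[name] for name, w in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


def audit_record(applicant_id: str, features: Dict[str, float], threshold: float = 0.0) -> str:
    """One JSON line per decision, suitable for an append-only audit log."""
    score, ranked = score_with_explanation(features)
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "applicant_id": applicant_id,
        "model_version": MODEL_VERSION,
        "inputs": features,
        "score": round(score, 4),
        # Low scores go to a human reviewer rather than an automatic denial.
        "decision": "approve" if score >= threshold else "refer_to_human",
        "top_factors": [{"feature": n, "contribution": round(c, 4)} for n, c in ranked[:2]],
    })
```

Routing low scores to a human reviewer instead of auto-denying them is one design choice that keeps a person accountable for adverse decisions. Recording the model version makes it possible to reproduce a decision long after the model has been retrained.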

Your future self will thank you when the regulators come knocking—or when a critical decision needs to be defended in court.

Turning Mistakes into Momentum

Here’s the bottom line: The difference between a costly AI experiment and a successful transformation isn’t the technology.

It’s strategy.

Avoiding these five fundamental AI business strategy mistakes is what separates companies that thrive with AI from those that waste millions chasing hype.

Successful AI starts with:

- Business goals first, technology second
- Clean, unbiased data as your foundation
- Cultural change and AI literacy across your organization
- Infrastructure and processes that support scaling
- Ethics and compliance baked in from day one

The companies getting AI right aren’t the ones with the biggest budgets or the fanciest models.

They’re the ones who approach it strategically, avoiding these common pitfalls and building sustainable, scalable AI capabilities that actually deliver business value.

Take a hard look at your current AI roadmap. How many of these mistakes are you making right now?

Fix them today, before they become transformation killers.

FAQs About AI Business Strategy Mistakes

Q: What’s the first step to building a successful AI strategy?

Start by identifying a specific business problem with measurable outcomes. The most successful AI implementations begin with clear objectives like “reduce customer service response time” or “increase sales forecast accuracy,” then select the right AI tools to achieve those goals.

Q: How can we accelerate our AI implementation while maintaining quality?

Focus most of your initial effort on data preparation and governance. Companies that invest upfront in clean, well-structured data can deploy AI models faster and more successfully than those who rush to implementation with messy data.

Q: What makes the difference between AI pilots that scale and those that don’t?

Planning for production from day one. Successful companies build their pilots with MLOps infrastructure, cross-functional teams, and long-term maintenance plans already in place, treating AI as an evolving product rather than a one-time project.

AI Business Strategy Mistakes: How to Avoid Them for Good

You don’t want to jump into AI only to get lost in costly mistakes.

You want to hit the ground running, sidestep these mistakes, and pull ahead of your competitors.

That’s what we’re here for.

At First Movers R&D AI Labs, we guide you through all the hurdles of AI adoption.

We provide customized consultation, courses, guides, blueprints…

…everything you need to avoid every AI mistake ever made.