Guest Post: Tom Pettinger, PhD Student, Examines Artificial Intelligence – Is It the Saviour of Humanity, Or Its Destroyer?

Please support my work as a reader-funded investigative journalist and commentator.

The following article is one of my few forays into topics that are not related to Guantánamo, British politics, my photos or the music of my band The Four Fathers, but I hope it’s of interest. It’s an overview of the current situation regarding artificial intelligence (AI), written by Tom Pettinger, a PhD student at the University of Warwick, researching terrorism and de-radicalisation. Tom can be contacted here.

Tom and I first started the conversation that led to him writing this article back in May, when he posted comments in response to one of my articles in the run-up to last month’s General Election. After a discussion about our fears regarding populist leaders with dangerous right-wing agendas, Tom expressed his belief that other factors also threaten the future of our current civilisation — as he put it, “AI in particular, disease, global economic meltdown far worse than ’08, war, [and] climate change.”

I replied that my wife had “just returned from visiting her 90-year old parents, who now have Alexa, and are delighted by their brainy servant, but honestly, I just imagine the AI taking over eventually and doing away with the inferior humans.”

Tom replied that it seems that AI “could pose a fairly short-term existential risk to humanity if we don’t deal with it properly,” adding that the inventor and businessman Elon Musk “is really interesting on this topic.”

I was only dimly aware of Musk, the co-founder of Tesla, the electric car manufacturer, so I looked him up, and found an interesting Vanity Fair article from March this year, Elon Musk’s Billion-Dollar Crusade to Stop the A.I. Apocalypse.

That article, by Maureen Dowd, began:

It was just a friendly little argument about the fate of humanity. Demis Hassabis, a leading creator of advanced artificial intelligence, was chatting with Elon Musk, a leading doomsayer, about the perils of artificial intelligence.

They are two of the most consequential and intriguing men in Silicon Valley who don’t live there. Hassabis, a co-founder of the mysterious London laboratory DeepMind, had come to Musk’s SpaceX rocket factory, outside Los Angeles, a few years ago. They were in the canteen, talking, as a massive rocket part traversed overhead. Musk explained that his ultimate goal at SpaceX was the most important project in the world: interplanetary colonization.

Hassabis replied that, in fact, he was working on the most important project in the world: developing artificial super-intelligence. Musk countered that this was one reason we needed to colonize Mars—so that we’ll have a bolt-hole if AI goes rogue and turns on humanity.

Tom found his own particularly relevant quote, about Mark Zuckerberg, Facebook’s founder, who “compared AI jitters to early fears about airplanes, noting, ‘We didn’t rush to put rules in place about how airplanes should work before we figured out how they’d fly in the first place.’” As Tom explained, AI enthusiasts like Zuckerberg “don’t recognize that at the point it’s too late, we can’t do anything about it because they’re self-learning, and it’s totally driven by the private (i.e. profit-inspired) sector, which has no motivation to consider future regulation, morality or even our existence.”

He added, “I think Musk’s one of the smartest guys on the planet: he wants to tackle climate change, so he starts Tesla and SolarCity; he wants to ensure humans have an ‘out’ against Earth catastrophes, so he develops SpaceX; he wants to ensure the best chance of a good future regarding AI, so he develops OpenAI and Neuralink. His thoughts (and many other experts/ thinkers) on AI come down to: we either advance like never before as a species, or likely become extinct, with no middle ground. And the consensus within the AI field seems to suggest within 30-70 years this change will come about.”

Tom then sent two links (here and here) to two summaries of the debate about AI, which he described as “hugely informative”, adding, “The first link is a basic introduction to the road from narrow AI (what we have today) to general AI (human-level intelligence) and superintelligence (super-human AI). The second one is aimed more at those interested in social science, exploring the potential consequences.” He also stated, “both are definitely worth a read. If you wanted a summary of them though I’d be more than willing to oblige. I love writing, and this subject!”

I replied asking Tom to go ahead with a summary, and his great analysis of the pros and cons of AI is posted below. I hope you find it informative, and will share it if you find it useful.

Artificial Intelligence: Humanity’s End?

By Tom Pettinger, July 2017

“Existential risk requires a proactive approach. The reactive approach — to observe what happens, limit damages, and then implement improved mechanisms to reduce the probability of a repeat occurrence — does not work when there is no opportunity to learn from failure.” AI expert Nick Bostrom

Does artificial intelligence spell the end for all of us? This post looks at how AI is developing and the potential consequences for humanity of this impending technological explosion. Depending on where the technology takes us, our species could experience the greatest advancement in its history, suffer the worst inequality ever seen, or even be pushed to the point of extinction. I argue that within this century we’ll likely be seeing human-level and super-intelligent AI, and that we should be considering the consequences now rather than waiting until they arrive. Just to make it clear from the start, when we’re talking about AI, think algorithms rather than robots. (The ‘robots’ merely perform the physical functions that the algorithms behind them tell them to.) So think less Terminator, and more Transcendence. However it turns out, one thing’s for certain: it’s not long before our very existence is transformed forever.

Phase 1: Artificial Narrow Intelligence (ANI) – specific-functional intelligence

Artificial Narrow Intelligence is already all around us. ANI is essentially algorithms that serve a specific and pre-programmed purpose, allowing humans to function more effectively and enjoyably, supporting our development. It’s in our smartphones, self-driving cars and computer games: all of these are examples of single-purpose, ‘narrow’ intelligence. The algorithms cannot change their roles, which are strictly defined by their programmers, and they have no ability to decide what their tasks are outside of human control. We’ve become ever more dependent upon ANI, to the point where it’s hard to think of an institution or sector that is not driven by it; financial institutions, education, public transport, energy, and trade are all run by computing intelligence and would collapse if we removed it. ANI is getting smarter all the time, self-learning within its specified roles; AlphaGo, the program that famously beat Go professional Lee Sedol, developed its tactics by playing against itself, millions of times. However, it cannot perform other tasks, like setting the temperature, displaying a website, or changing traffic lights; this program will remain narrow intelligence, confined to playing Go.

Phase 2: Artificial General Intelligence (AGI) – human-level intelligence

Artificial General Intelligence, however, is the point where the algorithms have reached human-level intelligence and can essentially pass the Turing Test, where you can’t tell if you’re speaking with a human or a machine (other definitions of AGI are explored here). AGI is ultimately the ability to practise abstract thinking, reasoning and the art of self-tasking. So where AlphaGo (ANI) can improve its Go playing, AGI would be able to master any game it decides to, as well as look at ways of reducing traffic, analyse stock markets and write up reports on war crimes, all at the same time. The main difference between human and algorithmic ability to ‘compute’ information in this phase would largely be speed. AGI-level algorithms would have intuition, analysis and problem-solving capabilities similar to our own. But smartphones can already perform instructions hundreds of millions of times faster than the best computers that first took humans to the moon, and in the future this speed advantage will only increase.

Robots (essentially shells containing clever algorithms) could also cook dinner, engage in conversation or debate with you, and take your kids to school. Moves towards AGI are underway; computer programs are now learning a range of video games based on their own observations and practice, as a human learns, rather than just being focussed on becoming proficient at one single game. Robots are beginning to hold basic conversations and are interacting with dementia patients. However, we are still a long way off AGI; self-learning a multitude of games or engaging in primitive interactions is nowhere near passing the Turing Test. Having said this, Moore’s Law, the observation that the number of transistors on a chip (and, loosely, computing power) doubles roughly every two years, has broadly held for the last 50 years, and so although some slowdown will probably occur, the shift from ANI to AGI looks set to occur within 25 years. The median date predicted by experts for AGI has been around 2040 in several different studies. Reaching this level is a milestone as it will pave the way for the next phase in our journey towards superintelligence.
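To give a feel for what that doubling implies, here is a minimal sketch in Python. It assumes an idealised, perfectly steady doubling every two years, which real hardware has only approximated, and the function name growth_factor is made up for this illustration.

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Return the multiplicative growth after `years` of steady doubling.

    Assumption: growth is a clean doubling every `doubling_period_years`,
    an idealisation of Moore's Law rather than a measured figure.
    """
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    # 50 years at one doubling every two years is 25 doublings, i.e. 2**25.
    print(f"Growth over 50 years: {growth_factor(50):,.0f}x")
    # A further 25 years at the same (assumed) pace is 12.5 more doublings.
    print(f"Growth over a further 25 years: {growth_factor(25):,.0f}x")
```

Twenty-five doublings is a factor of 2^25, or about 33.5 million, which is why even a substantial slowdown still leaves room for very large gains over the next couple of decades.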

Phase 3: Artificial Super Intelligence (ASI) – super-human intelligence

Reaching Artificial Super Intelligence is the point at which artificial intelligence will have become superior to the intelligence of humanity. Here, the ‘Singularity’ is said to have occurred; this term denotes a period where civilization will experience disruption on an unprecedented scale, based on the AI advancements. Essentially everybody in the field accepts that we’re on the brink of this new existence, where AI severely and permanently alters what we currently know and the way in which we live.

In terms of how intelligent ASI could become, it can be useful to think of different animal species. From an ant, to a chimpanzee, to a human, there are distinctive differences in terms of comprehension. An ant can’t comprehend the social life of a chimpanzee or its ability to learn sign language, for example. And a chimpanzee couldn’t understand how humans fly planes or build bridges. As ASI develops, it will begin to surpass our own limits of comprehension, like the difference between ants and chimps, or chimps and us. The principal difference between AGI and ASI is the quality of intelligence. ASI will be more knowledgeable, more creative, more socially competent, and more intuitive than all of humanity. As time progresses and this superior intellect improves itself, the disparity between ASI and human knowledge will only increase. On the ant-chimpanzee-human scale, ASI may be able to progress to hundreds of steps of comprehension above us, in a relatively short amount of time. We can’t really conceive how ASI would think, because its level of understanding would be greater than ours, just as chimps can’t get on our level and comprehend how we fly planes.

There is only very limited debate about whether or not reaching ASI will happen; the debate focusses more on when it will happen. Although some, like Nick Bostrom, warn that this ‘intelligence explosion’ from AGI to ASI will likely “swoop past”, most predict that the transition from AGI to ASI will take place within 30 years. We can think of the scale of change that this transition would bring by looking back over human history. There have been several marked steps in our existence as a species:

From 100,000 BC early humans without language to 12,000 BC hunter-gatherer society

From 12,000 BC hunter-gatherer society to 1750 AD pre-Industrial Revolution civilization

From 1750 pre-Industrial Revolution civilization to 2017, with advanced technology (electricity, Internet, planes, satellites, global communication, Large Hadron Collider)

It is often said that the shock we would get if we travelled to the 2030s or 2040s might be as great as the shock a person would get if they travelled between any of these steps. If you travelled from 1750 to today, would you really believe what you were seeing? Within this century, most experts predict the arrival of artificial superintelligence, which could result in the same unimaginable shock as if a human from 100,000 BC travelled to the advanced civilization of the present day. Also notice that the length of each step shrinks dramatically: the first step lasted about 88,000 years; the second about 14,000; and the third just over 250, all with similar levels of change. It should not be inconceivable that, in another 50-80 years, we experience another one of these shifts. As much technological progress as was seen in the entire 20th century is said to have been accomplished between 2000 and 2014, and an equal amount of progress is expected between 2014 and 2021. Ray Kurzweil, whom Bill Gates called “the best person I know at predicting the future of artificial intelligence”, suggests last century’s advancements will soon be occurring within one year, and shortly afterwards within months and even days.
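The step lengths can be checked directly from the dates in the list above. The short Python sketch below is only an illustration: the labels and the names STEPS and step_durations are invented for it, and BC years are simply written as negative numbers.

```python
# Step boundaries taken from the list above; BC years are negative numbers.
# Simplification: this ignores the absence of a "year 0", which shifts the
# middle span by a single year and makes no difference at this scale.
STEPS = [
    ("early humans -> hunter-gatherers", -100_000, -12_000),
    ("hunter-gatherers -> pre-industrial", -12_000, 1750),
    ("pre-industrial -> 2017", 1750, 2017),
]


def step_durations(steps):
    """Return the length in years of each (label, start, end) step."""
    return [end - start for _, start, end in steps]


durations = step_durations(STEPS)
for (label, _, _), years in zip(STEPS, durations):
    print(f"{label}: {years:,} years")

# How many times shorter each step is than the one before it.
shrink_ratios = [earlier / later for earlier, later in zip(durations, durations[1:])]
print([round(ratio, 1) for ratio in shrink_ratios])
```

On these dates the steps last 88,000, 13,750 and 267 years, so the second is roughly 6 times shorter than the first and the third roughly 51 times shorter than the second.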

The Great Debate

Whether the change is for good or bad is debated by the likes of Mark Zuckerberg, Larry Page and Ray Kurzweil, who largely see ASI as beneficial, and Bill Gates, Stephen Hawking and Elon Musk, who see ASI as posing a potentially existential risk. Those who see only good outcomes from ASI denounce pessimists’ views as unrealistic scaremongering, whilst those who consider the future dangerous argue that the optimists aren’t considering all the outcomes properly.

The Optimist’s View

These optimists assert that ASI will vastly improve our lives and suggest we will likely embrace transhumanism, where ‘smart’ technology is implanted into our bodies and we gradually see it as natural. This transition has already begun, largely for medical reasons — implants that trick your brain into changing pain signals into vibrations, cochlear implants to help deaf people hear, and bionic limbs. This will become more commonplace; Kurzweil thinks we will eventually become entirely artificial, with our brains able to connect directly to the cloud by the 2030s. Some think that we will be able to utilize AI to become essentially immortal, or at least significantly slow the effects of ageing.

Any task that may seem impossible for humans — like developing better systems of governance, combating climate change, curing cancer, eradicating hunger, colonizing other galaxies — optimists say will seem simple to ASI, just as building a house must seem unimaginably complex for a chimpanzee but perfectly normal to humans. Though he’s a pessimist, Bostrom says, “It is hard to think of any problem that a superintelligence could not either solve or at least help us solve.”

One concern has been about the mass loss of jobs, as AI becomes ever-more prevalent. Tasks such as driving, administration and even doctoring, which have always been done by humans, are now vulnerable to automation. It is said that 35% of British jobs, 47% of American jobs, and 49% of Japanese jobs — the list goes on — are at high risk from automation over the next couple of decades. However, the optimists counter that jobs are merely displaced rather than being abolished with technological advancement; automation in one area just means workers retrain and move into other jobs. Bringing tractors on to farms or machinery into factories did not cause mass unemployment; those workers moved into working with the technology or found other jobs elsewhere.

The optimists contend that fears about AI (and in particular ASI) ‘taking over’ are misplaced, because, in their view, we have always adapted to the introduction of new technologies. The lack of pre-emptive regulation around AI is often cited as problematic, but Zuckerberg compared these fears to concerns about the first airplanes, noting that “we didn’t rush to put rules in place about how airplanes should work before we figured out how they’d fly in the first place.” Kurzweil says, “We have always used machines to extend our own reach.”

The Pessimist’s View

The pessimists’ fears are represented by Bostrom’s comment that we are “small children playing with a bomb”; they see the potential threats to human existence as outweighing the benefits of AI. They suggest that optimists ignore the fact that ASI won’t be like previous technological advances (because it will be cognitive and self-learning) and will not act empathetically or philanthropically, or know right from wrong, without serious research and consideration. As AI research is largely driven by the private (i.e. profit-inspired) sector, there is minimal motivation to consider future regulation, morality or even our existence. Estimates of 10-20% existential risk this century are frequently given by those in the AI field. Once AI passes the intelligence level of humanity, it is argued that we will have little to no control over its activity; those like Gates and Hawking emphasize that there’s no way to know the consequences of making something so intelligent and self-tasking, and that it pays to think extremely cautiously about the future of AI.

The general rule of life on Earth has been that where a higher-intellect species is present, the others become subjugated to its will. Elon Musk is so concerned with the possibility that “a small group of people monopolize AI power, or the AI goes rogue” that he created Neuralink, a company dedicated to bringing AI to as many people as possible through connecting their brains to the cloud. He thinks ASI should not be built, but recognizes that it will, and so wants to ensure powerful AI is democratized, preventing the subjugation of the majority of humans to AI or a human elite. If the technology is not widely distributed among humanity, pessimists claim the likelihood is that we will become subjected to ASI, similar to the way chimpanzees are sometimes subject to human will (keeping them in zoos, experimenting on them, etc.).

There is, without doubt, potential for devastating unintended consequences as a result of ASI. Superintelligent machines could develop their own methods of attaining their goals in a way that’s detrimental to humanity. For example, a machine’s primary role could be to build houses, but it might decide that the best way to do so would be to tear down every other house and use those bricks. Or, should such machines become intelligent enough to develop their own goals, they could decide to prevent global warming, determine that humanity stands in the way of protecting the environment, and remove us from the equation. Pessimists argue that the idea that we’ll be able to control ASI, or shut it down when it doesn’t function the way we want, is misguided. Just as chimps aren’t able to determine their existence outside of human subjection, we will be under the dominion of ASI as we grow more and more dependent upon it.

The pessimist camp expects social upheaval and inequality in the relatively short term, over the next 10-20 years, and argues that, as machines become better at a wider range of tasks, workers will no longer be able simply to move into other jobs. Several commentators (including then-President Obama) have spoken about the potential need for a universal basic income as mass unemployment becomes increasingly likely. Lower-skilled and lower-paid workers are far more concerned about AI technology than the elites, because jobs that require less training and education will be among the first to be automated en masse. Obama said, “We’re going to have to have a societal conversation about that.” Currently, however, most governments don’t see it as an impending issue, despite the possibility of a high number of jobs becoming automated in the next two decades.

Conclusion

Hopefully this introduction to AI has made clear where the technology is currently and could be in the near future, and highlighted some of the possible consequences of achieving ASI. As a society, we should begin thinking about what we want from our future. The advent of superintelligence (which, by essentially all accounts, is going to take place at some point this century) is not an issue to overlook, as it will have enormous social consequences and could result in our total extinction. As superintelligence will probably be the last invention humanity ever needs to make, we should ensure that the development of this technology is matched by similar, if not more advanced, progress in regulation and philosophy.

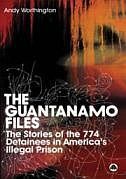

Andy Worthington is a freelance investigative journalist, activist, author, photographer, film-maker and singer-songwriter (the lead singer and main songwriter for the London-based band The Four Fathers, whose music is available via Bandcamp). He is the co-founder of the Close Guantánamo campaign (and the Countdown to Close Guantánamo initiative, launched in January 2016), the co-director of We Stand With Shaker, which called for the release from Guantánamo of Shaker Aamer, the last British resident in the prison (finally freed on October 30, 2015), and the author of The Guantánamo Files: The Stories of the 774 Detainees in America’s Illegal Prison (published by Pluto Press, distributed by the University of Chicago Press in the US, and available from Amazon, including a Kindle edition; click on the following for the US and the UK) and of two other books: Stonehenge: Celebration and Subversion and The Battle of the Beanfield. He is also the co-director (with Polly Nash) of the documentary film, “Outside the Law: Stories from Guantánamo” (available on DVD here, or here for the US).

To receive new articles in your inbox, please subscribe to Andy’s RSS feed — and he can also be found on Facebook (and here), Twitter, Flickr and YouTube. Also see the six-part definitive Guantánamo prisoner list, and The Complete Guantánamo Files, an ongoing, 70-part, million-word series drawing on files released by WikiLeaks in April 2011. Also see the definitive Guantánamo habeas list, the full military commissions list, and the chronological list of all Andy’s articles.

Please also consider joining the Close Guantánamo campaign, and, if you appreciate Andy’s work, feel free to make a donation.
