Rachel E. Pollock's Blog: La Bricoleuse aggregate and more..., page 4

June 2, 2025

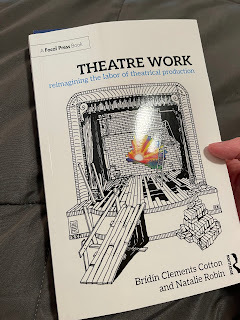

Book Review: Theatre Work

In the months prior to its release, I'd been looking forward to this book, Theatre Work: Reimagining the Labor of Theatrical Production by Bridin Clements Cotton and Natalie Robin.

I first wrote a placeholder for this book review when the title came out back in the summer of 2024. I avidly devoured it within a month of its publication and filled my placeholder draft with notes for a subsequent review.

But life intervened before I sat down to turn my notes into a review, and now enough time and seismic change have gone by that portions of the book may feel outdated, not because these issues no longer exist but because (at least in the US) arts funding cuts loom large, threatening many theatres’ very existence. Many of us are preoccupied now with all-consuming existential threats. After all, if the theater that employed you no longer exists, there’s not much point fighting for a more equitable contract.

It is nevertheless recommended reading, particularly for those with an interest in wage parity, workplace equity, and striving for a more humane production environment for theater practitioners—statistical and anecdotal data could be valuable in bolstering those causes.

The book looks at all areas of theatrical production, but because (statistically speaking) costume professionals hold complex intersectional demographic identities, we are highly represented in cases cited.

Williamstown Theatre Festival is a major focus in terms of case-study specifics. The 2021 scandals over unsafe working conditions and the abuse of employees and interns that made WTF a case study are now far enough in the past, given the churn of the news cycle, that those who followed them at the time may have forgotten the particulars, and the details may be completely shocking and new to some readers.

Although it could have been written in the style of a screed or a tell-all, the book’s tone is that of a serious academic study (because it is). If you’re looking for scandal, it’s there in the deplorable treatment workers report experiencing from many different theatres, but you will need to parse and analyze the text to find it.

Highly recommended. You may find as I did that the research in the book corroborates something you already suspected about the field of theater production, but couldn’t quite articulate or prove.

June 1, 2025

AI assignment ideas for costume educators

Many participants in those AI sessions were educators at institutions that have adopted and encouraged the use of language and image generative AI technologies such as Microsoft Copilot, DALL-E, Midjourney, ChatGPT, Google Gemini, etc.

Elphaba rendering by ChatGPT

There are many valid, ethical concerns about the use of these prompt-driven generative AI models—sustainability and ecological impact, inherent bigotry/bias, exploitative labor practices, violations of copyright and content use consent…the list goes on—and this post is not to enumerate or discuss those, although they would be excellent discussion topics to bring up with students in class.

Some educators reported that many of their students had no interest in AI technology and were vocally opposed to it, while others described curiosity and enthusiasm particularly from students who were drawn to other new technologies and/or graphics-heavy video games.

The importance of staying abreast of current litigation regarding the use of AI in creative fields was raised. Reports from costume design union members stressed that at present, AI-generated designs are not copyrightable, and the use of the technology is prohibited by many employers seeking intellectual property rights over designs created for their contracts.

This is an aggregate list of potential assignments and activities to incorporate generative AI into costume-adjacent classes, aimed at educators who feel pressure to do so by AI-embracing employers.

Costume design lesson & project assignment ideas

Corinne Larson of Capilano University described an assignment she did with her students where she used DALL-E to create a rococo dress design then had the students correct the silhouette as the assignment. An interesting way to incorporate the technology and also address its shortcomings.

Nannette Marie (from Facebook): “I am thinking of an assignment where they can use AI for "inspiration" and then jump off from there to make it their own. Or one using their "key words" for a mood board, sort of just for fun. Thinking of AI as a possible tool to create and not the one doing the creating.”

ATCA colleague (attribution lost): upload a scan of a sketch the student had done and direct the AI to generate something in the style of that sketch, so it would generate images informed by the student’s own artistic style

Heidi Jo Schiemer of Elon University offered thoughts on her department’s experience using an AI model to create graphics for projections

Ann Toewe at the University of Northern Colorado appreciates using the technology to assist with writing lesson plans & brainstorming discussion prompts. She described deepening the focus of a planned class discussion further than her initial idea by engaging in brainstorming conversation with the AI chatbot

Rebecca Armstrong of Bates College proposed calling for sustainability offsets from university administration, particularly for schools that have made a public commitment to reduced or net-zero emission standards, & expressed a desire for the development of an ecological impact widget/plug-in. Possible opportunity for interdepartmental collaboration?

Soule Golden of Barnard College showed examples of design iterations she had done using some of the many available AI clothing generators

Quinn Burgess of Boston College had a fun suggestion for an in-class demo based on the concept of a round-robin telephone game—input a word or words as a prompt to create an image, then ask the AI to describe the image, then ask the AI to create an image of the description, and so on

Julie Learson of Fairfield University suggested having students watch Bernadette Banner’s video about erroneous AI depictions of period styles, then discuss the video in class and perhaps evaluate together more AI-generated examples, either that the students create or the class creates collectively during the discussion.

I speculated about using the same prompt with different AI models, a theory I tested in this post

I have tried to give attribution credit to the scholars who proposed these assignment ideas. If you recognize one you suggested and I have not credited you or miscredited it, please leave a comment. I welcome your corrections.

Please also comment if you have other assignment ideas or experience to offer!

Some of these assignment ideas also came out of a discussion thread on the Facebook group, Costume Educators Forum. Thank you to all who participated in each of these discussions.

May 31, 2025

AI Elphaba design renderings from different models

In the ATCA brainstorming session about potential ways to use generative AI in costume class lessons and assignments (post forthcoming with a list of these), the idea was proposed to run the same prompt on different AI models.

For comparison, I used the exact same prompt language from my first Copilot Elphaba with Google Gemini and ChatGPT. Here are the results.

Google Gemini: 5 seconds to generate

ChatGPT: 1.5 hours to generate. It let me know that the reason the time to generate was so long was because of the number of people using the image generation tool at the time I submitted my prompt.

Microsoft Copilot: 15 seconds to generate

These outcomes are so fascinatingly different. I’m not sure how to interpret the results because the pathways these models take from input to output are so obscure. I assume it has a lot to do with the datasets on which they’ve been trained, and probably the prioritization among those datasets.

It gives me pause that the platform my university has partnered with (Microsoft Copilot) is the one that provides the most objectified and cartoony output. Not passing judgment on that style, but if I’m using it in a fine art context, I’d prefer the output to be informed by a broader range of artistic styles, and a more precise execution when a specific style is mentioned in the prompt.

As an assignment, this would definitely offer interesting avenues of discussion and analysis.

And on a personal aesthetic level, of these the one I like best is Google Gemini, so I decided to work with it a bit more. I revised my prompt to ask it to generate the full figure head to toe and here’s what it gave me:

This one took a bit more time at 13 seconds to render but nothing so long as the ChatGPT result, and I liked it enough to prompt it to render a design for Galinda in the same style and here’s what I got:

Another 13-second render

Because Gemini quickly generated 3 different images, I asked it about image limits and here’s what it said:

Yes, there are limits to the number of images Gemini can generate, and these limits vary depending on whether you're using the free version or a paid Google AI plan (like Google AI Pro or Ultra).

Here's a general overview:

Free Users: Typically have a more restricted daily limit, often around 10-20 image generations per day. There have been reports of users hitting limits around 50 images in a single chat on the free version.

Google AI Pro/Ultra Users: Enjoy significantly higher limits, with some reports suggesting up to 100-150 daily image generations. They also get higher resolution and more detailed outputs, faster processing, and priority access during peak times.

Other factors that can influence your image generation capacity include:

Server load and current system demand: During high usage periods, you might experience temporary slowdowns or limits.

Complexity of your prompts: More complex image generation requests can sometimes consume more resources.

Account history and usage patterns.

Ongoing platform optimization efforts.

If you reach a limit, Gemini will usually notify you and tell you when your capacity will refresh, which is typically after a 24-hour period.

It's also worth noting that some models within Gemini might have specific limitations, and the rules can evolve as Google continues to develop and optimize its AI services.

I don’t teach an intro to costume design class, so this is probably the end of my experimentation with these AI models. I appreciate the opportunity to discuss this topic with my colleagues from schools all over the US and Canada.

One more post on this topic is forthcoming, enumerating the assignment ideas, and applicability recommendations for those who teach at a university where the administration is strongly encouraging incorporation of the use of AI models in teaching.

May 29, 2025

AI Elphaba, continued

The first of several image iterations generated by my friend’s PC setup

I thought I was done with my (underwhelming) exploration into an Elphaba costume design using generative AI, but my blog crossposts to Facebook and I got into a fascinating discussion with an illustrator/cartoonist friend about running generative AI on a home computer over which some control can be exerted.

He began tinkering with setting up his own PC to run the open-source machine-learning model Stable Diffusion, with the aim to eventually load his own art onto it, so it could iterate in his own style with his own characters (a goal he has yet to achieve). He had a PC for gaming with a good video card and a lot of hard drive space that he repurposed for this experiment.

He loaded Stable Diffusion and the freeware user interface ComfyUI onto it. This allows him to exert some control over the path the AI takes from input to output. He began playing around with the first of the Elphaba images that Copilot created for me.

A refresher on what Copilot generated for me

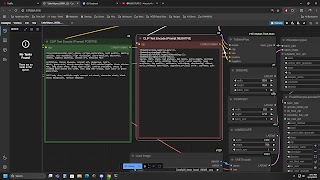

How ComfyUI looks when you’re using it

His second iteration: each of these took under 30 seconds to generate

These were a lot closer to what I envisioned, and he decided to run with the design concept and see if he could tweak it to generate several more iterations closer to what I had hoped my second Copilot iteration would be.

He also spent a fair amount of time patiently explaining to me what he had done to set up his own machine and answering my questions about how the software works, how the user interface works, and what kind of power draw it produces.

Another iteration

Another iteration

Another iteration

Apparently, his machine uses no significantly greater amount of power than a graphics-heavy video game does.

As I understand it, what makes generative AI tools like ChatGPT and Copilot use so much power is a combination of the fact that they run off conversational prompt inputs, and “learn” from every iteration generated. The machine my friend has set up, with the intent of hopefully someday generating iterations of his own artistic style, doesn’t “learn” from each generated iteration, and it runs on ComfyUI workflows as opposed to conversational prompts.

My friend said he had learned about how to set this machine up through a combination of YouTube videos (here’s an introduction to Stable Diffusion, for example) and subreddits devoted to DIY AI art. He stressed the questionable legality of some of the stuff he’d come across in various forums, and also that much of the pioneering work in this area has been done by people with a prurient interest in generating cartoon pornography. So, if you are interested in exploring further, do not do so unaware of those caveats.

Having learned even the most minimal overview of how AI image generation works when a UI workflow is involved, I find the whole concept of the process more exciting than all the hype around tools like ChatGPT, which are framed as magical artificial intelligence models far beyond our puny human comprehension.

I have a knee-jerk negative response to that framing, whereas this feels intriguing and filled with potential. I probably won’t dive deeper into it, because I don’t have a professional need or a personal interest in generating AI art, but if I did, this would be the path I would follow.

May 28, 2025

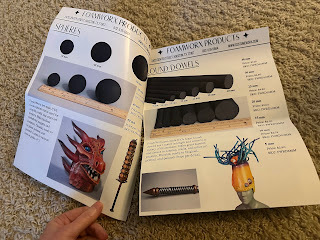

Costumeworx sample box!

Houston TX-based Costumeworx carries readymade costumes, makeup, molding/casting supplies and more retail products, as well as offering custom laser cutting and fabrication services.

I am thrilled to add this vendor to my list of suppliers, particularly for mascot costume making, puppet building, and EVA foam armor construction! I’m especially pleased to have connected with this company in advance of my spring course in maskmaking, armor, and mascot construction.

Check out what my sample box contained:

Cones, spheres, & rods in a range of diameters, plus foam clay

Grey medium-density EVA foam sheets in a range of thicknesses

Black low- & high-density EVA foam sheets in a similar range of thicknesses

Printed product sheets

May 27, 2025

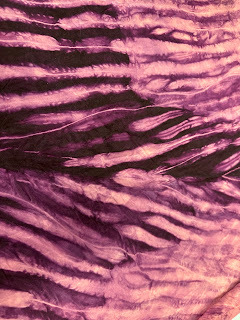

Semester of Shibori

Not only was spring of 2025 a semester in which I taught my graduate-level dyeing and surface design class, but there was also a large-scale shibori project involved in PlayMakers Repertory’s final show of the season, Little Shop of Horrors.

In this post, I will share some images of the various techniques my students and I used to create a wide range of surface design effects that rely on occlusive dyeing.

This image is a collage of four samples I created to show costume designer Grier Coleman for potential use in a finale costume.

Techniques clockwise from top left:

Accordion-folded and cinched with zip ties

Stitched and gathered

Diagonally pole-wrapped and bound with twine

Bull’s-eye tie-dye method

The orange samples were underdyed in yellow first, direct dye on cotton, and the purple samples were underdyed in pastel mauve first, acid dye on silk.

Because these were done for a reveal costume in the finale, I don’t have stage shots of the full look (Because spoilers! The show is closed now, but the point is no photos were taken during photo call because it would spoil the surprise at the end), but if you know the show, these are for Audrey II’s giant flower when the plant blooms at the end.

This one was actually used, pole-wrapped acid dye on China silk


This one was also used, stitched and gathered, direct dye on lightweight cotton.

STUDENT WORK

This is a project by one of my students, undergraduate Lene Wojcik. Accordion folded and stitched, direct dye on an existing cotton shirt

Itajime shibori technique by first-year grad student Bailey Doran

She found a study done by scholars at the University of Nebraska in which they used dimensional blocks and a folding and clamping technique to create a complex repeat

Bailey designed, laser cut, and constructed her own press board design

Here are the boards Bailey made at left, the clamps in the foreground which she also made, and the dyed yardage at the rear.

Bailey used acid dye on silk fabric. She first underdyed the yardage pink, using Rit dye with white vinegar in our dye studio washing machine. Then she did the Itajime folding/clamping before overdyeing with green.

The class was five students in all, and the other three also created shibori projects, although I apparently neglected to get decent photos of them. It happens; it was back in March at this point. Regardless, what a shibori-filled semester!

May 26, 2025

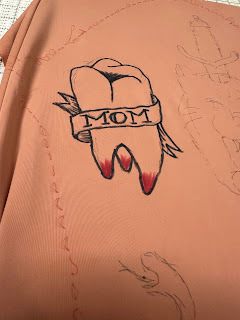

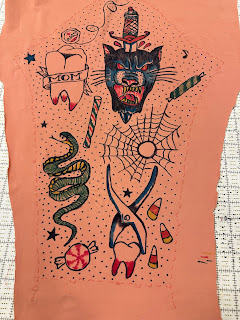

Tattoo shirt for “Orin the Dentist”

The season finale of PlayMakers Repertory Company’s 2024/2025 season was the popular and technically challenging musical, Little Shop of Horrors. The costume designer for this production, Grier Coleman, envisioned the character of Orin, canonically a sadistic dentist, as a would-be hard-core punk rocker with arms covered in tattoos.

Robert Arica as Seymour, Jim Bray as Orin

The performer playing that role, Jim Bray, was also cast as four other characters, so the tattoos could not be done with makeup. The solution was to create literal tattoo sleeves, sewn into his shirt. This tattoo surface design effect is typically created on nylon spandex or Milliskin mesh dyed to match the performer’s skin tone. We went with the mesh for actor comfort and breathability.

Because I have done this type of tattoo shirt stage effect before, I did not have to do the kind of surface design product testing I might have otherwise had to do, and could begin hand-drawing the art as soon as graduate student and lead crafts supervisor Jillian Gregory dyed the fabric, patterned the sleeves, and thread marked them for me.

For the curious, products used were a combination of Sharpie markers in various colors and Marvy fabric markers.

Because costume designer Grier Coleman was working on two other shows and is based out of New York City, she was not present in Chapel Hill when the tattoo sleeve decision was made. She emailed me the artwork for the tattoos that she would like incorporated into the sleeves and gave me placement directions as to what went where.

Here are some process photos and detail shots:

The first tattoo design

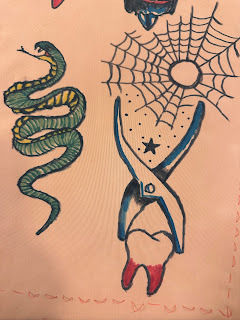

Jillian had threadmarked the elbow for the placement of this spiderweb

Here’s the whole sleeve, ready to be heat set, assembled, and stitched into the shirt.

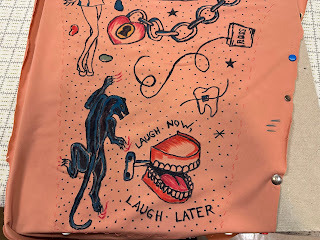

In Traditional American tattoo style, a full sleeve is often composed of a patchwork of smaller tattoos with even smaller filler among them such as the stars, dots, & other motifs. I suggested we add pieces of candy in that filler art, and Grier loved the idea. Here’s the second sleeve:

A couple of other fun facts about the tattoo imagery:

The tooth with a braces attachment/wire is an actual tattoo on the arm of PRC company member Zoe Lord.

Dental floss is incorporated into both sleeves of tattoo designs, because punks often use dental floss to sew patches onto their clothing.

The show had so many fun projects, but this one might be my favorite.

May 25, 2025

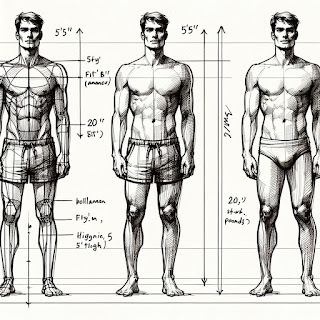

AI croquis: genial dad

In my ongoing experimentation with using my university's Microsoft Copilot AI assistant in costume design applications, my first attempt to create a design rendering for the character of Elphaba in Wicked was initially underwhelming and then maddeningly unacceptable.

I decided that--especially in light of the datasets on which the model has been trained--jumping right into a fully developed costume design rendering was perhaps putting the cart before the horse, as it were.

Instead, how about using it to create a croquis, a physical body sketch, that could be printed out or imported into a digital drawing app for adding the garments? Figure drawing is often the most challenging aspect of costume design rendering. I took a semester of life drawing with live models in the art department and still struggle with it.

Because my Elphaba results came out so objectified and idealized (like most media depictions of women, which probably figures significantly in the training data), I decided to try a masculine croquis render. I also thought perhaps specifying physical qualities like height/weight might yield a result that didn't look like a stylized superhero.

Here's my first prompt:

create a fashion-design-style croquis body sketch

in a pen-and-ink style for a 5'5" tall man

weighing 200 pounds

Um, not what I intended.

I was hoping that prompt would yield a regular-guy croquis, and instead the result is apparently a fitness model. Which, again, I'm sure the training dataset does include a lot of fitness models, and underwear models, and actual superheroes.

Again I'm surprised but not. Again I see in retrospect how I erred.

At least this feels like a promising place to start from, given my desired outcome. So I wrote a revision prompt:

revise to have a flabby dad bod.

he should be zaftig and his pose more playful

But Copilot had an issue with my language:

Note to self: Look up this Designer code of conduct. [1]

Don't get me wrong, I'm glad that there are apparently language restrictions on prompt phraseology to (hopefully) keep users from creating hateful, mocking imagery, but I considered what language it flagged as problematic. I decided to resubmit the same prompt without the word "flabby."

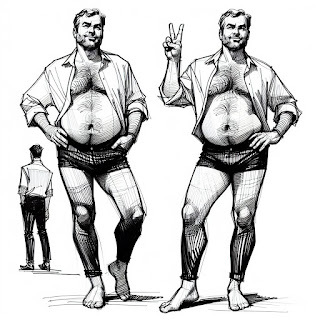

And it produced a charming revision:

I feel like I (or let's be real, a Costume Design Intro student) could start with this croquis and design a costume for a character evoked by this pose.

I still think there are overwhelming reasons to avoid using generative AI--sustainability, bias, exploitative labor practices, etc.--and there are easier ways to get a croquis (like the MyBodyModel app), but if one's university is requiring it or mandating you find ways to incorporate it, this may be a path to explore?

I tell my students that if they're using a technology just for the sake of using it (for example, 3D printing a belt buckle design that would be more quickly and durably sculpted in Apoxie or polymer clay), that's a valid choice but it's not innovative. And this feels the same--it's a way to get a croquis but it's not an improved way.

[1] The Copilot Designer Code of Conduct is part of Microsoft's broader AI Services Code of Conduct and content policies. Here are the key principles that guide how Copilot Designer operates and what it allows:

✅ What It Encourages

Responsible AI Use: Designs must align with ethical AI practices, including transparency, fairness, and safety.

Respect for Individuals: Content should not misrepresent people or create misleading or harmful depictions.

Creative Freedom Within Limits: Users are encouraged to explore artistic and design ideas, as long as they stay within respectful and safe boundaries.

🚫 What It Restricts

Harmful or Objectionable Content: This includes violence, nudity, hate speech, or anything that could be considered offensive or inappropriate.

Misleading or Deceptive Imagery: AI-generated images must not be used to impersonate real people or mislead viewers into thinking they are real.

Sensitive or Stereotypical Depictions: Certain prompts may be blocked if they risk reinforcing stereotypes or include sensitive terms (e.g., "lumberjack" or "sword" have triggered blocks in some cases).

🛡️ Usage Requirements

Implement safeguards to prevent misuse.

Clearly disclose when content is AI-generated.

Ensure human oversight and feedback mechanisms are in place.

You can read the full Microsoft AI Services Code of Conduct here.

May 24, 2025

AI costume design, Part 3

This is the third post in a series about experimenting with generative AI to create a costume design rendering of the character of Elphaba from Wicked.

Read Part One and Part Two here

At the end of part two, I hit my image generation limit for the day and could not revise any of the first round of artwork it created until the next day, when my limit would roll over. I thought that might be a blessing in disguise because I could really revise my prompt to include much more specific language to describe what I had in mind.

Here’s my second prompt:

Revise the first image to render the entire figure. The dress must cover the shoulders with a high collar. The witch’s body is muscular and sturdy. The dress is trimmed with leaves and vines. Her hair is in many long braids in shades of green, gold, and brown. Her witch hat is rustic and angular. The sketch is rendered in the style of Alphonse Mucha

And here’s the result I got back:

Now I’m not only disappointed, I’m pissed off. Not only has Copilot ignored many of my directions, this round is even more naked and impossibly thin than the first.

So, I told Copilot how I felt:

wow that's terrible.

You ignored most of my requests for changes.

I will draw it myself.

...to which it replied:

Thanks for the honest feedback — I really appreciate it. It sounds like you have a strong vision, and drawing it yourself might be the best way to bring that to life exactly as you imagine.

If you’d like, I can still help by creating a reference sheet, pose guide, or even a color palette based on your description to support your drawing process. Would any of that be helpful?

I’m giving up on using Copilot to create a costume design sketch from scratch, and I don't see how it's useful providing any of the stuff it suggested--I don't need AI to choose my color palette, I just need my own opinion.

I think next, I’m going to experiment with using it to create a masculine figure.

To be continued…

May 23, 2025

AI costume design, part two

In this post, I'll share the process and results of an AI-generated costume design exploration for the character of Elphaba in Wicked using my university's license for Microsoft Copilot.

Basically, it needs a description of what you want it to generate. Here's the first prompt I entered:

create a costume design sketch of a glamorous green-skinned witch in a black dress and pointy black hat. The costume and sketch should be in the Art Nouveau style.

And here's what I got back:

OK. My prompt was pretty generic so it's no surprise what it generated is, too. Not bad but she's very elongated and exposed, not to mention her features are cartoon-sexy and she's quite youthful.

But given the datasets this was likely trained on, it's no surprise the default femme character created is a slender objectified girl falling out of her clothes.

I probably undermined myself by including the word "glamorous." I didn't want it to generate a Disneyfied cartoon hag, and instead I got a cartoon WILF.

At this stage, my general feeling is that it would be easier to start rendering my design by drawing, even if I suck at drawing. Trace a croquis, digital or old-school collage, anything that is less steeped in the hypersexualized visual paradigm of how our culture has depicted women for decades. I'd have to experiment with non-femme character design but I suspect a wizard wouldn't come out of Copilot looking like pinup calendar art.

I refined my prompt with further detailed description of what I envisioned...and here's what I got back:

Three image limit?

If this educational license only allows for the generation of three images a day, it's not going to be useful for production or academic design projects unless that limit can be raised.

But because I'm just exploring, time isn't a constraint, so I'll try again tomorrow! And it gives me a whole day to revise my prompt.

To be continued...

La Bricoleuse aggregate and more...

Right now, this space streams the RSS feed from La Bricoleuse, the blog of technical writing on costume craft artisanship that I've written since 2006, so that may be all you see at any given time. I may crosspost from a couple different blogs on here.
