Rachel E. Pollock's Blog: La Bricoleuse aggregate and more..., page 3

June 22, 2025

Copilot helped plan my parasol workshop

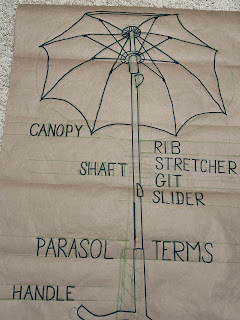

A poster I hand-drew illustrating parasol frame vocabulary terms

This weekend, I led a workshop on parasols at Cosplay America, a convention held annually in Cary, NC, for cosplay enthusiasts interested in constructing their own cosplays. Most of the people who attend bring a baseline knowledge of garment making and handcrafts, which makes it a great population for my UNC colleagues and me to share knowledge and cross-pollinate, if you will.

Essentially, my workshop was a condensed version of a lecture I give in one of my classes, which is typically a two-hour session. I have given a version of this workshop at regional conferences before, tailoring it to durations as short as an hour and as long as four hours.

Paring it down to 45 minutes was daunting, and I thought this might be a great opportunity to see if Microsoft Copilot could help out. After all, this is the kind of professional task that it reportedly excels at. And honestly, it was helpful, although the final plan I wound up with is drastically different from what it generated.

I prompted it to create a structural plan for a 45-minute workshop on parasol restoration for an audience familiar with sewing and/or handcrafts.

Initially, it wrote a verbose, pompous workshop plan, clearly drawn from training data scraped from my own blog and book. It knew enough about parasols to sound like it knew what it was talking about, but the particulars were inaccurate. What was helpful, though, was the way it structured the workshop plan.

Once I edited out what I think is often referred to as AI slop, I had this draft:

1. Introduction (5 minutes)

Briefly introduce yourself and the workshop's objectives. Icebreaker: Ask attendees if they have any personal experiences or stories involving parasols.

2. History of Parasols (10 minutes)

Origins and Evolution:

- China
- European influence from the 16th century
- Modern-day usage

3. Specific Vocabulary (5 minutes)

Terms Related to Parasols:

4. Restoration Techniques (15 minutes)

Basic Restoration Steps:

- Repairing Frames
- Replacing the Canopy
- Demonstration

5. Q&A Session (10 minutes)

I liked the outline structure and the time duration planning, although the flow of information didn’t make sense. The order felt off. I revised the schedule further and came up with the plan that I went with for my workshop:

1. Introduction (5m) 10-10:05

Icebreaker: Ask attendees if they have any personal experiences or stories involving parasols.

2. Vocabulary (5m) 10:05-10:10

3. Restoration Techniques (15m) 10:10-10:25

Restoration Steps:

- Repairing Frames
- Replacing the Canopy
- Patterning Demo

4. History? (10m) 10:25-10:35

Origins and Evolution:

- China
- European influence from the 16th century
- Modern-day usage

5. Q&A (10m) 10:35-10:45
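As a rough check on the arithmetic in the plan above, here is a minimal Python sketch that lays the five segments end to end from a 10:00 start. The segment names, durations, and start time come from the plan; the `SEGMENTS` list and the `build_schedule` function are hypothetical names made up for this illustration.

```python
from datetime import datetime, timedelta

# Segment names and durations (in minutes) are taken from the revised plan.
SEGMENTS = [
    ("Introduction", 5),
    ("Vocabulary", 5),
    ("Restoration Techniques", 15),
    ("History", 10),
    ("Q&A", 10),
]


def build_schedule(start, segments):
    """Return (name, start, end) rows for segments run back to back."""
    clock = datetime.strptime(start, "%H:%M")
    rows = []
    for name, minutes in segments:
        end = clock + timedelta(minutes=minutes)
        rows.append((name, clock.strftime("%H:%M"), end.strftime("%H:%M")))
        clock = end
    return rows


schedule = build_schedule("10:00", SEGMENTS)
total_minutes = sum(minutes for _, minutes in SEGMENTS)

for name, begin, end in schedule:
    print(f"{begin}-{end}  {name}")
print(f"Total: {total_minutes} minutes")
```

Changing one duration in the list shifts every later slot, which makes it easy to see what has to give when a section runs long.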

Ultimately, I allowed the crowd that came to the workshop to determine the flow of the discussion/presentation, and as I suspected, we didn’t have time to cover the history section. It was a great group with thoughtful questions and enthusiasm for the topic, and I will gladly go back to this event in future.

All in all, this use of the generative AI model was helpful. I often find it challenging to come up with a first draft of anything, even a shitty one, even knowing that that first draft will need major revisions. So in an application like this where the output involves no creative writing artistry, I see how this technology can be useful.

June 20, 2025

A completely different approach

I decided it might be helpful to think about how I might try to use generative AI to do the kinds of assignments I was given in my costume design class back in the 1990s. I did an individualized major so I took several semesters of advanced costume design.

I fully own that I’m working with knowledge about how these classes are taught that is 30 years old. Perhaps professors of costume design now do not assign well-known plays as design assignments. If you’re currently a professor of costume design, and that is how your class has been structured, this post may inspire you to revise the nature of the assignments in your class.

So when I was an undergraduate, a few times a semester we would be assigned a play to read, something well-known like an Arthur Miller or Noel Coward or Shakespeare script. We’d have to read the play and then create costume design renderings for a selection of the characters, usually three or four.

So I decided to start by asking Copilot to tell me about the Loman family in Arthur Miller’s Death of a Salesman. I then asked it to create a family portrait of Willy, Linda, Happy, and Biff.

Honestly, not bad.

I then asked it to tell me the plot of Sophocles’ play Antigone. I asked for a portrait of King Creon with his two adult daughters and son in Ancient Greek clothing & got this:

Are either of these images acceptable for a working costume designer to turn in as final renderings? No.

Would they be a credible first stab at a costume design project for either one of these shows? Perhaps.

June 19, 2025

Color palette change

Spoiler: This may be the first viable use I have found for generative AI in a costume design context.

I’m trying to use Microsoft Copilot as much as I can with this generative AI exploration research, since it’s the model my university has licensed, but I have to admit, I’m running into roadblocks with the capacity of that model to generate imagery on the scale required to do research in this field (meaning quantity of images it can create in a day and the time it takes to generate them).

I decided to run another experiment using Google Gemini, which so far I have found to be superior for image generation in its aesthetic output and processing time.

In this experiment, I considered the issue of color palette change. A costume designer can be faced with this necessity in various circumstances – perhaps a color palette looked great when a designer was working on their own in the preliminary stage, but when they see the colors of the sets and the lights, they realize the palette of the costumes needs to change. Or perhaps, when the show is cast, the colors chosen before the actors were hired don’t work with the skintones of the people playing the roles.

So, I took one of the original witch designs created by Microsoft Copilot & asked Gemini to revise the image’s color palette to reflect primary shades (reds, yellows, blues).

And here’s what it produced:

Which is fine, but the way it chose to distribute the colors is not what I wanted. So I asked it to revise the image using blue in the dress/hat & red in the hair.

Promising!

I mean, not great, but the primary palette itself is not complex. I think it could be more sophisticated by using Pantone numbers or hex codes in the prompt, and it’s definitely faster than repainting sketches, either literally or digitally.

Both of these images took less than 10 seconds to generate.
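The hex-code idea could be sketched roughly like this in Python: keep the palette in one place and build the prompt text from it. Everything here is an assumption for illustration. The hex values are stand-ins for primary shades rather than colors from my experiment, `PALETTE` and `palette_prompt` are made-up names, and I have not tested whether any image model actually honors hex codes in a prompt.

```python
# Hypothetical palette: these hex values are illustrative stand-ins for
# primary shades, not colors taken from the experiment described above.
PALETTE = {
    "dress and hat": "#1F4FBF",  # a blue
    "hair": "#C8102E",           # a red
    "trim": "#FFD100",           # a yellow
}


def palette_prompt(palette):
    """Build a plain-text revision prompt that names each color by hex code."""
    parts = [f"{area} in {code}" for area, code in palette.items()]
    return "Revise the image's color palette: " + "; ".join(parts) + "."


prompt = palette_prompt(PALETTE)
print(prompt)
```

The resulting text would be pasted into the chat interface like any other prompt; swapping a color means editing one line of the palette.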

A reminder of the original image we were working with:

But here’s a negative example showing how the AI model you choose to run a prompt on drastically affects the outcomes. Many of the headless-chicken AI hypesters pitching generative AI models would like users to believe that all clients are equally good at output, but that’s simply not true.

Hype aside, different models are better at different kinds of output. I tried the same exercise I did with Gemini using yet another generative AI model, Claude.ai, which failed spectacularly.

Original image

Hilarious attempt to render the witch image with a primary color palette

I’m told Claude’s forte is creative writing, not image generation, so I asked it for a poem about how disappointing it is:

The AI Hype Deflation Blues

They promised us magic, a digital god,

With silicon brains that would give us a nod,

But ask it to reason or think something through—

It spits out word salad and calls the job "new."

"I'll write you a novel!" the chatbot declared,

Then produced forty chapters where nothing was there,

Just recycled tropes from a thousand before,

Like a drunk plagiarist stumbling through lore.

"I'll code you a program!" it boasted with pride,

Then broke every function and crashed the whole ride,

With loops that go nowhere and syntax so wrong,

It makes BASIC programmers weep into song.

The venture capitalists threw cash around,

Claiming AI would make every job hit the ground,

But the only thing falling was common damn sense,

As we paid billions for linguistic pretense.

It hallucinates facts with confident flair,

Invents bogus citations from thin fucking air,

Then when you complain that it's wrong as can be,

It says "I apologize" quite contritely.

So here's to the future we thought we would see—

Where robots do dishes and poetry's free,

Instead we got chatbots that bullshit with style,

And charge us subscription fees all the while.

The emperor's naked, his circuits exposed,

Just autocomplete with a marketing dose,

So let's raise a glass to the hype that fell flat—

Our digital savior's a word-vomit brat!

Honestly, it’s so bad at image generation, it probably shouldn’t even try. If I prompted it to generate an image, and it responded that it was not optimized for image generating, I would just go try a more appropriate model instead of getting pissed off that it spent any time and energy creating the kind of crap that it did. I guess we’re all learning.

Theoretically, the advantage of using the university’s licensed version of Copilot is data privacy. The images one might feed into our licensed Copilot platform don’t go on to train the model.

In the case of these two images that I’ve used with Gemini and Claude, the original images were generated by Copilot so it winds up part of the big AI Ouroboros.

June 18, 2025

WWI nurse

I was again pleasantly surprised with its output.

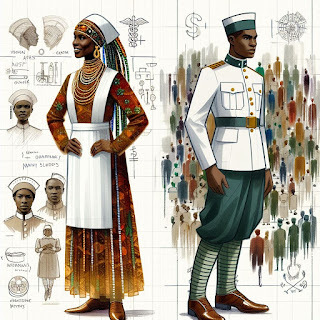

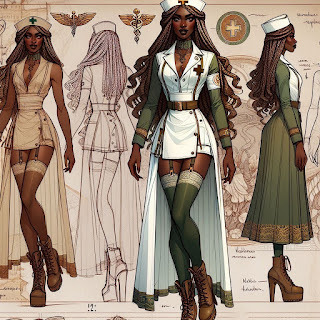

Supernumeraries for an opera about the 1918 flu pandemic?

Apparently evenly spaced uniform buttons are a problem. As a piece of concept art this is amazing tho.

Not what I was going for, but I admit I would joyfully watch this burlesque performance.

It’s hit or miss whether it actually registers the physiognomy of a human figure in iterating tho. When I asked it to create an image of her dressed in a firefighter uniform, I got a bunch of white guys. I guess a lot of the extant images of firefighters it was trained on are of white guys, but that’s no excuse.

It’s fascinating experimenting with this technology, and even though it is driven by conversational input, the output it creates does not follow in any path of human logic. You have to surrender so much creative control to the generative model, that I don’t know that it’s applicable to the creation of art where aesthetic details matter.

Like, it’s a great way for someone with no confidence in their ability to draw to generate something that looks kind of like what they wanted, & it’s a great way to open up creative possibilities that would not have otherwise come to mind (like the WWI nurse burlesque concept art here). But I find myself continually frustrated by not being able to control something like the style of shoes she’s wearing, or the spacing of the buttons on his uniform.

I suppose a possible way to use it is to generate something that is close to what you want, then import that into a digital drawing tool like Procreate and revise it yourself, perhaps? I don’t know, this is an area of expertise that I have not worked in for something like a decade, so I’m sure it is something that designers of the future will figure out.

June 17, 2025

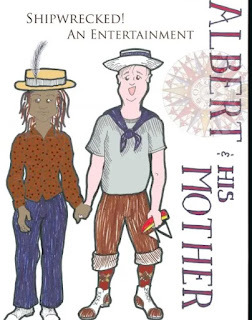

Sketching style iteration

This is fascinating. I uploaded a whole show worth of costume designs to Microsoft Copilot in service of teaching it my rendering style—the final renderings from the Playmakers Repertory Company 2010 production of Shipwrecked: an Entertainment.

Then I instructed Copilot to create an interpretation of the Elphaba witch design “in the same style” & what it created is not at all what I anticipated, and is not really influenced by the drawing style in my Shipwrecked renderings, and yet it’s absolutely fantastic.

For comparison, one of the design renderings I shared with Copilot to teach it my drawing style:

Again, it’s not realistic to describe what generative AI does between input and output as “thinking”. It’s possible that it recognized the only female figure among my sketches as that of a Black woman with locs (which was true of the cast of that show, reflected in these final renderings) & that’s what it took from the sketches to generate its new iteration of Elphaba.

I should also mention that it took almost 24 hours from prompt to result on this one. The biggest obstacle [1] I see for this having use in either practical or academic theatrical design applications is the time factor. Tickets are sold and shows have to open, and professors may be unwilling to give a student a deadline extension because of a sluggish AI model.

The more I work with it, the more aesthetically sophisticated the outcomes seem to be, but it feels like this technology is just not quite there yet in terms of potential applicability in our field.

I’m getting the same sense that I got conducting research on 3D scanning of antique hat blocks in 2011: I could tell that one day the technology would advance enough to do what we were trying to do, but it wasn’t there yet.

[1] Beyond the slew of ethical objections from designers and other theatre artists, which is currently a prodigious obstacle.

June 16, 2025

Ongoing Copilot experimentation

I continue to explore the use of my university’s Microsoft Copilot license as a generative AI diffusion model in the area of costume design rendering.

For this iteration, I attempted to train Copilot on my own renderings by instructing it to look at images from posts I made to a long-abandoned blog, A Sketch A Day. The concept behind the blog was to encourage the cohort of participating costume designers to share images from their sketching process, both preliminary sketches and final renderings. There were several participating designers, and the goal was to share at least one sketch a day, from at least one of the designers, although several of us could post on a given day if we had material to share.

At the time, it appeared that I might be designing more frequently for the theater in residence on my campus, and I thought I would be generating more design sketches than I had since my undergrad days. I lacked confidence in my sketching ability and participation in the blog gave me motivation and accountability to maintain a regular sketching practice.

But regarding this post, I thought I could instruct Microsoft Copilot to pull sketches from the blog and use them to inform the style of the costume design rendering I prompted it to produce. I hoped it would generate a version of the Elphaba series that looked like I could have drawn it.

In this case, my mistake was presuming that my university’s Copilot license would allow it to pull images from the blog. But Copilot metaphorically clutched its pearls—how does it know that those images are of sketches I have permission to use?

Never mind that none of the training data was used with permission or consent, but I guess Microsoft is putting toothpaste back in the tube now.

So it told me:

I can't directly access or replicate the specific style from the blog posts, but I can create a new sketch inspired by the Art Nouveau style, similar to Alphonse Mucha's work. [1] Let's try again with your detailed description:

- Full figure of a muscular, sturdy green-skinned witch
- Dress covering the shoulders with a high collar, trimmed with leaves and vines
- Hair in long braids of green, gold, and brown
- Rustic, angular witch hat

I'll generate the updated sketch now. Please hold on for a moment!

I apparently chose to interact with Copilot at an exceptionally popular time (Sunday afternoon). It took the model FIVE HOURS to generate the images.

But this round is actually much better, although she’s still willowy & ten heads tall. Here’s what it gave me:

The closest to what I was going for (I had those boots in the 90s!)

Another decent iteration despite the creepy smile

Not bad although why’s she wearing a transparent skirt?

Ah yes, in case I was lying when I said the earlier naked wasp-waisted versions were unacceptable

I don’t know. At this point I guess I can’t imagine using some kind of generative AI model in a costume design application, unless something drastically changes about how production design occurs. I’m not seeing a way to exert the kind of fine-grained control over the output that a costume designer so often requires.

Perhaps it’s helpful in teaching complete novice students learning how performance costume design works, to keep them from getting too hung up on the quality of their own sketching? Although collage would probably be easier and more controllable. I don’t know.

I’m intrigued that Copilot only began to approach following my prompts after I told it its work was terrible. I think it’s counterproductive to ascribe emotions or sensitivity to a machine learning model. At the same time, the conversational chat interface creates a disturbing sense of personality such that it’s easy to imagine Copilot as an eager intern desperate to please.

Do I have to talk to it like a drill sergeant to get it to obey?

Joking, but not. I mean, I have to practically yell at Alexa, & the processing bias of home assistants against non-masculine voices is a documented thing.

[1] Mucha was my original style reference three prompts ago.

June 9, 2025

Book review: the Dutch hatmakers

Summer always brings a raft of book reviews on this blog, of titles which have piled up during the theatre season & academic year.

I received this book as a gift from a colleague aware of my personal & academic interest in historical populations of the hat trade. It’s a relatively short book at 153 pages, dense with text at a small font size. It has an extensive bibliography of topical primary sources. The volume is mostly text, but does feature a few black-&-white illustrations, mostly reproductions of maps & lithographs.

The book is published by an imprint called Boydell Press, but it strikes me as very similar in structure & interior layout to titles available through Dover Publications.

The Dutch Hatmakers of Late Medieval and Tudor London offers a glimpse into the history of the Dutch artisans who revolutionized the hatmaking craft in England during the late medieval & Tudor periods.

Back in those days, a band of talented hatmakers from the Low Countries moved to London. They brought with them entirely new techniques & tech that the English hadn't seen before, & they were the first to make brimmed felt hats in England. Their hats quickly became a hit with everyone from nobility to the everyday people.

Even though they were successful, the Dutch hatmakers had a tough time. London's economy often made it hard or even impossible for them to make and sell their hats. Wanting to stay independent from the local guilds, they set up their own assemblage called the Hatmakers' Fraternity of St James. This group lasted for about ten years until 1511, when the royal council forced them to join the powerful London Haberdashers' Company.

During their short time on their own, the Hatmakers' guild wrote rules in both English and Dutch to regulate hatmaking in London. These rules, now kept in the London Guildhall Library, show how these Dutch craftsmen managed their lives as immigrants, balancing their craft with the social and language challenges they faced every day.

This book not only highlights the lives and writings of these hatmakers but also offers a modern edition of the Hatmakers' guild book. The Dutch Hatmakers of Late Medieval and Tudor London is an esoteric book of admittedly niche appeal, but I’m solidly in that niche.

June 6, 2025

Book review: Teaching with AI

Teaching with AI: A Practical Guide to a New Era of Human Learning by C. Edward Watson & José Antonio Bowen is a must-read for educators seeking to navigate the rapidly changing, complex landscape of technologies lumped under the umbrella term “artificial intelligence” (AI). Watson & Bowen have written a guide that is both comprehensive and practical.

The book is divided into three main sections: "Thinking with AI," "Teaching with AI," and "Learning with AI." Each section explores the various ways AI could transform education, from enhancing critical thinking skills to redefining assessment strategies.

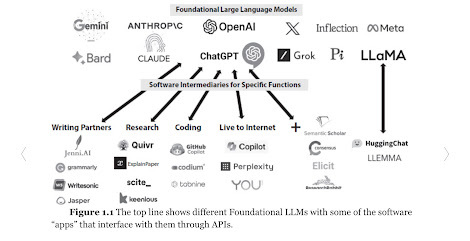

An infographic from the book illustrating the corporate pedigrees and focus areas of various models

This book’s primary focus is empowering educators. Watson & Bowen provide strategies for integrating AI into teaching practices, enabling educators to not just be passive recipients of technology but active shapers of their teaching and curriculum. The authors address common, valid concerns such as academic integrity, cheating, and the balance between content and process, offering thoughtful solutions to these challenges.

On the topic of maintaining educational integrity, Watson & Bowen explore how AI can be used to foster creativity, enhance student engagement, and support personalized learning, all while emphasizing the importance of ethical considerations and critical thinking.

"Teaching with AI" offers a visionary look at the future of education. The authors encourage educators to reflect on how AI can be utilized to benefit students and society. This forward-thinking approach is both inspiring and necessary as we prepare for a future where AI may play an increasing role in our lives.

The book is an invaluable resource for educators. Watson & Bowen have provided a roadmap for navigating AI-driven education with confidence and integrity.

A couple caveats—

- I imagine it is helpful for the reader to have at least used a generative AI model to write or create something at least once before diving into this book.
- I listened to the audiobook, and I found myself frustrated with the need to reference the accompanying PDF. In retrospect, I probably should have gotten the print or e-book version, and will probably still do so as a reference volume.
- Much of the content concerns text-generating LLMs and teachers who will continue to use written essays, papers, and other compositional assignments. For ideas about teaching art classes incorporating image-generating diffusion models, check out this post.

Basically, if you read this review and it sounds like it might be helpful for your fall semester planning, check it out. I’m glad I did.

June 4, 2025

Book review: Ghost Work

From the book jacket blurb:

Hidden beneath the surface of the internet, a new, stark reality is looming—one that cuts to the very heart of our endless debates about the impact of AI.

Anthropologist Mary L. Gray and computer scientist Siddharth Suri team up to unveil how services delivered by companies like Amazon, Google, Microsoft, and Uber can only function smoothly thanks to the judgment and experience of a vast, invisible human labor force.

These people doing "ghost work" make the internet seem smart. They perform high tech, on-demand piecework: flagging X-rated content, proofreading, transcribing audio, confirming identities, captioning video, and much more. An estimated 8 percent of Americans have worked at least once in this "ghost economy," and that number is growing.

I had some familiarity with the exploitative labor practices underpinning some of the technologies under the umbrella term “artificial intelligence”, so I expected to learn a lot more of the grim specifics in this book. I did, and having finished it, my perception of many facets of the tech industry has been illuminated and expanded.

The section on the history of “ghost work” and invisible labor was surprisingly relevant to costume production/garment work, as the authors investigate in-depth the industrialization of the textile industry in the 18th & 19th centuries, as well as that industry’s reliance on the jobbed-out labor practice of piecework.

In the history section, they also draw a parallel to the teams of “human computers” (mostly women and people of color), mathematicians who contributed invaluably to NASA and the lunar space-race but whose contributions have been obscured in the historical record.

I expected a certain level of focus on a dystopian hellscape of exploited workers in remote areas of the globe completing monotonous content moderation tasks for pennies through the platform of Mechanical Turk. And yes, that’s a chunk of the book, but the authors also profile positive ghostwork companies like Amara (a translation and subtitling company) and LeadGenius (an account-based marketing company).

Additionally, the book offers a healthy amount of hopeful suggestions of how to push back against the depersonalization and exploitation of ghost workers, and how humane scaffolding of ghostworking could positively reimagine the workforce of the future. In conclusion, the final chapter offers ten very feasible potential fixes.

In considering how I might approach the topic of AI with my students, I’ve been thinking about framing it in terms of the four macro-areas in which AI falls tragically and deeply short, ethically speaking:

- Sustainability and environmental harm
- Intrinsic bias and bigotry
- Copyright and training data consent violation
- Labor exploitation

Thinking this excellent book might be a contender for some of the reference material I ask them to read in advance of a discussion.

June 3, 2025

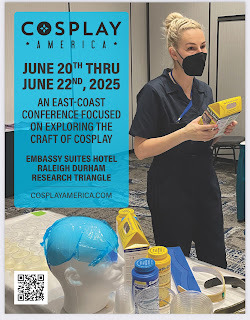

Cosplay America June 20-22, 2025

Antique parasol restoration by Matty Blatt, UNC MFA ‘24

I’ll be hosting a session on parasols at this year’s Cosplay America conference, held June 20-22, 2025, in Cary, NC.

My colleague, UNC MFA costume director Triffin Morris, will also be taking part & leading two sessions—one on a TBD corsetry topic & one called “Fitting Office Hours” where she will address issues of fit and advise participants who sign up in advance.

We will be assisted by two of our current graduate students, and some of our other students and alumni also plan to attend.

Now in its tenth year, Cosplay America describes itself as “a convention dedicated to costuming (or, as it's known in fandom, cosplay) and cosplay culture.” The event’s programming leans heavily on the side of making, media, and methodology.

Conference sponsors are companies well known among makers like Tandy Leather and SureBonder glue guns. Triffin and I are participating in this year’s event with a goal of sharing our knowledge beyond our own students and school; we will also gladly answer any questions conference-goers have about graduate study in costume production.

There is significant crossover between cosplay culture and entertainment costume professionals. More and more of our incoming graduate students include cosplays in their application portfolios & we are thrilled to continue building this relationship with Cosplay America.

La Bricoleuse aggregate and more...

Right now, this space streams the RSS feed from La Bricoleuse, the blog of technical writing on costume craft artisanship that I've written since 2006, so that may be all you see at any given time. I may crosspost from a couple different blogs on here.