Rachel E. Pollock's Blog: La Bricoleuse aggregate and more..., page 2

July 5, 2025

AI in Higher Ed report 2025

If students can use AI to cheat on assignments, we must reimagine the assignments.

The Chronicle of Higher Education released a special report recently, “Adapting to AI,” about the impact AI is having and will have on institutions of higher learning. You can get a copy here if you’d like to read the whole thing. Much like with the fashion industry 2025 AI report, I read it and compiled this summary.

Spotlight features address topics like how staff and non-academic administrative departments are using it, advice on developing guidelines for different campus populations, and a now-outdated section on securing government grants for R&D.

The report offers some general advice to faculty across the board, particularly those teaching in creative fields:

Engage with the Tools: Experiment with generative AI to understand its capabilities and limitations. I can say from firsthand experience that this is enormously important: in working with it, I quickly discovered its shortcomings and vulnerabilities.

Foster Critical Literacy: Help students develop the skills to use AI responsibly and ethically [1]. This includes understanding how AI works, recognizing its biases, and evaluating its outputs critically. I would argue that this also includes educating students about its obscene ecological impacts and the lack of consent intrinsic to its training data.

Advocate for Thoughtful Policy: If your institution lacks clear guidelines on AI use, consider advocating for policies that protect academic freedom & integrity while promoting ethical standards. And proactively craft course-specific AI policy for your syllabi.

Collaborate Across Disciplines: Theater educators bring a unique perspective to conversations about creativity, authorship, and interpretation. Our voices are essential in shaping how AI is integrated into the arts and humanities, on our campuses and in the world.

AI is a structural shift in how knowledge is produced, shared, and evaluated. As educators, we have a responsibility to engage with these technologies so we are informed about how our students may be using them, and perhaps not only to protect our disciplines but to enrich them.

The arts have always been at the forefront of cultural transformation. This is another such moment. By approaching AI with curiosity, caution, and creativity, theater educators can help ensure that these technologies serve our students, our institutions, and the broader goals of education.

[1] Since this report is aimed at educators, I assume they are talking about ethics in the sense of academic integrity here. I’m not sure generative AI can be used ethically when considered through the lens of consent in training data, or sustainability.

Ok, that’s the end of my report summary and it’s time for my Real Talk.

I’ve been reading up on and experimenting with generative AI since the theatre season ended, because the campus-wide rollout of Copilot across the university’s Microsoft Office suite of tools made it clear that our institution was going all-in on generative AI.

No matter how I might feel about it, my students now would have access to it with its use facilitated and encouraged by the institution, so I felt I needed to develop an informed perspective on it and how students and peers might use it.

Many colleagues within my own college and globally advocate a ban on AI in their courses & departments. I empathize with that impulse. Is it realistic, at a campus that has integrated AI access into our very email client? And this CoHE report has an extensive section about the fallibility of so-called “AI detectors.”

If students can use AI to cheat on assignments, we must reimagine the assignments.

I’ve decided to teach my students about what generative AI is, how it works and where at least some of the pitfalls are, and let them decide when, where, how, and if they want to use it. And if so, how to disclose & cite it.

For costume educators interested in reading more about how I’ve been experimenting with generative AI in our area of specialty, check out the posts with this blog’s “AI” tag.

Also, I’d love to read a think-piece by a dramaturg about theatre as the original analog diffusion model. Or attend a panel discussion on the topic.

July 2, 2025

AI presentations

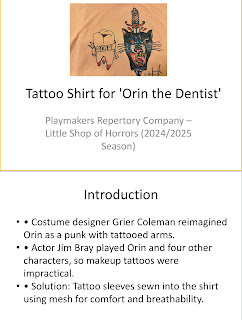

In yet another training provided by my employer, I learned about several AI tools specifically intended for creating presentation slide decks, as well as Microsoft Copilot, which can also be used in that capacity. Although I wouldn’t say I have to create these regularly, I probably put together three or four of them a year and my students create them for every project they complete in my classes. So I decided to experiment with this capability.

I used this blog post as a topic for a presentation slide deck. When a theater artist comes up with some unexpected way to address a stage conundrum, sometimes it’s presented at a conference using just this kind of slide deck. Because I often write these things up and post to my blog, I thought that would be a good test case to run.

I created one slide deck with Microsoft Copilot and a second with a tool specifically aimed at this purpose, Slidesgo. I tried a third one, PresentationsAI, but it quickly became obvious it was going to be more work trying to get my presentation to fit into its template than I was willing to invest, so I bailed before I even got it to generate a draft.

Here are a couple of slides from the presentation Copilot generated:

(A few more slides here)


It’s not horrible, but it’s basically a first draft and I’m not sure why I would create a slide deck this way instead of just doing it myself with PowerPoint or Google Slides. The one created with Slidesgo was less acceptable and would have required more tweaking to even make it something I wanted to screenshot and share here.

This feels like another “application” for this technology which doesn’t improve upon the existing way to do it & which in fact might make it even more of a pain in the butt than just starting from scratch yourself.

July 1, 2025

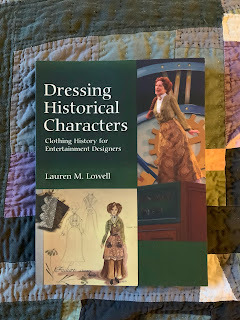

Book review: dressing historical characters

Yet another book review! Lest you think I’ve been speed-reading these titles, a lot of these are reviews I’ve been saving throughout the academic year & theater season but never felt like I had time to sit down and proofread/edit them.

And full disclosure: I received this book from Waveland Press (the publisher) as a review copy. That has not influenced this review.

Lauren M. Lowell's Dressing Historical Characters is a wide-ranging guide that delves into the details of historical costume for students of entertainment design across a broad spectrum: yes, theatre and dance, but also film, performance, video games and other digital media, etc. This book is an invaluable resource for professors and educators who may consider it as a textbook for courses in costume design, theater, history, or material culture.

Lowell's book provides a detailed survey of the evolution of Western clothing styles across various historical periods, from ancient civilizations to the 20th century. The author combines historical accuracy with practical insights, making it an essential tool for those involved in the study or creation of period costumes.

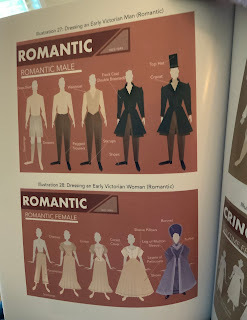

The book is illustrated with photographs, drawings, and diagrams that enhance the reader's comprehension and provide visual examples of the discussed garments.

Of note—the breakdown of each period silhouette into geometric shapes like rectangle, inverted triangle, etc., as well as an illustrated look at the undergarments that create those silhouettes.

In the acknowledgments and foreword material, the author describes how a student’s unexpected response to an assignment—in which they were supposed to draw “everything a person from the time period was wearing”—resulted in these composite drawings showing all the layers of structure; this book grew out of that student’s innovative interpretation of the assignment.

For professors, Dressing Historical Characters offers significant pedagogical value. It could serve as a primary textbook for courses on historical costume or clothing in material culture. Highly recommend as a new reference in the field.

June 30, 2025

AI freelance bid

Reference images from the 2013 job

For this generative AI usage example, I worked with Microsoft Copilot to put together a price quote for a hypothetical freelance bid, based on an actual project I did in 2013, for a collection of ballet headpieces – a group of identical hair ornaments for the corps de ballet and a trio of tiaras for principal dancers.

In this case, the ballet costumes already existed, so I had the worn-out old items to work from in terms of the design.

My initial AI prompt was essentially to assist me in formulating a price quote for a custom millinery project. Copilot had some questions:

I'd be happy to help you create a price quote for a custom millinery commission! To make sure the quote is accurate and professional, I’ll need a few details:

1. Project Details

What type of hat or headpiece is being commissioned?

Is it for a special occasion (e.g., wedding, races, theater)?

Are there any specific materials or embellishments required (e.g., feathers, veiling, silk flowers)?

2. Labor & Time

How many hours do you estimate the project will take?

Will there be fittings or consultations involved?

3. Materials Cost

What is the estimated cost of materials?

Are any materials being sourced specially or imported?

4. Additional Costs

Packaging or shipping?

Rush order fees?

Custom design fee?

5. Your Pricing Structure

Do you charge hourly, flat rate, or a combination?

Do you include a markup on materials?

Once I have this information, I can help you draft a detailed and professional quote.

I appreciated that the response enumerated specific details and factors I would need to take into consideration when formulating the quote. I responded:

This price quote is for a ballet company that wants a dozen identical hair ornaments, and three identical custom tiaras for principal ballerinas. They will provide rhinestones and embellishments. I will provide 5 yards of heavy millinery wire and other notions/materials I have in my studio stock.

I project that the hair ornaments will take 30 minutes each to make, and the tiaras will take eight hours each. I am copying existing pieces for both of these styles so no fittings or consultations will be involved.

The client will pick these up from my studio, so no packing or shipping fees are required. This is not a rush order and no design is involved. I will structure this quote as a flat rate, although I would like to make $25 an hour for my time.

I came up with that hourly rate by researching what plumbers and carpenters make in my area. As artists & artisans, it’s important not to undervalue our work, but it’s also important not to price ourselves out of the work. (Unless we don’t actually want the job, and that’s one way to say no without saying no.)

Most people don’t have any idea what a fair hourly rate is for a custom costume commission, but it helps to frame it as commensurate with other skilled trades like plumbing and carpentry.

I should note that, at this point in my career, I’m very familiar with how long it takes me to do different kinds of craftwork, and I know how creating multiples of the same item affects the time it takes to produce any individual piece.

When I teach my students how to come up with a price quote for their work, we talk about how to gauge time estimates for things you have no idea how to build, or extrapolate for something you’ve never done before but which is kinda close to something else you have.

In putting together this price quote, I went back and forth with the AI to clarify things like an overhead charge and a kill fee. If you don’t know what those are, I made a video about this whole process of pricing costume work that defines both overhead and kill fees, which you can watch here.

This process made it clear that using an AI assistant for something like this is an exchange. You don’t ask it for something and get a perfect response ready to send to a client. It’s a back-and-forth process of refining the outcome. At the end of the exchange, Copilot came up with an excellent price quote document:

Here’s your finalized price quote with the payment terms included:

⸻

Custom Millinery Commission Quote

Client: [Ballet Company Name]

Artist: [Your Name or Studio Name]

Date: [Insert Date]

⸻

Project Overview

12 Identical Hair Ornaments

Labor: 6 hours total (30 minutes each)

Labor Cost: $150

3 Identical Custom Tiaras

Labor: 24 hours total (8 hours each)

Labor Cost: $600

Materials Provided by Client: Rhinestones and embellishments

Materials Provided by Artist: 5 yards of heavy millinery wire and other notions from studio stock

Flat Materials Fee: $25

⸻

Cost Breakdown

Total Labor: $750

Studio Overhead (20%): $150

Materials Fee: $25

🔹 Grand Total: $925

⸻

Payment Terms

A 50% deposit ($462.50) is required to begin work.

This deposit is non-refundable and will be forfeited in the event of cancellation before completion and delivery of the commissioned pieces.

The remaining balance is due upon pickup from the studio.
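For anyone who wants to check the math in that quote, here is a minimal Python sketch that reproduces it from the numbers given above. The function and variable names are made up for illustration, and it assumes (as the quote does) that the 20% studio overhead is charged on labor only, not on materials.

```python
from decimal import Decimal

# Figures taken from the quote above; names are illustrative only.
HOURLY_RATE = Decimal("25")       # target hourly rate
OVERHEAD_RATE = Decimal("0.20")   # studio overhead, applied to labor only
MATERIALS_FEE = Decimal("25")     # flat materials fee
DEPOSIT_RATE = Decimal("0.50")    # deposit required to begin work

# (description, quantity, hours per piece)
LINE_ITEMS = [
    ("Hair ornaments", 12, Decimal("0.5")),
    ("Custom tiaras", 3, Decimal("8")),
]


def build_quote(items=LINE_ITEMS):
    """Return the labor, overhead, total, and deposit for a flat-rate quote."""
    labor_by_item = {
        name: qty * hours * HOURLY_RATE for name, qty, hours in items
    }
    total_labor = sum(labor_by_item.values(), Decimal("0"))
    overhead = total_labor * OVERHEAD_RATE
    grand_total = total_labor + overhead + MATERIALS_FEE
    return {
        "labor_by_item": labor_by_item,
        "total_labor": total_labor,
        "overhead": overhead,
        "materials": MATERIALS_FEE,
        "grand_total": grand_total,
        "deposit": grand_total * DEPOSIT_RATE,
    }


quote = build_quote()
for key in ("total_labor", "overhead", "materials", "grand_total", "deposit"):
    print(f"{key}: ${quote[key]:.2f}")
```

Changing the hourly rate, the per-piece hours, or the overhead percentage in one place and re-running is a quick way to see how each assumption moves the grand total and the deposit.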

This will probably be one way I incorporate Copilot into my classes, moving forward. I always have them do a project where they generate a quote like this for something outside the scope of what could be made in class and they have historically worked from a template of what the document should include.

Based on my experience of generating this quote in collaboration with an AI assistant, I think the exchange you have with Copilot to get from project parameters to the price quote makes it more impactful for a student learning to consider all the things you need to account for besides just the cost of materials used.

This is a genuinely useful application for the technology, and a way I will probably actually use it myself, because it’s more efficient than the way I used to do it.

Up to this point, everything I have tried using generative AI for has felt like I was using the technology just to be using it and not because it was a better way to do something.

I tell my students that using a new technology just for the sake of using it is a valid choice if you want the experience, but in the greater scheme of things, if it’s not the best way to solve a problem or achieve an outcome, it’s not the choice to make. An example would be 3D printing an art deco belt buckle design that might actually be created more quickly & durably using something like epoxy clay on a plain buckle.

So for all the generative AI costume design renderings I have shared here, they have all seemed like projects for which I was using the technology to investigate how it might generate something functional or acceptable, but not better than the way I would have otherwise done it, had I not been using the generative AI.

This process of bid creation is a case where I do feel like using Copilot to help me put together this hypothetical bid is an excellent application for the tool.

June 29, 2025

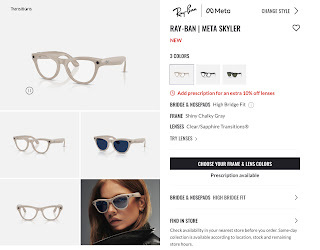

Ray-Ban/Meta AI glasses

For context, in addition to working as a costume craftsperson, I am also an author, by which I mean I have a degree in creative writing and have published stuff [1]. As you might imagine, that field is as concerned about generative AI text models as visual artists and designers are about AI image models.

So, I sometimes learn about new-to-me AI technology topics from fellow authors, and that’s the source of this post subject. And coincidentally, it also concerns a fashion accessory and accessibility tool: eyeglasses.

At a recent conference for librarians and authors, alarm was raised about a man wearing Meta’s new Ray-Ban smart glasses having pushy conversations with conference attendees. The authors who spoke with him believe he was covertly recording them with the glasses, because of vibes. (I don’t know how you would confirm that, unless you heard him speak a trigger phrase like, “Meta start recording this.”)

Disclaimer: I did not attend this conference or talk to this man; I only read about the incident in a conference report by a colleague.

These are the glasses, which can have prescription lenses or clear ones that transition to tinted colors in sunlight:

Many states have two-party-consent recording laws, which make covert recording illegal, but some people just break the law and face consequences later if they’re caught/charged.

Be advised that these glasses exist, & people are wearing and using them. I’m not suggesting everyone wearing a pair of Ray-Bans is wearing smart glasses, nor that everyone wearing smart glasses is nefariously recording people without their permission.

What do you think about them?

If you watch the video on that page highlighting potential helpful ways to use them, the live translation feature is promising, but it also seems to require that you’re talking to someone who understands English, but doesn’t speak it.

Personally, they’re not for me. I do wear eyeglasses for accessibility – I’ve had poor eyesight for as long as I can remember and got my first pair of glasses when I was six years old. So, because I need them to see better, the style of my eyeglasses matters to my overall personal style, and these are not my taste. I do also find the AI functionality excessive and kind of creepy, but that takes a backseat to the fact I wouldn’t wear the dumb version of these frames either.

[1] For example, this parasol book and this costume history book.

June 28, 2025

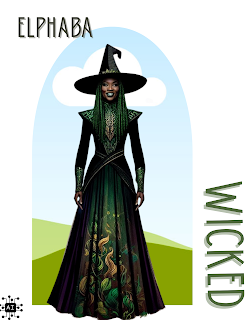

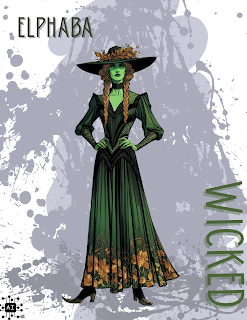

Two more Elphabas

I got inspired to create a couple more Elphaba renderings using the Canva app & my two favorite diffusion-model-generated versions.

I’ll reiterate that I don’t think generative AI/diffusion models are necessarily a viable way to create actual costume designs for a real-world theater production, in which the costumes will be custom-built based upon the renderings. It’s not that it’s impossible, it’s just that the technology does not allow for the kind of aesthetic control that costume designers seem to want, and I think the shady ethics of training model data will probably be insurmountable for most artists/designers.

In all of this research, I’ve been approaching it as an educator, with a mind to the fact that many of us are teaching at institutions encouraging the use of these technologies (or in some cases requiring their incorporation into pedagogy) and there will be student use no matter how restrictive your syllabus AI policy.

Perhaps an assignment idea could be to create a costume rendering using only digital tools like generative AI and digital image editing/drawing apps, and then create the same one using analog media, like pencils and paint, croquis tracing, etc.

I don’t know, & I don’t teach these kinds of classes. I do know through the grapevine that students at reputable MFA programs around the US and Canada are already incorporating generative AI into their design processes, whether their professors can see that’s what they’re using or not.

Anyhow, I’m revisiting Elphaba, recalling that one of my design professors criticized renderings with no background to situate the figure in space. Even a horizon line or a vague shadow was preferred.


Incidentally, I asked Microsoft Copilot how much energy it takes to generate one image. Here’s what it said:

Generating a single image with Copilot typically consumes about 0.0029 kWh (10.44 kJ) of energy 1. This is roughly equivalent to charging a smartphone battery to 24% 1. The energy consumption can vary depending on the complexity of the image and the model used.

I asked it what factors influence that variability.

Several factors influence the energy consumption of generating images with AI:

Model Complexity: More complex models with a higher number of parameters require more computational power, leading to increased energy use 1.

Hardware Efficiency: The type and efficiency of the hardware used (e.g., GPUs, TPUs) can significantly impact energy consumption 2.

Image Resolution and Detail: Higher resolution and more detailed images require more processing power, thus consuming more energy 1.

Optimization Techniques: The use of optimized algorithms and techniques can reduce the energy required for image generation 2.

Cooling Systems: The energy needed to cool the hardware also contributes to the overall energy consumption 2.

Understanding these factors can help in making more energy-efficient choices when using AI for image generation.

1: MIT Technology Review 2: MIT News

Someone want to create a browser plug-in with that data?
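As a starting point for anyone tempted, here is a small Python sketch of the arithmetic such a tool would need. It simply takes Copilot's claimed figures above at face value (they are not independently verified here), and the function names are hypothetical.

```python
# Figures as reported by Copilot in the exchange above (unverified claims).
KWH_PER_IMAGE = 0.0029                   # claimed energy per generated image
PHONE_CHARGE_FRACTION_PER_IMAGE = 0.24   # claimed "24% of a smartphone charge"

KJ_PER_KWH = 3600  # exact unit conversion: 1 kWh = 3,600 kJ


def image_energy(num_images):
    """Estimate energy for a number of generated images, using the claimed figures."""
    kwh = num_images * KWH_PER_IMAGE
    return {
        "kwh": kwh,
        "kj": kwh * KJ_PER_KWH,
        "phone_charges": num_images * PHONE_CHARGE_FRACTION_PER_IMAGE,
    }


def implied_phone_battery_wh():
    """Battery capacity (in Wh) that the 24% comparison implicitly assumes."""
    return KWH_PER_IMAGE * 1000 / PHONE_CHARGE_FRACTION_PER_IMAGE


one = image_energy(1)
print(f"1 image: {one['kwh']:.4f} kWh = {one['kj']:.2f} kJ")
print(f"Implied phone battery: {implied_phone_battery_wh():.1f} Wh")
```

The kWh and kJ figures in Copilot's answer agree with each other (0.0029 × 3,600 = 10.44), and the smartphone comparison works out to a battery of roughly 12 Wh.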

June 27, 2025

GenAI watercolor

When I learned costume design rendering in the 1990s, my professors created designs predominantly with pencil and watercolor, so that is the method I was taught. I knew people at other schools learned methods using gouache or art markers or alcohol inks—I feel like you learned whatever art-making medium your professor preferred. I know we also did a project using collage to create a set of renderings from a pile of magazines, but the emphasis was on mastering watercolor as a means of conveying your creative ideas.

I admit, I never felt confident in that medium, although I did get passable enough. I spent much of my years of study struggling with mastering the watercolor medium and figure drawing, with forays into construction techniques like pattern drafting, fabric dyeing, millinery, etc., and almost no experience in conducting fittings with actors, tracking show budgets, generating documents like pieces lists or costume plots, or communicating ideas to directors and other members of a creative team.

In the course of exploring possible applications for diffusion models and image generators, I find myself questioning the very nature of teaching costume design and why it’s taught the way it is. Of course, what do I know, it may no longer be taught the way it was 30 years ago. And it seems there will be a seismic shift in how it will be taught moving forward.

All that is a lot of nostalgic navel-gazing to preface why I decided to see how Copilot might generate watercolor sketches. For the exercise, I decided to go with something simple: a young Caucasian man in a red T-shirt and blue jeans. Here are the first couple of attempts:

Great, but there’s a person wearing these clothes. I then asked it to generate the whole figure, and specified brown hair and white tennis shoes.

Honestly, pretty good. The model seems pretty committed to those rolled jeans hems, but whatever.

Every time I use the tool, I learn something more about how to talk to it to get a desired result. In related news, there’s been some movement on the litigation front regarding the data sets that generative models have been trained on, and it feels like that realm is going to be key in reconciling ethical obstacles, if that’s even possible.

June 26, 2025

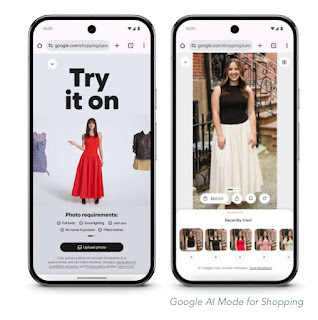

Fashion AI Report 2025

As machine learning reshapes the fashion industry, The Interline’s Fashion AI Report 2025 offers a look at how these changes are unfolding throughout the supply chain and what they might mean for those of us in the parallel field of entertainment costume design and production. While the report focuses on the fashion and beauty industries, costuming coexists in the margins of both; its insights are relevant.

You can access the entire 140-page report at this link if you’d like to read any or all of it — I’ve read the whole thing and I’m sharing this summary in case you are interested but don’t have the time or mental real estate to check it out right now.

The report marks a shift from speculative AI to practical tools. When the 2024 report came out, “AI” was still a buzzword. In 2025, it’s a force to consider. For costume designers, this might mean:

Faster design development: AI can generate visual concepts based on scripts, moods, or historical references, although the kleptomaniacal ethics of generative AI models’ training datasets may be insurmountable for theatre artists, never mind the issue of script rights.

Enhanced research & procurement: Tools now assist with fabric sourcing, garment shopping, and even predicting wearability under stage conditions, particularly for long-running & touring shows (this feels like the more likely area of applicability in our field).

Google/Shopify partnership’s AI shopping assistant


The report is structured around four sections:

Introductions & Context: why this report exists & who compiled it.

Essays & Interviews: written by journalists & scholars.

Technology Vendor Profiles: these highlight companies providing AI tools to global fashion brands. They paid for the compilation of this report so the profiles should be viewed as advertising content. That said, they include interviews with executives with interesting perspectives.

Market Analysis: largely irrelevant given the scale most theatrical productions operate at, barring perhaps Cirque du Soleil/Disney.

Despite the focus on the rise of AI, the report emphasizes that people remain at the heart of fashion, and by extension, costume. AI can assist but it can’t replace the intuition, empathy, and narrative understanding that costume designers bring to the stage.

The Interline hints at deeper automation in the years ahead. For theatres, this could mean:

AI-assisted costume stock archiving and reuse planning

Digital wardrobe previews for directors and actors

Sustainability insights for eco-conscious companies, departments, or designers

If you want to read some of the report, but not the whole 140 pages, I have a couple of sections to recommend, which you can find via the table of contents on pp. 9-10:

The essay on “Upstream AI” has some fascinating strategies for sustainability, including AI-driven genetic engineering of the cotton plant

The essay “Trust Me, I’m Not Real” is a dystopian look into AI Influencers and “post-human authenticity”

The interview with Isaac Korn at Perry Ellis, “AI in Practice,” has some good comments about their AI Governance Policy [1] and how it helps with ethics and security

As I was writing and compiling this post, I reached out to former students of mine working in the fashion industry and corporate costume production as patternmakers and technical designers (and an imagineer) to inquire whether they knew about AI capabilities their companies had incorporated. Most had no idea and said that if so, it was in areas outside of their field of responsibility.

I got the idea from the report that machine learning adoptions in the field were not widespread, and that the report was perhaps created to engender broader uptake.

[1] I recommend all theaters consider writing one of these policies, particularly those embedded in academic departments.

Tl;dr — It sounds like there are some applications that will come into play for big companies like Cirque/Disney, but for much of the costume industry, once the Midjourney/ChatGPT hype dies down, we might find value in machine learning tools primarily aimed at consumers like AI shoppers.

June 24, 2025

Book review: Blood in the Machine

At first, this may seem like a non sequitur book review for this blog, & yet as a costume-maker, I found Brian Merchant's Blood in the Machine a fascinating read, weaving together threads of textile manufacturing history, technology, & rebellion. This book is not just a chronicle of the Luddite movement or a critique of the gig economy; it's a tapestry of human resilience against the relentless march of automation.

Merchant's chronicle begins in the early 19th century, when British textile workers, facing the threat of mechanization, rose up in defiance to smash machines with hammers and axes. These “Luddites,” named after the mythic Ned Ludd, weren't merely vandalizing machines out of spite or proto-punk contrarianism. They were fighting for their livelihoods.

In a harrowing strand of the narrative, Merchant delves into the traumatic life of Charles Ball, an enslaved man whose story intertwines with the broader story of resistance against dehumanizing systems. Ball's journey, marked by extreme hardship, tragedy, and resilience, provides an avenue to discuss the scourge of the cotton trade & serves as a stark reminder of the human cost of progress.

Born enslaved, he freed himself, served in the military in the War of 1812, gained skilled employment in textile manufacturing, then was betrayed and re-enslaved. Because of his expertise, he wrote in a memoir about the decline he noticed in fabric quality of the clothing of enslaved people since his pre-industrial childhood when most wore homespun.

His narrative thread underscores the universal quest for dignity and justice, resonating deeply with contemporary battles against exploitation and systemic oppression. Through Ball's story, Merchant not only honors the past but also illuminates an enduring spirit of striving and resistance.

Those familiar with the literary giants of the past will be intrigued by cameos from Lord Byron, Mary Shelley, Charlotte Brontë, and other literary luminaries.

Merchant connects the plight of modern gig workers and the historical struggle of the Luddites. Today's tech titans, with their sprawling empires and algorithm-driven platforms, often mirror the oppressive forces faced by 19th-century textile workers. Gig economy laborers, much like the Luddites, find themselves battling against a system that prioritizes efficiency and profit over human dignity.

Merchant illustrates how these workers, akin to the Luddites smashing mechanized looms, resist the relentless march of automation and exploitation. This comparison highlights the cyclical nature of labor struggles and the enduring fight for fair treatment in the face of technological advancement. Through this lens, Merchant's narrative becomes a call to recognize and address the human stories behind the digital age's shiny facade.

In Blood in the Machine, Merchant doesn't just recount events; he knits together a narrative that is both informative and engaging. His respect for the Luddites' cause is evident, making this book a compelling read for anyone who has ever felt the ill-treatment of abusive bosses or the sting of technological displacement.

A couple caveats: I listened to the audiobook, which was fine, but I think I might have preferred to read a text copy. Also, be advised—CW for several episodes of gruesome violence and death.

June 23, 2025

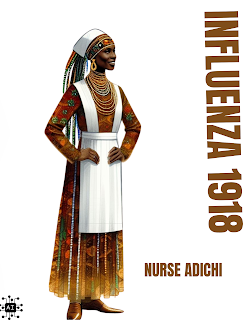

Digital costume designs for Influenza 1918

I realized that I had been letting the AI hype gaslight me into thinking that I could expect the technology to completely “do my job,” instead of creating raw material or a draft that I could then manipulate using other tools.

Be advised that I am not super proficient with the Canva image-editing app, so I’m sure a person more proficient in digital rendering tools could do more with this idea, but I was able to eliminate busy backgrounds and that one weird uniform button that was pissing me off.

I envisioned these designs were for an opera about the influenza pandemic of 1918 and its impact on Africa. Which, I realized I knew nothing about so I read about it on this website. An interesting and tragic research side-quest, as it were.

Of course, if this were an actual opera, an actual production, I would hope that the costume designer would be a talented designer of color and not me. So I’m treating this kind of like a class assignment with the instruction that I’m trying to create some initial renderings I could take into a first creative team meeting with the director & the other designers.

All this is entirely backwards from how something like this would happen in actuality, and presumably the composer and librettist of the opera would determine at least the location (because Africa is a big continent & not a monolith) and I would be able to do research and then draw these costume designs or have that information to impact the prompt language for how the renderings were generated.

But I digress. I was able to create a rendering template in Canva and import the Copilot artwork, clean things up and produce these just like I would do if I had sketched these. When I was working as a costume designer, it was at a point in the progress of technology such that I often drew my renderings by hand on paper, scanned them, and imported them into a template just like this. In creating that template I put thought into font choice and layout of the rendering for concise communication to the costume crew, my fellow designers on the creative team, and the director.

I also added a little AI graphic in the corner of each to communicate clearly that the generation of these designs was done with the help of a diffusion model.

Here are my results:

La Bricoleuse aggregate and more...

Right now, this space streams the RSS feed from La Bricoleuse, the blog of technical writing on costume craft artisanship that I've written since 2006, so that may be all you see at any given time. I may crosspost from a couple different blogs on here.
