Scott Meyers's Blog

October 29, 2025

Bill Gaver's 1989 Demo of The SonicFinder™

While digitizing my analog videotapes, I came across a short 1989 demo by Bill Gaver of his SonicFinder™, an interesting piece of early work on audio in user interfaces. It's easy to find online copies of research papers describing The SonicFinder™, but I was surprised to find that the Internet doesn't seem to offer anything like the video demo I have. That's a shame, because hearing audio and seeing it in action is a lot more illuminating than reading about it.

With Bill's kind permission, here is a digitized copy of the videotape he gave me in 1989 (presumably when he came to give a talk at Brown University, where I was pursuing graduate work in Computer Science). It's short--not quite three minutes long. Enjoy!

August 20, 2025

Escaping from iCloud Photos

After more than a decade storing photos and videos in iCloud, I decided to download my stuff to my PC and delete the copies at Apple. I figured I'd log into iCloud using my web browser, click the Download link, wait a while for the eighty-plus gigabytes to make their way to my computer, delete the cloud copies, and be done with it.

It didn't work out that way.

What I expected to take a few minutes ended up taking more than a week of screen-staring, head-scratching, teeth-gnashing research, installation of a couple of new apps, and a bunch of manual work that I didn't know how to avoid until most of it was already done.

A good place to start the story is the term "my stuff." I had photos and videos at iCloud, which I'd organized into a few albums, and that's what I wanted to download: photos, videos, and albums. For now, we'll set aside albums and deal with photos and videos.

iCloud Photos is unambiguous about how many it stores for me:

That's a total of 23,251 items. However, if I install the iCloud for Windows app and sync my photos, this is what File Explorer shows me:

That's 958 more items than iCloud says exist. Not a good sign. Things got worse when I did the same thing with Cloudly, a program I tried in an attempt to deal with the albums issue I'll address later. Here's what File Explorer has to say about the result of syncing Cloudly with iCloud Photos:

That's 1,717 more items than iCloud reports and 759 more than iCloud for Windows downloaded. How can there be so much trouble simply agreeing on how many photos and videos are to be downloaded?!

The answer involves a detour into Apple's options for downloading.

Apple Download Options

In the web browser interface to iCloud Photos, you can click a simple download icon to get what I'll call the Default download. You can also click on the "..." icon and then on "More Download Options..." to select from three more choices. They are Unmodified Originals, Highest Resolution, and Most Compatible. My simple experiments suggest that the Default and Most Compatible options are the same, so the iCloud web site effectively offers these download choices:

Unmodified Originals. This means exactly what it says. It is probably not the droid you're looking for.

When you take a photo with an Apple device and edit it (e.g., crop it or adjust the colors), Apple saves both the original photo and the result of your edits. The edited photo is what you see in iCloud (and in the Photos app on your device), but the original is still lurking in the background in case you decide you'd like to revert to it. The same applies to videos. Using the Unmodified Originals download mode gives you your pictures and videos just as you took them--without any of the edits you applied to make them look better.

Sometimes edits are applied automatically, and you may not realize they've been performed. A good example is photos taken in Portrait Mode. The only image you see on your device is the one with the background blurred, but behind the scenes an ordinary (unblurred) photo is taken and some transformations (i.e., edits) are applied. If you download the Unmodified Original for a Portrait Mode photo, you'll get the truly original one, the one without any blurring.

The iCloud item count doesn't include the original versions of edited photos. However, when Cloudly downloads edited photos, it downloads both the final image and its first forebear. That's one of the reasons Cloudly downloaded so many more items for me than iCloud said it contained.

Most Compatible (the default). Apple says that using this download mode yields JPEG photos and videos that are MP4/H.264, the idea being that these formats are more widely supported (especially on non-Apple systems) than the HEIC photos and H.265 videos Apple devices typically produce. In contrast to Unmodified Originals mode, the files you download include edits you've applied.

What Apple fails to mention is that Most Compatible downloading incurs a significant reduction in resolution in the photos and videos you end up with. In my testing on originals taken on an iPhone SE 2, photos generally went from 3024 x 4032 to 1536 x 2048 (a pixel loss of 74%) and videos from 1920 x 1080 to 1280 x 720 (56%).

I can imagine why Apple made this the default download option. Users would find it frustrating to download something from iCloud and then not be able to view it. However, I think they should be a lot clearer about what that choice means for the quality of the downloaded content.

Highest Resolution. As its name suggests, this download option yields photos and videos with the full resolution available. The versions you download include the edits you've made to them.
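The pixel-loss figures quoted for Most Compatible downloads (3024 x 4032 down to 1536 x 2048 for photos, 1920 x 1080 down to 1280 x 720 for videos) follow from multiplying out the dimensions. A quick Python check; the function name is just for illustration:

```python
def pixel_loss(original: tuple[int, int], downloaded: tuple[int, int]) -> float:
    """Return the fraction of pixels lost going from `original` to `downloaded`.

    Both arguments are (width, height) pairs.
    """
    original_pixels = original[0] * original[1]
    downloaded_pixels = downloaded[0] * downloaded[1]
    return 1 - downloaded_pixels / original_pixels


# Dimensions observed for iPhone SE 2 originals vs. Most Compatible downloads.
photo_loss = pixel_loss((3024, 4032), (1536, 2048))
video_loss = pixel_loss((1920, 1080), (1280, 720))
print(f"photos: {photo_loss:.0%} of pixels lost")  # photos: 74% of pixels lost
print(f"videos: {video_loss:.0%} of pixels lost")  # videos: 56% of pixels lost
```

Because width and height shrink by the same factor (about 0.51 for the photos, 2/3 for the videos), the pixel count shrinks by the square of that factor. That's why roughly halving each dimension costs nearly three-quarters of the pixels.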

If this were the end of the story, I'd download my stuff at Highest Resolution and be done with it. But the story's not finished.

File Timestamps

Of the 23,000+ items I have in iCloud Photos, several thousand were sent to me as WhatsApp messages. WhatsApp strips out the internal metadata for, among other things, when a photo or video was taken, so the file itself can't tell me when the item was created. I can approximate it, however, by using the date and time when I got the message. That's enshrined in the creation timestamp of the file that held the photo or video on my device and was subsequently copied into iCloud. That means that when I download something from iCloud onto my PC, I want the creation date and time of the file on my PC to match the creation date and time of the file in iCloud Photos. Otherwise I'd have to review all my old WhatsApp messages to determine that, say, this photo was sent to me on March 13, 2017:

None of the download options above--the ones offered by the web browser interface to iCloud Photos--preserve file creation timestamps. If I download that photo using a web browser today, the file creation timestamp will be for today. Nothing about the picture or the file will tell me that it's from 2017.

For me, that's a deal-killer. Downloading using a web browser is out. (It was already pretty close to out, anyway, because iCloud limits browser-based downloads to 1000 items at a time. Downloading my 23,251 items would have required that I select and download batches of items 24 times. That's doable, but ridiculous.)

Fortunately, Apple has an alternative way to download photos and videos from iCloud to a PC: the iCloud for Windows app. Its design approach is quite different from that in the browser. There's no choice in which type of download you want (nor is the type it uses documented, from what I can tell), but my testing suggests that it's the same as Highest Resolution. That's good for me, because it's what I want, but even better is that files downloaded using iCloud for Windows retain the creation timestamps they have in iCloud. That means the photos and videos sent to me in old WhatsApp messages are in files with creation dates and times corresponding to when I received them. That, in turn, will allow me to use exiftool to copy those file-based timestamps into the files' metadata, thus restoring (more or less) the "date taken" information that was originally there and that WhatsApp got rid of.
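For anyone wanting to script the exiftool step, here's a minimal Python sketch. It assumes ExifTool is installed and on the PATH, and that you've already identified which files are WhatsApp items lacking a capture date. The choice of EXIF:DateTimeOriginal for photos and QuickTime:CreateDate for videos is an assumption for illustration, as are the function names and the file-type lists. FileCreateDate is ExifTool's name for the file system's creation timestamp, and `-DST<SRC` is its syntax for copying one tag's value into another.

```python
import subprocess
from pathlib import Path

# Assumed file types; extend as needed.
PHOTO_SUFFIXES = {".jpg", ".jpeg", ".heic"}
VIDEO_SUFFIXES = {".mov", ".mp4", ".m4v"}


def exiftool_copy_args(path: Path) -> list[str]:
    """Build an ExifTool command that copies the file-system creation time
    (ExifTool's FileCreateDate tag) into an embedded "date taken" tag."""
    suffix = path.suffix.lower()
    if suffix in VIDEO_SUFFIXES:
        target = "QuickTime:CreateDate"
    elif suffix in PHOTO_SUFFIXES:
        target = "EXIF:DateTimeOriginal"
    else:
        raise ValueError(f"unsupported file type: {path.name}")
    # "-DST<SRC" tells ExifTool to copy SRC's value into DST.
    return ["exiftool", f"-{target}<FileCreateDate", str(path)]


def restore_dates(paths: list[Path]) -> None:
    """Run ExifTool on each file in `paths`.

    Only pass files known to lack a real capture date (e.g., WhatsApp items);
    on camera originals this would overwrite the true date with the file date.
    """
    for path in paths:
        # A list of arguments (no shell) means "<" needs no quoting.
        subprocess.run(exiftool_copy_args(path), check=True)
```

As far as I know, ExifTool exposes FileCreateDate only on Windows and macOS, so this is meant to run on the PC holding the iCloud for Windows downloads. ExifTool keeps the untouched file as a backup with `_original` appended to its name unless you pass `-overwrite_original`. QuickTime dates are a special case (the QuickTime spec calls for UTC, and ExifTool's `-api QuickTimeUTC` option governs how it treats them), so check the ExifTool documentation before batch-processing videos.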

iCloud for Windows thus solves two-thirds of the problem of getting my stuff from iCloud Photos: the parts for photos and videos. For the final part--albums--iCloud for Windows is sadly and infuriatingly useless.

iCloud has two kinds of albums, shared and unshared. There are important differences between them (which Apple doesn't seem to directly describe anywhere), but for my purposes, both are just collections of photos and videos that are in my photo library. When downloading my stuff, I want to download these collections, too. At the very least, I want to download representations of the albums I have and the photos and videos that are in each of them. Almost as fundamental is the ability to have the downloaded representations retain any custom ordering I've done on each album's contents. That is, if I've arranged the photos and videos in an album to tell a story in a particular way, I want my arrangement to be preserved.

For Windows users, Apple offers no support for any of this. You can do things manually, of course (e.g., create folders in your PC's file system for each of your albums, use the iCloud web interface to download album contents (in 1000-item batches) into the folders, then use software like FastStone Image Viewer to arrange and rename the items so they stay in the order you want them in, etc.), but you're completely on your own. It's dismissive--even insulting--on Apple's part.
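If you do take the manual route, the renaming step can at least be scripted once you know each album's order. Here's a minimal Python sketch, assuming you've already recorded each album's file names in album order (getting that order out of iCloud is the part Apple doesn't help with, and it isn't shown here). The function names and the "001 - " prefix style are hypothetical, not something Apple or FastStone produces.

```python
from pathlib import Path


def sequential_names(ordered_files: list[str]) -> list[tuple[str, str]]:
    """Pair each file name with a new name carrying a zero-padded position
    prefix, so that a plain alphabetical listing reproduces the album order."""
    # Zero-padding keeps string order equal to numeric order:
    # "002" < "010" alphabetically, whereas "10" < "2".
    width = max(3, len(str(len(ordered_files))))
    return [
        (name, f"{position:0{width}d} - {name}")
        for position, name in enumerate(ordered_files, start=1)
    ]


def rename_in_album_order(folder: Path, ordered_files: list[str]) -> None:
    """Rename the files in `folder` so they sort in `ordered_files` order."""
    for old_name, new_name in sequential_names(ordered_files):
        (folder / old_name).rename(folder / new_name)
```

Padding matters mainly for programs that sort names strictly character by character; File Explorer's default "natural" sort would cope without it, but not every viewer does.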

If you have a Mac, things are better. There you can use the Photos app to export album contents to the file system. Using the Sequential File Naming option gives the exported items names such that when you list them in alphabetical order, you get them in the order shown in the album. File timestamps are correctly preserved.

The only thing missing is the ability to export all albums at once. You have to manually export them one by one. It's a lot better than the nothing offered Windows users, but it's not perfect. The more albums you have, the further from perfect it is.

I have a Mac, but I preferentially use Windows, so I scoured the web looking for a way to download albums from iCloud. Pickings are slim, but I eventually came upon Cloudly, a program promising to "[preserve the] album structure of your iCloud Photo Library." It's a largely hollow claim. Cloudly downloads only unshared albums (not shared ones), and it doesn't preserve item orderings within the albums. As for the downloads themselves, they're, um, quirky.

For edited photos, Cloudly downloads both the unmodified originals and the highest resolution versions. For videos, it downloads unmodified originals only; there is no way to download the revised versions of videos you've edited. On the plus side, file timestamps are properly copied from iCloud.

Unshared albums are downloaded as separate copies of the items in your iCloud library following the rules above, so if you have an edited photo in five unshared albums, you'll end up with six downloaded copies of the unmodified original--one for each of the albums plus one for the library as a whole--and six copies of its highest resolution version. (Similar file replication results from the Mac-album-export approach: each exported album contains its own copy of the Highest Resolution version of each item in the album.)

Cloudly's only download option is for an entire iCloud library. For me, Cloudly said when it finished that about 500 items had failed to download. It gave me a "retry" option, which caused it to try to download the missing items again. It got about half of them, thus leaving me about 250 items short of my full library. I clicked "retry" again, and the number of un-downloaded items was reduced again. After about a dozen iterations of this, it finally told me that everything had been downloaded.

My experience with Cloudly made me wish I'd been less reluctant to go the Mac route...

"Downloading My Stuff" Revisited

I can now be more precise about what I mean when I say I want to download my stuff:

I want a copy of all my photos and all my videos in the best available quality. For edited items, I want the most recent version. I want the file creation timestamps to match those in iCloud.

I want all my albums (shared and unshared) to have some kind of representation (presumably folders) in what I download. I want the order of the items in my albums to be preserved. I want the downloads to proceed without manual intervention.

What I don't want is to have to break my downloads into 1000-item batches or sacrifice file-creation timestamps (as the iCloud web browser interface demands), to have my albums ignored (as iCloud for Windows does), to have to export albums manually (as the Mac Photos app requires), or to have the item orderings in my albums disregarded (as Cloudly does). Pardon me for channeling the feelings of every user of every piece of software everywhere, but really, people, I don't think I'm asking for anything unreasonable!

An iCloud Photos Escape Plan

If you find yourself wanting to get your stuff out of iCloud and on to Windows, this is my suggested approach:

Install iCloud for Windows and let it sync. This gives you high-quality copies of your photos and videos with the correct file timestamps.

If you have a Mac, configure its Photos app's iCloud settings to "Download Originals to this Mac", then export the albums one by one (sigh) into folders named after the albums. Specify sequential file naming for the exporting. Copy the resulting folders to Windows.

I'm a little abashed to admit that this is pretty close to what ChatGPT suggested when I asked it how I could get my stuff. In my defense, I also asked Perplexity, Grok, Claude, Gemini, and Copilot, and none recommended using a Mac. Copilot even implied that ChatGPT's approach wouldn't work: "The native Photos app on macOS does not preserve album structure when exporting..." At the time I asked these chatbots for advice, I was hoping to stick with a Windows-based solution. I didn't try using a Mac for albums until I'd gone down a bunch of Windows-centric dead ends.

If you don't have a Mac, things are more complicated, but ChatGPT had some suggestions. In an act of contrition for failing to take its advice as seriously as I should have, here's a link to our discussion. My contrition is minimal, however, because, well, read on.

Aside: Chatbots Again Fail as Research Assistants

All the information above is based on experiments I ran, but when deciding which experiments to perform, I sometimes took the opportunity to test LLM chatbots by asking them questions I needed to answer. Here's one of the questions I posed (in each case to the free version of whichever chatbot I was using):

When downloading photos and videos to a PC from iCloud using a web browser, there are three download options: Unmodified Originals, Highest Resolution, and Most compatible. When downloading using the iCloud for Windows app, there are no download options, the files are just downloaded. Which web browser download option does iCloud for Windows use?

The results were terrible. Grok, Perplexity, Copilot, and Gemini all confidently stated that iCloud for Windows downloads unmodified originals. ChatGPT was equally sure that the answer was Most Compatible, and Copilot agreed (though with less certainty). All of them were wrong. iCloud for Windows uses Highest Resolution. At least it does for me. All that chatbot opposition spurred me to check many, many times.

As I've said before (e.g., here and here), LLM chatbots--or at least the free versions thereof--have a long way to go before they'll be reliable research aids. I continue to look forward to their getting there.

July 31, 2025

Video File Metadata: First Thoughts

A few years ago, I published a series on metadata in image files with a focus on old slides and photos that had been scanned. I've since turned my attention to metadata for video files, because I had a number of old home movies digitized.

I assumed that most of what I'd learned about image file metadata would apply. I also assumed that because widespread consumer use of digital video came after mass adoption of digital photography, the inconsistent, competing, overlapping metadata standards for images would have been avoided and that a single, widely-supported standard for video metadata would exist. I was wrong on both counts. I was reminded again of the remark at Stack Exchange Photography that "Image and video metadata is a complete hot mess."

The video mess seems even hotter than for images, because

There's no accepted standard for video metadata. That's worse than the three competing standards that bedevil metadata for image files.
It's harder to find video players that show metadata.
Google Photos (GP) exhibits less predictable behavior for video file metadata.

Nevertheless, the case for metadata in videos is as strong as it is for images, so into the mire we wade!

Data to Store

I store the following information in image file metadata, and I want to store the same things in video files:

A description of what's in the video.
When the video was taken.
When the video was digitized.
Who did the digitizing.
A copyright notice.

I also want to store something I can't believe I overlooked for image files:

GPS coordinates for where the video was filmed.

My excuse for this oversight with images is that when I started on the metadata problem, I was mostly dealing with pictures where I had only a vague idea where they were taken.

Metadata Fields to Use

In a perfect world, there'd be a widely-supported standard for video file metadata, and that standard would prescribe which fields (i.e., tags) should be used for the information I want to store. Heck, in a really perfect world, that standard would apply not just to videos, but to images as well.

Our world falls short of perfection. Fields for image file metadata are largely ignored in the video world, and while there is an IPTC standard for video metadata, it has nothing to say about digitization, e.g., when it was done and by whom. Furthermore, my experiments indicate that support for the IPTC standard by Google Photos (GP), Windows File Explorer, and other programs I care about is poor.

I've written elsewhere about how GP's search capabilities make it indispensable for me, and that indispensability gives it a lot of clout in the metadata decisions I make. For images, I didn't find that to be constraining, but for videos, GP seems to like to throw its weight around. One of my goals is to be able to round-trip a video to GP (i.e., upload a video, then download it) without a loss of metadata. GP seems to enjoy interfering with that process. Often, fields present in uploaded videos are missing when those videos are downloaded. Sometimes GP keeps the data in downloads, but puts it in different fields. It's irritating.

I aspire to use metadata fields with these features:

They are part of the IPTC standard.
They are visible in commonly-used programs (e.g., video players).
They have these GP-related characteristics:
    Are displayed in the web browser UI.
    Are consulted by GP during searches. For example, the results of searching for videos taken in 1998 in California should include videos with such information in the metadata for when and where they were taken as well as in the videos' "description" field.
    Survive round-tripping.

I didn't find any fields that check all these boxes, so compromise was the name of the game.

Identifying and testing candidate fields was a big job. I initially got help from a capable human, Amelia Huchley, but her time was limited, and when it ran out, I turned to marginally-capable AI chatbots. They've read pretty much everything on the internet, I figured, so they should be able to help choose metadata fields suited to my purposes.

I've blogged about how AI chatbots--or at least the free versions of them--are lousy search assistants, but desperate times, any port in a storm, etc. I turned to ChatGPT, Perplexity, Claude, Gemini, and Copilot and sought to use them as a sort of advisory committee to help me identify prospective fields and evaluate how well each was likely to achieve my goals. As advisory committees go, this one left a lot to be desired. The chatbots helped me put together a list of candidate fields, but their advice on how well the fields were likely to do on things like being displayed in the GP interface or surviving GP round-tripping was unreliable to the point of uselessness. This reinforces my impression that, in their current form, the free AI chatbots are worth about what you pay for them when it comes to internet research.

In the end, these are the fields I decided to use:

When taken: QuickTime:CreateDate. Choosing this field should have been easy. From a metadata perspective, you can't get much more fundamental than when a video was made. I considered six fields for this information. What I found perfectly exemplifies why video file metadata is a mess.

Four of the fields come from QuickTime--meaning that QuickTime has four metadata fields for the same information. Two have ridiculously similar names: QuickTime:CreateDate and QuickTime:CreationDate. Two have names differing only in namespace: Keys:CreationDate and QuickTime:CreationDate. (ExifTool unhelpfully treats these fields as the same, sigh.) Two of the fields are changed into a third during a GP round trip: uploading files containing either of the two CreationDates yields downloaded files where those fields have become QuickTime:ContentCreateDate. You get the idea.

I ultimately settled on QuickTime:CreateDate, because it has good GP support (showing in the UI, being found in searches, and remaining intact when round-tripped) and, in contrast to the other candidates, is also visible via Windows' File Explorer and MediaInfo.

Where taken: QuickTime:GPSCoordinates. I identified six candidate fields for this information, but I tested only four, quitting when I found that this one offered full GP support, unlike the others I tried.

"Full" GP support is quirky. Copying GPS coordinates from Google Maps and pasting them into an ExifTool command line to set QuickTime:GPSCoordinates works, but if the coordinate values have six or more decimal places, GP will refuse to show where the video was shot. When coordinates have at most five decimal places, no such problem arises. Enlivening the situation is that (at least under Windows) right-clicking a location in Google Maps brings up a context menu showing the location's coordinates to five decimal places, and clicking these coordinates copies them to the clipboard, but pasting them yields values with up to 14 decimal places! So when pasting coordinates copied from Google Maps into an ExifTool command, the values have to be edited back to at most five decimal places. (This restriction doesn't seem to apply to image files. In that case, you can use ExifTool to write coordinates with up to 14 decimal places, yet GP will still show where the photo was taken.)

Description: QuickTime:Title. Amelia and I tested a dozen fields in an attempt to find one that GP would display. None did. Many also failed the round trip test. When I found that QuickTime:Title was found in GP searches, was retained in GP round-trips, and was displayed in VLC Media Player, Windows Media Player, Windows Media Player Legacy, and Windows Explorer, I figured that no field would do better, and I stopped looking.

When digitized: XMP:DateTimeDigitized. This was the only field I found that is specifically designed for when digitization took place, can be set by ExifTool, and is retained across round trips to GP.

Who did the digitizing: XMP-xmpDM:LogComment. I was not able to find a field designed to store this information (Claude suggested the apparently-non-existent XMP:DigitizedBy), but the general-purpose XMP-xmpDM:LogComment seems like a reasonable choice, and it survives a round trip to GP.

Copyright: QuickTime:Copyright. This was the only easy metadata field to choose. It's specifically designed to hold copyright information, it's retained across round-trips to GP, it's part of the IPTC standard, and it's visible in at least one video player (QuickTime Player).

These fields are my preliminary choices. To date, my experience with them is mostly limited to simple tests. My thinking may change as I get more experience with real video files, but given the dearth of guidance about metadata in video files, I thought it would be useful to document where I am now. That's why this post has "First Thoughts" in its title.

Adding Transcripts

An important part of a video file is its audio track. For many videos, there's a lot of speech in such tracks, and it's easy to imagine uses for transcripts of what's said, e.g., subtitle generation and full-text search. The transcript for a video is a form of metadata, so, at least in concept, I'd like to include it in the video file. Given the availability of speech-to-text software, it's not unreasonable to hope for initial drafts of such transcripts to be generated automatically. (YouTube, Vimeo, and Dailymotion all offer automatic generation of captions or subtitles.) I haven't gone beyond the thinking-about-it stage for generating and embedding transcripts, but it's clear that this could be a useful avenue to explore.

On the other hand, it's possible that this is an area where advances in technology could render the issue moot. If speech can be converted to text quickly and accurately enough for real-time display and search, there'd be no need to create and store transcripts inside video files. The metadata they represent could simply be generated when needed, thus sparing people like me a lot of work.
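To make the field choices described in this post concrete, here's a hedged Python sketch that assembles an ExifTool command writing all six of them, including trimming coordinates pasted from Google Maps back to five decimal places. The tag names are the ones chosen in this post and `-TAG=VALUE` is ExifTool's ordinary syntax for writing a tag; the function names and sample values are hypothetical. Whether every tag can be written to a given container (MP4 vs. MOV, for example) depends on ExifTool, so treat this as a starting point rather than a tested recipe.

```python
def round_coords(pasted: str, places: int = 5) -> str:
    """Convert "lat, lon" text (e.g., pasted from Google Maps) to a string
    with exactly `places` decimal places per value."""
    lat_text, lon_text = pasted.split(",")
    lat, lon = float(lat_text), float(lon_text)  # float() ignores surrounding spaces
    return f"{lat:.{places}f}, {lon:.{places}f}"


def video_metadata_args(path: str, *, taken: str, coords: str, description: str,
                        digitized: str, digitizer: str,
                        copyright_notice: str) -> list[str]:
    """Build an ExifTool command for the fields chosen in this post.

    Dates are given in ExifTool's "YYYY:MM:DD HH:MM:SS" form.
    """
    return [
        "exiftool",
        f"-QuickTime:CreateDate={taken}",                     # when taken
        f"-QuickTime:GPSCoordinates={round_coords(coords)}",  # where taken
        f"-QuickTime:Title={description}",                    # description
        f"-XMP:DateTimeDigitized={digitized}",                # when digitized
        f"-XMP-xmpDM:LogComment={digitizer}",                 # who digitized
        f"-QuickTime:Copyright={copyright_notice}",           # copyright
        path,
    ]
```

The resulting list can be handed to `subprocess.run(args, check=True)`; passing a list rather than a shell string sidesteps quoting the spaces in titles and coordinates.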

July 5, 2025

Convertible EV Spot Check

It's been more than two years since I last looked into the availability of all-electric convertibles, so I thought I'd do a quick check to see where things stand now. Here's a rundown (in alphabetical order) on the models I mentioned in that post, plus a couple of others I've added since then:

Fisker Ronin: Fisker went bankrupt.
Genesis X Convertible: Still a concept car with no production announced.
Maserati GranCabrio Folgore: Orderable, but it's not clear that deliveries have occurred. Pricing starts at $206,700 in the United States.
MG Cyberster: Available in the UK and Europe, but not in the United States. Pricing starts at £54,995 in the UK.
Mini Cooper SE Convertible: Canceled.
Polestar 6: Not yet available. Estimated for model year 2028.
Porsche 718: Postponed and not yet available. Estimated for model year 2027.
Smart Fortwo Cabrio: Discontinued.
Wiesmann Project Thunderball: Postponed and not yet available.

All in all, a pretty gloomy picture for those of us who yearn for an EV analogue to the Miata. Sigh.

May 27, 2025

The Dismal Failure of LLMs as EV Search Aids

This time I experimented with seven LLM-based chatbots as search assistants. I gave each the following prompt:

List all the fully electric compact SUVs for sale in the United States that have all-wheel drive, an openable moonroof or sunroof, an all-around (i.e., 360-degree) camera, an EPA range of at least 250 miles, and are no more than 180 inches in length.

My assistants were the unpaid versions of these systems (listed in the order in which I happened to test them):

ChatGPT
Perplexity
Claude
Gemini
Copilot
You.com
Mistral

The results were eye-opening. None of the systems listed the only vehicle that fulfills the criteria (the Volvo EX40), and all but one listed vehicles that violate the requirements. Worse performance is hard to imagine. The false positives waste your time pursuing dead ends, while the false negatives imply that no qualified EVs exist, even though one does.

Complete failure was averted by one system (You.com) mentioning, almost as an afterthought, the Volvo XC40 Recharge. That car was renamed the EX40 last year, but searching for the old name will quickly lead you to the new name, and that will finally put you on the trail of the only car that satisfies my criteria.

The chatbots failed in a variety of ways:

ChatGPT said "here are the models that meet all requirements," then listed five EVs and their specs. For four of the five, the displayed specs were contrary to the requirements, meaning ChatGPT "knew" (to the extent that LLMs "know" things) that these cars shouldn't have been listed. For the fifth car, one of the specs it listed was simply incorrect. There was no mention of the Volvo EX40.

Perplexity's behavior was similar to ChatGPT's: it claimed to list cars fulfilling the criteria, then "knowingly" listed ones that don't. The twist was that two of the three EVs Perplexity listed--the Rivian R2 and the Toyota C-HR EV--don't exist yet. (If they did, the Rivian would exceed the length constraint, and the Toyota would likely fail the openable-roof test.) Perplexity made no mention of the Volvo EX40.

Claude listed only one car, Tesla's Model Y, saying it "clearly meets all your requirements. It offers ... a panoramic glass roof ... and measures approximately 187 inches in length. However, this exceeds your 180-inch length requirement." Its dithering on the car's length was disappointing, and its failure to distinguish a panoramic glass roof from one that opens was worse. There was no mention of the Volvo EX40.

Gemini's response began with "Here's a breakdown of current and upcoming electric compact SUVs and how they stack up against your requirements," which was not what I had asked for. It listed five cars and their specs, noting for each vehicle the specs that violated my criteria. It ultimately concluded, "there may not be any currently available fully electric compact SUVs that precisely meet all conditions." Except there is, of course.

Copilot produced a refreshingly short response featuring a very nicely formatted table that summarized the two EVs it said satisfied my requirements. Neither does, though Copilot showed no specs that reflect that, so it may not have "known" it was wrong. There was no mention of the Volvo EX40.

You.com started with this rather confusing statement: "Based on the search results provided, there is no direct information listing fully electric compact SUVs in the United States that meet [your] criteria." It then launched into an explanation of how to perform my own search (<eyeroll/>). Then came a surprise. It introduced the Volvo XC40 Recharge and showed how it satisfied my requirements, though it seemed unsure of itself: "The Volvo XC40 Recharge appears to meet all your criteria. However, I recommend verifying [everything]." Ultimately, You.com found the rabbit in the hat and pulled it out, but its response was confusing and disjointed, and it referred to the rabbit by an obsolete name.

Mistral followed Copilot's lead in producing a short, clear response built around a well-formatted table of information that was often incorrect or inconsistent with my requirements. There was no mention of the Volvo EX40.

As a group, the systems produced responses rife with claims that were incorrect, inconsistent, and/or incomplete. The last of these is the most disturbing. Six of the seven systems didn't mention the only SUV fulfilling the requirements. The one that did hid it after an explanation of how to do your own search, and even then it referred to that car by an outdated name.

There is a lot of work to be done before LLM chatbots are reliable search assistants.

May 26, 2025

Three Experiences with Video and AI

I recently found myself wondering about a TV episode I saw decades ago. I had only the haziest memory of it, so I threw this at Gemini:

I'm looking for an episode from the original TV show The Outer Limits or The Twilight Zone. The story is about a man with a robotic hand that he has to add fingers to in order to increase its ability to help him figure out what is happening. Do you know this episode?

Gemini did, correctly identifying it as "Demon with a Glass Hand" from the 1960s series, The Outer Limits. Googling for that yielded a link to the episode at The Internet Archive, which I downloaded and added to my Plex server.

Less than 15 minutes elapsed between the time I thought about the episode and the time I had it in my video library. It's not the best television content in the world, but I marvel at how easily I was able to track down and watch a show from 60 years ago based on only a very sketchy memory.

Upscaling the Episode

"Demon with a Glass Hand" isn't terribly compelling, but that doesn't mean it shouldn't look good. Unfortunately, 1964 TV was SD, and these days we're used to much higher resolution than the 496 x 368 I got from the Internet Archive.

Earlier this year, I purchased a copy of VideoProc Converter AI to experiment with upscaling low-resolution 8mm family videos I'd had digitized. The results were impressive on everything except faces, which the upscaling process tended to turn into grotesque caricatures of the people behind them. But hope springs eternal, so I decided to see what VideoProc could do with "Demon with a Glass Hand."

Invoking the program yielded a message excitedly telling me that a new version was, you know, faster and better, and I should upgrade immediately. It was free, so I did, but I didn't expect that V3 would be noticeably better than V2. When was the last time a program upgrade lived up to its PR?

In this case, I think it does. Check it out:

Upscaling is an interesting challenge, because it involves fabricating information (pixels) not present in the original images. Simple interpolation doesn't do a very good job, and VideoProc's V2 AI-based approach fell apart on faces. V3's faces aren't perfect, but I think they're good enough for casual viewing, and that's an impressive accomplishment.

Looking Forward

A few days ago, Andrei Alexandrescu drew my attention to this Reddit post featuring a synthesized video by Ari Kuschnir using Google's Veo. The clip takes advantage of Veo's new ability to generate audio tracks, including dialogue and singing. I find the clip pretty amazing. There are legitimate questions about how Veo was trained and how its output could be used for ill, but I prefer to focus on the technical progress it represents and the creative promise it offers.

Incongruously, I was reminded of the Veo demo after viewing another old TV episode I barely remembered, one Gemini identified from this prompt:

I'm now thinking of a different episode, again from The Twilight Zone or The Outer Limits. It involves a man who goes to a store to custom-order a woman. He chooses eye color, etc. Any idea which episode this is?

Again, Gemini knew what I was looking for ("I Sing the Body Electric" from the original The Twilight Zone), Google found a downloadable link to it, and I had it on my Plex only a few minutes after issuing the query.

The episode is quite terrible (much worse than "Demon with a Glass Hand"), but I liked the hopeful ending. Not the part summarizing grandma's data collection and sharing policy ("Everything you ever said or did, everything you ever laughed or cried about, I'll share with the other machines"), but the optimistic sentiment behind Rod Serling's closing voiceover:

Who's to say at some distant moment, there might be an assembly line producing a gentle product in the form of a grandmother, whose stock in trade is love?

For countries with an aging population requiring increasingly attentive personal care, I'd expect that gentle, loving robots rolling off an assembly line could be a pretty attractive prospect.

July 20, 2024

Anthropic's Claude Aces my German Grammar Checker Tests

Yesterday I published the results of my latest testing of German grammar checking systems. Unlike in my first round of tests, I included LLMs, in particular ChatGPT, Gemini, and Copilot. I had a nagging feeling that I should include Anthropic's Claude, too, but I didn't find out about Claude until near the end of my testing, and I wanted to be finished, so I decided to worry about Claude later. This was a terrible decision. "Later" turned out to be only a few hours after I'd published the article, when I unexpectedly found myself with enough free time to play around with the system. Claude proceeded not only to outperform every other system I'd tested but to ace my set of tests with a perfect score.

My test set is hardly exhaustive, but no other system has managed to find and correct all 21 errors in the set. Kudos to Claude and Anthropic!

Here are the updated results of my testing after the addition of Claude to the list:

Claude: 84 (100%)

ChatGPT: 76 (90%)

LanguageTool: 70 (83%)

Scribbr/QuillBot: 50 (60%)

Gemini: 48 (57%)

Sapling: 41 (49%)

Copilot: 40 (48%)

Rechtschreibprüfung24/Korrekturen: 39 (46%)

Google Docs: 34 (40%)

GermanCorrector: 31 (37%)

Online-Spellcheck: 26 (31%)

Microsoft Word 2010: 16 (19%)

July 19, 2024

German Grammar Checkers Revisited

A couple of months ago, I blogged about how I'd (superficially) tested a number of grammar checking tools for German. A comment from jbridge introduced me to the idea of using ChatGPT as a grammar checker, and in the course of exploring that option, I expanded my set of tests to make it a little less superficial. That led to new insights, so it seems like it's time for a German grammar checking tool follow-up.

If you're not familiar with my original post, I suggest you read it.

As before, I'm testing only free tools. I generally test without signing up for or logging into any accounts, but for ChatGPT, I created a free account and logged in so I'd have access to the more powerful ChatGPT 4o rather than the no-account-required ChatGPT 3.5.

I remarked last time that Scribbr and QuillBot are sister companies using the same underlying technology, but Scribbr found more errors. That is no longer the case. In my most recent testing, they produce identical results, so we can speak of Scribbr/QuillBot as a single system. Unfortunately, this uniformity was achieved by bringing Scribbr down to QuillBot's level rather than moving QuillBot up to Scribbr's. Even so, Scribbr/QuillBot remains the second-best grammar-checking tool I tested (after LanguageTool). It continues to find errors that LanguageTool misses, so my default policy remains to use both.

My prior test set consisted of six individual sentences. This time around, I added a letter I had written. It's about 870 words long (a little under two pages), and, as I found out to my chagrin, contains a variety of grammatical errors. Checking such a letter is more representative of how grammar checkers are typically employed. Just as we normally spell-check documents instead of single sentences, grammar checkers are usually applied to paragraphs or more.

That had an immediate effect on my view of DeepL Write. I noted in my original review that it's really a text-rewrite tool rather than a grammar checker. On single sentences, you can use it to find grammatical errors, but when I gave it a block of text, it typically got rid of my mistakes by rephrasing things to the point where the word choices I'd made had been eliminated. I no longer consider it reasonable to view DeepL Write as a grammar-checking tool. It's still useful, and I still use it; I just don't use it to look for mistakes in my grammar.

Adding the letter to my set of tests also led me to remove TextGears and Duden Mentor from consideration, because they both have a 500-character input limit. My letter runs more than ten times that, about 5300 characters. Eliminating these systems is little loss, because, as I noted in my original post, GermanCorrector produces the same results as TextGears, but it doesn't have the input limit. As for Duden Mentor, it flags errors, but it doesn't offer ways to fix them. I find this irritating. Using it is like working with someone who, when you ask if they know what time it is, says "Yes."

These changes don't really matter, because when I submitted my augmented test set (i.e., sentences from last time plus the new letter) to the systems from my original post, LanguageTool and Scribbr/QuillBot so far outperformed everybody else that I can't think of a reason not to use them. I'll provide numbers later, but first we need to talk about LLMs.

In his comment on my original blog post, jbridge pointed out that ChatGPT 4o found and fixed all the errors in my test sentences. That was better than any of the systems I'd tested. Microsoft's LLM, Copilot, produced equally unblemished results. Google's Gemini, however, found and fixed mistakes in only four of the six sentences.

I asked all the systems (both conventional and LLM) to take a look at my letter. ChatGPT found and fixed 13 of 15 errors, the best performance of the group. LanguageTool also found 13 of the 15 mistakes, but ChatGPT fixed all 13, while LanguageTool proposed correct fixes for only 11.

Given ChatGPT's stellar performance, I was excited to see what Copilot and Gemini could do. Copilot kept up with ChatGPT until it quit--which was when it hit its 2000-character output limit. This was less than halfway through the letter, so Copilot found and fixed only four of the 15 errors.

Gemini's output was truncated at about 4600 characters, which is better than Copilot, but still less than the full length of the text. Gemini was able to find and fix eight of the 15 errors. This is notably fewer than ChatGPT, and not just because Gemini quit too early. In the text that Gemini processed, it missed three mistakes that ChatGPT caught.

I had expected the buzz surrounding ChatGPT to be mostly hype and the performance of ChatGPT, Copilot, and Gemini to be more or less equivalent. If their performance on my letter is any indication, ChatGPT is currently much better than the offerings from Microsoft and Google when it comes to checking German texts for grammatical errors. It also outperforms LanguageTool, the best-performing non-LLM system, as well as the combination of LanguageTool and Scribbr/QuillBot. It's really quite impressive.

However, just because it's better doesn't mean it's preferable. Read on.

My interest in grammar checkers is twofold. Sure, I want to eliminate errors in my writing (e.g., email and text messages), thus sparing the people who receive it some of the kinks in my non-native German, but I also want to learn to make fewer mistakes. Knowing what I'm doing wrong and how to fix it will help me get there. At least I hope it will.

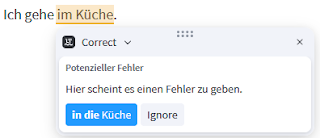

Traditional (non-LLM) grammar checkers are good at highlighting what's wrong and their suggestion(s) on how to fix it. This is what LanguageTool looks like on the second of my single-sentence tests. The problematic text is highlighted, and when you click on it, a box pops up with a suggested fix:

The other grammar checkers work in essentially the same way.

LLM systems are different. They can do all kinds of things, so if you want them to check a text for German grammar errors, you have to tell them that. This is the prompt I used before giving them the letter to check:

In the following text, correct the grammatical errors and highlight the changes you make.

For the most part, they did as they were told, but only for the most part. Sometimes they made changes without highlighting them. When that was the case, it was difficult for me to identify what they'd changed. It's hard to learn from a system that changes things without telling you, and it's annoying to learn from one that disregards the instructions you give it. But that's not the real problem.

The real problem is that LLMs can be extraordinarily good at doing something (e.g., correcting German grammar errors) and unimaginably bad at explaining what they've done. Here's ChatGPT's explanation of how it corrected the last of my single-sentence tests. (Don't worry if you don't remember the sentence; it doesn't matter.)

That's nonsense on its own, but it also bears no relation to the change ChatGPT made to my text. Explanations from Gemini and Copilot were often in about the same league.

Lousy explanations may be the real problem, but they're not the whole problem. My experience with LLMs is that they complement their inability to explain what they're doing with a penchant for irreproducibility. The nonsensical ChatGPT explanation above is what I got one time I had it check my test sentences, but I ran the tests more than once. On one occasion, I got this:

"Ort" is masculine, so the relative pronoun should be "der" to match the previous clause and maintain grammatical consistency.

This is absolutely correct. It's also completely different from ChatGPT's earlier explanation of the same change to the same sentence.

Copilot upped the inconsistency ante by dithering over the correctness of my single-sentence tests. The first time I had it look at them, it found errors in all six sentences. When I repeated the test some time later, it decreed that one sentence was correct as is. A while after that, Copilot was back to seeing mistakes in every sentence.

Using today's LLM systems to improve your German is like working with a skilled tutor with unpredictable mood swings. When they're good, they're very, very good, but when they're bad, they're awful.

Systems and Scores

I submitted the six single-sentence tests from my first post plus the new approximately-two-page letter to the grammar checkers in my first post as well as to ChatGPT, Gemini, and Copilot. As noted above, I disregarded the results from DeepL Write, TextGears, and Duden Mentor. I did the same for Studi-Kompass, which I was again unable to coax any error-reporting out of. I scored the remaining systems as in my original post, awarding up to four points for each error in the test set. A total of 84 points was possible: 24 from the single-sentence tests and 60 from the letter. These are the results:

ChatGPT: 76 points (90% of possible)

LanguageTool: 70 (83%)

Scribbr/QuillBot: 50 (60%)

Gemini: 48 (57%)

Sapling: 41 (49%)

Copilot: 40 (48%)

Rechtschreibprüfung24/Korrekturen: 39 (46%)

Google Docs: 34 (40%)

GermanCorrector: 31 (37%)

Online-Spellcheck: 26 (31%)

Microsoft Word 2010: 16 (19%)
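For readers who want to check the arithmetic, here's a minimal Python sketch that recomputes the percentages above from the raw points. It assumes only what the scoring description states: up to four points per error, 24 points from the single-sentence tests (so six errors there) and 60 from the letter (so 15 errors), for 84 points in all. The names `POINTS_PER_ERROR`, `SCORES`, and `percent_of_possible` are my own illustrative choices, not part of any tool I tested.

```python
# Recompute the "% of possible" figures from the raw scores.
# Assumption: 4 points per error; 24 sentence points / 4 = 6 errors,
# 60 letter points / 4 = 15 errors, i.e., 21 errors in total.

POINTS_PER_ERROR = 4
SENTENCE_ERRORS = 6
LETTER_ERRORS = 15

# Maximum possible score: 4 * (6 + 15) = 84
MAX_POINTS = POINTS_PER_ERROR * (SENTENCE_ERRORS + LETTER_ERRORS)

# Raw scores as listed in the results above.
SCORES = {
    "ChatGPT": 76,
    "LanguageTool": 70,
    "Scribbr/QuillBot": 50,
    "Gemini": 48,
    "Sapling": 41,
    "Copilot": 40,
    "Rechtschreibprüfung24/Korrekturen": 39,
    "Google Docs": 34,
    "GermanCorrector": 31,
    "Online-Spellcheck": 26,
    "Microsoft Word 2010": 16,
}


def percent_of_possible(points: int, total: int = MAX_POINTS) -> int:
    """Return points as a whole-number percentage of the maximum score."""
    return round(100 * points / total)


if __name__ == "__main__":
    for system, points in SCORES.items():
        print(f"{system}: {points} ({percent_of_possible(points)}%)")
```

None of the scores lands exactly on a half percent, so Python's round-half-to-even behavior doesn't affect any of the figures.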

June 19, 2024

How a Bad Monitor Port Thwarted my Move to macOS

In March, I decided to move from Windows to Mac. My goal was texting on the desktop, i.e., texting via my computer. Many people insist on texting, and I was able to communicate with them only through my iPhone. I wanted to be able to do it using my computer, as I did with email and WhatsApp.

Changing operating systems is always a production, but in thirty-plus years with Windows, I had never developed an affection for it, so my only real concern was that I'd have to give up the three-monitor setup I'd used for over a decade. I connect my monitors to a docking station made by Lenovo that's compatible with my Lenovo laptop. Apple doesn't make docking stations, but an associate at the local Apple store assured me I'd have no trouble using a third-party dock. He pointed me to Plugable. Plugable recommended their UD-ULTC4K, and I ordered one for use with the MacBook Pro M3 I purchased.

I bought a bunch of stuff at this time. I got a new keyboard with Mac-specific keys. I got a new monitor offering HDMI and DisplayPort inputs, because one of my current monitors didn't have either, and those are the video outputs on the Plugable docking station I'd ordered. I purchased new video cables to connect everything.

As we'll see, the new monitor is the villain of this story. We'll call it the ViewSonic.

Missing Monitors and Restless Windows

A few days after I started using the Mac-based system, I wrote Plugable about an intermittent problem I was having. It occurred only after a period of inactivity (POI), i.e., when the displays turned off because I wasn't interacting with the system. When I started using the Mac after a POI, one or two monitors might fail to wake up. The Mac would then shuffle the windows from the "missing" monitors to the monitor(s) it detected. Most of the time, only one monitor went blank. It was rarely the ViewSonic.

Two weeks of debugging with Plugable followed, during which logs were collected, software was updated, cables were swapped, a replacement dock was issued, and we started all over. Then I reported what I came to call restless windows:

On some occasions, after all three screens come up, some of the windows that should be on one screen have been moved to another one.

Neither Plugable nor I had theories about how this could happen, nor did we have ideas for further debugging the missing-monitor problem, which hadn't gone away. We agreed that I'd return their dock, and they'd refund my money. I was disappointed at how things worked out, but Plugable acquitted itself exemplarily throughout.

I replaced the Plugable dock with TobenONE's UDS033. It also yielded intermittent disappearing monitors after a POI. TobenONE's interest in helping me debug the problem was minimal, and it vanished entirely when they found I'd purchased the dock from Amazon instead of from them. I returned it.

The manager at the local Apple store was sympathetic about the trouble I was having. She suggested swapping out the MacBook to see if that was causing the problems, extending Apple's return period to facilitate the swap. I ordered a replacement computer matching the one I already had.

There are two basic MacBook Pro M3 models. The M3 Pro, which is what I had, natively supports up to two external monitors. To connect three, you have to use a docking station employing a technology that, from what I can tell, fools a MacBook into thinking there are two external monitors when in fact there are three. The big cheese among such technologies is DisplayLink. Both Plugable and TobenONE use it. Internet sentiment towards DisplayLink is lukewarm, but if you need to connect three monitors to an M3 Pro MacBook, it's your primary choice.

The other MacBook Pro M3 model is the M3 Max. It's more expensive than the M3 Pro, but it natively supports up to three external monitors. I'd originally purchased an M3 Pro, and the replacement I'd ordered was also an M3 Pro, but while it was in transit, I realized that by upgrading to an M3 Max, I could eliminate the need for a docking station as well as DisplayLink, thus simplifying the debugging of my problem. I canceled the M3 Pro replacement before it was delivered, and I ordered an M3 Max. Apple was unfazed by this, but my credit card company was on high alert, noting that my pricey orders from Apple were unprecedented and asking for confirmation each time.

I was excited about the M3 Max. With docks and DisplayLink out of the picture, surely my missing-monitor and restless-windows problems would disappear!

Um...no. Which is not a surprise, because I've already told you that the source of my display drama was a bad port on the ViewSonic. At the time, I didn't know that.

I connected my monitors to the M3 Max and arrayed them side by side. The ViewSonic was in the middle, because it was the newest and had the spiffiest specs. Not long afterward, I had the bizarre experience of returning to my computer after a POI and seeing that the windows on my left and right monitors had swapped! Online discussions (e.g., here and here and here) showed that I was not the first to experience this. I logged several instances before calling Apple. One tech remarked, "Yeah, that happens to me, too." A second told me, "Engineering has an open issue on that." There was every reason to believe that this was a MacBook problem.

Notice that the window-swapping behavior did not involve the ViewSonic. The restless windows afflicted only the side monitors. The ViewSonic sat quietly in the middle with an innocent look on its face.

Apple told me they'd look into the problem and get back to me. In the meantime, I logged what happened after every POI. On Day 1, the windows on the left and right monitors swapped six times out of seven POIs. On Day 2, eight of ten POIs resulted in window swaps.

Then things got strange.

The Music app often jumped from the right monitor to the middle one, even though other windows on the right monitor stayed put. Sometimes the windows from the right monitor moved to the left monitor, but the windows on the left monitor remained in place. (That's half a swap.) I started taking screen shots before POIs (i.e., just before I left the computer for a while) for comparison with what I saw after a POI. I found that my restless windows sometimes did more than just jump from one monitor to another. They might take on a different size or their position on the monitor might change. Or, as in this example, both:

Screen shots before POI (above) and after (below). The ViewSonic is the middle monitor, where nothing changes.

I gave up. I'd been battling missing monitors and restless windows for three months, and there was no end in sight. The Internet showed that others had the kinds of problems I did, and they couldn't solve them. Apple support reps told me they experienced the behavior I did, and I'd received no follow-up from Apple Engineering. I returned my MacBook on the last day of its return period. I was sad to do it, because I'd been just as pleased with texting on the desktop as I'd hoped, and I'd grown fond of photos taken on my iPhone magically appearing on the MacBook. Apple's reputation for integration isn't for nothing. But convenient texting and synchronized photos weren't enough to compensate for nondeterministic window sizing and placement each time I returned to my machine. I retreated to my Windows system to lick my wounds and consider my options.

Back to Windows

I dusted off my Lenovo laptop and its docking station (literally!), hooked everything up, and booted into Windows. I futzed around and rued the loss of my texting window, then went away for a bit. When I came back, I was stunned to see that one of the windows that had been on my left monitor was now on the middle monitor! Hoping I had somehow imagined it, I moved it back where it belonged and left the machine for another POI. It had not been my imagination. The window was not where I'd left it. In addition, a window on the left monitor was now a different size!

You know those creepy scenes in movies and TV shows where the protagonist unplugs a TV or a computer monitor to make sure it's off, but it turns back on, anyway? It was like that. The Mac was gone, and I was using the same old Windows system I'd been using for years. Restless windows were impossible. And yet...

It took me a while to realize that it wasn't the same system I'd been using for years. I hadn't swapped back in the monitor the ViewSonic had replaced. That made the ViewSonic the only component common to the Mac-based system I'd been using and the Windows-based system sitting before me--the only component common to all configurations where I'd experienced windows on walkabouts.

I swapped out the ViewSonic for the monitor it had replaced. Everything worked fine. Time after time, my windows remained where I put them. It was apparent that the ViewSonic was anything but innocent.

Ports

There are two digital input ports on the ViewSonic: HDMI and DisplayPort (DP). (The monitor also has a VGA input, which is analog. That input isn't germane to the story, but it's worth taking a moment to marvel at the longevity of VGA, which debuted in 1987 and remains important enough that monitor vendors continue to support it.) I'd been using the ViewSonic's DP input, because the video output from the Lenovo docking station is DP, and I figured it would be better to go DP-to-DP than DP-to-HDMI.

The ViewSonic was clearly responsible for my weeks of video despair, but I didn't know if the problem lay with the monitor in general or with the DP port in particular. To find out, I swapped the ViewSonic back into the system, this time connecting to the HDMI port. A zillion POI trials convinced me that the monitor worked fine with HDMI. The problem had to stem from the DP connection.

That connection has three parts: the DP port on the docking station, the DP port on the ViewSonic, and the cable between them. I'd successfully used the DP port on the dock when testing the ViewSonic's HDMI connection, so the dock's DP output was in the clear. That meant the source of my monitor madness was either the ViewSonic's DP port or the cable leading to it. I bought a new cable and connected it to the ViewSonic's DP port. My windows were restless again. Two cables with the same behavior meant the cable wasn't the problem. The guilty party had to be the ViewSonic's DP port. It was the last suspect standing.

To really clinch the case, I'd need to replace the ViewSonic with an identical monitor and verify that everything works over a DisplayPort connection with the replacement monitor. I'm working with ViewSonic on that now.

To me, the big mystery is how a bad port on one of three monitors in a system can, among other things, cause the window managers in two independent operating systems to swap windows on the monitors whose ports are not bad. If you have insight into this, please share!

Mac Thoughts

The case against the ViewSonic is rock-solid, but that doesn't mean the Mac is off the hook. The Internet reports of restless windows under macOS are still there, as are the comments from Apple's support reps acknowledging the problem. It could be that the ViewSonic is defective and Macs have unreliable multiple-monitor support. Testing this would require a fourth MacBook purchase (I wonder what my credit card issuer would think of that), replacing the ViewSonic with a known-good monitor, and seeing what happens.

It'd be easy enough to do, but I'm not sure I want to. During my three months with macOS, I devoted a great deal of time to debugging missing monitors and restless windows, but I also spent many hours familiarizing myself with the operating system and working within it. Desktop texting and synced photos were great, and moving from the M3 Pro to the M3 Max was the kind of smooth experience that drives home just how sadistic Microsoft's Windows-to-Windows migration process is. (My PC is 11 years old, in no small part because moving to a new machine is so painful.) macOS is a significant upgrade over Windows in important ways, especially if you have other Apple devices, e.g., an iPhone.

However, I found day-to-day life with macOS rather uncomfortable. The menu bar's fixed position at the top of the screen often means moving the mouse a large distance to get to it. I have 24-inch monitors, and those marathon moves got old quickly. Word on the Internet is that the decision to anchor the menu bar atop the screen dates to the original 1984 Macintosh computer. That machine had a nine-inch screen and ran one application at a time, always in full-screen mode. That's nothing like the world I live in. The Mac's fixed menu bar location feels like the 40-year-old design decision it apparently is. I don't think it's stood the test of time.

Keyboard shortcuts can reduce the need to go to the menu bar, I know, but there's a steep memorization curve for them, and not everything has a keyboard shortcut. I find Windows' per-window menu bar more usable.

macOS seems focused on applications, while Windows is built around windows. Under macOS, Command-Tab cycles through applications. The Windows equivalent, Alt-Tab, cycles through windows. If you have multiple windows for an application, they share a single entry in the macOS Command-Tab cycle. In the Windows Alt-Tab cycle, each window gets its own entry. (macOS offers a way to cycle through all windows associated with an application (Command-↑/Command-↓ after Command-Tabbing to the application), but I find it cumbersome.) The application-based focus on the Mac is so pronounced that you can hide all windows associated with an application, an operation with no Windows counterpart, as far as I know.

The Windows approach makes more sense to me. I partition my work into windows, not applications. I often have multiple independent windows open in a single application, especially browsers and spreadsheets. It's hard for me to think of situations where I'd like to close all windows associated with an application, but it's easy for me to think of situations where I'd like to close only some windows associated with an application. During my time with macOS, I tried to find scenarios where hiding made sense, but I came up empty. I ended up minimizing windows under macOS, just like I did under Windows, and I missed the ability to easily cycle through windows when I wanted to interact with one.

I was surprised at how often familiar content looked rather ugly under macOS. Messages in Thunderbird looked as if everything was in boldface, while Excel spreadsheet content was so small that I had to bump the magnification up to 120% to view it comfortably. It's likely that there are configuration changes I could have made to address these issues, but I really expected that an Excel spreadsheet or a Thunderbird email message I'd created under Windows would look pretty much the same when viewed in the same application (often on the same monitors) under macOS.

On Windows, I run Excel 2010. On macOS, the closest I could get was Office 365. Excel 365 on macOS lacks customization options present in Excel 2010 under Windows. The Quick Access Toolbar (QAT) on the Mac is fixed above the ribbon, for example, while in my Windows version, you can move it below the ribbon--which I do. Some commands I've got on the QAT in my Windows version of Excel--Font Name, Font Size, and Insert Symbol--can't be put in the QAT in Excel 365 on macOS. These limitations are Microsoft's fault, not Apple's, but they still chafe.

A third-party Excel plug-in I use works quite differently and less conveniently under macOS than under Windows. That's neither Microsoft's nor Apple's fault, but it further detracts from the overall Excel experience on a Mac. That matters to me, because I use Excel a lot. Given enough time and effort, I'm sure I could get used to the Mac version of Excel or I could switch to a different spreadsheet program, but between an inconveniently located menu bar, an emphasis on applications over windows, ugly window content, and restricted Excel functionality, the total cost of texting on the desktop comes to a lot more than I'd expected.

Plan B

At least that approach to texting on the desktop does. There is another way. If my going to macOS is too much trouble, it's supposed to be possible to use remote desktop software to bring macOS to me. This requires a Mac in addition to a Windows machine, but I happen to have an old MacBook Air floating around (as it were). I should be able to run a remote desktop server on the MacBook and a remote desktop client on Windows, thus giving me a way to use apps on the Mac--notably iMessage--from Windows. With suitable remote desktop support, I should be able to copy and paste from one machine to another, and the net effect should be pretty close to running Mac apps locally.

The remote desktop software most frequently mentioned for this is Google Remote Desktop (GRD). I gave it a quick try, and, well, if you accidentally make the Mac both the GRD server and client, you end up with a screen that looks like this:

Obviously, I have more work to do.

May 29, 2024

Five Years of no EV for Me

Five years ago this week my search for an electric compact SUV ended with me buying a conventional gas-powered car. I disliked the car (a Nissan Rogue) within a month after buying it, and I've been on the lookout for an electric replacement ever since. Over the years, I've vented my frustrations with the EV market in a number of blog posts, sometimes focusing on their high cost and sometimes on the lack of models with the basic features I'm looking for: all-wheel drive, an openable moonroof, a 360-degree camera, and an EPA range of at least 235 miles. In November, I discussed the luxury-car-level pricing of the only two cars that meet these criteria: the Nissan Ariya and the Volvo XC40 Recharge. Since then, the only things that have changed are the name of the Volvo (now called the EX40) and the elimination of the prospect of Chinese imports pushing down EV prices. (The US government has adopted a policy of keeping them out of the market.)

In the meantime, what I'm looking for in an EV has evolved a bit. I still want all-wheel drive, an openable moonroof, and an all-around camera, but I now want a car on the shorter end of the compact SUV spectrum. My Rogue is 185 inches long. I'd prefer no more than 180 inches. (Tesla's Model Y is 187 inches. Ford's Mustang Mach-E is 186. VW's ID.4 is 181.)

My thinking about range has also changed. EV ranges can't touch those of gas-burners, so I understood that distance driving in an EV requires planning. But this didn't strike me as a problem. If you're driving, say, 400 miles to get from Point A to Point B, stopping for a half hour at 200 miles to recharge isn't a hardship. 200 miles represents 3-4 hours of driving, and who doesn't want to stop at that point to stretch their legs, use the bathroom, grab a snack, etc.?

A recent trip made me realize that not all long drives consist of extended driving sessions. My wife and I put 400 miles on a tank of gas while meandering along the southern Oregon coast. Most of our driving sessions were under an hour, because we stopped at various beaches (including, of course, Meyers Creek Beach, which is at the mouth of Myers Creek) and small coastal and inland towns (e.g., Bandon, Coquille, Port Orford, and Gold Beach). Many of the places we stopped had no facilities of any kind, much less charging stations. According to the Oregon Clean Vehicle Rebate Program EV Charging Station Map, the charging options in Gold Beach, where we spent one night, consist of one 120V charging outlet for E-bikes and one 120V outlet for use by hotel and shop patrons on the opposite side of the river from where we were staying. A two-port charging station at a motel we weren't staying at is noted as "coming soon." The source for this information is shown as PlugShare, but the PlugShare web site shows only the "coming soon" charger, so it's possible that there aren't any charging options in Gold Beach at all.

This casts the "planning" aspect of traveling by EV in a different light. If you're off the beaten track and poking along in a gas-powered car, stopping here and there as the whim moves you, you can take refueling opportunities for granted. (There are four gas stations in Gold Beach.) In an EV, you may have to actively seek out recharging options. You really do have to plan.

I haven't yet decided what that means for me as a potential EV owner. It's a simple fact that EV ranges are notably lower than ICE ranges, and it's an equally simple fact that the EV charging infrastructure is much less well developed than the gas station network. For the foreseeable future, buying an EV means accepting those facts and finding ways to cope with them. If I really want to own an EV, I'll have to figure out how to do that.

I probably have plenty of time. The only EV that satisfies my basic criteria and fulfills my new not-longer-than-180-inches criterion is the Volvo EX40 (née XC40 Recharge). MSRP as I'd like it equipped is nearly $62,000, which is about $20,000 more than my budget.

However, I have a gas-powered riding lawn mower that's not likely to last a lot longer. I'm already thinking of replacing it with an electric version. It could be that the form my first EV takes will be that of a machine you sit on top of and cut grass with.